Abstract

Artificial neural networks can perform a specific task much better than a human, yet they fail at toddler-level skills, because such skills require learning new things and transferring them to other contexts. The goal of general AI is therefore to make models learn continually, as humans do; the concept of continual learning is inspired by lifelong learning in humans. However, continual learning remains a challenge for the machine learning community, since acquiring knowledge from non-stationary data distributions generally leads to catastrophic forgetting, also known as catastrophic interference. This is a drawback for state-of-the-art deep neural networks, which learn from stationary data distributions. In this survey, we summarize the continual learning strategies used for classification problems: regularization-based, memory-based, and structure-based strategies, and energy-based models.

References

Hassabis, D., Kumaran, D., Summerfield, C., Botvinick, M.: Neuroscience-inspired artificial intelligence. Neuron Rev. 95(2), 245–258 (2017)

Thrun, S., Mitchell, T.: Lifelong robot learning. Robot. Auton. Syst. 15, 25–46 (1995)

McClelland, J.L., McNaughton, B.L., O’Reilly, R.C.: Why there are complementary learning systems in the hippocampus and neocortex: insights from the successes and failures of connectionist models of learning and memory. Psychol. Rev. 102, 419–457 (1995)

McCloskey, M., Cohen, N.J.: Catastrophic interference in connectionist networks: the sequential learning problem. Psychol. Learn. Motiv. 24, 104–169 (1989)

Ditzler, G., Roveri, M., Alippi, C., Polikar, R.: Learning in nonstationary environments: a survey. IEEE Comput. Intell. Mag. 10(4), 12–25 (2015)

Mermillod, M., Bugaiska, A., Bonin, P.: The stability-plasticity dilemma: investigating the continuum from catastrophic forgetting to age-limited learning effects. Front. Psychol. 4, 504 (2013)

Grossberg, S.: How does a brain build a cognitive code? Psychol. Rev. 87, 1–51 (1980)

Grossberg, S.: Adaptive resonance theory: how a brain learns to consciously attend, learn, and recognize a changing world. Neural Netw. 37, 1–41 (2012)

Rebuffi, S.-A., Kolesnikov, A., Sperl, G., Lampert, C.H.: iCaRL: incremental classifier and representation learning. In: Conference on Computer Vision and Pattern Recognition, Honolulu, pp. 5533–5542. IEEE (2017)

Kirkpatrick, J., et al.: Overcoming catastrophic forgetting in neural networks. Proc. Natl. Acad. Sci. USA 114, 3521–3526 (2017)

Zenke, F., Poole, B., Ganguli, S.: Continual learning through synaptic intelligence. In: International Conference on Machine Learning, Sydney, pp. 3987–3995. PMLR (2017)

Li, Z., Hoiem, D.: Learning without forgetting. IEEE Trans. Pattern Anal. Mach. Intell. 40, 2935–2947 (2017)

Jung, H., Ju, J., Jung, M., Kim, J.: Less-forgetting learning in deep neural networks. In: AAAI 2018, New Orleans, LA (2018)

Aljundi, R., Babiloni, F., Elhoseiny, M., Rohrbach, M., Tuytelaars, T.: Memory aware synapses: learning what (not) to forget. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds.) ECCV 2018. LNCS, vol. 11207, pp. 144–161. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-01219-9_9

Ebrahimi, S., Elhoseiny, M., Darrell, T., Rohrbach, M.: Uncertainty-guided continual learning with Bayesian neural networks. In: ICLR 2020 (2020)

Mallya, A., Lazebnik, S.: PackNet: adding multiple tasks to a single network by iterative pruning. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2018)

Serra, J., Suris, D., Miron, M., Karatzoglou, A.: Overcoming catastrophic forgetting with hard attention to the task. In: Dy, J., Krause, A. (eds.) Proceedings of the 35th International Conference on Machine Learning. Volume 80 of Proceedings of Machine Learning Research, pp. 4548–4557. PMLR (2018)

Kemker, R., Kanan, C.: FearNet: brain-inspired model for incremental learning. In: ICLR 2018 (2018)

Lopez-Paz, D., et al.: Gradient episodic memory for continual learning. In: Advances in Neural Information Processing Systems (NeurIPS), p. 30 (2017)

Chaudhry, A., et al.: Efficient lifelong learning with A-GEM. arXiv https://arxiv.org/abs/1812.00420, 2 December 2018

Yoon, J., Yang, E., Lee, J., Hwang, S.J.: Lifelong learning with dynamically expandable networks. In: International Conference on Learning Representations (2018)

Rusu, A., et al.: Progressive neural networks. arXiv preprint arXiv:1606.04671 (2016)

Shin, H., et al.: Continual learning with deep generative replay. In: Advances in Neural Information Processing Systems (2017)

Liu, Y., et al.: Human replay spontaneously reorganizes experience. Cell 178, 640–652 (2019)

Robins, A.: Catastrophic forgetting, rehearsal and pseudorehearsal. Connect. Sci. 7, 123–146 (1995)

Schwarz, J., et al.: Progress & compress: a scalable framework for continual learning. In: Proceedings of the International Conference on Machine Learning, pp. 4535–45442 (2018)

Nguyen, C.V., Li, Y., Bui, T.D., Turner, R.E.: Variational continual learning. In: International Conference on Learning Representations (2018)

Lee, S.-W., Kim, J.-H., Jun, J., Ha, J.-W., Zhang, B.-T.: Overcoming catastrophic forgetting by incremental moment matching. In: Advances in Neural Information Processing Systems, pp. 4652–4662 (2017)

Lee, S.W., Kim, J.H., Ha, J.W., Zhang, B.T.: Overcoming catastrophic forgetting by incremental moment matching. arXiv preprint arXiv:1703.08475 (2017)

Farquhar, S., Gal, Y.: Towards robust evaluations of continual learning. arXiv preprint arXiv:1805.09733 (2018)

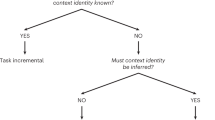

van de Ven,G.M., Tolias, A.S.: Three scenarios for continual learning. arXiv preprint arXiv:1904.07734 (2019)

Goodfellow, I.J., Mirza, M., Xiao, D., Courville, A., Bengio, Y.: An empirical investigation of catastrophic forgetting in gradient-based neural networks. arXiv 4.2.1, A.1, A.5 (2013)

Li, S., Du, Y., van de Ven, G.M.: Energy-based models for continual learning. arXiv preprint (2020)

Kemker, R., McClure, M., Abitino, A., Hayes,T.: Measuring catastrophic forgetting in neural networks. In: Proceedings of the AAAI (2018)

Hadsell, R., Rao, D., Rusu, A.A., Pascanu, R.: Embracing change: continual learning in deep neural networks. Trends Cogn. Sci. 24(12), 1028–1040 (2020)

Kitamura, T., Ogawa, S.K., Roy, D.S., Okuyama, T., Morrissey, M.D., Smith, L.M., et al.: Engrams and circuits crucial for systems consolidation of a memory. Science 356, 73–78 (2017)

Parisi, G.I., Kemker, R., Part, J.L., Kanan, C., Wermtera, S.: Continual lifelong learning with neural networks: a review. Neural Netw. 113, 54–71 (2019)

Copyright information

© 2021 IFIP International Federation for Information Processing

About this paper

Cite this paper

Vijayan, M., Sridhar, S.S. (2021). Continual Learning for Classification Problems: A Survey. In: Krishnamurthy, V., Jaganathan, S., Rajaram, K., Shunmuganathan, S. (eds) Computational Intelligence in Data Science. ICCIDS 2021. IFIP Advances in Information and Communication Technology, vol 611. Springer, Cham. https://doi.org/10.1007/978-3-030-92600-7_15

DOI: https://doi.org/10.1007/978-3-030-92600-7_15

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-92599-4

Online ISBN: 978-3-030-92600-7

eBook Packages: Computer Science, Computer Science (R0)