Abstract

Locally refined B-spline (LRB) surfaces provide a representation that is well suited to scattered data approximation. When a data set has local details in some areas and is largely smooth elsewhere, LR B-splines allow the spatial distribution of degrees of freedom to follow the variations of the data set. An LRB surface approximating a data set is refined in areas where the accuracy does not meet a required tolerance. In this paper we address, in a systematic study, different LRB refinement strategies and polynomial degrees for surface approximation. We study their influence on the resulting data volume and accuracy when applied to geospatial data sets with different structural behaviour. The relative performance of the refinement strategies is reasonably coherent for the different data sets and this paper concludes with some recommendations. An overall evaluation indicates that bi-quadratic LRB are preferable for the use cases tested, and that the strategies we denote as “full span" have the overall best performance.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

1 Introduction

Tensor-product B-spline surfaces are a mature and standardized geometry representation that has been known at least since the 1970s. The first uses of Tensor-product B-splines were in Computer Aided Design (CAD). In Isogeometric Analysis (IgA) [6], B-splines replace the traditional shape functions used in Finite Element Analysis (FEA). A guide to splines can be found in [9]. Tensor-product spline surfaces have very good numerical properties, but lack local refinement of the spline space. In recent years several approaches have been proposed for local refinement of spline surfaces including T-splines [3, 20], Truncated Hierarchical B-splines (THB) [11], and LR B-splines (LRB) [2, 7]. We will, in this paper, focus on the use of LRB surfaces for approximation of scattered data.

We perform a systematic study on the effects of different strategies for local refinement of LRB surfaces for the approximation of geospatial point clouds. Section 2 provides an overview of relevant locally refined spline methods and outlines the algorithmic approach we employ for scattered data approximation. Section 3 gives a brief overview over previously published local refinement strategies for bi-variate splines and discusses the concept of a good refinement strategy for approximation of large point clouds. A set of candidate strategies are defined in Sect. 4. Section 5 presents five data sets and the corresponding approximation results for the selected refinement strategies along with analysis of the result related to each data set. In Sect. 6 the case specific analysis is summarized to provide a unified understanding, and finally in Sect. 7 some conclusions are drawn.

2 Background

Section 2.1 presents locally refined splines in general and LRB in particular. We also explain why we focus on LRB rather than T-splines and THB. We then turn to scattered data approximation in Sect. 2.2.

2.1 Locally Refined Splines

The lack of local refinement for tensor-product splines provides severe restrictions for IgA as well as for scattered data approximation. Using tensor product B-splines will in most cases introduce significantly more degrees of freedom than actually needed. This makes the data volume grow and restricts the size of problems that can be addressed. Two basic approaches exist for building local spline surfaces:

-

Refinement in the mesh of vertices/control points: the approach used for T-splines.

-

Refinement in the parameter domain: the approach used for THB and LRB.

For simulation and approximation purposes, it is convenient to span the locally refined spline space by a set of linearly independent functions. T-spline, LRB and THB spaces are all spanned by functions that are composed from tensor products of univariate B-splines. It is also attractive to have quasi interpolants for hierarchical spaces [22].

2.1.1 T-Splines

T-splines were introduced by Sederberg et. al. [20] to enhance the modelling flexibility in CAD design. The starting point of the T-spline T-mesh is the regular grid of control points in a tensor product B-splines surface. New control points in the T-mesh are added on axis parallel lines between existing control points according to a set of T-spline rules. Each control point in the T-mesh corresponds to a tensor product B-spline. The knot vectors of each such B-spline are identified by traversing the T-mesh starting from the control point and going outwards, in all four axis parallel directions, until a degree dependent number of meshlines are intersected. In the bi-cubic case this traversing stops after two lines in the T-mesh are intersected in each of the four directions. The new control points are used to model local details in a preexisting surface. Frequently, the B-splines spanning the T-spline space have to be scaled to form a partition of unity.

The most general version of T-splines possesses neither nested spline spaces nor a guarantee for linear independence [5] of B-splines. In IgA, linear independence is important and additional rules were added to the T-spline creation algorithm to ensure it, giving rise to Analysis Suitable T-splines [3].

2.1.2 Truncated Hierarchical B-Splines

Hierarchical B-splines (HB), introduced by Forsey and Bartels [10], are based on a dyadic sequence of grids determined by scaled lattices over which uniform spline spaces are defined. HB provide nested spline spaces spanned by tensor product B-splines, but do not form a partition of unity and they are not linearly independent. How to select B-splines that gives linearly independent HB was solved in [15]. To provide partition of unity for HB, THB [11] were introduced. THB allow non-uniform spline spaces, are linearly independent and reduce the support of the basis functions. The basis functions of THB are made by truncating tensor product B-splines with tensor product B-splines from finer refinement levels. That is, they are made by eliminating the contribution corresponding to the subset of the finer B-splines included in the hierarchical basis from the representation of such coarser B-splines. The truncated B-splines can be described as a sum of scaled tensor product B-splines from finer levels.

2.1.3 Locally Refined B-Splines

An LRB surface is a piecewise polynomial or piecewise rational polynomial surface defined on an LR-mesh. An LR-mesh is a locally refined mesh made by applying a sequence of refinements starting from a tensor-product mesh. LRB are algorithmically defined throughout the refinement process of the mesh. An LRB surface is defined as

where \(P_i\), \(i=1,\ldots ,L\) are the surface coefficients, and \(s_i\) are scaling factors introduced to ensure partition of unity of the resulting collection of tensor product B-splines. The tensor product B-splines \(R_{i,p_1,p_2}\) are of bi-degree \((p_1,p_2)\) defined on knot vectors of lengths \(p_1 + 2\) and \(p_2 + 2\) on the parametric domain in the u and v directions respectively. \(N_{i,p_1,p_2}\) are the tensor product B-splines multiplied with their scaling factor.

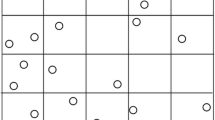

The initial tensor-product mesh corresponding to the LR-mesh shown in Fig. 1 is given by the knot vectors \([u_1, u_1, u_1, u_2, u_4, u_6, u_7, u_7, u_7]\) and \([v_1, v_1, v_1, v_3, v_5, v_6, v_6, v_6]\). It corresponds to a polynomial spline surface of degree two in both parameter directions with multiple knots in the end parameters. The LR-mesh is constructed by first inserting knots at \(v_2\) and \(v_4\) covering a part of the surface domain, and next inserting knots at \(u_3\) and \(u_5\). The B-spline with support shown in red has the knot mesh \([u_1,u_2,u_4,u_6] \times [v_2, v_3, v_4, v_5]\). Some lines of the LR-mesh intersecting the support of such B-spline do not correspond to knotlines of its knot mesh as they do not traverse the support completely.

The procedure for refining an LRB surface is the following:

-

1.

Add a new line segment that triggers the refinement of at least one existing tensor-product B-spline. It can be an extension of an existing line segment, can be independent of existing line segments, or increase the multiplicity of an existing the line segment. Thus, a line segment going from \((u_5,v_4)\) to \((u_5,v_5)\) is a legal choice.

-

2.

Subdivide all tensor product B-splines with support that is completely traversed by the new line/extended line.

-

3.

The tensor product B-splines are required to have minimal support. This means that all line segments traversing the support of a tensor product B-spline are required to be knotline segments of the B-spline taking the multiplicity of line segments into account. After inserting a new line segment and performing the subdivision in Step 2., there might still be tensor product B-splines that do not have minimal support with respect to the LR-mesh. Consequently all such B-splines must be refined. This process is continued until all tensor product B-splines have minimal support.

LRB surface refinement is described in detail in [14]. If more than one new knotline segment is defined simultaneously, the refinement process is applied to one segment at the time.

The LRB construction results in a sequence of nested spline spaces. LRB are non-negative and have compact support. The scaling factors \(s_i\) are computed during the refinement process to ensure partition of unity. LRB are not guaranteed to be linearly independent, but a dependency relation can be detected and resolved by dedicated knot insertions. Linear dependency can only occur in a situation with overloaded LR B-splines. An element is overloaded if it belongs to the support of more LRBs than necessary to span the polynomial space over the element. An LR B-spline is overloaded if all elements in its support are overloaded. Overloading can be detected by the peeling algorithm [7]. Patrizi and Dokken address configurations that can lead to a linear dependency relation in [18]. In a situation with local linear independence over all polynomial elements all weights, \(s_i\) \(i=1, \ldots , L\), will be equal to one [2].

2.1.4 Why the Focus on LRB?

THB refinement requires that a refinement region is defined that is wide enough to contain the support of at least one B-spline on the refined level. LRB is more flexible as a single mesh line segment can be inserted thus allowing more targeted refinements. Comparing T-splines and LRB refinement is not so simple, as T-splines refine in the coefficient T-mesh, while LRB refines in the LR-mesh. The LR-mesh corresponds to the extended T-mesh of T-spline. T-spline refinement has to be performed between adjacent control points connected by an axis parallel line in the T-mesh, thus relating to two B-splines. We focus on LRB refinement as refinement is allowed as long as the support of one B-spline is split. It is thus less restrictive.

2.2 Scattered Data Approximation

The aim is to approximate a scattered data point cloud by an LRB surface. We focus on data sets with projectable points that can be parameterized by their xy-coordinates leaving the z-coordinate to be approximated by a height function. Also non-projectable points being parameterized by some appropriate method can be handled by the general algorithm used in this paper.

Algorithm 1 gives an overview of the iterative approximation algorithm. The starting point is a tensor-product B-spline surface defining an initial spline space.

The focus of this article is to investigate the term “Refine the surface where needed” of Algorithm 1. The elements of the surface mesh will, during the computation, keep track of the data points situated within their domain as well as some accuracy statistics. This includes maximum and average distances between the surface and the points in the element, and the number of points with a distance to the surface that is larger than the specified tolerance. This information provides a basis for selecting where and how new degrees of freedom shall be added.

Two surface approximation methods are applied during the iterative algorithm: Least squares approximation with a smoothing term and multi-level B-spline approximation (MBA). Let \({\mathbf{x}}=(x_k,y_k,z_k), k=1,\ldots ,K\) be the projectable point cloud we want to approximate. Least squares approximation with a smoothing term is a global method where a minimization functional

is differentiated leading to a sparse, linear equation system. Linear independence of the B-splines is a prerequisite for a non-singular equation system. F(x, y), as defined in Eq. 1, denotes the LRB height function we want to obtain and \(\mathbf{P }= {P_1, \ldots P_L}\) are the coefficients of this function. The solution to this functional results in the best possible approximation with the given degrees of freedom in a least squares sense. The actual smoothing term J(F(x, y)) is described in [21] and other possible smoothing terms can be found in [17]. Our focus is on the approximation. As the input points may or may not represent a smooth surface, the weight \(\alpha _1\) on smoothing must be kept low. Still the term is important to handle areas in the surface domain without points.

The multi-level B-spline approximation algorithm (MBA) was introduced by Lee et. al. in [16] for scattered data interpolation, and is described in detail [23]. It is an iterative, local method, where the coefficients are calculated individually. The result is a hierarchical structure of tensor-product B-spline surfaces.

Assume that \(N_i\) is a B-spline of the residual surface for which we want to calculate the coefficient \(Q_i\),

Here the coefficients \(\phi _{i,c}\) are calculated by a local surface approximation of the residuals, \({\mathbf{x}_c}=(x_c,y_c,z_c),\) \(c=1,\ldots ,C\), that are inside the support of \(N_i\). All B-splines that have a support that overlaps any of these residuals take part in the approximation. The local approximation is formulated as an under-determined equation system

The equation for residual c only involves the B-splines that are none zero at \((x_c,y_c)\). Consequently which B-splines, \(N_{h,c}\), \(h=1,\ldots ,H_c\), \(c=1,\ldots ,C\), are involved in the equation is dependent on the residual addressed in the equation. The solution selected for the under-determined system is

From the above coefficients we select \(\phi _{i,c}, \, c=1,\ldots ,C,\) to be used in (2). This equation is obtained by minimizing with respect to \(Q_i\) the error

In the LRB setting, the residual surface is incorporated in the expression for the approximating surface avoiding a hierarchical representation. The MBA method does not require linearly independent LRB to find a solution.

Both approximation methods are in some sense minimizing the average distance between the point cloud and the surface. Thus, neither the maximum distance nor the number of points outside the tolerance can be expected to decrease monotonically. The average distance will in general be steadily reduced, but temporary stagnation may occur in particular in the context of outliers or if the elements have been refined to the extent that we model noise. In general we expect the input point clouds to have high data sizes and a varying degree of smoothness over the domain of the point cloud, which makes the property of local refinement essential.

3 Refinement Strategies and Success Criteria

LRB surfaces are appropriate for approximation of large scattered data sets due to the ability of increasing the data size in the areas where more degrees of freedom are required while keeping the data size low in other areas. However, it is not obvious how to decide where new mesh line segments should be inserted. There is a wide range of possibilities in selecting these lines, and we will investigate the effect of different choices.

Previous studies on refinement strategies for LRB have focused on the use of LRB in isogeometric analysis or refinement strategies that ensure local or global linear independence. Local linear independence ensures that a minimum amount of the B-spline supports overlap each element of the mesh. Global linear independence implies that a linear equation system originating from a least squares approximation or finite element analysis is non-singular.

Johannessen et. al. give an introduction to LRB in the context of isogeometric analysis in [14] where a detailed description of the refinement basics is provided and the effect on a number of test cases is investigated for the three following refinement strategies:

- Full span::

-

Given an element selected for refinement, all B-splines with a support that overlaps this element are refined by adding a line traversing the element.

- Minimum span::

-

The shortest possible line overlapping a chosen element that splits the support of at least one B-spline, is selected. Several candidate B-splines can exist and even if additional selection criteria are added, the candidate may not be unique. The selected line is not necessarily symmetric with respect to the element.

- Structured mesh::

-

Choose a B-spline and refine all knot intervals in this B-spline.

The sensitivity towards the different choices of refinement strategies were in [14] found to be moderate. However, the authors also investigated the effect of different knot multiplicity and found that meshes with low knot multiplicity tended to give less error in the computation compared to meshes with higher multiplicity for the same number of degrees of freedom. In [12] the structured mesh approach is analyzed theoretically and numerically in a set of test cases.

Bressan and Jüttler [4] look at refinement of LRB surfaces from the perspective of local linear independence and present a mesh construction where this property is proved. Patrizi et. al. [19] propose a practical refinement strategy where local linear independence is ensured. The strategy consists of a modified structured mesh refinement where some of the splits are prolonged so the refined mesh satisfies the so called non-nested support property, see [19] for a definition.

Other authors have focused on refinement of hierarchical B-spline surfaces or T-spline surfaces. Bracco et al. define two classes of admissible meshes for hierarchical B-splines and compare them for use in isogeometric analysis in [1]. A comparison between two refinement strategies applied to hierarchical B-splines and T-splines in the context of IgA is investigated in [13].

The applications of scattered data approximation and isogeometric analysis have some fundamental differences. In the context of IgA, the refined meshes normally belong to an intermediate stage in the computations. Thus, the possibly large locally refined spline surfaces are not kept. Moreover, the need for refinement in an adaptive isogeometric analysis computation is typically concentrated in localized areas. One of the main motivations for approximating scattered data with a locally refined surface is the need for a compact representation of data with a locally non-smooth behaviour. Areas where extra degrees of freedom are required for an accurate approximation, may be scattered around in the entire domain of the data set.

We restrict ourselves to polynomial LRB surfaces of bi-degree one, two and three and place all new knotline segments in the middle of existing knot intervals. With this configuration, no linear dependence relation has been encountered.

If there exist data points within an element with a distance to the surface larger than the tolerance, there is either a need for more degrees of freedom corresponding to the element, or the required accuracy cannot be met by a smooth surface due to outliers or a lack of smoothness in the data points. Any B-spline whose support overlaps this element will give new degrees of freedom to the element, if refined. This implies that there is a choice of how new degrees of freedom are defined. It is also clear that all choices are not equally good.

We define some criteria for a good refinement strategy:

-

1.

Best possible accuracy with the minimum degrees of freedom.

-

2.

If supported by the data set, it should be possible to adapt the surface to the point cloud within the prescribed tolerance.

-

3.

Avoid a premature stop where the requested accuracy is not reached and the maximum number of iteration steps is not performed.

-

4.

A steady improvement in accuracy when adding new degrees of freedom.

-

5.

Keep the execution time low.

-

6.

Keep the memory consumption low.

-

7.

Affected elements should preferably be split in the middle if a new mesh line is positioned in the middle of some element.

-

8.

Linear independence or local linear independence of B-splines.

We will mainly focus on the first five criteria. Critera 5 and 6 are to some extent linked and also dependent on the previous ones. A lean surface will lead to lower memory consumption and the part of the execution time spent in surface refinement is connected to the number of coefficients. The execution time also depends on the number of steps applied in the iterative algorithm and whether or not the knot insertion at late iteration steps is focused in a few areas or spread out in the entire surface domain.

The importance of linear independence is related to the use of the resulting surface. The approximation algorithm outlined in Algorithm 1 does not rely on linear independence. We will, thus, not focus on the last criterion.

We can now formulate two empirical rules for a good refinement strategy. They are taken into account when the strategies to be tested are defined in the next section:

- Rule 1::

-

A gradual introduction of new degrees of freedom gives better approximation efficiency.

- Rule 2::

-

An improved accuracy can be blocked by a failure to identify one or more B-splines that need to be refined. A disproportionate high number of B-spline supports overlapping an element is not desirable.

An important term in this discussion is approximation efficiency. It is defined as the number of resolved points divided by the number of surface coefficients for a particular refinement strategy and a particular iteration level. This figure will, together with other criteria, be used to evaluate the success of one refinement strategy compared to others.

4 Applied Refinement Strategies

4.1 Main Categories

The selected data sets are approximated using a variety of refinement strategies. They belong to four main categories: Full span, Minimum span and Structured mesh as described in Sect. 3 and an additional category named Restricted mesh. In the full span and minimum span strategies an element is selected for refinement while in the structured and restricted mesh strategies the refinement process is started from a selected B-spline.

In the full span strategy all B-splines with a support that overlaps the selected element are refined. The new mesh line splits the element in half in the direction of refinement and it is sized to split the support of all associated B-splines.

In the minimum span strategy one B-spline with a support overlapping the element, is selected. We will test three different minimum span strategies. They differ in how the B-spline to be refined is chosen. The selection criteria are: the candidate B-spline with largest support; the B-spline that has the highest number of unresolved points compared to the total number of points in the support; and, a combination of the two where the criteria are given equal weights. Several B-splines may fit the selected criterion equally well. In case of doubt, the most centered B-spline with respect to the initial element is selected. If there is still no unique B-spline satisfying the criterion, one candidate is randomly chosen.

In the structured mesh strategy, the selected B-spline is refined in the middle of all knot intervals. This is similar to the refinement strategy for hierarchical B-splines. In the restricted mesh strategy, refinement is performed in knot intervals where the corresponding elements contain significant points outside the tolerance with respect to number and distance. Let the elements of one knot interval in one parameter direction be denoted an element strip. The distances between the points and the surface that exceed the tolerance are accumulated for all such strips and scaled with respect to the element sizes. Furthermore the element strip measures are scaled with the position of the strip in the B-spline thereby prioritizing the middlemost knot intervals. Knot intervals with strips that have a measure larger than the average strip or larger than half the maximum strip measure are refined. In addition, knot intervals that exceed the average knot interval in the B-spline support by a factor of three are refined. This implies that refinement can be performed in one or more knot intervals in one or two parameter directions. The minimum span strategies and the restricted mesh strategy are similar in the respect that they are both restrictive, but differ in how the refinement selection is made. The effect of the strategies differ as can be seen in Sect. 5.

4.2 Restrictions to the Introduction of New Knots

We want to test the empirical rule that a gradual introduction of new degrees of freedom will lead to a lean final surface with good accuracy. In strategies with refinement in alternating parameter directions the surfaces are refined in the first parameter direction at odd iterations levels and in the second parameter direction at even levels. Thus, the number of potential new knot line segments is limited compared to the case for strategies that perform refinement in both parameter directions at each level.

A threshold can also be applied to restrict the number of new mesh line segments at each step, possibly in addition to alternating parameter directions and/or restrictions inherent in the strategy itself. Thresholds can imply that only the elements or B-splines with the most significant approximation errors, either with respect to distance or number of unresolved points, are selected for refinement. The threshold factor is set globally for each iteration level. In the restricted mesh strategy, thresholds can be used to reduce the number of knot intervals that are refined in the selected B-spline. A refinement strategy can be combined with zero, one or two different types of thresholds as described in Sect. 4.4.

4.3 Modifications to Particularly Restricted Strategies

The restricted mesh strategy may fail to capture elements with significant points outside the tolerance belt. This relates to situations where the corresponding element strips of the B-spline support otherwise have few unresolved points, and refinement in the associated knot interval is not performed. The situation occurs typically at the border of the point set. To ensure refinement in such elements, an element extension to the strategy is applied. Elements with a significant approximation error that are not already split, are identified at each iteration level and trigger an additional refinement related to the full span strategy. However, refinement may be performed in one parameter direction only, even if the main strategy imposes refinement in both parameter directions.

In addition to the restricted mesh strategy, the various minimum span strategies can lead to few new lines being inserted at each iteration level, in particular if the strategy is combined with a threshold. We investigate the effect of combining these strategies with a full span strategy as this is less restrictive in the introduction of new mesh line segments. Line segments added by the full span strategy will most often be longer than line segments added by the minimum span strategy. The two strategies, which are combined, are applied with refinement in the same number of parameter directions. The switch between strategies is performed when the fraction between newly resolved points in the last iteration and the number of new coefficients drops below 0.1.

4.4 Components for Refinement Strategies

Each refinement strategy and each combination of strategy and threshold is given a unique label. Each label is a compositions of letters. The following explains the meaning of such letters:

- Main category::

-

Full span (F), Minimum span (M), Structured mesh (S) or Restricted mesh (R).

- Element extension to restricted mesh strategy:

-

Select significant elements left out in the B-spline refinement (L).

- Minimum span selection criterion::

-

B-spline with largest support overlapping the element (l), B-spline with a support that overlaps the element with most unresolved points (u) or a combination (c).

- Parameter direction::

-

Refine in both (B) or alternating (A) parameter directions at each iteration level.

- Threshold::

-

With respect to distance (td), the number of unresolved points in an element (tn) or the number of unresolved points in a strip of elements corresponding to a B-spline knot interval (tk).

Let maxdist denote the maximum distance between the surface and the point cloud and let avdist be the average distance in out-of-tolerance points globally. Let further nmb be the number of points situated in an element, \(nmb\_out\) the number of unresolved points in the element and avdist2 the average distance in these unresolved points. We can now define the threshold factors as:

- td = :

-

(tolerance + maxdist + avdist)/3. An element or a B-spline is selected for refinement if the maximum distance between the points in the support of this B-spline or element and the surface exceeds the threshold.

- tn = :

-

factor\(\times \) min(wgt) + (1-factor)\(\times \) average(wgt) where wgt = nmb_out + scale\(\times \) avdist2 in each element with unresolved points. Early in the computation nmb_out is large and this term will dominate the formula for wgt. Later the distance in the points will dominate. The scale is introduced to make the expression independent of the distance unit. It is set to one in the examples in this article. The weight scores are used to rank the elements with respect to importance. The factor depends on the range between the minimum and maximum score. If this range is small then the importance of the elements cannot be distinguished and all elements lead to refinement. If the range is large compared to the size of this score, only the highest ranked elements trigger refinement.

- tk = :

-

max(average(nmb_out), 0.01\(\times \) average(nmb)) for all elements. The tk threshold is only applied to the restricted mesh strategy. A knot interval in a selected B-spline is refined only if the number of out-of-tolerance points in the corresponding element strip exceeds this threshold factor.

The tk threshold is very strong as the number of points outside the tolerance in an element strip is compared to the number of points in the entire B-spline support. In general, the threshold types and associated factors are set experimentally. The aim is to get an impression of how different approaches influence the approximation process. All threshold factors are reduced with successive iterations.

4.5 Composition of Refinement Strategies for the Tests

The refinement strategies are applied to a number of test cases. Each strategy is tested with and without threshold and with different choices in the number of parameter directions to refine at each iteration step. Table 1 shows how the strategy labels are composed. Here the type specifies the strategy category as full span, minimum span, structured mesh or reduced mesh. The sub type applies to the minimum span strategy only. If several strategies are applied during the iterations, a second category (type2) is specified. The two strategies can be applied simultaneously as in the case for the element extended restricted mesh strategy, or one after the other. Two strategies or thresholds that are applied to the same iteration level are combined with + in the label, and with/if they are used at different levels. An extensive set of combinations of strategies and threshold types are tested, but there are still possible combinations that are not applied. We believe, however, that the results give a broad basis for drawing conclusions on the suitability of the various strategies.

5 Refinement Study

Five geospatial data sets are used in the study. All data sets have points that are projectable on the xy-plane, but are different in size and properties. The number of points varies from 71 888 to 131 160 220. The regularity distribution varies from completely regular to scanlines where the distances between scans are very large compared to the distances between points in the scans. The smoothness also varies from data set to data set. Two data sets contain known outlier points.

The point clouds are approximated using various combinations of refinement strategies and thresholds. After defining a tensor-product spline surface using the least squares method the approximation is continued with the least squares method applied to an LRB surface before turning to the MBA. The swap is performed after five iteration steps or earlier. The least squares method involves solving a linear equation system. This is done by an iterative method, which may struggle with convergence when the point distribution is very uneven. In such situation, the MBA method is a better choice. Moreover, an early switch is made if the approximation accuracy is not improved.

All data sets, but one, are tested with a tolerance of 0.5 m. The number of iterations varies according to properties of the data set, and to some extent with regard to whether alternating parameter directions are applied. Varying the tolerance and the number of iterations for each data set could bring additional information to the range of refinement strategies, but fall outside the scope of this article. The study is run using bi-degrees (1,1), (2,2) and (3,3).

The information related to each data set includes measurements of execution time. The recorded time includes all aspects of the approximation, but excludes reading and writing to file. The computations are performed on a stationary desktop with 64 GB of DDR4-2666 RAM. It has a i9-9900K CPU with 8 cores and 16 threads, but a single core implementation is used in the experiments. Approximation results are collected at the final stage of the computations and at an intermediate stage. This stage will be defined according to the properties of each data set.

The presentation of each data set follows the same pattern. First the data set is introduced, then the range in execution time and number of surface coefficients is presented, and finally some selected details. A table is also presented, showing results from a number of strategies: strategies that have low execution time; strategies that produce few coefficients; strategies that have an overall good behaviour; strategies that have the highest number of unresolved points at the end of the computations; and some additional strategies to complement the picture or unveil differences between strategies.

For each strategy the following information is given: iteration level at the two stages; the number of points with a distance larger than the tolerance; the corresponding number of coefficients; the maximum distance; and the execution time. Distances are given in meters. Refinement strategies having the best overall results at the intermediate or final stage are highlighted using bold font and also single best results for some criteria is shown in bold font. The worst result for a given criterion is highlighted using italic font. Graphs providing more continuous accuracy information will complement the tabular entries.

Similar tabulated results on the accuracy of all refinement strategies and combinations with threshold types will be made available in a separate report along with more analysis of the results.

5.1 Banc du Four

5.1.1 Data Set

Figure 2a shows a sub sea data set representing sand dunes, (b) zooms in on a detail. The data is obtained from the Banc de Four outside the coast of Brittany. The data set consists of 5 054 827 well distributed points. The elevation range is \([-92.76, -54.84]\) and the standard deviation is 8.08. Sand dunes have a relatively smooth shape and the point cloud does not contain outliers. The approximating surface, Fig. 2c, has an accuracy of 0.2 m and is approximated using refinement strategy RB tk and bi-quadratic splines. The approximation is in this case performed using least squares approximation for two iteration steps before turning to MBA.

5.1.2 Experience Setup and Performance Ranges

We use a tolerance of 0.2 m for this data set. The approximation algorithm is allowed to run for 40 iterations. The intermediate stage is defined as: At least 99.9% of the points, are closer to the surface than the tolerance and the maximum distance between the point cloud and the surface is less than 0.5 m. This implies that at most 5 055 points are further away from the surface than the tolerance.

The data set is well adapted for approximation with LRB surfaces and most combinations of refinement strategies and threshold types converge within the prescribed number of iterations. Exceptions exist, some cases are referenced in Table 3. Moreover, the execution time and final number of coefficients differ. Table 2 presents the minimum and maximum execution time at the end of the computations and the final number of coefficients together with the corresponding refinement strategies. The structured mesh strategy appears both as the strategy with the lowest execution time and the highest number of coefficients. The minimum number of coefficients are found for restricted mesh strategies while a minimum span strategy with threshold, MuB td, has the highest computational time. This strategy also fails to converge completely. In the bi-linear, bi-quadratic and bi-cubic cases respectively 3, 6 and 7 points remain with a larger distance to the surface than 0.5 m.

The execution time and number of coefficients increase with increasing polynomial degree. Some strategies converge well for the bi-quadratic and bi-cubic cases while they fail in the bi-linear case. One example is FA tn, see Table 3.

5.1.3 Selected Refinement Strategies

Table 3 shows the result for a set of selected strategies including the best performing ones. Some strategy combinations provide both a low execution time and a lean final surface. This is the case for FA, in particular when combined with threshold tn for the bi-quadratic and bi-cubic cases. FB has lower computation times, but results in surfaces with more coefficients. Refinement in both parameter directions at every iteration level tends to result in fewer iterations, and to some extent in a lower execution time. An exaggerated introduction of new mesh lines sometimes leads to fast convergence, but for many cases also results in a high number of coefficients. This is the case for the structured mesh strategy SB. Adding a threshold reduces only to a limited extent the number of surface coefficients, see SB td in the bi-linear and bi-cubic cases.

The restricted span strategy RB tk yields good results both at the intermediate and final stage even though it has higher computation time than the fastest strategies and a high number of iterations between the intermediate and final stage. RA tk also performs well at the intermediate stage, but does not converge within the prescribed number of iterations. Restricted mesh strategies tend to perform better at the intermediate than the final stage, in particular when a threshold is involved. However, extending the method with refinement based on elements, e.g., R+LA tk, speeds up the convergence in the latter part of the computation.

Minimal span strategies do not always converge. In particular MuA struggles also without a threshold. Note also that MuB quadratic has unresolved points at the end of the computations without reaching the maximum number of iterations. One or more critical mesh line segments are not inserted due to restrictions in the strategy.

A continuous picture of the performance of the selected best strategies at the intermediate and final stage is given in Figs. 3 and 4. Figure 3 relates the number of coefficients to the number of points within the tolerance for a further reduced set of strategies. RB tk bi-cubic has high approximation efficiency throughout the computations and finishes with relatively few coefficients despite a large increase in number after the stage where almost all points are resolved. However, the execution time is more than the double of FB bi-cubic. RB tk with bi-quadratic splines has a relatively poor performance in the first part of the computation, but reduces the maximum distance quickly after about 40 000 coefficients and has a good approximation efficiency after 12 iterations.

The relative performance of the strategies with respect to approximation efficiency is, with the exception of RB tk bi-cubic, mainly retained throughout the computations. Typically the approximation efficiency is lower for strategies that refine in both parameter directions simultaneously. The increase in the number of coefficients is rapid and the corresponding decrease in the number of unresolved points is not comparatively high. The ranking of the strategies with respect to the maximum distance varies throughout the iterations. For instance the minimum span strategy McB with bi-linear splines increases the maximum distance early in the computation. It continues with a high distance until it drops below the distance of several other strategies in the last part of the computation.

Minimal span strategy McA tn with bi-quadratic splines has the best performance throughout the computation. The maximum distance is constant in the lower part of the group and the approximation efficiency lies in the group with highest efficiency if RB tk bi-cubic is kept out. Furthermore, it finishes well within the allowed number of iterations and with an acceptable computation time, see Table 3.

The two bi-cubic strategies in Fig. 5 can be found at both ends of the spectrum. The strategies are both of the reduced mesh type, but differ with respect to the number of parameter directions to refine at each level and threshold. The latter difference is probably the main source of the difference in behaviour. In general a threshold with respect to the number of out-of-tolerance points will lead to fewer coefficients with regard to the number of points resolved. Figures 6 and 7 compare respectively the evolution of the maximum distance between the surface and the point cloud, and the approximation efficiency for the various full span refinement strategies in the bi-quadratic case.

We see in both Figures that the graphs of the refinement strategies fall into two groups, however, the groups are different. The maximum distance (Fig. 6) is reduced more quickly for strategies that are combined with the distance based threshold type td. The evolution for other strategies diverge at a later stage of the computations. Strategies with alternating parameter directions finish with fewer coefficients than strategies that refine in both parameter directions at every iteration step. Figure 7 shows that strategies with alternating parameter directions have higher approximation efficiency than the others. Within each group, the strategy that is combined with threshold type td has the highest efficiency at each iteration level, but needs also a high number of iterations to converge. This threshold may be beneficial if the computation is stopped at an early stage, but not necessarily if a sufficient number of iterations to reach convergence are allowed.

5.2 Gaustatoppen

5.2.1 Data Set

Figure 8a shows a completely regular point set containing 490 000 points. The corresponding surface in (b) is bi-linear and created with refinement strategy FA and a tolerance of 0.5 m. Least squares approximation is used for the first five iteration steps, then MBA is used. The points are extracted from a sparse raster approximating measurement data from the mountain area Gaustatoppen in Norway. The elevation range is [216.6, 1877.3] measured in meters and the standard deviation is 370.542. The data set covers an area of 6 507 times 6 507 m\(^2\). The data set is regular, but very sparse. It has already been processed and thinned considerably. Every point carries a lot of information, thus an accurate approximation with a lean surface is not feasible. However, the data set can still be used to distinguish between different refinement strategies, and in particular evaluate the strategies with respect to robustness.

5.2.2 Experience Setup and Performance Ranges

The Gaustatoppen terrain is much more demanding than in the previous example. The area has one high mountain peak and a valley with a river. There are also several other peaks and valleys in the neighbourhood. We specify a tolerance of 0.5 m and a maximum number of iterations of 40. The intermediate stage is defined as the iteration level where the maximum distance between the point cloud and the surface is less than two meters, 99% of the points are resolved meaning that the number of points where the tolerance is not satisfied does not exceed 4 900, and the average distance in out-of-tolerance points is less than one meter.

Table 4 presents the ranges in computational time and number of surface coefficients for the bi-linear, bi-quadratic and bi-cubic cases. The range in execution time is wide for this data set. A high number of surface coefficients and some distributions of new mesh line segments cause the time spent in maintaining the data structure of the surface to increase very rapidly during the iteration. The highest execution times occur for MuB td strategy. The minimum execution times are obtained for the full span strategy with alternating parameter directions and the structured mesh strategy with refinement in both directions. The latter strategy occurs also as the one that results in most coefficients, and opposite to the pattern found in the other test cases bi-linear splines lead to the highest number of coefficients. The surfaces with fewest coefficients are obtained for various restricted mesh strategies with threshold.

5.2.3 Selected Refinement Strategies

Table 5 presents selected results for the Gaustatoppen example. Several refinement strategies fail to resolve all points and also to reach the intermediate stage. The required maximum distance of less than two meters is most demanding.

Bi-linear approximation struggles if a threshold is applied. A restraint selection of elements to refine leads to convergence failure also without additional thresholds. In particular, most minimum span element based refinement strategies fail to reach the required accuracy within the specified number of iterations. The full span strategies converge in most cases, the exception is FA td. B-spline based strategies converge more frequently, but also these strategies are unstable, especially when a threshold is applied.

The bi-quadratic and bi-cubic approximation have similar convergence patterns as in the bi-linear case, but less extreme. More strategies reach the intermediate stage. The bi-cubic case results in more coefficients and shows higher execution times than the bi-quadratic case.

The best performing strategies at the final stage refine in alternating parameter directions. FA gets reasonably good numbers for all polynomial degrees and when comparing with FB we see that FA results in fewer coefficients and comparable execution times. The same relation can be seen for RA tk and RB tk degree two and three.

The restricted mesh strategies in general perform well although there are some exceptions in the bi-linear case, e.g. RA td+k. This strategy does well in the bi-quadratic and bi-cubic case although the execution times are not among the best. Introducing the element extension to these strategies does not make a big difference.

The full span strategy FA has good results, in particular at the final stage and combined with threshold tn. The minimum span strategies Mu, and to some degree Mc, struggle with convergence and do not always reach the intermediate stage. The minimum and full span combination Mc/FA tn has the same results as McA tn at the intermediate stage, but ensures convergence at the final stage for bi-quadratic splines. In Fig. 11 we address combined strategies.

The structured mesh strategies lead to many surfaces coefficients and do not have, in contrast to the case for the Banc du Four data set, particularly low computation times. We will, based on the results for the two first data sets, omit further testing of the structured mesh and the minimum span strategies with sub-type u. The first approach produces surfaces with too much data and the second is too restrictive.

Figures 9 and 10 provide more information on the behaviour of the selected best strategies throughout the entire computations. Figure 11 shows the approximation efficiency for some combined refinement strategies. Figure 9 shows that the maximum distance decreases steadily for a while before it for most strategies starts to oscillate. The oscillations are strongest in the bi-linear case and least profound for bi-cubic splines. It is no correspondence between lack of oscillation and few coefficients at the final stage.

The maximum distance is, over most of the computations, lowest with respect to the number of coefficients for RA tk bi-quadratic. This strategy also has the highest approximation efficiency, see Fig. 10. The best quadratic strategies have the least overall number of coefficients in the final surfaces and the highest approximation efficiency. RB tk bi-cubic has a steep descent in approximation efficiency, but ends up at the same level as several of the other strategies and the resulting number of coefficients (261 462) is quite competitive compared to other bi-cubic strategies.

Figure 11 compares the approximation efficiency of some minimum span strategies with the corresponding combined strategy for the middle and last part of the computation. We see that RA tk quadratic has the best efficiency. This strategy can also be found in Fig. 10 and can thus support a comparison between the two graphs along with the line marking an approximation efficiency of 2.2. R+LA tk finishes second best, but has a lower efficiency in some part of the iterations. McA tn does not converge completely alone, but does so when combined with a full span strategy. The B-spline based strategies covered here have better execution times than the minimum span strategies.

5.3 Scan Lines

5.3.1 Data Set

Figure 12a shows a small data set of 71 888 points. The data set is obtained from the English channel and the points are organized in scan lines with varying distances. A detail can be seen in (b). The point set contains a number of outlier points situated off the scan lines. These are inconsistent with the general trend of the data. The regular points represent a smooth part of the sea bed. The data set covers about 23 km\(^2\), the elevation range is \([-47.8, -12.1]\) m and the standard deviation is 2.408. The corresponding surface (c) is created with an accuracy of 0.5 m and is approximating also the outlier points closely. The surface is bi-cubic and the applied refinement strategy is FA. Least squares approximation is performed for two iterations before the algorithm switches to MBA.

5.3.2 Experience Setup and Performance Ranges

The approximation is run with a tolerance of 0.5 m and the maximum number of iterations is 30 and 40 for refinement strategies refining in both parameter directions at every iteration level and with alternating parameter directions, respectively. An intermediate stage is defined when 99% of the points are within the resolution. This corresponds to 719 unresolved points. Due to the outliers, no condition is put on the maximum distance at the intermediate stage.

The current test case contains few points and this is reflected in fast execution times also for the strategies with the poorest performance as can be seen in Table 6. FB is the fastest strategy while minimum span strategies are the slowest and do not always converge within the given number of iterations.

5.3.3 Selected Refinement Strategies

Table 7 presents the results from a set of refinement strategies at the intermediate stage and final stage. Several minimum and full span strategies obtain the same lowest computational time at the intermediate stage for bi-linear splines. The increases in iteration level and execution time from the intermediate to the final stage for these strategies are systematically higher for the minimum span strategies than for full span.

The combination strategy Mc/FA tn bi-linear performs identically to McA tn at the intermediate stage, but converges in contrast to the pure minimum strategy at the final stage. Similar pairs can be found for McB tn and Mc/FB tn in the bi-linear case, and McA tn and Mc/FA tn in both the bi-quadratic and bi-cubic cases. The combined strategy performs in all cases better than the pure one. Combinations including the restricted mesh strategy also perform better than the pure restricted mesh strategy Here the element extension in R+LA tk is better than R/FA tk/n in the bi-cubic case. R/FA tk/n needs more iterations than specified to converge.

The full span strategy yields good results both with and without a threshold and FA is superior to FB when it comes to the number of coefficients in the surface. FB delivers the lowest computational time.

The strategies that perform best at the intermediate stage are not always those that finish with the best result. Figures 13 and 14 give a more continuous picture of the behaviour of the selected strategies. Approximation with bi-cubic splines results in most coefficients, and RA tk and R/FA tk/n have low approximation efficiency in the later part of the iterations. Early in the iterations these two strategies have a good efficiency. Bi-linear approximation gives the least number of coefficients. McA tn bi-linear does not converge in isolation, but changing to FA tn when the convergence speed decreases results in convergence and a moderate number of coefficients. Most strategies maintain a high maximum distance in the start before it starts decreasing and in particular RA bi-linear has a very rapid decrease.

The maximum distance for FA td quadratic decreases fast and the approximation efficiency is high for most of the iterations. In the bi-quadratic case FA td maintains its lead on FA tn throughout the computation despite a high number of iterations, while in the bi-cubic case FA td does not finish with all points resolved, see Table 7. This pattern with a fast decrease in maximum distance, high approximation efficiency and the need for many iterations to converge can be found also for other polynomial degrees and refinement strategies when this threshold is applied.

The scan line data set is, despite the outlier points, approximated with good accuracy at the cost of surface smoothness. An alternative stop criteria for configurations where particular points have a severe lack of convergence should be considered.

5.4 Søre Sunnmøre, Sea Bed

5.4.1 Data Set

The point cloud, Fig. 15a, consists of 11 150 110 points obtained from a 881 100 m\(^2\) area at Søre Sunnmøre in Norway. In (b) the points are scaled with a factor of 10, zoomed in and seen from the right corner to show some features. It is a sub sea data set in shallow waters, the depth ranges from –27.94 to –0.55 m. The point cloud is dense with some holes representing land. It has some outliers, although less extreme than for the scanline data set. At one position, two points with the same xy-coordinates and differing depth with a distance of 2.38 m are given. Thus, the theoretical smallest obtainable maximum distance between the point cloud and the surface is 1.19 m. In contrast to the previous example, the outliers are situated close to other points in the cloud. The point cloud has a standard deviation of 2.52 m. The surface in (c) is bi-quadratic and created with strategy FA td. The approximation algorithm switches from the least squares method to MBA after five iterations.

5.4.2 Experience Setup and Performance Ranges

Despite it being impossible to reach this resolution, we apply a tolerance of 0.5 m and run with a maximum number of iterations of 30 for refinement strategies refining in both parameter directions and 40 for strategies with alternating parameter directions. The intermediate stage is where 99.0% of the points are within the tolerance, meaning that at most 11 150 points have a larger distance to the surface than 0.5 m. In most cases, the iteration runs the maximum allowed number of steps.

Table 8 presents the minimum and maximum execution times and the lowest and highest numbers of coefficients in the final surfaces for the bi-linear, bi-quadratic and bi-cubic cases. We see that the ranges between best and worst are large, both with respect to time and size and for all polynomial degrees. The largest differences can be found internal to each polynomial degree. Bi-linear splines lead in general to lower execution times and less coefficients, while the bi-cubic splines give results at the other end of the scale.

Due to outlier points, none of the refinement strategies converge with all points within the resolution. The minimum number of out-of-tolerance points obtained is 469 for RA and RA td with bi-linear splines. We regard all results with less than 480 points outside the tolerance and a maximum distance of 1.19 m as having an accepted accuracy. The least possible maximum distance is reached by all strategies except bi-quadratic McA td, which finishes with a maximum distance of 1.191 m.

5.4.3 Selected Refinement Strategies

Table 9 presents the results of some strategies at the intermediate and final stage. Strategies with refinement in alternating parameter directions have, for this data set, lower computation time than corresponding strategies that refine in both directions. A complete convergence is not possible in this case, and the extra number of iterations in the case of alternating directions does not account for the additional number of possible knot insertions when refinement is performed in both directions. The least number of coefficients is obtained with alternating parameter directions.

At the final stage, the full span strategy without threshold delivers the best results for all polynomial degrees and evaluation categories. This result does not change when observing the situation at the stage where the computation is regarded as converged. Variations of the full span strategy dominate also at the intermediate stage.

Several minimum span strategies finish with more than 480 points outside the tolerance, Table 9 lists some occurrences. This occurs for strategies with alternating parameter directions in particular. Minimum span strategies and some restricted mesh strategies, both with threshold, are the strategies that have the highest computation time and result in the surfaces with most coefficients.

Restricted mesh refinement strategies behave very similar with and without element extension, see RA tk and R+LA tk in Table 9. The final number of coefficients and the execution time are much higher than for corresponding full span strategies. Combining RA tk with FA tn gives better results for the bi-linear and bi-quadratic cases although it is not compatible with the pure full span strategy.

Most strategies continue several iterations after the stage where the number of unresolved points and the maximum distance are regarded as satisfactory. The number of coefficients is increased, in some cases considerably, during these iterations. The differences are typically largest for the strategies that refine in both parameter directions and in particular for the restricted mesh strategies. For instance the number of coefficients for RB increases by 122 418 during the last 13 iterations in the bi-cubic case. Other examples are bi-quadratic FA tn that reaches 478 unresolved points at level 37 and increases the number of coefficients with 2 379 in the last three iterations, and bi-linear FB that goes from 475 to 473 unresolved points and gets 8 015 in new coefficients the last eight iterations. A more precise criterion for stopping the iterations should be applied to avoid unnecessary data size and execution time.

Figures 16 and 17 give a more continuous picture of the performance of the strategies selected as best at the intermediate and final stage. Bi-cubic splines have the slowest decrease in the maximum distance and most coefficients, and this behaviour can be seen throughout the approximation process. Cases with bi-linear splines are found at the other end of the spectrum, but FA tn bi-quadratic is compatible with the bi-linear cases during most of the process.

The full span strategies give the best results for this data set as can be seen from Table 9. However, some combinations of minimum span strategies and threshold have good scores at the intermediate stage. Combinations of a minimum span strategy and a full span strategy turn in several cases the accuracy result from not accepted to accepted. These combinations are only tested with threshold type tn. Figures 18 and 19 compare the performance of two full span strategies with two minimum span strategies, MlA and McA, and combinations between minimum and full span strategies. The maximum distance decreases most rapidly for FA tn and stays low during the computation. This strategy also has a high approximation efficiency. FA has a better total score at the finishing stage, but for most of the computations the threshold improve the performance. Also the minimum span strategy Mc/FA tn has a higher approximation efficiency during large parts of the computation. Combining this strategy with the full span strategy leads to convergence with an acceptable number of coefficients, see Table 9, but the execution time is higher than for full span strategies also at the intermediate stage.

5.5 Sea Bed, Large Point Cloud

5.5.1 Data Set

The final data set contains 131 160 220 well distributed points acquired from the English Channel. The point cloud is relatively well behaved and represents an area of mostly smooth sea bed, with some areas of reduced smoothness. Approximation with an LRB surface should, thus be well adapted to the properties of the data set. The data set covers about 285 km\(^2\) of shallow waters including a few meters above sea level. The elevation ranges from –32.46 to 3.26 m and the standard deviation is 8.04. The outline of the point cloud, a scaled version of the point cloud to visualize some features and the surface created with strategy R+LA and bi-quadratic splines are shown in Fig. 20a–c. The approximation uses the least squares method for three iterations, then turns to MBA due to lack of convergence while solving the linear equation system from the least squares approximation.

5.5.2 Experience Setup and Performance Ranges

We apply a tolerance of 0.5 m and we let the maximum number of iterations be 60 for alternating directions and 40 if we refine in both parameter directions at each level. An intermediate stage is defined where either 99.9% of the points are within the tolerance (this equals 131 160 points with a larger distance to the surface than 0.5 m), or the maximum distance is less than two meters.

The current point cloud has two complicating factors, the number of data points and the fact that some points stand out as untypical compared to other points in the neighbourhood. The maximum distance is for all combinations of refinement strategies and thresholds quickly reduced to about five meters, then the distance is kept at this level for a number of iterations before it decreases again, the effect can be observed in Fig. 21 for some strategies. Most strategies converge within the specified number of iterations, a few fail to reach the specified accuracy and some strategies have a very slow reduction in the number of points outside the tolerance for the last iterations, a few examples can be found in Table 10.

The point cloud really distinguishes the strategies both with respect to the number of coefficients, the execution time and whether or not the strategy converges.

Table 10 shows the ranges in execution time and number of coefficients in the final surface for the various polynomial degrees applied. The difference between the best and worst result is tremendous especially with regard to time. In the quadratic case the minimum span strategy MlB td results in the surface with the maximum number of coefficients and the highest execution time. In the linear and cubic case, restricted mesh strategies have the poorest performance with regard to time. The lowest computational time is, for both bi-degree two and three, obtained for FB.

5.5.3 Selected Refinement Strategies

Table 11 summarizes the results of some selected strategies. The strategies with the least number of coefficients tend also to have a relatively low computational time. Bi-cubic splines at the intermediate stage deviate from this trend, but the strategy resulting in the least number of coefficients still has an execution time in the lower range also in this case, see Table 10. Strategies that refine in both parameter directions simultaneously tend to have the lowest computational time, while strategies with alternating directions tend to result in the least number of coefficients. The difference between the iteration levels at the intermediate and final stage differs between the strategies, but is in general high. This indicates slow convergence in the latter part of the computation.

Various versions of the full span strategy stand out. Also some versions of the restricted mesh strategy show good results at the intermediate stage, but fail in several cases to converge in the end. Strategies with a very restrictive introduction of new degrees of freedom can yield good results early in the computations, but may fail to insert crucial new mesh line segments in the later stages and finish with a large number of coefficients, high execution times and no convergence. RB td+k is a very restrictive strategy that struggles already at the intermediate stage and finishes with poor results for all polynomial degrees.

Table 11 includes some strategies that perform reasonably well without being amongst the best. In the bi-linear case we compare some full span strategies with alternating parameter directions and different thresholds. Threshold tn performs best, which matches the normal threshold pattern. In the bi-quadratic case we see that the threshold slows down the convergence for FA and the final number of coefficients is only slightly lower. Applying a threshold has little effect for the element extended restricted mesh strategy R+LA, but the version without a threshold performs slightly faster. In the bi-cubic case we can compare the restricted mesh with and without element extension. The difference is small for this data set.

Figure 21 shows the continuous development of the maximum distance between the point cloud and the surface throughout the computation for some selected methods. The distance quickly reduces to about five meters, is maintained at that level for several iterations while the number coefficients increases. Then the distance drops again for most strategies. The exceptions are two restricted mesh strategies, RA tk bi-linear and RB tk bi-quadratic. In the linear case, also the combination of this restricted mesh strategy with a full span strategy is shown and results in few coefficients. The corresponding numbers can be found in Table 10.

The bi-linear surfaces have the least number of coefficients, then come the bi-quadratic surfaces and finally the bi-cubic ones. The difference in the number of coefficients is large even for strategies with the same polynomial degree.

Figure 22 shows the number of coefficients given the number of out-of-tolerance points for the same strategies. Only the last part of the computation is shown and the development is from right to left. The number of coefficients increases rapidly towards the end of the computation while the decrease in the number of points outside the tolerance is slow. The ranking of the strategies is stable until less than 100 000 points (less than 0.1% of the total number of points) are unresolved; then there is a shift. This indicates that whether or not we want to continue the computation to a complete convergence must be taken into account when selecting the refinement strategy.

6 Summary of Results

This section provides a summary of the results for the individual data sets. The full span strategy and the structured mesh strategy are very clearly defined. All other strategies have an element of choice and tuning included in them. Even for the minimum span strategies Ml there might be more than one candidate B-spline of the same size.

Bi-cubic approximation almost always results in more coefficients in the resulting surface and higher execution times than corresponding strategies for lower order polynomial degrees. The pattern regarding the relative performance of the strategies for a given data set is quite similar in the bi-quadratic and the bi-cubic case. The number of surface coefficients and execution time is often low in the bi-linear case, but strategies with restrictions on the introduction of new mesh lines are more vulnerable with respect to convergence for this degree.

Full span strategies have the most consistent behaviour throughout the data sets and tend to have the lowest execution times. The resulting surfaces do not always have the smallest number of coefficients, but the results are always among the best.

Minimum span strategies have a high risk of lack of convergence. The effect is most pronounced for bi-linear splines and reinforced if thresholds are applied. Minimum span strategies perform better in the first part of the computation than for the final iterations. The combined strategy for selecting the B-spline to subdivide performs better than focusing either on the size of the B-spline support or the number of out-of-tolerance points in the support.

The execution time for the structured mesh strategy is low, but the number of coefficients is very high for the tested versions of the strategy, which is omitted for further testing after two data sets.

The restricted mesh strategy does not have a consistent behaviour for all data sets. It often has a high approximation efficiency in the first and middle part of the computation, but may need a back-up strategy to converge completely. Applying a threshold most often reduces the number of surface coefficients, but increases the risk of not converging. All variants of the restricted mesh strategy lead to a high number of coefficients for the Søre Sunnmøre data set.

Strategies with alternating parameter directions tend to lead to fewer coefficients in the final surface. The execution time is often lowest when refinement is performed in both parameter directions simultaneously, but the difference is moderate.

Applying a threshold in general reduces the number of surface coefficients and increases the computation time, but is not always effective. The selected B-splines are not always those that lead to the highest improvement in accuracy when refined. The effect of applying a threshold depends on the type. Thresholding with respect to distance (td), tends to reduce the maximum distance quite rapidly, but has little effect on the number of resolved points. These strategies will often result in more coefficients than strategies without a threshold. This threshold type in general has a more positive effect on the B-spline based strategies than on the element based ones. A threshold with respect to the number of out-of-tolerance points (tn and tk) often leads to good approximation efficiency, that is, it leads to few coefficients with respect to the number of resolved points. The effect on the final surface size varies, the surface can be more lean when applying a threshold, but this is not always the case. It would probably be beneficial if applied thresholds are released more rapidly than the case in this study.

Two partly conflicting rules were formulated in Sect. 3. Both are confirmed. A reduced pace in the introduction of new knots results in leaner surfaces, while a too restrictive approach has the opposite result.

The convergence is typically rapid in the start of the approximation before it slows down drastically towards the end. The cost of resolving the last data points is high both with regard to data size and time consumption.

7 Conclusion

We have performed a study to find out how different strategies for knot selection in adaptive refinement of LRB surfaces perform in the context of scattered data approximation. The tests on the refinement strategies are applied to five different geospatial data sets with a large variety of sizes and properties. It is not the case that one size fits all. The various strategies perform differently on different data sets and the performance of each refinement strategy varies throughout the computations. The purpose of approximating a point cloud with an LRB surface is not always to achieve an accurate approximation. It can also be to represent the smooth part of the data points with a smooth surface in order to analyze the residuals. A very accurate approximation of noisy data is also unattractive. The recommendation of the refinement strategy to select depends on the goals for the approximation.

Bi-cubic splines should only be used if a surface with a continuous second derivative is required. A bi-linear surface is a good choice if no smoothness is needed. However, in general, we recommend the use of bi-quadratic splines.

If the aim is to create very accurate surfaces with respect to the point data, the full span strategy with alternating parameter directions should be applied. A threshold with respect to the number of points outside the given tolerance should probably be considered, but there is room for improvement regarding the actual composition. Thresholding with respect to the maximum distance should not be applied in this case.

If the aim is to get a smallest possible surface after a restricted number of iterations or when applying a stop criterion different from complete convergence, also other strategies are applicable. The restricted mesh strategy performs well for four out of five test cases. Minimum span strategies are more seldom selected as the best choice, but the results for the best versions are good at the intermediate stage and quite consistent with regard to the various data sets for bi-degree two and three. In both cases a switch to the full span strategy should be applied when the convergence slows down. The full span strategy is applicable also in this case.

Refinement in alternating parameter directions is recommended also for early finalization of the computation. Threshold with respect to distance should be used only if the most important aim is to reduce the maximum distance rapidly with less focus on the number of out-of-tolerance points. A threshold with respect to the number of points is recommended.

The applied study is extensive. More combinations are possible, but it is questionable whether or not they will provide more insight. The criteria used to define thresholds and restricted strategies have room for improvement.

The study focuses on the number of surface coefficients, execution time and approximation accuracy. The structure of the polynomial mesh is not considered, although it may be of importance. Structured and restricted mesh strategies give a more orderly mesh than the full and minimum span strategies. Furthermore, an analysis of the level of the accuracy threshold and the best criterion to stop the iteration would complement this study in a good way. A good refinement strategy should not break down when pushed to the limits. However, the most accurate surface does not necessarily give the best representation of the terrain or sea bed as the quality of the point clouds may vary.

References

Bracco, C., Giannelli, C., Vázquez, R.: Refinement algorithms for adaptive isogeometric methods with hierarchical splines. Axioms 7, 43 (2018)

Bressan, A.: Some properties of LR-splines. Comput. Aided Geometr. Design 30, 778–794 (2013)

Bressan, A., Buffa, A., Sangalli, G.: Characterization of analysis-suitable T-splines. Comput. Aided Geometr. Design 39, 17–49 (2015)

Bressan, A., Jüttler, B.: A hierarchical construction of LR meshes in 2D. Comput. Aided Geometr. Design 37, 9–24 (2015)

Buffa, A., Cho, D., Sangalli, G.: Linear independence of the T-spline blending functions associated with some particular T-meshes. Comput. Methods Appl. Mech. Eng. 199, 1437–1445 (2010)

Cottrell, J.A., Hughes, T.J.R., Bazilevs, Y.: Isogeometric Analysis: Toward Integration of CAD and FEA. Wiley, Chichester (2009)

Dokken, T., Pettersen, K.F., Lyche, T.: Polynomial splines over locally refined box-partitions. Comput. Aided Geometr. Design 30, 331–356 (2013)

Dokken, T., Skytt, V., Barrowclough, O.: Trivariate spline representations for computer aided design and additive manufacturing. Comput. Math. Appl. 78, 2168–2182 (2019)

Farin, G.: Curves and Surfaces for CAGD, A Practical Guide, 5th edn. Morgan Kaufmann Publishers (1999)

Forsey, D.R., Bartels, R.H.: Hierarchical B-spline refinement. ACM SIGGRAPH Comput. Graph. 4, 205–212 (1988)

Giannelli, C., Speleers, H., Jüttler, B.: THB-splines: the truncated basis for hierarchical splines. Comput. Aided Geometr. Design 29, 485–498 (2012)

Gu, J., Yu, T., Lich, L.V., Nguyen, T.-T., Bui, T.Q.: Adaptive multi-patch isogeometric analysis based on locally refined B-splines. Comput. Methods Appl. Mech. Eng. 339, 704–738 (2018)

Hennig, P., Kästner, M., Morgenstern, P., Peterseim, D.: Adaptive mesh refinement strategies in isogeometric analysis - a computational comparison. Comput. Methods Appl. Mech. Eng. 316, 424–448 (2017)