Abstract

In their daily practice, physicians with different professional backgrounds often diagnose a patient’s problem collaboratively. In those situations, physicians not only need to be able to diagnose individually, but also need additional collaborative competences such as information sharing and negotiation skills (Liu et al., 2015), which can influence the quality of the diagnostic outcome (Tschan et al., 2009). We introduce the CDR model, a process model for collaborative diagnostic reasoning processes by diagnosticians with different knowledge backgrounds. Building on this model, we develop a simulation in order to assess and facilitate collaborative diagnostic competences among advanced medical students. In the document-based simulation, learners sequentially diagnose five patients by inspecting a health record for symptoms. Then, learners request a radiological diagnostic procedure from a simulated radiologist. By interacting with the simulated radiologist, the learners elicit more evidence for their hypotheses. Finally, learners are asked to integrate all information and suggest a final diagnosis.

Keywords

- Collaborative diagnostic competence

- Agent-based simulation

- Medical education

- Collaboration scripts

- Reflection phases

This chapter’s simulation at a glance

| Domain | Medicine |
|---|---|
| Topic | Collaboratively diagnosing patients suffering from fever of unknown origin |
| Learner’s task | Taking on the role of an internist to identify likely explanations for the patient’s fever and interacting with a simulated radiologist to reduce uncertainty with respect to the assumed explanations |
| Target group | Advanced medical students and early-career physicians |
| Diagnostic mode | Collaborative diagnosing by internist and radiologist |
| Sources of information | Documents (patient’s history, laboratory results, etc.); radiological findings that can be requested from a simulated radiologist |
| Special features | Focus on collaborative diagnostic reasoning; development was based on a model of collaborative diagnostic reasoning (CDR); adaptive and standardized responses from a simulated radiologist (i.e., a computer agent) |

10.1 Introduction

Medical students’ diagnostic competences have been investigated mainly as individual competences (Kiesewetter et al., 2017; Norman, 2005). This is not congruent with the daily practice of physicians, as they collaborate with physicians of the same or another specialization on a regular basis (for a definition of collaborative diagnostic competences, see section Collaborative Diagnostic Competences). For example, physicians regularly discuss patients’ diagnoses and treatment plans in groups. In such so-called consultations, the physicians in charge confer with more specialized physicians to hear their opinions. In roundtables such as tumor boards, several physicians with different specializations discuss and negotiate patient cases to come to an optimal diagnosis or treatment plan for a patient. There is also a need to collaborate with different health care professionals such as nurses (Kiesewetter et al., 2017). Medical educators have recognized the importance of collaborative competences in medical education. For example, the German national competency-based catalogue of learning goals and objectives (NKLM, Nationaler Kompetenz-basierter Lernzielkatalog Medizin) emphasizes the role of physicians as communicators and as members of a team (MFT Medizinischer Fakultätentag der Bundesrepublik Deutschland e. V., 2015). Additionally, several simulation centers at university hospitals such as the one at the University Hospital of LMU Munich have recognized the importance of team trainings (Human simulation center, http://www.human-simulation-center.de/). They offer full-scale trainings of different scenarios with simulated patients, ambulances, and even helicopters. Such simulation-based trainings provide opportunities for practice in a controlled and safe environment. However, full-scale trainings are expensive and time-consuming. 
Physicians and medical students hence do not actively participate in such trainings regularly and instead spend much time observing peers acting in the simulation (Zottmann et al., 2018). In order to learn complex competences and cognitive skills such as collaborative diagnostic competences, it is necessary that learners practice repeatedly, that they focus on subtasks that are particularly difficult to master (i.e., deliberate practice), and that they reflect on their actions and cognition. In doing so, learners develop internal scripts that guide collaborative practices and, if necessary, modify scripts that do not result in understanding or beneficial actions (Fischer et al., 2013). This project addresses collaborative diagnostic competences and means to assess and facilitate them empirically by introducing the model for collaborative diagnostic reasoning (CDR) and developing a simulation in which medical students can repeatedly interact with a simulated physician.

10.2 Collaborative Diagnostic Competences

To facilitate and assess collaborative diagnostic competences in simulations, it is important to understand the underlying processes of collaborative diagnostic reasoning. Contemporary frameworks conceptualize collaborative problem-solving (CPS) as an interplay of cognitive and social skills (Graesser et al., 2018). Cognitive skills refer to problem-solving skills that are also necessary for individual problem-solving. For example, in the ATC21S collaborative problem-solving framework, Hesse et al. (2015) suggest task regulation as well as learning and knowledge building as key cognitive skills for collaborative problem-solving. As we are interested in diagnosing, which we consider a specific form of reasoning, we follow the suggestions presented in the introduction by Fischer et al. (2022) to base cognitive skills on eight diagnostic activities (problem identification, questioning, hypothesis generation, artifact construction, evidence generation, evidence evaluation, drawing conclusions, and communicating and scrutinizing; Fischer et al., 2014; Chernikova et al., 2022) that successful diagnosticians need to be able to perform with high quality. However, we go beyond their definition by additionally describing social skills necessary when diagnosing collaboratively. Different frameworks (e.g., ATC21S, PISA 2017) identify social skills that differ mainly in their granularity. For example, Liu et al. (2015) suggest four social skills (sharing ideas, negotiating ideas, regulating problem-solving, and maintaining communication) and provide a coding scheme to categorize team talk (Hao et al., 2016). Hesse et al. (2015) propose three main skills (perspective-taking, participation, and social regulation) with two to four subskills each. Particularly in knowledge-rich domains such as medicine, both cognitive and social skills are based on the diagnosticians’ professional knowledge base, which consists of conceptual and strategic knowledge (Förtsch et al., 2018). 
Based on CPS frameworks and diagnostic activities, we define collaborative diagnostic competence as the competence to diagnose a patient’s problem by conducting diagnostic activities and by sharing, eliciting, and negotiating evidence and hypotheses and regulating the interaction by recognizing both one’s own and the collaboration partner’s knowledge and skills. The quality of the diagnosis is defined as its accuracy and efficiency (Chernikova et al., 2022).

While there are a number of models describing the structure of collaborative problem-solving skills (i.e., skills and subskills making up this competence), there is a lack of models describing the processes of collaborative problem-solving (i.e., activities and their reciprocal influences). In this chapter, we propose a process model of collaborative diagnostic reasoning (CDR) that is intended to explain the collaborative diagnostic reasoning of two actors (in our example, medical specialists) with respect to a patient case. The model further makes assumptions about the development of collaborative diagnostic reasoning. Thus, the model allows for predictions about the facilitation of collaborative diagnostic reasoning. Below, we describe the CDR model as well as theoretical and empirical findings relevant to it. In addition, we derive empirically testable statements from the model.

10.2.1 CDR Model: Collaborative Diagnostic Reasoning

The CDR model describes a collaborative diagnostic situation in which two diagnosticians with different professional backgrounds collaboratively diagnose patients by generating, evaluating, sharing, eliciting, and negotiating hypotheses and evidence. Although the model is introduced here in a medical context, we assume that it is, in principle, also valid for other contexts, such as collaborative diagnostic reasoning among teachers. Likewise, although the model in its basic form is limited to two diagnosticians, we see no reason in principle why it could not be generalized to larger groups.

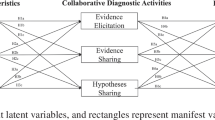

The CDR model (see Fig. 10.1) builds on Klahr and Dunbar’s (1988) scientific discovery as dual search model (SDDS), but goes beyond it by distinguishing between individual and collaborative cognitive processes. Prior attempts to transfer the SDDS to a collaborative context by Gijlers and de Jong (2005) cannot replace the CDR model, as the extended SDDS describes the structure of individual and shared knowledge but does not make predictions with respect to individual or collaborative cognitive processes. To describe individual and collaborative cognitive processes, the CDR model builds on the diagnostic activities (generating and evaluating evidence, generating hypotheses, drawing conclusions) and social activities (sharing, eliciting, negotiating, coordinating) described above. We hereafter term these individual and collaborative diagnostic activities. Individual diagnostic activities are conceptualized as the process of coordinating empirical evidence generated by experimenting with hypotheses. Here, we distinguish between a hypotheses space and an evidence space (Klahr & Dunbar, 1988). In the medical context, a diagnostic process is typically triggered by information about the system being diagnosed. The system to be diagnosed is considered to be an external system containing all information about the patient and their social environment that can be considered in the diagnostic process, including, for instance, test results, information about the patient’s lifestyle, and symptoms. The diagnosticians start the individual diagnostic process by generating and evaluating evidence. A piece of evidence is information on a system with the potential to influence the diagnosis of the system’s state by reducing or increasing its likelihood. In the context of medical diagnosing, the evidence typically consists of findings (e.g., laboratory values), enabling conditions (e.g., pre-existing illnesses of family members), and patient symptoms (e.g., stomachache). 
Evidence is generated by interpreting patient information, sorting out the relevant from the irrelevant information, and generating new information, for example, by conducting a medical test (Fischer et al., 2014). For instance, a radiologist conducts a radiologic test or an internist identifies a patient’s lipase laboratory value as abnormally high. Ideally, the generated evidence is evaluated with respect to its validity (e.g., what are the sensitivity and specificity of the test? Are there technical reasons for a false positive value for this test?). Evidence is kept in the evidence space. During the generation and evaluation phases, we assume that participants generate hypotheses and draw conclusions based on the collected evidence (Fischer et al., 2014). A hypothesis is a statement about a possible state of the system. The generated hypotheses are stored in the hypotheses space and tested in the evidence space by evaluating whether the evidence matches the predictions derived from the hypotheses (Klahr & Dunbar, 1988). By testing hypotheses, diagnosticians draw conclusions which are also stored in the hypotheses space. In our example, the internist who found that a patient has an increased lipase value could generate the hypothesis that the patient suffers from pancreatitis. If the internist finds that the patient additionally suffers from upper abdominal pain (evidence generation), the internist may draw the conclusion that these pieces of evidence speak in favor of the proposed hypothesis.
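The idea that a piece of evidence raises or lowers the likelihood of a hypothesis, and that its weight depends on the sensitivity and specificity of the test, can be illustrated with a Bayesian update. The following is a minimal sketch under stated assumptions: the function name `posterior` and all numbers are hypothetical and chosen for illustration only; they are not values from the chapter or from clinical literature, and the CDR model itself does not prescribe a Bayesian formalization.

```python
def posterior(prior: float, sensitivity: float, specificity: float,
              positive: bool = True) -> float:
    """Probability of the hypothesis after one test result (Bayes' rule).

    prior        -- probability of the hypothesis before the test
    sensitivity  -- P(test positive | hypothesis true)
    specificity  -- P(test negative | hypothesis false)
    positive     -- whether the observed test result was positive
    """
    if positive:
        true_pos = sensitivity * prior
        false_pos = (1.0 - specificity) * (1.0 - prior)
        return true_pos / (true_pos + false_pos)
    false_neg = (1.0 - sensitivity) * prior
    true_neg = specificity * (1.0 - prior)
    return false_neg / (false_neg + true_neg)


# Illustrative (made-up) numbers: a finding from a fairly sensitive,
# moderately specific test applied to a hypothesis held with prior 0.2.
prior = 0.2
after_positive = posterior(prior, sensitivity=0.9, specificity=0.8, positive=True)
after_negative = posterior(prior, sensitivity=0.9, specificity=0.8, positive=False)
print(f"prior={prior:.2f}, positive={after_positive:.2f}, negative={after_negative:.2f}")
```

In this sketch, a positive result moves the hypothesis above its prior and a negative result moves it below, which mirrors the definition of evidence as information that increases or reduces the likelihood of a system state.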

In collaborative diagnostic situations, physicians additionally engage in collaborative diagnostic activities. In such situations, there is a need to coordinate the evidence and hypotheses space of not one but two professionals. For effective collaboration, it is necessary that the collaborators construct an at least partially shared mental representation of the diagnostic situation (Rochelle & Teasley, 1995). Therefore, we assume that in collaborative diagnostic reasoning, there are two further cognitive spaces in addition to the individual diagnostic spaces: a shared evidence space and a shared hypotheses space. These spaces consist of evidence and hypotheses that are shared among the diagnosticians. All individual diagnostic processes as well as their outcomes (evidence, hypotheses, and conclusions) can become part of one of the shared diagnostic spaces by engaging in the collaborative activities of sharing and elicitation, negotiation, and coordination (Liu et al., 2015; Hesse et al., 2015; Zehner et al., 2019; Mo, 2017). In the literature, the need to share and process information on a group level has been stressed as key to constructing a shared mental representation and successfully collaborating (Hesse et al., 2015; Meier et al., 2007; Larson et al., 1998). The pooling of information allows collaborators to use team members as a resource. Information (i.e., evidence, hypotheses, and conclusions) can be pooled either by eliciting information from the other team member or by externalizing one’s own knowledge (Fischer & Mandl, 2003). Negotiating the meaning of evidence and hypotheses is also key for successful diagnosing. The successful negotiation of evidence and hypotheses by two or more diagnosticians can prevent physicians from selecting and interpreting evidence in a way that supports their own beliefs (confirmation bias; Nickerson, 1988; Patel et al., 2002). 
Concerning the coordination of collaborative diagnostic reasoning, little research has been conducted. However, findings in the context of collaborative learning underline the importance of coordinating goals, motivation, emotions, and strategies in order to successfully solve problems collaboratively (Järvelä & Hadwin, 2013). Finally, before integrating shared evidence and shared hypotheses in the individual reasoning processes, we expect that diagnosticians evaluate the evidence and hypotheses with respect to their validity. Based on shared evidence and hypotheses, the diagnosticians optimally conclude with a diagnosis. In this context, a diagnosis is a decision about the most likely current state of a system that is based on data and allows and/or demands concrete diagnostic and/or therapeutic decisions.
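The relationship between individual and shared spaces described above can be sketched as a small data structure. This is only an illustration under stated assumptions: the names `Spaces`, `share`, `elicit`, and `integrate` are hypothetical, items are reduced to plain strings, and negotiation and coordination are left out. It is not an implementation used in the simulation.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Spaces:
    """An evidence space and a hypotheses space (individual or shared)."""
    evidence: set[str] = field(default_factory=set)
    hypotheses: set[str] = field(default_factory=set)


def share(own: Spaces, shared: Spaces, evidence=(), hypotheses=()) -> None:
    """Externalize items the sender actually holds into the shared spaces."""
    shared.evidence |= own.evidence & set(evidence)
    shared.hypotheses |= own.hypotheses & set(hypotheses)


def elicit(partner: Spaces, shared: Spaces, item: str) -> bool:
    """Ask the partner for evidence; it becomes shared only if the partner holds it."""
    if item in partner.evidence:
        shared.evidence.add(item)
        return True
    return False


def integrate(own: Spaces, shared: Spaces, is_valid=lambda item: True) -> None:
    """Copy shared items into the individual spaces after a validity check.

    The default check accepts everything; a real evaluation of validity
    is assumed to happen inside `is_valid`.
    """
    own.evidence |= {e for e in shared.evidence if is_valid(e)}
    own.hypotheses |= {h for h in shared.hypotheses if is_valid(h)}


# Example based on the pancreatitis illustration in the text; the
# radiologist's item is a placeholder, not a finding from the chapter.
internist = Spaces({"elevated lipase", "upper abdominal pain"}, {"pancreatitis"})
radiologist = Spaces({"radiological finding"})
shared = Spaces()

share(internist, shared, evidence=["elevated lipase"], hypotheses=["pancreatitis"])
elicit(radiologist, shared, "radiological finding")
integrate(internist, shared)
print(sorted(shared.evidence), sorted(internist.evidence))
```

Note that unshared items (here, the upper abdominal pain) stay in the individual space only, which is the situation the literature on information pooling describes as problematic.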

The presented model not only describes the collaborative diagnostic process between two diagnosticians, but also makes assumptions about factors influencing the collaborative and individual processes. Below, four factors are introduced, namely the professional knowledge base, professional collaboration knowledge, general cognitive skills, and general social skills. We acknowledge that the proposed factors are not exhaustive and that other variables influencing the outcome of (collaborative) diagnosing such as interest (Rotgans & Schmidt, 2014) or personality traits (Pellegrino & Hilton, 2013; Mohammed & Angell, 2003) are missing. Nevertheless, the CDR model is focused on influential factors that directly affect cognitive processes and can be altered by training.

Professional Knowledge Base

Professional knowledge, which refers to knowledge about concepts as well as knowledge about strategies and procedures, is important both for competence development (VanLehn, 1996) and for problem-solving (Schmidmaier et al., 2013). Whereas conceptual knowledge refers to knowledge about terms and their relationships (e.g., What are the contraindications for contrast media? What is the physical principle of computed tomography? What is the definition of community-acquired pneumonia?), strategic knowledge refers to knowledge about appropriate strategies and procedures in specific situations (e.g., How can pneumonia be proven radiologically? How can pulmonary embolism be ruled out? How is triple contrast media generated?; Förtsch et al., 2018). Both types of knowledge form the basis for each diagnostician to generate meaningful evidence, correctly evaluate evidence, correctly relate evidence to hypotheses, and draw conclusions. With increasing experience, strategic and conceptual knowledge become encapsulated, resulting in a higher diagnostic efficiency compared to novices (encapsulation effect, Schmidt & Boshuizen, 1992).

Professional Collaboration Knowledge

Another aspect that has been stressed to influence interaction among problem-solvers is meta-knowledge about the collaboration partner. Meta-knowledge is knowledge about collaboration partners and their disciplinary background, including their goals, measures, and typical priorities. Meta-knowledge is often a result of joint phases in formal education and joint collaborative practices by professionals with different backgrounds (e.g., internists and radiologists). Having a joint basis of professional knowledge is certainly an advantage for collaboration among medical specialists: Findings from the context of collaborative learning suggest that problem-solvers with meta-knowledge about their collaboration partners begin sharing relevant information earlier (Engelmann & Hesse, 2011) and learn more from each other compared to collaboration partners without such meta-knowledge (Kozlov & Große, 2016). However, the literature also suggests that only having meta-knowledge is not sufficient for successful collaboration (Schnaubert & Bodemer, 2019; Dehler et al., 2011; Engelmann & Hesse, 2011). In the script theory of guidance (Fischer et al., 2013), the authors argue that collaborative practices are dynamically shaped by internal collaboration scripts. Internal collaboration scripts consist of four hierarchically ordered types of components (play, scene, scriptlet, and role) that dynamically configure the internal collaboration script to guide the collaborative process. The configuration of the internal collaboration script is influenced by collaboration partners’ goals and perceived situational characteristics (Fischer et al., 2013). Hence, whether and how diagnosticians interact with each other depends on their internal collaboration scripts, which are shaped by their prior experience in similar collaborative practices. We consider both functional internal collaboration scripts and meta-knowledge to be important subcomponents of professional collaboration knowledge.

General Cognitive and Social Skills

There is much less focus in research on the role of general knowledge and skills that might be applicable across several domains (e.g., complex problem-solving; Hetmanek et al., 2018; Wüstenberg et al., 2012). The evidence seems clear that general cognitive knowledge and skills do not play a major role for the quality of diagnostic activities and the quality of diagnoses (e.g., Norman, 2005). However, their role for early phases of skill development has not been studied systematically in either medical education or research on collaborative problem-solving in knowledge-rich domains (Kiesewetter et al., 2016). It is likely that general cognitive abilities play a certain role in learning and problem-solving, at least in early phases, where collaborators do not have much specific knowledge and experience (Hetmanek et al., 2018). In addition, more general social skills that individuals develop beginning in early childhood, like participation, theory of mind, and perspective-taking (Osterhaus et al., 2016, 2017), might play a role during collaborative diagnostic reasoning. Especially in early phases, when more specific meta-knowledge and script components are not accessible or less functional to medical students, it is likely that they try to apply more generic social skills (Fischer et al., 2013).

10.2.2 The Development of Collaborative Diagnostic Reasoning

In the preceding part of this section, the CDR model was used as a descriptive and explanatory model of collaborative diagnostic reasoning and its underlying competences. However, the CDR model also entails assumptions about how the underlying competences develop. These developmental propositions are: (1) The quality of collaborative diagnostic activities and the collaborative diagnoses further improve through multiple encounters with understanding, engaging in, and reflecting upon collaborative diagnostic situations (Fischer et al., 2013). (2) Conceptual and strategic knowledge are more closely associated in intermediates and experts as compared to novices, and this is associated with higher diagnostic efficiency in experts and intermediates as compared to novices (encapsulation effect, Schmidt & Boshuizen, 1992). (3) Professional collaboration knowledge becomes more differentiated through experience with reflection on collaborative diagnostic situations entailing feedback. (4) The influence of general abilities, knowledge, and skills on the quality of diagnostic activities and the quality of the diagnosis is high when professional knowledge on collaboration is low. (5) As professional knowledge becomes increasingly available, the influence of general cognitive skills on diagnostic activities decreases. These developmental propositions are not represented in Fig. 10.1.

10.3 Developing a Simulation to Investigate Collaborative Diagnostic Competences and Their Facilitation

In what follows, we describe the development of a simulation aimed first and foremost at enabling the empirical investigation of collaborative diagnostic competences and their facilitation, building on the CDR model introduced in the preceding section.

Specifying a Medical Context

Most literature on collaborative diagnostic reasoning focuses on the sharing of information. As in other contexts (e.g., political caucuses, Stasser & Titus, 1985), shared information (i.e., information that is known to all team members) is more likely to be considered in clinical decision-making processes than unshared information. This often leads to inaccurate diagnoses and/or treatment decisions (Tschan et al., 2009; Larson et al., 1998). Tschan et al. (2009) call the unsuccessful exchange of information an illusory transactive memory system, because team members act as if the information exchange was functioning well. Information exchange seems to be particularly impaired during times of high workload (Mackintosh et al., 2009). Kripalani et al. (2007) conducted a systematic review of the quality of information exchange between hospital-based and primary care physicians. The authors rated the general information exchange as rather poor. In most of the analyzed articles, important information such as diagnostic test results, discharge medications, treatment course data, or follow-up plans was reported to be missing. Also, health care professionals interviewed by Suter et al. (2009) agreed that information was often not conveyed appropriately for the intended audience. Nevertheless, it seems that it is the relevance and quality of the shared information rather than the quantity that affects the quality of the diagnosis. There is no evidence that the quality of diagnoses increases when more information is shared among team members (Kiesewetter et al., 2017; Tschan et al., 2009). To simulate collaborative diagnostic competences, we first chose a collaborative situation between internists and radiologists as the simulation context. This decision was made based on practitioners’ experiences that these two professions interact regularly in the hospital. 
Afterwards, we conducted interviews with seven practitioners from both disciplines to identify a specific situation that is considered problematic. The interviews revealed that the main problem is the suboptimal quality of requests from clinicians for radiological imaging (i.e., elicitation of new evidence from a collaboration partner). A main issue here is imprecise justifications for the examination (e.g., missing relevant patient information) and a lack of clustering of patient information (i.e., low-quality sharing of evidence and hypotheses). These findings are in line with prior empirical findings on sharing skills and suggest that being able to conduct collaborative activities, in particular sharing and eliciting evidence and hypotheses, is particularly important in this specific situation (Davies et al., 2018). Therefore, we decided to focus on the collaborative diagnostic activities of sharing and elicitation. Additionally, we analyzed and compared different learning platforms in order to identify the most suitable platform. We chose the learning platform CASUS (https://www.instruct.eu/) as this platform is suitable for case-based learning and medical students at many universities across the globe are familiar with it.

Design and Development

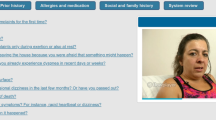

There are different ways to assess and simulate collaborative processes. Traditionally, a team or group of learners is confronted with a problem or patient, respectively (Hesse et al., 2015; Rummel & Spada, 2005). However, there are several issues that go along with this type of simulation. A main issue is that in such situations, the collaboration is influenced by variables such as personality, group constellation, or motivation (Graesser et al., 2018). This makes it more difficult to assess collaborative competences, as the assessments might be confounded. With respect to facilitating collaborative competences, simulations allow learners to deliberately practice subtasks repeatedly in order to improve the quality of specific activities. This is hardly possible during collaboration with real collaboration partners. A more recent approach that might provide a remedy is to use simulated agents (i.e., computer-simulated persons) as collaboration partners (e.g., Mo, 2017). The use of computer agents addresses the aforementioned issues, as the collaboration partners are standardized and hence, the assessment is not affected by variables such as group constellation, personality, or motivation. In this form, the collaboration is of course less flexible (e.g., less conditional branching) but easier to evaluate. Furthermore, a simulated collaboration partner is patient with respect to errors and repetitions and can easily be adjusted to the learners’ needs to increase training effects. After we had defined the context of the simulation and decided to use a simulated agent, we developed a schematic representation of the diagnostic situation based on the conducted interviews and further discussions with experts from internal medicine and radiology. The schematic representation (see Fig. 10.2) constrained the storyboard of the simulation and included information about the simulation procedure and possibilities to interact with the simulated radiologist in different ways. 
The schema was discussed and refined in discussions with experts from medicine, psychology, and software development. During this process, we further decided to construct a document-based simulation, since routine interactions between internists and radiologists in clinical practice are to a large extent document-based. Moreover, this format can also be implemented easily and economically for the training of medical students.

Evaluation

The simulation was evaluated twice during its development: once by student participants and once by experts. After completion, a validation study was conducted, which is sketched out below. Firstly, a patient case was developed by two physicians, implemented in the learning platform, and presented to eight medical students in a pilot study. The pilot study aimed at evaluating the simulation’s user experience (UEQ, Laugwitz et al., 2008). The results indicated high values on the subscales attractiveness (overall impression of the simulation), perspicuity (simplicity of using the simulation), stimulation (how motivating the simulation is perceived to be), and novelty (degree of innovation), but rather low values on the dependability subscale (perception of control over the simulation). After some adjustments to increase the perceived control, nine additional patient cases were developed by a team consisting of a general practitioner, internists, and radiologists. To do so, complex patient health records and findings from different radiological tests were selected and designed. All health records and radiological findings were structured identically (see Case Part 1: Health record). The radiological findings each consisted of a description of the applied radiological technique, a description of the radiological findings, and an interpretation of these findings. Secondly, to ensure that the cases and their diagnoses were reasonably authentic, all fictitious patient cases were discussed and revised in an expert workshop by experienced practitioners from internal medicine and radiology.

10.4 The Simulation

The developed simulation consists of the familiarization and the fiction contract as well as three sections per patient case, each described in more detail below (see Fig. 10.2). The medical students are first familiarized with the diagnostic situation represented by the simulation by watching a short video. Each patient case is then structured in three parts: medical students first generate, evaluate, and integrate the evidence available in the health records; then they interact with the simulated radiologist to elicit additional evidence; and finally document the diagnostic outcome in the health record.

Familiarization and Fiction Contract

At the beginning of the simulation, all participants are introduced to the technical details of the simulation and the diagnostic situation by watching a short video clip. By diagnostic situation, we mean the real-world situation that is represented in the simulation and to which we expect the learners to transfer their knowledge and skills. The learners are informed that they are playing the role of an internist-in-training in a medium-sized hospital and that they will be diagnosing patients’ diseases in collaboration with a radiologist. The learners are told that they have seen the patients in the morning and are now revisiting their health records before proceeding with the further diagnostic process. The video clip also clarifies our expectations. For example, learners are reminded that radiological tests are costly, time-consuming, and invasive for the patient and that they should try to work as efficiently as possible. In addition, the video clip familiarizes participants with the limitations of the simulation. For instance, the radiologist can only answer predefined questions about the radiologic findings (e.g., meaning of specific radiologic terms). We note this explicitly during the introduction in order to avoid confusion.

Case Part 1: Health Record

Each patient case starts with a document-based health record containing an introduction to the patient, information from the history-taking, physical examination, laboratory findings, previous diseases, and medication. All cases are structurally identical with respect to the information presented. Learners can take notes while reading the health records and are not interrupted in this first phase of diagnosing. As soon as the learners decide they have collected sufficient evidence to consult with the radiologist, they click the button “request a radiological test.” The learners can return to the patient’s health record at any time. Log files provide information about the time spent on evidence generation.
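Because learners can return to the health record at any time, the time spent on evidence generation has to be summed over several visits. The following sketch shows one way such a measure could be derived from log data. The log format (a time-ordered list of `(timestamp_in_seconds, view_name)` entries), the view names, and the function `time_in_view` are assumptions made for illustration; the chapter does not describe the actual log structure of the learning platform.

```python
def time_in_view(events, view):
    """Sum the seconds spent in `view`.

    `events` is a time-ordered list of (timestamp_seconds, view_name)
    tuples; each entry marks the moment the learner entered a view.
    The final entry only closes the previous visit.
    """
    total = 0.0
    for (start, name), (end, _next_name) in zip(events, events[1:]):
        if name == view:
            total += end - start
    return total


# Hypothetical log: the learner reads the record, opens the request
# form, returns to the record once, and then reads the results.
log = [
    (0, "health_record"),
    (120, "request_form"),
    (150, "health_record"),
    (200, "results"),
    (260, "end"),
]
record_seconds = time_in_view(log, "health_record")
results_seconds = time_in_view(log, "results")
```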

Case Part 2: Collaborative Diagnostic Activities

Learners in the role of an internist and the simulated radiologist collaborate via a request form and test results. They first elicit the generation of evidence by choosing a radiological test from 42 different combinations of methods and body regions (e.g., cranial CT, chest MRI) and then share relevant evidence (i.e., symptoms and findings) and/or hypotheses (i.e., differential diagnoses). In this way, the learners justify the request and give the radiologist the information needed to properly conduct the test and interpret its results. Specifically, the participants receive a form on which they can tick off symptoms and findings from the health record as well as type in possible diagnoses. The request form allows us to directly measure the quantity and quality of the elicitation of evidence, the sharing of evidence, and the sharing of hypotheses. Only learners who engage in good collaborative diagnostic activities (i.e., appropriately elicit and share evidence and hypotheses) receive the results of the radiological test. Otherwise, the radiologist refuses to conduct the radiological examination and asks the learner to revise the request form. The result of the radiological test consists of a description of the radiological findings, a short interpretation of the radiological findings, and, only if the learner shared hypotheses, an evaluation of those hypotheses by the simulated radiologist. As with the health record, we measure the time learners spend evaluating the new radiological evidence. After having read, evaluated, and integrated the results, learners can ask the radiologist further questions about the radiological findings by clicking on the respective terms, or they can request additional examinations.
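The gating step described above (the radiologist conducts the test only if the request is appropriate) can be illustrated with a minimal sketch. The chapter does not state the actual acceptance criteria, so the `CaseKey` fields, the threshold `min_relevant_evidence`, and all names below are hypothetical and serve only to make the logic concrete.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class RequestForm:
    """A learner's request to the simulated radiologist (hypothetical structure)."""
    test: str                                            # e.g. "chest MRI"
    shared_evidence: set[str] = field(default_factory=set)
    shared_hypotheses: set[str] = field(default_factory=set)  # optional in the form


@dataclass
class CaseKey:
    """Assumed per-case criteria; the real criteria are not given in the text."""
    appropriate_tests: set[str]
    relevant_evidence: set[str]
    min_relevant_evidence: int = 1


def review_request(form: RequestForm, key: CaseKey) -> tuple[bool, list[str]]:
    """Return (accepted, problems). An empty problem list means the test is run."""
    problems: list[str] = []
    if form.test not in key.appropriate_tests:
        problems.append("requested test is not appropriate for this case")
    n_relevant = len(form.shared_evidence & key.relevant_evidence)
    if n_relevant < key.min_relevant_evidence:
        problems.append("too little relevant evidence shared")
    return (not problems, problems)


def result_sections(form: RequestForm) -> list[str]:
    """Sections of the test result; hypotheses are evaluated only if shared."""
    sections = ["findings", "interpretation"]
    if form.shared_hypotheses:
        sections.append("evaluation of shared hypotheses")
    return sections
```

A refused request returns the list of problems, which corresponds to the radiologist asking the learner to revise the form. Hypotheses are deliberately not required for acceptance in this sketch, because the text describes sharing evidence "and/or" hypotheses.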

Case Part 3: Diagnostic Outcome

To solve the patient case, participants are asked to document the results of their individual and collaborative diagnostic activities in the patient’s health record. To do so, they are asked to draw conclusions by suggesting a final diagnosis, backing it up with justifying evidence, suggesting further important differential diagnoses as well as the most important next step in the diagnostic process or treatment. This documentation serves as a basis for assessing the diagnostic quality: Based on the final diagnoses and the provided differential diagnoses, we assess diagnostic accuracy. Diagnostic efficiency is assessed by weighing the diagnostic accuracy against the time needed to solve the patient case (i.e., the more time is needed for an accurate diagnosis, the lower the diagnostic efficiency). After each patient case, learners receive a short sample solution including the most likely diagnosis, the most important findings, as well as differential diagnoses.
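As a rough illustration of how accuracy can be weighed against time, the following sketch computes efficiency as accuracy per minute. The chapter does not give the exact formula used in the study, so the ratio, the 0 to 1 accuracy scale, and the function name are assumptions.

```python
def diagnostic_efficiency(accuracy: float, minutes: float) -> float:
    """Accuracy per minute of case time (assumed operationalization).

    accuracy: score between 0 (wrong) and 1 (fully correct), an assumed scale.
    minutes:  time needed to solve the patient case, must be positive.
    """
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must lie between 0 and 1")
    if minutes <= 0:
        raise ValueError("minutes must be positive")
    return accuracy / minutes


# Two equally accurate learners: the faster one is the more efficient one.
fast = diagnostic_efficiency(1.0, 10)
slow = diagnostic_efficiency(1.0, 20)
```

Under this operationalization, an inaccurate diagnosis yields an efficiency of zero regardless of speed, which matches the idea that time only counts in relation to an accurate diagnosis.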

In sum, medical students are supposed to first generate, evaluate, and integrate evidence from a patient health record to come up with a hypothesis about the patient’s problem and then elicit the generation of new evidence by sharing relevant evidence and hypotheses with the simulated radiologist. The newly generated evidence is then integrated with prior evidence to make a final diagnosis. Thus, the simulation allows us to assess and facilitate both collaborative diagnostic activities (i.e., the elicitation and sharing of evidence and hypotheses) and diagnostic quality (i.e., diagnostic accuracy of the final diagnosis and diagnostic efficiency).

10.5 Validation of the Simulation

Before we can use the simulation to validate the CDR model and assess and facilitate collaborative diagnostic competences, we need to test its external validity. We therefore conducted a validation study (Radkowitsch et al., 2020a). Validation is the process of collecting and evaluating validity evidence with the goal of judging the appropriateness of interpretations of the assessment results (Kane, 2006). We consider the following aspects as evidence for satisfactory validity. Firstly, practitioners in the field rate the simulation and simulated collaboration as authentic (Shavelson, 2012). Secondly, medical students and medical practitioners with high prior knowledge show better collaborative diagnostic activities, higher diagnostic accuracy, higher diagnostic efficiency, and lower intrinsic cognitive load compared to medical students with low prior knowledge (VanLehn, 1996; Sweller, 1994).

We conducted a quasi-experiment in which N = 98 medical students with two different levels of prior knowledge as well as internists with at least 3 years of clinical working experience participated. Each participant worked on five patient cases. Experienced internists rated the authenticity of the simulation overall as well as with respect to the collaborative diagnostic process after the second and fifth patient cases. Additionally, we assessed the quality of all participants’ collaborative diagnostic activities (sharing and elicitation of evidence and hypotheses), their diagnostic accuracy and efficiency, and their intrinsic cognitive load.

The results of the study show that the simulation seems to be a sufficiently valid representation of the chosen situation. Internists rated the simulation and collaborative diagnostic processes as rather authentic. Additionally, internists and advanced medical students outperformed medical students with fewer semesters of study with respect to diagnostic efficiency, displayed better sharing and elicitation activities, and reported lower intrinsic cognitive load. Only with respect to diagnostic accuracy did performance not differ across conditions. The reasons for this are probably ceiling effects due to very high solution rates for three of the patient cases—the cases were too easy under the given conditions, so that participants were able to try out a lot of different pathways, repeat the same steps if they wanted to, and share and elicit a multitude of findings and hypotheses with or from the radiologist. However, the diagnostic efficiency clearly demonstrated that experts are better able to solve patient cases within the simulation.

We conclude from our validation study that the evidence for the validity of our simulation is sufficient: we found the expected differences between prior knowledge groups on the most important measures (diagnostic efficiency, sharing and elicitation, intrinsic cognitive load), and the relatively high authenticity rating indicates that the simulation accurately represents collaboration between internists and radiologists. The rather low case difficulty has been increased for upcoming studies.

10.6 Further Questions for Research

Since we found validity evidence for the simulation, our goals for further research are twofold: validating the proposed CDR model and facilitating medical students’ collaborative diagnostic competences using scaffolds.

The first goal is to validate the proposed CDR model. As the developed simulation can be considered sufficiently valid, it allows us to test and determine the influence of general cognitive and social skills, professional conceptual and strategic knowledge, as well as professional knowledge regarding collaboration on individual and collaborative diagnostic activities as well as the quality of diagnoses. To validate the CDR model, we propose the following testable predictions based on the description above that will be addressed in upcoming studies:

(1) The quality of evidence generation and evidence evaluation depends on strategic and conceptual knowledge and general cognitive skills.
(2) The quality of hypothesis generation and drawing conclusions depends on strategic and conceptual knowledge, general cognitive skills, and the quality of the evidence in the evidence space.
(3) The quality of sharing, elicitation, negotiation, and coordination depends on professional collaboration knowledge and general social skills.
(4) The quality of the evidence in the evidence space depends on the quality of evidence generation and evidence evaluation, the quality of the evidence in the shared evidence space, general cognitive skills, and the professional knowledge base.
(5) The quality of the hypotheses in the hypotheses space depends on the quality of hypothesis generation and drawing conclusions, the quality of the hypotheses in the shared hypotheses space, general cognitive skills, and the professional knowledge base.
(6) The accuracy of the diagnosis depends on the quality of the evidence in the evidence space and the quality of the hypotheses in the hypotheses space.
(7) The quality of shared evidence in the shared evidence space is influenced by the quality of evidence in the individual evidence spaces, the quality of the collaborative diagnostic activities, professional collaboration knowledge, and general social skills.
(8) The quality of shared hypotheses in the shared hypotheses space is influenced by the quality of hypotheses in the individual hypotheses spaces, the quality of the collaborative diagnostic activities, professional collaboration knowledge, and general social skills.
(9) The influence of professional knowledge on individual and collaborative diagnostic activities is greater than the influence of general cognitive and social skills.
(10) The proposed relations are found in different domains in which diagnosticians with different knowledge backgrounds diagnose collaboratively (e.g., teaching).
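Predictions (1) to (8) each name an outcome and the factors it is assumed to depend on, so together they describe a directed dependency structure. The sketch below writes that structure down as a plain mapping to make it easier to inspect. The short labels are our own abbreviations of the wording above (for example, "collaborative diagnostic activities" stands for sharing, elicitation, negotiation, and coordination); the mapping is an illustration and not part of the CDR model's formal specification.

```python
# Outcome -> factors it depends on, following predictions (1) to (8).
# Labels are shortened paraphrases chosen for this illustration.
PREDICTORS: dict[str, list[str]] = {
    "evidence generation and evaluation": [            # (1)
        "strategic and conceptual knowledge", "general cognitive skills"],
    "hypothesis generation and drawing conclusions": [  # (2)
        "strategic and conceptual knowledge", "general cognitive skills",
        "individual evidence space"],
    "collaborative diagnostic activities": [            # (3)
        "professional collaboration knowledge", "general social skills"],
    "individual evidence space": [                       # (4)
        "evidence generation and evaluation", "shared evidence space",
        "general cognitive skills", "professional knowledge base"],
    "individual hypotheses space": [                     # (5)
        "hypothesis generation and drawing conclusions",
        "shared hypotheses space", "general cognitive skills",
        "professional knowledge base"],
    "diagnostic accuracy": [                             # (6)
        "individual evidence space", "individual hypotheses space"],
    "shared evidence space": [                           # (7)
        "individual evidence space", "collaborative diagnostic activities",
        "professional collaboration knowledge", "general social skills"],
    "shared hypotheses space": [                         # (8)
        "individual hypotheses space", "collaborative diagnostic activities",
        "professional collaboration knowledge", "general social skills"],
}


def outcomes_depending_on(factor: str) -> list[str]:
    """Outcomes that list `factor` as a direct predictor, sorted by name."""
    return sorted(o for o, factors in PREDICTORS.items() if factor in factors)
```

For instance, `outcomes_depending_on("general social skills")` lists the collaborative diagnostic activities and the two shared spaces. Predictions (9) and (10) concern relative effect sizes and generalization across domains, so they are not represented in this mapping.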

In the validation study, we not only found validity evidence for the simulation, but also showed that medical students with low prior knowledge indeed show low diagnostic efficiency and less advanced collaborative diagnostic activities. This is in line with the reviewed literature (e.g., Tschan et al., 2009) and supports the conclusion that findings from these different medical contexts can be generalized to document-based collaboration in a simulated consultation between an internist and a radiologist. Therefore, we seek to address the question of under which conditions the simulation can effectively facilitate collaborative diagnostic competences. Socio-cognitive scaffolding or external collaboration scripts are instructional techniques that have been shown to have large positive effects on collaboration skills (Radkowitsch et al., 2020b; Vogel et al., 2017). Thus, we are interested in the conditions under which external collaboration scripts are effective when learning with simulations. In particular, we examine whether and how adapting collaboration scripts to learners’ needs enhances their effectiveness. We assume that adaptive external collaboration scripts could be used to directly scaffold the sharing and elicitation process and thus enhance learners’ collaborative diagnostic competences. While external collaboration scripts should have a direct effect on collaboration skills, reflection, a well-analyzed form of instructional support in medical education (Mamede et al., 2014), should have an indirect effect on the collaborative diagnostic process. The combination of both instructional techniques therefore seems promising for the development of collaborative diagnostic competence, but has not been empirically analyzed yet.

Overall, by addressing these questions, we mainly seek to contribute to Questions 2 and 4 of the overarching research questions mentioned in the introduction by Fischer et al. (2022) and the concluding chapter by Opitz et al. (2022). Moreover, we go beyond these questions by additionally validating the proposed CDR model.

10.7 Conclusion

Collaborative diagnostic competences have been rarely investigated empirically, and little is known about how individual and collaborative diagnostic processes influence each other. We therefore proposed the CDR model to close this gap and to guide further research. To validate the CDR model, we developed a simulation that allows us to assess collaborative diagnostic processes in a standardized environment. As prior findings (Tschan et al., 2009) and the results of interviews we conducted show that medical students and practitioners often have difficulties sharing relevant information, we focused on sharing and elicitation activities during a consultation between internists and radiologists. Through a process analysis, our validation study went beyond just showing that experts perform better than novices. In future research, we will address the question of how using scaffolding with external collaboration scripts and reflection phases facilitates the learning of collaborative diagnostic competences within the simulation. The research that emerges on the use of our simulation and model may also lead to progress in research on collaborative problem-solving (Hesse et al., 2015) and may be transferred to other areas of collaborative problem-solving where learners with different knowledge backgrounds collaborate with each other.

References

Chernikova, O., Heitzmann, N., Opitz, A., Seidel, T., & Fischer, F. (2022). A theoretical framework for fostering diagnostic competences with simulations. In F. Fischer & A. Opitz (Eds.), Learning to diagnose with simulations—Examples from teacher education and medical education. Springer briefs in education series. Springer.

Davies, S., George, A., Macallister, A., Barton, H., Youssef, A., Boyle, L., et al. (2018). “It’s all in the history”: A service evaluation of the quality of radiological requests in acute imaging. Radiography, 24, 252–256. https://doi.org/10.1016/j.radi.2018.03.005

Dehler, J., Bodemer, D., Buder, J., & Hesse, F. W. (2011). Guiding knowledge communication in CSCL via group knowledge awareness. Computers in Human Behavior, 27, 1068–1078. https://doi.org/10.1016/j.chb.2010.05.018

Engelmann, T., & Hesse, F. W. (2011). Fostering sharing of unshared knowledge by having access to the collaborators’ meta-knowledge structures. Computers in Human Behavior, 27, 2078–2087. https://doi.org/10.1016/j.chb.2011.06.002

Fischer, F., & Mandl, H. (2003). Being there or being where? Videoconferencing and cooperative learning. In H. van Oostendorp (Ed.), Cognition in a digital world (pp. 205–223). Lawrence Erlbaum.

Fischer, F., Kollar, I., Stegmann, K., & Wecker, C. (2013). Toward a script theory of guidance in computer-supported collaborative learning. Educational Psychologist, 48, 56–66. https://doi.org/10.1080/00461520.2012.748005

Fischer, F., Kollar, I., Ufer, S., Sodian, B., Hussmann, H., Pekrun, R., et al. (2014). Scientific reasoning and argumentation: Advancing an interdisciplinary research agenda in education. Frontline Learning Research, 5, 28–45. https://doi.org/10.14786/flr.v2i3.96

Fischer, F., Chernikova, O., & Opitz, A. (2022). Learning to diagnose with simulations: Introduction. In F. Fischer & A. Opitz (Eds.), Learning to diagnose with simulations—examples from teacher education and medical education. Springer.

Förtsch, C., Sommerhoff, D., Fischer, F., Fischer, M. R., Girwidz, R., Obersteiner, A., et al. (2018). Systematizing professional knowledge of medical doctors and teachers: Development of an interdisciplinary framework in the context of diagnostic competences. Educational Sciences, 8, 207–224. https://doi.org/10.3390/educsci8040207

Gijlers, H., & de Jong, T. (2005). The relation between prior knowledge and students’ collaborative discovery learning processes. Journal of Research in Science Teaching, 42, 264–282. https://doi.org/10.1002/tea.20056

Graesser, A., Fiore, S., Greiff, S., Andrews-Todd, J., Foltz, P., & Hesse, F. (2018). Advancing the science of collaborative problem solving. Psychological Science in the Public Interest: A Journal of the American Psychological Society, 19, 59–92. https://doi.org/10.1177/1529100618808244

Hao, J., Liu, L., von Davier, A., Kyllonen, P., & Kitchen, C. (2016). Collaborative problem solving skills versus collaboration outcomes: Findings from statistical analysis and data mining. In T. Barnes, M. Chi, & M. Feng (Eds.), Proceedings of the 9th International Conference on Educational Data Mining.

Hesse, F., Care, E., Buder, J., Sassenberg, K., & Griffin, P. (2015). A framework for teachable collaborative problem solving skills. In P. Griffin & E. Care (Eds.), Assessment and teaching of 21st century skills (pp. 37–56). Springer.

Hetmanek, A., Engelmann, K., Opitz, A., & Fischer, F. (2018). Beyond intelligence and domain knowledge: Scientific reasoning and argumentation as a set of cross-domain skills. In F. Fischer (Ed.), Scientific reasoning and argumentation: The roles of domain-specific and domain-general knowledge (pp. 203–226). Routledge.

Järvelä, S., & Hadwin, A. (2013). New frontiers: Regulating learning in CSCL. Educational Psychologist, 48, 25–39. https://doi.org/10.1080/00461520.2012.748006

Kane, M. T. (2006). Validation. In R. L. Brennan (Ed.), Educational measurement (pp. 17–64). Praeger.

Kiesewetter, J., Fischer, F., & Fischer, M. R. (2016). Collaboration expertise in medicine—No evidence for cross-domain application from a memory retrieval study. PLoS One, 11, e0148754. https://doi.org/10.1371/journal.pone.0148754

Kiesewetter, J., Fischer, F., & Fischer, M. R. (2017). Collaborative clinical reasoning—A systematic review of empirical studies. Journal of Continuing Education in the Health Professions, 37, 123–128. https://doi.org/10.1097/CEH.0000000000000158

Klahr, D., & Dunbar, K. (1988). Dual space search during scientific reasoning. Cognitive Science, 12, 1–48. https://doi.org/10.1207/s15516709cog1201_1

Kozlov, M. D., & Große, C. S. (2016). Online collaborative learning in dyads: Effects of knowledge distribution and awareness. Computers in Human Behavior, 59, 389–401. https://doi.org/10.1016/j.chb.2016.01.043

Kripalani, S., LeFevre, F., Phillips, C. O., Williams, M. V., Basaviah, P., & Baker, D. W. (2007). Deficits in communication and information transfer between hospital-based and primary care physicians: Implications for patient safety and continuity of care. JAMA, 297, 831–840. https://doi.org/10.1001/jama.297.8.831

Larson, J. R., Christensen, C., Franz, T. M., & Abbott, A. S. (1998). Diagnosing groups: The pooling, management, and impact of shared and unshared case information in team-based medical decision making. Journal of Personality and Social Psychology, 75, 93–108. https://doi.org/10.1037/0022-3514.75.1.93

Laugwitz, B., Held, T., & Schrepp, M. (2008). Construction and evaluation of a user experience questionnaire. In A. Holzinger (Ed.), USAB 2008. Lecture notes in computer science (Vol. 5298). Springer. https://doi.org/10.1007/978-3-540-89350-9_6

Liu, L., Hao, J., von Davier, A., Kyllonen, P., & Zapata-Rivera, D. (2015). A tough nut to crack: Measuring collaborative problem solving. In Y. Rosen, S. Ferrara, & M. Mosharraf (Eds.), Handbook of research on technology tools for real-world skill development (pp. 344–359). IGI Global.

Mackintosh, N., Berridge, E.-J., & Freeth, D. (2009). Supporting structures for team situation awareness and decision making: Insights from four delivery suites. Journal of Evaluation in Clinical Practice, 15, 46–54. https://doi.org/10.1111/j.1365-2753.2008.00953.x

Mamede, S., van Gog, T., Sampaio, A. M., de Faria, R. M. D., Maria, J. P., & Schmidt, H. G. (2014). How can students’ diagnostic competence benefit most from practice with clinical cases? The effect of structured reflection on future diagnosis of the same and novel diseases. Academic Medicine, 89, 121–127. https://doi.org/10.1097/ACM.0000000000000076

Meier, A., Spada, H., & Rummel, N. (2007). A rating scheme for assessing the quality of computer-supported collaboration processes. International Journal of Computer-Supported Collaborative Learning, 2, 63–86. https://doi.org/10.1007/s11412-006-9005-x

MFT Medizinischer Fakultätentag der Bundesrepublik Deutschland e. V. (2015). Nationaler Kompetenzbasierter Lernzielkatalog Medizin (NKLM). Retrieved June 11, 2019, from http://www.nklm.de/kataloge/nklm/lernziel/uebersicht.

Mo, J. (2017). How does PISA measure students’ ability to collaborate? In PISA in focus (Vol. 77). OECD.

Mohammed, S., & Angell, L. C. (2003). Personality heterogeneity in teams. Small Group Research, 34, 651–677. https://doi.org/10.1177/1046496403257228

Nickerson, R. S. (1988). Confirmation bias: A ubiquitous phenomenon in many guises. Review of General Psychology, 2, 175–220. https://doi.org/10.1037/1089-2680.2.2.175

Norman, G. (2005). Research in clinical reasoning: Past history and current trends. Medical Education, 39, 418–427. https://doi.org/10.1111/j.1365-2929.2005.02127.x

Opitz, A., Fischer, M., Seidel, T., & Fischer, F. (2022). Conclusions and outlook: Toward more systematic research on the use of simulations in higher education. In F. Fischer & A. Opitz (Eds.), Learning to diagnose with simulations—examples from teacher education and medical education. Springer.

Osterhaus, C., Koerber, S., & Sodian, B. (2016). Scaling of advanced theory-of-mind tasks. Child Development, 87, 1971–1991. https://doi.org/10.1111/cdev.12566

Osterhaus, C., Koerber, S., & Sodian, B. (2017). Scientific thinking in elementary school: Children’s social cognition and their epistemological understanding promote experimentation skills. Developmental Psychology, 53, 450–462. https://doi.org/10.1037/dev0000260

Patel, V., Kaufman, D., & Arocha, J. (2002). Emerging paradigms of cognition in medical decision-making. Journal of Biomedical Informatics, 35, 52–75. https://doi.org/10.1016/S1532-0464(02)00009-6

Pellegrino, J. W., & Hilton, M. L. (2013). Education for life and work: Developing transferable knowledge and skills in the 21st century. National Academies Press.

Radkowitsch, A., Fischer, M. R., Schmidmaier, R., & Fischer, F. (2020a). Learning to diagnose collaboratively: Validating a simulation for medical students. GMS Journal for Medical Education, 37(5), 2366–5017.

Radkowitsch, A., Vogel, F., & Fischer, F. (2020b). Good for learning, bad for motivation? A meta-analysis on the effects of computer-supported collaboration scripts. International Journal of Computer-Supported Collaborative Learning, 15, 5–47. https://doi.org/10.1007/s11412-020-09316-4

Rochelle, J., & Teasley, S. (1995). The construction of shared knowledge in collaborative problem solving. In C. O’Malley (Ed.), Computer-supported collaborative learning (Vol. 128, pp. 66–97). Springer.

Rotgans, J. I., & Schmidt, H. G. (2014). Situational interest and learning: Thirst for knowledge. Learning and Instruction, 32, 37–50. https://doi.org/10.1016/j.learninstruc.2014.01.002

Rummel, N., & Spada, H. (2005). Learning to collaborate: An instructional approach to promoting collaborative problem solving in computer-mediated settings. Journal of the Learning Sciences, 14, 201–241. https://doi.org/10.1207/s15327809jls1402_2

Schmidmaier, R., Eiber, S., Ebersbach, R., Schiller, M., Hege, I., Holzer, M., et al. (2013). Learning the facts in medical school is not enough: Which factors predict successful application of procedural knowledge in a laboratory setting? BMC Medical Education, 13, 28. https://doi.org/10.1186/1472-6920-13-28

Schmidt, H. G., & Boshuizen, H. P. A. (1992). Encapsulation of biomedical knowledge. In D. A. Evans & V. L. Patel (Eds.), Advanced models of cognition for medical training and practice (Vol. 97, pp. 265–282). Springer.

Schnaubert, L., & Bodemer, D. (2019). Providing different types of group awareness information to guide collaborative learning. International Journal of Computer-Supported Collaborative Learning, 14, 7–51. https://doi.org/10.1007/s11412-018-9293-y

Shavelson, R. J. (2012). Assessing business-planning competence using the collegiate learning assessment as a prototype. Empirical Research in Vocational Education and Training, 4(1), 77–90.

Stasser, G., & Titus, W. (1985). Pooling of unshared information in group decision making: Biased information sampling during discussion. Journal of Personality and Social Psychology, 48(6), 1467–1478.

Suter, E., Arndt, J., Arthur, N., Parboosingh, J., Taylor, E., & Deutschlander, S. (2009). Role understanding and effective communication as core competencies for collaborative practice. Journal of Interprofessional Care, 23, 41–51. https://doi.org/10.1080/13561820802338579

Sweller, J. (1994). Cognitive load theory, learning difficulty, and instructional design. Learning and Instruction, 4, 295–312. https://doi.org/10.1016/0959-4752(94)90003-5

Tschan, F., Semmer, N. K., Gurtner, A., Bizzari, L., Spychinger, M., Breuer, M., et al. (2009). Explicit reasoning, confirmation bias, and illusory transactive memory. Small Group Research, 40, 271–300. https://doi.org/10.1177/1046496409332928

VanLehn, K. (1996). Cognitive skill acquisition. Annual Review of Psychology, 47, 513–539. https://doi.org/10.1146/annurev.psych.47.1.513

Vogel, F., Wecker, C., Kollar, I., & Fischer, F. (2017). Socio-cognitive scaffolding with collaboration scripts: A meta-analysis. Educational Psychology Review, 29, 477–511. https://doi.org/10.1007/s10648-016-9361-7

Wüstenberg, S., Greiff, S., & Funke, J. (2012). Complex problem solving—More than reasoning? Intelligence, 40, 1–14. https://doi.org/10.1016/j.intell.2011.11.003

Zehner, F., Weis, M., Vogel, F., Leutner, D., & Reiss, K. (2019). Kollaboratives Problemlösen in PISA 2015: Deutschland im Fokus. Zeitschrift für Erziehungswissenschaft, 22, 617–646. https://doi.org/10.1007/s11618-019-00874-4

Zottmann, J., Dieckmann, P., Taraszow, T., Rall, M., & Fischer, F. (2018). Just watching is not enough: Fostering simulation-based learning with collaboration scripts. GMS Journal for Medical Education, 35, 1–18. https://doi.org/10.3205/zma001181

Acknowledgments

The research presented in this chapter was funded by a grant from the Deutsche Forschungsgemeinschaft (DFG-FOR 2385) to Frank Fischer, Martin R. Fischer, and Ralf Schmidmaier (FI 792/11-1).

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2022 The Author(s)

About this chapter

Cite this chapter

Radkowitsch, A., Sailer, M., Fischer, M.R., Schmidmaier, R., Fischer, F. (2022). Diagnosing Collaboratively: A Theoretical Model and a Simulation-Based Learning Environment. In: Fischer, F., Opitz, A. (eds) Learning to Diagnose with Simulations. Springer, Cham. https://doi.org/10.1007/978-3-030-89147-3_10

DOI: https://doi.org/10.1007/978-3-030-89147-3_10

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-89146-6

Online ISBN: 978-3-030-89147-3

eBook Packages: Behavioral Science and Psychology (R0)