Abstract

This chapter presents the technical route of model updating in the presence of imprecise probabilities. The emphasis is on the inevitable uncertainties in both numerical simulations and experimental measurements, which require the updating methodology to be extended from the deterministic sense to the stochastic sense. This extension requires that the model parameters be regarded not as unknown-but-fixed values, but as random variables with uncertain distributions, i.e. imprecise probabilities. The final objective of stochastic model updating is no longer a single model prediction with maximal fidelity to a single experiment. It is rather a set of calibrated distribution coefficients that allow the model predictions to fit the experimental measurements in a probabilistic sense. Uncertainty is incorporated into a Bayesian updating framework by employing the Bhattacharyya distance as a novel uncertainty quantification metric, in place of the typical Euclidean distance. The overall approach is demonstrated by solving the model updating subproblem of the NASA uncertainty quantification challenge. The demonstration provides a clear comparison between the performance of the Euclidean and Bhattacharyya distances. It thus promotes a better understanding of the principle of stochastic model updating: the goal is no longer to determine unknown-but-fixed parameters, but to reduce the uncertainty bounds of the model prediction while guaranteeing that the existing experimental data remain enveloped within the updated uncertainty space.

8.1 Introduction

Computational models of large-scale structural systems with acceptable precision, robustness and efficiency are critical, especially in applications where large amounts of experimental data are hard to obtain, such as aerospace engineering. Model updating has been developed as a typical technique to reduce the discrepancy between numerical simulations and experimental measurements. Recently, there has been a tendency to consider the inevitable uncertainties involved in both simulations and experiments. A better understanding of the discrepancy between them, in the presence of uncertainty, can lead to a better model updating outcome.

In structural analysis, the sources of uncertainty, i.e. the reasons for the discrepancy between simulations and measurements, can be classified into the following categories:

- Parameter uncertainty: imprecisely known input parameters of the numerical model, such as material properties of novel composites, geometric dimensions of complex components and random boundary conditions;
- Modelling uncertainty: unavoidable simplifications and idealisations, such as linearised representations of nonlinear behaviour and frictionless joint approximations;
- Experimental uncertainty: hard-to-control random effects, such as environmental noise, measurement system errors and subjective human judgement.

The above uncertainties motivate the trend to extend model updating from the deterministic sense to the stochastic sense. Stochastic updating techniques have drawn considerable attention in the literature, and the majority of them are based on the framework of imprecise probability [4]. Following the common categorisation of uncertainties into aleatory and epistemic types, the term “imprecise probability” can be understood in two parts: “probability” corresponds to the aleatory uncertainty, and “imprecise” corresponds to the epistemic uncertainty. The epistemic uncertainty is caused by a lack of knowledge. As a better understanding of the problem is achieved, this part of the uncertainty can be reduced by model updating. The aleatory uncertainty represents the natural randomness of the system, such as the random wind load on launch vehicles, manufacturing errors and measurement system errors. This part of the uncertainty is irreducible. Nevertheless, an appropriate quantification of the aleatory uncertainty is still required in stochastic model updating.

The involvement of aleatory and/or epistemic uncertainties provides a clear logic for the categorisation of model input parameters:

- I: Parameters without any aleatory or epistemic uncertainty, appearing as explicitly determined constants;
- II: Parameters with only epistemic uncertainty, which are still constants but whose position within an interval is undetermined;
- III: Parameters with only aleatory uncertainty, which are no longer constants but random variables with exactly known distribution characteristics;
- IV: Parameters with both aleatory and epistemic uncertainties, which are random variables with undetermined distribution characteristics.

The above parameters with various uncertainty characteristics cause the model predictions to fall into the fourth category, i.e. outputs with imprecise probabilities. Consequently, the objective of stochastic model updating is no longer a single model prediction with maximal fidelity to a single experiment. It is rather a minimised uncertainty space of the outputs whose bounds still encompass the existing experimental data. To achieve this objective, a novel Uncertainty Quantification (UQ) metric based on the Bhattacharyya distance is introduced in this chapter. A UQ metric is an explicit value quantifying the discrepancy between simulations and measurements. This metric should be as comprehensive as possible, so that it captures all sources of uncertainty information simultaneously. Furthermore, the overall updating procedure is kept simple by employing a uniform framework applicable to both the classical Euclidean distance and the novel Bhattacharyya distance. Within this uniform framework, the two distance-based metrics can be compared conveniently. A better understanding of the difference between deterministic and stochastic updating is therefore achieved at a significantly reduced computational cost.
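The following minimal sketch shows how the four parameter categories combine into an output with imprecise probabilities. It uses only Python's standard library. The toy model `y = LENGTH * c + a + b`, the intervals and the normal distributions are hypothetical choices made for illustration. They are not the parameters of any problem in this chapter. The outer loop draws epistemic values (Categories II and IV), and the inner loop draws aleatory samples (Categories III and IV). The result is therefore a family of output distributions rather than a single one.

```python
import random
import statistics

rng = random.Random(1)

# Category I: explicitly determined constant (hypothetical value)
LENGTH = 2.0


def draw_category_ii():
    # Category II: a fixed constant whose position in [0.2, 0.4] is unknown;
    # each draw is one candidate value of that constant
    return rng.uniform(0.2, 0.4)


def draw_category_iii(n):
    # Category III: random variable with a precisely known distribution N(1.0, 0.1^2)
    return [rng.gauss(1.0, 0.1) for _ in range(n)]


def draw_category_iv(n):
    # Category IV: random variable N(mu, sigma^2) whose coefficients are only known
    # to lie in intervals (epistemic); given the coefficients it is sampled aleatorily
    mu, sigma = rng.uniform(0.8, 1.2), rng.uniform(0.05, 0.2)
    return [rng.gauss(mu, sigma) for _ in range(n)]


def output_family(n_outer=20, n_inner=500):
    # Outer loop over epistemic values, inner loop over aleatory samples, for the
    # hypothetical model y = LENGTH * c + a + b; the result is a family of samples
    family = []
    for _ in range(n_outer):
        c = draw_category_ii()
        a = draw_category_iii(n_inner)
        b = draw_category_iv(n_inner)
        family.append([LENGTH * c + ai + bi for ai, bi in zip(a, b)])
    return family


family = output_family()
means = [statistics.fmean(y) for y in family]
print(f"output mean ranges from {min(means):.2f} to {max(means):.2f}")
```

Narrowing the intervals inside `draw_category_ii` and `draw_category_iv` shrinks the spread between the family members, which is the effect stochastic model updating aims for. The scatter within each member, caused by the aleatory parts, remains.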

The remainder of this chapter is organised as follows. Section 8.2 gives an overview of the state of the art in deterministic and stochastic model updating and cites the key literature. Section 8.3 presents the overall technical route and describes its key aspects, which helps to form a preliminary picture of the overall model updating campaign. As the main emphasis, Sect. 8.4 explains the parameter calibration procedure with uncertainty treatment and presents the Bhattacharyya distance-based UQ metric along with the Bayesian updating framework. In Sect. 8.5, the NASA UQ challenge problem is solved by the proposed approach, and the results are compared and analysed. Section 8.6 presents the conclusions and prospects.

8.2 Overview of the State of the Art: Deterministic or Stochastic?

Although this chapter focuses on stochastic model updating, deterministic updating remains the foundation of its stochastic extension. A comprehensive review of deterministic model updating techniques can be found in Ref. [14]. Readers are also referred to the fundamental book by Friswell and Mottershead [15] on this subject. It covers key aspects including model preparation, vibration data acquisition, sensitivity analysis, error localisation and parameter calibration.

Among the many techniques for deterministic parameter calibration, the sensitivity-based method is one of the most popular. It relies on linearising the generally nonlinear relation between the model inputs and outputs. Mottershead et al. [20] provide a tutorial on the sensitivity-based updating of finite element models, with both demonstrative and industry-scale examples. However, the sensitivity-based method is only practical for typical outputs of modal or static analysis, e.g. natural frequencies, mode shapes and displacements. In more complex applications, such as strongly nonlinear dynamics or transient analysis, analytical sensitivities become impractical to obtain. Consequently, random sampling methods, more specifically the Monte Carlo method, attract increasing interest. They provide a direct connection between the model parameters and any output feature through multiple deterministic model evaluations. The rapid development of computational hardware makes large samples feasible, and the statistical information of the inputs and outputs can be precisely estimated from them [17, 18]. Such Monte Carlo-based methods have been successfully applied to large-scale structures, see e.g. Refs. [10, 16].
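The direct Monte Carlo connection between parameters and outputs can be sketched as follows. The closed-form `cantilever_tip_deflection` function is a hypothetical stand-in for an expensive deterministic model, such as a finite element solver. The input distributions, normal with a 5% coefficient of variation, are assumptions chosen for illustration. The model is only evaluated and never differentiated.

```python
import random
import statistics


def cantilever_tip_deflection(force, length, youngs_modulus, inertia):
    # Hypothetical deterministic model: Euler-Bernoulli cantilever, delta = F L^3 / (3 E I)
    return force * length**3 / (3.0 * youngs_modulus * inertia)


def monte_carlo_propagation(n_samples, seed=0):
    rng = random.Random(seed)
    outputs = []
    for _ in range(n_samples):
        # One deterministic model evaluation per random input sample
        e = rng.gauss(70e9, 3.5e9)  # Young's modulus [Pa], assumed 5% CoV
        i = rng.gauss(8e-6, 4e-7)   # second moment of area [m^4], assumed 5% CoV
        outputs.append(cantilever_tip_deflection(1000.0, 2.0, e, i))
    return outputs


deflections = monte_carlo_propagation(10_000)
mean = statistics.fmean(deflections)
std = statistics.stdev(deflections)
print(f"mean = {mean:.3e} m, std = {std:.3e} m")
```

Because the loop never requires a derivative of the model, the same procedure applies unchanged to outputs whose sensitivities are hard to derive analytically.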

The widely used Monte Carlo methods clearly benefit research on stochastic model updating, and their combination with Bayesian theory further promotes this topic. Beck and Katafygiotis [3] proposed the fundamental framework of Bayesian updating, which Beck and Au [2] further developed using the Markov Chain Monte Carlo (MCMC) algorithm. The Bayesian updating framework in conjunction with the MCMC algorithm has the advantage of capturing the uncertainty information contained in scarce experimental data. This approach has become a standard solution of stochastic model updating for different applications such as uncertainty characterisation [22].

Besides the Bayesian framework, other imprecise probability techniques also have considerable potential in stochastic model updating, such as interval probabilities [13], evidence theory [21], info-gap theory [5] and fuzzy probabilities [19]. For an overall understanding of imprecise probability, the comprehensive review by Beer et al. [4] is recommended.

8.3 Overall Technical Route of Stochastic Model Updating

The implementation of stochastic model updating requires a complete theoretical framework that covers several key steps. Starting from the originally developed model, a series of techniques is used to define the features, to select and calibrate the parameters, to locate and reduce the modelling errors, and finally to validate the model with independent measurements. Special treatment of uncertainty propagation and quantification enables the extension of model updating from the deterministic domain to the stochastic domain. This extension applies specifically to key steps such as parameter calibration, model adjustment and validation. The following subsections give an overview of all related steps in model updating.

8.3.1 Feature Extraction

A numerical model cannot be universally valid for all scenarios with different output features. Here, a feature is defined as a quantity that the engineer wants to predict with the model. It also depends on the capacity of the practical experimental set-up. Different features lead to different parameter sensitivities and require different strategies for uncertainty quantification. Therefore, the first step of model updating is to define a suitable feature according to the existing experimental set-up and the practical application. The most typical features in structural updating are modal quantities, e.g. natural frequencies and mode shapes. Several orders of natural frequencies constitute a vector, and the mean absolute error between two such vectors can easily be used to quantify the discrepancy between simulated and measured data. For mode shapes, the Modal Assurance Criterion (MAC) [1] is the most popular tool to quantify the correlation between two sets of eigenvectors. Continuous quantities are also commonly used as features, such as the displacement response in the time domain and Frequency Response Functions (FRFs) in the frequency domain. Classical techniques to evaluate the difference between two complex, continuous quantities are the Signature Assurance Criterion (SAC) and the Cross Signature Scale Factor (CSF). Reference [8] provides an integrated application of SAC and CSF for a comprehensive comparison between two FRFs.
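The correlation measures named above can be sketched with their commonly quoted definitions. MAC and SAC are the squared, normalised Hermitian inner product of two vectors. CSF is \(2|\mathbf{h}_x^{H}\mathbf{h}_a| / (\mathbf{h}_x^{H}\mathbf{h}_x + \mathbf{h}_a^{H}\mathbf{h}_a)\). In this sketch, SAC and CSF are evaluated on the FRF vectors over the response degrees of freedom at a single frequency line. The helper names and the numbers in the example are hypothetical.

```python
def _inner(a, b):
    # Hermitian inner product a^H b of two equally long (complex) sequences
    return sum(x.conjugate() * y for x, y in zip(a, b))


def mac(phi_a, phi_b):
    # Modal Assurance Criterion: 1 for collinear vectors, 0 for orthogonal ones
    num = abs(_inner(phi_a, phi_b)) ** 2
    den = _inner(phi_a, phi_a).real * _inner(phi_b, phi_b).real
    return num / den


def sac(h_x, h_a):
    # Signature Assurance Criterion: MAC-type shape correlation of a measured (h_x)
    # and an analytical (h_a) FRF vector at one frequency line
    return mac(h_x, h_a)


def csf(h_x, h_a):
    # Cross Signature Scale Factor: also sensitive to a difference in amplitude
    num = 2.0 * abs(_inner(h_x, h_a))
    den = _inner(h_x, h_x).real + _inner(h_a, h_a).real
    return num / den


h_meas = [1 + 1j, 0.5 - 0.2j, -0.3 + 0.1j]  # hypothetical measured FRF values
h_model = [2 * h for h in h_meas]           # same shape, doubled amplitude
print(sac(h_meas, h_model), csf(h_meas, h_model))  # SAC ~ 1.0, CSF ~ 0.8
```

Doubling the amplitude leaves SAC at one, while CSF drops to 0.8. This is why a shape-type criterion and an amplitude-type criterion are combined when two FRFs are compared.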

8.3.2 Parameter Selection

A sophisticated model of a large structural system typically contains a large number of parameters. This leads to a heavy computational burden and may even cause the updating procedure to fail. Parameter selection is therefore a key step, in which parameters are selected or filtered according to their significance for the features defined in the first step. The core technique for parameter selection is sensitivity analysis, which provides a quantitative measure of parameter significance. The classical sensitivity analysis technique is Sobol's variance-based method [25]. For comprehensive knowledge of global sensitivity analysis inspired by Sobol's method, readers are referred to the well-written book by Saltelli et al. [24]. A related variance-based technique is the Analysis of Variance (ANOVA), which is based on hypothesis testing in probability theory. Reference [7] proposes an integrated application of ANOVA and Design of Experiments (DoE). It provides a significance coefficient matrix containing the complete sensitivity information of a multiple-parameter, multiple-feature system. When uncertainties are involved, sensitivity analysis must be extended from the deterministic procedure to the stochastic procedure. In the context of uncertainty treatment, the sensitivity of a given parameter can be represented by how much the uncertainty space of the features is reduced when the epistemic uncertainty space of that parameter is completely reduced. This requires additional techniques for uncertainty propagation and quantification, which will be addressed in the parameter calibration step. A comprehensive literature review on sensitivity analysis can be found in Ref. [26].
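The following minimal sketch shows a Monte Carlo estimate of first-order Sobol indices. It assumes independent inputs, each uniformly distributed on [0, 1], and uses one common pick-freeze estimator of the type discussed in the Saltelli literature. The test function \(Y = X_1 + 2X_2\) is a hypothetical example. Its exact indices are 0.2 and 0.8, because \(\mathrm{Var}(X_1) = 1/12\) and \(\mathrm{Var}(2X_2) = 4/12\).

```python
import random
import statistics


def first_order_sobol(model, n_inputs, n_samples, seed=0):
    """Pick-freeze Monte Carlo estimate of first-order Sobol indices.

    Assumes independent inputs uniform on [0, 1] and uses
    S_i ~ mean(f(B) * (f(A_B^i) - f(A))) / Var(Y).
    """
    rng = random.Random(seed)
    a = [[rng.random() for _ in range(n_inputs)] for _ in range(n_samples)]
    b = [[rng.random() for _ in range(n_inputs)] for _ in range(n_samples)]
    f_a = [model(x) for x in a]
    f_b = [model(x) for x in b]
    var_y = statistics.pvariance(f_a + f_b)
    indices = []
    for i in range(n_inputs):
        # Matrix A with its i-th column taken from B ("pick" column i, "freeze" the rest)
        f_ab = [model(ra[:i] + [rb[i]] + ra[i + 1:]) for ra, rb in zip(a, b)]
        v_i = statistics.fmean([fb * (fab - fa) for fb, fab, fa in zip(f_b, f_ab, f_a)])
        indices.append(v_i / var_y)
    return indices


indices = first_order_sobol(lambda x: x[0] + 2.0 * x[1], n_inputs=2, n_samples=20_000)
print(indices)  # close to the analytical values [0.2, 0.8]
```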

8.3.3 Surrogate Modelling

Surrogate models, known as meta-models in some literature, become increasingly important in sampling-based updating methodologies, which generally require a large number of model evaluations. A surrogate model is a fast-running approximation of the input/output relation. It can replace the time-consuming model, e.g. a large finite element model, in the updating procedure. An initial input/output sample, i.e. a training sample, generated from the complex model is required to train the surrogate model. The surrogate model is intended to resolve the conflict between efficiency and precision. The training sample should therefore be as small as possible, while the precision of the surrogate with respect to the original model should be high enough. Typical types of surrogate models include polynomial functions, radial basis functions, support vector machines, Kriging and neural networks. The selection of a suitable surrogate model type is determined by aspects such as efficiency, generality and the capability to represent nonlinearity. DoE is often used in conjunction with surrogate modelling, with the purpose of distributing the training sample efficiently and uniformly over the whole parameter space. A comprehensive review of existing surrogate modelling and DoE techniques can be found in Ref. [27].
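The interplay of DoE and surrogate modelling can be sketched with a Latin hypercube training sample and a least-squares quadratic polynomial. Here `expensive_model` is a hypothetical placeholder, an exponential function, for a time-consuming simulation. The ten-point design and the quadratic basis are illustrative assumptions, not recommendations.

```python
import math
import random


def latin_hypercube(n, bounds, rng):
    # One point per equal-width stratum in every dimension; strata are paired
    # at random across dimensions by shuffling each column
    columns = []
    for low, high in bounds:
        width = (high - low) / n
        col = [low + (k + rng.random()) * width for k in range(n)]
        rng.shuffle(col)
        columns.append(col)
    return [list(point) for point in zip(*columns)]


def fit_quadratic(xs, ys):
    # Least-squares fit of y = c0 + c1 x + c2 x^2 through the 3x3 normal equations
    m = [[sum(x ** (i + j) for x in xs) for j in range(3)] for i in range(3)]
    r = [sum(y * x**i for x, y in zip(xs, ys)) for i in range(3)]
    for col in range(3):  # Gaussian elimination with partial pivoting
        piv = max(range(col, 3), key=lambda k: abs(m[k][col]))
        m[col], m[piv] = m[piv], m[col]
        r[col], r[piv] = r[piv], r[col]
        for row in range(col + 1, 3):
            f = m[row][col] / m[col][col]
            m[row] = [p - f * q for p, q in zip(m[row], m[col])]
            r[row] -= f * r[col]
    c = [0.0, 0.0, 0.0]
    for row in (2, 1, 0):  # back substitution
        c[row] = (r[row] - sum(m[row][k] * c[k] for k in range(row + 1, 3))) / m[row][row]
    return c


def expensive_model(x):
    # Hypothetical stand-in for a time-consuming simulation
    return math.exp(x)


rng = random.Random(0)
train_x = [p[0] for p in latin_hypercube(10, [(0.0, 1.0)], rng)]
coef = fit_quadratic(train_x, [expensive_model(x) for x in train_x])


def surrogate(x):
    return coef[0] + coef[1] * x + coef[2] * x**2


test_x = [k / 100 for k in range(101)]
max_err = max(abs(surrogate(x) - expensive_model(x)) for x in test_x)
print(f"max surrogate error on [0, 1]: {max_err:.4f}")
```

The Latin hypercube places exactly one training point in each of the ten equal-width strata, so even a small sample covers the whole interval. A richer basis or a Kriging model would be the natural next step for a strongly nonlinear response.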

8.3.4 Test Analysis Correlation: Uncertainty Quantification Metrics

Test Analysis Correlation (TAC) is the core step of the overall updating procedure, for two reasons. It significantly influences the updating outcome, and it is the step most affected by the uncertainty treatment. TAC refers to the process of quantitatively measuring the agreement (or lack thereof) between test measurements and analytical simulations, taking uncertainties into account. It therefore requires a comprehensive metric capable of capturing multiple uncertainty sources simultaneously. This chapter considers UQ metrics based on various distance concepts. The Euclidean distance, i.e. the geometric distance between two single points, is probably the most common metric in deterministic updating approaches. However, it becomes insufficient for stochastic updating, where multiple simulations and multiple tests are available. The Mahalanobis distance is a weighted distance that takes the covariance of the datasets into account. The Bhattacharyya distance, by contrast, is a statistical distance measuring the overlap between two probability distributions. A comprehensive comparison of the three distances in model updating and validation can be found in Ref. [9], where the Bhattacharyya distance is found to capture more sources of uncertainty information. In the example section, the Bhattacharyya distance-based UQ metric is applied within a Bayesian updating framework, and the result is compared with that obtained using the typical Euclidean distance. This work does not address the Mahalanobis distance. Although it contains the covariance information among multiple outputs, it has been found to be infeasible for parameter calibration. Nevertheless, the Mahalanobis distance has the potential to be used in model validation, as demonstrated in Ref. [9].
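The following sketch illustrates why a mean-based distance is insufficient in the stochastic case. It compares the distance between two means with the closed-form Bhattacharyya distance between two univariate normal distributions, \(\frac{1}{4}\frac{(\mu_p-\mu_q)^2}{\sigma_p^2+\sigma_q^2} + \frac{1}{2}\ln\frac{\sigma_p^2+\sigma_q^2}{2\sigma_p\sigma_q}\). The normality assumption and the numbers are hypothetical.

```python
import math


def euclidean_distance_of_means(mu_exp, mu_sim):
    # Deterministic metric: only the (scalar) means enter
    return abs(mu_exp - mu_sim)


def bhattacharyya_gaussian(mu_p, sigma_p, mu_q, sigma_q):
    # Closed-form Bhattacharyya distance between two univariate normal PDFs:
    # the first term reacts to the means, the second to the dispersions
    var_sum = sigma_p**2 + sigma_q**2
    return 0.25 * (mu_p - mu_q) ** 2 / var_sum + 0.5 * math.log(var_sum / (2.0 * sigma_p * sigma_q))


# Same mean, different dispersion (hypothetical numbers)
print(euclidean_distance_of_means(10.0, 10.0))      # 0.0
print(bhattacharyya_gaussian(10.0, 1.0, 10.0, 3.0))  # 0.5 * ln(10 / 6), about 0.255
```

Two datasets with identical means but different scatter cannot be distinguished by the first metric. The second metric becomes positive as soon as the dispersions differ.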

8.3.5 Model Adjustment and Validation

After the model parameters are calibrated, the model still needs to pass the validation procedure before it can be used in a practical application. The validation procedure contains a series of criteria with increasingly strict requirements:

(1) the updated model should predict the existing measurements;
(2) the updated model should predict another set of measurement data, different from those used for updating;
(3) the updated model should predict the effect of any modification of the physical system when the same modification is made to the model;
(4) when used as a component of a whole system, the updated model should improve the prediction of the whole system model.

In the context of uncertainty treatment, the fit between prediction and measurement should be assessed not only by precision but also by stochastic characteristics, e.g. probabilities, intervals and probability boxes. Model adjustment is a procedure to deal with the modelling uncertainty. The modelling uncertainty is too severe to be compensated by calibrating the parameters when the updated model fails to fulfil the validation criteria, or when some updated parameters are found to be unphysical, e.g. a negative density. The model can then be adjusted by increasing the resolution, changing the element type or adding a more detailed geometry description. Another round of parameter calibration is performed for the adjusted model, and the process is repeated until the validation criteria are fulfilled.

8.4 Uncertainty Treatment in Parameter Calibration

8.4.1 The Bayesian Updating Framework

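The typical Bayesian model updating methodology is based on the following Bayes' equation:

\[
P(\theta \mid \mathbf{X}_{exp}) = \frac{P_{L}(\mathbf{X}_{exp} \mid \theta)\, P(\theta)}{P(\mathbf{X}_{exp})} \tag{8.1}
\]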

with the key elements described as follows:

- \(\textit{P} (\theta )\) is the prior distribution of the parameters, representing the prior knowledge before model updating;
- \(\textit{P}(\theta | \mathbf{X} _{exp})\) is the posterior distribution of the parameters conditional on the existing measurements, i.e. the outcome of Bayesian updating;
- \(P(\mathbf{X} _{exp} )\) is the so-called “normalisation factor”, which guarantees that the posterior distribution \(\textit{P}(\theta | \mathbf{X} _{exp})\) integrates to one;
- \(P_{L} (\mathbf{X} _{exp} | \theta )\) is the likelihood function, defined as the probability of the existing measurements conditional on an instance of the parameters.
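For a single parameter, Eq. (8.1) can be evaluated directly on a grid, with the normalisation factor obtained by numerical quadrature. The sketch below assumes a Gaussian measurement model with known noise and a uniform prior on [0, 10]. Both of these, and the data values, are hypothetical.

```python
import math


def grid_posterior(log_likelihood, prior_pdf, lower, upper, n_grid=1001):
    """Evaluate Bayes' equation on a 1-D grid, computing P(X_exp) by quadrature."""
    h = (upper - lower) / (n_grid - 1)
    thetas = [lower + k * h for k in range(n_grid)]
    unnorm = [math.exp(log_likelihood(t)) * prior_pdf(t) for t in thetas]
    # Normalisation factor: integral of likelihood x prior (trapezoidal rule)
    evidence = h * (sum(unnorm) - 0.5 * (unnorm[0] + unnorm[-1]))
    return thetas, [u / evidence for u in unnorm], evidence


data = [2.9, 3.1, 3.3, 2.7]  # hypothetical measurements
sigma = 0.5                  # assumed known measurement noise


def log_like(theta):
    return sum(-0.5 * ((x - theta) / sigma) ** 2 - math.log(sigma * math.sqrt(2.0 * math.pi)) for x in data)


thetas, post, evidence = grid_posterior(log_like, lambda t: 0.1, 0.0, 10.0)
h = thetas[1] - thetas[0]
post_mean = h * sum(t * p for t, p in zip(thetas, post))
print(f"P(X_exp) = {evidence:.4g}, posterior mean = {post_mean:.3f}")
```

The grid needs `n_grid` points per parameter. The number of likelihood evaluations therefore grows exponentially with the number of parameters, and for large datasets `math.exp` of the log-likelihood may also underflow. Both issues motivate a sampling-based treatment of the normalisation factor.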

The likelihood represents the probability of the measurement data under each instance of the updating parameters \(\theta \). In the Bayesian setting, the objective of model updating can thus be expressed as finding the instance of the parameters under which the experimental measurements are most probable. In other words, it is the instance that maximises the likelihood \(P_{L} (\mathbf{X} _{exp} | \theta )\). See Chap. 1 for additional background on Bayesian inference.

However, one of the difficulties in Bayesian updating is relative to the normalisation fact \(P(\mathbf{X} _{exp} )\). The direct integration of the posterior distribution over the whole parameter space is quite difficult in practical application, especially when the number of parameters is large and the distribution format is complex, leading the direct evaluation of the normalisation factor impractical. The well-known MCMC algorithm is popular to solve this difficulty by replacing Eq. (8.1) with

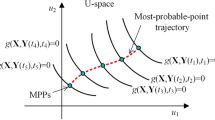

where \(\beta \) is a weighting coefficient within the interval [0, 1]. When \(\beta \) equals zero, the right-hand side of Eq. (8.2) is the prior distribution. When \(\beta \) equals one, it converges to the posterior distribution. In the j-th iteration of the MCMC algorithm, random samples are generated from the intermediate distribution with weighting coefficient \(\beta _{j}\in [0, 1]\). In the (j \(+\) 1)-th iteration, parameter points with higher likelihood are preferentially selected from the samples of the j-th iteration. New Markov chains are then generated from the selected points, and the weighting coefficient is increased to \(\beta _{j+1}\). The intermediate distribution becomes the posterior distribution when \(\beta _{j}=1\). The MCMC algorithm has been developed as a standard tool for generating samples stepwise from very complex target distributions. For a detailed description of the algorithm, readers are referred to Refs. [2, 11]. Further applications can be found in fields ranging from stochastic model updating [6, 22] to structural health monitoring [23]. See Chap. 2 for additional background on Monte Carlo methods.
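The stepwise procedure above can be sketched as a simplified transitional MCMC in the spirit of Ref. [11], for one parameter with a uniform prior. Several settings are assumptions of this sketch, not those used by the authors:

- \(\beta_{j+1}\) is chosen by bisection so that the resampling weights have a coefficient of variation of one;
- the proposal spread equals the weighted sample standard deviation;
- each chain takes five Metropolis steps.

```python
import math
import random
import statistics


def _weights(log_l, dbeta):
    # Plausibility weights L**dbeta, shifted by max(log L) to avoid overflow
    top = max(log_l)
    return [math.exp(dbeta * (v - top)) for v in log_l]


def _next_beta(log_l, beta, target_cov=1.0):
    # Largest step whose weights keep a coefficient of variation <= target_cov
    def cov(dbeta):
        w = _weights(log_l, dbeta)
        m = sum(w) / len(w)
        return math.sqrt(sum((x - m) ** 2 for x in w) / len(w)) / m

    if cov(1.0 - beta) <= target_cov:
        return 1.0
    lo, hi = 0.0, 1.0 - beta
    for _ in range(50):  # bisection on the increment
        mid = 0.5 * (lo + hi)
        if cov(mid) > target_cov:
            hi = mid
        else:
            lo = mid
    return beta + lo


def tmcmc(log_likelihood, lower, upper, n_samples=1000, n_steps=5, seed=0):
    """Sample P_L**beta * prior for beta = 0 -> 1, with a uniform prior on [lower, upper]."""
    rng = random.Random(seed)
    thetas = [rng.uniform(lower, upper) for _ in range(n_samples)]  # beta = 0: the prior
    log_l = [log_likelihood(t) for t in thetas]
    beta = 0.0
    while beta < 1.0:
        new_beta = _next_beta(log_l, beta)
        w = _weights(log_l, new_beta - beta)
        total = sum(w)
        mean = sum(wi * t for wi, t in zip(w, thetas)) / total
        spread = math.sqrt(sum(wi * (t - mean) ** 2 for wi, t in zip(w, thetas)) / total)
        # Resampling: points with higher likelihood are selected more often
        chosen = rng.choices(range(n_samples), weights=w, k=n_samples)
        new_thetas, new_log_l = [], []
        for i in chosen:
            t, lt = thetas[i], log_l[i]
            for _ in range(n_steps):  # short Metropolis chain on the next intermediate PDF
                cand = rng.gauss(t, spread)
                if lower <= cand <= upper:  # outside the support the prior is zero: reject
                    lc = log_likelihood(cand)
                    if rng.random() < math.exp(min(0.0, new_beta * (lc - lt))):
                        t, lt = cand, lc
            new_thetas.append(t)
            new_log_l.append(lt)
        thetas, log_l, beta = new_thetas, new_log_l, new_beta
    return thetas


# Usage with a hypothetical Gaussian measurement model (known noise sigma = 1)
data_rng = random.Random(42)
data = [data_rng.gauss(3.0, 1.0) for _ in range(20)]


def log_like(theta):
    return sum(-0.5 * (x - theta) ** 2 for x in data)


posterior = tmcmc(log_like, 0.0, 10.0)
print(statistics.fmean(posterior), statistics.stdev(posterior))
```

In this Gaussian example, the posterior sample should be centred near the data mean, with a standard deviation close to \(1/\sqrt{20} \approx 0.22\). Any log-likelihood can be passed to `tmcmc` in place of `log_like`.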

8.4.2 A Novel Uncertainty Quantification Metric

The MCMC algorithm makes it possible to avoid evaluating the normalisation factor in Bayes' equation. However, the likelihood must still be evaluated, and this becomes even more critical once uncertainty is treated. In the presence of multiple sets of measurement data, the theoretical definition of the likelihood is

\[
P_{L}(\mathbf{X}_{exp} \mid \theta) = \prod_{k=1}^{N_{exp}} P(\mathrm{x}_{k} \mid \theta) \tag{8.3}
\]

where \(N_{exp}\) is the number of existing experiments and \(\mathrm{x}_{k}\) is the k-th measurement. This equation requires accurate knowledge of the PDF \(P(\mathrm {x}_{k} | \theta )\) of the simulated output, evaluated at each measurement data point. An accurate estimate of this PDF requires a large number of samples, and hence a large number of model evaluations.
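The following sketch shows a direct evaluation of Eq. (8.3) for a scalar feature. For one instance of \(\theta\), the model is run many times, the PDF of the simulated output is estimated by a Gaussian kernel density estimate, and the log-PDF is summed over the measurements. The kernel density estimator, Silverman's bandwidth rule and the toy model `simulate` are assumptions made for illustration.

```python
import math
import random
import statistics


def kde_log_likelihood(x_exp, x_sim):
    """Approximate log of Eq. (8.3): sum_k log p(x_k | theta), where the PDF of the
    simulated output is a Gaussian kernel density estimate built from x_sim."""
    n = len(x_sim)
    h = 1.06 * statistics.stdev(x_sim) * n ** (-0.2)  # Silverman's rule of thumb
    norm = 1.0 / (n * h * math.sqrt(2.0 * math.pi))
    total = 0.0
    for x in x_exp:
        density = norm * sum(math.exp(-0.5 * ((x - s) / h) ** 2) for s in x_sim)
        # Floor avoids log(0) when a measurement lies far outside the simulated sample
        total += math.log(max(density, 1e-300))
    return total


def simulate(theta, n, rng):
    # Hypothetical model: for a given theta the output scatters as N(theta, 1)
    return [rng.gauss(theta, 1.0) for _ in range(n)]


rng = random.Random(3)
x_exp = [rng.gauss(0.0, 1.0) for _ in range(50)]  # hypothetical measurements
ll_true = kde_log_likelihood(x_exp, simulate(0.0, 2000, rng))
ll_off = kde_log_likelihood(x_exp, simulate(1.0, 2000, rng))
print(ll_true, ll_off)
```

Each likelihood value costs one batch of simulated model runs plus one kernel evaluation for every pair of measured and simulated points. It must also be recomputed for every candidate \(\theta\) visited by the sampler, which quickly becomes prohibitive for an expensive model.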

Clearly, a direct evaluation of Eq. (8.3) incurs a huge computational cost. This is why Approximate Bayesian Computation (ABC) has become increasingly popular in Bayesian applications. Given the role of the likelihood function, it is natural to replace Eq. (8.3) with an approximate function, as long as this function still links the existing measurement data to an instance of the updating parameters. In this work, an approximate likelihood based on the Gaussian function is proposed as

\[
P_{L}(\mathbf{X}_{exp} \mid \theta) \propto \exp\left\{ -\frac{d^{2}(\mathbf{X}_{exp}, \mathbf{X}_{sim})}{\varepsilon^{2}} \right\} \tag{8.4}
\]

where \(d(\mathbf{X} _{exp} , \mathbf{X} _{sim})\) is the distance between the experimental and simulated feature data, and \(\varepsilon\) is a width factor controlling how fast the approximate likelihood decays as the distance grows. Equation (8.4) serves as an elegant connection between the Bayesian updating framework and the distance concepts. The same approximate likelihood is applicable not only to the Euclidean distance but also to the Mahalanobis and Bhattacharyya distances, in a uniform updating framework. As explained in Sect. 8.3.4, the Mahalanobis distance is not used in this work. The Euclidean distance is evaluated as

\[
d_{E}(\mathbf{X}_{exp}, \mathbf{X}_{sim}) = \sqrt{\left(\overline{\mathbf{X}}_{exp} - \overline{\mathbf{X}}_{sim}\right)\left(\overline{\mathbf{X}}_{exp} - \overline{\mathbf{X}}_{sim}\right)^{T}} \tag{8.5}
\]

where \(\mathbf{X} _{exp}\) and \(\mathbf{X} _{sim}\) are the experimental and simulated feature data matrices, respectively, and \(\overline{ \mathbf{X} }\) denotes the mean vector of a matrix. Clearly, the Euclidean distance only handles the mean of the data. The Bhattacharyya distance is defined as

\[
d_{B}(\mathbf{X}_{exp}, \mathbf{X}_{sim}) = -\log\left[ \int_{\mathbb{X}} \sqrt{P_{exp}(\mathrm{x})\, P_{sim}(\mathrm{x})}\, d\mathrm{x} \right] \tag{8.6}
\]

where \(P_{exp}(\mathrm{x})\) and \(P_{sim}(\mathrm{x})\) are the PDFs of the experimental and simulated features. \(\mathbb {X}\) is the feature space, so \(\int _{\mathbb {X}}\) denotes integration over the whole feature space. More detailed information about the evaluation of the Bhattacharyya distance can be found in Ref. [6].
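The following minimal sketch implements the two metrics and the approximate likelihood for a scalar feature. In the Bhattacharyya distance, the PDFs are approximated by normalised histograms on a shared set of bins, so the integral becomes a sum over bins. The binning scheme, the number of bins and the width factor `epsilon` passed to `approximate_likelihood` are assumptions of this sketch. Ref. [6] should be consulted for the evaluation method the authors actually used.

```python
import math
import random
import statistics


def euclidean_distance(x_exp, x_sim):
    # Scalar-feature version of the Euclidean metric: distance between sample means
    return abs(statistics.fmean(x_exp) - statistics.fmean(x_sim))


def bhattacharyya_distance(x_exp, x_sim, n_bins=20):
    # -log of the Bhattacharyya coefficient, with both PDFs replaced by
    # normalised histograms on a common set of bins
    low = min(min(x_exp), min(x_sim))
    high = max(max(x_exp), max(x_sim))
    width = (high - low) / n_bins if high > low else 1.0

    def probs(sample):
        counts = [0] * n_bins
        for x in sample:
            counts[min(int((x - low) / width), n_bins - 1)] += 1
        return [c / len(sample) for c in counts]

    bc = sum(math.sqrt(p * q) for p, q in zip(probs(x_exp), probs(x_sim)))
    return -math.log(bc) if bc > 0 else math.inf


def approximate_likelihood(distance, epsilon):
    # Gaussian kernel of the distance; epsilon is an assumed width factor
    return math.exp(-((distance / epsilon) ** 2))


rng = random.Random(7)
x_exp = [rng.gauss(0.0, 1.0) for _ in range(500)]  # hypothetical "measured" feature
x_sim = [rng.gauss(0.0, 3.0) for _ in range(500)]  # same mean, larger scatter
ed = euclidean_distance(x_exp, x_sim)
bd = bhattacharyya_distance(x_exp, x_sim)
print(ed, bd, approximate_likelihood(ed, 1.0), approximate_likelihood(bd, 1.0))
```

With equal means and different spreads, the Euclidean distance is close to zero, and the corresponding approximate likelihood barely penalises the mismatch. The Bhattacharyya distance, in contrast, reacts to the difference in dispersion.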

8.5 Example: the NASA UQ Challenge

The NASA UQ challenge [12] has been developed as a benchmark problem for uncertainty treatment techniques in multidisciplinary engineering. This challenge problem contains a series of subproblems, such as uncertainty characterisation, sensitivity analysis and uncertainty propagation. In this work, only Subproblem A (Uncertainty Characterisation) is investigated, since it is equivalent to the model updating task considered here. Figure 8.1 illustrates the key components of this problem, in which one output is evaluated by a black-box model from five input parameters. According to the categorisation strategy in Sect. 8.1, the five parameters are classified into three categories, as shown in Table 8.1.

Only the parameters involving epistemic uncertainty, i.e. the Category II and IV parameters, need to be updated in this context. The uncertainty characteristics, including distribution types and distribution coefficients, are predefined as listed in Table 8.1. The predefined intervals of the distribution coefficients represent the epistemic uncertainty of the parameters. An output sample of 50 data points is provided with the problem. It was generated by assigning a set of “true” values to the distribution coefficients within the predefined intervals. The objective of this problem is to use these 50 output points to reduce the epistemic uncertainty space of the parameters, i.e. to narrow the predefined intervals of the distribution coefficients. Although the model has only five parameters, eight updating coefficients in total control the epistemic uncertainty space of the parameters, as shown in the last column of Table 8.1.

To solve the problem, the Euclidean and Bhattacharyya distances are each used to construct an approximate likelihood function. The Bayesian updating framework employs these two distances as UQ metrics and generates two independent sets of results. This treatment is intended to make a clear distinction between deterministic and stochastic updating, and to reveal the merits and shortcomings of the two distances. In practical applications, however, a combined use of the two distances is suggested: first the Euclidean distance for updating the mean, and then the Bhattacharyya distance for updating the variance. This two-step strategy has been shown to solve this problem successfully in Ref. [6].

Figure 8.2 illustrates the posterior distributions of the eight updating coefficients, obtained with the Euclidean and Bhattacharyya distances as UQ metrics, respectively. In the figure, objects (“Samples” or “PDFs”) with the suffix “_ED” denote results updated with the Euclidean distance metric, and those with the suffix “_BD” denote results obtained with the Bhattacharyya distance metric. Except for \(\theta _1\), most of the posterior distributions obtained with the Euclidean distance metric remain close to uniform. This implies that the deterministic updating procedure employing the Euclidean distance is incapable of solving this problem. In contrast, the updating procedure employing the Bhattacharyya distance performs well for most of the updating coefficients and provides sharply peaked posterior distributions, e.g. for \(\theta _2\), \(\theta _3\), \(\theta _6\), and \(\theta _7\). The vertical line in each subfigure represents the true value of the updating coefficient that was used to generate the existing 50 output samples. Comparing the position of this line with the peak of the posterior distribution therefore indicates the updating precision. For \(\theta _1\), the peak of the distribution obtained with the Euclidean distance lies away from the vertical line, while the peak obtained with the Bhattacharyya distance is quite close to it. The Bhattacharyya distance therefore performs better than the Euclidean distance in updating \(\theta _1\). The same conclusion holds for \(\theta _3\), \(\theta _4\), and \(\theta _7\). However, for \(\theta _2\) and \(\theta _5\), the distributions obtained with the Bhattacharyya distance have clear peaks but do not converge to the vertical lines. The updating precision of these two coefficients could be further improved by the two-step strategy described in Ref. [6].

Note that two coefficients (\(\theta _5\) and \(\theta _8\)) cannot be calibrated by either the Euclidean or the Bhattacharyya distance. A potential explanation is that the output is extremely insensitive to these two coefficients, which makes it impossible for the inverse procedure to locate their “true” values.

Table 8.2 details the quantitative updating results, listing both the true and the updated values of the updating coefficients. The updated values in the last two columns of Table 8.2 are obtained by locating the peaks of the distributions in Fig. 8.2. For posterior distributions that remain close to uniform, locating a peak is meaningless, so these values are omitted. This is why only two updated coefficients are provided in the column for the Euclidean distance. Although the true values of the coefficients are released in Table 8.2, they need not be treated as the final target of this updating problem. In the context of uncertainty treatment, the problem designers [12] stipulate the objective of this problem: to reduce the epistemic uncertainty space of the parameters while ensuring that the existing output sample is still included in the output uncertainty space. Hence, it is more meaningful to assess how much the output uncertainty space has been reduced after the intervals of the coefficients are narrowed in the updating procedure. This assessment relates to the tasks of uncertainty propagation and model validation, which are beyond the scope of this parameter calibration example. A complete validation procedure considering the uncertainty space of the output for this problem can be found in Ref. [6]. Nevertheless, the stochastic Bayesian updating framework employing the Bhattacharyya distance has been demonstrated to be more comprehensive and feasible than the Euclidean distance in solving this NASA UQ challenge problem.
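The assessment suggested above can be sketched with a crude measure that has two parts. The first is the relative reduction of the output interval, from the minimum to the maximum of the propagated sample. The second is a check that every measured point remains inside the updated interval. The toy model, the prior and updated ranges of the coefficient `mu` and the synthetic measurements are all hypothetical. An interval envelope is only a simplification of the complete validation procedure in Ref. [6].

```python
import random


def output_interval(outputs):
    # Interval envelope (min, max) of a propagated output sample
    return min(outputs), max(outputs)


def assess_update(prior_outputs, posterior_outputs, measured):
    lo0, hi0 = output_interval(prior_outputs)
    lo1, hi1 = output_interval(posterior_outputs)
    reduction = 1.0 - (hi1 - lo1) / (hi0 - lo0)         # relative shrinkage of the interval
    enveloped = all(lo1 <= y <= hi1 for y in measured)  # the data must stay inside
    return reduction, enveloped


def propagate(mu_low, mu_high, rng, n_outer=100, n_inner=100):
    # Hypothetical model y = p, with p ~ N(mu, 0.1^2) and epistemic mu in [mu_low, mu_high]
    outputs = []
    for _ in range(n_outer):
        mu = rng.uniform(mu_low, mu_high)
        outputs.extend(rng.gauss(mu, 0.1) for _ in range(n_inner))
    return outputs


rng = random.Random(5)
measured = [rng.gauss(1.0, 0.1) for _ in range(50)]  # synthetic "existing" data
reduction, enveloped = assess_update(propagate(0.0, 2.0, rng), propagate(0.9, 1.1, rng), measured)
print(f"interval reduced by {reduction:.0%}, data enveloped: {enveloped}")
```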

8.6 Conclusions and Prospects

The growing emphasis on uncertainty analysis has brought both vitality and challenges to classical model updating. This chapter reviews the key techniques and components of the overall model updating campaign. The main emphasis is on the involvement of uncertainty, which drives the transformation from the deterministic approach to the stochastic approach. Stochastic model updating is executed within the Bayesian model updating framework, where the Bhattacharyya distance is proposed as a novel UQ metric. The approximate likelihood is critical because it provides a uniform connection between the Bayesian framework and various types of distance metrics. The example demonstrates that the Bhattacharyya distance is more comprehensive and feasible than the Euclidean distance for calibrating the distribution coefficients of parameters with imprecise probabilities.

The Bhattacharyya distance is designed as a universal UQ tool. It can be conveniently embedded into a technical route similar to that of the deterministic approach, while providing stochastic outcomes by capturing more uncertainty information. The novel UQ metric is expected to further promote uncertainty analysis in stochastic parameter calibration and in other procedures, e.g. stochastic sensitivity analysis and model validation. Together, these will establish a complete framework for stochastic model updating.

References

[1] R. Allemang. The modal assurance criterion: Twenty years of use and abuse. Sound and Vibration, 8:14–21, 2008.

[2] J. Beck and S.K. Au. Bayesian updating of structural models and reliability using Markov chain Monte Carlo simulation. Journal of Engineering Mechanics, 128(4):380–391, 2002.

[3] J. Beck and L. Katafygiotis. Updating models and their uncertainties. I: Bayesian statistical framework. Journal of Engineering Mechanics, 124(4):455–461, 1998.

[4] M. Beer, S. Ferson, and V. Kreinovich. Imprecise probabilities in engineering analyses. Mechanical Systems and Signal Processing, 37(1-2):4–29, 2013.

[5] Y. Ben-Haim. Info-gap decision theory: Decisions under severe uncertainty. Elsevier, 2006.

[6] S. Bi, M. Broggi, and M. Beer. The role of the Bhattacharyya distance in stochastic model updating. Mechanical Systems and Signal Processing, 117:437–452, 2019.

[7] S. Bi, Z. Deng, and Z. Chen. Stochastic validation of structural FE-models based on hierarchical cluster analysis and advanced Monte Carlo simulation. Finite Elements in Analysis and Design, 67:22–33, 2013.

[8] S. Bi, M. Ouisse, and E. Foltête. Probabilistic approach for damping identification considering uncertainty in experimental modal analysis. AIAA Journal, 56(12):4953–4964, 2018.

[9] S. Bi, S. Prabhu, S. Cogan, and S. Atamturktur. Uncertainty quantification metrics with varying statistical information in model calibration and validation. AIAA Journal, 55(10):3570–3583, 2017.

[10] A. Calvi. Uncertainty-based loads analysis for spacecraft: Finite element model validation and dynamic responses. Computers & Structures, 83(14):1103–1112, 2005.

[11] J. Ching and Y. Chen. Transitional Markov chain Monte Carlo method for Bayesian model updating, model class selection, and model averaging. Journal of Engineering Mechanics, 133(7):816–832, 2007.

[12] L. Crespo, S. Kenny, and D. Giesy. The NASA Langley multidisciplinary uncertainty quantification challenge. In 16th AIAA Non-Deterministic Approaches Conference.

[13] M. Faes, M. Broggi, E. Patelli, Y. Govers, J. Mottershead, M. Beer, and D. Moens. A multivariate interval approach for inverse uncertainty quantification with limited experimental data. Mechanical Systems and Signal Processing, 118:534–548, 2019.

[14] M. Friswell and J. Mottershead. Model updating in structural dynamics: a survey. Journal of Sound and Vibration, 162(2):347–375, 1993.

[15] M. Friswell and J. Mottershead. Finite Element Model Updating in Structural Dynamics. Kluwer Academic Press, Dordrecht, Netherlands, 1995.

[16] B. Goller, M. Broggi, A. Calvi, and G.I. Schueller. A stochastic model updating technique for complex aerospace structures. Finite Elements in Analysis and Design, 47(7):739–752, 2011.

[17] Y. Govers and M. Link. Stochastic model updating - covariance matrix adjustment from uncertain experimental modal data. Mechanical Systems and Signal Processing, 24(3):696–706, 2010.

[18] C. Mares, J. Mottershead, and M. Friswell. Stochastic model updating: Part 1 - theory and simulated example. Mechanical Systems and Signal Processing, 20(7):1674–1695, 2006.

[19] B. Möller and M. Beer. Fuzzy Randomness: Uncertainty in Civil Engineering and Computational Mechanics. Springer, 2004.

[20] J. Mottershead, M. Link, and M. Friswell. The sensitivity method in finite element model updating: A tutorial. Mechanical Systems and Signal Processing, 25:2275–2296, 2011.

[21] W. Oberkampf and J. Helton. Evidence theory for engineering applications. In Engineering design reliability handbook, chapter 10, pages 197–226. CRC Press, 2004.

[22] E. Patelli, D. Alvarez, M. Broggi, and M. De Angelis. Uncertainty management in multidisciplinary design of critical safety systems. Journal of Aerospace Information Systems, 12(1):140–169, 2015.

[23] R. Rocchetta, M. Broggi, Q. Huchet, and E. Patelli. On-line Bayesian model updating for structural health monitoring. Mechanical Systems and Signal Processing, 103:174–195, 2018.

[24] A. Saltelli, M. Ratto, T. Andres, F. Campolongo, J. Cariboni, D. Gatelli, M. Saisana, and S. Tarantola. Global Sensitivity Analysis. The Primer. John Wiley & Sons, 2008.

[25] I. Sobol. Sensitivity estimates for nonlinear mathematical models. Mathematical Modeling and Computational Experiment, 1(4):407–414, 1993.

[26] P. Wei, Z. Lu, and J. Song. Variable importance analysis: A comprehensive review. Reliability Engineering and System Safety, 142:399–432, 2015.

[27] R. Yondo, E. Andrés, and E. Valero. A review on design of experiments and surrogate models in aircraft real-time and many-query aerodynamic analyses. Progress in Aerospace Sciences, 96:23–61, 2018.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2022 The Author(s)

About this chapter

Cite this chapter

Bi, S., Beer, M. (2022). Overview of Stochastic Model Updating in Aerospace Application Under Uncertainty Treatment. In: Aslett, L.J.M., Coolen, F.P.A., De Bock, J. (eds) Uncertainty in Engineering. SpringerBriefs in Statistics. Springer, Cham. https://doi.org/10.1007/978-3-030-83640-5_8

DOI: https://doi.org/10.1007/978-3-030-83640-5_8

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-83639-9

Online ISBN: 978-3-030-83640-5

eBook Packages: Mathematics and Statistics, Mathematics and Statistics (R0)