Abstract

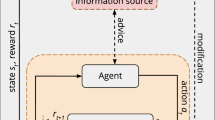

Reinforcement learning (RL) is a machine learning approach inspired by how humans and animals learn new behaviors: by actively exploring an environment that provides positive and negative rewards. Interactive RL adds a human in the loop who can guide a learning agent to personalize its behavior, speed up its learning process, or both. To enable HCI researchers to make advances in this area, we introduce an interactive RL framework that outlines the HCI challenges in the domain. By following this taxonomy, HCI researchers can take on two roles: (1) designing new interaction techniques and (2) proposing new applications. To support role (1), we describe how different types of human feedback can adapt an RL model to behave as users intend. To support role (2), we propose generic design principles for creating effective RL applications. Finally, we list current open challenges in interactive RL and the research directions we consider most promising.
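
As a rough illustration of how human feedback might personalize an RL agent, the sketch below trains a tabular Q-function on a hypothetical five-cell corridor with an equally rewarded exit at each end. Everything in it is an assumption made for illustration and is not taken from the chapter: the corridor, the constants, the function names, and in particular the simulated "human" who adds a small bonus for moving left and a small penalty for moving right. Instead of random exploration, it sweeps over every state-action pair so that the result is deterministic.

```python
# Hypothetical toy setup (not from the chapter): a 5-cell corridor, exits at cells 0 and 4.
GAMMA = 0.9  # discount factor (assumed value)
ALPHA = 0.5  # learning rate (assumed value)
ACTIONS = {"left": -1, "right": +1}
TERMINALS = {0, 4}
STATES = [1, 2, 3]  # non-terminal cells


def env_reward(next_state):
    """Environment reward: +1 for reaching either exit, 0 otherwise."""
    return 1.0 if next_state in TERMINALS else 0.0


def human_feedback(action):
    """Simulated human critique (assumption): this user prefers the left exit."""
    return 0.5 if action == "left" else -0.5


def train(use_feedback, sweeps=200):
    """Tabular Q-value updates, optionally adding human feedback to the reward."""
    q = {(s, a): 0.0 for s in STATES for a in ACTIONS}
    for _ in range(sweeps):
        # Sweep every state-action pair: a deterministic stand-in for exploration.
        for s in STATES:
            for a, step in ACTIONS.items():
                nxt = s + step
                reward = env_reward(nxt)
                if use_feedback:
                    reward += human_feedback(a)
                # Terminal cells have no future value.
                future = 0.0 if nxt in TERMINALS else max(q[(nxt, b)] for b in ACTIONS)
                q[(s, a)] += ALPHA * (reward + GAMMA * future - q[(s, a)])
    return q


def greedy_policy(q):
    """Pick the highest-valued action in each non-terminal cell."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in STATES}


if __name__ == "__main__":
    print("environment reward only:", greedy_policy(train(use_feedback=False)))
    print("with human feedback    :", greedy_policy(train(use_feedback=True)))
```

With the environment reward alone, the agent in cell 3 heads for the nearby right exit. With the simulated feedback added, it walks to the left exit instead, which is the kind of behavior personalization that a human in the loop is meant to provide. Adding feedback directly to the reward is only one possible design; other ways of using human input would need a different sketch.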

Acknowledgements

This work was supported by JST CREST Grant Number JPMJCR17A1, Japan. Additionally, we would like to thank the reviewers of our original DIS paper. Their kind suggestions helped to improve and clarify this manuscript.

Copyright information

© 2021 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Cite this chapter

Arzate Cruz, C., Igarashi, T. (2021). Interactive Reinforcement Learning for Autonomous Behavior Design. In: Li, Y., Hilliges, O. (eds) Artificial Intelligence for Human Computer Interaction: A Modern Approach. Human–Computer Interaction Series. Springer, Cham. https://doi.org/10.1007/978-3-030-82681-9_11

DOI: https://doi.org/10.1007/978-3-030-82681-9_11

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-82680-2

Online ISBN: 978-3-030-82681-9

eBook Packages: Computer Science, Computer Science (R0)