Abstract

Educational research expanded rapidly in the twentieth century. This expansion drove the interested “amateurs” out of the field; the scientific community of peers became the dominant point of orientation. Authorship and authority became more widely distributed; peer review was institutionalized to monitor the flow of ideas within the scientific literature; reference lists in journals demonstrated the adoption of cumulative ideals about science. The historical analysis of education journals presented in this chapter looks at the social changes which contributed to the ascent of an “imagined” community of expert peers in the course of the twentieth century. This analysis also helps us in imagining ways in which improvements to the present academic evaluative culture can be made.

Introduction

The research programme in sociology of science, which Robert K. Merton began to envisage in the mid-twentieth century, focused on the normative structure of science. Echoing broader democratic concerns, Merton depicted the peer review system, developed for scientific journals, “as crucial for the effective development of science” (1973, p. 461). As an evaluation mechanism, it provided an “institutionalised form for the application of standards of scientific work” (1973, p. 469). Despite its many imperfections, “the structure of authority in science, in which the referee system occupies a central place, provides an institutional basis for the comparative reliability and cumulation of knowledge” (1973, p. 495). In the view of Merton and his collaborators, the scientific system was largely self-organizing and self-policing, and the scientific literature with its peer review system was largely where that happened (see also Hollinger, 1990; Jacobs, 2002; Baldwin, 2015; Csiszar, 2018, pp. 1–21).

One crucial problem with this approach is that the “structure of authority in science” is presented as a “natural” feature of how the scientific system is supposed to operate. This approach builds upon the idea that the publication norms and practices have remained more or less constant throughout their existence, from the first scientific journals in the seventeenth century to their successors in the early twenty-first century. The idea, however, that there is essential stability from the first, early modern scientific journals to their contemporary counterparts has encouraged sociologists and historians of science to project contemporary sensibilities about what journals are for, and how scientific communities ought to operate, back onto earlier epochs. It has not encouraged them to analyse more closely how peer review has become a sine qua non of scholarly journals and publication practices, and how this evaluation mechanism has changed the scientific system itself (for discussions of the state of the art, see Hirschauer, 2004; Bornmann, 2011; Pontille & Torny, 2015).

In this chapter, an analysis is presented of relevant changes in publication and evaluation practices in one field of research, namely education, and more particularly in the journals published by the largest association in this field, namely the American Educational Research Association (AERA). Founded in 1916, this association was originally known as the National Association of Directors of Educational Research (NADER). Shortly after World War I, however, it opened active membership to anyone who displayed the ability to conduct research: “the criterion for inclusion became demonstrated competence as a researcher—and the primary indicator of that competence was written work … that the members of the policy-making Executive Committee could assess” (Mershon & Schlossman, 2008, p. 319). More inclusive names were adopted to reflect this shift: first Educational Research Association of America (ERAA), and shortly afterwards American Educational Research Association (AERA).

In the course of its history, this association has launched several scientific journals. In 1930, it started with the publication of the Review of Educational Research (RER). Although RER was AERA’s only journal for about three decades, the association expanded rapidly in the course of the 1960s and 1970s. The American Educational Research Journal (AERJ) first appeared in 1964, and Educational Researcher (ER), emanating from AERA’s member newsletter, was published in 1972. One year later, the annual Review of Research in Education (RRE) started to appear. Two other, more specialized journals came out in the latter half of the 1970s: the Journal of Educational and Behavioral Statistics (JEBS), in 1976, and Educational Evaluation and Policy Analysis (EEPA), in 1979. More recently, in 2015, the association also launched AERA Open, an open-access online journal. All of these journals rank among the most influential publication outlets in the field of education.

The following analyses, which build on previously published work (Vanderstraeten et al., 2016), make use of two types of material. On the one hand, quantitative material on all the articles published in RER and AERJ is presented. Because the coverage of the content of the older volumes of the AERA journals is often incomplete in the existing bibliographical databases, the data were hand-checked and cleaned with the help of the content pages of all journal issues themselves. On the other hand, all editorial documents and guidelines that have appeared in AERA journals were analysed. Despite the fact that I did not have access to the journals’ archives, the editorial documents allow me to provide a sociological history of the evolution of the publication and evaluation practices in the field of education. Because the journals are used as source materials, their contents are hereafter cited by referring to the journal, publication year and page numbers. In order to avoid overburdening the reader, particular attention is paid to the publication and evaluation practices in AERA’s oldest journals, RER and AERJ, although it is worth noting that the data gathered for the other AERA journals confirm the analyses based on these two (Vanderstraeten et al., 2016).

The focus of this chapter thus is on the changing publication and review practices in the AERA journals. The field of education research allows for an interesting case study, not only because it is perceived to be interdisciplinary in orientation, with close ties to psychology, philosophy and sociology, but also because it generally does not enjoy high status, and therefore seems quite receptive to changes in other fields of research (Vanderstraeten, 2011; Jacobs, 2013, pp. 100–120). From this perspective, the discussion first focuses on changing expectations regarding editorship and authorship, as well as changing forms of authority and inclusion in authorial roles. Next, attention is paid to how publication pressures (“publish or perish”) and evaluation mechanisms delimit what is valued in the scientific system, namely peer-reviewed papers. Afterwards, the focus is on changing citation cultures, and thus on the question of how authors are expected to incorporate and build on the arguments developed in other publications, which have gone through the process of peer review. In the more general reflections, with which this chapter concludes, I try to illustrate that my analyses not only shed light on the ways the scientific system organizes itself, but also help in imagining ways in which improvements can be made.

Reviewers and Authors

Initial Expectations

Of course, scientific journals have never been characterized by any truly unified format. Even during the last century, journals have varied widely in the nature of their contents, their size, frequency, and submission and acceptance procedures. Papers have varied not only in their length, from short notes or letters to more extended memoirs, but also in the genre expectations of diverse research fields (see Bazerman, 1988; Gross et al., 2002). Despite such variations, however, it is widely accepted that scientific journals constitute a special class of publications that can be demarcated from other forms of literature. Evaluation mechanisms, based on peer review, are often understood to protect the integrity of this corpus. These evaluation mechanisms can also be seen to separate a small body of legitimate scholarly work from other, unscientific enterprises. Editors or experts called on to judge whether a paper ought to be published are imagined as doing their duty not only to a journal’s reputation and prestige, but to science as a whole (e.g., Merton, 1973).

The evolution of the AERA journals shows, however, that the review mechanisms also display much historical variation. The ways in which editors and reviewers are able to understand or define their own role have changed quite considerably. How we conceive of authority in the system of science is the outcome of a series of attribution and evaluation processes. How editors and reviewers position themselves and their journals, and how authors can take both credit and responsibility for particular publication output, also is the result of a series of historically contingent choices. At the same time, the perceived scientific eminence of the editors and the authors, as well as the representation of the various interest groups to which the journals intend to direct themselves, also seems instrumental in establishing and maintaining the authority of the journals and their association.

Overall, RER was in its first decades not what we would now call a “traditional” journal: it did not publish original research papers. It was rather conceived as a periodical reference work, regularly summarizing recent research on “the whole field” of education (RER, 1931, p. 2). It was to appear five times per year, with each issue devoted to a specific topic. In the first issue, the editors presented a cycle of 15 topics to be addressed over a three-year period. RER’s first volumes dealt with topics such as the curriculum, teacher personnel, school organization, finances, intelligence and aptitude tests, and so on. The last topic of the first cycle was “methods and technics of educational research.” For each issue (and thus for each topic), the idea also was to assign an issue editor and a committee of experts, who were to solicit and review all manuscripts. As it turned out, these designated editors and experts would frequently author several review articles themselves.

The original aim of the journal was to disseminate the results of scientific research to a broader audience: “to review earlier studies” and “to summarize the literature” for an audience of “teachers, administrators, and general students of education” (RER, 1931, p. 2). But this editorial strategy was characterized by a hierarchical structure. It is quite clear that authority and authorship were closely connected: Issue editors and authors were chosen because of their authority on the topics, but inclusion in RER also granted the issue editors and authors considerable authority.

Interestingly, some authorship problems appeared. Authorship was held to be exclusive; it was not easily extended beyond a small group of specialists. Co-authorship, in the strict sense of two names listed alongside one another at the front of a text, was not self-evident. Several authors of early RER articles were aided by “assistants.” Sometimes authors published “in cooperation with” others, but neither the assistants nor the “cooperating” contributors were identified as full co-authors. In 1935 and 1936, moreover, errata had to be published to add co-authors to reviews that had appeared in print in previous issues (see Excerpts 1 and 2). Although the inclusion of these errata illustrates that the attribution of authorship could be contested (no other errata appeared in the early volumes), RER did, in the first decades of its existence, entrust and authorize only a few scholars to summarize and review what was considered to be the relevant research and hence to speak to the broader community of people interested in education and the results of education research. The editors and experts appointed by the journal often filled the pages of the journal with their own contributions.

Further Expansion

For almost four decades, the editors of RER stayed close to their ambition to treat “the whole field” by means of a cyclical coverage of all important topics in education. Already in the 1930s, however, questions emerged as to the proper readership of RER. The interests of education practitioners, on the one hand, and education researchers, on the other, proved difficult to align. In 1938 and 1939, for example, the editorial board adopted five new topics to be covered in three-year cycles. In an editorial foreword, it was underlined that the new strategy would allow focussing on instruction and therefore be of benefit to practitioners in schools instead of to researchers in universities. As no scholars specialize in such instructional areas, “they are much more difficult to prepare,” but, as the editors added, “it is hoped that they will render a larger service to a greater number of users and thus justify the increased effort that they call for” (RER, 1940, p. 75) [Footnote 1]. In the following decades, however, AERA would increasingly orient itself to the growing and influential community of education researchers instead of to education practitioners.

Prompted by the rapid expansion of education research, especially in the decades after World War II, RER adopted, beginning in 1970, a new editorial policy in which each issue was expected to include unsolicited reviews on topics of the authors’ choice. The incoming editor, Gene V Glass, stated “the new editorial policy” as follows: “The purpose of the Review has always been the publication of critical, integrative reviews of published education research. In the opinion of the Editorial Board, this goal can now best be achieved by pursuing a policy of publishing unsolicited reviews of research on topics of the contributor’s choosing … The reorganization of the Review of Educational Research is an acknowledgment of a need for an outlet for reviews of research that are initiated by individual researchers and shaped by the rapidly evolving interests of these scholars” (RER, 1970, p. 323). The last issue that reflected the old editorial policy appeared in 1971.

At that time, the landscape of scholarly publishing in the field of education had already changed. In 1964, AERA began publishing AERJ, with a mission to publish “original reports of experimental and theoretical studies in education.” In the rapidly expanding field of scientific journals, AERJ was a “traditional” journal that put emphasis on the presentation of novel findings. Its establishment was an indication of the fact that AERA aspired to a more active, innovative role at the level of scholarly communication about education (see AERJ, 1966, pp. 211–221, 1968, pp. 687–700). In the same period of time, moreover, the RER editors put forward their new expectations regarding the content and orientation of articles and submissions. RER shifted its emphasis from summaries or reviews to critical evaluations; it now explicitly required its authors to provide an overview of the strengths and shortcomings of the existing knowledge base. Articles now had to advance research on the topics they discussed. Glass wrote: “It is hoped that the new editorial policy of the Review, with its implicit invitation to all scholars, will contribute to the improvement and growth of disciplined inquiry on education” (RER, 1970, p. 324). No doubt, these new expectations corresponded with changes in the composition of AERA’s membership and RER’s readership base. Its readership came to consist mainly of specialists, who did not need a “review” to learn about developments in their field of research. The raison d’être of RER—as well as of the other AERA journals that were established in the 1960s and 1970s—now lay in the presentation of findings that were relevant primarily to other researchers. Seen in this light, the new editorial policy expressed by RER disqualified most of the journal’s own early educational publications as either unoriginal or not properly scientific.

In the same editorial, Glass also indicated that “the role played by the Review in the past [would] be assumed by an Annual Review of Research in Education, which AERA [was] planning” (RER, 1970, p. 323). The first volume of the Review of Research in Education appeared only three years later. RRE again solicited reviews in particular research areas. In this regard, the “Statement From the Editor” accompanying the first issue of the Review of Research in Education was reminiscent of the old editorial policy of RER: “The more important areas will appear periodically but not necessarily regularly. Some areas, relatively dormant or unproductive, may not appear for years” (RRE, 1973, p. vii; see also ER, 1976/11, p. 10). However, the RRE editor also took pains to underline that the new venue would orient itself towards scholars, who would read it to inform themselves about ongoing education research. “Summaries of research studies are valuable and appropriate, but too much summary distracts from criticism and perspective” (RRE, 1973, p. vii). And the RRE editor added: “Many conceive of reviewing as the summarizing of research studies and trends in order to inform readers and keep them abreast of their fields. Such an annotated bibliographic approach can have little impact, however” (RRE, 1973, p. vii). Although it thus proved difficult to give up the idea that the research field could be authoritatively surveyed by a few leading scholars, the expectations regarding the role of editors and reviewers changed around 1970. Instead of filling the pages of the journal with their own contributions, the editors and reviewers became increasingly engaged as gatekeepers of scientific communication channels (Vanderstraeten, 2010, 2011).

Authors and Reviewers

Community of Peers

The expression “publish or perish,” which became widely used in the 1960s and 1970s, can be seen to signal the institutionalization of a “communication imperative” in science (see also de Solla Price, 1963). Publications have not only become increasingly perceived as indices of full membership in the scientific community, but peer-reviewed papers have also become a base unit for sizing up careers, with publication lists a significant factor in decisions about hiring, tenure and grants [Footnote 2]. In the process, changing expectations emerged for journal editors, reviewers and authors.

Underlying this evolution were important demographic changes within the academic system. As already mentioned, the field of education research was a clear beneficiary of the expansion of the American system of higher education in the 1950s and 1960s. In his presidential address presented at the AERA 1966 Annual Meeting, which was published in AERA’s new journal, AERJ, the educational psychologist Benjamin Bloom provided a short overview of this rapid expansion. “From the level of support of 1960,” Bloom estimated, the growth in federal funding of education research and development had been “of the order of 2,000 per cent” (AERJ, 1966, p. 211). The number of education researchers had also increased substantially during that period; Bloom noted that in the previous five years, membership in AERA had grown “at the rate of about 25 per cent per year” (AERJ, 1966, p. 213). The growing number of journals devoted to education was another factor in (and indicator of) the expansion and “academization” of this field. If the 1960s constituted a “Renaissance” in education research, the expansion and ensuing professionalization of research drove the “amateurs” out of the association (ER, 1982/9, pp. 7–10). As a result of the growth of the scholarly community, researchers had to direct their communications to other researchers instead of to “those off campus” (see AERJ, 1973, pp. 173–177; RER, 1999, pp. 384–396). New forms of competition and/or collaboration between potential authors also emerged.

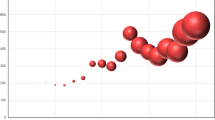

To clarify the extent of these changes, it is interesting to point to developments at the level of the authorial roles. Figure 3.1 displays the evolution of the number of authors or co-authors per published article in RER and AERJ. It is clear that single-authored articles were the norm for a relatively long time. In 1931, all but two RER articles were single-authored (although “assistants” contributed to four of these articles). Forty years later, the majority of the articles in RER were still written by single authors. But the expectations and conventions quickly changed after that. In the case of RER, which adopted a new editorial policy in the 1970s, the average number of authors per article increased from 1.05 in 1931 to 1.21 in 1970 and 3.61 in 2018 (with a standard deviation of 2.75). In the case of AERJ, there was a relatively steady increase in the number of co-authored articles; the average changed from 1.42 in 1965 to 2.30 in 1990 to 2.66 in 2018 (with a standard deviation of 2.03). In 2018, only about 1 in 6 RER and 1 in 5.5 AERJ articles were single-authored. Co-authored, if not multiple-authored, publications have become the norm. For sure, the rise of “big science” has influenced this evolution (de Solla Price, 1963). But the rise of co-authored publications also implies that forms of peer review become incorporated into the publications themselves. More and more peers now are (co-)authors, involved in the production—and not just the evaluation—of papers. For many scholars, collaboration with peers has become part of their research and publication strategies.
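The averages and standard deviations reported above are simple per-article statistics. As a minimal sketch of how such figures could be derived, assuming a hypothetical list of author counts per article (the values below are invented for illustration and are not the chapter's data) and assuming a population standard deviation (the chapter does not say which variant was used):

```python
from statistics import mean, pstdev


def authorship_summary(author_counts):
    """Summarize the number of authors per article for one journal-year.

    `author_counts` is a hypothetical input: one integer per article.
    """
    if not author_counts:
        raise ValueError("author_counts must not be empty")
    single_authored = sum(1 for n in author_counts if n == 1)
    return {
        "mean_authors": mean(author_counts),
        # Population standard deviation; an assumption, see the lead-in.
        "std_authors": pstdev(author_counts),
        "single_authored_share": single_authored / len(author_counts),
    }


# Invented author counts for six articles (illustrative only).
example_counts = [1, 2, 3, 3, 4, 5]
summary = authorship_summary(example_counts)
print(summary)
```

A share of single-authored articles of 1/6, as in this invented example, corresponds to the "1 in 6" phrasing used for RER in 2018.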

Blind Peer Review

It is also interesting to direct attention to the new evaluation mechanisms that were expected to replace the former system of invited submissions. Not just in the field of education, but in a broad variety of scientific specializations, forms of blind and double-blind peer review were introduced in the decades after World War II. Manuscripts now had to be evaluated impartially by referees or reviewers as acceptable for publication; editorial decisions had to be governed by the scholarship displayed in the papers, not by the reputation claims of their authors. Scientific journals thus also adapted themselves to the wider cultural confidence in anonymous criticism (Powell, 1985).

The AERA officers and AERJ editors believed that the new submission and evaluation process would allow for a fresh start. As Bloom stated, in his aforementioned AERA presidential address, “there is much repetition in educational research, and this is particularly apparent in any careful scrutiny of the research summarized in the Review of Educational Research over the past twenty-five years” (AERJ, 1966, p. 220). And he added: “It is this redundancy that in part explains why there are so few examples of crucial research in the period under consideration” (ibid.). But Bloom also believed that major improvements could be realized in education research, provided that some structural changes were implemented. In part, his plea is reminiscent of free-market ideologies. The rapid communication of research findings had to be facilitated; journals had to focus on the publication of new, innovative findings, instead of on summarizing existing research. Priority had to be given to submissions based on the initiative of individual researchers, but some form of invisible hand (peer review) was thought to be necessary. In this way, the system would benefit the entire scientific community.

In order to maintain authority and trust in the field, the journal editors were also forced to take a distanced stance on all decisions that could be perceived as injurious to others (such as rejections of individual contributions). To maintain authority, they could not be perceived as exercising it (see also Pontille & Torny, 2015). They rather assigned editorial responsibility to others. In 1973, the AERJ editors appointed two “Reviewers-at-Large … [to] serve as a regulatory agent over the editorial process” (AERJ, 1973, p. 174). At the same time, they promised to protect the diversity of the publication output. They strove for a “corporate identity” that could represent the field as a whole, and rely on the expertise available in the field as a whole. Although “the basic mechanism for maintenance of high standards remains to be a peer review carried out anonymously and in good professional taste,” they promised to call upon “all the expertise in the AERA” (ibid.). A longer citation may illustrate the editors’ prevailing concerns: “In the past, it has been customary for the editors to appoint a board of consulting editors and, after screening out some manuscripts for policy and load reasons, to refer all the rest to the board for review and recommendation. For instance, the 1971–1972 board had 35 members on it, many providing their precious service for six long years … (Incidentally, and unfortunately, only one of the 35 was female.) This system of a fixed body of readers works well in a monolithic professional organization which, alas, the AERA is not. Though unintentionally, it is easy for this sort of board to become homogeneous in composition, narrow in focus, and dogmatic in judgment. To avoid these dangers and to allow readers a greater share of responsibility for their magazine, we have done away with the arrangement and, instead, decided to rely upon a large number of consultants selected from the general AERA membership and, if deemed necessary or desirable, even from outside the organization” (AERJ, 1973, pp. 174–175).

By stressing the decisive role of the assessments of the various expert reviewers, the AERJ editors also tried to respond to “some irate colleagues” (AERJ, 1973, p. 176). The editors of all AERA journals, they stated in their somewhat unconventional “Message From the Editors,” do “not meet or work as a group, even though all are doing what they can to contribute to the production of fine, worthwhile publications. They certainly do not ‘conspire’ for or against any authors, subjects, or types of study” (ibid.). Moreover, “frequent phone calls or letters to the editorial office do not facilitate the review process. Once a manuscript has been sent out to consultants, editors do not have any further information until the reviews and recommendations are back” (ibid.). They added, moreover, that “the editors are not monsters with sinister motives, out to get this author or insult that scholar … [They make mistakes but] they are not so bad as to justify unbridled invectives and tirades on the part of some of our fellow educational researchers” (AERJ, 1973, p. 177). In short, the development of the discipline required discipline of all its members. The new evaluation mechanisms built on the institutionalization of different judging instances, but also required some difficult socialization processes on the part of editors, reviewers, (would-be) authors and readers.

Involvement in peer review could be legitimated in terms of membership of the research community. By involving an increasing number of education researchers in the role of referee, the editors could hope for a better understanding of the complexities of the decision-making processes they were involved in. By adopting various role perspectives, especially those of author and of referee, and thus quite literally taking the part of the other, researchers could be expected to understand and accept the expectations of the other. By taking up the role of referee, they could learn to meet the demands of referees and editors. Being asked to act as referee thus could also be seen to constitute a privilege that would bring its own rewards. This psycho-social integration into the entire process of scientific communication could be presented as an accumulation of advantage that accrues to scholars who are perceived to be successful in their field of expertise, just as much as the more tangible advantages of research grants and large labs (Merton, 1973, pp. 439–459; Bazerman, 1988, p. 146). Full membership of the scientific community seemed to involve individuals in the roles of both author and referee, but the ideological roots of this line of thinking are obvious. The structures of an “audit culture” became gradually visible (Power, 1997).

Another remark may be added. While the invention of new editorial positions to handle issues of general policy and of referees to handle issues concerning individual contributions may have helped the editors and their journals maintain authority and trust, the underlying concerns also led to a somewhat paradoxical strategy. The AERA journals, like many other scientific journals, started to publish—mostly annual—lists of scholars who served as referees. Displaying the identity of their (anonymous) reviewers seems necessary to enhance the journals’ prestige in the field. In the light of the institutionalization of more complicated procedures of double-blind peer review, the journals obviously can no longer only build on the visibility and scientific eminence of their editors.

Excerpt 3: A list of “anonymous” referees and editorial consultants included in RER [Footnote 3]

Papers and References

Suggestions for Contributors

As already mentioned, the shifting editorial strategies had an impact on the publication formats of the journals. It has been suggested that the introduction of double-blind peer review has gone along with the standardization of publication output (Bazerman, 1988; Grafton, 1997; Gross et al., 2002). Standardization of publication formats can also be observed in the AERA journals in the course of the 1970s. Shortly before the introduction of RER’s new editorial policy, for example, broad editorial guidelines were communicated: “There are no restrictions on the size of the manuscripts nor on the topics reviewed” (e.g., RER, 1969, inside cover). One decade later, much more detailed instructions were common in all AERA journals. Not only were strict page limitations introduced, but prospective authors were also referred to the publication manual of the American Psychological Association, which included (and includes) detailed guidelines on manuscript structure and content, writing styles, referencing methods and so forth. Manuscripts now also needed to be accompanied by an abstract of 100–150 words. To enable blind review, the list of authors had to be typed on a separate sheet (e.g., RER, 1980, p. 201; AERJ, 1980, pp. 1, 125). As more emphasis was placed on individual scholarship, and as more scholars were pushed to submit manuscripts to peer-reviewed journals, the publication formats also became increasingly regulated and predefined. To make fair comparisons of the scientific quality of different manuscripts possible, and thus to enable fair editorial decisions about acceptance or rejection, standardization seemed imperative.

More detailed “suggestions for contributors,” which pertained to the content and orientation of the articles that could be considered for publication, were also put forward [Footnote 4]. At the time that RER shifted its emphasis from summaries or reviews to critical evaluations, it started to require that all submitted manuscripts would provide an overview of the strengths and shortcomings of the existing knowledge base. Articles now had to advance research on the topics they discussed; would-be authors had to display familiarity with the existing body of specialized knowledge and present their own work as a new, innovative contribution to this body (see also ER, 2006/6, pp. 33–40). It should thereby be taken into account that, in the case of RER, individual articles now often had to be placed in an issue without any substantive relation to the topics being discussed in the other articles of the same issue.

Citation Consciousness

Following the shift of attention towards the published paper as the accredited product of research, papers have increasingly become expected to build upon, and refer to, other publications. They are expected to draw on the authority of other publications, in particular publications which have themselves gone through double-blind peer review. At the same time, they are expected to invite responses, that is, to be cited, and thereby to further advance research (Stichweh, 2001). The readership of the journals at present predominantly consists of potential authors of new journal papers. The focus on the paper hence supports the image of science as a cumulative endeavour; it also supports the image of a self-regulating social system with the scientific literature and its gatekeepers at its core.

In all AERA journals, the reference lists have over time gained much weight. As Fig. 3.2 shows, there was a significant rise in the number of references per article over the last five decades. (For the articles published before 1956 no citation data have been collected by the Web of Science [WoS].) For AERJ, the average number of references per article per year multiplied by a factor of 7.5 in a period of half-a-century, from an average of about 10 references during the mid-1960s and early 1970s to an average of 75 references in the most recent years. Most of the increase took place between the 1980s and the 2010s, thus in 30 years’ time. For RER, it should come as no surprise that the historical change is somewhat different, as this journal was traditionally focused on summarizing and reviewing a broad body of literature. In the course of the last 50 years, however, the average number of references per article doubled within this journal. There is more variation in RER than in AERJ, but RER articles now list on average some 120 publications in their reference sections.

In a broad sense, a “citation consciousness” is thought to be an essential part of good scholarly practice; scholars have to build on and refer to the scholarly work that is relevant to their topic. By citing particular work, they add their voices to already-published papers and to the journals which validated these papers. But, of course, authors can use citations for many different reasons: giving credit to related publications, criticizing previous work, substantiating claims, providing background reading and so on. Moreover, citation does not necessarily indicate use. Because reference lists do not distinguish between these different reasons, it is difficult to make sense of these lists: all references count in the same way. As the reference lists gained increasing importance, however, journals also started to focus attention on the citations their papers received (Pontille & Torny, 2015). Editorial boards were no longer only expected to select papers that were of high quality, but now also had to accept for publication papers that had the potential of becoming oft-cited.

Some of the changed expectations were already discussed in an early reflective AERJ article, which critically looked back at the first AERJ issues: “As an instrument of communication, a journal is a receiver of information to the extent that its articles cite articles published in other journals; it is a source of information to the extent that its articles are cited as bibliographical references in other journals. Assuming that a journal should serve more than an archival function, the latter is the more important index of a journal’s impact” (AERJ, 1968, p. 694). Already in the 1960s, the journals were prompted to reflect on the impact they could have on education and education research (see note 5).

In the same period of time, Eugene Garfield had already started to market science citation indexes and impact factors with his Institute for Scientific Information (see also Garfield, 2004). The Web of Science impact factors indicate that the AERA journals occupy central positions within the field of education research (Vanderstraeten et al., 2016). However, the databases of the Web of Science also show that the relatively high impact factors of the AERA journals are the result of the visibility of a small number of papers. In the case of AERJ, a few papers are now highly referenced (nine are cited more than 500 times, two more than 1000 times), but half of the referenced AERJ papers are cited ten times or fewer, and one-third are cited three times or fewer. In the case of RER, seven papers are now cited more than 1000 times, but two-thirds of the referenced RER papers are cited ten times or fewer, while more than half of them are cited three times or fewer. For all AERA journals (though most markedly for RER), there is a major gap between a tiny core of highly cited papers and the vast majority of the other work, which is barely referenced at all. The overall impact of these journals is very much dependent on the visibility of a few papers.

The highly skewed visibility of the RER and AERJ papers shows that the ways in which referees and editors evaluate submissions differ strongly from the ways in which published papers are referenced or valued in other publications (see note 6). Merton was aware of such divergences, but they did not lead him to question his faith in the peer review system of scientific journals. He rather seemed to believe that improvements in editorial decision-making procedures could bring both forms of evaluation in line with one another (1973, p. 476, note 18). However, given that journal rankings and impact factors have become incorporated into the everyday decision-making routines of (would-be) authors, editors and science administrators alike, it no longer makes sense to conceive of peer review as the epitome of legitimate scientific assessment (see Sïle & Vanderstraeten, 2019). Other mechanisms are now increasingly used to value and measure the products of scientific work. Neither theoretically nor historically are there good reasons to attribute privileged status to the system of double-blind peer review. In the course of the past century, the structural units of science have been more fluid than they might seem. We may therefore also question whether we still need to depict science as a self-regulating social system with the scientific literature and its gatekeepers at its core.

Conclusion

As we have seen, RER was initially conceived of as a journal that had to compile and review research findings, which in most cases were not readily available to its subscribers. The role which this journal initially fulfilled was mainly one of critically reporting on developments in the field of education and education science. For the editors, to publish in their own journal or their special issue was no abuse of privilege. Setting the tone by including work of their own and thereby making public judgements about the work of others was rather understood as the prerogative and even the duty of the editors. In the early decades of RER, the boundaries between the roles of author, reviewer and editor were blurry. The author was a reviewer, while the editor or reviewer also was an author! (Moreover, some editors/authors believed that they could withhold authorship credit from collaborators.)

With the expansion and increasing specialization of education research, structural changes in the publication process took place. The raison d’être of RER shifted: from summarizing existing research to presenting new, original findings. Like other scientific journals (including AERJ), RER came to rely on unsolicited papers. As a consequence, the distinction between different roles, especially roles for reviewers and for authors, became more pronounced. The journals’ editors and reviewers became gatekeepers. As the journals started to rely on (double-blind) peer review as the way to organize and legitimate the editorial selection process, the article itself also acquired increasing importance. The prestige of these evaluation structures delimited the type of output that is valued in the scientific system. Since the latter part of the last century, the peer-reviewed paper has become widely used to identify who counts as a legitimate scientific practitioner and as a qualified expert in particular fields of research (Csiszar, 2018). In education research, as in other fields of research, individual careers have become dependent on publication lists, on authorship of peer-reviewed articles.

The focus on journals, journal articles and double-blind peer review has led to new conceptions of science. More democratic ideas about scientific communities have gained acceptance. Following the expansion and rapid specialization of different fields of research, small elite networks could no longer be entrusted with assessing the claims made in unsolicited submissions. Authorship and authority became more widely distributed; peers have become expected to monitor the flow of ideas within the scientific literature. Likewise, publications have to incorporate cumulative ideals about science. Authors have to highlight their reliance on other authors through citations and references. As our analysis of the education journals shows, the ascent of an “imagined” community of expert peers was the result of changes which took place in the course of the twentieth century. It was not a relatively stable social structure that made it possible to govern scientific activities.

The credit that comes from publishing papers in peer-reviewed journals has privileged certain kinds of scientific activity. The value ascribed to publishing research papers, and the general expectations about the format that those papers ought to take, have a strong influence on the types of projects scholars choose to pursue, the modes of collaboration that they are apt to engage in and the kinds of knowledge that make it into print. Among the ironic consequences of this focus on journals is the legitimation of short, standardized articles as equal to or even preferable to longer texts and books. Short articles, especially when they are stripped of materials that present the broader context and instead focus on standardized presentations of research results, are typically of use only to the most informed inner circle of experts (Johns, 1998). In this sense, the structure of science has contributed to a specialized notion of science that sets it apart from other forms of knowledge exchange. The focus on peer review certainly contributed to securing and strengthening this orientation.

The way we nowadays conceive of scientific exchange—with authors, editors, reviewers, papers, reference lists and so on—is the outcome of a series of historical contingencies. The formation and institutionalization of these basic units made it relatively easy to speak of a self-organizing and self-regulating system of scientific research. If this mode of self-organization provides the basis for the reliability and the cumulative structure of science, as, for example, R. K. Merton put it, it does not seem advisable to call any of its basic units into question. Analyses of the historical contingencies of peer review, however, make it possible to shed light on the very social structure that made modern science possible. As we have seen, the social structure of science has been much less stable than it still seems to be. When the historical contingencies underlying this structure are taken into account, it should not be too difficult to imagine alternatives (see Vanderstraeten, 2019).

Change history

01 January 2022

A correction has been published.

Notes

1. At the same time, more emphasis was put on research methods to help researchers cope with a proliferation of both quantitative and qualitative techniques (e.g., RER, 1939, p. 451, 1956, pp. 323–343). Clearly, some inconsistencies were part of the editorial strategies of the AERA journals.

2. Within AERA, the differential value attributed to peer-reviewed journal papers also became evident. While presentations at annual meetings were valued, more value was attached to what could, after peer review, be published in the AERA journals. “[In the 1950s] … members who proposed a paper for the program were generally assured that it would be accepted” (ER, 1982, p. 9).

3. Of course, the annual publication of lists of consulted referees is also a way to give credit to the scholars on whose expertise the editors relied. Databases such as Publons now also allow reviewers to get credit for work that would otherwise remain invisible. In addition, a small but growing group of periodicals has turned to open peer review in order to give, among other things, recognition to the efforts of their reviewers.

4. A related discussion concerns the rapid diffusion of the IMRAD (Introduction, Methods, Results and Discussion) structure for scientific papers. In the health sciences, it became the only pattern adopted in original papers in the 1980s. In education research, more diversity remained possible, although standards for reporting the findings of empirical research were also imposed (see ER, 2006/6, pp. 33–40).

5. For another illustration, see the aforementioned, provocative AERA presidential address by Bloom. Looking back at what had been accomplished during the past quarter of a century, which was characterized by rapid growth, Bloom argued: “Approximately 70,000 studies were listed in the Review of Educational Research over the past 25 years. Of these 70,000 studies, I regard about 70 as being crucial for all that follows. That is, about 1 out of 1,000 reported studies seem to me to be crucial and significant, approximately 3 studies per year” (AERJ, 1964, p. 218). He thus also questioned the review practices that had prevailed within RER.

6. The Gini indexes of the citation distributions for both journals are quite similar (>0.90). A comparison with the Gini indexes for wealth distributions within countries (for which the index is commonly used) is telling. The distribution of citations to the AERA journals is more unequal than the distribution of wealth in the most unequal countries in the world, like Haiti, South Africa or Botswana (±0.65).
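
As a rough illustration of how a Gini index such as the one reported in note 6 can be computed from citation counts, consider the following minimal sketch in Python. The function name `gini` and the citation counts are hypothetical and chosen only for illustration; they are not the Web of Science data analysed in this chapter, and the rank-weighted formula used here is one common way of computing the index, not necessarily the procedure followed for the figures reported above.

```python
def gini(counts):
    """Gini index of non-negative counts: 0 means all equal, values near 1 mean highly concentrated."""
    values = sorted(counts)          # ascending order is required by the rank-weighted formula
    n = len(values)
    total = sum(values)
    if n == 0 or total == 0:
        return 0.0                   # convention chosen here for empty or all-zero input
    # G = 2 * sum(rank_i * x_i) / (n * sum(x)) - (n + 1) / n, with ranks 1..n
    weighted = sum(rank * x for rank, x in enumerate(values, start=1))
    return 2 * weighted / (n * total) - (n + 1) / n


if __name__ == "__main__":
    # Hypothetical citation counts for 100 papers: many barely cited, two cited very often.
    citations = [0] * 40 + [2] * 30 + [8] * 20 + [60] * 8 + [900, 1400]
    print(f"Gini index of the hypothetical distribution: {gini(citations):.2f}")
```

A value of 0 would indicate that every paper is cited equally often, whereas values close to 1 indicate that a handful of papers attract nearly all citations, which is the pattern described for RER and AERJ.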

References

Baldwin, M. (2015). Making “Nature”: The history of a scientific journal. University of Chicago Press.

Bazerman, C. (1988). Shaping written knowledge: The genre and activity of the experimental article in science. University of Wisconsin Press.

Bornmann, L. (2011). Scientific peer review. Annual Review of Information Science and Technology, 45(1), 197–245.

Csiszar, A. (2018). The scientific journal: Authorship and the politics of knowledge in the nineteenth century. University of Chicago Press.

de Solla Price, D. J. (1963). Little science, big science. Columbia University Press.

Garfield, E. (2004). The intended consequences of Robert K. Merton. Scientometrics, 60(1), 51–61.

Grafton, A. (1997). The footnote: A curious history. Harvard University Press.

Gross, A. G., Harmon, J. E., & Reidy, M. (2002). Communicating science: The scientific article from the 17th century to the present. Oxford University Press.

Hirschauer, S. (2004). Peer Review Verfahren auf dem Prüfstand. Zeitschrift für Soziologie, 33(1), 62–83.

Hollinger, D. A. (1990). Free enterprise and free inquiry: The emergence of laissez-faire communitarianism in the ideology of science in the United States. New Literary History, 21(4), 897–919.

Jacobs, J. A. (2013). In defense of disciplines: Interdisciplinarity and specialization in the research university. University of Chicago Press.

Jacobs, S. (2002). The genesis of ‘scientific community’. Social Epistemology, 16(2), 157–168.

Johns, A. (1998). The nature of the book: Print and knowledge in the making. University of Chicago Press.

Mershon, S., & Schlossman, S. (2008). Education, science, and the politics of knowledge: The American Educational Research Association, 1915–1940. American Journal of Education, 114(3), 307–340.

Merton, R. K. (1973). The sociology of science: Theoretical and empirical investigations. University of Chicago Press.

Pontille, D., & Torny, D. (2015). From manuscript evaluation to article valuation: The changing technologies of journal peer review. Human Studies, 38(1), 57–79.

Powell, W. W. (1985). Getting into print: The decision-making process in scholarly publishing. University of Chicago Press.

Power, M. (1997). The audit society: Rituals of verification. Oxford University Press.

Sïle, L., & Vanderstraeten, R. (2019). Measuring changes in publication patterns in a context of performance-based research funding systems: The case of educational research in the University of Gothenburg (2005–2014). Scientometrics, 118(1), 71–91.

Stichweh, R. (2001). History of scientific disciplines. In N. J. Smelser & P. B. Baltes (Eds.), International encyclopedia of the social and behavioral sciences (Vol. 20, pp. 13727–13731). Elsevier.

Vanderstraeten, R. (2010). Scientific communication: Sociology journals and publication practices. Sociology, 44(3), 559–576.

Vanderstraeten, R. (2011). Scholarly communication in education journals. Social Science History, 35(1), 109–130.

Vanderstraeten, R. (2019). Systems everywhere? Systems Research & Behavioral Science, 36(3), 255–262.

Vanderstraeten, R., Vandermoere, F., & Hermans, M. (2016). Scholarly communication in AERA journals, 1931 to 2014. Review of Research in Education, 40, 38–61.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2022 The Author(s)

About this chapter

Cite this chapter

Vanderstraeten, R. (2022). “‘Disciplining’ Educational Research in the Twentieth Century”. In: Forsberg, E., Geschwind, L., Levander, S., Wermke, W. (eds) Peer review in an Era of Evaluation. Palgrave Macmillan, Cham. https://doi.org/10.1007/978-3-030-75263-7_3

DOI: https://doi.org/10.1007/978-3-030-75263-7_3

Publisher Name: Palgrave Macmillan, Cham

Print ISBN: 978-3-030-75262-0

Online ISBN: 978-3-030-75263-7

eBook Packages: Education (R0)