Abstract

Improved methods for border surveillance are necessary to ensure effective and efficient EU border management. In the border control context, as defined by the Schengen Borders Code, border surveillance is defined as “the surveillance of borders between border crossing points and the surveillance of border crossing points outside the fixed opening hours, in order to prevent persons from circumventing border checks”. This paper presents an overview of FOLDOUT, an EU-funded programme related to border security. FOLDOUT focuses on through-foliage detection in the inner and outermost regions of the EU. The proposed FOLDOUT system will assist border guards by providing prompt detection of illegal activity at borders and by tracing movements and routes prior to arrival in border areas. Different sensors will be developed to detect and locate people and vehicles operating under the cover of trees and other foliage over large areas. To achieve this, a holistic system is presented that fuses advanced ground, aerial and space-based sensors, including sensors mounted on StratobusTM and satellite platforms. The system will be demonstrated in different countries in order to test its performance under different weather conditions. Current results concern the FOLDOUT fusion demonstrator, which takes detections from three independent sensor types and unifies them to eliminate inconsistencies and provide a global view of activity in the border area.



Keywords

- POV

- Surveillance

- Security

- Land borders

- Situational awareness

- Procurement

- Innovation

- Video analytics

- Data fusion

- Research and development

- Sensors

- Radars

- Aerostat

- Boats

- Border authorities

1 Introduction

In the border control context, as defined by the Schengen Borders Code, border surveillance is defined as “the surveillance of borders between border crossing points and the surveillance of border crossing points outside the fixed opening hours, in order to prevent persons from circumventing border checks” [1]. The purpose of border surveillance shall be to prevent unauthorised border crossings, to counter cross-border criminality and to take measures against persons who have crossed the border illegally. A person who has crossed a border illegally and who has no right to stay on the territory of the member state concerned shall be apprehended and made subject to expulsion.

To achieve effective and efficient border management, technologies and personnel should be deployed that (a) supervise border sections between border crossing points, (b) supervise border crossing points (border gates) outside opening hours and (c) control movement in order to prevent persons from circumventing border checks.

In achieving this, and with regard to the surveillance environment, border guards work in shifts on a 24/7 basis, both at central offices and at different locations along the border. In general, border control units are well equipped with state-of-the-art surveillance equipment. Border guards use systems that raise an alarm (a special graphical frame and/or an audible alarm) each time a target is detected, either as an unfiltered detection that must be verified with a high-definition (HD)/thermal camera (fixed or mobile) or through the motion of an object, person or animal. When a C2 operator programs a sensor, this is often done manually or with only partial assistance. However, this requires a twofold skill from the operator: knowledge of the sensors needed to perform the mission and knowledge of how to program all the sensors involved in the mission. These activities greatly increase the operator’s workload without a corresponding gain in effectiveness. In addition, manual programming makes it harder to distinguish between bad configurations and false negatives (especially an issue for RADAR-like systems).

Border authorities are at a disadvantage in preventing illegal border activities in areas where the objects to be detected, such as people and vehicles, are concealed by foliage. Such environments are extremely challenging because people and vehicles are hidden behind opaque layers, and often also under cover of darkness and/or in reduced visibility. For example, if a patrol finds people moving into forests or other harsh and unstructured environments, it is not able to follow them. No border fence or surveillance system can protect the border by itself. Rapid Intervention Troops and/or Border Police Teams must be deployed to the scene and thoroughly informed as soon as possible; this is key to effective border security.

The technologies currently available to border guards do not meet many of their needs. Large areas require monitoring, and since modern technology (such as smartphones for communicating new routes and exchanging information about the activities of border control units) aids smugglers and traffickers, the detection capabilities of border guards must be improved. To monitor border areas effectively, it is necessary not only to be able to scan a specific area but also to predict where the next illegal crossing will take place.

In particular, solutions are needed that not only detect persons and vehicles crossing land borders illegally but can also do so in harsh and unstructured environments, such as under a canopy of foliage. Such solutions should provide border guards with improved situational awareness of border regions, including robust detection of people, vehicles and groups, recognition of abnormal behaviours and prediction of the routes of individuals and small groups. Technologies customized for foliage penetration should be integrated into a quick decision system consisting of autonomous ground-based sensors with high mobility even on challenging and harsh terrain (able to operate 24 hours a day), as well as aerial and/or space-based systems for pre-warning of ground-based sensors and quick interventions.

In this paper, an overview of an EU-funded programme related to border security called FOLDOUT is presented [2]. The main goal of FOLDOUT is to develop, test and demonstrate a system and solution to detect and locate people and vehicles engaged in illegal cross-border activities under the cover of trees and other foliage over large areas. The improvements that FOLDOUT plans to bring to current border surveillance will be evaluated on a threefold basis, through the development of mechanisms for the effective detection of (a) irregular border crossings (illegal migrants and vehicles) in forest terrain, (b) persons and vehicles in a search and rescue situation in forest terrain and (c) illegal transport and entry of goods (e.g. human trafficking and illegal gold mining) in temperate broadleaf forest and mixed terrain. Overall, in order to achieve FOLDOUT’s main goal, a multi-sensorial platform will be designed and developed. This platform shall incorporate end-users’ requirements by integrating ground, air, space and in situ sensor systems.

2 FOLDOUT User Requirements

Based on interviews with FOLDOUT’s end-users (practitioners from border authorities in Greece, Bulgaria, Poland, French Guiana, Finland and Lithuania), system requirements were identified and defined. Interviewees mentioned that the terrain of the surveillance areas varied significantly, including landfill and smooth ground, plains, hills/mountains, rocky ground, large altitude differences, bogs, moraine and uneven woodland. They also stated that they were mostly interested in detecting people and in detecting, recognizing and tracking vehicles. The minimum detection distance before the border crossing, necessary to be able to react and intercept, was reported to be a few kilometres from the border line for a vehicle and several meters for people. Regarding the response time from detection to tracking, sensor fusion and situational awareness, the respondents indicated that, given the complexity of the analysis algorithms employed, the maximum delay should be a few seconds. For satellite systems, the acceptable delay between the event/alarm and data availability was set at almost a day. Integration of the FOLDOUT solution with existing border systems was identified as a key requirement. In a related context, interviewees indicated that they mostly operate Synthetic Aperture Radar (SAR), RADAR and LIDAR sensors.
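The two latency figures above can be read as budgets per processing path. The minimal sketch below encodes them in a lookup table and checks a measured end-to-end delay against the matching budget. The path names are hypothetical, and so are the concrete values: 5 s stands in for "a few seconds" and 24 h for "almost a day".

```python
from datetime import timedelta

# Hypothetical latency budgets derived from the interview findings above.
# Assumptions: "a few seconds" is taken as 5 s; "almost a day" is capped at 24 h.
LATENCY_BUDGETS = {
    "ground_detection_to_alarm": timedelta(seconds=5),
    "satellite_event_to_data": timedelta(hours=24),
}


def within_budget(path: str, measured: timedelta) -> bool:
    """Return True if a measured end-to-end delay respects the budget of its path."""
    if path not in LATENCY_BUDGETS:
        raise KeyError(f"unknown processing path: {path}")
    return measured <= LATENCY_BUDGETS[path]


if __name__ == "__main__":
    print(within_budget("ground_detection_to_alarm", timedelta(seconds=3.2)))  # True
    print(within_budget("satellite_event_to_data", timedelta(hours=30)))       # False
```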

Table 16.1 presents an overview of the end-user requirements.

3 FOLDOUT Architecture Design

To design the FOLDOUT system, we used a service-oriented architecture (SOA), which is more flexible and better suited to large and complex systems. With this approach, we did not have to describe each individual component of the system of systems (SoS) at the structural level. We only had to define a set of services (e.g. command and control (C2) service, data fusion service, sensor service), the interfaces among them and how they collaborate to provide the final service to end-users. The different system components were therefore described as services. For example, each sensor was seen not in terms of its structure, but as an object providing functions/services to other objects (e.g. the C2 service or the fusion service) through well-defined interfaces.
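The service view can be illustrated with structural interfaces. In the minimal sketch below, the sensor, fusion and C2 components are Python `Protocol`s, and one cycle wires them together using only those interfaces. The service names, the `Detection` fields and the toy implementations are hypothetical illustrations, not FOLDOUT's actual interface definitions.

```python
from dataclasses import dataclass
from typing import List, Protocol


@dataclass
class Detection:
    sensor_id: str
    lat: float          # WGS84 latitude, degrees
    lon: float          # WGS84 longitude, degrees
    label: str          # e.g. "person" or "vehicle"
    confidence: float   # in [0, 1]


class SensorService(Protocol):
    """A sensor seen only through the service it offers, not through its structure."""
    def get_detections(self) -> List[Detection]: ...


class FusionService(Protocol):
    def fuse(self, detections: List[Detection]) -> List[Detection]: ...


class C2Service(Protocol):
    def publish(self, fused: List[Detection]) -> None: ...


def run_cycle(sensors: List[SensorService], fusion: FusionService, c2: C2Service) -> int:
    """One collaboration cycle, sensors -> fusion -> C2, through the interfaces only."""
    collected: List[Detection] = []
    for sensor in sensors:
        collected.extend(sensor.get_detections())
    fused = fusion.fuse(collected)
    c2.publish(fused)
    return len(fused)


# Hypothetical toy implementations, used only to exercise the interfaces.
class FixedCamera:
    def get_detections(self) -> List[Detection]:
        return [Detection("cam-1", 41.70, 26.30, "person", 0.8)]


class PassThroughFusion:
    def fuse(self, detections: List[Detection]) -> List[Detection]:
        return list(detections)


class RecordingC2:
    def __init__(self) -> None:
        self.received: List[Detection] = []

    def publish(self, fused: List[Detection]) -> None:
        self.received.extend(fused)


if __name__ == "__main__":
    c2 = RecordingC2()
    print(run_cycle([FixedCamera()], PassThroughFusion(), c2))  # 1
```

A new sensor type can join the cycle by providing `get_detections`. Neither the fusion nor the C2 side needs to know its internal structure, which is the flexibility argument made for SOA above.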

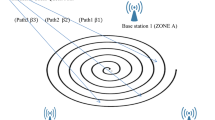

To achieve FOLDOUT’s main goal, a multi-sensor platform integrating ground, air, space and in situ sensing techniques was designed and will be developed, incorporating the end-users’ requirements. FOLDOUT’s architecture design focuses on detecting and tracking activities in foliated areas in the inner and outermost regions of the EU. FOLDOUT will build a system that combines various sensors and technologies and intelligently fuses them into an effective and robust detection platform, as illustrated in Fig. 16.1. To support the detection and tracking activities of border guards in foliated areas, the FOLDOUT system consists of the following main subsystems:

- (a) Sensors layer, which receives information from registered visual and non-visual sensors. This concept for border surveillance includes mobile platforms with or without a wireless connection to ground sensors (radio spectrum, RADAR, LIDAR, EOS, RGB, visible and thermal cameras, acoustic sensors). These platforms are fully autarkic and also provide computational resources for processing and automatically analysing the sensor data. Further miniaturization of specific sensors (camera, acoustic) will facilitate the deployment of resource-limited, lightweight smart ground sensors, used temporarily and complementarily in dense forests. Finally, StratobusTM is introduced to border surveillance as a quasi-static platform able to operate over long timespans at altitudes above 20 km, thereby filling a gap between satellites and UAVs.

- (b) Fusion platform, a high-level processing component responsible for running machine-learning-based data fusion algorithms and for providing the sensors’ fused detections, tracks and alarms to the C2 platform.

- (c) C2 subsystem, which combines the information received from the sensors layer and the fusion platform with external data sources (such as weather conditions and maps). It provides alarms and related information to C2 operators through a GIS-based real-time web platform. The subsystem includes modern command and control tools and provides a live action map with terrain and environment information that is continuously updated in real time. Through this subsystem, border guards can also (i) register and manage (when possible) sensors and (ii) plan the interception of targets using assets from the C2 system.

The C2 subsystem reinforces the decision-making process and provides operation dispatching capabilities. End-users can set and monitor activities, send and receive event-related messages and include ad hoc information from sensors or sensor networks. The following subsections analyse in more detail the ground sensor, StratobusTM and satellite sensor technologies used in the design of FOLDOUT.

3.1 Ground Sensors

Ground sensors considered in FOLDOUT include radio spectrum, LIDAR, EOS, RGB, visible and thermal cameras and acoustic sensors. All these sensors can complement one another in detecting an object. However, detection through foliage challenges state-of-the-art camera-based systems [3,4,5], which essentially break down in the case of fragmented occlusion [6]. Fragmented occlusion occurs very frequently in forests, as tree and bush leaves occlude target objects irregularly. This contrasts with simpler cases such as partial occlusion, where parts of the object are still visible and recognisable. In FOLDOUT, a new kind of ground sensor will be developed: SMARTSENSE (Fig. 16.2). This new sensor technology offers through-foliage detection of persons and vehicles by combining high-resolution thermal (LWIR) and visual (4K RGB) sensor modalities. It fully exploits the information in the sensory data with innovative analysis techniques based on temporal and spatiotemporal processing, neural networks and deep learning. The software is built into the device, forming a smart sensor that runs on NVIDIA’s Jetson Xavier embedded board for efficient computation and data transfer between the built-in optical sensors, the internal memory and the FOLDOUT environment. Using LWIR and RGB data combines complementary properties, for example high-resolution colour information with LWIR contrast at farther distances. Furthermore, thermal images are not influenced by illumination variations and shadows, and objects can be distinguished from the background because the background is normally colder. In addition, thermal infrared tracking can be used in total darkness, where visual cameras have no signal (Table 16.2).

The idea of SMARTSENSE is to generalise from single images to video and to analyse the data spatiotemporally. It has been shown that video captures important additional information for solving the problem of fragmented occlusion [7]. We follow an approach in which we pre-process the raw video data and extract temporal information by learning a model of the static background online. This model is then used to estimate the foreground pixels in each video frame, which potentially form the appearance of occluded target objects. In a second step, these foreground masks are used as region proposals to refine and improve classical two-stage neural object detectors [3, 5] so that they cope better with fragmented occlusion.
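The two-step idea can be sketched with NumPy. First, an online background model yields a foreground mask. Second, that mask keeps only the candidate boxes that have enough foreground support. The sketch swaps in a simple exponential-moving-average background model, and the parameters `alpha`, `threshold` and `min_ratio` are hypothetical. The model actually learned in SMARTSENSE and its coupling to Faster R-CNN or Mask R-CNN proposals are not shown here.

```python
import numpy as np


class RunningAverageBackground:
    """Online background model: exponential moving average of grey-level frames."""

    def __init__(self, alpha: float = 0.05, threshold: float = 25.0) -> None:
        self.alpha = alpha          # learning rate of the background update
        self.threshold = threshold  # absolute difference treated as foreground
        self.background = None

    def apply(self, frame: np.ndarray) -> np.ndarray:
        """Return a boolean foreground mask for `frame`, then update the model."""
        frame = frame.astype(np.float64)
        if self.background is None:
            self.background = frame.copy()
            return np.zeros(frame.shape, dtype=bool)
        mask = np.abs(frame - self.background) > self.threshold
        # Update only pixels judged as background so that targets are not absorbed.
        bg = ~mask
        self.background[bg] += self.alpha * (frame[bg] - self.background[bg])
        return mask


def foreground_ratio(mask: np.ndarray, box: tuple) -> float:
    """Fraction of foreground pixels inside box = (x0, y0, x1, y1), end-exclusive."""
    x0, y0, x1, y1 = box
    region = mask[y0:y1, x0:x1]
    return float(region.mean()) if region.size else 0.0


def filter_proposals(mask: np.ndarray, boxes: list, min_ratio: float = 0.1) -> list:
    """Keep only candidate boxes supported by enough foreground evidence."""
    return [b for b in boxes if foreground_ratio(mask, b) >= min_ratio]


if __name__ == "__main__":
    model = RunningAverageBackground()
    static = np.full((60, 80), 10.0)
    model.apply(static)                    # first frame initialises the model
    frame = static.copy()
    frame[20:40, 30:36:2] += 100.0         # warm target visible only through gaps
    mask = model.apply(frame)
    print(foreground_ratio(mask, (30, 20, 36, 40)))                    # 0.5
    print(filter_proposals(mask, [(30, 20, 36, 40), (0, 0, 10, 10)]))  # [(30, 20, 36, 40)]
```

In the example, only every second column of the target is visible, which mimics fragmented occlusion. The box still keeps half of its pixels as foreground evidence, so the proposal survives even though no single contiguous region shows the whole object.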

3.2 Sensor Mounted on a StratobusTM

StratobusTM is a high-altitude platform station (HAPS), between 100 and 140 meters long and 30 meters in diameter, that fills the operational gap between satellites and unmanned aerial systems (UAS). This airship-based platform can operate at an altitude of 20 km, above air traffic, in the lower layers of the stratosphere. From this operational position, it provides multimission capability with a payload capacity of about 250 kg and about 5 kW of power.

It offers real-time, stationary, satellite-like capabilities over wide areas of more than 100,000 km² for missions of up to 1 year. Powered exclusively by solar energy, it flies autonomously, storing during the day the energy it needs for the night. Thanks to high-density rechargeable batteries, enough solar energy is stored to maintain its position at any time of the year and in wind speeds of up to 25 m/s (90 km/h). Its unique envelope-rotation feature, which keeps it permanently facing the sun, maximises energy collection all year round. The helium-filled hull is made of an advanced, very light, high-strength, UV-resistant material with very low permeability.
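The coverage figure can be checked with simple geometry. For illustration only, assume a circular footprint and a spherical Earth with radius R ≈ 6371 km. An area of 100,000 km² then corresponds to a disc of radius about 178 km, well within the geometric horizon seen from h = 20 km:

```latex
r = \sqrt{\frac{A}{\pi}} = \sqrt{\frac{1.0\times10^{5}\,\mathrm{km}^2}{\pi}} \approx 178\,\mathrm{km},
\qquad
d_{\mathrm{horizon}} \approx \sqrt{2Rh} = \sqrt{2 \times 6371\,\mathrm{km} \times 20\,\mathrm{km}} \approx 505\,\mathrm{km}.
```

In practice, the usable radius depends on the minimum elevation angle required by the payload. The comparison therefore only shows that the stated area is geometrically plausible from 20 km, not how a specific sensor would cover it.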

StratobusTM offers flexibility in missions: it provides permanent surveillance, telecommunication and monitoring services for defence, institutional and civil applications. Thales Alenia Space is planning to finalize the necessary adaptations to provide solutions by 2023.

Thanks to the power and mass available for payloads on StratobusTM, various remote sensors can be envisaged to perform permanent surveillance above a specific area or along the border. StratobusTM could easily be connected to satellites and drones, or to other StratobusTM platforms, via RF or laser links, combining multiple sensors over different areas to achieve global missions. StratobusTM is an unmanned platform, piloted from the ground to perform its mission. It requires a few days of preventive maintenance on the ground every year and a major overhaul after 5 years of operation. Maintenance is also an opportunity to switch payloads for a different mission or to embark newer payloads to remain at the cutting edge of technology. Transfer from the take-off site to its operational station-keeping site takes only a few days using its electric propellers (Fig. 16.3).

The concept airborne RADAR system of CORISTA (Consortium of Research on Advanced Remote Sensing Systems) is an excellent candidate for mounting on board StratobusTM. This low-frequency radar concept was developed by CORISTA and funded by ASI (Italian Space Agency). It is a multi-mode, multi-frequency radar designed for transportability and easy installation. The instrument is completely stand-alone, with the power supply connector as its only electrical interface [8].

Currently, the system operates in sounder mode and in SAR mode at two different P-band carrier frequencies. Radar sounding is a powerful technique for detecting, localizing and identifying dielectric interfaces beneath a planet’s surface: the transmitted radar pulse penetrates below the surface and is reflected by dielectric discontinuities. SAR is a technique for obtaining high-resolution radar images from data collected by side-looking radar instruments carried by aircraft or spacecraft. The entire CORISTA radar system is quite compact, measuring 50 cm × 50 cm × 60 cm and weighing about 35 kg. It can therefore easily be mounted on board relatively small airplanes or helicopters [9].

During the FOLDOUT programme, the feasibility of embarking the CORISTA concept P-band radar on board a stratospheric platform, as well as the necessary mechanical and electronic modifications, will be verified. A radar system with foliage-penetration capability on a HAPS such as StratobusTM would make it possible to monitor forested areas of interest continuously (without delay in the relevant data), at any time (day or night) and regardless of weather conditions, with a direct access link to the instrument.

3.3 Sensor Mounted on a Satellite

The satellite system studied within the FOLDOUT project aims to provide geolocated 2D images and derived products (target detection metadata) using Synthetic Aperture Radar (SAR). The system is based on a constellation of satellites in low Earth orbit (LEO, around 600 km altitude). The satellite SAR operates at a low frequency, 435 MHz, which enables foliage penetration. It can detect metallic objects covered by vegetation (e.g. trucks, infrastructure), with a footprint of up to 100 km × 100 km (swath width). The layout of the system architecture and interfaces is depicted in Fig. 16.4.
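Two textbook relations put these numbers in perspective. The first is the radar wavelength λ = c/f. At 435 MHz this gives about 0.69 m, long compared with leaves and twigs, which is the usual explanation for why low frequencies penetrate foliage. The second is Kepler's period for a circular orbit at about 600 km. The constants below are standard values. The circular-orbit assumption is ours, for illustration.

```python
import math

C = 299_792_458.0            # speed of light, m/s
MU_EARTH = 3.986004418e14    # Earth's standard gravitational parameter, m^3/s^2
R_EARTH = 6_371_000.0        # mean Earth radius, m


def wavelength_m(frequency_hz: float) -> float:
    """Radar wavelength lambda = c / f."""
    return C / frequency_hz


def circular_orbit_period_s(altitude_m: float) -> float:
    """Kepler period of a circular orbit: T = 2*pi*sqrt(a^3 / mu), with a = R + h."""
    a = R_EARTH + altitude_m
    return 2.0 * math.pi * math.sqrt(a ** 3 / MU_EARTH)


if __name__ == "__main__":
    lam = wavelength_m(435e6)
    period = circular_orbit_period_s(600e3)
    print(f"wavelength at 435 MHz: {lam:.2f} m")              # ~0.69 m
    print(f"orbit period at 600 km: {period / 60:.1f} min")   # ~96.5 min
    print(f"orbits per day: {86_400 / period:.1f}")           # ~14.9
```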

The Ground Segment (G/S) performs the main ground-level functions needed to manage the FOLDOUT mission, covering both satellite control and data management:

- Satellite Control System (SCS): performs routine activities on the satellite and executes planned payload operations (mainly instrument data acquisition and transmission to the ground).

- Mission Control System (MCS): is mainly devoted to planning activities. These include preparing the mission plans, resolving possible conflicts on the spacecraft and preparing the commands to be uplinked (on-board safety, attitude and orbit maintenance, sensor operating mode settings, on-board software patches).

- Data Processing System: is the core element in charge of processing the satellite raw data to provide Level 1 (images) and Level 2 (metadata) products to the FOLDOUT interface. Images and metadata are stored in the Archive and Catalogue system.

Centralized and distributed Ground Segment configurations are envisaged and will be ranked according to evaluation criteria that take into account performance (e.g. system response time), cost (CAPEX and OPEX) and complexity:

- Option 1 – Centralized Architecture: envisages a single data-receiving ground station located near the polar region in order to maximise the contact time per day. The data acquisition ground station will be located in Svalbard (or Kiruna). Data Processing and Data Distribution are located in the same centre and are shared by all countries/border authorities.

- Option 2 – Distributed Architecture: envisages a dedicated Data Processing and Distribution Centre for each country, allowing autonomous data processing and storage.
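One common way to rank such options is a weighted sum of normalised criterion scores. The sketch below shows the mechanics under stated assumptions. The weights are hypothetical, and the example scores are placeholders for two generic options, not evaluation results for the centralized or distributed architecture. The paper does not state which ranking method will be used.

```python
from typing import Dict, List, Tuple

# Hypothetical criterion weights (summing to 1); the paper does not specify any.
WEIGHTS = {"response_time": 0.40, "cost": 0.35, "complexity": 0.25}


def rank_options(scores: Dict[str, Dict[str, float]],
                 weights: Dict[str, float] = WEIGHTS) -> List[Tuple[str, float]]:
    """Rank options by the weighted sum of per-criterion scores.

    Scores are assumed to be normalised so that 1 is best and 0 is worst on each
    criterion (e.g. low cost or low complexity -> high score).
    """
    totals = {
        option: sum(weights[c] * crit[c] for c in weights)
        for option, crit in scores.items()
    }
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)


if __name__ == "__main__":
    # Placeholder scores for two generic options; NOT evaluation results.
    example = {
        "option_a": {"response_time": 0.5, "cost": 0.5, "complexity": 0.5},
        "option_b": {"response_time": 1.0, "cost": 0.2, "complexity": 0.2},
    }
    for name, total in rank_options(example):
        print(f"{name}: {total:.2f}")
```

A weighted sum makes the trade-off explicit. For example, a faster response time can outweigh higher CAPEX/OPEX only if response time carries enough weight. The weights themselves would have to come from the end-users.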

The Space Segment consists of a constellation of LEO satellites whose preliminary orbit data are given in Table 16.3. The orbit has been selected according to the following criteria and assumptions:

- Access to all FOLDOUT border areas of interest (AOIs), including French Guiana (global access)

- A SAR instrument access area covering at least the 20° to 45° incidence-angle range (corresponding to about 300 km on the ground)

- Provision of the right operational conditions for the SAR instrument
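The link between the 20° to 45° incidence-angle range and a ground distance of roughly 300 km can be checked with spherical-Earth geometry. In the triangle formed by the Earth's centre, the satellite and the target, the law of sines gives the look angle from the incidence angle. The Earth central angle is then the difference between the two. This is a minimal sketch assuming a spherical Earth and a 600 km altitude. It ignores the actual orbit parameters in Table 16.3.

```python
import math

R_EARTH_KM = 6371.0  # mean Earth radius (spherical-Earth assumption)


def ground_range_km(incidence_deg: float, altitude_km: float) -> float:
    """Ground distance from the sub-satellite point to a target seen at a given
    incidence angle, on a spherical Earth.

    Law of sines in the triangle (Earth centre, satellite, target):
    sin(look) = R / (R + h) * sin(incidence); Earth central angle = incidence - look.
    """
    inc = math.radians(incidence_deg)
    look = math.asin(R_EARTH_KM / (R_EARTH_KM + altitude_km) * math.sin(inc))
    return R_EARTH_KM * (inc - look)


def access_width_km(inc_min_deg: float, inc_max_deg: float, altitude_km: float) -> float:
    """Width on the ground of the band between two incidence angles (one side of the track)."""
    return ground_range_km(inc_max_deg, altitude_km) - ground_range_km(inc_min_deg, altitude_km)


if __name__ == "__main__":
    near = ground_range_km(20.0, 600.0)
    far = ground_range_km(45.0, 600.0)
    print(f"near edge {near:.0f} km, far edge {far:.0f} km, width {far - near:.0f} km")
```

For a 600 km orbit, this gives a band roughly 330 km wide, the same order of magnitude as the figure of about 300 km quoted above. The exact value depends on the orbit altitude and on the Earth model used.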

A single satellite with a SAR instrument provides 100% access to the target AOIs in less than 9 days. AOIs with a small surface area, such as Greece and French Guiana, are covered in about 3 days (always within the 9-day cycle). Countries with a very large surface area, such as Finland, require many passes for complete coverage (about 7 days). To reduce the gap between acquisitions, the number of satellites in orbit must be increased: as Fig. 16.5 shows, two or three satellites reduce the revisit time by a factor of two or three, respectively.
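The scaling with constellation size can be written down directly under an idealised assumption: the satellites are evenly phased, so their access opportunities interleave uniformly. The helper below also inverts the relation to give the number of satellites needed for a target revisit time. Real revisit times depend on the orbit design shown in Fig. 16.5. The single-satellite figures are the ones quoted in the text.

```python
import math

# Single-satellite times to full coverage quoted in the text, in days.
SINGLE_SAT_DAYS = {"all AOIs": 9.0, "Greece / French Guiana": 3.0, "Finland": 7.0}


def revisit_days(single_sat_days: float, n_satellites: int) -> float:
    """Idealised revisit time with n satellites evenly phased in the same orbit."""
    if n_satellites < 1:
        raise ValueError("need at least one satellite")
    return single_sat_days / n_satellites


def satellites_needed(single_sat_days: float, target_days: float) -> int:
    """Smallest n whose idealised revisit time does not exceed target_days."""
    return math.ceil(single_sat_days / target_days)


if __name__ == "__main__":
    for aoi, days in SINGLE_SAT_DAYS.items():
        row = ", ".join(f"{n} sat: {revisit_days(days, n):.1f} d" for n in (1, 2, 3))
        print(f"{aoi}: {row}")
```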

The preliminary satellite SAR performance figures needed to achieve the FOLDOUT target detection objectives, derived from the system requirements and analyses, are provided in Table 16.3.

To validate and refine the satellite SAR performance, airborne datasets representative of the satellite system will be acquired. The campaign will use an airborne P-band SAR developed by CORISTA within an Italian Space Agency (ASI) technology project (contract ref. I/062/10/0 and ref. 2015-029-I.0). It is expected to take place in the Bulgarian border area. Fig. 16.6 provides a snapshot of the layout of the CORISTA system (kindly granted for publication by the Italian Space Agency).

3.4 Fusion of Ground Sensors, StratobusTM and Satellite Data

FOLDOUT’s core functionality is to combine various sensors and technologies and intelligently fuse them into an effective and robust detection platform. The main advantage is that fusing several sensor signals increases detection effectiveness. Furthermore, combining information from various sensors allows a better interpretation of the current situation in the surveyed area (situational awareness) and inference of possible threats (alarming).

Fusion can only be performed on registered data. In the FOLDOUT system, a registration component will convert local coordinate measurements from heterogeneous sensors into a common reference frame (WGS84). If, for instance, SMARTSENSE, StratobusTM and/or satellite sensors detect a target, the target positions will be converted from each sensor’s frame of reference to this common reference frame. The fusion engine will then associate the observations from different sensors so that a target detected by several sensors is unified into a single entity. The fusion module will handle the detection variability likely to exist among sensors. Depending on sensor characteristics, weather conditions, etc., some sensors will detect the object with high confidence, some may produce only a partial detection, and some may not detect the object at all. For instance, satellite data provide large-area coverage but are refreshed only on an hourly basis. StratobusTM data, in contrast, should be available almost permanently and in real time, but over a smaller area. SMARTSENSE detections may correspond to a smaller selected area at which the cameras are pointed. The fusion module will thus aggregate evidence from all sensors into individual “heat maps”, which can be interpreted as time-delayed detection probability maps. The FOLDOUT fusion system can also be used with current technology. The sensors most commonly employed in current border surveillance include visible and thermal cameras, PIR sensors, seismic sensors, RADAR and LIDAR. While current solutions provide end-users with stand-alone systems, FOLDOUT will fuse all information and give end-users a unified picture of activity.
Fusion will also resolve possible inconsistencies in object classification (person/vehicle) and synchronise heterogeneous data. The data input to fusion are themselves heterogeneous and may include the location of detected objects, object classification, type of material, etc.
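The registration, association and unification steps described above can be illustrated end to end. The paper does not specify the algorithms, so this minimal sketch rests on several assumptions. Each sensor reports target offsets in a local east/north frame relative to its own known WGS84 position. Registration uses a spherical small-offset approximation; a production system would use a proper ellipsoidal conversion from a geodesy library. Association uses greedy distance gating with a hypothetical 50 m gate. Classification conflicts are resolved by a confidence-weighted vote. Time synchronisation and heat maps are not modelled.

```python
import math
from collections import defaultdict
from dataclasses import dataclass, field
from typing import List, Tuple

R_EARTH_M = 6_378_137.0  # WGS84 semi-major axis, used here as a spherical radius


@dataclass
class LocalDetection:
    sensor_id: str
    sensor_lat: float   # sensor position, WGS84 degrees
    sensor_lon: float
    east_m: float       # target offset in the sensor's local east/north frame
    north_m: float
    label: str          # "person" or "vehicle"
    confidence: float   # assumed to be in (0, 1]


@dataclass
class FusedTarget:
    lat: float
    lon: float
    label: str
    sensors: List[str] = field(default_factory=list)


def to_wgs84(d: LocalDetection) -> Tuple[float, float]:
    """Registration: small-offset approximation, local east/north metres -> lat/lon."""
    dlat = math.degrees(d.north_m / R_EARTH_M)
    dlon = math.degrees(d.east_m / (R_EARTH_M * math.cos(math.radians(d.sensor_lat))))
    return d.sensor_lat + dlat, d.sensor_lon + dlon


def distance_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Haversine great-circle distance on a sphere of radius R_EARTH_M."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lon2 - lon1)
    h = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * R_EARTH_M * math.asin(math.sqrt(h))


def fuse(detections: List[LocalDetection], gate_m: float = 50.0) -> List[FusedTarget]:
    """Register, associate (greedy gating) and unify detections into single targets."""
    clusters: List[List[Tuple[float, float, LocalDetection]]] = []
    for d in detections:
        lat, lon = to_wgs84(d)
        for c in clusters:
            # Gate against the first member of each existing cluster.
            if distance_m(lat, lon, c[0][0], c[0][1]) <= gate_m:
                c.append((lat, lon, d))
                break
        else:
            clusters.append([(lat, lon, d)])

    fused = []
    for c in clusters:
        w = sum(d.confidence for _, _, d in c)
        votes = defaultdict(float)
        for _, _, d in c:
            votes[d.label] += d.confidence  # confidence-weighted class vote
        fused.append(FusedTarget(
            lat=sum(lat * d.confidence for lat, _, d in c) / w,
            lon=sum(lon * d.confidence for _, lon, d in c) / w,
            label=max(votes, key=votes.get),
            sensors=[d.sensor_id for _, _, d in c],
        ))
    return fused


if __name__ == "__main__":
    dets = [
        LocalDetection("cam", 41.0, 26.0, 100.0, 0.0, "person", 0.9),
        LocalDetection("radar", 41.0, 26.0, 110.0, 5.0, "vehicle", 0.4),
        LocalDetection("pir", 41.0, 26.0, -500.0, 0.0, "person", 0.7),
    ]
    for t in fuse(dets):
        print(t.label, t.sensors, f"({t.lat:.5f}, {t.lon:.5f})")
```

In the example, the camera and radar detections lie about 11 m apart after registration. They are therefore unified into one target, and the higher-confidence "person" label wins over the radar's "vehicle". The PIR detection 600 m away remains a separate entity.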

The last layer of analysis on fused objects is situational awareness and alarming. This component reasons about the behaviour of detected targets and the current situation to decide whether to issue an alarm [10,11,12]. The current situation will be assessed partly by modelling threat situations derived from end-user requirements. It will also be assessed by querying the C2 system in real time for external/contextual data (including map information such as nearby routes to the border, type of terrain, elevation, etc.). Such contextual information will allow probabilistic inference of the targets’ next movements, so that border guards can take appropriate action and have sufficient response time.
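The paper does not say which inference method will be used, so the following is only one standard option, named plainly here. It is a Kalman prediction step with a constant-velocity motion model, followed by the Gaussian probability that a target has crossed a border line. The sketch assumes a local metric frame in which the border is the straight line north = 0. The noise intensity `q` and the example target are hypothetical. Terrain, routes and other contextual constraints are not modelled.

```python
import math
import numpy as np


def cv_predict(state: np.ndarray, cov: np.ndarray, dt: float, q: float = 0.01):
    """Kalman prediction step for a 2D constant-velocity motion model.

    state = [east, north, v_east, v_north] in metres and m/s (local frame);
    q is an assumed white-acceleration noise intensity in m^2/s^3.
    """
    F = np.array([[1.0, 0.0, dt, 0.0],
                  [0.0, 1.0, 0.0, dt],
                  [0.0, 0.0, 1.0, 0.0],
                  [0.0, 0.0, 0.0, 1.0]])
    # Continuous white-noise acceleration model, discretised for each axis.
    q_axis = q * np.array([[dt**3 / 3.0, dt**2 / 2.0],
                           [dt**2 / 2.0, dt]])
    Q = np.zeros((4, 4))
    Q[np.ix_([0, 2], [0, 2])] = q_axis   # east position / east velocity
    Q[np.ix_([1, 3], [1, 3])] = q_axis   # north position / north velocity
    return F @ state, F @ cov @ F.T + Q


def prob_north_of(line_north: float, state: np.ndarray, cov: np.ndarray) -> float:
    """P(north coordinate > line_north) under the Gaussian predicted distribution."""
    mean, std = state[1], math.sqrt(cov[1, 1])
    return 0.5 * math.erfc((line_north - mean) / (std * math.sqrt(2.0)))


if __name__ == "__main__":
    # Hypothetical target 200 m south of a border modelled as the line north = 0,
    # walking north at 1.2 m/s.
    x = np.array([0.0, -200.0, 0.0, 1.2])
    P = np.diag([25.0, 25.0, 0.25, 0.25])
    x2, P2 = cv_predict(x, P, dt=120.0)
    print(f"predicted north: {x2[1]:.1f} m, P(crossed): {prob_north_of(0.0, x2, P2):.2f}")
```

The growing covariance is what makes the prediction probabilistic. The further ahead the system looks, the wider the predicted spread becomes, so an alarm rule can trade lead time against certainty.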

4 Scenarios Description

The architecture will lead to the development of the FOLDOUT platform, which will be tuned and tested with data collected at four different EU land borders under realistic and harsh conditions, in Bulgaria, Finland, French Guiana and Greece. This will overcome the main difficulty in developing such systems: the lack of representative data for tuning and testing. As a minimum, the following use cases will be considered:

(i) detection of irregular border crossings (illegal migrants and vehicles) in forest terrain for border surveillance (Bulgarian and Greek scenarios);

(ii) detection of illegal transport and entry of goods (trafficking) in temperate broadleaf forest and mixed terrain for border surveillance (French Guiana scenario); and

(iii) detection of persons and vehicles in a search and rescue operation in forest terrain.

For each scenario, practitioners will present relevant use cases. Examples include using an unmanned vehicle to confirm and verify a detection made by mobile and fixed ground surveillance assets, or re-planning and re-tasking a mission that shifts from tracking illegal immigrants to search and rescue.

In addition, optimizing the data analytics requires reference data that satisfy two major constraints:

- (a) Representativeness: the reference data must correspond to real-life scenarios, including events/actions such as those encountered by border guards in their daily practice. The data quality must correspond to that of the data used for investigation (e.g. video surveillance cameras), and the data must sufficiently represent the different variations in the environment and the events.

- (b) Availability of “ground truth” annotation corresponding to the data: the events/persons/objects to be detected must be known and precisely documented in order to measure performance. For example, to measure person-tracking performance, the ground truth must document the actual tracks of the various persons.

In this way, the scenarios serve two purposes. On the one hand, they will form a body of preliminary work to be used as a baseline for end-user expectations. On the other hand, the use cases will be extremely important for technical adjustments, in particular concerning the necessary sensors, interfaces and input/output.
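To illustrate how ground-truth annotation of the kind described in (b) supports performance measurement, the minimal sketch below compares an estimated track with an annotated one. It reports the mean position error over shared timestamps and the fraction of ground-truth timestamps matched within a hypothetical hit radius. This is a simplified per-timestamp comparison chosen for illustration. Established tracking benchmarks use metrics such as MOTA/MOTP or HOTA, which also account for identity switches between multiple targets.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float]  # (east, north) in metres, local frame


def track_errors(ground_truth: Dict[float, Point], estimate: Dict[float, Point],
                 hit_radius_m: float = 10.0) -> Tuple[float, float]:
    """Compare an estimated track with an annotated ground-truth track.

    Both tracks map timestamps to positions. Returns (mean position error over
    timestamps present in both, fraction of ground-truth timestamps that have an
    estimate within hit_radius_m).
    """
    common = sorted(set(ground_truth) & set(estimate))
    errors = [math.dist(ground_truth[t], estimate[t]) for t in common]
    mean_error = sum(errors) / len(errors) if errors else float("nan")
    hits = sum(1 for e in errors if e <= hit_radius_m)
    coverage = hits / len(ground_truth) if ground_truth else 0.0
    return mean_error, coverage


if __name__ == "__main__":
    gt = {0.0: (0.0, 0.0), 1.0: (1.0, 0.0), 2.0: (2.0, 0.0), 3.0: (3.0, 0.0)}
    est = {0.0: (0.0, 3.0), 1.0: (1.0, 4.0), 2.0: (2.0, 20.0)}
    print(track_errors(gt, est))  # (9.0, 0.5)
```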

5 Current Results

To demonstrate the core capabilities of the FOLDOUT system, its main components (sensor layer, fusion, command and control) have been deployed and connected to some of the sensors most commonly employed in current border surveillance, namely visible and thermal cameras and PIR sensors. Figure 16.7 shows the layout of the sensors deployed for this demonstration at a simulated border between two countries (Fig. 16.8).

Using the FOLDOUT system, border guards can explore terrain activity on a global map instead of having to look at separate stand-alone systems. The system will not only unify detections from different sensor types but will also analyse the confidence of individual detections to decide whether a sensor might be firing incorrectly, thereby decreasing the overall false-positive rate. Indeed, an important aspect of through-foliage detection is the frequent occurrence of false-positive detections caused by vegetation, such as branches and leaves, moving in wind and rain. Many sensor technologies employed in border security, such as RADAR and passive thermal IR detectors, are affected by this. The result is additional workload for operators, who must manually verify the situation in visual or thermal IR camera images. Tests of the FOLDOUT demonstrator in strong wind confirmed the appearance of false-positive detections at the level of individual sensors, which could successfully be filtered out by the FOLDOUT system. The proposed multi-sensor fusion approach can thus potentially reduce the number of false positives by filtering out detections that do not coincide in the spatiotemporal domain. The challenges of through-foliage detection are therefore addressed in FOLDOUT in part by making fusion one of its core functionalities.
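The filtering principle stated above can be sketched as follows: a detection is dropped unless it coincides in space and time with a detection from another sensor. The thresholds `max_dist_m` and `max_dt_s` are hypothetical, and the events are assumed to be already registered in a common local frame. The demonstrator's actual rules and parameters are not given in the paper.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Event:
    sensor_id: str
    t: float      # seconds
    east: float   # metres, common local frame (after registration)
    north: float


def coincidence_filter(events: List[Event], max_dist_m: float = 30.0,
                       max_dt_s: float = 5.0) -> List[Event]:
    """Keep events confirmed by at least one *other* sensor nearby in space and time."""
    kept = []
    for e in events:
        confirmed = any(
            o.sensor_id != e.sensor_id
            and abs(o.t - e.t) <= max_dt_s
            and math.hypot(o.east - e.east, o.north - e.north) <= max_dist_m
            for o in events
        )
        if confirmed:
            kept.append(e)
    return kept


if __name__ == "__main__":
    events = [
        Event("pir-1", 10.0, 0.0, 0.0),
        Event("cam-1", 12.0, 5.0, 5.0),
        Event("pir-2", 11.0, 400.0, 0.0),   # e.g. triggered by branches moving in wind
    ]
    print([e.sensor_id for e in coincidence_filter(events)])  # ['pir-1', 'cam-1']
```

This hard rule would also suppress a genuine target seen by only one sensor. A real fusion system would more likely weigh each detection's confidence than discard unconfirmed ones outright. The pairwise check is O(n²), which is acceptable only for small event windows.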

6 Conclusion

The ambitions of this concept for border surveillance with respect to air and space are threefold:

- (i) To improve situational awareness by fusing advanced aerial and space-based sensor platforms into one surveillance solution. Currently, no high-altitude, fixed sensor platform offering an optimal, unobstructed field of view exists for border surveillance. StratobusTM, as a quasi-static high-altitude sensor platform, promises to fill this gap.

- (ii) To exploit low-frequency SAR in the P and L bands, which in principle can penetrate foliage. Tomography and interferometry techniques with change detection are being developed to extract suitable data from the SAR sensor.

- (iii) To fuse aerial, space-based and ground-based sensor data by registering multiple events in a common geographical map. Ground sensors play a vital role in border surveillance, hence the idea of mobile, autarkic smart sensors that allow better coverage of dense forest. Ground and airborne sensors will together provide enhanced coverage of the area, which is particularly important for FOLDOUT’s through-foliage challenge. Abnormality detection is performed by behavioural analysis within the common geographical map, also taking contextual information into account.

It is believed that the developed concept of border surveillance will also be fruitful for environmental studies, where, instead of vehicles and persons, larger gradual spatiotemporal changes and specific local patterns are of interest.

FOLDOUT has received funding from the European Union's Horizon 2020 research and innovation programme under grant agreement no. 787021. The opinions expressed in this paper reflect only the authors' views and in no way reflect the European Commission's opinions. The European Commission is not responsible for any use that may be made of the information it contains.

Change history

24 June 2022

Chapter 16, “FOLDOUT: A Through Foliage Surveillance System for Border Security” was previously published non-open access. It has now been changed to open access under a CC BY 4.0 license and the copyright holder updated to ‘The Author(s)’. The book has also been updated with this change.

Bibliography

Regulation (EC) No 562/2006 of the European Parliament and of the Council of 15 March 2006, establishing a Community Code on the rules governing the movement of persons across borders, OJ 2006, L 105, p.1.

Grant Agreement "FOLDOUT" Number 787021, European Commission H2020, April 2018. (www.foldout.eu).

Ren, S., He, K., Girshick, R., & Sun, J. (2017). Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Transactions on Pattern Analysis and Machine Intelligence, 39(6), 1137–1149. https://doi.org/10.1109/TPAMI.2016.2577031.

Liu, W., et al. (2016). SSD: Single shot multibox detector. In B. Leibe, J. Matas, N. Sebe, & M. Welling (Eds.), Computer vision – ECCV 2016. ECCV 2016. Lecture notes in computer science (Vol. 9905). Cham: Springer.

He, K., Gkioxari, G., Dollár, P., & Girshick, R. (2020). Mask R-CNN. IEEE Transactions on Pattern Analysis and Machine Intelligence, 42(2), 386–397. https://doi.org/10.1109/TPAMI.2018.2844175.

Pegoraro, J., & Pflugfelder, R. (2020). The problem of fragmented occlusion in object detection. To appear at the Austrian Joint Computer Vision and Robotics Workshop, Sept 2020.

Black, M. J., & Anandan, P. (1996). The robust estimation of multiple motions: Parametric and piecewise-smooth flow fields. Computer Vision and Image Understanding, 63(1), 75–104.

Papa, C., et al. (2014). Design and validation of a multimode multifrequency VHF/UHF airborne radar. IEEE Geoscience and Remote Sensing Letters, 11(7), 1260–1264.

Perna, S., et al. (2019). The ASI P-Band Helicopter-Borne integrated sounder-SAR system: Preliminary results of the 2018 Morocco desert campaign. Submitted to the International Geoscience and Remote Sensing Symposium (IGARSS), Yokohama, Japan.

Patino, L., & Ferryman, J. (2016). Semantic modelling for behavior characterization and threat detection. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops.

Patino, L., Ferryman, J., & Beleznai, C. (2015). Abnormal behaviour detection on queue analysis from stereo cameras. In AVSS 2015 - 12th IEEE International Conference on Advanced Video and Signal Based Surveillance.

Patino, L., & Ferryman, J. (2014). Multiresolution semantic activity characterization and abnormality discovery in videos. Applied Soft Computing, 25, 485–495.

Acknowledgement

This article is based on research, inter alia, undertaken in the context of the EU-funded FOLDOUT project, which has received funding from the European Union's Horizon 2020 research and innovation programme under grant agreement no. 787021. The views expressed in this article are those of the authors alone and are in no way intended to reflect those of the European Commission.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2021 The Author(s)

About this chapter

Cite this chapter

Bolakis, C. et al. (2021). FOLDOUT: A Through Foliage Surveillance System for Border Security. In: Akhgar, B., Kavallieros, D., Sdongos, E. (eds) Technology Development for Security Practitioners. Security Informatics and Law Enforcement. Springer, Cham. https://doi.org/10.1007/978-3-030-69460-9_16

DOI: https://doi.org/10.1007/978-3-030-69460-9_16

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-69459-3

Online ISBN: 978-3-030-69460-9

eBook Packages: Engineering, Engineering (R0)