Abstract

The spread of fake news remains a serious global issue; understanding and curtailing it is paramount. One way of differentiating between deceptive and truthful stories is by analyzing their coherence. This study explores the use of topic models to analyze the coherence of cross-domain news shared online. Experimental results on seven cross-domain datasets demonstrate that fake news shows a greater thematic deviation between its opening sentences and its remainder.

1 Introduction

The impact of news on our daily affairs is greater than it has ever been. Fabrication and dissemination of falsehood have become politically lucrative endeavors, thereby harming public discourse and worsening political polarization [1]. These motivations have led to a complex and continuously evolving phenomenon mainly characterized by dis- and misinformation, commonly collectively referred to as fake news [2]. This denotes various kinds of false or unverified information, which may vary based on their authenticity, intention, and format [3]. Shu et al. [4] define it as “a news article that is intentionally and verifiably false.”

The dissemination of fake news is increasing, and because it appears in various forms and self-reinforces [1, 3], it is difficult to erode. Therefore, there is an urgent need for increased research in understanding and curbing it. This paper considers fake news that appears in the form of long online articles and explores the extent of internal consistency within fake news vis-à-vis legitimate news. In particular, we run experiments to determine whether thematic deviations—i.e., a measure of how dissimilar topics discussed in different parts of an article are—between the opening and remainder sections of texts can be used to distinguish between fake and real news across different news domains.

1.1 Motivation

A recent study suggests that some readers may skim through an article instead of reading the whole content because they overestimate their political knowledge, while others may hastily share news without reading it fully, for emotional affirmation [5]. This presents bad actors with the opportunity of deftly interspersing news content with falsity. Moreover, the production of fake news typically involves the collation of disjoint content and lacks a thorough editorial process [6].

Topics discussed in news pieces can be studied to ascertain whether the article thematically deviates between its opening and the rest of the story, or if it remains coherent throughout. Thematic analysis is useful here for two reasons. First, previous studies show that the coherence between units of discourse (such as sentences) in a document is useful for determining its veracity [6, 7]. Second, analysis of thematic deviation can identify general characteristics of fake news that persist across multiple news domains.

Although topics have been employed as features [8,9,10], they have not been applied to study the unique characteristics of fake news. Research efforts in detecting fake news through thematic deviation have thus far focused on spotting incongruences between pairs of headlines and body texts [11,12,13,14]. Yet, thematic deviation can also exist within the body text of a news item. Our focus is to examine these deviations to distinguish fake from real news.

To the best of the authors’ knowledge, this is the first work that explores thematic deviations in the body text of news articles to distinguish between fake and legitimate news.

2 Related Work

The coherence of a story may be indicative of its veracity. For example, Rubin and Lukoianova [7] demonstrated this by applying Rhetorical Structure Theory [15] to study the discourse of deceptive stories posted online. They found that a major distinguishing characteristic of deceptive stories is that they are disjunctive. Also, while truthful stories provide evidence and restate information, deceptive ones do not. This suggests that false stories may tend to thematically deviate more due to disjunction, while truthful stories are likely to be more coherent due to restatement. Similarly, Karimi and Tang [6] investigated the coherence of fake and real news by learning hierarchical structures based on sentence-level dependency parsing. Their findings also suggest that fake news documents are less coherent.

Topic models are unsupervised algorithms that aid the identification of themes discussed in large corpora. One example is Latent Dirichlet Allocation (LDA), a generative probabilistic model that aids the discovery of latent themes or topics in a corpus [16]. Vosoughi et al. [17] used LDA to show that false rumor tweets tend to be more novel than true ones. Novelty was evaluated using three measures: Information Uniqueness, Bhattacharyya Distance, and Kullback-Leibler Divergence. Likewise, Ito et al. [18] used LDA to assess the credibility of Twitter users by analyzing the topical divergence of their tweets from those of other users. They also assessed the veracity of users’ tweets by comparing the topic distributions of new tweets against historically discussed topics. Divergence was computed using the Jensen-Shannon Divergence, Root Mean Squared Error, and Squared Error. Our work primarily differs from these two in that we analyze full-length articles instead of tweets.

3 Research Goal and Contributions

This research aims to assess the importance of internal consistency within articles as a high-level feature to distinguish between fake and real news stories across different domains. We set out to explore whether the opening segment of a fake news article thematically deviates from the rest of the article significantly more than the opening segment of an authentic article does. We experiment with seven datasets which collectively cover a wide variety of news domains, from business to celebrity to warfare. Deviations are evaluated by calculating the distance between the topic distribution of the opening part of an article and that of its remainder. We take the first five sentences of an article as its opening segment.

Our contributions are summarized as follows:

1. We present new insights towards understanding the underlying characteristics of fake news, based on thematic deviations between the opening and remainder parts of news body text.

2. We carry out experiments on seven cross-domain datasets. The results demonstrate the effectiveness of thematic deviation for distinguishing fake from real news.

4 Experiments

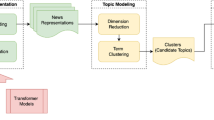

We hypothesize the following: the opening sentences of a false news article will tend to thematically deviate more from the rest of it, as compared to an authentic article. To test this hypothesis, we carried out experiments in the manner shown in Algorithm 1. We use Python and open-source packages for all computations.

Procedure.

All articles (\( S_{bg} \)) are each split into two parts: the first \( x \) sentences (see Note 1) and the remaining \( y \). Next, \( N \) topics are obtained from \( x \) and \( y \) using an LDA model trained with Gensim (Note 2) on the entire dataset. For topics \( i = 1, \ldots, m \), let \( p_{x} = \left( p_{x1}, \ldots, p_{xm} \right) \) and \( p_{y} = \left( p_{y1}, \ldots, p_{ym} \right) \) be two vectors of topic distributions, whose entries denote the prevalence of topic \( i \) in the opening text \( x \) and the remainder \( y \) of an article, respectively. The following metrics are used to measure the topical divergence between parts \( x \) and \( y \) of an article:

- Chebyshev (\( D_{Ch} \)):

$$ D_{Ch}\left( p_{x}, p_{y} \right) = \max_{i} \left| p_{xi} - p_{yi} \right| \tag{1} $$

- Euclidean (\( D_{E} \)):

$$ D_{E}\left( p_{x}, p_{y} \right) = \left\| p_{x} - p_{y} \right\| = \sqrt{\sum\nolimits_{i = 1}^{m} \left( p_{xi} - p_{yi} \right)^{2}} \tag{2} $$

- Squared Euclidean (\( D_{SE} \)):

$$ D_{SE}\left( p_{x}, p_{y} \right) = \sum\nolimits_{i = 1}^{m} \left( p_{xi} - p_{yi} \right)^{2} \tag{3} $$

Intuitively, the Chebyshev distance is the largest difference in the prevalence of any single topic between \( x \) and \( y \). The Euclidean distance measures how “far” the two topic distributions are from one another, while the Squared Euclidean distance is simply the square of that “farness”.
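The three measures in Eqs. (1) to (3) can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not the code used in the study. The helper `dense_topic_vector` and the example distributions are hypothetical; the helper assumes topics arrive as sparse `(topic_id, probability)` pairs, with omitted topics treated as zero.

```python
import math


def dense_topic_vector(sparse_topics, m):
    """Expand (topic_id, probability) pairs into a list of length m.

    Topics missing from the sparse input are assumed to have probability 0.
    """
    vec = [0.0] * m
    for topic_id, prob in sparse_topics:
        vec[topic_id] = prob
    return vec


def chebyshev(p_x, p_y):
    """Eq. (1): largest absolute per-topic difference."""
    return max(abs(a - b) for a, b in zip(p_x, p_y))


def squared_euclidean(p_x, p_y):
    """Eq. (3): sum of squared per-topic differences."""
    return sum((a - b) ** 2 for a, b in zip(p_x, p_y))


def euclidean(p_x, p_y):
    """Eq. (2): square root of the squared Euclidean distance."""
    return math.sqrt(squared_euclidean(p_x, p_y))


# Hypothetical topic distributions over m = 4 topics
p_x = dense_topic_vector([(0, 0.7), (1, 0.3)], 4)            # opening
p_y = dense_topic_vector([(0, 0.1), (1, 0.3), (2, 0.6)], 4)  # remainder

print(chebyshev(p_x, p_y), euclidean(p_x, p_y), squared_euclidean(p_x, p_y))
```

For these made-up vectors the opening and remainder differ by 0.6 on topics 0 and 2, so the distances come out at roughly 0.6, 0.85, and 0.72 respectively.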

Finally, the average and median values of each distance are calculated across all fake (\( S_{f} \)) and real (\( S_{r} \)) articles. We repeated these steps with varying values of \( N \) (from 10 to 200 topics) and \( x \) (from 1 to 5 sentences).
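The aggregation step can be sketched as follows. This is an illustrative outline rather than the study's code: `summarize_deviations` is a hypothetical helper, the label strings are assumptions, and the grid of topic counts is taken from the values of \( N \) reported in the results.

```python
from itertools import product
from statistics import mean, median

N_VALUES = [10, 20, 30, 40, 50, 100, 150, 200]  # topic counts reported in the results
X_VALUES = [1, 2, 3, 4, 5]                      # opening lengths, in sentences


def summarize_deviations(deviations, labels):
    """Group per-article deviations by label and return mean, median, and count."""
    groups = {}
    for deviation, label in zip(deviations, labels):
        groups.setdefault(label, []).append(deviation)
    return {
        label: {"mean": mean(values), "median": median(values), "n": len(values)}
        for label, values in groups.items()
    }


# Every (N, x) combination for which the procedure would be repeated
settings = list(product(N_VALUES, X_VALUES))

# Hypothetical deviations for five articles
summary = summarize_deviations(
    [0.2, 0.4, 0.6, 0.1, 0.3],
    ["fake", "fake", "fake", "real", "real"],
)
print(len(settings), summary)
```

Reporting the median alongside the mean guards against a few extreme articles dominating the comparison between the two classes.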

Pre-processing.

Articles are split into sentences using the NLTK package (Note 3). Each sentence is tokenized and lowercased to form a list of words, from which stop words are removed. Bigrams are then formed and added to the vocabulary. Next, each document is lemmatized using spaCy (Note 4), and only noun, adjective, verb, and adverb lemmas are retained. A dictionary is formed by applying these steps to \( S_{bg} \). Each document is converted into a bag-of-words (BoW) format, which is used to create an LDA model (\( {\mathcal{M}}_{bg} \)). Fake and real articles are subsequently pre-processed in the same way (i.e., from raw text data to BoW format) before topics are extracted from them.
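To make the order of these steps concrete, the following is a deliberately simplified, standard-library-only sketch. It is a stand-in, not the study's pipeline: the regex tokenizer, the tiny stop-word list, and the frequency threshold for bigrams are all assumptions, and lemmatization and part-of-speech filtering (done with spaCy in the study) are omitted entirely.

```python
import re
from collections import Counter

# Illustrative stop-word list only; a real pipeline would use a fuller one.
STOP_WORDS = {"the", "a", "an", "of", "in", "on", "and", "to", "is", "was"}


def tokenize(text):
    """Lowercase, keep alphabetic tokens, and drop stop words."""
    return [t for t in re.findall(r"[a-z]+", text.lower()) if t not in STOP_WORDS]


def frequent_bigrams(docs, min_count=2):
    """Adjacent token pairs seen at least min_count times across all docs."""
    counts = Counter()
    for doc in docs:
        counts.update(zip(doc, doc[1:]))
    return {pair for pair, count in counts.items() if count >= min_count}


def add_bigrams(doc, bigrams):
    """Append joined bigram tokens (e.g. 'prime_minister') to a document."""
    return doc + [f"{a}_{b}" for a, b in zip(doc, doc[1:]) if (a, b) in bigrams]


def build_dictionary(docs):
    """Map each vocabulary token to an integer id."""
    vocab = sorted({token for doc in docs for token in doc})
    return {token: idx for idx, token in enumerate(vocab)}


def to_bow(doc, dictionary):
    """Convert a token list into sorted (token_id, count) pairs."""
    counts = Counter(dictionary[t] for t in doc if t in dictionary)
    return sorted(counts.items())


# Two hypothetical one-sentence "articles"
raw = ["The prime minister spoke in London.", "A prime minister was elected."]
docs = [tokenize(text) for text in raw]
bigrams = frequent_bigrams(docs)
docs = [add_bigrams(doc, bigrams) for doc in docs]
dictionary = build_dictionary(docs)
corpus = [to_bow(doc, dictionary) for doc in docs]
print(docs, corpus)
```

The resulting list of `(token_id, count)` pairs per document is the kind of bag-of-words input that a topic model is then trained on.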

We consider the opening sentences of articles to be sufficient for capturing the lead or “opening theme” of the story, which will likely fall within the first paragraph. The first paragraph may in some cases be either too short or long for this, especially for fake articles that often lack a proper structure. Overly short or lengthy texts will influence the extraction of topics more adversely than if a set number of sentences are used.
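A fixed-size split of this kind is simple to express in code. The sketch below assumes the article has already been segmented into a list of sentences; `split_article` is a hypothetical name, and the length check mirrors the condition stated in Note 1 that only articles with at least \( x + 1 \) sentences are used.

```python
def split_article(sentences, x=5):
    """Return (opening, remainder): the first x sentences and the rest.

    Raises ValueError when the article has fewer than x + 1 sentences,
    since the remainder would otherwise be empty.
    """
    if x < 1:
        raise ValueError("x must be at least 1")
    if len(sentences) < x + 1:
        raise ValueError(f"article needs at least {x + 1} sentences, got {len(sentences)}")
    return sentences[:x], sentences[x:]


# Hypothetical article of seven sentences
article = ["s1", "s2", "s3", "s4", "s5", "s6", "s7"]
opening, remainder = split_article(article, x=5)
print(opening, remainder)
```

Using a sentence count rather than the first paragraph keeps the size of the opening comparable across articles, whatever their formatting.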

Datasets.

Table 1 summarizes the datasets (after pre-processing) used in this study and lists the domains (as stated by the dataset provider) covered by each. An article’s length (Avg. length) is measured by the number of words that remain after pre-processing. The maximum article length (Max. length) is measured in number of sentences. We use the following datasets:

- BuzzFeed-Webis Fake News Corpus 2016 (BuzzFeed-Web) [19] (Note 5)

- BuzzFeed Political News Data (BuzzFeed-Political) [20] (Note 6)

- FakeNewsAMT + Celebrity (AMT + C) [21]

- Falsified and Legitimate Political News Database (POLIT) (Note 7)

- George McIntire’s fake news dataset (GMI) (Note 8)

- University of Victoria’s Information Security and Object Technology (ISOT) Research Lab [22] (Note 9)

- Syrian Violations Documentation Centre (SVDC) [23] (Note 10)

Evaluation.

We evaluate differences in the coherence of fake and real articles using the t-test at the 5% significance level. The null hypothesis is that the mean coherence of fake and real news is equal. The alternative hypothesis is that the mean coherence of real news is greater than that of fake news. We expect that there will be greater topic deviation in fake news and thus that its coherence will be lower than that of real news.
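A one-sided comparison of this kind can be sketched with the standard library alone. Two things here are assumptions rather than details from the text: the unequal-variance (Welch) form of the statistic, since the text says only "t-test", and the use of a standard normal distribution to approximate the p-value, which is reasonable only for large samples. With small samples, the Student's t distribution from a statistics library should be used instead. The scores below are made up.

```python
import math
from statistics import NormalDist, mean, variance


def welch_t(sample_a, sample_b):
    """Welch's t statistic and approximate degrees of freedom for mean(a) - mean(b)."""
    n_a, n_b = len(sample_a), len(sample_b)
    v_a = variance(sample_a) / n_a  # squared standard error of mean(a)
    v_b = variance(sample_b) / n_b  # squared standard error of mean(b)
    t = (mean(sample_a) - mean(sample_b)) / math.sqrt(v_a + v_b)
    df = (v_a + v_b) ** 2 / (v_a ** 2 / (n_a - 1) + v_b ** 2 / (n_b - 1))
    return t, df


def one_sided_p_normal_approx(t):
    """Approximate P(T >= t) with a standard normal (large-sample assumption)."""
    return 1.0 - NormalDist().cdf(t)


# Hypothetical coherence scores; H1: mean(real) > mean(fake)
real = [0.80, 0.90, 0.85, 0.95]
fake = [0.50, 0.60, 0.55, 0.65]

t_stat, dof = welch_t(real, fake)
p_value = one_sided_p_normal_approx(t_stat)
print(t_stat, dof, p_value, p_value < 0.05)
```

Passing the real-news sample first makes a positive statistic correspond to the alternative hypothesis that real news is more coherent.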

5 Results and Discussion

Results of the experimental evaluation using the different divergence measures are shown in Table 2. We observe that fake news is generally likely to show greater thematic deviation (lower coherence) than real news in all datasets. Although results for AMT+C and BuzzFeed-Web are not statistically significant according to the t-test, and therefore do not meet our expectations, results for all other datasets are. Nonetheless, the mean and median values for fake news are lower than those of real news for these datasets. Table 3 shows the mean and median \( D_{Ch} \) deviations of fake and real articles across \( N = \left\{ 10, 20, 30, 40, 50, 100, 150, 200 \right\} \) topics. Figure 1 shows mean and median results for comparing topics in the first five and the remaining sentences. Results for values of \( N \) not shown are similar (with \( D_{Ch} \) gradually decreasing as \( N \) increases).

We found that comparing the first five sentences to the rest of the article yielded the best results (i.e., greatest disparity between fake and real deviations) for most datasets and measures. This is likely due to the first five sentences containing more information. For example, five successive sentences are likely to entail one another and contribute more towards a topic than a single sentence.

It is worth highlighting the diversity of datasets used here, in terms of domain, size, and the nature of articles. For example, the fake and real news in the SVDC dataset have a very similar structure. Both types of news were mostly written with the motivation to inform the reader on conflict-related events that took place across Syria. However, fake articles are labeled as such primarily because the reportage (e.g., on locations and number of casualties) in them is insufficiently accurate.

To gain insight into possible causes of greater deviation in fake news, we qualitatively inspected the five most and least diverging fake and real articles (according to \( D_{Ch} \)). We also compared a small set of low and high number of topics (\( N \le 30 \) and \( N \ge 100 \)). We observed that fake openings tend to be shorter, vaguer, and less congruent with the rest of the text. By contrast, real news openings generally give a better narrative background to the rest of the story.

Although the writing style in fake news is sometimes unprofessional, this is an unlikely reason for the higher deviations in fake news. Both fake and real news try to expand on the opening lead of the story, with more context and explanation. Indeed, we observed that real news tends to have longer sentences, which give more detailed information about a story, and are more narrative. It can be argued that the reason behind this is that fake articles are designed to get readers’ attention, whereas legitimate ones are written to inform the reader. For instance, social media posts which include a link to an article are sometimes displayed with a short snippet of the article’s opening text or its summary. This section can be designed to capture readers’ attention.

It is also conceivable that a bigger team of people working to produce a fake piece may contribute to its vagueness. They may contribute different perspectives that diversify the story and make it less coherent. By comparison, real news is typically written by one or two professional writers and therefore tends to be more coherent.

6 Conclusion

Fake news and deceptive stories tend to open with sentences which may be incoherent with the rest of the text. It is worth exploring whether the internal consistency of articles can distinguish fake from real news. Accordingly, we investigated thematic deviations in fake and real news across seven cross-domain datasets using topic modeling. Our findings suggest that the opening sentences of fake articles topically deviate more from the rest of the article, as compared to real news. The next step is to find possible reasons behind these deviations through in-depth analyses of topics. In conclusion, this paper presents valuable insights into thematic differences between fake and authentic news, which may be exploited for fake news detection.

Notes

- 1. We used only articles with at least x + 1 sentences.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8. https://github.com/GeorgeMcIntire/fake_real_news_dataset (accessed 5 November 2018).

- 9.

- 10.

References

1. Waldman, A.E.: The marketplace of fake news. Univ. Pennsylvania J. Const. Law 20, 846–869 (2018). https://scholarship.law.upenn.edu/jcl/vol20/iss4/3

2. Wardle, C.: Fake News. It’s Complicated. First Draft (2017). https://firstdraftnews.org/fake-news-complicated/. Accessed 24 Jan 2019

3. Zhou, X., Zafarani, R.: Fake news: a survey of research, detection methods, and opportunities (2018). http://arxiv.org/abs/1812.00315. Accessed 10 Nov 2019

4. Shu, K., Sliva, A., Wang, S., Tang, J., Liu, H.: Fake news detection on social media. ACM SIGKDD Explor. Newsl. 19(1), 22–36 (2017). https://doi.org/10.1145/3137597.3137600

5. Anspach, N.M., Jennings, J.T., Arceneaux, K.: A little bit of knowledge: Facebook’s news feed and self-perceptions of knowledge. Res. Polit. 6(1) (2019). https://doi.org/10.1177/2053168018816189

6. Karimi, H., Tang, J.: Learning hierarchical discourse-level structure for fake news detection. In: Proceedings of the 2019 Conference of the North, pp. 3432–3442 (2019). https://doi.org/10.18653/v1/n19-1347

7. Rubin, V.L., Lukoianova, T.: Truth and deception at the rhetorical structure level. J. Assoc. Inf. Sci. Technol. 66(5), 905–917 (2015). https://doi.org/10.1002/asi.23216

8. Das Bhattacharjee, S., Talukder, A., Balantrapu, B.V.: Active learning based news veracity detection with feature weighting and deep-shallow fusion. In: Proceedings - 2017 IEEE International Conference on Big Data, Big Data 2017, vol. Jan. 2018, pp. 556–565 (2018). https://doi.org/10.1109/bigdata.2017.8257971

9. Benamira, A., Devillers, B., Lesot, E., Ray, A.K., Saadi, M., Malliaros, F.D.: Semi-supervised learning and graph neural networks for fake news detection, pp. 568–569 (2019). https://hal.archives-ouvertes.fr/hal-02334445/. Accessed 29 Nov 2019

10. Li, S., et al.: Stacking-based ensemble learning on low dimensional features for fake news detection. In: Proceedings - 17th IEEE International Conference on Smart City and 5th IEEE International Conference on Data Science and Systems, HPCC/SmartCity/DSS 2019, pp. 2730–2735 (2019). https://doi.org/10.1109/hpcc/smartcity/dss.2019.00383

11. Chen, Y., Conroy, N.J., Rubin, V.L.: Misleading online content: recognizing clickbait as ‘false news’. In: WMDD 2015 - Proceedings of the ACM Workshop on Multimodal Deception Detection, co-located with ICMI 2015, pp. 15–19 (2015). https://doi.org/10.1145/2823465.2823467

12. Sisodia, D.S.: Ensemble learning approach for clickbait detection using article headline features. Inf. Sci. 22(2019), 31–44 (2019). https://doi.org/10.28945/4279

13. Ferreira, W., Vlachos, A.: Emergent: a novel data-set for stance classification. In: 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, NAACL HLT 2016 - Proceedings of the Conference, pp. 1163–1168 (2016). https://doi.org/10.18653/v1/n16-1138

14. Yoon, S., et al.: Detecting incongruity between news headline and body text via a deep hierarchical encoder. In: Proceedings of AAAI Conference on Artificial Intelligence, vol. 33, pp. 791–800 (2019). https://doi.org/10.1609/aaai.v33i01.3301791

15. Mann, W.C., Thompson, S.A.: Rhetorical structure theory: toward a functional theory of text organization. Text – Interdiscip. J. Study Discourse 8(3), 243–281 (1988). https://doi.org/10.1515/text.1.1988.8.3.243

16. Blei, D.M., Ng, A.Y., Jordan, M.I.: Latent Dirichlet allocation. J. Mach. Learn. Res. 3(Jan), 993–1022 (2003). http://jmlr.csail.mit.edu/papers/v3/blei03a.html. Accessed 01 Dec 2019

17. Vosoughi, S., Roy, D., Aral, S.: The spread of true and false news online. Science 359(6380), 1146–1151 (2018). https://doi.org/10.1126/science.aap9559

18. Ito, J., Toda, H., Koike, Y., Song, J., Oyama, S.: Assessment of tweet credibility with LDA features. In: WWW 2015 Companion - Proceedings of the 24th International Conference on World Wide Web, pp. 953–958 (2015). https://doi.org/10.1145/2740908.2742569

19. Potthast, M., Kiesel, J., Reinartz, K., Bevendorff, J., Stein, B.: A stylometric inquiry into hyperpartisan and fake news. In: ACL 2018 - 56th Annual Meeting of the Association for Computational Linguistics, Proceedings of the Conference (Long Papers), vol. 1, pp. 231–240 (2018). https://doi.org/10.18653/v1/p18-1022

20. Horne, B.D., Adali, S.: This just in: fake news packs a lot in title, uses simpler, repetitive content in text body, more similar to satire than real news (2017). http://arxiv.org/abs/1703.09398

21. Pérez-Rosas, V., Kleinberg, B., Lefevre, A., Mihalcea, R.: Automatic detection of fake news. In: Proceedings of the 27th International Conference on Computational Linguistics, pp. 3391–3401 (2018). https://www.aclweb.org/anthology/C18-1287/. Accessed 07 Dec 2019

22. Ahmed, H., Traore, I., Saad, S.: Detection of online fake news using n-gram analysis and machine learning techniques. In: Traore, I., Woungang, I., Awad, A. (eds.) ISDDC 2017. LNCS, vol. 10618, pp. 127–138. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-69155-8_9

23. Abu Salem, F., Al Feel, R., Elbassuoni, S., Jaber, M., Farah, M.: Dataset for fake news and articles detection (2019). https://doi.org/10.5281/zenodo.2532642

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2020 The Author(s)

About this paper

Cite this paper

Dogo, M.S., Deepak P., Jurek-Loughrey, A. (2020). Exploring Thematic Coherence in Fake News. In: Koprinska, I., et al. ECML PKDD 2020 Workshops. ECML PKDD 2020. Communications in Computer and Information Science, vol 1323. Springer, Cham. https://doi.org/10.1007/978-3-030-65965-3_40

DOI: https://doi.org/10.1007/978-3-030-65965-3_40

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-65964-6

Online ISBN: 978-3-030-65965-3

eBook Packages: Computer Science, Computer Science (R0)