Abstract

This work introduces novel techniques to improve the translation between arithmetic and binary data types in secure multi-party computation. We introduce a new approach to performing these conversions using what we call extended doubly-authenticated bits (edaBits), which correspond to shared integers in the arithmetic domain whose bit decomposition is shared in the binary domain. These can be used to considerably increase the efficiency of non-linear operations such as truncation, secure comparison and bit-decomposition.

Our edaBits are similar to the daBits technique introduced by Rotaru et al. (Indocrypt 2019). However, we show that edaBits can be directly produced much more efficiently than daBits, with active security, while enabling the same benefits in higher-level applications. Our method for generating edaBits involves a novel cut-and-choose technique that may be of independent interest, and improves efficiency by exploiting natural, tamper-resilient properties of binary circuits that occur in our construction. We also show how edaBits can be applied to efficiently implement various non-linear protocols of interest, and we thoroughly analyze their correctness for both signed and unsigned integers.

The results of this work can be applied to any corruption threshold, although they seem best suited to dishonest majority protocols such as SPDZ. We implement and benchmark our constructions, and experimentally verify that our technique yields a substantial increase in efficiency. EdaBits save in communication by a factor that lies between 2 and 60 for secure comparisons with respect to a purely arithmetic approach, and between 2 and 25 with respect to using daBits. Improvements in throughput per second slightly lower but still as high as a factor of 47. We also apply our novel machinery to the tasks of biometric matching and convolutional neural networks, obtaining a noticeable improvement as well.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

1 Introduction

Secure multi-party computation, or MPC, allows a set of parties to compute some function f on private data, in such a way that the parties do not learn anything about the actual inputs to f, beyond what could be computed given the result. MPC can be used in a wide range of applications, such as private statistical analysis, machine learning, secure auctions and more.

MPC protocols can vary widely depending on the adversary model that is considered. For example, protocols in the honest majority setting are only secure as long as fewer than half of the parties are corrupt and colluding, whilst protocols secure against a dishonest majority allow all-but-one of the parties to be corrupt. Another important distinction is whether the adversary is assumed to be semi-honest, that is, they will always follow the instructions of the protocol, or malicious, and can deviate arbitrarily.

The mathematical structure underpinning secure computation usually requires to fix what we call a computation domain. The most common examples of such domains are computation modulo a large number (prime or power of two) or binary circuits (computation modulo two). In terms of cost, the former is more favorable to integer computation such as addition and multiplication while the latter is preferable for highly non-linear functions such as comparisons.

Applications often feature both linear and non-linear functionality. For example, convolution layers in deep learning consist of dot products followed by a non-linear activation function. It is therefore desirable to convert between an arithmetic computation domain and binary circuits. This has led to a line of works exploring this possibility, starting with the ABY framework [20] (Arithmetic-Boolean-Yao) in the two-party setting with semi-honest security. Other works have extended this to the setting of three parties with an honest majority [2, 30], dishonest majority with malicious security [33], as well as creating compilers that automatically decide which parts of a program should done in the binary or arithmetic domain [9, 12, 28].

A particular technique that is relevant for us is so-called daBits [33] (doubly-authenticated bits), which are random secret bits that are generated simultaneously in both the arithmetic and binary domains. These can be used for binary/arithmetic conversions in MPC protocols with any corruption setting, and have in particular been used with the SPDZ protocol [19], which provides malicious security in the dishonest majority setting. Later works have given more efficient ways of generating daBits [1, 3, 32], both with SPDZ and in the honest majority setting.

Another recent work uses function secret sharing [6] for binary/arithmetic conversions and other operations such as comparison [7]. This approach leads to a fast online phase with just one round of interaction and optimal communication complexity. However, it requires either a trusted setup, or an expensive preprocessing phase which has not been shown to be practical for malicious adversaries.

Limitations of daBits. Using daBits, it is relatively straightforward to convert between two computation domains. However, we found that in application-oriented settings the benefit of daBits alone is relatively limited. More concretely, if daBits are used to compute a comparison between two numbers that are secret-shared in \(\mathbb {Z}_M\), for large arithmetic modulus M, the improvement is a factor of three at best. The reason for this is that the cost of creating the required daBits comes quite close to computing the comparison entirely in \(\mathbb {Z}_M\). This limitation seems to be inherent with any approach based on daBits, since a daBit requires generating a random shared bit in \(\mathbb {Z}_M\). The only known way of doing this with malicious security require first performing a multiplication (or squaring) in \(\mathbb {Z}_M\) on a secret value [16, 17]. However, secret multiplication is an expensive operation in MPC, and doing this for every daBit gets costly.

1.1 Our Contributions

In this paper, we present a new approach to converting between binary and arithmetic representations in MPC. Our method is general, and can be applied to a wide range of corruption settings, but seems particularly well-suited to the case of dishonest majority with malicious security such as SPDZ [18, 19], over the arithmetic domain \(\mathbb {Z}_p\) for large prime p, or the ring \(\mathbb {Z}_{2^k}\) [13]. Unlike previous works, we do not generate daBits, but instead create what we call extended daBits (\(\mathsf {edaBits}\)), which avoid the limitations above. These allow conversions between arithmetic and binary domains, but can also be used directly for certain non-linear functions such as truncations and comparisons. We found that, for two- and three-party computation, \(\mathsf {edaBits}\) allow to reduce the communication cost by up to two orders of magnitude and the wall clock time by up to a factor of 50 while both the inputs as well as the output are secret-shared in an arithmetic domain.

Below we highlight some more details of our contribution.

Extended daBits. An \(\mathsf {edaBit}\) consists of a set of m random bits \((r_{m-1},\dots ,r_0)\), secret-shared in the binary domain, together with the value \(r = \sum _{i=0}^{m-1} r_i2^{i}\) shared in the arithmetic domain. We denote these sharings by \([r_{m-1}]_2, \dots , [r_0]_2\) and \([r]_M\), for arithmetic modulus M. Note that a daBit is simply an \(\mathsf {edaBit}\) of length \(m=1\), and m daBits can be easily converted into an \(\mathsf {edaBit}\) with a linear combination of the arithmetic shares. We show that this is wasteful, however, and \(\mathsf {edaBits}\) can in general be produced much more efficiently than m daBits, for values of m used in practice.

Efficient malicious generation of \(\mathsf {edaBits}\) . Let us first consider a simple approach with semi-honest security. If there are n parties, we have each party locally sample a value \(r^i \in \mathbb {Z}_M\), then secret-shares \(r^i\) in the arithmetic domain, and the bits of \(r^i\) in the binary domain. We refer to these sharings as a private \(\mathsf {edaBit}\) known to \(P_i\). The parties can combine these by computing \(\sum _i r^i\) in the arithmetic domain, and executing \(n-1\) protocols for addition in the binary domain, with a cost O(nm) AND gates. Compared with using daBits, which costs O(m) secret multiplications in \(\mathbb {Z}_M\), this is much cheaper if n is not too large, by the simple fact that AND is a cheaper operation than multiplication in MPC.

To extend this naive approach to the malicious setting, we need a way to somehow verify that a set of \(\mathsf {edaBits}\) was generated correctly. Firstly, we extend the underlying secret-sharing scheme to one that enforces correct computations on the underlying shares. This can be done, for instance, using authenticated secret-sharing with information-theoretic MACs as in SPDZ [19]. Secondly, we use a cut-and-choose procedure to check that a large batch of \(\mathsf {edaBits}\) are correct. This method is inspired by previous techniques for checking multiplication triples in MPC [8, 22, 23]. However, the case of \(\mathsf {edaBits}\) is much more challenging to do efficiently, due to the highly non-linear relation between sharings in different domains, compared with the simple multiplicative property of triples (shares of (a, b, c) where \(c = ab\)).

Cut-and-choose approach. Our cut-and-choose procedure begins as in the semi-honest case, with each party \(P_i\) sampling and inputting a large batch of private \(\mathsf {edaBits}\) of the form \((r_{m-1}^i,\dots ,r_0^i), r^i\). We then run a verification step on \(P_i\)’s private \(\mathsf {edaBits}\), which begins by randomly picking a small subset of the \(\mathsf {edaBits}\) to be opened and checked for correctness. Then, the remaining \(\mathsf {edaBits}\) are shuffled and put into buckets of fixed size B. The first \(\mathsf {edaBit}\) in each bucket is paired off with every other \(\mathsf {edaBit}\) in the bucket, and we run a checking procedure on each of these pairs. To check a pair of \(\mathsf {edaBits}\) r, s, the parties can compute \(r+s\) in both the arithmetic and binary domains, and check these open to the same value. If all checks pass, then the parties take the first private \(\mathsf {edaBit}\) from every bucket, and add this to all the other parties’ private \(\mathsf {edaBits}\), created in the same way, to obtain secret-shared \(\mathsf {edaBits}\). Note that to pass a single check, the adversary must have corrupted both r and s so that they cancel each other out; therefore, the only way to successfully cheat is if every bucket with a corrupted \(\mathsf {edaBit}\) contains only corrupted \(\mathsf {edaBits}\). By carefully choosing parameters, we can ensure that it is very unlikely the adversary manages to do this. For example, with 40-bit statistical security, from the analysis of [23], we could use bucket size \(B=3\) when generating more than a million sets of \(\mathsf {edaBits}\).

While the above method works, it incurs considerable overhead compared with similar cut-and-choose techniques used for multiplication triples. This is because in every pairwise check within a bucket, the parties have to perform an addition of binary-shared values, which requires a circuit with O(m) AND gates. Each of these AND gates consumes an authenticated multiplication triple over \(\mathbb {Z}_2\), and generating these triples themselves requires additional layers of cut-and-choose and verification machinery, when using efficient protocols based on oblivious transfer [22, 31, 34].

To reduce this cost, our first optimization is as follows. Recall that the check procedure within each bucket is done on a pair of private values known to one party, and not secret-shares. This means that when evaluating the addition circuit, it suffices to use private multiplication triples, which are authenticated triples where the secret values are known to party \(P_i\). These are much cheaper to generate than fully-fledged secret-shared triples, although still require a verification procedure based on cut-and-choose. To further reduce costs, we propose a second, more significant optimization.

Cut-and-choose with faulty check circuits. Instead of using private triples that have been checked separately, we propose to use faulty private triples, that is, authenticated triples that are not guaranteed to be correct. This immediately raises the question, how can the checking procedure be useful, if the verification mechanism itself is faulty? The hope is that if we randomly shuffle the set of triples, it may still be hard for an adversary who corrupts them to ensure that any incorrect \(\mathsf {edaBits}\) are canceled out in the right way by the faulty check circuit, whilst any correct \(\mathsf {edaBits}\) still pass unscathed. Proving this, however, is challenging. In fact, it seems to inherently rely on the structure of the binary circuit that computes the check function. For instance, if a faulty circuit can cause a check between a good and a bad \(\mathsf {edaBit}\) to pass, and the same circuit also causes a check between two good \(\mathsf {edaBits}\) to pass, for some carefully chosen inputs, then this type of cheating can help the adversary.

To rule this out, we consider circuits with a property we call weak additive tamper-resilience, meaning that for any tampering that flips some subset of AND gate outputs, the tampered circuit is either incorrect for every possible input, or it is correct for all inputs. This notion essentially rules out input-dependent failures from faulty multiplication triples, which avoids the above attack and allows us to simplify the analysis.

Weak additive tamper-resilience is implied by previous notions of circuits secure against additive attacks [24], however, these constructions are not practical over \(\mathbb {F}_2\). Fortunately, we show that the standard ripple-carry adder circuit satisfies our notion, and suffices for creating \(\mathsf {edaBits}\) in \(\mathbb {Z}_{2^k}\). However, the circuit for binary addition modulo a prime, which requires an extra conditional subtraction, does not satisfy this. Instead, we adapt the circuit over the integers to use in our protocol modulo p, which allows us to generate length-m \(\mathsf {edaBits}\) for any \(m < {\log p}\); this turns out to be sufficient for most applications.

With this property, we can show that introducing faulty triples does not help an adversary to pass the check, so we can choose the same cut-and-choose parameters as previous works on triple generation, while saving significantly in the cost of generating our triples used in verification. The bulk of our technical contribution is in analysing this cut-and-choose technique.

Silent OT-friendly. Another benefit of our approach is that we can take advantage of recent advances in oblivious transfer (OT) extension techniques, which allow to create a large number of random, or correlated, OTs, with very little interaction [5]. In practice, the communication cost when using this “silent OT” method can be more than 100x less than OT extension based on previous techniques [27], with a modest increase in computation [4]. In settings where bandwidth is expensive, this suits our protocol well, since we mainly use MPC operations in \(\mathbb {F}_2\) to create \(\mathsf {edaBits}\), and these are best done with OT-based techniques. This reduces the communication of our \(\mathsf {edaBits}\) protocol by an \(O(\lambda )\) factor, in practice cutting communication by 50–100x, although we have not yet implemented this optimization.

Note that it does not seem possible to exploit silent OT with previous daBit generation methods such as by Aly et al. [1]. This is due to the limitation mentioned previously that these require a large number of random bits shared in \(\mathbb {Z}_p\), which we do not know how to create efficiently using OT.

Applications: improved conversions and primitives. \(\mathsf {edaBits}\) can be used in a natural way to convert between binary and arithmetic domains, where each conversion of an m-bit value uses one \(\mathsf {edaBit}\) of length m, and a single m-bit addition circuit. (In the mod-p case, we also need one “classic” daBit per conversion, to handle a carry computation.) However, for many primitives such as secure comparison, equality test and truncation, a better approach is to exploit the \(\mathsf {edaBits}\) to perform the operation without doing an explicit conversion. In the \(\mathbb {Z}_{2^k}\) case, a similar approach was used previously when combining the SPDZ2k protocol with daBit-style conversions [16]. We adapt these techniques to work with \(\mathsf {edaBits}\), in both \(\mathbb {Z}_{2^k}\) and \(\mathbb {Z}_p\). As an additional contribution, more at the engineering level, we take great care in all our constructions to ensure they work for both signed and unsigned data types. This was not done by previous truncation protocols in \(\mathbb {Z}_{2^k}\) based on SPDZ [15, 16], which only perform a logical shift, as opposed to the arithmetic shift that is needed to ensure correctness on signed inputs.

Handling garbled circuits. Our conversion method can also be extended to convert binary shares to garbled circuits, putting the ‘Y’ into ‘ABY’ and allowing constant round binary computations. In this paper, we do not focus on this, since the technique is exactly the same as described in [1]; when using binary shares based on TinyOT MACs, conversions between binary and garbled circuit representation comes for free, based on the observation from Hazay et al. [26] that TinyOT sharings can be locally converted into shares of a multi-party garbled circuit.

Performance evaluation. We have implemented our protocol in all relevant security models and computation domains as provided by MP-SPDZ [29], and we found it reduces communication both in microbenchmarks and application benchmarks when comparing to a purely arithmetic or a daBit-based implementation. More concretely, the reduction in communication lies between a factor of 2 and 60 for comparisons from purely arithmetic to \(\mathsf {edaBits}\) and between 2 and 25 from daBits to \(\mathsf {edaBits}\). Improvements in throughput per second are slightly lower but still as high as a factor of 47. Generally, the improvements are higher for dishonest-majority computation and semi-honest security.

We have also compared our implementation with the most established software for mixed circuits [9] and found that it still improves up to a factor of two for a basic benchmark in semi-honest two-party computation. However, they maintain an advantage if the parties are far apart (100 ms RTT) due to the usage of garbled circuits.

Finally, a comparison with a purely arithmetic implementation of deep-learning inference shows an improvement of up to a factor eight in terms of both communication and wall clock time.

1.2 Paper Outline

We begin in Sect. 2 with some preliminaries. In Sect. 3, we introduce \(\mathsf {edaBits}\) and show how to instantiate them, given a source of private \(\mathsf {edaBits}\). We then present our protocol for creating private \(\mathsf {edaBits}\) in Sect. 4, based on the new cut-and-choose procedure. Then, in Sect. 4.2–4.4 we describe abstract cut-and-choose games that model the protocol, and carry out a formal analysis. Then in Sect. 5 we show how to use \(\mathsf {edaBits}\) for higher-level primitives like comparison and truncation. Finally, in Sect. 6, we analyze the efficiency of our constructions and present performance numbers from our implementation.

2 Preliminaries

In this work we consider three main algebraic structures: \(\mathbb {Z}_M\) for \(M=p\) where p is a large prime, \(M=2^k\) where k is a large integer, and \(\mathbb {Z}_2\).

2.1 Arithmetic Black-Box

We model MPC via the arithmetic black box model (ABB), which is an ideal functionality in the universal composability framework [10]. This functionality allows a set of n parties \(P_1,\dots ,P_n\) to input values, operate on them, and receive outputs after the operations have been performed. Typically (see for example Rotaru and Wood [33]), this functionality is parameterized by a positive integer M, and the values that can be processed by the functionality are in \(\mathbb {Z}_M\), with the native operations being addition and multiplication modulo M.

In this work, we build on the basic ABB to construct \(\mathsf {edaBits}\), which are used in our higher-level applications. We therefore consider an extended version of the arithmetic black box model that handles values in both binary and arithmetic domains. First, within one single instance of the functionality we can have both \(\mathsf {binary}\) and \(\mathsf {arithmetic}\) computations, where the latter can be either modulo p or modulo \(2^k\). Furthermore, the functionality allows the parties to convert a single binary share into an arithmetic share of the same bit (but not the other way round). We will use this limited conversion capability to bootstrap to our fully-fledged \(\mathsf {edaBits}\), which can convert larger ring elements in both directions, and with much greater efficiency. The details of the functionality are presented in the full version [21].

Notation. As shorthand, we write \([x]_2\) to refer to a secret bit x that has been stored by the functionality \(\mathcal {F}_{\mathsf {ABB}}\), and similarly \([x]_M\) for a value \(x \in \mathbb {Z}_M\) with \(M \in \{p,2^k\}\). We overload the operators \(+\) and \(\cdot \), writing for instance, \([y]_M = [x]_M\cdot [y]_M + c\) to denote that the secret values x and y are first multiplied using the \(\mathsf {Mult}\) command, and then the public constant c is added using \(\mathsf {LinComb}\).

3 Extended daBits

The main primitive of our work is the concept of extended daBits, or edaBits. Unlike a daBit, which is a random bit b shared as \(([b]_M,[b]_2)\), an \(\mathsf {edaBit}\) is a collection of bits \((r_{m-1},\ldots ,r_0)\) such that (1) each bit is secret-shared as \([r_i]_2\) and (2) the integer \(r = \sum _{i=0}^{m}r_i2^i\) is secret-shared as \([r]_M\).

One \(\mathsf {edaBit}\) of length m can be generated from m daBits, and in fact, this is typically the first step when applying daBits to several non-linear primitives like truncation. Instead of following this approach, we choose to generate the \(\mathsf {edaBits}\)—which is what is needed for most applications where daBits are used—directly, which leads to a much more efficient method and ultimately leads to more efficient primitives for MPC protocols.

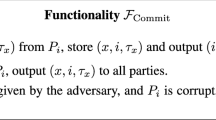

At a high level, our protocol for generating \(\mathsf {edaBits}\) proceeds as follows. Let us think initially of the passively secure setting. Each party \(P_i\) samples m random bits \(r_{i,0}^i,\ldots ,r_{i,m-1}^i\), and secret-shares these bits towards the parties over \(\mathbb {Z}_2\), as well as the integer \(r_i=\sum _{j=0}^{m-1}r_{i,j}2^j\) over \(\mathbb {Z}_M\). Since each \(\mathsf {edaBit}\) is known by one party, these \(\mathsf {edaBits}\) must be combined to get \(\mathsf {edaBits}\) where no party knows the underlying values. We refer to the former as private \(\mathsf {edaBits}\), and to the latter as global \(\mathsf {edaBits}\). The parties combine the private \(\mathsf {edaBits}\) by adding them together: the arithmetic shares can be simply added locally as \([r]_M = \sum _{i=1}^n[r_i]_M\), and the binary shares can be added via an n-input binary adder. Some complications arise, coming from the fact that the \(r_i\) values may overflow mod p. Dealing with this is highly non-trivial, and we will discuss this in detail in the description of our protocol in Sect. 3.2. However, before we dive into our construction, we will first present the functionality we aim at instantiating. This functionality is presented in Fig. 1.

3.1 Functionality for Private Extended daBits

We also use a functionality \(\mathcal {F}_{\mathsf {edaBitsPriv}}\), which models a private set of \(\mathsf {edaBits}\) that is known to one party. This functionality is defined exactly as \(\mathcal {F}_{\mathsf {edaBits}}\), except that the bits \(r_0,\ldots ,r_{m-1}\) are given as output to one party; additionally, if that party is corrupt, the adversary may instead choose these bits.

The heaviest part of our contribution lies on the instantiation of this functionality, which we postpone to Sect. 4.

3.2 From Private to Global Extended daBits

As we discussed already at the beginning of this section, one can instantiate \(\mathcal {F}_{\mathsf {edaBits}}\) using \(\mathcal {F}_{\mathsf {edaBitsPriv}}\), by combining the different private \(\mathsf {edaBits}\) to ensure no individual party knows the underlying values. Small variations are required depending on whether \(M=2^k\) or \(M=p\), for reasons that will become clear in a moment.

Now, to provide an intuition on our protocol, assume that the ABB is storing \(([r_i]_M,[r_{i,0}]_2,\ldots ,[r_{i,m-1}]_2)\) for \(i=1,\ldots ,n\), where party \(P_i\) knows \((r_{i,0},\ldots ,r_{i,m-1})\) and \(r_i = \sum _{j=1}^{m-1}r_{i,j}2^j\). The parties can add their arithmetic shares to get shares of \(r'=\sum _{i=1}^nr_i \mod M\), and they can also add their binary shares using a binary n-input adder, which results in shares of the bits of \(r'\), only without modular reduction.

Since we want to output a random m-bit integer, the parties need to remove the bits of \(r'\) beyond the m-th bit from the arithmetic shares. We have binary shares of these carry bits as part of the output from the binary adder, so using \(\log (n)\) calls to \(\mathsf {ConvertB2A}\) of \(\mathcal {F}_{\mathsf {ABB}}\), each of which costs a (regular) daBit, we can convert these to the arithmetic world and perform the correction. Notice that for the case of \(M=2^k\), \(m=k\), we can omit this conversion since the arithmetic shares are already reduced.

Even without the correction above, the least significant m bits of \(r'\) still correspond to \(r_0, \dots , r_{m-1}\). This turns out to be enough for some applications because it is easy to “delete” the most significant bit in \(\mathbb {Z}_{2^k}\) by multiplying with two. We call such an \(\mathsf {edaBit}\) loose as apposed to a strict one as defined in Fig. 1.

One must be careful with potential overflows modulo M. If \(M=2^k\), then any overflow bits beyond the k-th position can simply be discarded. On the other hand, if \(M=p\), as long as \(m < \log p\) then we can still subtract the \(\log n\) converted carries from the arithmetic shares to correct for any overflow modulo p. The protocol is given in Fig. 2, and the security stated in Theorem 1 below, whose proof follows in a straightforward manner from the correctness of the additions in the protocol. In the protocol, \(\mathsf {nBitADD}\) denotes an n-input binary adder on m-bit inputs. This can be implemented naively in a circuit with \({<} (m + \log n)\cdot (n-1)\) AND gates.

Theorem 1

Protocol \(\varPi _{\mathsf {edaBits}}\) UC-realizes functionality \(\mathcal {F}_{\mathsf {edaBits}}\) in the \((\mathcal {F}_{\mathsf {edaBitsPriv}},\mathcal {F}_{\mathsf {B2A}})\)-hybrid model.

4 Instantiating Private Extended daBits

Our protocol for producing private \(\mathsf {edaBits}\) is fairly intuitive. The protocol begins with each party inputting a set of \(\mathsf {edaBits}\) to the ABB functionality. However, since a corrupt party may input inconsistent \(\mathsf {edaBits}\) (that is, the binary part may not correspond to the bit representation of the arithmetic part), some extra checks must be set in place to ensure correctness. To this end, the parties engage in a consistency check, where each party must prove that their private \(\mathsf {edaBits}\) were created correctly. We do this with a cut-and-choose procedure, where first a random subset of a certain size of \(\mathsf {edaBits}\) is opened, their correctness is checked, and then the remaining \(\mathsf {edaBits}\) are randomly placed into buckets. Within each bucket, all \(\mathsf {edaBits}\) but the first one are checked against the first \(\mathsf {edaBit}\) by adding the two in both the binary and arithmetic domains, and opening the result. With high probability, the first \(\mathsf {edaBit}\) will be correct if all the checks pass.

This method is based on a standard cut-and-choose technique for verifying multiplication triples, used in several other works [22, 23]. However, the main difference in our case is that the checking procedure for verifying two \(\mathsf {edaBits}\) within a bucket is much more expensive: checking two multiplication triples consists of a simple linear combination and opening, whereas to check \(\mathsf {edaBits}\), we need to run a binary addition circuit on secret-shared values. This binary addition itself requires O(m) multiplication triples to verify, and the protocol for producing these triples typically requires further cut-and-choose steps to ensure correctness and security.

In this work, we take a different approach to reduce this overhead. First, we allow some of the triples used to perform the check within each bucket to be incorrect, which saves in resources as a triple verification step can be omitted. Furthermore, we observe that these multiplication triples are intended to be used on inputs that are known to the party proposing the \(\mathsf {edaBits}\), and thus it is acceptable if this party knows the bits of the underlying triples as well. As a result, we can simplify the triple generation by letting this party sample the triples together with the \(\mathsf {edaBits}\), which is much cheaper than letting the parties jointly sample (even incorrect) triples. Note that even though the triples may be incorrect, they must still be authenticated (in practice, with MACs) by the party who proposes them so that the errors cannot be changed after generating the triples.

To model this, we extend the arithmetic black box model with the following commands, for generating a private triple, and for faulty multiplication, which uses a previously stored triple to do a multiplication.

-

Input Triple. On input \((\mathsf {Triple}, \mathsf {id}, a, b, c)\) from \(P_i\), where \(\mathsf {id}\) is a fresh binary identifier and \(a,b,c\in \{0,1\}\), store \((\mathsf {Triple},i,\mathsf {id},a,b,c)\).

-

Faulty Multiplication. On input \((\mathsf {FaultyMult}, \mathsf {id}, \mathsf {id}_1,\mathsf {id}_2,\mathsf {id}_T, i)\) from all parties (where \(\mathsf {id}_1,\mathsf {id}_2\) are present in memory), retrieve \((\mathsf {binary},\mathsf {id}_1,x)\), \((\mathsf {binary},\mathsf {id}_2,y)\), \((\mathsf {Triple},i,\mathsf {id}_T,a,b,c)\), compute \(z = x \cdot y \oplus (c\oplus a\cdot b)\), and store \((\mathsf {id}, z)\).

The triple command can be directly instantiated using \(\mathsf {Input}\) from \(\mathcal {F}_{\mathsf {ABB}}\), while \(\mathsf {FaultyMult}\) uses Beaver’s multiplication technique with one of these triples. Note that in Beaver-based binary multiplication, it is easy to see that any additive error in a triple leads to exactly the same error in the product.

Now we are ready to present our protocol to preprocess private \(\mathsf {edaBits}\), described in Fig. 3. The party \(P_i\) locally samples a batch of \(\mathsf {edaBits}\) and multiplication triples, then inputs these into \(\mathcal {F}_{\mathsf {ABB}}\). The parties then run the \(\mathsf {CutNChoose}\) subprotocol, given in Fig. 4, to check that the \(\mathsf {edaBits}\) provided by \(P_{i}\) are consistent. The protocol outputs a batch of N \(\mathsf {edaBits}\), and is parametrized by a bucket size B, and values \(C,C'\) which determine how many \(\mathsf {edaBits}\) and triples are opened, respectively. \(\mathsf {BitADDCarry}\) denotes a two-input binary addition circuit with a carry bit, which must satisfy the weakly additively tamper resilient property given in the next section. As we will see later, this can be computed with m AND gates and depth \(m-1\).Footnote 1

The cut-and-choose protocol starts by using a standard coin-tossing functionality, \(\mathcal {F}_{\mathsf {Rand}}\), to sample public random permutations used to shuffle the sets of \(\mathsf {edaBits}\) and triples. The coin-tossing can be implemented, for example, with hash-based commitments in the random oracle model. Then the first C \(\mathsf {edaBits}\) and \(C'm\) triples are opened and tested for correctness; this is to ensure that not too large a fraction of the remaining \(\mathsf {edaBits}\) and triples are incorrect. Then the \(\mathsf {edaBits}\) are divided into buckets of size B, together with \(B-1\) sets of m triples in each bucket. Then, the top \(\mathsf {edaBit}\) from each bucket is checked with every other \(\mathsf {edaBit}\) in the bucket by evaluating a binary addition circuit using the triples, and comparing the result with the same addition done in the arithmetic domain. Each individual check in the \(\mathsf {CutNChoose}\) procedure takes two \(\mathsf {edaBits}\) of m bits each, and consumes m triples as well as a single regular daBit, needed to convert the carry bit from the addition into the arithmetic domain. Note that when working with modulus \(M=2^k\), if \(m=k\) then this conversion step is not needed.

4.1 Weakly Tamper-Resilient Binary Addition Circuit

To implement the \(\mathsf {BitADDCarry}\) circuit we use a ripple-carry adder, which computes the carry bit at every position with the following equation:

where \(c_0=0\), and \(x_i, y_i\) are the i-th bits of the two binary inputs. It then outputs \(z_i=x_i\oplus y_i \oplus c_i\), for \(i=0,\dots ,m-1\), and the last carry bit \(c_m\). Note that this requires m AND gates and has linear depth.

Below we define the tamper-resilient property of the circuit that we require. We consider an adversary who can additively tamper with a binary circuit by inducing bit-flips in the output wires of any AND gate.

Definition 1

A binary circuit \(\mathcal {C}: \mathbb {F}_2^{2m} \rightarrow \mathbb {F}_2^{m+1}\) is weakly additively tamper resilient, if given any tampered circuit \(\mathcal {C^{*}}\), obtained by additively tampering \(\mathcal {C}\), one of the following holds:

-

1.

\(\forall (x, y) \in \mathbb {F}_2^m:\ \mathcal {C}(x, y) = \mathcal {C^{*}}(x, y)\).

-

2.

\(\forall (x, y) \in \mathbb {F}_2^m:\ \mathcal {C}(x, y) \ne \mathcal {C^{*}}(x, y)\).

Intuitively, this says that the tampered circuit is either incorrect on every possible input, or functionally equivalent to the original circuit. In our protocol, this property restricts the adversary from being able to pass the check with a tampered circuit with bad \(\mathsf {edaBits}\) as well as the same circuit with good \(\mathsf {edaBits}\). It ensures that if any multiplication triple is incorrect, then the check at that position would only pass with either a good \(\mathsf {edaBit}\), or a bad \(\mathsf {edaBit}\) (but not both).

In the full version, we show that this property is satisfied by the ripple-carry adder circuit above, which we use.

Lemma 1

The ripple carry adder circuit above is weakly additively tamper-resilient (Definition 1).

In the case of generating \(\mathsf {edaBits}\) over \(\mathbb {Z}_p\), we still use the ripple-carry adder circuit, and our protocol works as long as the length of the \(\mathsf {edaBits}\) satisfies \(m < \log (p)\). If we wanted \(\mathsf {edaBits}\) with \(m = \lceil \log p \rceil \), for instance to be able to represent arbitrary elements of the field, it seems we would need to use an addition circuit modulo p. Unfortunately, the natural circuit consisting of a binary addition followed by a conditional subtraction is not weakly additively tamper resilient. One possible workaround is to use Algebraic Manipulation Detection (AMD) [24, 25] circuits, which satisfy much stronger requirements than being weakly additively tamper resilient, however this gives a very large overhead in practice.

4.2 Overview of Cut-and-Choose Analysis

The remainder of this section is devoted to proving that the cut-and-choose method used in our protocol is sound, as stated in the following theorem.

Theorem 2

Let \(N \ge 2^{s/(B-1)}\) and \(C=C'=B\), for some bucket size \(B \in \{3,4,5\}\). Then the probability that the \(\mathsf {CutNChoose}\) procedure in protocol \(\varPi _{\mathsf {edaBitsPriv}}\) outputs at least one incorrect \(\mathsf {edaBit}{}\) is no more than \(2^{-s}\).

Assuming the theorem above, we can prove that our protocol instantiates the desired functionality, as stated in the following theorem. The only interesting aspect to note about security is that we need \(m \le \log M\) to ensure that the value \(c'\) computed in step 4d of \(\mathsf {CutNChoose}\) does not overflow modulo p when \(M=p\) is prime. This guarantees that the check values are computed the same way in the binary and arithmetic domains.

Theorem 3

Protocol \(\varPi _{\mathsf {edaBitsPriv}}\) securely instantiates the functionality \(\mathcal {F}_{\mathsf {edaBitsPriv}}\) in the \(\mathcal {F}_{\mathsf {ABB}}\)-hybrid model.

To give some idea of parameters, in Table 1 we give the required bucket sizes and number N of \(\mathsf {edaBits}\) that must be produced to ensure \(2^{-s}\) failure probability according to Theorem 2. Note that these are exactly the same bounds as the standard cut-and-choose procedure without any faulty verification steps from [23]. Our current proof relies on case-by-case analyses for each bucket size, which is why Theorem 2 is not fully general. We leave it as an open problem to obtain a general result for any bucket size.

Overview of Analysis. We analyse the protocol by looking at two abstract games, which model the cut-and-choose procedure. The first game, \(\mathsf {RealGame}\), models the protocol fairly closely, but is difficult to directly analyze. We then make some simplifying assumptions about the game to get \(\mathsf {SimpleGame}\), and show that any adversary who wins in the real protocol can be translated into an adversary in the \(\mathsf {SimpleGame}\). This is the final game we actually analyze.

4.3 Abstracting the Cut-and-Choose Game

We first look more closely at the cut-and-choose procedure by defining an abstract game, \(\mathsf {RealGame}\), shown in Fig. 5, that models this process. Note that in this game, the only difference compared with the original protocol is that the adversary directly chooses additively tampered binary circuits, instead of multiplication triples. The check procedure is carried out exactly as before, so it is clear that this faithfully models the original protocol.

Complexities of Analyzing the Game. In this game, the adversary can pass the check with a bad \(\mathsf {edaBit}\) in two different ways. The first is to corrupt \(\mathsf {edaBits}\) in multiples of the bucket size B, and hope that they all end up in the same bucket so that the errors cancel each other out. The second way is to corrupt a set of \(\mathsf {edaBits}\) and guess the permutation in which they are most likely to end up. Once a permutation is guessed, the adversary will know how many triples it needs to corrupt in order to cancel out the errors, and must also hope that the triples end up in the right place.

To compute the exact probability of all these events, we will also have to consider the number of ways in which the bad \(\mathsf {edaBits}\) can be corrupted. For \(\mathsf {edaBits}\) which are m bits, there are up to \(2^m-1\) different ways in which they may be corrupted. On top of that, we have to consider the number of different ways in which these bad \(\mathsf {edaBits}\) may be paired in the check. In order to avoid enumerating the cases and the complex calculation involved, we simplify the game in a few ways which can only give the adversary a better chance of winning. However, we show that these simplifications are sufficient for our purpose.

4.4 The \(\mathsf {SimpleGame}\)

In this section we analyze a simplified game and bound the success probability of any adversary in that game by \(2^{-s}\). Before explaining the simple game, we will leave the complicated world of \(\mathsf {edaBits}\) and triples. We define a \(\mathsf {TRIP}\) to be a set of triples that is used to check two \(\mathsf {edaBits}\). In our simple world \(\mathsf {edaBits}\) transform into balls, GOOD \(\mathsf {edaBits}\) into white balls (

) and BAD \(\mathsf {edaBits}\) into gray balls (

) and BAD \(\mathsf {edaBits}\) into gray balls (

). An \(\mathsf {edaBit}\) is BAD when at least one of the underlying bits are not correct. \(\mathsf {TRIPs}\) transform themselves into triangles, GOOD \(\mathsf {TRIPs}\) into white triangles (

). An \(\mathsf {edaBit}\) is BAD when at least one of the underlying bits are not correct. \(\mathsf {TRIPs}\) transform themselves into triangles, GOOD \(\mathsf {TRIPs}\) into white triangles (

) and BAD \(\mathsf {TRIPs}\) into gray triangles (

) and BAD \(\mathsf {TRIPs}\) into gray triangles (

). We define a \(\mathsf {TRIP}\) to be BAD when it helps the adversary to win the game, in other words if it can alter the result of addition of two \(\mathsf {edaBits}\). Figure 6 illustrates the simple game.

). We define a \(\mathsf {TRIP}\) to be BAD when it helps the adversary to win the game, in other words if it can alter the result of addition of two \(\mathsf {edaBits}\). Figure 6 illustrates the simple game.

In the \(\mathsf {SimpleGame}\) \(\mathcal {A}\) wins if there is no \(\mathsf {Abort}\) (means \(\mathcal {A}\) passes all the checks) and there is at least one bad ball in the final output. The simple \(\mathsf {BucketCheck}\) checks all the buckets. Precisely, in each bucket two balls are being checked using one triangle. For example, let us consider the size of the buckets \(B=3\). Now one bucket contains three balls [B1, B2, B3] and two triangles [T1, T2]. Then \(\mathsf {BucketCheck}\) checks if the configurations [B1, B2|T1] and [B1, B3|T2] matches any one of these configurations  . If that is the case then \(\mathsf {BucketCheck}\) \(\mathsf {Abort}\)s. When there are two bad balls and one triangle the abort condition depends on the type of bad balls. That means we are considering all bad balls to be distinct, say with different color shades. As a result, in some cases challenger aborts if the checking configuration matches

. If that is the case then \(\mathsf {BucketCheck}\) \(\mathsf {Abort}\)s. When there are two bad balls and one triangle the abort condition depends on the type of bad balls. That means we are considering all bad balls to be distinct, say with different color shades. As a result, in some cases challenger aborts if the checking configuration matches  and in other cases it aborts due to

and in other cases it aborts due to  configuration.

configuration.

In the simple world everyone has access to a public function f, which takes two bad balls and a triangle as input and outputs 0 or 1. If the output is zero, that means it is a bad configuration, otherwise it is good. This function is isomorphic to the check from step 4 of \(\mathsf {RealGame}\), which takes 2 \(\mathsf {edaBits}\) and a circuit as inputs and outputs the result of the check. The \(\mathsf {BucketCheck}\) procedure uses f to check all the buckets. Figure 7 illustrates the check in detail. \(\mathcal {A}\) passes \(\mathsf {BucketCheck}\) if all the check configurations are favorable to the adversary. These favorable check configurations are illustrated in Table 2.

After throwing triangles, in each bucket, if the check configuration of balls and triangles are from the first three entries of Table 2, then \(\mathsf {BucketCheck}\) will not \(\mathsf {Abort}\). For the last entry \(\mathsf {BucketCheck}\) will not \(\mathsf {Abort}\) if the output of f is 1. Notice that if \(\mathsf {BucketCheck}\) passes only due to the first configuration of Table 2 in all buckets, then the output from each bucket is going to be a good ball and \(\mathcal {A}\) loses. So ideally we should take that into account while computing the winning probability of the adversary. However, for most of the cases it is sufficient to show that for large enough N the \(\Pr [\mathcal {A}\ \text {passes} \ {\mathsf {BucketCheck}}]\) is negligible in the statistical security parameter s, as that will bound the winning probability of \(\mathcal {A}\) in the simple game.

Before analyzing the \(\mathsf {SimpleGame}\), we show that security of \(\mathsf {RealGame}\) follows directly from security of \(\mathsf {SimpleGame}\). Intuitively, that is indeed the case, as in the \(\mathsf {SimpleGame}\) an adversary chooses number of bad triangles adaptively; Whereas in the \(\mathsf {RealGame}\) it has to fix the tampered circuits before seeing the permuted \(\mathsf {edaBits}\). Thus, if an adversary cannot win the \(\mathsf {SimpleGame}\) then it must be more difficult for it to succeed in the \(\mathsf {RealGame}\).

Lemma 2

Security against all adversaries in \(\mathsf {SimpleGame}\) implies security against all adversaries in \(\mathsf {RealGame}\).

Throughout the analysis, we use b to denote the number of bad balls and t to denote the number of bad triangles. Now in order to win the \(\mathsf {SimpleGame}\) the adversary has to pass all the three checks, so let us try to bound the success probability of \(\mathcal {A}\) for each of them. Throughout the analysis we consider \(N \ge 2^{\frac{s}{B-1}}\), that is for \(B \ge 3\), \(N(B-1) \ge 2^{\frac{s}{B-1}+1}\) and we are opening \(B(\ge 3)\) balls and B triangles in the first two checks.

Opening C balls: In the first check the challenger opens C balls and check whether they are good. So,

Now for \(b=(NB+C) \alpha \), where \( 1/(NB+C) \le \alpha \le 1\), the probability can be written as \((1-\alpha )^C\). In order to bound the success probability of the adversary with the statistical security parameter s, let us consider the case when \(\alpha \ge \frac{2^{s/B}-1}{2^{s/B}}\) and \(C=B\). Thus,

So if the challenger opens B balls to check then in order to pass the first check \(\mathcal {A}\) must corrupt less than \(\alpha \) fraction of the balls, where \(\alpha = \frac{2^{s/B}-1}{2^{s/B}}\). Lemma 3 follows from the above analysis.

Lemma 3

The probability of \(\mathcal {A}\) passing the first check in \(\mathsf {SimpleGame}\) is less than \(2^{-s}\), if the adversary corrupts more than \(\alpha \) fraction of balls for \(\alpha = \frac{2^{s/B}-1}{2^{s/B}}\) and the challenger opens B balls.

Opening \({{\textit{\textbf{C}}}}'\) triangles: In this case we’ll consider the probability of \(\mathcal {A}\) passing the second check. This is similar to the previous check, the only difference is that here the challenger opens \(C'\) triangles and checks whether they are good. Consequently,

As in the previous case, if t is more than \(\beta \) fraction of the total number of triangles for \(\beta = \frac{2^{s/B}-1}{2^{s/B}}\), we can upper bound the success probability of \(\mathcal {A}\) by \((2^{-s/B})^{C'}\). Thus for \(C'=B\) the success probability of \(\mathcal {A}\) in the second check can be bounded by \(2^{-s}\). Lemma 4 follows from the above analysis.

Lemma 4

The probability of \(\mathcal {A}\) passing the second check in \(\mathsf {SimpleGame}\) is less than \(2^{-s}\), if the adversary corrupts more than \(\beta \) fraction of triangles for \(\beta = \frac{2^{s/B}-1}{2^{s/B}}\) and the challenger opens B triangles.

Lemmas 3–4 show that it suffices to only look at the first two checks to prove security when the fraction of bad balls or bad triangles is sufficiently large. However, when one of these is small, we also need to analyze the checks within each bucket in the game.

BucketCheck procedure: In this case we consider that the adversary passes first two checks and reaches the last level of the game. However, in order to win the game the adversary has to pass the \(\mathsf {BucketCheck}\). Note that now we are dealing with NB balls and the challenger already fixes the arrangement of NB balls in N buckets. Once the ball permutation is fixed that imposes a restriction on the number of favorable (for \(\mathcal {A}\)) triangle permutations. For example, let us consider that the challenger throws 12 balls into 4 buckets of size 3 and fixes this permutation:

Then there are only two possible favorable permutations of triangles:

Two favorable permutations come from the fact that the third bucket contains two bad balls. From Table 2 we can see that whenever there are two bad balls in a bucket the adversary can pass the check in that bucket either with a good triangle or with a bad triangle. That means both configurations  and

and  might be favorable to the adversary. Now \(\mathcal {A}\) can use the public function f to determine the value of

might be favorable to the adversary. Now \(\mathcal {A}\) can use the public function f to determine the value of  and

and  . In this example, let us consider the value of

. In this example, let us consider the value of  to be 1; Then the first permutation of triangles is favorable to the adversary. As a result the probability of passing the \(\mathsf {BucketCheck}\) essentially depends on the probability of hitting that specific permutation of triangles among all possible arrangements of triangles. Then the probability of the adversary passing the last check given a specific arrangement of balls \(L_i\) is given by:

to be 1; Then the first permutation of triangles is favorable to the adversary. As a result the probability of passing the \(\mathsf {BucketCheck}\) essentially depends on the probability of hitting that specific permutation of triangles among all possible arrangements of triangles. Then the probability of the adversary passing the last check given a specific arrangement of balls \(L_i\) is given by:

where \(t = N(B-1) \beta \). Thus,

In order to upper bound \(\Pr [\mathcal {A}\ \text {passes} \ {\mathsf {BucketCheck}}]\) we will upper bound the probability for different ranges of \(\alpha \) and \(\beta \). Note that the total probability is given by:

If we can argue that for all possible \((2^{s/B} - 1)/2^{s/B} \ge \alpha \ge 1/NB\), the maximum probability for \(\Pr [\mathcal {A}\ \text {passes} \ {\mathsf {BucketCheck}} | L_i]\), for some configuration \(L_i\), can be bounded by \(2^{-s}\), then:

Note that the maximum possible value of \(\alpha \) is 1, however as the challenger opens C balls and \(C'\) triangles, the adversary cannot set \(\alpha \) to be 1. To pass the first check \(\mathcal {A}\) must set \(\alpha \) to be less than \((2^{s/B} - 1)/2^{s/B}\) if the challenger opens B balls and B triangles.

Now let us try to bound \(\Pr [\mathcal {A}\ \text {passes} \ {\mathsf {BucketCheck}}| L_i]\). The value of \({N(B-1) \atopwithdelims ()t}\) maximizes at \(t \approx N(B-1)/2\). Starting from the case when there is no bad triangle, the probability monotonically decreases from 1 to its minimum at \(\beta \approx 1/2\), and then it monotonically increases to 1 when all triangles are bad. We analyze the success probability of \(\mathcal {A}\) in three cases. These will be discussed in the full version. We summarize the analysis as follows.

Lemma 5

The probability of \(\mathcal {A}\) passing the \(\mathsf {BucketCheck}\) in \(\mathsf {SimpleGame}\) is less than \(2^{-s}\), if \(N \ge 2^{s/(B-1)}\) and the challenger opens \(C=B\) balls and \(C'=B\) triangles during first two checks of \(\mathsf {SimpleGame}\) for \(B \in \{3,4,5 \}\) given \(\frac{s}{B-1} > B\).

Combining Lemma 2 and Lemma 5, this completes the proof of Theorem 2.

Remark 1

As we already mentioned the bound we obtain is not general. However, from Lemma 5 it is evident that one can produce more than 1024 \(\mathsf {edaBits}\) efficiently with 40-bit statistical security using different bucket sizes with our \(\mathsf {CutNChoose}\) technique, which is sufficient for the applications we are considering in this work. It also shows that if we want to achieve 80-bit statistical security for \(N\ge 2^{20}\), then increasing the bucket size from 3 to 5 would be sufficient. Table 1 shows the number of \(\mathsf {edaBits}\) we can produce with different size of buckets.

5 Primitives

This section describes the high-level protocols we build using our \(\mathsf {edaBits}\), both over \(\mathbb {Z}_{2^k}\) and \(\mathbb {Z}_p\). We focus on secure truncation (Sect. 5.1) and secure integer comparison (Sect. 5.2), although our techniques apply to a much wider set of non-linear primitives that require binary circuits for intermediate computations. For example, our techniques also allow us to compute binary-to-arithmetic and arithmetic-to-binary conversions of shared integers, by plugging in our \(\mathsf {edaBits}\) into the conversion protocols from [11] and [16] for the field and ring cases, respectively.

Throughout this section our datatypes are signed integers in the interval \([-2^{\ell -1},2^{\ell -1})\). On the other hand, our MPC protocols operate over a modulus \(M\ge 2^\ell \) which is either \(2^k\) or a prime p. Given an integer \(\alpha \in [-2^{\ell -1},2^{\ell -1})\), we can associate to it the corresponding ring element in \(\mathbb {Z}_M\) by computing \(\alpha \bmod M\in \mathbb {Z}_M\) (modular reduction returns integers in [0, M)). We denote this map by \(\mathsf {Rep}_M(\alpha )\), and we may drop the sub-index M when it is clear from context. Finally, in the protocols below \(\mathsf {LT}\) denotes a binary less-than circuit.

5.1 Truncation

Recall that our datatypes are signed integers in the interval \([-2^{\ell -1},2^{\ell -1})\), represented by integers in \(\mathbb {Z}_M\) where \(M\ge 2^\ell \) via \(\mathsf {Rep}_M(\alpha ) = \alpha \bmod M\). The goal of a truncation protocol is to obtain \([y]\) from \([a]\), where \(y = \mathsf {Rep}\left( \left\lfloor \frac{\alpha }{2^m} \right\rfloor \right) \) and where \(a = \mathsf {Rep}(\alpha )\). This is a crucial operation when dealing with fixed-point arithmetic, and therefore an efficient solution for it has a substantial impact in the efficiency of MPC protocols for a wide range of applications. An important observation is that, as integers, \(\left\lfloor \frac{\alpha }{2^m} \right\rfloor = \frac{\alpha -(\alpha \bmod 2^m)}{2^m}\). If M is an odd prime p, this corresponds in \(\mathbb {Z}_p\) to \(y = \left( \mathsf {Rep}(\alpha ) - \mathsf {Rep}(\alpha \bmod 2^m)\right) \cdot \mathsf {Rep}(2^m)^{-1}\). Furthermore, \(\mathsf {Rep}(\alpha \bmod 2^m) = \alpha \bmod 2^m = a\bmod 2^m\) and \(\mathsf {Rep}(2^m) = 2^m\), so \(y = \frac{a - (a \bmod 2^m)}{(2^m)^{-1}}\).

We focus below in truncation over \(\mathbb {Z}_{2^k}\) as it is the less studied case. For the case of truncation over \(\mathbb {F}_p\) we refer the reader to the full version [21].

Truncation over \(\mathbb {Z}_{2^k}\). Truncation protocols over fields typically exploit the fact that one can divide by powers of 2 modulo p. This is not possible when working modulo \(2^k\). Instead, we take a different approach. Let \([a]_{2^k}\) be the initial shares, where \(a=\mathsf {Rep}(\alpha )\) with \(\alpha \in [-2^{\ell -1},2^{\ell -1})\) (notice that it may be the case that \(\ell <k\)). First, we provide a method, \(\mathsf {LogShift}\), for computing the logical right shift of a by m positions, assuming that \(a\in [0,2^\ell )\). That is, if a is

this procedure will yield shares of

Then, to compute the arithmetic shift, we use the fact thatFootnote 2

Now, to compute the logical shift, our protocol begins just like in the field case by computing shares of \(a\bmod 2^m\) and subtracting them from a, which produces shares of \((a_{k-1},\ldots ,a_m,0,\ldots ,0)\). The parties then open a masked version of \(a-(a\bmod 2^m)\) which does not reveal the upper \(k-\ell \) bits, and then shift to the right by m positions in the clear, and undo the truncated mask. One has to account for the overflow that may occur during this masking, but this can be calculated using a binary LT circuit.

The details of our logical shift protocol are provided in Fig. 8, and we analyze its correctness next. First, it is easy to see that \(c = 2^{k-m}((a+r)\bmod 2^m)\), so \(c/2^{k-m} = (a\bmod 2^m)+r - 2^mv\), where v is set if and only if \(c/2^{k-m}<r\). From this we can see that the first part of the protocol \(\left[ a\bmod 2^m\right] _{2^k}\) is correctly computed. Privacy of this first part follows from the fact that \(r\bmod 2^m\) completely masks \(a\bmod 2^m\) when c is opened.

For the second part, let us write \(b = 2^ma'\), then \(d=2^{k-\ell +m}((a'+r')\bmod 2^{\ell -m})\), so \(d/2^{k-\ell +m} = a'+r' - 2^{\ell -m}u\), where u is set if and only if \(d/2^{k-\ell +m}<r'\), as calculated by the protocol. We get then that \(a' = d/2^{k-\ell +m} - r' + 2^{\ell -m}u\), and since \(a'\) is precisely \(\mathsf {LogShift}_m(a)\), we conclude the correctness analysis.

Probabilistic Truncation. Recall that in the field case one can obtain probabilistic truncation avoiding a binary circuit, which results in a constant number of rounds. Over rings this is a much more challenging task. For example, probabilistic truncation with a constant number of rounds is achieved in ABY3 [30], but requires, like in the field case, a \(2^s\) gap between the secret values and the actual modulus, which in turn implies that only small non-negative values can be truncated.

In Fig. 9, we take a different approach. Intuitively, we follow the same approach as in ABY3, which consists of masking the value to be truncated with a shared random value for which its corresponding truncation is also known, opening this value, truncating it and removing the truncated mask. In ABY3 a large gap is required to ensure that the overflow that may happen by the masking process does not occur with high probability. Instead, we allow this overflow bit to be non-zero and remove it from the final expression. Doing this naively would require us to compute a LT circuit, but we avoid doing this by using the fact that, because the input is positive, the overflow bit can be obtained from the opened value by making the mask value also positive. This leaks the overflow bit, which is not secure, and to avoid this we mask this single bit with another random bit. This protocol can be seen as an extension of the probabilistic truncation protocol by Dalskov et al. [15]. Below, we provide an analysis for our extension that also applies to said protocol.

Now we analyze the protocol. First we notice that \(c=2^{k-\ell -1}c'\) where \(c' = (2^mr+r') + a + 2^{\ell }b - 2^{\ell +1}vb\), where v is set if and only if \((2^mr+r') + a\) overflows modulo \(2^{\ell }\). It is easy to see that this implies that \(c'_{\ell } = v\oplus b\), so we see that \(v = c'_{\ell }\oplus b\), as calculated in the protocol.

On the other hand, we have that \((c'\bmod 2^\ell ) = (2^mr+r') + a - 2^\ell v\), so \(a\bmod 2^m = (c'\bmod 2^m)-r' + 2^mu\), where u is set if \((c'\bmod 2^m)<r'\). From this it can be obtained that \(\left\lfloor (c'\bmod 2^\ell )/2^m \right\rfloor -r+2^{\ell -m} = \left\lfloor a/2^m \right\rfloor + u\).

Remark 2

The protocol we discussed above only works if \(a\in [0,2^{\ell })\), that is, if the value \(\alpha \) represented \(\alpha \in [0,2^{\ell -1})\). We can extend it to \(\alpha \in [-2^{\ell -1},2^{\ell -1})\) by using the same trick as in the deterministic truncation: The truncation is called with \(a+2^{\ell -1}\) as input, and \(2^{\ell -m-1}\) is subtracted from the output.

5.2 Integer Comparison

Another important primitive that appears in many applications is integer comparison. In this case, two secret integers \([a]_M\) and \([b]_M\) are provided as input, and the goal is to compute shares of \(\alpha {\mathop {<}\limits ^{?}} \beta \), where \(a=\mathsf {Rep}(\alpha )\) and \(b=\mathsf {Rep}(\beta )\).

As noticed by previous works (e.g. [11, 16]), this computation reduces to extracting the MSB from a shared integer as follows: If \(\alpha ,\beta \in [-2^{k-2},2^{k-2})\), then \(\alpha -\beta =[-2^{k-1},2^{k-1})\), so \(a-b = \mathsf {Rep}(\alpha -\beta )\) corresponds to the sign of \(\alpha -\beta \), which is minus (i.e. the bit is 1) if and only if \(\alpha \) is smaller than \(\beta \).

To extract the MSB, we simply notice that \(\mathsf {MSB}(\alpha ) = -\left\lfloor \frac{\alpha }{2^{k-1}} \right\rfloor \bmod 2^k\), so this can be extracted with the protocols we have seen in the previous sections.

6 Applications and Benchmarks

6.1 Theoretical Cost

We present the theoretical costs of the different protocols in the paper, starting with the cost for producing Private and Global \(\mathsf {edaBits}\) in terms of the different parameters.

Table 3 shows the main amortized costs for generating a Private and Global \(\mathsf {edaBit}\) of length m. For Global \(\mathsf {edaBits}\), we assume have the required correct Private \(\mathsf {edaBits}\) to start with, which is why number of Faulty \(\mathsf {edaBits}\) needed is 0. B is the bucket size for the cut-and-choose procedure and n is the number of parties.

Table 4 shows the cost for two of our primitives from Sect. 5, namely comparison of m-bit numbers and truncation of an \(\ell \)-bit number by m binary digits. For computation modulo a prime, there is also a statistical security parameter s.

Comparison in \(\mathbb {Z}_{2^k}\) is our only application where it suffices to use loose \(\mathsf {edaBits}\) (where the relation between the sets of shares only holds modulo \(2^m\), c.f. Sect. 3.2). This is because the arithmetic part of an \(\mathsf {edaBit}\) is only used in the first step (the masking) but not at the end. Recall that the truncation protocols always use the arithmetic part of an \(\mathsf {edaBit}\) twice, once before opening and once to compute an intermediate or the final result. Using a loose \(\mathsf {edaBit}\) would clearly distort the result. With comparison on the other hand, an \(\mathsf {edaBit}\) is only used to facilitate the conversion to binary computation, after which the result is converted back to arithmetic computation using a classic daBit.

6.2 Implementation Results

We have implemented our approach in a range of domains and security models, and we have run the generation of a million \(\mathsf {edaBits}\) of length 64 on AWS c5.9xlarge with the minimal number of parties required by the security model (two for dishonest majority and three for honest majority). Table 5 shows the throughput for various security models and computation domains, and Table 6 does so for communication. For computation modulo a prime with dishonest majority, we present figures for arithmetic computation both using oblivious transfer (OT) and LWE-based semi-homomorphic encryption (HE). Note that the binary computation is always based on oblivious transfer for dishonest majority and that all our results include all consumable preprocessing such as multiplication triples but not one-off costs such as key generation. The source code of our implementation has been added to MP-SPDZ [14].

We have also implemented 63-bitFootnote 3 comparison using \(\mathsf {edaBits}\), only daBits, and neither, and we have run one million comparisons in parallel again on AWS c5.9xlarge. Table 7 shows the throughput for our various security models and computation domains, and Table 8 does so for communication. Note that the arithmetic baseline uses either the protocol of Catrina and de Hoogh [11] (\(\mathbb {F}_p\)) or the variant by Dalskov et al. [15] (\(\mathbb {Z}_{2^k}\)).

Our results highlight the advantage of our approach over using only daBits. The biggest improvement comes in the dishonest majority with semi-honest security model. For the dishonest majority aspect, this is most likely because there is a great gap in the cost between multiplications and inputs (the latter is used extensively to generate \(\mathsf {edaBits}\)). For the semi-honest security aspect, note that our approach for malicious security involves a cascade of sacrificing because the \(\mathsf {edaBit}\) sacrifice involves binary computation, which in turn involves further sacrifice of AND triples. Finally, the improvement in communication is generally larger than the improvement in wall clock time. We estimate that this is due to the fact that switching to binary computation clearly reduces communication but increases the computational complexity.

6.3 Comparison to Previous Works

Dishonest majority. The authors of HyCC [9] report figures for biometric matching with semi-honest two-party computation in ABY [20] and HyCC. The algorithm essentially computes the minimum over a list of small-dimensional Euclidean distances. The aforementioned authors report figures in LAN (1 Gbps) and artificial WAN settings of two machines with four-core i7 processors. For a fair comparison, we have run our implementation using one thread limiting the bandwidth and latency accordingly. Table 9 shows that our results improves on the time in the LAN setting and on communication generally as well as on the in the WAN setting for larger instances compared to their A+B setting (without garbled circuits). The WAN setting is less favorable to our solution because it is purely based on secret sharing and we have not particularly optimized the number of rounds.

Honest majority. Our approach is not directly comparable to the one by Mohassel and Rindal [30] because they use the specifics of replicated secret sharing for the conversion. We do note however that their approach of restricting binary circuits to the binary domain is comparable to our solution, and that they use the same secret sharing schemes as us in the \(2^k\) domain. The full version [21] shows a comparison of their results with our approach applied to logistic regression.

daBits. Aly et al. [1] report figures for daBit generation with dishonest majority and malicious security in eight threads over a 10 Gbps network. For two-party computation using homomorphic-encryption, they achieve 2150 daBits per second at a communication cost of 94 kbit per daBit. In a comparable setting, we found that our protocol produces 12292 daBits per second requiring a communication cost of 32 kbit. Note however that Aly et al. use somewhat homomorphic encryption while our implementation is based on cheaper semi-homomorphic encryption.

Convolutional Neural Networks. We also apply our techniques to the convolutional neural networks considered be Dalskov et al. [15]. See the full version for details.

Notes

- 1.

This circuit is rather naive, and in fact there are logarithmic depth circuits with a greater number of AND gates. However, as we will see later in the section, it is important for our security proof to use specifically these naive circuits to obtain the tamper-resilient property. Furthermore, they are only used in the preprocessing phase, so the overhead in round complexity is insignificant in practice.

- 2.

Notice that we can use the \(\mathsf {LogShift}\) method on \(a+2^{\ell -1}\) since, \(\alpha +2^{\ell -1}\in [0,2^{\ell })\), which implies that \((a+2^{\ell -1})\bmod 2^k = \alpha +2^{\ell -1}\) and therefore \((a+2^{\ell -1})\bmod 2^k\) is \(\ell \)-bits long, as required.

- 3.

Comparison in secure computation is generally implemented by extracting the most significant bit of difference. This means that 63-bit is the highest accuracy achievable in computation modulo \(2^{64}\), which the natural modulus on current 64-bit platforms.

References

Aly, A., Orsini, E., Rotaru, D., Smart, N.P., Wood, T.: Zaphod: efficiently combining LSSS and garbled circuits in SCALE. In: WAHC 2019: Proceedings of the 7th ACM Workshop on Encrypted Computing & Applied Homomorphic Cryptography. ACM (2019). https://eprint.iacr.org/2019/974

Araki, T., et al.: Generalizing the SPDZ compiler for other protocols. In: Lie, D., Mannan, M., Backes, M., Wang, X. (eds.) ACM CCS 2018, pp. 880–895. ACM Press, October 2018

Bonte, C., Smart, N.P., Tanguy, T.: Thresholdizing HashEdDSA: MPC to the rescue. Cryptology ePrint Archive, Report 2020/214 (2020). https://eprint.iacr.org/2020/214

Boyle, E., et al.: Efficient two-round OT extension and silent non-interactive secure computation. In: Cavallaro, L., Kinder, J., Wang, X., Katz, J. (eds.) ACM CCS 2019, pp. 291–308. ACM Press, November 2019

Boyle, E., Couteau, G., Gilboa, N., Ishai, Y., Kohl, L., Scholl, P.: Efficient pseudorandom correlation generators: silent OT extension and more. In: Boldyreva, A., Micciancio, D. (eds.) CRYPTO 2019, Part III. LNCS, vol. 11694, pp. 489–518. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-26954-8_16

Boyle, E., Gilboa, N., Ishai, Y.: Function secret sharing. In: Oswald, E., Fischlin, M. (eds.) EUROCRYPT 2015, Part II. LNCS, vol. 9057, pp. 337–367. Springer, Heidelberg (2015). https://doi.org/10.1007/978-3-662-46803-6_12

Boyle, E., Gilboa, N., Ishai, Y.: Secure computation with preprocessing via function secret sharing. In: Hofheinz, D., Rosen, A. (eds.) TCC 2019, Part I. LNCS, vol. 11891, pp. 341–371. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-36030-6_14

Burra, S.S., et al.: High performance multi-party computation for binary circuits based on oblivious transfer. Cryptology ePrint Archive, Report 2015/472 (2015). http://eprint.iacr.org/2015/472

Büscher, N., Demmler, D., Katzenbeisser, S., Kretzmer, D., Schneider, T.: HyCC: compilation of hybrid protocols for practical secure computation. In: Lie, D., Mannan, M., Backes, M., Wang, X. (eds.) ACM CCS 2018, pp. 847–861. ACM Press, October 2018

Canetti, R.: Universally composable security: a new paradigm for cryptographic protocols. In: 42nd FOCS, pp. 136–145. IEEE Computer Society Press, October 2001

Catrina, O., de Hoogh, S.: Improved primitives for secure multiparty integer computation. In: Garay, J.A., De Prisco, R. (eds.) SCN 2010. LNCS, vol. 6280, pp. 182–199. Springer, Heidelberg (2010). https://doi.org/10.1007/978-3-642-15317-4_13

Chandran, N., Gupta, D., Rastogi, A., Sharma, R., Tripathi, S.: EzPC: programmable and efficient secure two-party computation for machine learning. In: 2019 IEEE European Symposium on Security and Privacy (EuroS&P), pp. 496–511. IEEE (2019)

Cramer, R., Damgård, I., Escudero, D., Scholl, P., Xing, C.: SPD \(\mathbb{Z}_{2^k}\): efficient MPC mod \(2^k\) for dishonest majority. In: Shacham, H., Boldyreva, A. (eds.) CRYPTO 2018. LNCS, vol. 10992, pp. 769–798. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-96881-0_26

CSIRO’s Data61. MP-SPDZ (2020). https://github.com/data61/MP-SPDZ

Dalskov, A., Escudero, D., Keller, M.: Secure evaluation of quantized neural networks. Cryptology ePrint Archive, Report 2019/131 (2019). https://eprint.iacr.org/2019/131

Damgård, I., Escudero, D., Frederiksen, T.K., Keller, M., Scholl, P., Volgushev, N.: New primitives for actively-secure MPC over rings with applications to private machine learning. In: 2019 IEEE Symposium on Security and Privacy, pp. 1102–1120. IEEE Computer Society Press, May 2019

Damgård, I., Fitzi, M., Kiltz, E., Nielsen, J.B., Toft, T.: Unconditionally secure constant-rounds multi-party computation for equality, comparison, bits and exponentiation. In: Halevi, S., Rabin, T. (eds.) TCC 2006. LNCS, vol. 3876, pp. 285–304. Springer, Heidelberg (2006). https://doi.org/10.1007/11681878_15

Damgård, I., Keller, M., Larraia, E., Pastro, V., Scholl, P., Smart, N.P.: Practical covertly secure MPC for dishonest majority – or: breaking the SPDZ limits. In: Crampton, J., Jajodia, S., Mayes, K. (eds.) ESORICS 2013. LNCS, vol. 8134, pp. 1–18. Springer, Heidelberg (2013). https://doi.org/10.1007/978-3-642-40203-6_1

Damgård, I., Pastro, V., Smart, N., Zakarias, S.: Multiparty computation from somewhat homomorphic encryption. In: Safavi-Naini, R., Canetti, R. (eds.) CRYPTO 2012. LNCS, vol. 7417, pp. 643–662. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-32009-5_38

Demmler, D., Schneider, T., Zohner, M.: ABY - a framework for efficient mixed-protocol secure two-party computation. In: NDSS 2015. The Internet Society, February 2015

Escudero, D., Ghosh, S., Keller, M., Rachuri, R., Scholl, P.: Improved primitives for MPC over mixed arithmetic-binary circuits. Cryptology ePrint Archive, Report 2020/338 (2020). https://eprint.iacr.org/2020/338

Frederiksen, T.K., Keller, M., Orsini, E., Scholl, P.: A unified approach to MPC with preprocessing using OT. In: Iwata, T., Cheon, J.H. (eds.) ASIACRYPT 2015, Part I. LNCS, vol. 9452, pp. 711–735. Springer, Heidelberg (2015). https://doi.org/10.1007/978-3-662-48797-6_29

Furukawa, J., Lindell, Y., Nof, A., Weinstein, O.: High-throughput secure three-party computation for malicious adversaries and an honest majority. In: Coron, J.-S., Nielsen, J.B. (eds.) EUROCRYPT 2017, Part II. LNCS, vol. 10211, pp. 225–255. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-56614-6_8

Genkin, D., Ishai, Y., Prabhakaran, M., Sahai, A., Tromer, E.: Circuits resilient to additive attacks with applications to secure computation. In: Shmoys, D.B. (ed.) 46th ACM STOC, pp. 495–504. ACM Press, May/June (2014)

Genkin, D., Ishai, Y., Weiss, M.: Binary AMD circuits from secure multiparty computation. In: Hirt, M., Smith, A. (eds.) TCC 2016, Part I. LNCS, vol. 9985, pp. 336–366. Springer, Heidelberg (2016). https://doi.org/10.1007/978-3-662-53641-4_14

Hazay, C., Scholl, P., Soria-Vazquez, E.: Low cost constant round MPC combining BMR and oblivious transfer. In: Takagi, T., Peyrin, T. (eds.) ASIACRYPT 2017, Part I. LNCS, vol. 10624, pp. 598–628. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-70694-8_21

Ishai, Y., Kilian, J., Nissim, K., Petrank, E.: Extending oblivious transfers efficiently. In: Boneh, D. (ed.) CRYPTO 2003. LNCS, vol. 2729, pp. 145–161. Springer, Heidelberg (2003). https://doi.org/10.1007/978-3-540-45146-4_9

Ishaq, M., Milanova, A.L., Zikas, V.: Efficient MPC via program analysis: a framework for efficient optimal mixing. In: Cavallaro, L., Kinder, J., Wang, X., Katz, J. (eds.) ACM CCS 2019, pp. 1539–1556. ACM Press, November 2019

Keller, M.: MP-SPDZ: a versatile framework for multi-party computation. Cryptology ePrint Archive, Report 2020/521 (2020). https://eprint.iacr.org/2020/521

Mohassel, P., Rindal, P.: ABY\(^3\): a mixed protocol framework for machine learning. In: Lie, D., Mannan, M., Backes, M., Wang, X. (eds.) ACM CCS 2018, pp. 35–52. ACM Press, October 2018

Nielsen, J.B., Nordholt, P.S., Orlandi, C., Burra, S.S.: A new approach to practical active-secure two-party computation. In: Safavi-Naini, R., Canetti, R. (eds.) CRYPTO 2012. LNCS, vol. 7417, pp. 681–700. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-32009-5_40

Rotaru, D., Smart, N.P., Tanguy, T., Vercauteren, F., Wood, T.: Actively secure setup for SPDZ. Cryptology ePrint Archive, Report 2019/1300 (2019). https://eprint.iacr.org/2019/1300

Rotaru, D., Wood, T.: MArBled circuits: mixing arithmetic and Boolean circuits with active security. In: Hao, F., Ruj, S., Sen Gupta, S. (eds.) INDOCRYPT 2019. LNCS, vol. 11898, pp. 227–249. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-35423-7_12

Wang, X., Ranellucci, S., Katz, J.: Global-scale secure multiparty computation. In: Thuraisingham, B.M., Evans, D., Malkin, T., Xu, D. (eds.) ACM CCS 2017, pp. 39–56. ACM Press, October 2017

Acknowledgements

We thank Deevashwer Rathee and the authors of [12] for pointing out corrections to our cost analysis, and Sameer Wagh for helpful comments on an earlier version of the paper. This work has been supported by the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme under grant agreements No 669255 (MPCPRO) and No 803096 (SPEC), the Danish Independent Research Council under Grant-ID DFF–6108-00169 (FoCC), the Concordium Blockhain Research Center, Aarhus University and an Aarhus University Forskningsfond (AUFF) starting grant.

Author information

Authors and Affiliations

Corresponding authors

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2020 International Association for Cryptologic Research

About this paper

Cite this paper

Escudero, D., Ghosh, S., Keller, M., Rachuri, R., Scholl, P. (2020). Improved Primitives for MPC over Mixed Arithmetic-Binary Circuits. In: Micciancio, D., Ristenpart, T. (eds) Advances in Cryptology – CRYPTO 2020. CRYPTO 2020. Lecture Notes in Computer Science(), vol 12171. Springer, Cham. https://doi.org/10.1007/978-3-030-56880-1_29

Download citation

DOI: https://doi.org/10.1007/978-3-030-56880-1_29

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-56879-5

Online ISBN: 978-3-030-56880-1

eBook Packages: Computer ScienceComputer Science (R0)