Abstract

Adaptive math software supports students’ learning by targeting specific math knowledge components. However, widespread use of adaptive math software in classrooms has not led to the expected changes in student achievement, particularly for racially minoritized students and students situated in poverty. While research has shown the power of human mentors to support student learning and reduce opportunity gaps, mentoring support could be optimized by using educational technology to identify the specific non-math factors that are disrupting students’ learning and direct mentors to appropriate resources related to those factors. In this paper, we present an analysis of one non-math factor—reading comprehension—that has been shown to influence math learning. We predict math performance using this non-math factor and show that it contributes novel explanatory value in modeling students’ learning behaviors. Through this analysis, we argue that educational technology could better address the learning needs of the whole student by modeling non-math factors. We suggest future research should take this learning analytics approach to identify the many different kinds of motivational and non-math content challenges that arise when students are learning from adaptive math software. We envision analyses such as those presented in this paper enabling greater individualization within adaptive math software that takes into account not only math knowledge and progress but also non-math factors.

Keywords

- Math education

- Reading comprehension

- Opportunity gap

- Cognitive tutor

- Additive Factors Model

- Gaming

- Wheel spinning

1 Introduction

1.1 Opportunity Gaps in Mathematics Education

Math software can accelerate—and even double—the rate of student learning in mathematics by identifying the specific knowledge components students have not mastered and delivering individualized instruction accordingly [1, 2]. These types of gains have been replicated across topics and contexts [3,4,5,6], leading to increasingly widespread use in schools [7]. Based on such results and a sense of excitement around the possibilities of individualized learning through technology, many school districts have invested significant resources to bring educational technology into classrooms.

As schools adopt educational technology, however, many are not seeing the changes in student achievement expected based on results from laboratory and controlled classroom experiments. Despite the success of adaptive math software in supporting learning outcomes, there is evidence that challenges in math learning go well beyond issues with math [8, 9], particularly for racially minoritized students and students situated in poverty. Racial and economic opportunity gaps prevent millions of American students from realizing their potential, but researchers have struggled to identify effective solutions to these longstanding problems [10,11,12]. Although some studies have found that math software supports learning outcomes for all students [13], educational technology is rarely designed to address existing inequities in access and opportunity, and high variation in students’ use of educational technology produces additional opportunity gaps. This suggests an important gap in current research: although we have powerful adaptive methods to teach students math, these programs are typically based solely on math performance even though we know that students’ math knowledge is not the only factor in understanding math outcomes.

1.2 AI Support for Personalized Mentoring

Recent research suggests that intensive, personalized mentoring may provide a fruitful avenue for reducing racial and economic gaps in learning opportunities and outcomes. In one study, fifth through seventh-grade students who participated in a two-year mentoring program targeting attendance and engagement were absent less often and failed fewer courses by the end of the program [14]. Another mentoring study that focused on intensive instructional support significantly improved students’ math achievement test scores and grades while reducing course failure rates [15]. Both studies took place in Chicago Public Schools with predominantly racially minoritized students and students situated in poverty. These effects are encouraging, but the large resources required for such intensive mentoring are prohibitive to many districts.

Mentoring support could be optimized by using artificial intelligence (AI) to help mentors and caregivers assess the specific non-math factors that are disrupting students’ learning and direct them to appropriate resources related to those factors. In other words, we could use the same kind of AI that currently assesses and responds to math knowledge to address the non-math factors influencing student learning. To provide this type of individualized non-math support, we must build better models.

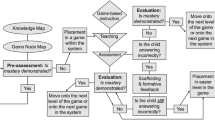

In this paper, we present an analysis of one non-math factor—reading comprehension—that has been shown to influence math learning [16,17,18,19,20]. Through this analysis, we argue that educational technology could better address the learning needs of the whole student by modeling non-math factors. We undertook this analysis in the context of developing the Personalized Learning2 (PL2) app, which is designed to supplement the individualized cognitive tutoring provided in existing adaptive math software with the motivational and learning support capabilities of human mentors [21]. Data generated through students’ use of math software could provide evidence for differentiating motivational and cognitive barriers to engagement and learning. Using this data, we aim to identify the many different kinds of motivational and non-math content challenges that arise when students are learning from adaptive math software and to adapt the PL2 app to help mentors support students through those challenges. The app currently focuses on data about students’ math performance in math software to guide mentors to appropriate resources, but analyses such as those presented in this paper could enable greater individualization that takes into account not only math knowledge and progress but also non-math factors.

1.3 Reading Comprehension and Math Performance

We focus on reading because comprehending math text requires more than numerical fluency. Although reading a math story problem is different from reading a work of fiction, reading comprehension plays an important role in comprehending math texts [16]. Students’ technical reading skills (i.e., word recognition and decoding, adaptive reading method, speed) predict their skills solving math word problems [17]. The role of reading comprehension in understanding math texts without symbols is similar to its role in understanding non-math texts, but math texts with symbols appear to require more specialized reading skills [18] and present more challenges than non-math texts [19]. This suggests the importance of both generalized reading skills and content-specific math reading skills for understanding symbolic math story problems.

Reading comprehension may have a cumulative effect in constraining math learning. A study that used third-graders’ reading comprehension scores to predict math problem solving, math conceptual knowledge, and math computation skills through eighth grade showed that reading comprehension predicted the rate of growth across all three math components, even when controlling for third-grade math achievement [20]. These results suggest that failure to support students’ reading comprehension can impact the trajectory of their math learning, but the role of reading comprehension in learning from math software is still an open question. In the current study, we aim to further examine the hypothesized role reading comprehension may have in constraining math learning.

1.4 Current Study

Our data were gathered through a popular educational technology platform used in classrooms around the United States that serves as a good example of evidence-based, adaptive math software. Although we use reading comprehension in this adaptive math software as a test case, the ultimate goal is that our approach could be used to identify a range of non-math factors across many educational technologies. We explore the following research questions:

- RQ1: Does a measure of reading comprehension extracted from math software relate to math performance in the software? We hypothesize that reading comprehension, as measured by performance in a non-math software tutorial, will be correlated with performance in the math modules of the same software. We predict that our measure of reading comprehension will not be correlated with non-performance behaviors, such as math hint use, because we hypothesize that we are not measuring an underlying, general behavior in the math software.

- RQ2: Does the reading performance measure constrain growth from math learning opportunities? We will assess students’ learning by modeling growth from each additional practice opportunity, and we hypothesize that our measure of reading comprehension will be associated with less growth.

- RQ3: Is the reading performance measure distinct from established measures of student behaviors in math software? To determine whether the reading performance measure is simply capturing other student behaviors, we will assess the relation between our measure of reading comprehension and wheel spinning and gaming. Wheel spinning occurs when a student fails to demonstrate mastery of a skill after a long time, typically indicating that they do not have the skills or support to advance [22]. Gaming occurs when students attempt to take advantage of the design of the software to get the correct answer without thinking carefully or learning, such as exploiting hints or clicking through multiple-choice answers until they find the correct response, and it typically involves making a large number of mistakes in a short amount of time [23]. If reading comprehension is highly correlated with these other measures, it may suggest that our measure of reading comprehension is simply detecting wheel spinning or gaming behaviors, since both can be associated with poor performance and learning. We hypothesize that our measure may be moderately correlated with gaming, as poor reading comprehension could lead to attempts to solve the problems without carefully reading them, but that it will not be strongly correlated with either, suggesting it is also detecting something unique.

2 Methods

2.1 Participants and Design

We analyzed two datasets: a smaller dataset with more detailed step-level data from the math software (Dataset 1) and a larger dataset with less detailed, problem-level data from the same software (Dataset 2). Dataset 1 contained data from 67 students from seven schools, including one public school and six charter schools in an urban area in the United States. Within the participating schools, more than 80% of students identified as racially minoritized and 80% qualified for free or reduced lunch. Students were in 6th, 7th, 8th, or 10th grade. Dataset 2 contained data from 197,139 students enrolled in middle school math across multiple schools across the United States. All students in both datasets used the same adaptive math software.

2.2 Materials and Procedure

Anonymized data were collected from students’ math software use. Our analysis focused on two module types in the software. The first was an introductory module focused on orienting students to the software through brief instructional text and questions about the text. Performance on the tutorial questions was selected as a measure of reading comprehension because it contained no math content; instead, students read about the math software and answered comprehension questions. For example, one page of the tutorial contained the following: “An Explore Tool is an interactive model that lets you explore math ideas on your own. These tools let you see different ways to model your mathematical thinking.” After reading the full text, students saw a list of questions including the following: “I can learn by playing with an Explore Tool, which is a(n) _____ that can help me model mathematical thinking,” with “interactive model” available as one of five multiple-choice answers. There were 24 tutorial questions, and most students completed all of them.

Performance on all other modules was used as a measure of math comprehension. Questions targeted a variety of math concepts depending on students’ grade level and instructors’ choices of assignments. Content across modules was typically presented in the form of math story problems. The number of math problems completed varied by student depending on a number of factors including time spent in the math software and problem type.

3 Results

3.1 Student Performance in Math and Reading Content

First, we examined Dataset 1 students’ performance on the math content and reading (non-math tutorial) content separately, looking at the number of problems completed, accuracy, and hint use. This initial inspection of the data allowed us to determine whether both sources of data (Math and Reading Content) provided adequate individual variation to allow for investigation of the RQs in the following sections.

All data focused on students’ first attempt on a problem. On math problems, students completed an average of 218.9 problems with an average accuracy rate of .54 and an average of 36.4 hint requests on their first attempts (Fig. 1a–c, Fig. 2a). On the reading problems, only four students completed fewer than the full 24 items available. Students had an average accuracy rate of .67 and made an average of only 1.27 hint requests on their first attempts (Fig. 1d–f, Fig. 2c). Two-tailed, paired-sample t-tests indicated that students had significantly higher accuracy rates, t(66) = 4.38, p < .001, and significantly lower hint use, t(66) = 5.55, p < .001, when they were completing the reading content compared to the math content. Overall, these analyses suggest that students’ behavior in both the reading and math portions of the tutor is sufficiently varied, and that the reading content is sufficiently different from the math content, as demonstrated by differences in accuracy and hint behavior.
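As an illustration of the paired-sample comparison reported above, the following minimal sketch computes a paired t statistic from two per-student measures. The function name and the toy values are hypothetical; this is not the authors' analysis code, and it returns only the statistic and degrees of freedom, not a p-value.

```python
import math
from statistics import mean, stdev


def paired_t(x, y):
    """Return (t, df) for a paired-sample t-test on two equal-length sequences.

    x[i] and y[i] must come from the same student (e.g., reading accuracy
    and math accuracy). Raises ZeroDivisionError if all differences are equal.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    # Standard error of the mean difference (sample SD uses n - 1).
    se = stdev(diffs) / math.sqrt(n)
    return mean(diffs) / se, n - 1


# Toy, made-up accuracy rates for three students (not data from the study).
reading_acc = [0.8, 0.7, 0.9]
math_acc = [0.7, 0.5, 0.6]
t_value, df = paired_t(reading_acc, math_acc)
```

With 67 students, the same computation would yield the 66 degrees of freedom reported in the text.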

3.2 Is Reading Performance Related to Math Performance?

First, we assessed whether reading assistance scores predicted math assistance scores (RQ1). Assistance scores represent the level of support a student required to complete a learning opportunity. If the student completed the step correctly the first time, the assistance score would be 0; every hint or incorrect answer until the correct response added one assistance point to the score. Thus, higher scores mean greater assistance to complete the step, whereas lower scores mean lower assistance levels and potentially correct responses on the first try. A linear regression showed that assistance scores on the reading content explained 10% of the variance in assistance scores on the math content, \( r^{2} \) = .10, t(65) = 2.74, p = .008 (Fig. 2a). We also examined the relation between reading assistance and progress, as measured by the number of math problems completed. A linear regression showed that reading assistance explained 6% of the variance in the number of math problems completed, \( r^{2} \) = .06, t(65) = 2.04, p = .046 (Fig. 2b). Finally, to test whether reading assistance would fail to predict something that we did not hypothesize to be dependent on reading comprehension, we assessed the relation between reading assistance and math hint requests. Reading assistance did not explain a significant level of variance in average number of math hint requests on first attempt, \( r^{2} \) = .002, t(65) = −0.34, p = .74 (Fig. 2c).
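The scoring rule described above can be sketched as follows. The event labels ("hint", "incorrect", "correct") and the function name are assumptions made for illustration; the actual log format of the math software is not described in this paper.

```python
def assistance_score(events):
    """Count hints and incorrect answers that occur before the first correct answer.

    `events` is the ordered list of a student's actions on one step, using
    the hypothetical labels "hint", "incorrect", and "correct".
    """
    score = 0
    for event in events:
        if event == "correct":
            break  # the step is complete; later events are ignored
        if event not in ("hint", "incorrect"):
            raise ValueError(f"unknown event label: {event!r}")
        score += 1  # each hint or error adds one assistance point
    return score


# A correct first attempt scores 0; one hint plus one error scores 2.
first_try = assistance_score(["correct"])
hint_then_error = assistance_score(["hint", "incorrect", "correct"])
```

Under this sketch, a student-level reading or math assistance measure would be an aggregate (for example, the mean) of these step-level scores; the aggregation used in the study is not specified here.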

To further understand how performance in the reading tutorial module relates to or constrains later performance during math learning (RQ2), we investigated how well students learned math from each added math practice opportunity using regression models. We investigated student learning using the Additive Factors Model (AFM), which extends item-response theory to include a growth or learning term. In short, AFM predicts, for each assessment opportunity, the probability of correctly answering a problem given the student’s baseline easiness and the number of opportunities with each knowledge component. We compared a baseline of AFM with a version of AFM (AFM-R) that included an added parameter to take into account the student’s performance in the reading tutorial section of the tutor. In essence, this model took into account not only students’ a priori easiness with knowledge components but also a priori easiness with reading materials.
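For readers unfamiliar with AFM, the model is commonly written as a logistic regression of the following form. The equations below are a sketch: the first is the standard AFM formulation from the literature, and the second is one plausible way to add a single reading parameter. The paper does not state the exact parameterization of AFM-R, so the symbol \( \delta \) and the way \( R_i \) enters the model are assumptions.

```latex
% Standard AFM: p_{ij} is the probability that student i answers step j correctly.
%   \theta_i : baseline proficiency of student i
%   q_{jk}   : 1 if step j involves knowledge component k, else 0
%   \beta_k  : easiness of knowledge component k
%   \gamma_k : learning rate for knowledge component k
%   T_{ik}   : number of prior practice opportunities student i has had with k
\[
\ln\frac{p_{ij}}{1 - p_{ij}}
  = \theta_i + \sum_{k} q_{jk}\,\bigl(\beta_k + \gamma_k T_{ik}\bigr)
\]

% AFM-R (assumed form): one added coefficient \delta on the student's
% reading assistance score R_i from the tutorial module.
\[
\ln\frac{p_{ij}}{1 - p_{ij}}
  = \theta_i + \delta R_i + \sum_{k} q_{jk}\,\bigl(\beta_k + \gamma_k T_{ik}\bigr)
\]
```

Under this assumed form, the two models differ by exactly one parameter, which matches the single degree of freedom in the model comparison, and a negative \( \delta \) would mean that students who needed more reading assistance are predicted to be less likely to answer math steps correctly.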

Overall, AFM-R provided a better fit to the data than the baseline AFM, \( \chi^{2} \)(1) = 19.61, p < .001 (see Table 1), suggesting that reading assistance has an impact on math learning. Moreover, consistent with the previous finding, requiring more assistance during the reading portion of the tutor was related to worse math learning, β = −2.55, z = −4.82, p < .001.
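A comparison between nested models of this kind is typically a likelihood-ratio test. The sketch below shows how the test statistic and its p-value relate for one added parameter. The log-likelihood values are made up for illustration, and the function names are hypothetical; only the reported statistic of 19.61 comes from the text.

```python
import math


def lrt_statistic(loglik_base, loglik_full):
    """Likelihood-ratio statistic: 2 * (logLik of fuller model - logLik of base model)."""
    return 2.0 * (loglik_full - loglik_base)


def chi2_sf_df1(x):
    """P(X > x) for a chi-square variable with 1 degree of freedom.

    For 1 df this equals P(|Z| > sqrt(x)) for a standard normal Z,
    which is erfc(sqrt(x / 2)).
    """
    if x < 0:
        raise ValueError("chi-square statistic must be non-negative")
    return math.erfc(math.sqrt(x / 2.0))


# Made-up log-likelihoods, only to show the call pattern.
stat = lrt_statistic(loglik_base=-100.0, loglik_full=-90.0)

# p-value for the statistic reported in the text, with 1 degree of freedom.
p_reported = chi2_sf_df1(19.61)
```

The closed form used here holds only for one degree of freedom; comparisons involving more added parameters would need a general chi-square survival function.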

3.3 Is Reading Accuracy Related to Other Negative Student Behaviors While Learning Math?

Having established that performance in the pre-math reading activities was related to subsequent math performance and learning, we turn our attention to whether reading performance is related to other unproductive student behaviors, specifically wheel spinning and gaming (RQ3). We predicted that poor reading ability might be related to gaming behavior (specifically many errors in a short period of time), but not to wheel spinning (specifically indicators of effort without progress). Students with poor reading comprehension may advance in the tutor without trying, making them likely to display gaming behaviors but not wheel spinning.

For this analysis, we used existing models of wheel spinning [22] and gaming [24, 25] to determine for each knowledge component whether each student’s behavior could be classified as wheel spinning or gaming. We calculated a proportion of wheel spinning and gaming events out of all knowledge components for each student and related that proportion to performance in the reading portion of the tutor. As predicted, we saw that worse reading performance was related to more gaming events, r = .45, p < .001, but not related to wheel spinning behavior, r = .05, p = .69 (see Fig. 3).
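The per-student proportion and its relation to reading performance can be sketched as below. The detectors themselves [22, 24, 25] are not reproduced; the sketch assumes each student already has one boolean flag per knowledge component, and all names and values are hypothetical.

```python
import math


def event_proportion(flags):
    """Share of a student's knowledge components flagged by a detector.

    `flags` holds one boolean per knowledge component for a single student
    (True if the behavior was classified as, e.g., gaming).
    """
    if not flags:
        raise ValueError("student has no knowledge components")
    return sum(1 for flagged in flags if flagged) / len(flags)


def pearson_r(x, y):
    """Pearson correlation between two equal-length numeric sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length samples with at least 2 values")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Made-up detector output for three students (not data from the study).
gaming_flags = [[True, False, False, True], [False, False, False, False], [True, True, True, False]]
gaming_props = [event_proportion(f) for f in gaming_flags]
reading_assistance = [1.0, 0.2, 1.5]  # hypothetical mean reading assistance scores
r_gaming = pearson_r(reading_assistance, gaming_props)
```

The same two functions would be applied separately to the wheel-spinning flags to obtain the second correlation.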

3.4 Does This Relation Scale and Generalize?

To test the generalizability of our results, we investigated whether the relation between reading and math performance that we observed in the small dataset generalized and scaled to a larger sample.

Using Dataset 2, we extracted the same measures of reading and math performance used for our Dataset 1 analyses. Overall, our findings generalized to a larger dataset (see Fig. 4). Students with higher assistance scores during the reading section performed worse (higher assistance scores) in the subsequent math section, r = 0.39, t(197136) = 186.35, p < .001 (Fig. 4a), and completed fewer problems, r = −0.07, t(197136) = −30.433, p < .001 (Fig. 4b). However, contrary to what we saw in the small Dataset 1, we found that students who required greater assistance during the reading portion also requested more hints during the math portion, r = 0.27, t(197136) = 127.03, p < .001 (Fig. 4c).

4 Discussion and Conclusion

4.1 Overview

Educational technology offers opportunities for students to engage in adaptive learning but does not always lead to positive learning gains. When the technology does not consider the whole student, ignoring key factors that influence learning, it often falls short of helping students reach their optimal potential. Racially minoritized students and students situated in poverty are especially susceptible to being left behind. Technologies must do better to go beyond math content and address the other factors that influence performance. In this study, we provide a first look at using a non-math module within a prominent educational technology to identify an important factor that may negatively impact math learning: reading comprehension. In doing so, our goal was to determine if a measure of reading comprehension within a math software predicted math performance in the same software, restricted growth from learning opportunities, and differed from other measures of student behaviors.

Overall, our results regarding reading comprehension and math learning were similar to those found in previous literature: reading accuracy has an impact on math performance [16,17,18,19,20]. Our study expands on this literature, however, by demonstrating that a module within an adaptive educational technology platform can be used to identify students who may need assistance during reading, which, if provided, could support future math learning. Typical software of this nature falls short in identifying potential issues beyond content understanding. Our results show the importance of integrating novel modules that target other non-content aspects of math learning. Moreover, we were able to show that lower reading performance was related to more gaming behavior, but not to wheel spinning behavior. This suggests that students may attempt to take advantage of the system (e.g., exploiting multiple-choice responses) due to difficulties in reading. Finally, we were able to scale up and generalize our findings related to performance from our original dataset (n = 67) to a much larger dataset (n = 197,139). Unfortunately, we did not have detailed (i.e., transaction-level) data for the larger dataset; therefore, we could not attempt to generalize the gaming and wheel spinning findings.

4.2 Contributions

This study contributes to our understanding of how students learn math using educational technology. First, our findings demonstrate a new and potentially fruitful avenue for measuring and responding to non-math factors in student learning. While we focused on a text-heavy, non-math module to measure reading comprehension, we believe a similar approach could detect other non-math factors in student data captured by educational technology. Second, these results contribute to the theoretical understanding of how non-math factors influence math learning. Our findings expand on prior research to demonstrate that poor reading comprehension relates to fewer problems solved, less learning from each new learning opportunity, and more gaming behaviors in online math learning. Third, we have highlighted the benefit of adding a non-math, introductory module to existing math content lessons to help identify potential issues with reading comprehension. Doing so introduces a powerful resource to detect possible reading comprehension issues, which in turn could help designers and developers build and refine tutoring systems that better consider the whole student. Finally, our study helps create a way for tutors, mentors, and teachers (i.e., practitioners) to identify students whose literacy needs may negatively affect their math performance. This practical application addresses a need that is often overlooked in math learning. Moreover, a technological solution to identifying these students frees up the time and resources of busy practitioners who may need assistance in identifying these students on their own.

This is a first step toward supporting practitioners as they help students learn math by addressing the needs of the whole student. Programs like PL2 can create support features (e.g., resources, remediation) for students based on their needs [21]. Supporting reading comprehension as well as students’ motivational and social needs could positively affect educational factors such as attendance [26], enrollment in future math courses [27], and performance on college admissions tests [28], which could in turn help address opportunity gaps for marginalized populations of students.

4.3 Limitations

We argue that the results from the non-math (i.e., reading) module can be attributed to reading comprehension due to the nature of the content. One limitation and idea for future research is the unknown effect of motivated attention on learning math-free content. Future researchers could measure the students’ attention during these sections (e.g., through eye-tracking) to determine how attention factors into the results. Given that there were no available student performance data outside the math software, we could not validate our measures of reading and math performance with other measures; future work would benefit from comparing these within-software measures to other reading comprehension assessments and measures of math knowledge and growth. Due to the lack of transaction data in the larger dataset, we were unable to generalize our findings on gaming and wheel spinning. Nevertheless, we were still able to scale up our findings related to reading and performance. Future research could examine more comprehensive datasets as well as other educational technology software to assess the scalability and generalizability of these measures and results.

4.4 Next Steps

Future research should focus on adding modules into new and existing tutor systems and using them as detectors for students who may need help with reading comprehension. Once students have been identified, researchers and practitioners need to develop support to address issues with reading comprehension. Researchers could then test the effectiveness of the support and develop formulas for both detecting and improving reading comprehension in adaptive math software, similar to the way such technologies currently detect and support math knowledge. Similarly, research can focus on the specific pieces of word problems (e.g., length, individual word difficulty) that are most detrimental to student performance. Using this information, designers and developers can provide more support when students encounter these problems or structure modules to reduce these issues (e.g., reduce wordiness). Researchers could also experiment with the effectiveness of flagging students based on their performance and hint use in the non-math modules to determine if awareness of additional literacy needs can improve later math performance. Finally, more research should take this learning analytics approach to identify additional detectors to address the needs of the whole student. There are many other important learning factors that can affect math performance (e.g., utility value, technological literacy), and building detectors for these factors increases the likelihood of addressing challenges and improving math learning.

4.5 Conclusion

In this study, we were able to connect reading comprehension to math learning, measuring both constructs within an adaptive math software. This type of analysis will improve our understanding of how math learning interacts with non-math factors and individual differences among students. Building a better theory of how math and non-math factors predict learning will help to clarify the best forms of support for student learning and provide a roadmap for developing tutoring systems that consider the whole student when creating adaptation.

References

1. Koedinger, K.R., Anderson, J.R., Hadley, W.H., Mark, M.A.: Intelligent tutoring goes to school in the big city. IJAIED 8, 30–43 (1997)

2. Pane, J.F., Griffin, B.A., McCaffrey, D.F., Karam, R.: Effectiveness of cognitive tutor algebra I at scale. Educ. Eval. Policy Anal. 36(2), 127–144 (2014)

3. Aleven, V., McLaughlin, E.A., Glenn, R.A., Koedinger, K.R.: Instruction based on adaptive learning technologies. In: Handbook of Research on Learning and Instruction, pp. 522–560. Routledge (2016)

4. Koedinger, K.R., Brunskill, E., Baker, R.S.J.d., McLaughlin, E.A., Stamper, J.: New potentials for data-driven intelligent tutoring system development and optimization. AI Mag. 34(3), 27–41 (2013)

5. Lovett, M., Meyer, O., Thille, C.: The open learning initiative: measuring the effectiveness of the OLI learning course in accelerating student learning. J. Interact. Media Educ. 2008, 1 (2008)

6. Ritter, S., Anderson, J.R., Koedinger, K.R., Corbett, A.: Cognitive tutor: applied research in mathematics education. Psychon. Bull. Rev. 14(2), 249–255 (2007)

7. Calderon, V.J., Carlson, M.: Educators agree on the value of Ed Tech. Gallup Education (2019). https://www.gallup.com/education/266564/educators-agree-value-tech.aspx. Accessed 02 Mar 2020

8. Carnevale, A.P.: Education = success: empowering Hispanic youth and adults. Educational Testing Service and the Hispanic Association of Colleges and Universities. Washington, DC (1999)

9. Lee, J.: Racial and ethnic achievement gap trends: reversing the progress toward equity? Educ. Res. 31(1), 3–12 (2002)

10. Autor, D.H.: Skills, education, and the rise of earnings inequality among the “other 99 percent”. Science 344(6186), 843–851 (2014)

11. Davis, J.E.: Early schooling and academic achievement of African American males. Urban Educ. 38(5), 515–537 (2003)

12. Milner, H.R.: Beyond a test score: explaining opportunity gaps in educational practice. J. Black Stud. 43(6), 693–718 (2012)

13. Setren, E.M.: Essays on the economics of education. Ph.D. dissertation. Massachusetts Institute of Technology, Cambridge, MA (2017)

14. Guryan, J., et al.: The effect of mentoring on school attendance and academic outcomes: a randomized evaluation of the Check & Connect Program. Northwestern University Institute for Policy Research Working Paper No. 16-18. Northwestern University, Evanston, IL (2017)

15. Cook, P.J., et al.: Not too late: improving academic outcomes for disadvantaged youth. Northwestern University Institute for Policy Research Working Paper No. 15-01. Northwestern University, Evanston, IL (2015)

16. Fuentes, P.: Reading comprehension in mathematics. Clearing House 72(2), 81–88 (1998)

17. Vilenius-Tuohimaa, P.M., Aunola, K., Nurmi, J.E.: The association between mathematical word problems and reading comprehension. Educ. Psychol. 28(4), 409–426 (2008)

18. Österholm, M.: Characterizing reading comprehension of mathematical texts. Educ. Stud. Math. 63(3), 325–346 (2006)

19. Koedinger, K.R., Nathan, M.J.: The real story behind story problems: effects of representations on quantitative reasoning. J. Learn. Sci. 13(2), 129–164 (2004)

20. Grimm, K.J.: Longitudinal associations between reading and mathematics achievement. Dev. Neuropsychol. 33(3), 410–426 (2008)

21. Lobczowski, N.G., et al.: Building strategies for a personalized learning mentor app: a design case. Poster Accepted for the 2020 Annual Meeting of the American Education Research Association (AERA), San Francisco (2020)

22. Beck, J.E., Gong, Y.: Wheel-spinning: students who fail to master a skill. In: Lane, H.C., Yacef, K., Mostow, J., Pavlik, P. (eds.) AIED 2013. LNCS (LNAI), vol. 7926, pp. 431–440. Springer, Heidelberg (2013). https://doi.org/10.1007/978-3-642-39112-5_44

23. Baker, R., Walonoski, J., Heffernan, N., Roll, I., Corbett, A., Koedinger, K.: Why students engage in “gaming the system” behavior in interactive learning environments. J. Interact. Learn. Res. 19(2), 185–224 (2008)

24. Paquette, L., Baker, R.S., Moskal, M.: A system-general model for the detection of gaming the system behavior in CTAT and LearnSphere. In: Penstein Rosé, C., et al. (eds.) AIED 2018. LNCS (LNAI), vol. 10948, pp. 257–260. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-93846-2_47

25. Holstein, K., Yu, Z., Sewall, J., Popescu, O., McLaren, B.M., Aleven, V.: Opening up an intelligent tutoring system development environment for extensible student modeling. In: Penstein Rosé, C., et al. (eds.) AIED 2018. LNCS (LNAI), vol. 10947, pp. 169–183. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-93843-1_13

26. Rogers, T., Feller, A.: Reducing student absences at scale by targeting parents’ misbeliefs. Nat. Hum. Behav. 2, 335–342 (2018)

27. Harackiewicz, J.M., Rozek, C.S., Hulleman, C.S., Hyde, J.S.: Helping parents to motivate adolescents in mathematics and science: an experimental test of a utility-value intervention. Psychol. Sci. 23(8), 899–906 (2012)

28. Rozek, C.S., Svoboda, R.C., Harackiewicz, J.M., Hulleman, C.S., Hyde, J.S.: Utility-value intervention with parents increases students’ STEM preparation and career pursuit. PNAS 114(5), 909–914 (2017)

Acknowledgements

This work is supported by the Chan Zuckerberg Initiative (CZI) Grant # 2018-193694. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the Chan Zuckerberg Initiative.

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Richey, J.E., Lobczowski, N.G., Carvalho, P.F., Koedinger, K. (2020). Comprehensive Views of Math Learners: A Case for Modeling and Supporting Non-math Factors in Adaptive Math Software. In: Bittencourt, I., Cukurova, M., Muldner, K., Luckin, R., Millán, E. (eds) Artificial Intelligence in Education. AIED 2020. Lecture Notes in Computer Science, vol 12163. Springer, Cham. https://doi.org/10.1007/978-3-030-52237-7_37

DOI: https://doi.org/10.1007/978-3-030-52237-7_37

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-52236-0

Online ISBN: 978-3-030-52237-7

eBook Packages: Computer Science, Computer Science (R0)