Abstract

Recently, Gouveia, Thomas and the authors introduced the slack realization space, a new model for the realization space of a polytope. It represents each polytope by its slack matrix, the matrix obtained by evaluating each facet inequality at each vertex. Unlike the classical model, the slack model naturally mods out projective transformations. It is inherently algebraic, arising as the positive part of the variety of a saturated determinantal ideal, and provides a new computational tool to study classical realizability problems for polytopes. We introduce the package SlackIdeals for Macaulay2, which provides methods for creating and manipulating slack matrices and slack ideals of convex polytopes and matroids. Slack ideals are often difficult to compute. To improve the power of the slack model, we develop two strategies to simplify computations: we scale as many entries of the slack matrix as possible to one; we then obtain a reduced slack model by combining the slack variety with the more compact Grassmannian realization space model. This allows us to study slack ideals that were previously out of computational reach. As applications, we show that the well-known Perles polytope does not admit rational realizations and prove the non-realizability of a large simplicial sphere.

A. Macchia—Supported by the Einstein Foundation Berlin under Francisco Santos grant EVF-2015-230.


1 Introduction

Slack matrices of polytopes are nonnegative real matrices whose entries express the slack of a vertex in a facet inequality. In particular, the zero pattern of a slack matrix encodes the vertex-facet incidence structure of the polytope. Slack matrices have found remarkable use in the theory of extended formulations of polytopes: Yannakakis [10] proved that the extension complexity of a polytope is equal to the nonnegative rank of its slack matrix.

More generally, one can define the slack matrix of a matroid by computing the slacks of the ground set vectors in the hyperplanes of the matroid.

If P is a d-dimensional polytope, replacing all positive entries in the slack matrix with distinct variables, one obtains a new sparse generic matrix \(S_P(\varvec{x})\), called the symbolic slack matrix of P. Then we define the slack ideal \(I_P\) of P as the ideal of all \((d+2)\)-minors of \(S_P(\varvec{x})\), saturated with respect to the product of all variables in \(S_P(\varvec{x})\).

Slack ideals were introduced for polytopes in [5], where it was also noted that they could be used to model the realization space of a polytope. The details of this realization space model and further properties of the slack ideal were studied in [2, 3] and [4]. An analogous realization space model for matroids was introduced in [1].

In this paper, we describe the Macaulay2 [6] package SlackIdeals.m2, which is available at https://bitbucket.org/macchia/slackideals/src/master/SlackIdeals.m2. It provides methods to define and manipulate slack matrices of polytopes, matroids, polyhedra, and cones; obtain a slack matrix directly from the Gale transform of a polytope; compute the symbolic slack matrix and the slack ideal from a slack matrix; and compute the graphic ideal of a polytope, as well as the cycle ideal and the universal ideal of a matroid.

Slack ideal computations are often out of computational reach. Therefore we develop two techniques to speed up and simplify computations. First, we suitably set to one as many entries of the slack matrix as possible. One can compute the slack ideal of this dehomogenized slack matrix and then rehomogenize the resulting ideal (see Proposition 1). The new ideal coincides with the original slack ideal if the latter is radical. Second, we obtain a reduced slack matrix by keeping the columns of a set of facets F that contains a flag (a maximal chain in the face lattice of P) and such that the facets not in F are simplicial. Combining these two strategies, we have a powerful tool for the study of hard realizability questions. As applications, we show that the well-known Perles polytope does not admit rational realizations and prove the non-realizability of a large simplicial sphere.

2 Slack Matrices and Slack Ideals

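Given a collection of points \(V = \{\varvec{v}_1,\ldots , \varvec{v}_n\}\subset \mathbb {R}^d\) and a collection of (affine) hyperplanes \(H = \{\{\varvec{x}\in \mathbb {R}^d: b_i-\varvec{\alpha }_i^\top \varvec{x}= 0\} : i=1,\ldots ,f\}\) we can define a slack matrix of the pair (V, H) by \(S_{(V,H)} = \bigl [\, b_i-\varvec{\alpha }_i^\top \varvec{v}_j \,\bigr ]_{j=1,\ldots ,n;\ i=1,\ldots ,f} \in \mathbb {R}^{n\times f}\); that is, the entry in row j and column i is the slack of the point \(\varvec{v}_j\) in the i-th hyperplane.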

If P is a d-polytope, we take \(V = \text {vert}(P)\) and H to be the set of facet defining hyperplanes. Then \(S_P = S_{(V,H)}\). When coordinates V are given for the vectors of a matroid M, they are always assumed to be an affine configuration which gets homogenized to form the matroid; in particular, this means that if \(V = \text {vert}(P)\), then the associated matroid is the matroid of the polytope P. The hyperplanes are taken to be all hyperplanes of M, and then \(S_M = S_{(V,H)}\).
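As an illustration of the definition (a plain Python sketch, not the package's slackMatrix command), the following computes the matrix of slacks \(b_i-\varvec{\alpha }_i^\top \varvec{v}_j\) for the unit square. The function name `slack_matrix` and the encoding of each hyperplane as a pair `(b, alpha)` are assumptions made only for this example.

```python
from fractions import Fraction

def slack_matrix(points, hyperplanes):
    """Return the matrix whose (j, i) entry is b_i - alpha_i . v_j.

    points      -- list of points v_j, each a sequence of d numbers
    hyperplanes -- list of pairs (b_i, alpha_i) describing b_i - alpha_i . x = 0
    """
    return [
        [Fraction(b) - sum(Fraction(a) * Fraction(x) for a, x in zip(alpha, v))
         for (b, alpha) in hyperplanes]
        for v in points
    ]

# Unit square: vertices and facet inequalities written as b - alpha . x >= 0.
square_vertices = [(0, 0), (1, 0), (1, 1), (0, 1)]
square_facets = [
    (0, (-1, 0)),  # x >= 0
    (0, (0, -1)),  # y >= 0
    (1, (1, 0)),   # x <= 1
    (1, (0, 1)),   # y <= 1
]

S = slack_matrix(square_vertices, square_facets)
for row in S:
    print([int(entry) for entry in row])
```

Each row has exactly two zeros, reflecting that every vertex of the square lies on two facets; exact rational arithmetic is used so that the zero pattern is not affected by rounding.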

The slackMatrix command also takes a pre-computed matroid, polyhedron or cone object as input.

Another way to compute the slack matrix of a polytope is from its Gale transform using the command slackFromGaleCircuits. Let G be a matrix with real entries whose columns are the vectors of a Gale transform of a polytope P. A slack matrix of P is computed by finding the minimal positive circuits of G, see [7, Section 5.4]. Alternatively, the command slackFromGalePlucker applies the maps of [4, Section 5] to fill a slack matrix with Plücker coordinates of the Gale transform.

The slack matrices of a few specific polytopes and matroids of theoretical importance are built-in, using the command specificSlackMatrix.

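The symbolic slack matrix \(S_{(V,H)}(\varvec{x})\) can be obtained by replacing the nonzero entries of a slack matrix by distinct variables; that is, an entry of \(S_{(V,H)}(\varvec{x})\) is 0 if the corresponding entry of \(S_{(V,H)}\) is 0, and is a variable of its own otherwise.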

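From this sparse generic matrix we obtain the slack ideal as the saturation of the ideal of its \((d+2)\)-minors by the product of all variables in \(S_{(V,H)}(\varvec{x})\): \(I_{(V,H)} = \langle (d+2)\text {-minors of } S_{(V,H)}(\varvec{x}) \rangle : \bigl (\prod _{i} x_i\bigr )^\infty \).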

Given a (symbolic) slack matrix of a d-polytope, \((d+1)\)-dimensional cone, or rank \(d+1\) matroid, we can compute the associated slack ideal, specifying d as an input. Unless we pass variable names as an option, the function labels the variables consecutively by rows with a single index starting from 1:
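The row-by-row labeling convention can be sketched in plain Python as follows. This is only an illustration of the labeling, not the package's implementation; the function name `symbolic_slack_matrix`, the string encoding of the variables, and the chosen ordering of the vertices and facets of the triangular prism are assumptions of this example.

```python
def symbolic_slack_matrix(S):
    """Replace nonzero entries by labels x1, x2, ... read row by row; zeros stay "0"."""
    symbolic, counter = [], 0
    for row in S:
        new_row = []
        for entry in row:
            if entry != 0:
                counter += 1
                new_row.append(f"x{counter}")
            else:
                new_row.append("0")
        symbolic.append(new_row)
    return symbolic

# Support pattern of a slack matrix of the triangular prism (1 marks a nonzero slack).
# Assumed ordering: rows a1, a2, a3 (bottom triangle), b1, b2, b3 (top triangle);
# columns bottom, top, and the quadrilaterals through {1,2}, {2,3}, {1,3}.
prism_support = [
    [0, 1, 0, 1, 0],
    [0, 1, 0, 0, 1],
    [0, 1, 1, 0, 0],
    [1, 0, 0, 1, 0],
    [1, 0, 0, 0, 1],
    [1, 0, 1, 0, 0],
]

X = symbolic_slack_matrix(prism_support)
for row in X:
    print("  ".join(f"{entry:>3}" for entry in row))
```

With this ordering the prism has 12 variables, two per row, since each vertex of a simple 3-polytope lies on all but two of the five facets.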

We get the same result if we compute slackIdeal(2,V), giving only the list of vertices of a d-polytope or ground set vectors of a matroid instead of a slack matrix. We also get the same result with slackIdeal(V), but the computation is faster if d is provided as an argument. As an optional argument, one can choose the object to be set as "polytope", "cone", or "matroid" (default is Object=>"polytope").

To a polytope or matroid we can also associate a specific toric ideal, known as the graphic or cycle ideal, respectively. These ideals are important in the classification of certain projectively unique polytopes [3] and matroids [1], and can be computed using the commands graphicIdeal and cycleIdeal.

In [4, Section 4] it is shown that a slack matrix can be filled with Plücker coordinates of a matrix formed from the vertex coordinates of a polytope (or extreme ray generators of a cone or ground set vectors of a matroid). This idea is the basis for the reduction technique described in [4, Section 6] and Sect. 4. The Grassmannian section ideal of a polytope is also defined and shown to cut out exactly a set of representatives of the slack variety that are constructed in this way [4, Section 4.1]. The command grassmannSectionIdeal computes this section ideal given a set of vertices of a polytope and the indices of vertices that span each facet.

3 On the Dehomogenization of the Slack Ideal

Let P be a polytope and \(S_P\) its slack matrix. We define the non-incidence graph \(G_P\) as the bipartite graph whose vertices are the vertices and facets of P, and whose edges are the vertex-facet pairs of P such that the vertex is not on the facet. This graphic structure provides a systematic way to scale a maximal number of entries in \(S_P\) to 1, as spelled out in [3, Lemma 5.2]. In particular, we may scale the rows and columns of \(S_P(\varvec{x})\) so that it has ones in the entries indexed by the edges in a maximal spanning forest of the graph \(G_P\). This can be done using setOnesForest, which outputs a sequence (Y, F) where Y is the scaled symbolic slack matrix and F is the spanning forest used to scale Y.
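To make the role of the spanning forest concrete, here is a small Python sketch that builds one spanning forest of the non-incidence graph from the support of a slack matrix. It is not the package's setOnesForest: the function name `spanning_forest`, the greedy row-by-row edge order, and the ordering of the prism's vertices and facets are assumptions, so the forest found here may differ from the one the package selects.

```python
def spanning_forest(support):
    """Greedy spanning forest of the bipartite non-incidence graph.

    Nodes are the rows (vertices) and columns (facets); (i, j) is an edge
    whenever support[i][j] is nonzero. Edges are scanned row by row and kept
    when they join two different components (union-find).
    """
    n_rows, n_cols = len(support), len(support[0])
    parent = list(range(n_rows + n_cols))  # row nodes first, then column nodes

    def find(a):
        while parent[a] != a:
            parent[a] = parent[parent[a]]  # path halving
            a = parent[a]
        return a

    forest = []
    for i in range(n_rows):
        for j in range(n_cols):
            if support[i][j] != 0:
                root_row, root_col = find(i), find(n_rows + j)
                if root_row != root_col:
                    parent[root_row] = root_col
                    forest.append((i, j))
    return forest

# Support of a slack matrix of the triangular prism (assumed ordering:
# rows a1, a2, a3, b1, b2, b3; columns bottom, top, quads {1,2}, {2,3}, {1,3}).
prism_support = [
    [0, 1, 0, 1, 0],
    [0, 1, 0, 0, 1],
    [0, 1, 1, 0, 0],
    [1, 0, 0, 1, 0],
    [1, 0, 0, 0, 1],
    [1, 0, 1, 0, 0],
]

forest = spanning_forest(prism_support)
nonzero = [(i, j) for i, row in enumerate(prism_support)
           for j, entry in enumerate(row) if entry != 0]
free_entries = [edge for edge in nonzero if edge not in forest]
print(len(forest), free_entries)
```

For the prism the graph is connected on 6 + 5 = 11 nodes, so any spanning forest is a tree with 10 edges, and 12 - 10 = 2 entries remain as variables after the forest entries are scaled to 1.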

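This leads to a dehomogenized version of the slack ideal defined as follows. Given \(S_P\) and a maximal spanning forest F of \(G_P\), let \(S_P(\varvec{x}^F)\) be the symbolic slack matrix of P with all the variables corresponding to edges in F set to 1. Then the dehomogenized ideal, \(I_P^F\), is the slack ideal of this scaled slack matrix: \(I_P^F = \langle (d+2)\text {-minors of } S_P(\varvec{x}^F) \rangle : \bigl (\prod _{i} x_i\bigr )^\infty \), where the product runs over the variables remaining in \(S_P(\varvec{x}^F)\).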

It is natural to ask what is the relation between \(I_P^F\) and the original slack ideal \(I_P\). In particular, we might wish to know if we can recover the full slack ideal from \(I_P^F\). From [3, Lemma 5.2] we know that any slack matrix in \(\mathcal V(I_P)\) (or, in fact, any point in the slack variety with all coordinates that correspond to F being nonzero) can be scaled to a matrix in \(\mathcal V(I^F_P)\). Conversely, it is clear that any point in \(\mathcal V(I^F_P)\) can be thought of as a point in \(\mathcal V(I_P)\). Thus, in terms of the varieties we have \(\mathcal {V}(I_P)^*/(\mathbb {R}^v\times \mathbb {R}^f) \cong \mathcal {V}(I_P^F)^*,\) where \(\mathcal {V}(I)^*\) denotes the part of the variety where all coordinates are nonzero.

To see the algebraic implications of this, let us introduce the following rehomogenization process. Notice that in the proof of [3, Lemma 5.2], we dehomogenize by following the edges of forest F starting from some chosen root(s) and moving toward the leaves. The destination vertex of each edge tells us which row or column to scale, and the edge label is the variable by which we scale. Now, given a polynomial in \(I_P^F\), using the same forest and orientation we proceed in the reverse order: starting at the leaves, for each edge of the forest, we reintroduce the variable corresponding to it in order to rehomogenize the polynomial with respect to the row or column corresponding to the destination vertex of that edge.

Example 1

Consider the slack matrix \(S_P(\varvec{x}^F)\) of the triangular prism P scaled according to forest F, pictured in Fig. 1. Then \(I_P^F = \langle x_8-1,x_{12}-1\rangle \). So we can rehomogenize, for example, the element \(x_8-x_{12}\) with respect to forest F as follows.

First, consider the leaf corresponding to column 3.

Its edge is labeled with \(x_6\), so we reintroduce that variable to the monomial \(x_{12}\) since its degree in column 3 is currently 0, while the degree of \(x_8\) in that column is 1. We continue this process until all the edges of F have been used.

Call the resulting ideal \(H({I}_P^F)\). By the tree structure, the rehomogenization process does indeed end with a polynomial that is homogeneous, as once we make it homogeneous for a row or column we never add variables in that row or column again. We now consider the effect of this rehomogenization on minors.

Lemma 1

Let p be a minor of \(S_P(\varvec{x})\) and \(p^F\) its dehomogenization by F. Then its rehomogenization \(H(p^F)\) equals p divided by the product of all variables in F that divide p.

Proof

Note that all monomials in a minor have degree precisely one on every relevant row and column. In fact they can be interpreted as perfect matchings on the subgraph of \(G_P\) corresponding to the \((d+2) \times (d+2)\) submatrix being considered. Let \(\varvec{x}^{\varvec{a}}\) and \(\varvec{x}^{\varvec{b}}\) be two distinct monomials in the minor, then their dehomogenizations are also distinct. To see this, note that if we interpret \(\varvec{a}\) and \(\varvec{b}\) as matchings, a common dehomogenization would be a common submatching \(\varvec{c}\) of both, with all the remaining edges being in F. But \({\varvec{a}} \setminus \varvec{c}\) and \(\varvec{b}\setminus \varvec{c}\) would then be distinct matchings on the same set of variables, hence their union contains a cycle, so they would not be both contained in the forest F.

Now note that when rehomogenizing a minor, we start with all degrees being zero or one for every row and column, and since we visit each node (corresponding to each of the rows/columns) exactly once by the tree structure, the degree of every row and column is at most one after homogenizing. In the first step of rehomogenizing, we start with a leaf of F, which means the variable \(x_i\) labeling its edge is the only variable in the row or column corresponding to that leaf which was set to 1. Thus if any monomial of the minor has degree zero on that row or column, it must be because \(x_i\) occurred in that monomial in the original minor.

Hence rehomogenizing will just add that variable to the monomials where it was originally present, with the exception of the case where it was present on all monomials, in which case there will be no need to add it, as the dehomogenized polynomial would be homogeneous (of degree 0) for that particular row/column.

All degrees remain 0 or 1 after this process, and now the node incident to the leaf we just rehomogenized corresponds to a row/column with exactly one variable that is still dehomogenized. Thus we can repeat the argument on the entire forest to find that each monomial rehomogenizes to itself divided by the variables that were originally present in all monomials of the minor.

Remark 1

It is important to note that \(H(I_P^F)\) is the ideal of all elements of \(I_P^F\) rehomogenized. In general, this is different from the ideal generated by the rehomogenized generators of \(I_P^F\). In the package, we rehomogenize the whole ideal by rehomogenizing the generators and saturating the resulting ideal by all the variables we just homogenized by.

For example, let V be the set of vertices of the triangular prism with spanning forest Y as computed before, and let us compute the rehomogenized ideal \(H(I_P^F)\).

Notice that, in this case, the rehomogenized ideal \(H(I_P^F)\) equals the slack ideal \(I_P\).

Example 2

Recall that the generators of \(I_P^F\) for the triangular prism were \(x_8-1\) and \(x_{12}-1\), which rehomogenize to \(x_2x_3x_5x_8-x_1x_4x_6x_7\) and \(x_2x_3x_9x_{12}-x_1x_4x_{10}x_{11}\), respectively. However,

The relation between the rehomogenized ideal \(H(I_P^F)\) and the original slack ideal is given in the following proposition. The proof relies on the key fact that the variety of the rehomogenized ideal is still the same as the slack variety that we started with.

Proposition 1

Given a spanning forest F for the non-incidence graph of polytope P, the rehomogenization of its scaled slack ideal is an intermediate ideal between the slack ideal and its radical: \(I_P \subseteq H(I_P^F) \subseteq \sqrt{I_P}\).

Proof

To prove the inclusion \(I_P \subseteq H(I_P^F)\), note that \(p \in I_P\) happens if and only if \(\varvec{x}^{\varvec{a}} p \in J\) for some exponent vector \(\varvec{a}\), where J is the ideal generated by all \((d+2)\)-minors of the symbolic slack matrix of P. Dehomogenizing we get \(\varvec{x}^{\varvec{b}} p^F \in J^F\), which means \(p^F\) is in the saturation of \(J^F\) by the product of all variables, which is precisely the definition of \(I_P^F\). From Lemma 1 it follows that \(p\in H(I_P^F)\).

To prove that \(H(I_P^F) \subseteq \sqrt{I_P}\), it is enough to show that any polynomial in \(H(I_P^F)\) vanishes on the slack variety. By construction, any such polynomial must vanish on the points of the slack variety where the variables corresponding to the forest F are nonzero, \(\mathcal {V}(I_P)\backslash \mathcal {V}(\langle \varvec{x}^F\rangle )\). Thus, it vanishes on the Zariski closure of that set. Considering the containments \(\mathcal {V}(I_P)^* \subseteq \mathcal {V}(I_P)\backslash \mathcal {V}(\langle \varvec{x}^F\rangle ) \subseteq \mathcal {V}(I_P)\), we get that this closure is exactly the slack variety, since \(\overline{\mathcal {V}(I_P)^*} = \mathcal {V}(I_P)\) because \(I_P\) is saturated with respect to the product of all variables.

Remark 2

One would like to say that \(I_P = H(I_P^F)\), and so far we have no counterexample for this equality, since it always holds if \(I_P\) is radical, and we also have no examples of non-radical slack ideals.

4 Reduced Slack Matrices

In general, computing the slack ideal may take a long time or be infeasible, especially if the dimension of the polytope is small compared to its number of vertices and facets. In some cases we can speed up this computation by combining the slack and the Grassmannian realization space models [4, Section 6]. In fact, we do not need to work with the full slack matrix, since the essential information is contained in a sufficiently large submatrix.

We will see in Examples 3 and 4 that slack ideals which we were not even able to compute (using personal computers) can now be computed in a matter of a few seconds. To give an estimate of the improvement, computing the slack ideal of the full slack matrix in Example 3 requires the computation of about \(8.6 \cdot 10^9\) minors, whereas the reduced slack ideal only requires the computation of about \(1.9 \cdot 10^4\) minors.
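These estimates can be checked by counting square submatrices. The sketch below assumes the sizes stated in Example 3 (an 8-polytope whose full slack matrix is \(12 \times 34\) and whose reduced slack matrix keeps 13 columns) and counts all \((d+2)\)-minors, including those that vanish identically; the helper name `number_of_minors` is introduced only for this illustration.

```python
from math import comb

def number_of_minors(rows, cols, k):
    """Number of k x k submatrices of a rows x cols matrix."""
    return comb(rows, k) * comb(cols, k)

d = 8  # dimension of the polytope in Example 3
full = number_of_minors(12, 34, d + 2)     # full slack matrix, 12 x 34
reduced = number_of_minors(12, 13, d + 2)  # reduced slack matrix, 12 x 13
print(full, reduced, full // reduced)
```

Dropping the 21 simplicial facet columns therefore shrinks the number of minors by more than five orders of magnitude.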

More precisely, let P be a realizable polytope and F be a set of facets of P such that F contains a set of facets that can be intersected to form a flag in the face lattice of P and all facets of P not in F are simplicial. We call a reduced slack matrix for P the submatrix, \(S_F\), of \(S_P\) consisting of only the columns indexed by F. Set \(\mathcal {V}_F\) to be the nonzero part of the slack variety \(\mathcal {V}(I_F)\).

If \(\overline{\mathcal {V}_F}\) is irreducible, then \(\mathcal {V}_F \times \mathbb C^h\) and \(\mathcal {V}(I_P)^*\) are birationally equivalent, where h denotes the number of facets of P outside F [4, Proposition 6.9].

Example 3

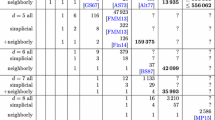

Let P be the Perles projectively unique polytope with no rational realization coming from the point configuration in [7, Figure 5.5.1, p. 93]. This is an 8-polytope with 12 vertices and 34 facets, and its symbolic slack matrix \(S_P(\varvec{x})\) is a \(12 \times 34\) matrix with 120 variables.

Let \(S_F\) be the following submatrix of \(S_P\) whose 13 columns correspond to all the nonsimplicial facets of P:

The associated symbolic slack matrix is:

Using [3, Lemma 5.2], we first set \(x_i=1\) for \(i = 1,4,5,6,7,8,9,10,13,15,16,17,18,21,22,26,27,28,29,30,31,32,33,35\). The resulting scaled reduced slack ideal is:

It follows that \(x_{36}=\frac{-1 \pm \sqrt{5}}{2}\). These are the two roots of \(x^2+x-1\), and both are irrational since 5 is not a perfect square. Hence, P does not admit rational realizations.

Example 4

Let P be the following 3-dimensional simplicial sphere, constructed by Jockusch [8] and studied by Novik and Zheng [9], with 12 vertices labeled by \(1,2,\dots ,6\) and \(-1,-2,\dots ,-6\), and with the following 48 facets:

The remaining 24 facets are antipodes of the above ones, i.e., they are of the form \(\{-x,-y,-z,-t\}\) for each \(\{x,y,z,t\}\) from the above list.

This sphere, denoted by \(\varDelta ^{3,2}_6\) in [9], is centrally symmetric, and is not realizable as the boundary complex of a centrally symmetric polytope. However, it is not known whether it is realizable as a (non-centrally symmetric) polytope [9, Problem 6.1].

The symbolic slack matrix \(S_P(\varvec{x})\) is a \(12 \times 48\) matrix with 384 variables. A reduced slack matrix (where facets 1, 3, 4, 5, 7 form a flag) is the matrix \(S_F(\varvec{x})\) below, where vertices 1, 3, 5, 6, 9 form a flag of \(S_F(\varvec{x})^\top \). Since row 10 of \(S_F(\varvec{x})\) contains four zeros, we can further reduce the above matrix and scale some of the entries according to [3, Lemma 5.2], obtaining the matrix \(S_G(\varvec{x})\).

We then reconstruct row 10 by applying the map \(\mathbf {GrV}\) defined in [4, Section 4.1] to \(S_G(\varvec{x})^\top \):

The above error means that in reconstructing row 10, we get more than four zero entries. Computing explicitly the map \(\mathbf {GrV}\), we can see that five entries are zero. This shows that P is not realizable as a polytope.

The previous example shows that the reduction process can be a powerful tool to show nonrealizability of large simplicial spheres.

References

1. Brandt, M., Wiebe, A.: The slack realization space of a matroid. Algebr. Comb. 2(4), 663–681 (2019)

2. Gouveia, J., Macchia, A., Thomas, R., Wiebe, A.: The slack realization space of a polytope. SIAM J. Discrete Math. 33(3), 1637–1653 (2019)

3. Gouveia, J., Macchia, A., Thomas, R., Wiebe, A.: Projectively unique polytopes and toric slack ideals. J. Pure Appl. Algebra 224(5), 14 (2020)

4. Gouveia, J., Macchia, A., Wiebe, A.: Combining realization space models of polytopes (2020). Preprint https://arxiv.org/abs/2001.11999

5. Gouveia, J., Pashkovich, K., Robinson, R., Thomas, R.: Four-dimensional polytopes of minimum positive semidefinite rank. J. Comb. Theory Ser. A 145, 184–226 (2017)

6. Grayson, D., Stillman, M.: Macaulay 2, a software system for research in algebraic geometry. http://www.math.uiuc.edu/Macaulay2/

7. Grünbaum, B.: Convex Polytopes. Graduate Texts in Mathematics, vol. 221, 2nd edn. Springer, New York (2003). https://doi.org/10.1007/978-1-4613-0019-9

8. Jockusch, W.: An infinite family of nearly neighborly centrally symmetric 3-spheres. J. Comb. Theory Ser. A 72(2), 318–321 (1995)

9. Novik, I., Zheng, H.: Highly neighborly centrally symmetric spheres (2019). Preprint https://arxiv.org/abs/1907.06115

10. Yannakakis, M.: Expressing combinatorial optimization problems by linear programs. J. Comput. Syst. Sci. 43(3), 441–466 (1991)


© 2020 Springer Nature Switzerland AG


Macchia, A., Wiebe, A. (2020). Slack Ideals in Macaulay2. In: Bigatti, A., Carette, J., Davenport, J., Joswig, M., de Wolff, T. (eds) Mathematical Software – ICMS 2020. ICMS 2020. Lecture Notes in Computer Science(), vol 12097. Springer, Cham. https://doi.org/10.1007/978-3-030-52200-1_22


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-52199-8

Online ISBN: 978-3-030-52200-1
