Abstract

A language independent deep learning (DL) architecture for machine translation (MT) evaluation is presented. This DL architecture aims at the best choice between two MT (S1, S2) outputs, based on the reference translation (Sr) and the annotation score. The outputs were generated from a statistical machine translation (SMT) system and a neural machine translation (NMT) system. The model applied in two language pairs: English - Greek (EN-EL) and English - Italian (EN-IT). In this paper, a variety of experiments with different parameter configurations is presented. Moreover, linguistic features, embeddings representation and natural language processing (NLP) metrics (BLEU, METEOR, TER, WER) were tested. The best score was achieved when the proposed model used source segments (SSE) information and the NLP metrics set. Classification accuracy has increased up to 5% (compared to previous related work) and reached quite satisfactory results for the Kendall τ score.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

- Machine learning

- Machine translation evaluation

- Deep learning

- Neural network architecture

- Pairwise classification

1 Introduction

Deep neural networks are demonstrating a large impact on NLP. NMT [2, 14, 26, 28], in particular, has gained increasing popularity since it has shown remarkable results in several tasks and its effective approach has had a strong influence on other related NLP tasks, such as dialogue generation [8].

The evaluation of MT systems is a vital field of research, both for determining the effectiveness of existing MT systems (evaluation of the classification performance) and for guiding the MT systems modeling. Progress in the field of ΜΤ relies on assessing the quality of a new system through systematic evaluation, such that the new system can be shown to perform better than pre-existing systems. The difficulty arises in the definition of a better system. When assessing the quality of a translation, there is no single correct answer; rather, there may be any number of possible correct translations. In addition, when two translations are only partially correct -but in different ways- it is difficult to distinguish quality.

Many methods for MT evaluation have been employed. There are metrics that focus on the MT output evaluation, such as BLEU [18], METEOR [4], TER [24] and WER [25]. BLEU score is maybe the most famous and widely-used metric in MT evaluation. The closer an MT output is to the professional translation, the higher the BLEU score is. The BLEU score suffers from several shortcomings i.e. it doesn’t handle morphologically rich languages well and it doesn’t map well to human judgements. Several other metrics, that address these issues, are used, such as METEOR. The METEOR score has a good correlation with human judgement at the segment level. It is based on the alignment between the MT outputs and the professional translation. Alignments are based on synonym and paraphrase matches between words and phrases. The translation error rate (TER) and word error rate (WER) are other commonly-used metrics. They are based on the matching of the MT outputs with the professional translation. They measure the minimum number of edits needed to change the original output translation into the professional translation. Other metrics focus on performance evaluation. In some studies [15, 17], parallel corpora are used and showed that certain string-based features, e.g. the length of the segments, and similarity-based features e.g. the ratio of common suffixes shared between the MT outputs and the reference, could improve the MT system performance. They considered the task as a classification problem and they used Random Forest (RF) as classifier.

NMT can potentially perform end-to-end translation, though many NMT systems are still relying on language-dependent pre- and post-processors, which have been used in traditional SMT systems. Moses [11], a toolkit for SMT, implements a reasonably useful pre- and post-processor. Α language dependent processing also makes it hard to train multilingual NMT models.

It is important for the NLP community to develop a simple, efficient and language independent framework for automatic MT evaluation. A few studies have been reported using learning frameworks. Duh [5] uses a framework for ranking translations in parallel settings, given information of translation outputs and a reference translation. This study showed that ranking achieves higher correlation to human judgments when the framework makes use of a ranking specific feature set and of BLEU score information. They have tested the framework performance using Support Vector Machine (SVM). Another important work is presented by [7] who used syntactic and semantic information about the reference and the machine-generated translation as well, by using pre-trained embeddings and the BLEU translations scores. They used a feedforward neural network (NN) to decide which of the MT outputs is better. A learning scheme to classify machine-generated translations using information from numerous linguistic features and hand-crafted word embeddings from two MT outputs and one reference translation is presented from [16]. They used a convolutional NN to choose the right translation among two provided.

In this paper, we introduce a learning schema, for evaluating MT, similar to that of a preliminary study [16], but we extend it to a new level, both in terms of number of feature and their representation and learning framework as well.

Compared to that study, the present approach includes the following novelties:

-

the utilization of a deeper NN architecture. More hidden layers and different types were tested (Dense and LSTM layers).

-

the inclusion of an NLP metric set (BLEU score, METEOR score, TER, WER).

-

the use of the linguistic information from the SSE in EN. 18 string-based features were calculated and used as an extra input to the DL architecture.

-

the accuracy exploration of different inputs to the hidden layers (the NLP set and the string-based features).

To the best of the authors’ knowledge, this is the first time that information of the SSE combined with handcrafted features, embeddings and a set of NLP metrics are used from a DL architecture for a classification task.

2 Materials and Methods

The current section presents the corpora, the features and NLP set as well as the DL architecture used in the experiments.

2.1 Dataset

The dataset used in the experiments consists of parallel corpora in the language pairs EN-EL and EN-IT. The dataset is part of the test sets developed in the TraMOOC project [12]. They are educational corpora from video lectures and they contain mathematical expressions, URLs and many special characters, such as /, @, #. The corpora are described in detail by [15, 17]. The EN-EL corpora consists of 2686 segments and the EN-IT consist of 2745 segments. Two MT outputs were used - one generated by the Moses SMT toolkit [11] and the other generated by the NMT Nematus toolkit [22]. Both models trained on in- and out-of-domain data. In- and out-of-domain data included widely known corpora e.g. TED, OPUS. In order to improve the classification, a professional translation is provided for every segment. More details on the training datasets can be found in [27].

2.2 The Feature Set Used

The feature set used is based on linguistics features divided in three categories: i) string similarity features, such as ratios between words of S1, S2 and Sr, word distances (e.g. Dice distance [20]), percentage of segments similarity, ii) features finding the percentage of the noise in the data set (e.g. repeated words) and iii) features using length factor (LF) [21]. More details on the feature set used can be found in [17]. In this work, in order to check if the information from SSE will help the accuracy, additional features from the SSE in the EN language are used. Based on the other features, it is observed that features containing ratios are more effective to the classifier. These features are: 1) the words and character length of the SSE, 2) the ratio between these lengths in the SSE and the two MT outputs, 3) the longest word length, 4) the ratio between longest words from SSE and the two MT outputs and Sr translation.

2.3 Word Embeddings

The use of word embeddings helped us to model the relations between the two translations and the reference. In these experiments, hand-crafted embeddings were used, for the two MT outputs and the reference translation as well for both language pairs. The encoding function used is the one-hot function. The size, in number of nodes, of the embedding layer is 64 for both languages. The input dimensions of the embedding layers are in agreement with the vocabulary of each language (taking into account the most frequent words): 400 for the EN-EL language pair and 200 for the EN-IT language pair. The embedding layer used is the one provided by Keras [10] with TensorFlow as backend [1].

2.4 The NLP Metrics Used

The NLP set used in these experiments contains the BLEU score, METEOR, TER and WER. To calculate the BLEU score, an implementation of the BLEU score from the Python Natural Language Toolkit library [13] is used. For the calculation of the other three metrics, the code from GitHub [6] is used. All metrics were calculated for (S1, S2), (S1, Sr), (S2, Sr).

2.5 The DL Schema

This study approaches the MT evaluation problem as a classification task. In particular, two volunteer linguists-annotators chose the better MT output. The linguists annotate the corpora as follows: Y = 0 if S1 is better than S2, and Y = 1 if S2 is better than S1 for both language pairs. Where Y is the output, i.e. the label of the classification class. This information is used as the ‘ground truth’. As an input to the learning schema, the vectors (S1, S2, Sr) were used, in a parallel setting. The embedding layer (as described in Sect. 2.3) is applied and the respective embeddings EmbS1, EmbS2 and EmbSr were created. The embeddings EmbS1, EmbS2 and EmbSr were contracted in a pairwise setting, and the vectors (EmbS1, EmbS2), (EmbS1, EmbSr) and (EmbS2, EmbSr) were created. These vectors are the input to the hidden layers h12, h1r, h2r respectively. Using hidden layers h1r and h2r, the similarity between the two MT outputs and the professional translation (Sr) is explored. It is important to investigate the similarity between S1 and S2, so an extra hidden layer h12 is added. Interestingly, it is often observed that the MT outputs were more similar to each other than to the Sr. Every hidden layer h12, h1r, h2r, got as an extra input 2D matrixes H12[i, j], H1r[i, j], H2r[i, j], where i is the number of segments and j is the number of features. These matrices contain information about (i) the NLP set for S1-S2, S1-Sr, S2-Sr (as described in Sect. 2.4) or (ii) information about linguistic features of the SSE, i.e. n-grams, or (iii) the combination of the previous two options. The outputs of the hidden layers h12, h1r, h2r are grouped and became the input to the last layer of the NN model. An extra 2D A[i, j] matrix with hand-crafted features (string-based) (as described in Sect. 2.2) was added to this last layer.

The model of the DL architecture is shown in Fig. 1.

A suitable function to describe the input-output relationship in the training data should be selected. The output label is modeled as a random variable in order to minimize the discrepancy between the predicted and the true labels – maximum likelihood estimation. The binary classification problem is modeled as a Bernoulli distribution (Eq. 1)

Where by is the sigmoid function σ(wTx + b), wT and b are network’s parameters.

Finally, the MaxAbsScaler [19] is used, as a preprocessing method for EmbS1, EmbS2, EmbSr and matrices H12[i, j], H1r[i, j], H2r[i, j], A[i, j] as well. Every feature is scaled by its maximum absolute value.

3 Experimental Setup and Results

This section describes the details about experiments and its results.

3.1 Network Parameters

After experimentation, in order to test the proposed DL architecture, the model architecture for the experiments is defined as follows (Table 1).

3.2 Evaluation Scores

There are many machine learning evaluation metrics. In this study, commonly used metrics in classification (precision, recall and F-score) were used for the model performance evaluation. The first score (precision) shows the number of the correctly predictive values, the second score (recall) shows the percentage of total results correctly classified by the model. However, because of the unbalanced precision and recall, F-score (F1), which is a harmonic mean of precision and recall, is used. It is important to analyze the relationship between the MT outputs and the human translation, using a statistic metric - Kendall τ [9]. It is a non-parametric test used to measure the ordinal association between the two MT outputs. Kendall τ is calculated for every language pair and the macro average across all language pairs.

3.3 Results

The main results of the experiments are shown in Table 2. Different experiments were tested in the same DL architecture - using different information. The NLP set gave 67% accuracy for EN-EL and 60% for EN-IT. Subsequently, the goal was to verify if the SSE information can improve the model accuracy. Indeed, an increase of 2% of the classification accuracy for EN-EL and EN-IT is observed. Better accuracy results are reported when the proposed NN model uses both the information from the NLP set and SSE (72% accuracy for EN-EL/70% for EN-IT). It’s quite interesting that when the proposed NN model is used, without using any extra information in the hidden layers, it correctly classifies all the instances for the NMT class. Nevertheless, this model cannot be considered as the best, because the number of the correctly classified instances for the SMT class was low. The 2D matrixes H12[i, j], H1r[i, j], H2r[i, j] utilization in every hidden layer h12, h1r, h2r gave balance between the correct instances.

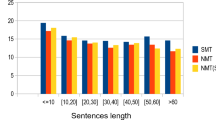

It is important to have balance of accuracy performance for both classes, so the F1 score is used. In order to make a direct comparison with other models [3, 23], additional experiments were run, using, for some of them, the WEKA framework as backend [22] for the SVM and RF classifiers. It is observed that the proposed model achieves a better F1 score 4% compared with the RF, and 5% with SVM (Fig. 2).

Table 3 shows the Kendall τ results for different models. Firstly, Kendall τ is presented for four commonly used metrics in MT evaluation (NLP set), comparing the MT outputs S1, S2 with the reference Sr. These metrics achieved Kendall τ between 14–20. However, when they were used as extra input to the hidden layers, they led to significant improvements. In Table 2, Kendall τ values are presented for the model using different configuration setups. The NN itself achieves lower τ value compared to the other NN architectures, something which should not be surprising because this architecture does not use any further linguistic information. The NLP set utilization in the NN gets Kendall τ average (AVG) for both languages 27 points. This is because NLP metrics contain significant linguistic information about the languages (i.e. similarity scores, length). An increase up to 2.5 points is observed using information about the SSE (in English). Moreover, the Kendall τ reaches its highest value when both the NLP set and SSE information were applied (36 for EL/32 for IT).

3.4 Linguistic Analysis

Linguistic analysis helps us to understand better the reasons why the MT output that belongs to NMT class yields higher accuracy and Kendall τ scores in both languages pairs. In Table 4, two cases are presented in the EN-EL language pair that the model didn’t classify correctly.

-

ID 1:

-

In this segment, S2 made two serious mistakes. In the literal sense, the compound word bandwagon is a wagon used for carrying a band in a parade or procession. As a metaphor, the word bandwagon is used for an activity, cause, that is currently fashionable or popular and attracting increasing support. S2 “didn’t know” the metaphorical meaning of the word, so it has erroneously translated only the second part of the compound word in question: wagon as άμαξα (carriage, coach). Moreover, it is surprising that S2 didn’t even translate the first part of that compound word (band).

-

S2 has the phrase gut feeling. Gut feeling is an idiom, meaning an instinct or intuition, an immediate or basic feeling or reaction without a logical rationale. S2 has literally translated the phrase: το ένστικτο του εντέρου (!) (the instinct of the gut). Even though in English there is also the idiom gut instinct, as a synonym of gut feeling, in Greek the literal translation of gut instinct is non-sensical.

-

Finally, S2 also made a slight mistake. It erroneously translated the adverb phrase by habit (habitually) literally: από τη συνήθεια (from the habit).

-

S1 has erroneously translated the above adverbial phrase by bandwagon as με ρεύμα, being unclear as to the precise meaning of the word ρεύμα, as in Greek this is a polysemous term that may refer to: electricity, drift, current, stream. With the preposition με, the Greek version is closer to the first meaning: with electricity (!), but this is nonsensical.

-

-

ID2:

-

S1 has not localized the proper noun Robert Pratten and rightly so, as this is the most common choice.

-

S1 did not at all translate the first of the two phrases: francise transmedia as well as the second word of the second phrase: portmanteau transmedia. S1 has only translated the first word of this phrase: portmanteau, without, nevertheless, adopting the very common sense of the word: bag, luggage, valise, but a special and relatively rare one: σύμμειξη (compounding, blending). The professional linguist did not at all translate these phrases.

-

On the contrary, S2 translated the same phrases in a completely erroneous way: τρανζίστορ (transistor) and τρανζίσον (no meaning in Greek) respectively. S2 translated these phrases incompletely and erroneously, obviously “misled” by the prefix: –trans of transmedia.

-

Neither S1 nor S2 identified that franchise transmedia and portmanteau transmedia are methodologies (methods, techniques, approaches), as professional linguist (Reference) did.

-

4 Conclusion and Future Work

In this study, it is presented a DL architecture for classifying the best MT output between two options provided (one from an SMT model and the other from an NMT model), given a reference translation and an annotation schema, as well. It is worth mentioning that the translation was from EN to EL, and EN to IT which increased the task complexity, since the Greek and Italian languages are both morphologically rich languages. Well known NLP metrics were calculated and became extra inputs to the NN. Also, linguistics features from the SSE were used. The model’s accuracy performance was tested in configurations. When the NN combines embeddings, the NLP set (BLEU, METEOR, TER, WER) and SSE information (i.e. some ratios) achieved better accuracy results (increase up to 5%) and a higher Kendall τ score (increase up to 4 points) compared to related work. A linguistic analysis is also provided in order to explain linguistically the above results.

In future work, it is important to study other aspects which are likely to improve the DL architecture accuracy, such as a) a different NN configuration (e.g. different kinds of NN layers, batch normalization, learning rate), b) a feature selection method to reject the features that aren’t effective for the model and c) a feature importance method to apply the proper feature weights during the NN training. In addition, it worth exploring the reasons for which the proposed model presents low accuracy values in the EN-IT pair, even though it is language independent. Finally, the model will be tested with another dataset, including in- and out-of-domain data.

References

Abadi, M., et al.: Tensorflow: a system for large-scale machine learning. In: 12th {USENIX} Symposium on Operating Systems Design and Implementation ({OSDI} 2016), USA, pp. 265–283. USENIX Association (2016)

Bahdanau, D., Cho, K., Bengio, Y.: Neural machine translation by jointly learning to align and translate. In: Proceedings of 3th International Conference on Learning Representations, San Diego, pp. 1–15. ICLR (2015)

Barrón-Cedeño, A., Màrquez Villodre, L., Henríquez Quintana, C.A., Formiga Fanals, L., Romero Merino, E., May, J.: Identifying useful human correction feedback from an on-line machine translation service. In: Proceedings of 23rd International Joint Conference on Artificial Intelligence, Beijing, pp. 2057–2063. AAAI Press (2013)

Denkowski, M., Lavie, A.: Meteor universal: language specific translation evaluation for any target language. In: Proceedings of the 9th Workshop on Statistical Machine Translation, Baltimore, Maryland, USA, pp. 376–380. ACL (2014)

Duh, K.: Ranking vs. regression in machine translation evaluation. In: Proceedings of the 3rd Workshop on Statistical Machine Translation, Columbus, Ohio, pp. 191–194. ACL (2008)

GitHub. https://github.com/gcunhase/NLPMetrics. Accessed 20 Feb 2020

Guzmán, F., Joty, S., Màrquez, L., Nakov, P.: Pairwise neural machine translation evaluation. arXiv preprint arXiv:1912.03135. In: Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing, Beijing, China, pp. 805–814. ACL (2015)

Jaitly, N., Sussillo, D., Le, Q.V., Vinyals, O., Sutskever, I., Bengio, S.: A neural transducer. Cornell University Library. arXiv preprint arXiv:1511.04868 (2015)

Kendall, M.: A new measure of rank correlation. Biometrika 30(1/2), 81–93 (1938)

Keras: Deep learning library for theano and tensorflow. https://keras.io/k7.8. Accessed 20 Feb 2020

Koehn, P., et al.: Moses: open source toolkit for statistical machine translation. In: Proceedings of the 45th Annual Meeting of the ACL on Interactive Poster and Demonstration Sessions, Prague, pp. 177–180. ACL (2007)

Kordoni, V., et al.: TraMOOC (translation for massive open online courses): providing reliable MT for MOOCs. In: Proceedings of the 19th Annual Conference of the European Association for Machine Translation (EAMT), Riga, pp. 376–400. European Association for Machine Translation (EAMT) (2016)

Loper, E., Bird, S.: NLTK: the natural language toolkit. In: Proceedings of the ACL 2002 Workshop on Effective Tools and Methodologies for Teaching Natural Language Processing and Computational Linguistics, USA, pp. 63–70. ACL (2002)

Luong, M.T., Pham, H., Manning, C.D.: Effective approaches to attention-based neural machine translation. In: Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, pp. 1412–1421. ACL (2015)

Mouratidis, D., Kermanidis, K.L.: Automatic selection of parallel data for machine translation. In: Iliadis, L., Maglogiannis, I., Plagianakos, V. (eds.) AIAI 2018. IAICT, vol. 520, pp. 146–156. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-92016-0_14

Mouratidis, D., Kermanidis, K.L.: Comparing a hand-crafted to an automatically generated feature set for deep learning: pairwise translation evaluation. In: 2nd Workshop on Human-Informed Translation and Interpreting Technology, Varna, Bulgaria, pp. 66–74. HiT-IT (2019)

Mouratidis, D., Kermanidis, K.L.: Ensemble and deep learning for language-independent automatic selection of parallel data. Algorithms 12(1), 12–26 (2019)

Papineni, K., Roukos, S., Ward, T., Zhu, W.J.: BLEU: a method for automatic evaluation of machine translation. In: Proceedings of the 40th Annual Meeting on Association for Computational Linguistics, Philadelphia, pp. 311–318. Association for Computational Linguistics (2002)

Pedregosa, F., et al.: Scikit-learn: machine learning in python. J. Mach. Learn. Res. 12, 2825–2830 (2011)

Peris, Á., Cebrián, L., Casacuberta, F.: Online learning for neural machine translation post-editing. Cornell University Library. arXiv preprint 1, pp. 1–12. arXiv:1706.03196 (2017)

Pouliquen, B., Steinberger, R., Ignat, C.: Automatic identification of document translations in large multilingual document collections. In: Proceedings of the International Conference Recent Advances in Natural Language Processing (RANLP), Borovets, pp. 401–408. Recent Advances in Natural Language Processing (RANLP) (2003)

Sennrich, R., et al.: Nematus: a toolkit for neural machine translation. In: Proceedings of the EACL 2017 Software Demonstrations, Valencia, pp. 65–68. ACL (2017)

Singhal, S., Jena, M.: A study on WEKA tool for data preprocessing, classification and clustering. Int. J. Innov. Technol. Explor. Eng. (IJITEE) 2(6), 250–253 (2013)

Snover, M., Dorr, B., Schwartz, R., Micciulla, L., Makhoul, J.: A study of translation edit rate with targeted human annotation. In: Proceedings of the 7th Conference of the Association for Machine Translation in the Americas, Cambridge, pp. 223–231. The Association for Machine Translation in the Americas (2006)

Su, K.Y., Wu, M.W., Chang, J.S.: A new quantitative quality measure for machine translation systems. In: Proceedings of the 14th Conference on Computational Linguistics, Nantes, France, vol. 2, pp. 433–439. Association for Computational Linguistics (1992)

Vaswani, A., et al.: Attention is all you need. In: 31st Conference on Neural Information Processing Systems, Long Beach, CA, USA, pp. 5998–6008. NIPS (2017)

Sosoni, V., et al.: Translation crowdsourcing: creating a multilingual corpus of online educational content. In: Proceedings of the 11th International Conference on Language Resources and Evaluation, Japan, pp. 479–483. European Language Resources Association (2018)

Wu, Y., et al.: Google’s neural machine translation system: bridging the gap between human and machine translation. arXiv preprint arXiv:1609.08144 (2016)

Acknowledgments

This project has received funding from the GSRT for the European Union’s Horizon 2020 research and innovation program under grant agreement No 644333.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2020 IFIP International Federation for Information Processing

About this paper

Cite this paper

Mouratidis, D., Kermanidis, K.L., Sosoni, V. (2020). Innovative Deep Neural Network Fusion for Pairwise Translation Evaluation. In: Maglogiannis, I., Iliadis, L., Pimenidis, E. (eds) Artificial Intelligence Applications and Innovations. AIAI 2020. IFIP Advances in Information and Communication Technology, vol 584. Springer, Cham. https://doi.org/10.1007/978-3-030-49186-4_7

Download citation

DOI: https://doi.org/10.1007/978-3-030-49186-4_7

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-49185-7

Online ISBN: 978-3-030-49186-4

eBook Packages: Computer ScienceComputer Science (R0)