Abstract

The Automatic Driver Assistance System (ADAS) is a rapidly growing concept for an innovative automatic driver. Even though an ADAS can function automatically without a driver present, a car equipped with it is not considered completely autonomous. In some practical situations, certain driving complications remain difficult to handle, so current technologies have not yet reached the level of a fully autonomous driving system. ADAS is developed to avoid road accidents caused by human error. Various ADAS technologies have been developed so far to provide driver safety and comfort.

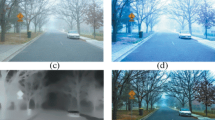

Different control units are embedded into the system for full control of driving. One of these control units is Pedestrian Crash Avoidance Mitigation (PCAM). One of the most difficult conditions for the PCAM control unit is the winter season, when there is heavy fog or haze on the roads. An additional processing unit should therefore be developed to assist the ADAS in avoiding crashes when pedestrians cross the road. For this purpose, a special imaging system should be introduced for better visualization of roads, nearby vehicles, and people crossing the road. An infrared imaging camera is used in addition to the normal camera system, and the two images are processed with the concept of fusion, which combines the best properties of both images to produce a clear view of the scene. This may improve the effectiveness of the PCAM control unit and enable autonomous cars to be driven successfully even in heavily hazed areas without crashes or accidents.
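
The abstract does not say which fusion rule the chapter uses, so the following is only a minimal sketch of the general idea, assuming two registered, same-size grayscale frames scaled to [0, 1]. The function names `local_activity` and `fuse_visible_infrared`, the gradient-based activity measure, and the `eps` parameter are illustrative assumptions, not the authors' method. At each pixel, the image with more local detail receives the larger weight.

```python
import numpy as np


def local_activity(image):
    """Gradient magnitude as a simple per-pixel measure of local detail."""
    grad_rows, grad_cols = np.gradient(image)
    return np.hypot(grad_rows, grad_cols)


def fuse_visible_infrared(visible, infrared, eps=1e-6):
    """Blend two registered grayscale images with detail-based weights.

    Hypothetical illustration: each output pixel is a convex combination
    of the two inputs, weighted towards the image with stronger gradients.
    """
    visible = np.asarray(visible, dtype=np.float64)
    infrared = np.asarray(infrared, dtype=np.float64)
    if visible.ndim != 2 or visible.shape != infrared.shape:
        raise ValueError("expected two 2-D images of identical shape")

    act_vis = local_activity(visible)
    act_ir = local_activity(infrared)

    # eps keeps the weight defined (0.5) where both images are flat.
    weight_vis = (act_vis + eps) / (act_vis + act_ir + 2.0 * eps)
    return weight_vis * visible + (1.0 - weight_vis) * infrared
```

With a uniformly gray (fully hazed) visible frame and an infrared frame that still contains an edge, the fused result follows the infrared image around that edge and averages the two elsewhere. A practical system would also need image registration and a more robust multi-scale fusion rule, neither of which is covered here.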

Copyright information

© 2021 Springer Nature Switzerland AG

About this chapter

Cite this chapter

Bhavani, M.D.L., Murugan, R. (2021). The Concept of Fusion for Clear Vision of Hazy Roads in ADAS. In: Gupta, N., Prakash, A., Tripathi, R. (eds) Internet of Vehicles and its Applications in Autonomous Driving. Unmanned System Technologies. Springer, Cham. https://doi.org/10.1007/978-3-030-46335-9_8

DOI: https://doi.org/10.1007/978-3-030-46335-9_8

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-46334-2

Online ISBN: 978-3-030-46335-9

eBook Packages: Engineering, Engineering (R0)