Abstract

There is a rich history of predicting ecological interactions in nature, going back to the seminal work of Robert May. Historically, the use of models in ecological decision making has centered on tools that require less data and are easier to communicate into policy. However, the explosion in the availability of ecological data, together with ready access to computing power, has opened the door to more detailed computational tools. This in turn has raised a suite of questions about how, and how much, model-based projections can influence decision making in the management of ecological resources. Here we address some of these issues, particularly those arising from model complexity, data quality and availability, and model acceptance by policy makers. Our goal is to use specific examples to critically examine these issues for both gaps and opportunities in how models can inform decision making at the level of ecosystems and in the currency of ecosystem services to people.

“All models are wrong, but some models are useful”

George E.P. Box

- Model complexity should fit the focal management question and the available data.
- The most useful ecosystem-based models are transparent, with output that is easily translated into the language of policy. These goals are best achieved with stakeholder engagement in model development.
- Multiple-model approaches, such as ensemble modeling, are better and more transferable than reliance on a single modeling tool.
- The utility of models for Ecosystem-Based Management (EBM) decision making should be demonstrated through short-term forecasting efforts, to improve the acceptance of models as a decision tool.
- Greater investment is needed in model-based ecological forecasting as a testbed for model performance and improvement.
- Standardize the process for communicating model output in the language of policy, including effective communication of model uncertainty as risk.
- Focus on improving existing models rather than developing new ones, and do so in cooperation with policy makers early in the process to improve model utility.

1 Introduction

Mathematical modeling has a rich history in ecology, but its foundations come from the physical sciences, where well-characterized relationships such as reaction-diffusion are repeatable and well validated (Getz 1998). The use of these relationships to address ecological questions has emerged from multiple disciplines, including population ecology (May 1974b), food web ecology (Whipple 1999), and nutrient dynamics (Clark and Gelfand 2006). What all these pathways have in common is the desire to use observable relationships to either predict or describe important but complex ecological outcomes. May (1974a) championed this concept in his seminal work using models to understand patterns in population dynamics. The work of May and others sparked debate on the utility of models vs. observation and fostered a modeling discipline in ecology that has grown steadily into a functional tool for management (Griffith and Fulton 2014; Nielsen et al. 2018; Piroddi et al. 2017).

Ultimately, we need models that are more than the sum of their parts and that produce novel observations or theory useful for understanding nature. Nowhere is this more visible than in the effort to shift from management of single issues, such as nutrient load reduction, to a paradigm of ecosystem management (Heymans et al. 2018). Ecosystem dynamics are complicated and largely unobservable, making models a pivotal tool for forecasting change (Heymans et al. 2016; Rose et al. 2010). The concept of ecosystem services further complicates the question, since social and economic dynamics, not just ecological dynamics, must also be considered (Austen et al. 2019). Models that incorporate all these dynamics are rare and largely unvalidated. Yet the roadmap for such efforts has been laid and, as in the early days of ecological modeling, the debate has shifted to include models that predict ecosystem services (Nielsen et al. 2018; Zvoleff and An 2014). In this chapter we explore some of the issues related to ecosystem service modeling, first by defining it and then by exploring its possibilities and limits. This is not intended to be a thorough review of available ecosystem models; rather, it is a discussion of where we are as a discipline and where we need to go to fully operationalize models for ecosystem decision making.

Ecosystem Goods and Services (EGS) are those elements of nature that directly benefit people. Models are well suited to informing assessments of an ecosystem good or service, as the core value of models is to synthesize scientific information into a targeted endpoint. However, a focus on EGS as an endpoint for decision making requires socioeconomic components, which are rarely included in ecosystem modeling (Bagstad et al. 2014; Fulford et al. 2015; Sanchirico and Mumby 2009). One example of interdisciplinary modeling for decision making is the integration of an economic model (Resource Investment Optimization System, RIOS) and an Ecosystem Services (ES) modeling tool (Integrated Valuation of Ecosystem Services and Tradeoffs, InVEST) to evaluate ES costs and benefits affected by land use/land cover planning in the Thadee watershed in southern Thailand (Trisurat 2013, www.ipbes.net/resources/assessment reports). The more common approach is ecological modeling that forecasts EGS production without considering beneficiaries, or that assumes service delivery to be unrelated to changes in production (Craft et al. 2009; Redhead et al. 2018). Such simplifications are unrealistic, and there is a need to broaden cross-disciplinary modeling work to bridge this gap. The key stumbling block is measuring and communicating the utility of models from different disciplines (Fulton 2010).

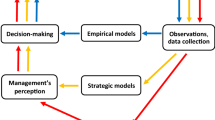

Model-based decision making is highly dependent on the accessibility and reliability of the chosen model. Accessibility encompasses ownership of the model framework, data availability, and technical expertise, all of which can affect a model’s usability. Reliability considers the accuracy of model output, but also model acceptance based on how well the output is understood and on the model’s track record in solving similar problems. Most of the issues discussed in this chapter originate in the need to demonstrate model accessibility or reliability prior to application. There is typically a continuum of decision making from a purely policy-based to a data-based decision process. From the policy perspective, decisions are based largely on authoritative opinion. As the need for expert opinion grows, the decision begins to shift towards technical guidance and finally, when specific data are available, towards numerical decision rules. More complicated decisions naturally shift to the data end of the spectrum; however, as problems become more complicated, so do the data. Ultimately, synthesizing those data into the language of policy can become a limiting factor. This is where the value of models in decision making is most evident, as they are a powerful tool for synthesizing data. Yet data synthesis also creates a new challenge: communicating the results to non-technical decision-makers. There is a balance to be struck between the amount of synthesis possible in any situation (e.g., how complicated is the problem?) and the ability to communicate the result well enough to make it useful. In this chapter we take three examples of model applications to EGS in environmental decision making to illustrate how this balance was struck in each case.

The first example is taken from marine fisheries management and applies a population dynamics model to the challenge of maintaining a sustainable harvest in the context of both a variable fishery and a variable environment. Marine Fishery Stock Assessment (MFSA) has a long history of applying models to decision making. Stock assessment models such as the Stock Synthesis (SS) model (Methot 2009) are used to project the stability of exploited populations in response to both fishery harvest and environmental variability (SEDAR 2016). Under MFSA, models are usually combined with a formal process of stakeholder engagement to ensure that the right data and perspectives are used. The Gulf of Mexico Red Snapper (Lutjanus campechanus) stock (GMFMC 2018) provides an example. The sustainability of Gulf Red Snapper is assessed based on Maximum Sustainable Yield (MSY), i.e., the level at which harvest will not prevent the stock from replacing itself over the short term (~5 years). The SS model tracks population dynamics under different harvest scenarios and produces a prediction of MSY. Multiple model runs are used to account for several levels of uncertainty, and model outcomes are combined to generate a best estimate of MSY, which is converted to a decision rule based on the uncertainty in the estimate and the acceptable risk of overfishing the stock. Both the models used in this assessment and their input data are well validated and accepted for decision making (GMFMC 2018). In this example the ES is fishery harvest, and the primary stakeholders (fishers) are engaged in the model-based decision process.
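As a rough illustration of combining several model runs into a best estimate, the sketch below substitutes the Schaefer surplus-production model, in which MSY = rK/4, for Stock Synthesis, which is far more detailed. Each run is a hypothetical (r, K, weight) configuration, and the weights are assumed credibility scores; none of the numbers come from the Red Snapper assessment.

```python
from dataclasses import dataclass


@dataclass
class ModelRun:
    """One hypothetical model configuration (a stand-in for one assessment run)."""
    name: str
    r: float       # intrinsic rate of population increase per year (assumed)
    k: float       # carrying capacity in metric tons (assumed)
    weight: float  # relative credibility given to this run (assumed)


def schaefer_msy(r: float, k: float) -> float:
    """MSY under the Schaefer (logistic) surplus-production model: r * K / 4."""
    return r * k / 4.0


def combined_msy(runs: list[ModelRun]) -> tuple[float, float]:
    """Weighted mean MSY across runs and the weighted between-run standard deviation."""
    total_weight = sum(run.weight for run in runs)
    estimates = [(schaefer_msy(run.r, run.k), run.weight / total_weight) for run in runs]
    mean = sum(msy * w for msy, w in estimates)
    variance = sum(w * (msy - mean) ** 2 for msy, w in estimates)
    return mean, variance ** 0.5


runs = [
    ModelRun("base case", r=0.30, k=100_000, weight=0.50),
    ModelRun("low productivity", r=0.20, k=100_000, weight=0.25),
    ModelRun("high productivity", r=0.40, k=100_000, weight=0.25),
]
best, spread = combined_msy(runs)
print(f"best MSY estimate: {best:,.0f} t (between-run SD {spread:,.0f} t)")
```

The between-run spread is one ingredient a decision rule could use alongside within-run uncertainty; how the weights are chosen is itself a judgment that benefits from stakeholder review.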

The second example comes from landscape management and involves a projection of how changes in land use practice in a watershed may affect the water quality of an estuary or other receiving water body (USDA-NRCS 2013). This example describes the application of the Soil and Water Assessment Tool (SWAT) model (USDA NRCS; https://swat.tamu.edu/) to land conservation practices in the Chesapeake Bay (CB) watershed, USA. SWAT is a semi-distributed hydrology model that routes water through a watershed to estimate the loading rate of nutrients and pollutants to the estuary. In the CB watershed, SWAT was used to examine changes in nutrient load between 2003–2006 and 2011 associated with conservation practices on farmland, mostly ‘edge of field’ retention of soil and nutrients. The model predicted reductions in the sediment (82%), nitrogen (44%), and phosphorus (75%) delivered to streams. These results reinforce the value of land conservation for Bay water quality, but the information is not formally used to shape decisions. Rather, the model output may be used to validate recommendations made to landowners for good conservation practice. In this example the ES is Bay water quality, an intermediate EGS leading to multiple benefits including recreational opportunities, shoreline land value, and aquatic biodiversity.

The third example comes from ecosystem management efforts in the Baltic Sea, focusing on eutrophication over a large region using an ensemble modeling approach based on four different coastal eutrophication models (Skogen et al. 2014). The four models differed in coverage and approach, but all projected coastal eutrophication and hypoxia responses to nutrient loading over time. The outcome was a map of eutrophication ‘problem areas’ in the Baltic Sea region that can be used to guide future conservation efforts. As in the SWAT example, these results are not part of a formal decision-making process but inform potential decisions aimed at reducing eutrophication. Ensemble models allow different modeling perspectives to be used and combined into a common recommendation (Skogen et al. 2014). In this example the ES is water quality related to balanced primary production and a low incidence of hypoxia. These are intermediate EGS that contribute to fishery production, biodiversity, and human health.
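One simple way to turn an ensemble into a common ‘problem area’ classification is to let each model vote on each grid cell and flag the cells where a strict majority agrees. The sketch below assumes hypothetical bottom-oxygen projections (mg/L) from four models on five cells and a hypothetical hypoxia threshold of 2 mg/L; Skogen et al. (2014) may have combined their model results differently.

```python
HYPOXIA_THRESHOLD_MG_L = 2.0  # assumed threshold for "problem" conditions


def problem_cells(projections: dict[str, list[float]],
                  threshold: float = HYPOXIA_THRESHOLD_MG_L,
                  min_agreement: float = 0.5) -> list[bool]:
    """Flag a cell when more than `min_agreement` of the models project oxygen below threshold."""
    n_models = len(projections)
    n_cells = len(next(iter(projections.values())))
    flags = []
    for cell in range(n_cells):
        votes = sum(values[cell] < threshold for values in projections.values())
        flags.append(votes / n_models > min_agreement)
    return flags


# Hypothetical bottom-oxygen projections (mg/L) for five grid cells.
projections = {
    "model_A": [1.5, 3.0, 1.8, 4.2, 2.5],
    "model_B": [1.2, 2.8, 2.2, 4.0, 1.9],
    "model_C": [1.9, 1.7, 1.6, 3.8, 2.1],
    "model_D": [2.4, 3.1, 1.4, 4.5, 1.8],
}
print(problem_cells(projections))  # [True, False, True, False, False]
```

The last cell, where the models split two to two, is exactly the kind of result worth reporting as uncertain rather than silently dropping.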

Box 1 Central Questions in Making Model-Based Assessments Useful in Environmental Decision Making

1. Necessary complexity: How much detail is needed to address a management problem?
2. Operationalizing model output: Translating model output into the language of policy.
3. Transparent and transferable: Proper engagement with decision makers so they accept and use model-based information.

In this chapter we explore some of the dominant issues related to model-based assessments for environmental decision making based on ES assessments. We draw our examples from major areas of environmental decision making, but we seek common ground by considering how models can be made more useful across important environmental issues. Three major issues exist in making models acceptable and reliable for model-based assessments of ES (Box 1). First, models are meant to be simplified reflections of nature, but how much detail is needed? Modelers must address the question of how much model complexity is necessary for a given problem. Second, model output must be presented in a way that is useful for decision making. This includes both translating model output into policy terms and properly communicating model uncertainty. The translation of model output and uncertainty into policy terms is called operationalizing the information. Graphic outputs and summarized metrics such as ecological thresholds facilitate the operationalization process. Third, the model itself must be transparent and transferable to an issue and ecosystem. This means that the model is not only useful for a given problem but is also viewed as useful by policy makers and stakeholders, which may require early stakeholder engagement in model development. All these issues must be addressed to make models useful for decision making, and we address them here by examining some of the major issues in model choice and development.

2 Issues of Model Complexity

Quantitative models exist along a broad spectrum of complexity, from simple empirical models (e.g., linear regression) to large mechanistic models that contain hundreds of parameters. By nature, simple empirical models are easier to conceptualize and fit, as the parameters are simply components of a transformation from data to model output (X → Y). A good example is the exponential growth model, which describes unlimited population growth, and its logistic extension, which introduces the concept of carrying capacity when resources are limited (see Getz 1998). There is a tradition of parsimony in modeling that finds its roots in empirical models, since degrees of freedom are lost as fitting parameters are added (Getz 1998; May 1974a). In contrast, mechanistic models recreate real pathways between data and model prediction in a way that allows for observable responses at the sub-model level, but they require parameters that are themselves derived from empirical relationships. A simple example of a mechanistic model is the application of a functional response to predator-prey dynamics (Rosenzweig and MacArthur 1963). The addition of data-derived parameters greatly adds to the complexity of the model, as well as to its data requirements, but it also provides a platform for examining the effects of real change, which is generally not possible with empirical models whose results cannot reliably extend beyond the bounds of the data used to fit them. If mechanistic models can be properly calibrated and validated, they become more useful for theoretical exploration of system change (Getz 1998). See Lewis et al. (2020) in this volume for additional examples of models across the complexity spectrum.
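To make the contrast concrete, the sketch below integrates the logistic growth model (growth limited by a carrying capacity K) and then adds a predator with a Holling type II functional response, which gives the Rosenzweig-MacArthur predator-prey model. Parameter values are arbitrary illustrations, and forward-Euler integration is used for brevity rather than accuracy.

```python
def logistic_step(n: float, r: float, k: float, dt: float) -> float:
    """One forward-Euler step of dN/dt = r N (1 - N/K)."""
    return n + dt * r * n * (1.0 - n / k)


def holling_type2(n: float, a: float, h: float) -> float:
    """Per-predator consumption rate a N / (1 + a h N) (Holling type II)."""
    return a * n / (1.0 + a * h * n)


def rosenzweig_macarthur_step(n, p, r, k, a, h, e, m, dt):
    """One forward-Euler step of the Rosenzweig-MacArthur predator-prey model."""
    feeding = holling_type2(n, a, h) * p        # prey removed per unit time
    dn = r * n * (1.0 - n / k) - feeding        # logistic prey growth minus predation
    dp = e * feeding - m * p                    # conversion efficiency e, mortality m
    return n + dt * dn, p + dt * dp


def simulate_logistic(n0, r, k, dt, steps):
    n = n0
    for _ in range(steps):
        n = logistic_step(n, r, k, dt)
    return n


def simulate_rm(n0, p0, params, dt, steps):
    n, p = n0, p0
    for _ in range(steps):
        n, p = rosenzweig_macarthur_step(n, p, dt=dt, **params)
    return n, p


# Prey alone approaches K; adding the predator introduces four more parameters (a, h, e, m).
print(simulate_logistic(n0=10.0, r=0.5, k=100.0, dt=0.1, steps=500))
params = dict(r=0.5, k=100.0, a=0.02, h=0.5, e=0.5, m=0.2)
print(simulate_rm(n0=10.0, p0=2.0, params=params, dt=0.01, steps=5000))
```

Each added parameter (attack rate a, handling time h, efficiency e, mortality m) has a biological meaning and must be estimated from data, which is the trade-off the paragraph describes.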

The history of parsimony in modeling derives largely from empirical modeling and its dependence on ‘goodness of fit’. Empirical models are penalized for additional complexity if that complexity does not contribute significantly to the fit of the model (Burnham and Anderson 1998). However, that constraint applies to complexity that is not typically biologically interpretable. For instance, using a 3-parameter polynomial vs. a 2-parameter polynomial may improve fit but adds very little to the interpretation of the results. This can be contrasted with mechanistic models commonly employed in ecological forecasting, where new complexity may improve fit but also contributes to interpretation of the results (Scott et al. 2016). For instance, a well-fit model predicting primary productivity in an estuary that does not consider plankton grazing is not a priori better, because grazing is a significant loss term in real systems. In such a case, a modeler may accept a decline in model fit to ensure that important dynamics are included. As data availability and computational power have increased, so has the attraction of increasing model complexity. Yet neither of these improvements is a good reason to increase model complexity; rather, that decision should be based on the trade-off between parsimony and realism (e.g., ARIES, Martínez-López et al. 2019). What rule should we use to justify model complexity in any particular situation?
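The penalty described by Burnham and Anderson (1998) can be illustrated with Akaike's Information Criterion for least-squares fits, AIC = n ln(RSS/n) + 2k, where k here counts the fitted polynomial coefficients (some conventions add one for the error variance, which shifts every model equally). The sketch below fits a 2-parameter (linear) and a 3-parameter (quadratic) polynomial to a small synthetic data set built from a straight line plus fixed perturbations; the data and the normal-equation solver are illustrative assumptions.

```python
import math


def polyfit(xs, ys, degree):
    """Least-squares polynomial coefficients (lowest order first) via normal equations."""
    size = degree + 1
    # Normal equations (X^T X) beta = X^T y for a polynomial design matrix.
    a = [[sum(x ** (i + j) for x in xs) for j in range(size)] for i in range(size)]
    b = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(size)]
    # Gaussian elimination with partial pivoting.
    for col in range(size):
        pivot = max(range(col, size), key=lambda row: abs(a[row][col]))
        a[col], a[pivot] = a[pivot], a[col]
        b[col], b[pivot] = b[pivot], b[col]
        for row in range(col + 1, size):
            factor = a[row][col] / a[col][col]
            for j in range(col, size):
                a[row][j] -= factor * a[col][j]
            b[row] -= factor * b[col]
    # Back substitution.
    coef = [0.0] * size
    for i in reversed(range(size)):
        coef[i] = (b[i] - sum(a[i][j] * coef[j] for j in range(i + 1, size))) / a[i][i]
    return coef


def rss(xs, ys, coef):
    """Residual sum of squares of a polynomial fit."""
    return sum((y - sum(c * x ** i for i, c in enumerate(coef))) ** 2 for x, y in zip(xs, ys))


def aic_least_squares(rss_value, n, k):
    """AIC = n ln(RSS / n) + 2k for a least-squares fit with k coefficients."""
    return n * math.log(rss_value / n) + 2 * k


# Synthetic data: a straight line plus small fixed perturbations.
xs = [float(x) for x in range(10)]
noise = [0.3, -0.2, 0.1, -0.4, 0.2, 0.0, -0.1, 0.3, -0.3, 0.1]
ys = [1.0 + 2.0 * x + e for x, e in zip(xs, noise)]

results = {}
for degree in (1, 2):
    coef = polyfit(xs, ys, degree)
    k = degree + 1  # number of fitted coefficients
    fit_rss = rss(xs, ys, coef)
    results[k] = (fit_rss, aic_least_squares(fit_rss, len(xs), k))
    print(f"{k} parameters: RSS = {fit_rss:.4f}, AIC = {results[k][1]:.2f}")
```

With these data the quadratic term lowers RSS by about 4%, not enough to offset its penalty of 2, so AIC favors the 2-parameter model. Note that the penalty says nothing about whether the extra term means anything biologically.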

The best approach is to match model complexity to the system and question at hand. Getz (1998) reviewed model complexity, relating it directly to the utility of models as a scientific tool. In that review, he highlighted the need to remain true to scientific principles of hypothesis testing to determine cause, and the value of models for seeking a pattern that is believable and testable. Testability requires simplicity, but this goal is hard to apply in ecosystem science, which deals with large, complex systems. The question is how representative the patterns observed in simplistic models are. The second goal, predicting outcomes, is much more aligned with ecosystem problems but far less aligned with parsimony (Dietze et al. 2018). For example, Bauer et al. (2019) assessed three models on their ability to inform a comparison of fisheries management strategies, but their assessment was based not on information-theoretic comparisons of model accuracy (which reward simplicity) but on the range of disagreement among models in the predicted outcome. The assessment also considered model complexity, in that the models differed both in approach and in the number of important issues each could consider (Bauer et al. 2019). The target was management guidance, and the models were evaluated on how useful their results were for informing management. The authors concluded that complexity is needed where it applies to a known important dynamic in the ecosystem, such as competitive interactions between harvested and non-harvested prey.
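A minimal way to express disagreement among models, in the spirit of the comparison by Bauer et al. (2019), is the range (maximum minus minimum) of predicted outcomes for each management strategy, together with a check of whether the models agree on which strategy is best. The model names, strategies, and relative yields below are hypothetical, and the study's actual metrics may differ.

```python
def disagreement(predictions: dict[str, dict[str, float]]) -> dict[str, float]:
    """Range of predicted outcome across models for each strategy."""
    strategies = next(iter(predictions.values())).keys()
    return {
        s: max(m[s] for m in predictions.values()) - min(m[s] for m in predictions.values())
        for s in strategies
    }


def preferred_strategy(predictions: dict[str, dict[str, float]]) -> dict[str, str]:
    """Strategy each model ranks highest (a larger outcome is assumed to be better)."""
    return {name: max(outcomes, key=outcomes.get) for name, outcomes in predictions.items()}


# Hypothetical relative yields predicted by three models under three strategies.
predictions = {
    "single_species": {"status_quo": 1.00, "reduce_effort": 1.10, "close_area": 1.05},
    "multispecies": {"status_quo": 0.95, "reduce_effort": 1.20, "close_area": 0.90},
    "ecosystem": {"status_quo": 0.90, "reduce_effort": 1.15, "close_area": 1.00},
}
print(disagreement(predictions))
print(preferred_strategy(predictions))
```

Here the models disagree on magnitude (ranges of about 0.10 to 0.15) but agree on the ranking, which is often what management guidance needs.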

The question of how much complexity is enough is a difficult one, but it is reminiscent of other threshold-based questions in computational science. The one common axiom is that you must pass over the threshold to identify it clearly (Hilborn et al. 1995). In modeling, the concept of necessary complexity has been suggested (Biebricher et al. 2012; Cahill and Mackay 2003), and we argue here that in ecological modeling it is necessary to overfit the problem at hand and then critically evaluate how much complexity is needed to get a usable answer. Good examples of questioning simplifying assumptions come from models that describe optimal behavior in animals (Alerstam 2011; Petchey et al. 2008). Response to change is a critical feature of forecasting ecological impacts of change in landscapes, water quality, and food supply. Historically, this type of modeling describes response to change based on the assumption that organisms will always respond to optimize their fitness in any novel situation (Doniol-Valcroze et al. 2011). Yet this approach is contrary to behavioral theory (McNamara and Houston 2009) and assumes an unrealistic level of knowledge regarding the spectrum of available resources (Fulford et al. 2011). Tools exist to predict non-optimal short-term behavior that remain consistent with optimality theory while accounting for more realistic decision making in complex environments. Such tools pave the way for including additional complexity when the problem requires it, such as for animal distributions in heterogeneous landscapes (Rustigian et al. 2003) and mate choice predictions in fish reproductive models (Wooten 1984).
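One generic way to relax the strict-optimality assumption is a logit (softmax) choice rule: an animal chooses patch i with probability proportional to exp(β·w_i), where w_i is the patch's expected fitness payoff and β describes how sharply the animal discriminates among patches. At β = 0 choice is random, and as β grows the rule converges on the optimal choice. This is offered as an illustration only, not as the method of the studies cited above; the payoffs and β values are hypothetical.

```python
import math


def choice_probabilities(payoffs: list[float], beta: float) -> list[float]:
    """Logit (softmax) choice: P(i) = exp(beta * w_i) / sum_j exp(beta * w_j)."""
    best = max(payoffs)  # subtracting the max avoids overflow without changing the result
    weights = [math.exp(beta * (w - best)) for w in payoffs]
    total = sum(weights)
    return [w / total for w in weights]


# Hypothetical expected fitness payoffs of three habitat patches.
payoffs = [1.0, 0.8, 0.5]
for beta in (0.0, 5.0, 50.0):
    print(beta, [round(p, 3) for p in choice_probabilities(payoffs, beta)])
```

At intermediate β the best patch is favored but not used exclusively, so predicted distributions can reflect imperfect knowledge of available resources while still collapsing to the optimal-choice prediction in the limit.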

It is important to realize that the question of necessary complexity can be tied to the choice of model, as with the SWAT application described above (USDA NRCS 2013). This model-based analysis was intended to predict improvements in an ES (water quality) related to a management decision (land use conservation strategies) in the watershed. The model used was a semi-distributed hydrologic model with three effective vertical layers (surface flow, shallow groundwater, and deep groundwater). The conservation questions at hand all focused on surface flow, so loss to groundwater was a secondary element. In addition, the timescale was seasonal to annual, which avoided examination of daily fluctuations in flow. This can be contrasted with fully gridded models (e.g., Abdelnour et al. 2013), which are more detailed and optimized for simulating flow in multiple vertical layers at scales of meters and days, as might be needed to examine episodic storm events or annual forest harvesting strategies rather than longer-term landscape changes. The key is to approach any modeling problem by first critically evaluating the complexity needed to properly characterize the ecosystem and the issue at hand.

3 Communicating Model Uncertainty as Risk

A core objective of modeling applied to management is the meaningful assessment of risk. This is a key element of ES valuation and of trade-off analysis among competing services in the context of their impacts on social well-being (Spence et al. 2018). In the face of uncertainty and complex outcomes, models can and should communicate not just how the system will respond to change but also the probability and consequences of those responses in the context of policy. For example, in fishery management the goal is to reduce the probability of overfishing over a fixed time horizon (~5 years) (SEDAR 2016). Models are used to examine the probability of overfishing under a suite of scenarios for future events (e.g., management limits, climate, recruitment variability), and these results are interpreted in the context of policy-driven risk thresholds (e.g., P(overfishing) < 0.25). Another example is the use of models in toxicological risk assessment to address the probability of adverse events (Forbes and Calow 2012). The challenge for traditional toxicological risk assessment is extrapolating from experimental data at the sub-organism level to population- and ecosystem-level effects. The use of models for toxicological risk assessment is controversial but shows promise as a method for extrapolation in cases where empirical data are unavailable (e.g., novel species) or unobtainable (e.g., ecosystems). The scientific community tends to vilify model uncertainty as a fault of the approach (Dietze et al. 2018), yet uncertainty is an intrinsic quality of complex systems that necessitates a risk-based approach, and the quantification and communication of model uncertainty can contribute to the assessment of risk. For instance, in the Gulf of Mexico Red Snapper example, the Stock Synthesis model is used to project the Overfishing Limit (OFL), the maximum allowable harvest for the stock.
The goal here is to sustain the ES (fishery harvest) by managing fishing pressure in the context of other environmental influences (e.g., climate). Model output uncertainty is therefore also reported and, together with the policy-based level of acceptable risk, used to reduce the maximum harvest to a value called the Acceptable Biological Catch (ABC). This value is the functional maximum used in management. See Lewis et al. (2020) in this volume for additional examples of quantifying uncertainty in specific models.
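A common way to convert OFL uncertainty into a reduced catch is the so-called P* approach: treat the OFL as lognormally distributed and set the ABC at the quantile where the probability that the ABC exceeds the true OFL equals the accepted risk P*. The sketch below assumes a lognormal OFL with a hypothetical median and log-scale standard deviation σ, and uses P* = 0.25 only because a 25% risk level is mentioned in this chapter; the actual Gulf of Mexico control rule may differ in its details.

```python
import math
from statistics import NormalDist


def abc_from_ofl(ofl_median: float, sigma: float, p_star: float) -> float:
    """ABC such that P(true OFL < ABC) = p_star, assuming OFL ~ lognormal(ln(ofl_median), sigma)."""
    z = NormalDist().inv_cdf(p_star)  # negative for p_star < 0.5, so ABC < median OFL
    return ofl_median * math.exp(sigma * z)


# Hypothetical median OFL of 10,000 t under three levels of log-scale uncertainty.
for sigma in (0.1, 0.3, 0.6):
    abc = abc_from_ofl(10_000, sigma, p_star=0.25)
    print(f"sigma = {sigma}: ABC = {abc:,.0f} t ({abc / 10_000:.0%} of median OFL)")
```

Greater uncertainty in the OFL produces a larger buffer between OFL and ABC, so the accepted risk level, not the model alone, determines the final catch limit.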

At the center of risk assessment based on model output is the proper quantification of model uncertainty. Model uncertainty can be parsed into uncertainty in data, parameter values, and model functional choice, and underlying variability in the modeled system. Uncertainty within a model caused by input data and parameter uncertainty has received the most attention (LaDeau 2010; Nielsen et al. 2018). Several common tools exist for uncertainty assessment, including Monte Carlo simulation (Thorson et al. 2015; Zhang et al. 2012) and Bayesian Belief Networks (Schmitt and Brugere 2013). There is a strong need to standardize approaches for quantifying uncertainty so that non-modelers can better evaluate and compare results. Returning to the Gulf of Mexico Red Snapper example, the uncertainty analysis examined not only sensitivity to parameter values but also sensitivity to initial model conditions, time-series dependencies from independent data, and model validation (SEDAR 52). These results were then converted to a cumulative estimate of uncertainty in the OFL value that was used to inform selection of the ABC for the stock.
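Monte Carlo simulation, one of the tools named above, propagates parameter uncertainty by repeatedly drawing parameter values from assumed distributions, running the model for each draw, and summarizing the spread of outputs. The sketch below uses the Schaefer relationship MSY = rK/4 as a simple stand-in model; the lognormal parameter distributions, their values, and the number of draws are hypothetical choices.

```python
import math
import random
import statistics


def monte_carlo_msy(r_median, r_sd_log, k_median, k_sd_log, draws=10_000, seed=1):
    """Sample r and K from lognormal distributions and return the resulting MSY values."""
    rng = random.Random(seed)  # seeded so the example is reproducible
    samples = []
    for _ in range(draws):
        r = rng.lognormvariate(math.log(r_median), r_sd_log)
        k = rng.lognormvariate(math.log(k_median), k_sd_log)
        samples.append(r * k / 4.0)  # Schaefer MSY for this parameter draw
    return samples


msy = monte_carlo_msy(r_median=0.3, r_sd_log=0.2, k_median=100_000, k_sd_log=0.3)
q = statistics.quantiles(msy, n=20)  # cut points at 5%, 10%, ..., 95%
print(f"median {statistics.median(msy):,.0f} t; 90% interval {q[0]:,.0f} to {q[-1]:,.0f} t")
```

In a full assessment the draws would also cover other sources listed above, such as initial conditions and alternative model structures, before the spread is summarized for decision makers.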

The key element for using models in decision making is the translation of model uncertainty into an estimate of risk. Risk has two components that must be considered in assessing model output. First, model output must be matched to a policy-based outcome (e.g., overfishing, harmful algal bloom (HAB) events). Frequently, this is the hardest step, as model output is tied to ecological dynamics rather than to policy-based objectives. For instance, in the case of Gulf Red Snapper the policy-based risk component is standardized to a probability of 25% (SEDAR 2016). This value was derived from high-level discussion of acceptable risk, and all subsequent assessment outcomes must by rule be reported in these terms, which results in an operationalized model outcome. A comparable nutrient model might predict changes in nutrient concentrations through time and space but is less likely to synthesize those outputs into a time- and space-specific risk prediction. Policy makers must be engaged to make model output match the needs of management. In the nutrient case, that could be an estimate of the daily probability of exceeding the Total Maximum Daily Load (TMDL), which can be used to evaluate different strategies for reducing nutrient loading. Alternatively, a model might be adapted to estimate the probability of harmful events given that TMDLs are met, which is useful for assessing different TMDL values. In either case, model uncertainty, the second component, can then be evaluated as part of estimating risk for the chosen outcome. This approach has been well developed in fishery management, where p(overfishing) has been defined so that its incorporation into assessment models is straightforward. The same cannot be said for nutrient load management or habitat management, but this is largely a process limitation: the tools exist, but a mutually acceptable process for including them does not.
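As a sketch of operationalizing nutrient-model output, the code below turns simulated daily loads into the fraction of days on which a load limit is exceeded and compares that risk between two management scenarios. The load limit, scenario names, and load distributions are hypothetical, and lognormal daily loads are an assumption made only for illustration; a real application would use the nutrient model's own output.

```python
import math
import random


def exceedance_probability(daily_loads: list[float], limit: float) -> float:
    """Fraction of simulated days on which the load exceeds the limit."""
    return sum(load > limit for load in daily_loads) / len(daily_loads)


def simulate_daily_loads(median_load: float, sd_log: float, days: int, seed: int) -> list[float]:
    """Hypothetical stand-in for nutrient-model output: lognormal daily loads (kg/day)."""
    rng = random.Random(seed)
    return [rng.lognormvariate(math.log(median_load), sd_log) for _ in range(days)]


LIMIT_KG_PER_DAY = 1_000.0  # hypothetical TMDL
scenarios = {
    "current practice": simulate_daily_loads(800.0, 0.4, days=3650, seed=7),
    "reduced loading": simulate_daily_loads(600.0, 0.4, days=3650, seed=7),
}
for name, loads in scenarios.items():
    print(f"{name}: P(exceed) = {exceedance_probability(loads, LIMIT_KG_PER_DAY):.3f}")
```

Using the same random seed for both scenarios gives them common random draws, so the difference in exceedance reflects the management change rather than sampling noise.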

4 Model Temporal and Spatial Scale

Model-based analyses are highly dependent on the choice of temporal and spatial scale and resolution. Models typically have an optimal resolution. For example, SWAT is a semi-distributed model designed to work best at medium resolution (seasons, km²; e.g., Wellen et al. 2015), whereas ordinary differential equation (ODE) models are designed to work at high temporal resolution but are aggregated over large spatial areas (e.g., Xu et al. 2011). The choice of spatial and temporal resolution should be tied to the management question at hand, not to the model. For instance, a fishery assessment of highly migratory coastal fishes does not require meter-scale resolution. In contrast, assessment of marine protected areas as a tool for increasing fishery recruitment may in fact require a high spatial resolution that can capture habitat features important to nursery production (e.g., Fulford et al. 2011). So the choice to use a model also includes choices of scale that will affect the validity of the results. See Lewis et al. (2020) in this volume for additional examples of models optimized for particular spatial and temporal scales.
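The consequence of a coarse temporal resolution can be seen with a toy daily flow series that contains a short storm pulse: averaging to seasonal values dilutes the pulse that a daily-resolution model would need to resolve. The flow values and the 90-day "season" are hypothetical.

```python
def seasonal_means(daily: list[float], season_length: int = 90) -> list[float]:
    """Average a daily series over consecutive, non-overlapping seasons."""
    return [
        sum(daily[i:i + season_length]) / len(daily[i:i + season_length])
        for i in range(0, len(daily), season_length)
    ]


# Hypothetical daily discharge (m^3/s): steady base flow with a 3-day storm pulse.
daily_flow = [10.0] * 180
for day, flow in zip(range(100, 103), (60.0, 120.0, 40.0)):
    daily_flow[day] = flow

print("daily peak:", max(daily_flow))
print("seasonal means:", seasonal_means(daily_flow))
```

The seasonal series shows only a modest (about 21%) rise in mean flow, while the daily series shows a 12-fold peak; which view is relevant depends on the management question.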

Considering both spatial and temporal variability in the same model is relatively novel, because simultaneously quantifying spatial and temporal uncertainty is challenging (Jager et al. 2005). For instance, in landscape management a wide variety of models with different spatial and temporal resolutions exist for examining the impact of land use practices on nutrient loading into water bodies. Historically, management has focused on loading rates, which do not require high spatial resolution (Hashemi et al. 2016). Yet the impact of land use and the relative importance of surface flow vs. groundwater have greatly increased the need to examine nutrient loading at small spatial scales and in conjunction with short-term episodic events (Abdelnour et al. 2013). Models have had to keep up with this need. A potentially fruitful solution to scale issues is ensemble modeling or end-to-end modeling (Heymans et al. 2018; Lewis et al. 2020; Serpetti et al. 2017). Ensemble modeling was described earlier; end-to-end modeling differs in that models are linked sequentially, so that output from one model is input for another. Both approaches use multiple models and therefore open the door to examining multiple temporal and spatial scales for a single system or problem. A good example of the application of multiple models to ES assessment is the ensemble model used to explore eutrophication problem areas in the Baltic Sea (Skogen et al. 2014). In this case, multiple spatial and temporal scales were involved in the collective analysis of eutrophication effects resulting from the interaction of nutrient loading and climatic forcing, generating common guidance for decision making. The result was the identification of ‘problem areas’ for eutrophication at several different scales, which makes the information more useful for decision making.
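
End-to-end modeling links models so that one model's output becomes the next model's input. A minimal sketch of that structure uses three hypothetical component models (land use to nitrogen load, load to chlorophyll, chlorophyll to a hypoxia index) whose functional forms and coefficients are invented for illustration.

```python
from typing import Callable

Model = Callable[[float], float]


def watershed_model(fertilized_fraction: float) -> float:
    """Hypothetical: annual nitrogen load (t/yr) from the fraction of fertilized land."""
    return 500.0 + 4_000.0 * fertilized_fraction


def estuary_model(nitrogen_load: float) -> float:
    """Hypothetical: mean chlorophyll-a (ug/L), saturating with nitrogen load."""
    return 40.0 * nitrogen_load / (nitrogen_load + 2_000.0)


def hypoxia_index(chlorophyll: float) -> float:
    """Hypothetical: 0-1 index of hypoxia risk increasing linearly with chlorophyll."""
    return min(1.0, max(0.0, (chlorophyll - 5.0) / 20.0))


def run_chain(models: list[Model], initial_input: float) -> list[float]:
    """Pass each model's output to the next model and record every intermediate value."""
    values = [initial_input]
    for model in models:
        values.append(model(values[-1]))
    return values


chain = [watershed_model, estuary_model, hypoxia_index]
for fraction in (0.2, 0.4):
    print(fraction, [round(v, 2) for v in run_chain(chain, fraction)])
```

Because errors propagate down the chain, each link must operate at a compatible scale (for example, annual loads cannot simply drive a model that needs daily inputs), which is the scale issue raised in this section.
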
As with other forms of complexity, spatial and temporal scale must be chosen deliberately to match the management problem of interest. This can be inhibited by fidelity to a particular model or by limitations of available data for calibration and validation. Optimally, these three elements (model, problem, data) will be examined a priori to decide what can be done, so that the output cannot be criticized for operating at the wrong scale. This is another opportunity for stakeholder engagement prior to any model-based analysis.

5 Connecting Science and Policy Objectives in Models

Models can be powerful tools for synthesizing scientific information to inform complex decision making if we can identify steps to operationalize model output (Box 2). Yet, for ecosystem-based management, the use of models to justify decisions has not reached its full potential. If model-based assessments are to more fully inform decision making for ecosystem-based management, then modelers must meet the challenges described in this chapter by making models more transparent and by communicating model output in the language of policy (e.g., ICES WGSAM 'Key run' model designation; https://www.ices.dk/community/groups/Pages/WGSAM.aspx). This will be greatly facilitated by early engagement with policy makers and other stakeholders in model choice and development, as well as by formal processes for communicating uncertainty as a tool for risk management.

Box 2 Future Needs and Directions for Model-Based Decision Making

1. Standardize the process, not the model: no model fits all problems, and we should step away from promoting specific tools toward promoting methods for tool selection. This includes raising awareness of available tools and setting some standards for their evaluation.
2. Engage with policy makers early, not late, in the model development process.
3. Develop clear decision criteria that can be readily translated to and from model output.
4. Communicate model output together with its uncertainty, treated not as a limitation of the model but as a property of the decision landscape that is useful for risk analysis.
5. Develop success stories based on ensemble modeling and provable short-term forecasting.

For models to be more widely used in decision making, scientists should also resist the temptation to create new models. Instead, the focus should be on evaluating current models and providing practical guidance on model selection for management decisions. Model libraries such as GULF TREE (http://www.gulftree.org/) and the EPA EcoService Models Library (https://esml.epa.gov/) provide search engines to help managers find the right tool for a specific problem. If new models are needed, decision makers should be involved in the development process and their input integrated into the modeling process (O’Higgins et al. 2020). Multiple model types can also be combined to improve usefulness, pairing predictive models with utility models such as neutral models, which provide a benchmark for model performance, and Bayesian Belief Networks, which can integrate qualitative information to make probabilistic predictions. Ensemble modeling holds particular promise for handling uncertainty, as it allows for multiple perspectives and the interactive exploitation of the strengths of multiple models (Bauer et al. 2019).
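To illustrate how a neutral model can benchmark performance and how an ensemble can carry uncertainty, the sketch below scores each member of a hypothetical ensemble against a persistence (no-change) forecast, used here as the neutral model, and then forms a skill-weighted ensemble mean and spread. Persistence as the neutral model, the skill score, and the zero-weight rule are assumptions chosen for illustration; published ensemble approaches (e.g., Bauer et al. 2019; Spence et al. 2018) use more formal statistical frameworks.

```python
import statistics


def mse(pred, obs):
    """Mean squared error between paired predictions and observations."""
    return sum((p - o) ** 2 for p, o in zip(pred, obs)) / len(obs)


def skill_vs_neutral(pred, obs, neutral):
    """Skill score 1 - MSE(model) / MSE(neutral); positive means the model beats the benchmark."""
    return 1.0 - mse(pred, obs) / mse(neutral, obs)


def weighted_ensemble(hindcasts, obs, forecasts):
    """Skill-weighted ensemble forecast using persistence as the neutral model.

    hindcasts: dict of member name -> one-step-ahead hindcasts aligned with obs[1:]
    obs: observed series (at least two values)
    forecasts: dict of member name -> next-step forecast (same keys as hindcasts)
    """
    neutral = obs[:-1]  # persistence benchmark: next value equals the current value
    target = obs[1:]
    weights = {}
    for name, pred in hindcasts.items():
        # Members that do no better than the neutral model get zero weight
        weights[name] = max(skill_vs_neutral(pred, target, neutral), 0.0)
    total = sum(weights.values())
    if total == 0:
        raise ValueError("no ensemble member outperforms the neutral model")
    mean = sum(w * forecasts[name] for name, w in weights.items()) / total
    kept = [forecasts[name] for name, w in weights.items() if w > 0]
    spread = statistics.pstdev(kept)  # spread among the retained members
    return weights, mean, spread


if __name__ == "__main__":
    observed = [10.0, 12.0, 11.0, 13.0, 14.0]  # hypothetical observations
    hind = {
        "A": [12.0, 11.5, 12.5, 14.0],
        "B": [11.0, 12.0, 12.0, 13.0],
        "C": [14.0, 9.0, 15.0, 12.0],
    }
    fc = {"A": 15.0, "B": 14.0, "C": 10.0}
    w, m, s = weighted_ensemble(hind, observed, fc)
    print(w, round(m, 2), round(s, 2))
```

In the example data, model C performs worse than simply carrying the last observation forward, so it receives zero weight; the ensemble forecast (about 14.6) combines models A and B in proportion to their skill, and the spread among retained members gives a crude first indication of structural uncertainty. Zeroing out members that fail the neutral benchmark is one simple design choice; formal alternatives weight members by likelihood or model discrepancy.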

There is also a need to develop proven track records for model-based assessments, which can only be built through application to practical problems. This is best achieved through short-term forecasting exercises (Dietze et al. 2018) in real-world situations. Such exercises not only demonstrate model abilities but also provide a testbed for feedback and model improvement. Two key examples of this approach are weather prediction (Bengtsson et al. 2019; Wu et al. 2019) and fishery stock assessment (GMFMC 2018). Future directions in model development for predicting ES should therefore include identifying opportunities to engage in short-term forecasting in partnership with decision makers. Ultimately, the use of models in ecological decision making requires acceptance from policy makers and the public, and that is where the most effort is needed.
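The forecast, observe, and update cycle of iterative near-term forecasting (Dietze et al. 2018) can be sketched in a few lines. The example below is a deliberately minimal, hypothetical illustration: it assumes a one-parameter exponential growth model and re-estimates the growth rate each time a new observation arrives, recording the forecast errors that would make up the model's track record. Operational systems in weather prediction or stock assessment use far richer data assimilation; the model, the update rule, and the function name here are assumptions for illustration only.

```python
import math


def iterative_forecast(observations, prior_growth=1.0):
    """Forecast, observe, score, and update over a time series.

    Hypothetical model: next value = current value * growth.
    After each new observation the growth parameter is re-estimated as the
    geometric mean of all observed step-to-step ratios.
    Returns a list of (step, forecast, error) records and the final growth estimate.
    """
    if any(x <= 0 for x in observations):
        raise ValueError("observations must be positive for a log-ratio update")
    growth = prior_growth
    log_ratios = []
    records = []
    for t in range(len(observations) - 1):
        current, new_obs = observations[t], observations[t + 1]
        forecast = current * growth                     # 1. issue a one-step forecast
        error = new_obs - forecast                      # 2. score it once the data arrive
        records.append((t + 1, forecast, error))
        log_ratios.append(math.log(new_obs / current))  # 3. assimilate the new observation
        growth = math.exp(sum(log_ratios) / len(log_ratios))  # 4. update the parameter
    return records, growth


if __name__ == "__main__":
    series = [100.0, 110.0, 121.0, 133.1]  # hypothetical monitoring data
    history, final_growth = iterative_forecast(series)
    for step, fc, err in history:
        print(f"step {step}: forecast {fc:.1f}, error {err:+.1f}")
    print(f"final growth estimate: {final_growth:.3f}")
```

With a series that grows by 10% per step, the first forecast misses by 10 units because the prior assumes no growth; after one observation the growth estimate is updated to about 1.1 and later forecast errors shrink to near zero. In practice, the accumulated error record is what builds (or erodes) confidence in a model over time.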

References

Abdelnour, A., McKane, R. B., Stieglitz, M., Pan, F., & Cheng, Y. (2013). Effects of harvest on carbon and nitrogen dynamics in a Pacific Northwest forest catchment. Water Resources Research, 49, 1292–1313.

Alerstam, T. (2011). Optimal bird migration revisited. Journal für Ornithologie, 152, 5–23.

Austen, M. C., Andersen, P., Armstrong, C., Doring, R., Hynes, S., Levrel, H., Oinonen, S., & Ressurreicao, A. (2019). Valuing marine ecosystems - taking into account the value of ecosystem benefits in the blue economy. In J. Coopman, J. J. Heymans, P. Kellett, P. A. Munoz, V. French, & B. Alexander (Eds.), Future science brief 5 of the European marine board (p. 32). Ostend, Belgium: European Marine Board.

Bagstad, K. J., Villa, F., Batker, D., Harrison-Cox, J., Voigt, B., & Johnson, G. W. (2014). From theoretical to actual ecosystem services: Mapping beneficiaries and spatial flows in ecosystem service assessments. Ecology and Society, 19(2), 64.

Bauer, B., Horbowy, J., Rahikainen, M., Kulatska, N., Muller-Karulis, B., Tomczak, M. T., & Bartolino, V. (2019). Model uncertainty and simulated multi-species fisheries management advice in the Baltic Sea. PLoS One, 14, e0211320.

Bengtsson, L., Bao, J. W., Pegion, P., Penland, C., Michelson, S., & Whitaker, J. (2019). A model framework for stochastic representation of uncertainties associated with physical processes in NOAA’s next generation global prediction system (NGGPS). Monthly Weather Review, 147, 893–911.

Biebricher, A., Havnes, O., & Bast, R. (2012). On the necessary complexity of modeling of the polar mesosphere summer echo overshoot effect. Journal of Plasma Physics, 78, 225–239.

Burnham, K. P., & Anderson, D. R. (1998). Model selection and inference: A practical information-theoretic approach. New York, NY: Springer.

Cahill, T. M., & Mackay, D. (2003). Complexity in multimedia mass balance models: When are simple models adequate and when are more complex models necessary? Environmental Toxicology and Chemistry, 22(6), 1404–1412.

Clark, J. S., & Gelfand, A. E. (2006). A future for models and data in environmental science. Trends in Ecology & Evolution, 21, 375–380.

Craft, C., Clough, J., Ehman, J., Joye, S., Park, R., Pennings, S., Guo, H. Y., & Machmuller, M. (2009). Forecasting the effects of accelerated sea-level rise on tidal marsh ecosystem services. Frontiers in Ecology and the Environment, 7, 73–78.

Dietze, M. C., Fox, A., Beck-Johnson, L. M., Betancourt, J. L., Hooten, M. B., Jarnevich, C. S., Keitt, T. H., Kenney, M. A., Laney, C. M., Larsen, L. G., Loescher, H. W., Lunch, C. K., Pijanowski, B. C., Randerson, J. T., Read, E. K., Tredennick, A. T., Vargas, R., Weathers, K. C., & White, E. P. (2018). Iterative near-term ecological forecasting: Needs, opportunities, and challenges. Proceedings of the National Academy of Sciences of the United States of America, 115, 1424–1432.

Doniol-Valcroze, T., Lesage, V., Giard, J., & Michaud, R. (2011). Optimal foraging theory predicts diving and feeding strategies of the largest marine predator. Behavioral Ecology, 22(4), 880–888.

Forbes, V. E., & Calow, P. (2012). Promises and problems for the new paradigm for risk assessment and an alternative approach involving predictive systems models. Environmental Toxicology and Chemistry, 31, 2663–2671.

Fulford, R. S., Peterson, M. S., & Grammer, P. O. (2011). An ecological model of the habitat mosaic in estuarine nursery areas: Part I-Interaction of dispersal theory and habitat variability in describing juvenile fish distributions. Ecological Modelling, 222, 3203–3215.

Fulford, R. S., Yoskowitz, D., Russell, M., Dantin, D. D., & Rogers, J. (2015). Habitat and recreational fishing opportunity in Tampa Bay: Linking ecological and ecosystem services to human beneficiaries. Ecosystem Services, 17, 64–74.

Fulton, E. A. (2010). Approaches to end-to-end ecosystem models. Journal of Marine Systems, 81, 171–183.

Getz, W. M. (1998). An introspection on the art of modeling in population ecology. BioScience, 48, 540–552.

GMFMC. (2018). SEDAR 52 stock assessment report: Gulf of Mexico red snapper. North Charleston, SC: Southeast Data Assessment and Review (SEDAR).

Griffith, G. P., & Fulton, E. A. (2014). New approaches to simulating the complex interaction effects of multiple human impacts on the marine environment. ICES Journal of Marine Science, 71, 764–774.

Hashemi, F., Olesen, J. E., Dalgaard, T., & Borgesen, C. D. (2016). Review of scenario analyses to reduce agricultural nitrogen and phosphorus loading to the aquatic environment. Science of the Total Environment, 573, 608–626.

Heymans, J. J., Coll, M., Link, J. S., Mackinson, S., Steenbeek, J., Walters, C., & Christensen, V. (2016). Best practice in Ecopath with Ecosim food-web models for ecosystem-based management. Ecological Modelling, 331, 173–184.

Heymans, J. J., Skogen, M., Schrum, C., & Solidoro, C. (2018). Enhancing Europe’s capability in marine ecosystem modelling for societal benefit. In K. E. Larkin, J. Coopman, P. A. Munoz, P. Kellett, C. Simon, C. Rundt, C. Viegas, & J. J. Heymans (Eds.), Future science brief 4 of the European marine board (p. 32). Ostend, Belgium: European Marine Board.

Hilborn, R., Walters, C. J., & Ludwig, D. (1995). Sustainable exploitation of renewable resources. Annual Review of Ecology and Systematics, 26, 45–67.

Jager, H. I., King, A. W., Schumaker, N. H., Ashwood, T. L., & Jackson, B. L. (2005). Spatial uncertainty analysis of population models. Ecological Modelling, 185, 13–27.

LaDeau, S. (2010). Advances in modeling highlight a tension between analytical accuracy and accessibility. Ecology, 91, 3488–3492.

Lewis, N. S., Marois, D. E., Littles, C. J., & Fulford, R. S. (2020). Projecting changes to coastal and estuarine ecosystem goods and services - models and tools. In T. O’Higgins, M. Lago, & T. H. DeWitt (Eds.), Ecosystem-based management, ecosystem services and aquatic biodiversity: Theory, tools and applications (pp. 235–254). Cham: Springer.

Martínez-López, J., Bagstad, K. J., Balbi, S., Magrach, A., Voigt, B., Athanasiadis, I., Pascual, M., Willcock, S., & Villa, F. (2019). Towards globally customizable ecosystem service models. Science of the Total Environment, 650, 2325–2336.

May, R. M. (1974a). Biological populations with nonoverlapping generations—Stable points, stable cycles, and chaos. Science, 186, 645–647.

May, R. M. (1974b). Patterns of species abundance—Mathematical aspects of dynamics of populations. SIAM Review, 16, 585–585.

McNamara, J. M., & Houston, A. I. (2009). Integrating function and mechanism. Trends in Ecology & Evolution, 24, 670–675.

Methot, R. D. (2009). Stock assessment: Operational models in support of fisheries management. In R. J. Beamish & R. D. Methot (Eds.), The future of fisheries science in North America (Fish and fisheries science series, p. 137). Berlin: Springer.

Nielsen, J. R., Thunberg, E., Holland, D. S., Schmidt, J. O., Fulton, E. A., Bastardie, F., Punt, A. E., Allen, I., Bartelings, H., Bertignac, M., Bethke, E., Bossier, S., Buckworth, R., Carpenter, G., Christensen, A., Christensen, V., Da-Rocha, J. M., Deng, R., Dichmont, C., Doering, R., Esteban, A., Fernandes, J. A., Frost, H., Garcia, D., Gasche, L., Gascuel, D., Gourguet, S., Groeneveld, R. A., Guillen, J., Guyader, O., Hamon, K. G., Hoff, A., Horbowy, J., Hutton, T., Lehuta, S., Little, L. R., Lleonart, J., Macher, C., Mackinson, S., Mahevas, S., Marchal, P., Mato-Amboage, R., Mapstone, B., Maynou, F., Merzereaud, M., Palacz, A., Pascoe, S., Paulrud, A., Plaganyi, E., Prellezo, R., van Putten, E. I., Quaas, M., Ravn-Jonsen, L., Sanchez, S., Simons, S., Thebaud, O., Tomczak, M. T., Ulrich, C., van Dijk, D., Vermard, Y., Voss, R., & Waldo, S. (2018). Integrated ecological-economic fisheries models-evaluation, review and challenges for implementation. Fish and Fisheries, 19, 1–29.

O’Higgins, T. G., Culhane, F., O’Dwyer, B., Robinson, L., & Lago, M. (2020). Combining methods to establish potential management measures for invasive species Elodea nuttallii in Lough Erne, Northern Ireland. In T. O’Higgins, M. Lago, & T. H. DeWitt (Eds.), Ecosystem-based management, ecosystem services and aquatic biodiversity: Theory, tools and applications (pp. 445–460). Cham: Springer.

Petchey, O. L., Beckerman, A. P., Riede, J. O., & Warren, P. H. (2008). Size, foraging, and food web structure. Proceedings of the National Academy of Sciences of the United States of America, 105, 4191–4196.

Piroddi, C., Coll, M., Liquete, C., Macias, D., Greer, K., Buszowski, J., Steenbeek, J., Danovaro, R., & Christensen, V. (2017). Historical changes of the Mediterranean Sea ecosystem: Modelling the role and impact of primary productivity and fisheries changes over time. Scientific Reports, 7, 44491.

Redhead, J. W., May, L., Oliver, T. H., Hamel, I., Hamel, P., Sharp, R., & Bullock, J. M. (2018). National scale evaluation of the InVEST nutrient retention model in the United Kingdom. Science of the Total Environment, 610, 666–677.

Rose, K. A., Allen, J. I., Artioli, Y., Barange, M., Blackford, J., Carlotti, F., Cropp, R., Daewel, U., Edwards, K., Flynn, K., Hill, S. L., HilleRisLambers, R., Huse, G., Mackinson, S., Megrey, B., Moll, A., Rivkin, R., Salihoglu, B., Schrum, C., Shannon, L., Shin, Y. J., Smith, S. L., Smith, C., Solidoro, C., John, M. S., & Zhou, M. (2010). End-to-end models for the analysis of marine ecosystems: Challenges, issues, and next steps. Marine and Coastal Fisheries, 2, 115–130.

Rosenzweig, M. L., & MacArthur, R. H. (1963). Graphical representation and stability conditions of predator-prey interactions. The American Naturalist, 97, 209–223.

Rustigian, H. L., Santelmann, M. V., & Schumaker, N. H. (2003). Assessing the potential impacts of alternative landscape designs on amphibian population dynamics. Landscape Ecology, 18, 65–81.

Sanchirico, J. N., & Mumby, P. J. (2009). Mapping ecosystem functions to the valuation of ecosystem services: Implications of species-habitat associations for coastal land-use decisions. Theoretical Ecology, 2, 67–77.

Schmitt, L. H. M., & Brugere, C. (2013). Capturing ecosystem services, stakeholders’ preferences and trade-offs in coastal aquaculture decisions: A Bayesian belief network application. PLoS One, 8, e75956.

Scott, E., Serpetti, N., Steenbeek, J., & Heymans, J. J. (2016). A stepwise fitting procedure for automated fitting of Ecopath with Ecosim models. SoftwareX, 5, 25–30.

SEDAR. (2016, September). Southeast data assessment and review—Data best practices: Living document (p. 115). North Charleston, SC: SEDAR. Retrieved from http://sedarweb.org/sedar-data-best-practices.

Serpetti, N., Baudron, A. R., Burrows, M. T., Payne, B. L., Helaouet, P., Fernandes, P. G., & Heymans, J. J. (2017). Impact of ocean warming on sustainable fisheries management informs the ecosystem approach to fisheries. Scientific Reports, 7.

Skogen, M. D., Eilola, K., Hansen, J. L. S., Meier, H. E. M., Molchanov, M. S., & Ryabchenko, V. A. (2014). Eutrophication status of the North Sea, Skagerrak, Kattegat and the Baltic Sea in present and future climates: A model study. Journal of Marine Systems, 132, 174–184.

Spence, M. A., Blanchard, J. L., Rossberg, A. G., Heath, M. R., Heymans, J. J., Mackinson, S., Serpetti, N., Speirs, D. C., Thorpe, R. B., & Blackwell, P. G. (2018). A general framework for combining ecosystem models. Fish and Fisheries, 19, 1031–1042. https://doi.org/10.1111/faf.12310.

Thorson, J. T., Hicks, A. C., & Methot, R. D. (2015). Random effect estimation of time-varying factors in stock synthesis. ICES Journal of Marine Science, 72, 178–185.

Trisurat, Y. (2013). Ecological assessment: Assessing condition and trend of ecosystem service of Thadee watershed, Nakhon Si Thammarat Province. Bangkok: ECO-BEST Project, Faculty of Forestry, Kasetsart University.

USDA-NRCS. (2013). Impacts of conservation adoption on cultivated acres of cropland in the Chesapeake Bay region 2003–06 to 2011 (p. 113). United States Department of Agriculture, Natural Resources Conservation Service. https://www.nrcs.usda.gov/wps/portal/nrcs/detail/national/technical/nra/ceap/na/?cid=stelprdb1240074.

Wellen, C., Kamran-Disfani, A. R., & Arhonditsis, G. B. (2015). Evaluation of the current state of distributed watershed nutrient water quality modeling. Environmental Science & Technology, 49, 3278–3290.

Whipple, S. J. (1999). Analysis of ecosystem structure and function: Extended path and flow analysis of a steady-state oyster reef model. Ecological Modelling, 114, 251–274.

Wootton, R. J. (1984). A functional biology of sticklebacks. New York, NY: Springer.

Wu, T. J., Min, J. Z., & Wu, S. (2019). A comparison of the rainfall forecasting skills of the WRF ensemble forecasting system using SPCPT and other cumulus parameterization error representation schemes. Atmospheric Research, 218, 160–175.

Xu, S., Chen, Z., Li, S., & He, P. (2011). Modeling trophic structure and energy flows in a coastal artificial ecosystem using mass-balance Ecopath model. Estuaries and Coasts, 34, 351–363.

Zhang, L., Yu, G., Gu, F., He, H., Zhang, L., & Han, S. (2012). Uncertainty analysis of modeled carbon fluxes for a broad-leaved Korean pine mixed forest using a process-based ecosystem model. Journal of Forest Research, 17, 268–282.

Zvoleff, A., & An, L. (2014). Analyzing human-landscape interactions: Tools that integrate. Environmental Management, 53, 94–111.

Disclaimer

This chapter has been subjected to Agency review and has been approved for publication. The views expressed in this paper are those of the author(s) and do not necessarily reflect the views or policies of the U.S. Environmental Protection Agency.


Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2020 The Author(s)

About this chapter

Cite this chapter

Fulford, R.S., Heymans, S.J.J., Wu, W. (2020). Mathematical Modeling for Ecosystem-Based Management (EBM) and Ecosystem Goods and Services (EGS) Assessment. In: O’Higgins, T., Lago, M., DeWitt, T. (eds) Ecosystem-Based Management, Ecosystem Services and Aquatic Biodiversity. Springer, Cham. https://doi.org/10.1007/978-3-030-45843-0_14


DOI: https://doi.org/10.1007/978-3-030-45843-0_14


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-45842-3

Online ISBN: 978-3-030-45843-0

eBook Packages: Biomedical and Life Sciences, Biomedical and Life Sciences (R0)