Abstract

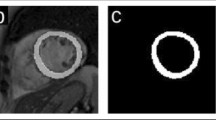

Normalization layers are essential in a Deep Convolutional Neural Network (DCNN), and various normalization methods have been proposed. The statistics used to normalize the feature maps can be computed at the batch, channel, or instance level. However, in most existing methods, the normalization applied at each layer is fixed. Batch-Instance Normalization (BIN) is one of the first methods to combine two different normalization methods and thereby achieve diverse normalization across layers. However, BIN has two potential issues: first, the Clip function is not differentiable at input values of 0 and 1; second, the combined feature map does not follow a normalized distribution, which is harmful for signal propagation in a DCNN. In this paper, an Instance-Layer Normalization (ILN) layer is proposed that uses the Sigmoid function for the feature map combination and cascades group normalization after it. The performance of ILN is validated on image segmentation of the Right Ventricle (RV) and Left Ventricle (LV), using U-Net as the network architecture. The results show that the proposed ILN outperforms traditional and popular normalization methods, with noticeable accuracy improvements in most validations, supporting its effectiveness.
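The combination described in the abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the abstract only states that a Sigmoid gate combines the feature maps and that group normalization is cascaded afterwards. The per-channel gate parameter `rho`, the NHWC layout, the group count, and the omission of learnable scale and shift terms are all assumptions made here for illustration, and the name `iln_sketch` is hypothetical.

```python
import numpy as np


def _normalize(x, axes, eps=1e-5):
    # Subtract the mean and divide by the standard deviation over `axes`.
    mean = x.mean(axis=axes, keepdims=True)
    var = x.var(axis=axes, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def instance_norm(x, eps=1e-5):
    # x has shape (N, H, W, C); statistics per sample and per channel.
    return _normalize(x, (1, 2), eps)


def layer_norm(x, eps=1e-5):
    # Statistics per sample, over all spatial positions and channels.
    return _normalize(x, (1, 2, 3), eps)


def group_norm(x, num_groups, eps=1e-5):
    # Statistics per sample and per group of channels.
    n, h, w, c = x.shape
    grouped = x.reshape(n, h, w, num_groups, c // num_groups)
    grouped = _normalize(grouped, (1, 2, 4), eps)
    return grouped.reshape(n, h, w, c)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def iln_sketch(x, rho, num_groups=4):
    # Assumption: `rho` is an unconstrained per-channel parameter of shape (C,).
    # The Sigmoid maps it smoothly into (0, 1), so the gate is differentiable
    # everywhere, unlike a Clip to [0, 1].
    gate = sigmoid(rho)
    mixed = gate * instance_norm(x) + (1.0 - gate) * layer_norm(x)
    # The weighted sum is generally not unit-variance, so a cascaded group
    # normalization re-normalizes the combined feature map.
    return group_norm(mixed, num_groups)
```

For example, calling `iln_sketch(x, np.zeros(16))` on an input of shape `(2, 8, 8, 16)` weights the two normalized maps equally (the gate is 0.5 for every channel) and returns an array of the same shape whose channel groups have approximately zero mean and unit variance.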




Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Zhou, XY., Li, P., Wang, ZY., Yang, GZ. (2020). U-Net Training with Instance-Layer Normalization. In: Li, Q., Leahy, R., Dong, B., Li, X. (eds) Multiscale Multimodal Medical Imaging. MMMI 2019. Lecture Notes in Computer Science, vol 11977. Springer, Cham. https://doi.org/10.1007/978-3-030-37969-8_13


DOI: https://doi.org/10.1007/978-3-030-37969-8_13

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-37968-1

Online ISBN: 978-3-030-37969-8

eBook Packages: Computer Science, Computer Science (R0)