Abstract

Synchronous Kleene algebra (SKA), an extension of Kleene algebra (KA), was proposed by Prisacariu as a tool for reasoning about programs that may execute synchronously, i.e., in lock-step. We provide a countermodel witnessing that the axioms of SKA are incomplete w.r.t. its language semantics, by exploiting a lack of interaction between the synchronous product operator and the Kleene star. We then propose an alternative set of axioms for SKA, based on Salomaa’s axiomatisation of regular languages, and show that these provide a sound and complete characterisation w.r.t. the original language semantics.

This work was partially supported by ERC Starting Grant ProFoundNet (679127), a Leverhulme Prize (PLP–2016–129) and a Marie Curie Fellowship (795119). The first author conducted part of this work at Centrum Wiskunde & Informatica, Amsterdam.

Notes

1. Unlike [26], we include H in the syntax; one can prove that for any \(e \in \mathcal {T}_{\mathsf {F}_1}\) it holds that \(H(e) \equiv 0\) or \(H(e) \equiv 1\), and hence any occurrence of H can be removed from e. This is what allows us to apply the completeness result from op. cit. here.

2. Note that for the synchronous language model we know the least fixpoint axioms are sound as well (Lemma 3.6). However, there might be other \(\mathsf {SF}_1 \text {-models}\) where the least fixpoint axioms are not valid.

References

Antimirov, V.M.: Partial derivatives of regular expressions and finite automaton constructions. Theor. Comput. Sci. 155(2), 291–319 (1996). https://doi.org/10.1016/0304-3975(95)00182-4

Backhouse, R.: Closure algorithms and the star-height problem of regular languages. PhD thesis, University of London (1975)

Baier, C., Sirjani, M., Arbab, F., Rutten, J.: Modeling component connectors in reo by constraint automata. Sci. Comput. Program. 61(2), 75–113 (2006). https://doi.org/10.1016/j.scico.2005.10.008

Bergstra, J.A., Klop, J.W.: Process algebra for synchronous communication. Inf. Control 60(1–3), 109–137 (1984). https://doi.org/10.1016/S0019-9958(84)80025-X

Boffa, M.: Une remarque sur les systèmes complets d’identités rationnelles. ITA 24, 419–428 (1990)

Bonchi, F., Pous, D.: Checking NFA equivalence with bisimulations up to congruence. In: Proceedings of Principles of Programming Languages (POPL), pp. 457–468 (2013). https://doi.org/10.1145/2429069.2429124

Broda, S., Cavadas, S., Ferreira, M., Moreira, N.: Deciding synchronous Kleene algebra with derivatives. In: Drewes, F. (ed.) CIAA 2015. LNCS, vol. 9223, pp. 49–62. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-22360-5_5

Brzozowski, J.A.: Derivatives of regular expressions. J. ACM 11(4), 481–494 (1964). https://doi.org/10.1145/321239.321249

Conway, J.H.: Regular Algebra and Finite Machines. Chapman and Hall Ltd., London (1971)

Foster, S., Struth, G.: On the fine-structure of regular algebra. J. Autom. Reason. 54(2), 165–197 (2015). https://doi.org/10.1007/s10817-014-9318-9

Hayes, I.J.: Generalised rely-guarantee concurrency: an algebraic foundation. Formal Asp. Comput. 28(6), 1057–1078 (2016). https://doi.org/10.1007/s00165-016-0384-0

Hayes, I.J., Colvin, R.J., Meinicke, L.A., Winter, K., Velykis, A.: An algebra of synchronous atomic steps. In: Fitzgerald, J., Heitmeyer, C., Gnesi, S., Philippou, A. (eds.) FM 2016. LNCS, vol. 9995, pp. 352–369. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-48989-6_22

Hayes, I.J., Meinicke, L.A., Winter, K., Colvin, R.J.: A synchronous program algebra: a basis for reasoning about shared-memory and event-based concurrency. Formal Asp. Comput. 31(2), 133–163 (2019). https://doi.org/10.1007/s00165-018-0464-4

Kozen, D.: Myhill-Nerode relations on automatic systems and the completeness of Kleene algebra. In: Ferreira, A., Reichel, H. (eds.) STACS 2001. LNCS, vol. 2010, pp. 27–38. Springer, Heidelberg (2001). https://doi.org/10.1007/3-540-44693-1_3

Hoare, T., van Staden, S., Möller, B., Struth, G., Zhu, H.: Developments in concurrent Kleene algebra. J. Log. Algebr. Meth. Program. 85(4), 617–636 (2016). https://doi.org/10.1016/j.jlamp.2015.09.012

Kappé, T., Brunet, P., Rot, J., Silva, A., Wagemaker, J., Zanasi, F.: Kleene algebra with observations. arXiv:1811.10401

Kappé, T., Brunet, P., Silva, A., Zanasi, F.: Concurrent Kleene algebra: free model and completeness. In: Proceedings of European Symposium on Programming (ESOP), pp. 856–882 (2018). https://doi.org/10.1007/978-3-319-89884-1_30

Kozen, D.: A completeness theorem for Kleene algebras and the algebra of regular events. Inf. Comput. 110(2), 366–390 (1994). https://doi.org/10.1006/inco.1994.1037

Kozen, D.: Myhill-Nerode relations on automatic systems and the completeness of Kleene algebra. In: Proceedings of Symposium on Theoretical Aspects of Computer Science (STACS), pp. 27–38 (2001). https://doi.org/10.1007/3-540-44693-1_3

Kozen, D., Smith, F.: Kleene algebra with tests: completeness and decidability. In: van Dalen, D., Bezem, M. (eds.) CSL 1996. LNCS, vol. 1258, pp. 244–259. Springer, Heidelberg (1997). https://doi.org/10.1007/3-540-63172-0_43

Krob, D.: Complete systems of B-rational identities. Theor. Comput. Sci. 89(2), 207–343 (1991). https://doi.org/10.1016/0304-3975(91)90395-I

Laurence, M.R., Struth, G.: Completeness theorems for pomset languages and concurrent Kleene algebras. arXiv:1705.05896

Milner, R.: Calculi for synchrony and asynchrony. Theor. Comput. Sci. 25, 267–310 (1983). https://doi.org/10.1016/0304-3975(83)90114-7

Prisacariu, C.: Synchronous Kleene algebra. J. Log. Algebr. Program. 79(7), 608–635 (2010). https://doi.org/10.1016/j.jlap.2010.07.009

Rutten, J.J.M.M.: Behavioural differential equations: a coinductive calculus of streams, automata, and power series. Theor. Comput. Sci. 308(1–3), 1–53 (2003). https://doi.org/10.1016/S0304-3975(02)00895-2

Salomaa, A.: Two complete axiom systems for the algebra of regular events. J. ACM 13(1), 158–169 (1966). https://doi.org/10.1145/321312.321326

Silva, A.: Kleene Coalgebra. PhD thesis, Radboud Universiteit Nijmegen (2010)

Acknowledgements

The first author is grateful for discussions with Hans-Dieter Hiep and Benjamin Lion.

A Appendix

Lemma 3.5. The structure \((\mathcal {L}_{\varSigma },S,+, \cdot ,^*,\times , \emptyset ,\{\varepsilon \})\) is an SKA, that is, synchronous languages over \(\varSigma \) form an SKA.

Proof

The carrier \(\mathcal {L}_{\varSigma }\) is obviously closed under the operations of synchronous Kleene algebra. We need only argue that each of the SKA axioms is valid on synchronous languages.

The proof for the Kleene algebra axioms follows from the observation that synchronous languages over the alphabet \(\varSigma \) are simply languages over the alphabet \(\mathcal {P}_n(\varSigma )\). Thus we know that the Kleene algebra axioms are satisfied, as languages over alphabet \(\mathcal {P}_n(\varSigma )\) with \(1=\{\varepsilon \}\) and \(0=\emptyset \) form a Kleene algebra.

For the semilattice axioms, note that S is isomorphic to \(\mathcal {P}_n(\varSigma )\) (by sending a singleton set in S to its sole element), and that the latter forms a semilattice when equipped with \(\cup \). Since the isomorphism between S and \(\mathcal {P}_n(\varSigma )\) respects these operators, it follows that \((S, \times )\) is also a semilattice.

The first SKA axiom that we check is commutativity. We prove that \(\times \) on synchronous strings is commutative by induction on the paired length of the strings. Consider synchronous strings u and v. For the base, where u and v equal \(\varepsilon \), the result is immediate. In the induction step, we take \(u=xu'\) with \(x\in \mathcal {P}_n(\varSigma )\). If \(v=\varepsilon \) we are done immediately. Now for the case \(v=yv'\) with \(y\in \mathcal {P}_n(\varSigma )\). We have \(u\times v= (xu')\times (yv')=(x\cup y)\cdot (u'\times v')\). From the induction hypothesis we know that \(u'\times v' = v'\times u'\). Combining this with commutativity of union we have \(u\times v = (x\cup y)\cdot (v'\times u') = v\times u\). Take synchronous languages K and L. Now consider \(w\in K\times L\). This means that \(w=u\times v\) for \(u\in K\) and \(v\in L\). From commutativity of synchronous strings we know that \(w=u\times v= v\times u\). And thus we have \(w\in L\times K\). The other inclusion is analogous.

It is obvious that the axioms \(K\times \emptyset =\emptyset \) and \(K\times \{\varepsilon \}=K\) are satisfied.

For associativity we again first argue that \(\times \) on synchronous strings is associative. Take synchronous strings u, v and w. We will show by induction on the paired length of u, v and w that \(u\times (v\times w)= (u\times v)\times w\). If \(u,v,w=\varepsilon \) the result is immediate. Now consider \(u=xu'\) for \(x\in \mathcal {P}_n(\varSigma )\). If v or w equals \(\varepsilon \) the result is again immediate. Hence we consider the case where \(v=yv'\) and \(w=zw'\) for \(y,z\in \mathcal {P}_n(\varSigma )\). From the induction hypothesis we know that \(u'\times (v'\times w')= (u'\times v')\times w'\). We can therefore derive

$$\begin{aligned} u\times (v\times w)&= (xu')\times ((yv')\times (zw')) \\&= (xu')\times ((y\cup z)\cdot (v'\times w')) \\&= (x\cup (y\cup z))\cdot (u'\times (v'\times w')) \\&= (x\cup (y\cup z))\cdot ((u'\times v')\times w') \end{aligned}$$

From associativity of union, we then know that \((x\cup (y\cup z))\cdot ((u'\times v')\times w')=(u\times v)\times w\). Now consider \(t\in K\times (L\times J)\) for K, L and J synchronous languages. Thus \(t=u\times (v\times w)\) for \(u\in K\), \(v\in L\) and \(w\in J\). From associativity of synchronous strings we know that \(t=u\times (v\times w)= (u\times v)\times w\), and thus we have \(t\in (K\times L)\times J\). The other inclusion is analogous.

For distributivity consider \(w\in K\times (L+J)\) for K, L, J synchronous languages. This means that \(w=u\times v\) for \(u\in K\) and \(v\in L+J\). Thus we know \(v\in L\) or \(v\in J\). We immediately get that \(u\times v\in K\times L\) or \(u\times v\in K\times J\) and therefore that \(w\in K\times L + K\times J\). The other direction is analogous.

For the synchrony axiom we take synchronous languages K, L and \(A,B\in S\). Suppose \(A=\{x\}\) and \(B=\{y\}\) for \(x,y\in \mathcal {P}_n(\varSigma )\). Take \(w\in (A\cdot K)\times (B\cdot L)\). This means that \(w=u\times v\) for \(u\in A\cdot K\) and \(v\in B\cdot L\). Thus we know that \(u=xu'\) with \(u'\in K\) and \(v=yv'\) with \(v'\in L\). From this we conclude \(w=u\times v=(xu')\times (yv')=(x\cup y)\cdot (u'\times v')\). As \(u'\in K\) and \(v'\in L\) and \(x\cup y = x\times y\) with \(x\in A\) and \(y\in B\), we have that \(w\in (A\times B)\cdot (K\times L)\). For the other direction, consider \(w\in (A\times B)\cdot (K\times L)\). This entails \(w=t\cdot v\) for \(t\in A\times B\) and \(v\in K\times L\). As \(A\times B=\{x\cup y\}\) we have \(t=x\cup y\). And \(v=u\times s\) for \(u\in K\) and \(s\in L\). Thus \(t\cdot v=(x\cup y)\cdot (u\times s)=(xu)\times (ys)\) for \(u\in K\), \(s\in L\), \(x\in A\) and \(y\in B\). Hence \(w\in (A\cdot K)\times (B\cdot L)\). \(\square \)
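As a sanity check of the operations used in this proof, the following Python sketch implements the synchronous product on strings and its pointwise lifting to languages, and tests the axioms on a few small examples. It is only an illustration under stated assumptions: the names `sync_string`, `sync_lang` and `concat` and the example languages are hypothetical, letters of \(\mathcal {P}_n(\varSigma )\) are modelled as non-empty `frozenset`s, and only finite languages are covered.

```python
def sync_string(u, v):
    """Synchronous product of two synchronous strings (tuples of frozensets)."""
    if not u:
        return v  # epsilon x v = v
    if not v:
        return u  # u x epsilon = u
    # (x u') x (y v') = (x ∪ y) · (u' x v')
    return (u[0] | v[0],) + sync_string(u[1:], v[1:])


def sync_lang(K, L):
    """Pointwise lifting of the synchronous product to finite languages."""
    return {sync_string(u, v) for u in K for v in L}


def concat(K, L):
    """Concatenation of finite languages."""
    return {u + v for u in K for v in L}


# Hypothetical example data: three letters and three small languages.
a, b, c = frozenset("a"), frozenset("b"), frozenset("c")
K = {(a,), (a, b)}
L = {(b,), ()}
J = {(c, c)}

# Commutativity, associativity and distributivity over union.
assert sync_lang(K, L) == sync_lang(L, K)
assert sync_lang(K, sync_lang(L, J)) == sync_lang(sync_lang(K, L), J)
assert sync_lang(K, L | J) == sync_lang(K, L) | sync_lang(K, J)

# Synchrony axiom: (A·K) x (B·L) = (A x B)·(K x L) for singleton-letter A, B.
A, B = {(a,)}, {(b,)}
assert sync_lang(concat(A, K), concat(B, L)) == concat(sync_lang(A, B), sync_lang(K, L))
```

Such checks on finite samples do not replace the inductive arguments above; they only make the case split on \(\varepsilon \) versus \(xu'\) concrete.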

Lemma 3.6 (Soundness of SKA). For all \(e, f \in \mathcal {T}_{\mathsf {SKA}}\), we have that \(e \equiv _{\scriptscriptstyle \mathsf {SKA}}f\) implies \([\![e]\!]_{\scriptscriptstyle \mathsf {SKA}} = [\![f]\!]_{\scriptscriptstyle \mathsf {SKA}}\).

Proof

This is proved by induction on the construction of \(\equiv _{\scriptscriptstyle \mathsf {SKA}}\). In the base case we need to check all the axioms generating \(\equiv _{\scriptscriptstyle \mathsf {SKA}}\), which we have already done for Lemma 3.5. For the inductive step, we need to check that the closure rules for congruence preserve soundness. This is all immediate from the definition of the semantics of SKA and the induction hypothesis. For instance, if \(e=e_0+e_1\), \(f=f_0+f_1\), \(e_0\equiv _{\scriptscriptstyle \mathsf {SKA}}f_0\) and \(e_1\equiv _{\scriptscriptstyle \mathsf {SKA}}f_1\), then \([\![e]\!]_{\scriptscriptstyle \mathsf {SKA}}=[\![e_0]\!]_{\scriptscriptstyle \mathsf {SKA}}+[\![e_1]\!]_{\scriptscriptstyle \mathsf {SKA}}=[\![f_0]\!]_{\scriptscriptstyle \mathsf {SKA}}+[\![f_1]\!]_{\scriptscriptstyle \mathsf {SKA}}=[\![f]\!]_{\scriptscriptstyle \mathsf {SKA}}\), where we use that \([\![e_0]\!]_{\scriptscriptstyle \mathsf {SKA}}=[\![f_0]\!]_{\scriptscriptstyle \mathsf {SKA}}\) and \([\![e_1]\!]_{\scriptscriptstyle \mathsf {SKA}}=[\![f_1]\!]_{\scriptscriptstyle \mathsf {SKA}}\) as a consequence of the induction hypothesis. \(\square \)

Lemma 4.1. For \(\alpha \in \mathcal {T}_{\mathsf {SL}}\), we have \([\![\alpha ^* \times \alpha ^*]\!]_{\scriptscriptstyle \mathsf {SKA}}=[\![\alpha ^*]\!]_{\scriptscriptstyle \mathsf {SKA}}\).

Proof

For the first inclusion, take \(w\in [\![\alpha ^* \times \alpha ^*]\!]_{\scriptscriptstyle \mathsf {SKA}} = [\![\alpha ^*]\!]_{\scriptscriptstyle \mathsf {SKA}} \times [\![\alpha ^*]\!]_{\scriptscriptstyle \mathsf {SKA}}\). Thus we have \(w=u\times v\) for \(u,v\in [\![\alpha ^*]\!]_{\scriptscriptstyle \mathsf {SKA}}\). Hence \(u=x_1\cdots x_n\) for \(x_i\in [\![\alpha ]\!]_{\scriptscriptstyle \mathsf {SKA}}\) and \(v=y_1\cdots y_m\) for \(y_j\in [\![\alpha ]\!]_{\scriptscriptstyle \mathsf {SKA}}\). As \([\![\alpha ]\!]_{\scriptscriptstyle \mathsf {SKA}}=\{[\![\alpha ]\!]_{\scriptscriptstyle \mathsf {SL}}\}\) with \([\![\alpha ]\!]_{\scriptscriptstyle \mathsf {SL}}\in \mathcal {P}_n(\varSigma )\), we know that \(x_i=[\![\alpha ]\!]_{\scriptscriptstyle \mathsf {SL}}\) and \(y_j=[\![\alpha ]\!]_{\scriptscriptstyle \mathsf {SL}}\). Assume that \(n\le m\) without loss of generality, and write \(k=m-n\). We then know that \(v=u\cdot [\![\alpha ]\!]_{\scriptscriptstyle \mathsf {SL}}^{k}\), where \([\![\alpha ]\!]_{\scriptscriptstyle \mathsf {SL}}^{k}\) denotes k copies of the letter \([\![\alpha ]\!]_{\scriptscriptstyle \mathsf {SL}}\) concatenated. Unrolling the definition of \(\times \) on words, and using that \(u\times u=u\) because \(x\cup x=x\) for every letter x, we find \(u\times v=u\times (u\cdot [\![\alpha ]\!]_{\scriptscriptstyle \mathsf {SL}}^k)=(u\times u)\cdot [\![\alpha ]\!]_{\scriptscriptstyle \mathsf {SL}}^k=u\cdot [\![\alpha ]\!]_{\scriptscriptstyle \mathsf {SL}}^k=v\), and hence \(w = u \times v = v \in [\![\alpha ^*]\!]_{\scriptscriptstyle \mathsf {SKA}}\). For the other inclusion, take \(w\in [\![\alpha ^*]\!]_{\scriptscriptstyle \mathsf {SKA}}\). As \(\varepsilon \in [\![\alpha ^*]\!]_{\scriptscriptstyle \mathsf {SKA}}\) and \(w\times \varepsilon =w\), we immediately have \(w\in [\![\alpha ^*]\!]_{\scriptscriptstyle \mathsf {SKA}}\times [\![\alpha ^*]\!]_{\scriptscriptstyle \mathsf {SKA}}\). \(\square \)
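The argument of Lemma 4.1 can be illustrated on a bounded fragment of \([\![\alpha ^*]\!]_{\scriptscriptstyle \mathsf {SKA}}\). The Python sketch below is a minimal check under assumptions: `alpha` is a hypothetical stand-in for the single letter \([\![\alpha ]\!]_{\scriptscriptstyle \mathsf {SL}}\), `N` is an arbitrary length bound, and `sync_string` follows the string-level definition of \(\times \) used in the proofs above.

```python
def sync_string(u, v):
    """Synchronous product of two synchronous strings (tuples of frozensets)."""
    if not u:
        return v
    if not v:
        return u
    return (u[0] | v[0],) + sync_string(u[1:], v[1:])


# Hypothetical letter playing the role of [[alpha]]_SL.
alpha = frozenset({"a", "b"})

# All words alpha^n for n <= N: a bounded approximation of [[alpha*]].
N = 6
star = [(alpha,) * n for n in range(N + 1)]

# The product of alpha^n and alpha^m is alpha^max(n, m), so the set of
# products of bounded words coincides with the bounded words themselves.
products = {sync_string(u, v) for u in star for v in star}
assert products == set(star)
```

The bound is only there to keep the check finite; the lemma itself concerns the full infinite language.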

Lemma A.1

For \(K,L\in \mathcal {L}_{s}\), K a non-empty finite language and L an infinite language, \(K\times L\) is an infinite language.

Proof

Suppose, towards a contradiction, that \(K\times L\) is a finite language. Then there is an upper bound on the length of words in \(K\times L\). Since K is non-empty, we can fix some \(u\in K\); for every \(v\in L\) the word \(u\times v\) lies in \(K\times L\). The length of the synchronous product of two words is the maximum of the lengths of the operands, so v is no longer than \(u\times v\), and the same upper bound applies to the words in L. As L consists of finite words over a finite alphabet, this means that L is finite, which is a contradiction. Thus \(K\times L\) is infinite. \(\square \)
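The key fact in this proof, that the length of a synchronous product is the maximum of the lengths of its operands, can be checked exhaustively on short words. The following Python sketch does so under assumptions: the alphabet \(\varSigma =\{a,b\}\), the bound of length 3, and the names `letters`, `words`, `K` and `longest_in_product` are all hypothetical choices made for illustration.

```python
from itertools import product


def sync_string(u, v):
    """Synchronous product of two synchronous strings (tuples of frozensets)."""
    if not u:
        return v
    if not v:
        return u
    return (u[0] | v[0],) + sync_string(u[1:], v[1:])


# Letters of P_n(Sigma) for the assumed alphabet Sigma = {a, b}.
letters = [frozenset("a"), frozenset("b"), frozenset("ab")]
# All synchronous strings of length at most 3.
words = [w for n in range(4) for w in product(letters, repeat=n)]

# |u x v| = max(|u|, |v|) on every pair of short words.
length_is_max = all(
    len(sync_string(u, v)) == max(len(u), len(v)) for u in words for v in words
)
assert length_is_max

# A non-empty finite K cannot bound the product: longer and longer words
# of L force longer and longer words in K x L.
K = {(letters[0],), ()}


def longest_in_product(bound):
    """Longest word in K x {b^n : n <= bound}."""
    prefix_of_L = [(letters[1],) * n for n in range(bound + 1)]
    return max(len(sync_string(u, v)) for u in K for v in prefix_of_L)


assert longest_in_product(5) == 5
```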

Lemma 4.3. \(\mathcal {M}=(\mathcal {L}_{s}\cup \{\dagger \},\{\{\{ s\}\}\},+,\cdot ,^*,\times ,\emptyset ,\{\varepsilon \})\) with the operators as defined in Definition 4.2 forms an SKA.

Proof

In the main text we treated one of the least fixpoint axioms and the synchrony axiom, and here we will treat all the remaining cases. For the sake of brevity, for each axiom we omit the cases where we can appeal to the proof for (synchronous) regular languages.

The proof that \((S, \times )\) is a semilattice is the same as in Lemma 3.5. Next, we take a look at the Kleene algebra axioms. If \(K\in \mathcal {L}_{s}\), then \(K+\emptyset = K\) holds by definition of union of sets. If \(K=\dagger \), we get \(\dagger +\emptyset =\dagger \), and the axiom also holds.

For \(K\in \mathcal {L}_{s}\cup \{\dagger \}\), the axiom \(K+K=K\) also holds by definition of the plus operator. The same is true for \(K\cdot \{\varepsilon \}= K = \{\varepsilon \}\cdot K\) and \(K\cdot \emptyset = \emptyset = \emptyset \cdot K\), by definition of the operator for sequential composition.

It is easy to see the axioms \(1+e\cdot e^*\equiv _{\scriptscriptstyle \mathsf {SKA}}e^*\) and \(1+e^*\cdot e\equiv _{\scriptscriptstyle \mathsf {SKA}}e^*\) hold for \(K\in \mathcal {L}_{s}\). In case \(K=\dagger \), for \(1+e\cdot e^*\equiv _{\scriptscriptstyle \mathsf {SKA}}e^*\) we have

and a similar derivation for \(1+e^*\cdot e\equiv _{\scriptscriptstyle \mathsf {SKA}}e^*\).

For the commutativity of \(+\) we take \(K,L \in \mathcal {L}_{s}\cup \{\dagger \}\). If \(K=\dagger \) or \(L=\dagger \), we have \(K+L=\dagger =L+K\).

For associativity of the plus operator we take \(K,L,J\in \mathcal {L}_{s}\cup \{\dagger \}\). If any of K, L or J is \(\dagger \), it is easy to see the axiom holds.

For associativity of the sequential composition operator, consider \(K,L,J\in \mathcal {L}_{s}\cup \{\dagger \}\). We first can observe that if one of K, L or J is empty, then the equality holds trivially. Otherwise, if one of K, L and J is \(\dagger \), then \((K\cdot L)\cdot J = \dagger = K\cdot (L\cdot J)\).

Next, we verify distributivity of concatenation over \(+\). We will show a detailed proof for left-distributivity only; right-distributivity can be proved similarly. Let \(K,L,J\in \mathcal {L}_{s}\cup \{\dagger \}\). If one of K, L, or J is empty, then the claim holds immediately (the derivation is slightly different for K versus L or J). Otherwise, if one of K, L or J is \(\dagger \), then \(K\cdot (L + J) = \dagger = K\cdot L + K\cdot J\).

For the remaining least fixpoint axiom, let \(K,L,J\in \mathcal {L}_{s}\cup \{\dagger \}\). Assume that \(K+L\cdot J\le L\). We need to prove that \(K\cdot J^*\le L\). If \(L=\dagger \), then the claim holds immediately. If \(L\in \mathcal {L}_{s}\) and \(J = \dagger \), then L must be empty, hence K is empty, and the claim holds. If \(L,J\in \mathcal {L}_{s}\), then also \(K\in \mathcal {L}_{s}\) and the proof goes through as it does for KA.

We now get to the axioms for the \(\times \)-operator. The commutativity axiom is obvious from the commutative definition of \(\times \) (as we already know that \(\times \) is commutative on synchronous strings). The axiom \(K\times \emptyset =\emptyset \) is also satisfied by definition. The same holds for the axiom \(K\times \{\varepsilon \}= K\) as \(\{\varepsilon \}\) is finite.

For associativity of the synchronous product, consider \(K,L,J\in \mathcal {L}_{s}\cup \{\dagger \}\). If one of K, L or J is empty, then both sides of the equation evaluate to \(\emptyset \). Otherwise, if one of K, L or J is \(\dagger \), then both sides of the equation evaluate to \(\dagger \). If K, L and J are all languages, and at most one of them is finite, then either \(K \times L = \dagger \), in which case the left-hand side evaluates to \(\dagger \), or \(K \times L\) is infinite (by Lemma A.1) and J is infinite, in which case the left-hand side evaluates to \(\dagger \) again. The right-hand side can be shown to evaluate to \(\dagger \) by a similar argument. In the remaining cases (at least two out of K, L and J are finite languages and none of them is \(\dagger \) or \(\emptyset \)), the proof of associativity for the language model applies.

For distributivity of synchronous product over \(+\), let \(K,L,J\in \mathcal {L}_{s}\cup \{\dagger \}\). If one of K, L or J is \(\emptyset \), then the proof is straightforward. Otherwise, if one of K, L or J is \(\dagger \), then both sides evaluate to \(\dagger \). If K and \(L + J\) are infinite, then the outcome is again \(\dagger \) on both sides (note that \(L + J\) being infinite implies that either L or J is infinite). In the remaining cases, K, L and J are languages and either K or \(L + J\) (hence L and J) is finite. In either case the proof for synchronous regular languages goes through. \(\square \)
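The case analysis for \(\times \) in this proof depends only on whether an element is empty, finite and non-empty, infinite, or \(\dagger \). The Python sketch below replays that case analysis on these four classes. It is an abstraction introduced here for illustration, not the model \(\mathcal {M}\) itself: the class names and the function `times` are hypothetical, the table is read off from the cases discussed above (with Lemma A.1 supplying the finite-times-infinite case), and Definition 4.2 remains the authoritative definition.

```python
from itertools import product

# Hypothetical size classes for elements of L_s ∪ {†}.
E, F, I, D = "empty", "finite", "infinite", "dagger"


def times(k, l):
    """Class of K x L, following the case split used in the proof."""
    if E in (k, l):
        return E  # anything times the empty language is empty
    if D in (k, l):
        return D  # † absorbs every non-empty element
    if k == I and l == I:
        return D  # two infinite languages yield †
    if I in (k, l):
        return I  # finite non-empty times infinite is infinite (Lemma A.1)
    return F      # finite times finite stays finite


classes = [E, F, I, D]

# Commutativity and associativity hold on all combinations of classes.
assert all(times(k, l) == times(l, k) for k, l in product(classes, repeat=2))
assert all(
    times(k, times(l, j)) == times(times(k, l), j)
    for k, l, j in product(classes, repeat=3)
)

# The product of two infinite languages is †, not a language.
assert times(I, I) == D
```

Under this reading, the product of an infinite language with itself leaves \(\mathcal {L}_{s}\), which is presumably how the model separates \(\alpha ^* \times \alpha ^*\) from \(\alpha ^*\), in contrast with Lemma 4.1 for the language model.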

Lemma 5.6. Let \(e,f\in \mathcal {T}_{\mathsf {SF}_1}\). If \(e\equiv _{\scriptscriptstyle \mathsf {SF}_1}f\) then \([\![e]\!]_{\scriptscriptstyle \mathsf {SF}_1}=[\![f]\!]_{\scriptscriptstyle \mathsf {SF}_1}\).

Proof

We need to verify each of the axioms of \(\mathsf {SF}_1\). The proof for the axioms of \(\mathsf {F}_1\) is immediate via the observation that synchronous languages over the alphabet \(\varSigma \) are simply languages over the alphabet \(\mathcal {P}_n(\varSigma )\). Thus we know that the \(\mathsf {F}_1\)-axioms are satisfied, as languages over alphabet \(\mathcal {P}_n(\varSigma )\) with \(1=\{\varepsilon \}\) and \(0=\emptyset \) form an \(\mathsf {F}_1\)-algebra. The additional axioms are the same as the ones that were added to KA for SKA, and we know they are sound from Lemma 3.6. \(\square \)

Lemma 5.14. For all \(e\in \mathcal {T}_{\mathsf {SF}_1}\) and \(A\in \mathcal {P}_n(\varSigma )\), we have \(\delta (e,A)\subseteq \rho (e)\). Also, if \(e'\in \rho (e)\), then \(\delta (e',A)\subseteq \rho (e)\).

Proof

We prove the first statement by induction on the structure of e. In the base, if we have \(e \in \{0, 1\}\), the claim holds vacuously. If we have \(a\in \varSigma \), then \(\rho (a)=\{1,a\}\) and \(\delta (a,A)=\{1 : A=\{a\}\}\), so the claim follows. For the inductive step, there are five cases to consider.

- If \(e=H(e_0)\), then immediately \(\delta (H(e_0),A)=\emptyset \), so the claim holds vacuously.

- If \(e = e_0 + e_1\), then by induction we have \(\delta (e_0, A) \subseteq \rho (e_0)\) and \(\delta (e_1, A) \subseteq \rho (e_1)\). Hence, we find that \(\delta (e, A) = \delta (e_0, A) \cup \delta (e_1, A) \subseteq \rho (e_0) \cup \rho (e_1) = \rho (e)\).

- If \(e = e_0 \cdot e_1\), then by induction we have \(\delta (e_0, A) \subseteq \rho (e_0)\) and \(\delta (e_1, A) \subseteq \rho (e_1)\). Hence, we can calculate that

  $$\begin{aligned} \delta (e, A)&= \{ e_0' \cdot e_1 : e_0' \in \delta (e_0, A) \} \cup \varDelta (e_1,e_0,A) \\&\subseteq \{ e_0' \cdot e_1 : e_0' \in \rho (e_0) \} \cup \rho (e_1) = \rho (e) \end{aligned}$$

- If \(e = e_0 \times e_1\), then by induction we have \(\delta (e_0, A) \subseteq \rho (e_0)\) and \(\delta (e_1, A) \subseteq \rho (e_1)\) for all \(A\in \mathcal {P}_n(\varSigma )\). Hence, we can calculate that

  $$\begin{aligned} \delta (e, A)&= \{ e_0' \times e_1' : e_0' \in \delta (e_0, B_1), e_1'\in \delta (e_1,B_2),B_1\cup B_2=A \} \\&\quad \cup \varDelta (e_0, e_1, A) \cup \varDelta (e_1, e_0, A) \\&\subseteq \{ e_0' \times e_1' : e_0' \in \rho (e_0), e_1'\in \rho (e_1) \} \cup \rho (e_0)\cup \rho (e_1) = \rho (e) \end{aligned}$$

- If \(e = e_0^*\), then by induction we have \(\delta (e_0, A) \subseteq \rho (e_0)\). Hence, we find that

  $$ \delta (e, A) = \{ e_0' \cdot e_0^* : e_0' \in \delta (e_0, A) \} \subseteq \{ e_0' \cdot e_0^* : e_0' \in \rho (e_0) \}\subseteq \rho (e) $$

For the second statement, we prove that if \(e' \in \rho (e)\), then \(\rho (e') \subseteq \rho (e)\). The result of the first part tells us that \(\delta (e',A)\subseteq \rho (e')\), which together with \(\rho (e') \subseteq \rho (e)\) proves the claim. We proceed by induction on e. In the base, there are three cases to consider. First, if \(e =0\), then the claim holds vacuously. Second, if \(e=1\), then the only \(e'\in \rho (e)\) is \(e'=1\), so the claim holds. Third, if \(e=a\) for \(a\in \varSigma \), we have \(\rho (e)=\{1,a\}\), and it trivially holds that \(\rho (e')\subseteq \rho (e)\) for \(e'\in \rho (e)\).

For the inductive step, there are five cases to consider.

- If \(e = H(e_0)\), then \(\rho (e) = \{ 1 \}\), and the proof is as in the case where \(e = 1\).

- If \(e = e_0 + e_1\), assume w.l.o.g. that \(e' \in \rho (e_0)\). By induction, we derive that

  $$ \rho (e') \subseteq \rho (e_0) \subseteq \rho (e) $$

- If \(e = e_0 \cdot e_1\), then there are two cases to consider.

  - If \(e' = e_0' \cdot e_1\) where \(e_0' \in \rho (e_0)\), then we calculate

    $$\begin{aligned} \rho (e')&= \{ e_0'' \cdot e_1 : e_0'' \in \rho (e_0') \} \cup \rho (e_1)\\&\subseteq \{ e_0'' \cdot e_1 : e_0'' \in \rho (e_0) \} \cup \rho (e_1) = \rho (e) \end{aligned}$$

  - If \(e' \in \rho (e_1)\), then by induction we have \(\rho (e') \subseteq \rho (e_1) \subseteq \rho (e)\).

- If \(e = e_0 \times e_1\), then there are three cases to consider.

  - If \(e' = e_0' \times e_1'\) where \(e_0' \in \rho (e_0)\) and \(e_1'\in \rho (e_1)\), then we get \(\rho (e_0')\subseteq \rho (e_0)\) and \(\rho (e_1')\subseteq \rho (e_1)\) by induction. We calculate

    $$\begin{aligned} \rho (e')&= \{ e_0'' \times e_1'' : e_0'' \in \rho (e_0'), e_1'' \in \rho (e_1') \} \cup \rho (e_0')\cup \rho (e_1') \\&\subseteq \{ e_0'' \times e_1'' : e_0'' \in \rho (e_0), e_1'' \in \rho (e_1) \} \cup \rho (e_0)\cup \rho (e_1) \\&= \rho (e) \end{aligned}$$

  - If \(e' \in \rho (e_0)\), then by induction we have \(\rho (e') \subseteq \rho (e_0) \subseteq \rho (e)\).

  - If \(e' \in \rho (e_1)\), the argument is similar to the previous case.

- If \(e = e_0^*\), then either \(e' = 1\) or \(e' = e_0' \cdot e_0^*\) for some \(e_0' \in \rho (e_0)\). In the former case, \(\rho (e') = \{1\}\subseteq \rho (e)\). In the latter case, we find by induction that

  $$\begin{aligned} \rho (e')&= \{ e_0'' \cdot e_0^* : e_0'' \in \rho (e_0') \} \cup \rho (e_0^*) \\&\subseteq \{ e_0'' \cdot e_0^* : e_0'' \in \rho (e_0) \} \cup \rho (e_0^*) \subseteq \rho (e_0^*) \end{aligned}$$

\(\square \)
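The closure property established in the second half of this proof can be tested mechanically. The Python sketch below implements \(\rho \) with the clauses as they are used in the case analysis above (for instance \(\rho (a)=\{1,a\}\) and \(\rho (H(e_0))=\{1\}\)) and checks that \(\rho (e')\subseteq \rho (e)\) for every \(e'\in \rho (e)\) on one example term. The tuple encoding of terms, the tag names and the example term are assumptions made for illustration.

```python
# Terms are nested tuples; the tags are a hypothetical encoding:
# ("0",), ("1",), ("sym", a), ("H", e), ("+", e0, e1),
# (".", e0, e1) for sequential composition, ("x", e0, e1) for the
# synchronous product, and ("*", e0) for the Kleene star.
ZERO, ONE = ("0",), ("1",)


def rho(e):
    """The set rho(e), following the clauses used in the proof of Lemma 5.14."""
    tag = e[0]
    if tag == "0":
        return set()
    if tag in ("1", "H"):
        return {ONE}
    if tag == "sym":
        return {ONE, e}
    if tag == "+":
        return rho(e[1]) | rho(e[2])
    if tag == ".":
        return {(".", d, e[2]) for d in rho(e[1])} | rho(e[2])
    if tag == "x":
        r0, r1 = rho(e[1]), rho(e[2])
        return {("x", d0, d1) for d0 in r0 for d1 in r1} | r0 | r1
    if tag == "*":
        return {ONE} | {(".", d, e) for d in rho(e[1])}
    raise ValueError(f"unknown term tag: {tag}")


# Example term: (a x b*) . (a + H(b))
a, b = ("sym", "a"), ("sym", "b")
term = (".", ("x", a, ("*", b)), ("+", a, ("H", b)))

# Closure: every element of rho(term) has its own rho inside rho(term).
closed = all(rho(d) <= rho(term) for d in rho(term))
assert closed
```

Because `rho` recurses only on strict subterms, it terminates on every term and always returns a finite set.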

Lemma 5.17. For all \(e\in \overline{\mathcal {T}_{\mathsf {SL}}}\) we have that \(\overline{e}=e\).

Proof

As \(e\in \overline{\mathcal {T}_{\mathsf {SL}}}\) we have that \(e=\overline{e_0}\) for some \(e_0\in \mathcal {T}_{\mathsf {SL}}\). From Lemma 5.16 we know that \(\overline{e_0}\equiv _{\scriptscriptstyle \mathsf {SF}_1}e_0\). So we get \(e\equiv _{\scriptscriptstyle \mathsf {SF}_1}e_0\). Again from Lemma 5.16 we then know that \(\overline{e}=\overline{e_0}=e\). \(\square \)

Lemma A.2

For \(x,y\in {(\mathcal {P}_n(\varSigma ))}^*\), we have \({(x\cdot y)}^{\varPi }=x^{\varPi }\cdot y^{\varPi }\).

Proof

We proceed by induction on the length of xy. In the base, we have \(xy=\varepsilon \). Thus \(x=\varepsilon \) and \(y=\varepsilon \). We have \(\varepsilon ^{\varPi }=\varepsilon \) so the result follows immediately. In the inductive step we consider \(xy=aw\) for \(a\in \mathcal {P}_n(\varSigma )\). We have to consider two cases. In the first case we have \(x=ax'\). The induction hypothesis gives us that \({(x'\cdot y)}^{\varPi }=x'^{\varPi }\cdot y^{\varPi }\). We then have \({(x\cdot y)}^{\varPi }={(ax'\cdot y)}^{\varPi }=a^{\varPi }\cdot {(x'\cdot y)}^{\varPi }=a^{\varPi }\cdot x'^{\varPi }\cdot y^{\varPi }=x^{\varPi }\cdot y^{\varPi }\). In the second case we have \(x=\varepsilon \) and \(y=aw\). We then conclude that \({(x\cdot y)}^{\varPi }=y^{\varPi }= x^{\varPi }\cdot y^{\varPi }\). \(\square \)

Lemma 5.18. For synchronous languages L and K, all of the following hold: (i) \({(L\cup K)}^{\varPi }=L^{\varPi }\cup K^{\varPi }\), (ii) \({(L\cdot K)}^{\varPi }=L^{\varPi }\cdot K^{\varPi }\), and (iii) \({(L^*)}^{\varPi }= {(L^{\varPi })}^*\).

Proof

- (i) First, suppose \(w \in {(L\cup K)}^{\varPi }\). Thus we have \(w=v^{\varPi }\) for \(v\in L\cup K\). This gives us \(v\in L\) or \(v\in K\). We assume the former without loss of generality. Thus we know \(w=v^{\varPi }\in L^{\varPi }\). Hence we know \(w\in L^{\varPi }\cup K^{\varPi }\). The other direction can be proved analogously.

- (ii) First, suppose \(w \in {(L\cdot K)}^{\varPi }\). Thus we have \(w=v^{\varPi }\) for some \(v\in L\cdot K\). This gives us \(v=v_1\cdot v_2\) for some \(v_1\in L\) and some \(v_2\in K\). By definition of \({(-)}^{\varPi }\) we know that \(v_1^{\varPi }\in L^{\varPi }\) and \(v_2^{\varPi }\in K^{\varPi }\). Thus we have \(v_1^{\varPi }\cdot v_2^{\varPi }\in L^{\varPi }\cdot K^{\varPi }\). From Lemma A.2 we know that \(w=v^{\varPi }={(v_1\cdot v_2)}^{\varPi }=v_1^{\varPi }\cdot v_2^{\varPi }\), which gives us the desired result of \(w\in L^{\varPi }\cdot K^{\varPi }\). The other direction can be proved analogously.

- (iii) Take \(w\in {(L^*)}^{\varPi }\). Thus we have \(w=v^{\varPi }\) for some \(v\in L^*\). By definition of the star of a synchronous language we know that \(v=u_1\cdots u_n\) for \(u_i\in L\). As \(u_i\in L\), we have \(u_i^{\varPi }\in L^{\varPi }\) and \(u_1^{\varPi }\cdots u_n ^{\varPi }\in {(L^{\varPi })}^*\). By Lemma A.2, we know that \(w=v^{\varPi }={(u_1\cdots u_n)}^{\varPi }=u_1^{\varPi }\cdots u_n^{\varPi }\). Thus we have \(w\in {(L^{\varPi })}^*\), which is the desired result. The other direction can be proved analogously. \(\square \)
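Lemma A.2 and Lemma 5.18 only use that \({(-)}^{\varPi }\) acts letter by letter on synchronous strings. The Python sketch below illustrates this with a stand-in for the letter-level map. The function `letter_pi` is a hypothetical encoding, not the definition from the main text, and `star_upto` only approximates the Kleene star by a bounded number of factors.

```python
def letter_pi(x):
    """Hypothetical encoding of a letter x in P_n(Sigma) as a single symbol."""
    return "&".join(sorted(x))


def word_pi(w):
    """Letter-wise extension to synchronous strings: (a w)^Pi = a^Pi · w^Pi."""
    return tuple(letter_pi(x) for x in w)


def lang_pi(L):
    """Pointwise extension to languages."""
    return {word_pi(w) for w in L}


def concat(K, L):
    """Concatenation of finite languages."""
    return {u + v for u in K for v in L}


def star_upto(L, n):
    """All concatenations of at most n words of L."""
    result, layer = {()}, {()}
    for _ in range(n):
        layer = concat(layer, L)
        result |= layer
    return result


# Hypothetical example languages over Sigma = {a, b}.
a, b, ab = frozenset("a"), frozenset("b"), frozenset("ab")
L = {(a,), (ab, b)}
K = {(), (b, b)}

# Lemma A.2 on one pair of words.
assert word_pi((a, ab) + (b,)) == word_pi((a, ab)) + word_pi((b,))

# Lemma 5.18 (i) and (ii), and a bounded version of (iii).
assert lang_pi(L | K) == lang_pi(L) | lang_pi(K)
assert lang_pi(concat(L, K)) == concat(lang_pi(L), lang_pi(K))
assert lang_pi(star_upto(L, 3)) == star_upto(lang_pi(L), 3)
```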

Theorem 6.2 (Fundamental Theorem). For all \(e\in \mathcal {T}_{\mathsf {SF}_1}\), we have

Proof

Here we treat the inductive cases not displayed in the main proof, where we treated only the synchronous case.

-

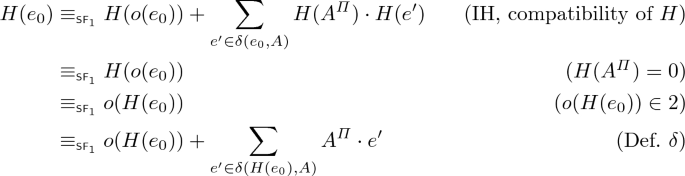

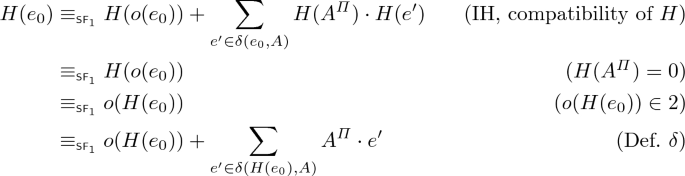

If \(e=H(e_0)\), derive:

-

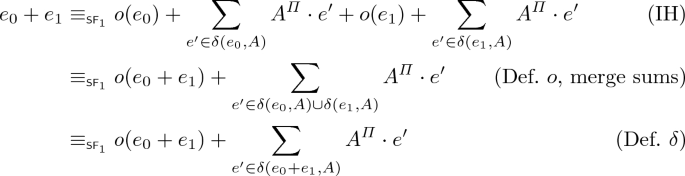

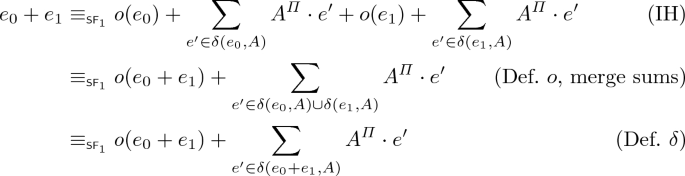

If \(e=e_0+e_1\), derive:

-

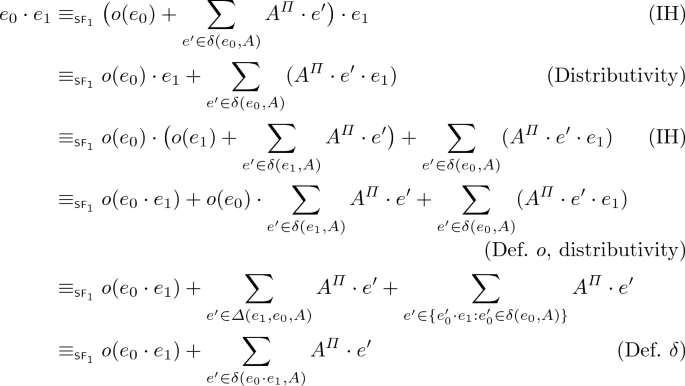

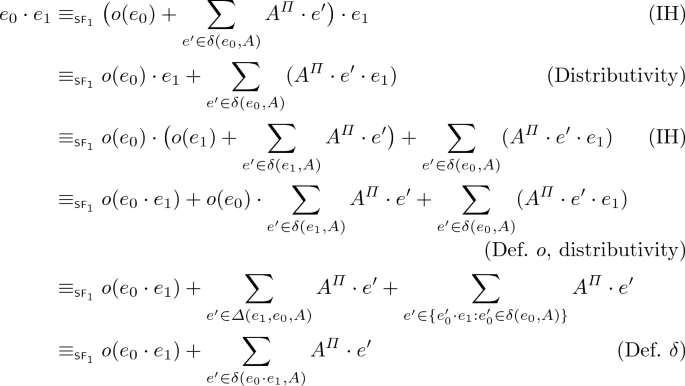

If \(e=e_0\cdot e_1\), derive:

-

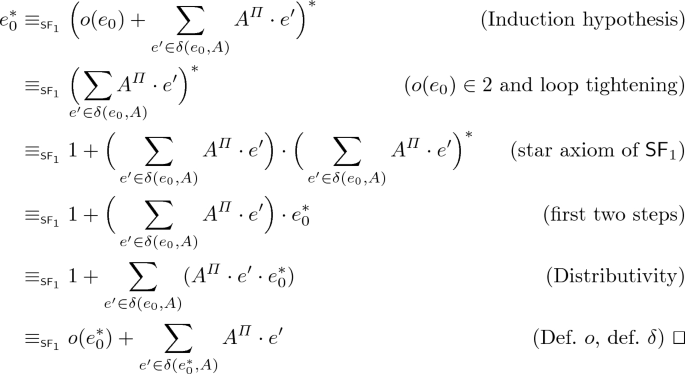

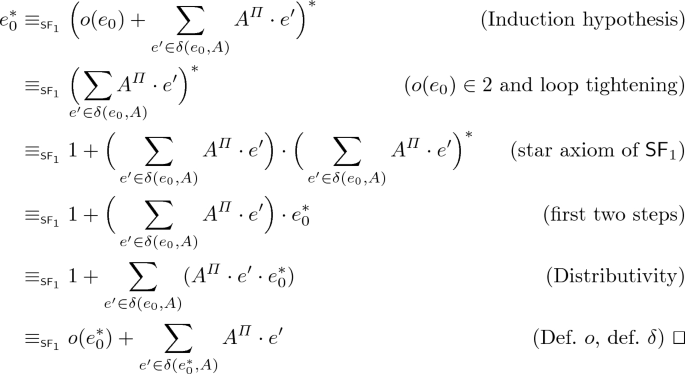

If \(e=e_0^*\), we derive:

Lemma 7.2. For all \(e\in \mathcal {T}_{\mathsf {NSF}}\), we have \({([\![e]\!]_{\scriptscriptstyle \mathsf {SF}_1})}^{\varPi }=[\![e]\!]_{\scriptscriptstyle \mathsf {F}_1}\).

Proof

In the main text we have treated the base cases. For the inductive step, there are three cases to consider.

First, if \(e=e_0+e_1\), then \({([\![e]\!]_{\scriptscriptstyle \mathsf {SF}_1})}^{\varPi }={([\![e_0]\!]_{\scriptscriptstyle \mathsf {SF}_1}\cup [\![e_1]\!]_{\scriptscriptstyle \mathsf {SF}_1})}^{\varPi }={([\![e_0]\!]_{\scriptscriptstyle \mathsf {SF}_1})}^{\varPi }\cup {([\![e_1]\!]_{\scriptscriptstyle \mathsf {SF}_1})}^{\varPi }\) (Lemma 5.18). From the induction hypothesis we obtain \({([\![e_0]\!]_{\scriptscriptstyle \mathsf {SF}_1})}^{\varPi }=[\![e_0]\!]_{\scriptscriptstyle \mathsf {F}_1}\) and \({([\![e_1]\!]_{\scriptscriptstyle \mathsf {SF}_1})}^{\varPi }=[\![e_1]\!]_{\scriptscriptstyle \mathsf {F}_1}\). Combining these results we get \({([\![e]\!]_{\scriptscriptstyle \mathsf {SF}_1})}^{\varPi }=[\![e_0]\!]_{\scriptscriptstyle \mathsf {F}_1}\cup [\![e_1]\!]_{\scriptscriptstyle \mathsf {F}_1}=[\![e_0]\!]_{\scriptscriptstyle \mathsf {F}_1}+[\![e_1]\!]_{\scriptscriptstyle \mathsf {F}_1}=[\![e_0+e_1]\!]_{\scriptscriptstyle \mathsf {F}_1}\), so the claim follows.

Secondly, if \(e=e_0\cdot e_1\), then \({([\![e]\!]_{\scriptscriptstyle \mathsf {SF}_1})}^{\varPi }={([\![e_0]\!]_{\scriptscriptstyle \mathsf {SF}_1}\cdot [\![e_1]\!]_{\scriptscriptstyle \mathsf {SF}_1})}^{\varPi }={([\![e_0]\!]_{\scriptscriptstyle \mathsf {SF}_1})}^{\varPi }\cdot {([\![e_1]\!]_{\scriptscriptstyle \mathsf {SF}_1})}^{\varPi }\) (Lemma 5.18). From the induction hypothesis we obtain \({([\![e_0]\!]_{\scriptscriptstyle \mathsf {SF}_1})}^{\varPi }=[\![e_0]\!]_{\scriptscriptstyle \mathsf {F}_1}\) and \({([\![e_1]\!]_{\scriptscriptstyle \mathsf {SF}_1})}^{\varPi }=[\![e_1]\!]_{\scriptscriptstyle \mathsf {F}_1}\). We can then conclude that \({([\![e]\!]_{\scriptscriptstyle \mathsf {SF}_1})}^{\varPi }=[\![e_0]\!]_{\scriptscriptstyle \mathsf {F}_1}\cdot [\![e_1]\!]_{\scriptscriptstyle \mathsf {F}_1}=[\![e_0\cdot e_1]\!]_{\scriptscriptstyle \mathsf {F}_1}\).

Lastly, if \(e=e_0^*\), we get \({([\![e_0^*]\!]_{\scriptscriptstyle \mathsf {SF}_1})}^{\varPi }={({([\![e_0]\!]_{\scriptscriptstyle \mathsf {SF}_1})}^*)}^{\varPi }={({([\![e_0]\!]_{\scriptscriptstyle \mathsf {SF}_1})}^{\varPi })}^*\) (Lemma 5.18). From the induction hypothesis we obtain \({([\![e_0]\!]_{\scriptscriptstyle \mathsf {SF}_1})}^{\varPi }=[\![e_0]\!]_{\scriptscriptstyle \mathsf {F}_1}\). Thus we have \({([\![e]\!]_{\scriptscriptstyle \mathsf {SF}_1})}^{\varPi }=[\![e_0]\!]_{\scriptscriptstyle \mathsf {F}_1}^* = [\![e_0^*]\!]_{\scriptscriptstyle \mathsf {F}_1}\) and the claim follows. \(\square \)

Lemma 7.4. Let (M, p) be a Q-linear system such that M and p are guarded. We can construct a Q-vector x that is the unique (up to \(\mathsf {SF}_1\)-equivalence) solution to (M, p) in \(\mathsf {SF}_1\). Moreover, if M and p are in normal form, then so is x.

Proof

We will construct x by induction on the size of Q. In the base, let \(Q=\emptyset \). In this case the unique Q-vector is a solution. In the inductive step, take \(k\in Q\) and let \(Q'=Q\setminus \{k\}\). Then construct the \(Q'\)-linear system \((M', p')\) as follows:

As \(Q'\) is a strictly smaller set than Q and \(M'\) is guarded, we can apply our induction hypothesis to \((M', p')\). So we know by induction that \((M', p')\) has a unique solution \(x'\). Moreover, if \(M'\) and \(p'\) are in normal form, so is \(x'\); note that if M and p are in normal form, then so are \(M'\) and \(p'\).

We use \(x'\) to construct the Q-vector x:

The first thing to show now is that x is indeed a solution of (M, p). To this end, we need to show that \(M\cdot x+p\equiv _{\scriptscriptstyle \mathsf {SF}_1}x\). We have two cases. For \(i\in Q'\) we derive:

For \(i=k\), we derive:

We now know that x is a solution to (M, p) because \(M\cdot x+p\equiv _{\scriptscriptstyle \mathsf {SF}_1}x\). Furthermore, if M and p are in normal form, then so is \(x'\), and thus x is in normal form by construction.

Next we claim that x is unique. Let y be any solution of (M, p). We choose the \(Q'\)-vector \(y'\) by taking \(y'(i)=y(i)\) for all \(i\in Q'\). To see that \(y'\) is a solution to \((M', p')\), we first claim that the following holds:

To see that this is true, derive

Note that we can apply the unique fixpoint axiom because we know that M is guarded and thus that \(H(M(k,k))=0\).

Now we can derive the following:

Thus \(y'\) is a solution to \((M', p')\). As \(x'\) is the unique solution to \((M', p')\), we know that \(y'\equiv _{\scriptscriptstyle \mathsf {SF}_1}x'\).

For \(i\ne k\) we know that \(x(i)=x'(i)\equiv _{\scriptscriptstyle \mathsf {SF}_1}y'(i)=y(i)\). For \(i=k\) we can derive:

Thus, \(y\equiv _{\scriptscriptstyle \mathsf {SF}_1}x\), thereby proving that x is the unique solution to (M, p). \(\square \)
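The displayed definitions and derivations of this proof are not reproduced in the text above. For orientation only, the following shows one standard way to eliminate the variable k from a guarded linear system, in the style of Gaussian elimination over regular expressions. It is an assumption about the shape of the construction, not necessarily the authors' exact formulation.

$$\begin{aligned} M'(i,j)&= M(i,j) + M(i,k)\cdot M(k,k)^*\cdot M(k,j)&(i,j\in Q') \\ p'(i)&= p(i) + M(i,k)\cdot M(k,k)^*\cdot p(k)&(i\in Q') \\ x(i)&= x'(i)&(i\in Q') \\ x(k)&= M(k,k)^*\cdot \Bigl (p(k) + \sum _{j\in Q'} M(k,j)\cdot x'(j)\Bigr ) \end{aligned}$$

Under this reading, the intermediate claim about a solution y would be \(y(k)\equiv _{\scriptscriptstyle \mathsf {SF}_1}M(k,k)^*\cdot (p(k) + \sum _{j\in Q'} M(k,j)\cdot y(j))\). It would follow from \(y(k)\equiv _{\scriptscriptstyle \mathsf {SF}_1}M(k,k)\cdot y(k) + p(k) + \sum _{j\in Q'} M(k,j)\cdot y(j)\) by the unique fixpoint axiom, whose side condition \(H(M(k,k))=0\) is supplied by guardedness, as noted in the proof.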

© 2019 Springer Nature Switzerland AG

Cite this paper

Wagemaker, J., Bonsangue, M., Kappé, T., Rot, J., Silva, A. (2019). Completeness and Incompleteness of Synchronous Kleene Algebra. In: Hutton, G. (eds) Mathematics of Program Construction. MPC 2019. Lecture Notes in Computer Science(), vol 11825. Springer, Cham. https://doi.org/10.1007/978-3-030-33636-3_14

DOI: https://doi.org/10.1007/978-3-030-33636-3_14

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-33635-6

Online ISBN: 978-3-030-33636-3

eBook Packages: Computer Science, Computer Science (R0)