Abstract

The investigation of vascular biometric traits has become increasingly popular in recent years. This book chapter provides a comprehensive discussion of the respective state of the art, covering hand-oriented techniques (finger vein, palm vein, (dorsal) hand vein and wrist vein recognition) as well as eye-oriented techniques (retina and sclera recognition). We discuss commercial sensors and systems, major algorithmic approaches in the recognition toolchain, available datasets, public competitions and open-source software, template protection schemes, presentation attacks and their detection, sample quality assessment, mobile acquisition and acquisition on the move, and finally the potential impact of diseases on recognition as well as template privacy issues.

Keywords

- Vascular biometrics

- Finger vein recognition

- Hand vein recognition

- Palm vein recognition

- Retina recognition

- Sclera recognition

- Near-infrared

1 Introduction

As the name suggests, vascular biometrics are based on vascular patterns, formed by the blood vessel structure inside the human body.

Historically, Andreas Vesalius already suggested in 1543 that the vessels in the extremities of the body are highly variable in their location and structure. Some 350 years later, a professor of forensic medicine at Padua University, Arrigo Tamassia, stated that no two vessel patterns seen on the back of the hand seem to be identical in any two individuals [23].

This pattern has to be made visible and captured by a suitable biometric scanner device in order to be able to conduct biometric recognition. Two parts of the human body (typically not covered by clothing in practical recognition situations) are the major source to extract vascular patterns for biometric purposes: The human hand [151, 275] (used in finger vein [59, 120, 234, 247, 250, 300] as well as in hand/palm/wrist vein [1, 226] recognition) and the human eye (used in retina [97, 166] and sclera [44] recognition), respectively.

The imaging principles used, however, are fairly different for those biometric modalities. Vasculature in the human hand is covered at least by skin layers and possibly also by other tissue types (depending on the depth of the vessels with respect to the skin surface). Therefore, Visible Light (VIS) imaging does not reveal the vessel structures properly.

1.1 Imaging Hand-Based Vascular Biometric Traits

In principle, high-precision imaging of human vascular structures, including those inside the human hand, is a solved problem. Figure 1.1a displays corresponding vessels using a Magnetic Resonance Angiography (MRA) medical imaging device, while Fig. 1.1b shows the result of applying hyperspectral imaging with a STEMMER IMAGING device and their Perception Studio software to visualise the data captured in the range 900–1700 nm. However, biometric sensors are constrained by cost. For practical deployment in real-world authentication solutions, the technologies used to produce the images in Fig. 1.1 are not an option for this reason. The solution is the much simpler and thus more cost-effective Near-Infrared (NIR) imaging.

Joe Rice (the author of the Foreword of this Handbook) patented his NIR-imaging-based “Veincheck” system in the early 1980s, which is often seen as the birth of hand-based vascular biometrics. After the expiry of that patent, Hitachi, Fujitsu and Techsphere launched security products relying on vein biometrics (all now holding various patents in this area). Joe Rice is still involved in this business, as he is partnering with the Swiss company BiowatchID, which produces wrist vein-based mobile recognition technology (see Sect. 1.2).

The physiological background of this imaging technique is as follows. Haemoglobin, the iron-containing pigment in the blood that carries oxygen, absorbs NIR light. This is why vessels appear as dark structures under NIR illumination, while the surrounding tissue has a much lower light absorption coefficient in that spectrum and thus appears bright. The blood in veins contains a higher amount of deoxygenated haemoglobin than the blood in arteries. Oxygenated and deoxygenated haemoglobin absorb NIR light equally at 800 nm, whereas at 760 nm absorption is primarily from deoxygenated haemoglobin, while above 800 nm oxygenated haemoglobin exhibits stronger absorption [68, 161]. Thus, the vascular pattern inside the hand can be rendered visible with the help of an NIR light source in combination with an NIR-sensitive image sensor. Depending on the illumination wavelength used, either both types of vessels or predominantly a single type is captured.

The absorbing property of deoxygenated haemoglobin is also the reason for terming these hand-based modalities finger vein and hand/palm/wrist vein recognition, although it has never actually been demonstrated that only veins, and not arteries, are acquired by the corresponding sensors. Finger vein recognition deals with the vascular pattern inside the human fingers (this is the most recent trait in this class, and [126] is often assumed to be its origin), while hand/palm/wrist vein recognition visualises and acquires the pattern of the vessels of the central area (or wrist area) of the hand. Figure 1.2 displays example sample data from public datasets for palm vein, wrist vein and finger vein.

The positioning of the light source relative to the camera and the subject’s finger or hand plays an important role. Here, we distinguish between reflected light and transillumination imaging. Reflected light means that the light source and the camera are placed on the same side of the hand and the light emitted by the source is reflected back to the camera. In transillumination, the light source and the camera are on opposite sides of the hand, i.e. the light penetrates skin and tissue of the hand before it is captured by the camera. Figure 1.3 compares these two imaging principles for the back of the hand. A further distinction is made (mostly in reflected light imaging) as to whether the palmar or ventral (i.e. inner) side of the hand (or finger) is acquired, or whether the dorsal side is subject to image acquisition. In transillumination imaging, it is likewise possible to distinguish between palmar and dorsal acquisition (where in palmar acquisition, the camera is placed so as to acquire the palmar side of the hand while the light is positioned at the dorsal side). Acquisition for wrist vein recognition is limited to reflected light illumination of the palmar side of the wrist.

1.2 Imaging Eye-Based Vascular Biometric Traits

For the eye-based modalities, VIS imaging is applied to capture vessel structures. The retina is the innermost, light-sensitive layer, or “coat”, of shell tissue of the eye. The optic disc or optic nerve head is the point of exit for ganglion cell axons leaving the eye. Because there are no rods or cones covering the optic disc, it corresponds to a small blind spot in each eye. The ophthalmic artery bifurcates and supplies the retina via two distinct vascular networks: The choroidal network, which supplies the choroid and the outer retina, and the retinal network, which supplies the retina’s inner layer. The bifurcations and other physical characteristics of the inner retinal vascular network are known to vary among individuals, which is exploited in retina recognition. Imaging this vascular network is accomplished by fundus photography, i.e. capturing a photograph of the back of the eye, the fundus (which is the interior surface of the eye opposite the lens and includes the retina, optic disc, macula, fovea and posterior pole). Specialised fundus cameras as developed for use in ophthalmology (and thus medical devices) consist of an intricate microscope (up to 5\(\times \) magnification) attached to a flash-enabled camera, where the annulus-shaped illumination passes through the camera objective lens and through the cornea onto the retina. The light reflected from the retina passes through the un-illuminated hole in the doughnut-shaped illumination system. Illumination is done with white light and acquisition is done either in full colour or employing a green-pass filter (\({\approx }\)540–570 nm) to block out red wavelengths, resulting in higher contrast. In medicine, fundus photography is used to monitor, e.g. macular degeneration, retinal neoplasms, choroid disturbances and diabetic retinopathy.

Finally, for sclera recognition, high-resolution VIS eye imagery is required in order to properly depict the fine vessel network. Optimal visibility of the vessel network is obtained from two off-angle images in which the eyes look in two different directions. Figure 1.4 displays example sample data from public datasets for retina and sclera biometric traits.

1.3 Pros and Cons of Vascular Biometric Traits

Vascular biometrics exhibit certain advantages compared to other biometric modalities, as we shall discuss in the following. However, these modalities have seen commercial deployments to a relatively small extent so far, especially when compared to fingerprint or face recognition-based systems. This might be attributed to some disadvantages of these modalities, which will also be considered subsequently. Of course, not all advantages or disadvantages are shared among all types of vascular biometric modalities, so certain aspects need to be discussed separately and we again distinguish between hand- and eye-based traits.

- Advantages of hand-based vascular biometrics (finger, hand and wrist vein recognition): Comparisons are mostly done against fingerprint and palmprint recognition (and against techniques relying on hand geometry to some extent).

  - Vascular biometrics are expected to be insensitive to skin surface conditions (dryness, dirt, lotions) and abrasion (cuts, scars). While the imaging principle strongly suggests this property, so far no empirical evidence has been given to support this.

  - Vascular biometrics enable contactless sensing as there is no necessity to touch the acquiring camera. However, in finger vein recognition, all commercial systems and almost all other sensors being built require the user to place the finger directly on some sensor plate. This is done to ensure position normalisation to some extent and to avoid the camera being dazzled in case of a misplaced finger (in the transillumination case, the light source could directly illuminate the sensing camera).

  - Vascular biometrics are more resistant against forgeries (i.e. spoofing, presentation attacks) as the vessels are only visible in infrared light. So on the one hand, it is virtually impossible to capture these biometric traits without user consent and from a distance and, on the other hand, it is more difficult to fabricate artefacts to be used in presentation attacks (as these need to be visible in NIR).

  - Liveness detection is easily possible due to detectable blood flow. However, this requires NIR video acquisition and subsequent video analysis, and not much work has been done to actually demonstrate this benefit.

- Disadvantages

  - In transillumination imaging (as typically applied for finger veins), the capturing devices need to be built rather large.

  - Images exhibit low contrast and low quality overall, caused by the scattering of NIR rays in human tissue. The sharpness of the vessel layout is much lower compared to vessels acquired by retina or sclera imaging. Medical imaging principles like Magnetic Resonance Angiography (MRA) produce high-quality imagery depicting vessels inside the human body; however, these imaging techniques have prohibitive cost for biometric applications.

  - The vascular structure may be influenced by temperature, physical activity, as well as by ageing and injuries/diseases; however, there is almost no empirical evidence that this applies to vessels inside the human hand (see [317] for effects caused by meteorological variance). This book contains a chapter investigating the influence of varying acquisition conditions on finger vein recognition to lay first foundations towards understanding these effects [122].

  - Current commercial sensors do not allow users to access, output or store imagery for further investigations and processing. Thus, all available evaluations of these systems have to rely on a black-box principle and only commercial recognition software of the same manufacturer can be used. This situation has motivated the construction of many prototypical devices for research purposes.

  - These modalities cannot be acquired from a distance, and it is fairly difficult to acquire them on the move. While the first property is beneficial for privacy protection, the combination of both properties excludes hand-based vascular biometrics from free-flow, on-the-move-type application scenarios. However, at least for on-the-move acquisition, advances can be expected in the future [164].

- Advantages of eye-based vascular biometrics (sclera and retina recognition): Comparisons are mostly done against iris, periocular and face recognition.

  - As compared to iris recognition, there is no need to use NIR illumination and imaging. For both modalities, VIS imaging is used.

  - As compared to periocular and face recognition, retina and sclera vascular patterns are much less influenced by intended (e.g. make-up, occlusion like scarves, etc.) and unintended (e.g. ageing) alterations of the facial area.

  - It is almost impossible to conduct presentation attacks against these modalities: entire eyes cannot be replaced as suggested by the entertainment industry (e.g. “Minority Report”). Full facial masks cannot be used for realistic sclera spoofing.

  - Liveness detection should be easily possible due to detectable blood flow (e.g. video analysis of retina imagery) and pulse detection in sclera video.

  - Not to be counted as an isolated advantage, but sclera-related features can be extracted and fused with other face-related modalities, provided the visual data is of sufficiently high quality.

- Disadvantages

  - Retina vessel capturing requires illuminating the back of the eye, which is not well received by users. Data acquisition feels like ophthalmological treatment.

  - Vessel structure/vessel width in both retina [171] and sclera [56] is influenced by certain diseases or pathological conditions.

  - Retina capturing devices originate from ophthalmology and thus have a rather high cost (as is common for medical devices).

  - Currently, there are no commercial solutions available that could prove the practicality of these two biometric modalities.

  - For both modalities, data capture is not possible from a distance (as noted before, this can also be seen as an advantage in terms of privacy protection). For retina recognition, data acquisition is also definitely not possible on the move (while this could be an option for sclera given top-end imaging systems in place).

In the subsequent sections, we will discuss the following topics for each modality:

- Commercial sensors and systems;

- Major algorithmic approaches for preprocessing, feature extraction, template comparison and fusion (published in high-quality scientific outlets);

- Used datasets (publicly available), competitions and open-source software;

- Template protection schemes;

- Presentation attacks, presentation attack detection techniques and sample quality;

- Mobile acquisition and acquisition on the move.

2 Commercial Sensors and Systems

2.1 Hand-Based Vascular Traits

The area of commercial systems for hand-based vein biometrics is dominated by the two Japanese companies Hitachi and Fujitsu, which hold patents for many technical details of the corresponding commercial solutions. This book contains two chapters authored by leading personnel of these two companies [88, 237]. Only in the last few years have competitors entered the market. Figure 1.5 displays the three currently available finger vein sensors. As clearly visible, the Hitachi sensor is based on a pure transillumination principle, while the other two sensors illuminate the finger from the side while capturing is conducted from below (all sensors capture the palmar side of the finger). Yannan Tech has close connections to a startup from Peking University.

With respect to commercial hand vein systems, the market is even more restricted. Figure 1.6 shows three variants of the Fujitsu PalmSecure system: The “pure” sensor (a), the sensor equipped with a supporting frame to stabilise the hand and restrict the possible positions relative to the sensor (b) and the sensor integrated into a larger device for access control (integration done by a Fujitsu partner company) (c). When comparing the two types of systems, it becomes clear that the PalmSecure system can be configured to operate in a touchless/contactless manner (where the support frame is suspected to improve genuine comparison scores in particular), while finger vein scanners all require the finger to be placed on the surface of the scanner. While this would not be required in principle, this approach limits the extent of finger rotation and ensures a largely correct placement of the finger relative to the sensor’s acquisition device. So while this design choice is understandable, the potential benefit of contactless operation, especially in comparison to fingerprint scanners, is lost.

Techsphere (Footnote 1), in the business almost from the start of vascular biometrics, produces dorsal hand vein readers. BiowatchID (Footnote 2), a recent startup, produces a bracelet that is able to read out the wrist pattern and supports various types of authentication solutions. In contrast to a stationary sensor, this approach represents a per-se mobile solution permanently attached to the person subject to authentication.

Although hand vein-based sensors have been readily available for years, deployments are seen less frequently than those of the leading biometric modalities, i.e. face and fingerprint recognition. The most widespread application field of finger vein recognition technology can be observed in the finance industry (some examples are illustrated in Fig. 1.7). On the one hand, several financial institutions offer their clients finger vein sensors for secure authentication in home banking. On the other hand, major roll-outs of finger vein-equipped ATMs have been conducted in several countries, e.g. Japan, Poland, Turkey and Hong Kong. The PalmSecure system is mainly used for authentication on Fujitsu-built devices like laptops and tablets and in access control systems.

2.2 Eye-Based Vascular Traits

For vascular biometrics based on retina, commercialisation has not yet reached a mature state (on the contrary, the first commercial systems have disappeared from the market). Starting very early, the first retina scanners were launched in 1985 by the company EyeDentify, and subsequently the company almost established a monopoly in this area. The most recent scanner is the model ICAM 2001, and it seems that this apparatus can still be acquired (Footnote 3). In the first decade of this century, the company Retica Systems Inc. even provided some insight into their template structure called retina code (“Multi-Radius Digital Pattern”; Footnote 4, website no longer active), which has been analysed in earlier work [67]. The proposed template seemed to indicate a low potential for high variability (since the generation is not explained in detail, a reliable statement on this issue is of course not possible). Note that Retica Systems Inc. claimed a template size of 20–100 bytes, whereas the smallest template investigated in [67] had 225 bytes and did not exhibit sufficient inter-class variability. Deployment of retina recognition technology has been seen mostly in US governmental agencies like CIA, FBI and NASA (Footnote 5), which is a difficult business model for sustainable company development (which might represent a major reason for the low penetration of this technology).

For sclera biometrics, the startup EyeVerify (founded 2012) termed their sclera recognition technology “Eyeprint ID” for which the company also acquired the corresponding patent. After the inclusion of the technology into several mobile banking applications, the company was acquired by Ant Financial, the financial services arm of Alibaba Group in 2016 (their website http://eyeverify.com/ is no longer active).

3 Algorithms in the Recognition Toolchain

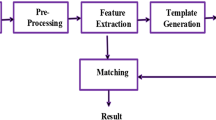

Typically, the recognition toolchain consists of several distinct stages, most of which are identical across most vascular traits:

1. Acquisition: Commercial sensors are described in Sect. 1.2, while references to custom developments are given in the tables describing publicly available datasets in Sect. 1.4. The two chapters in this handbook describing sensor technologies provide further details on this topic [113, 258].

2. Image quality assessment: Techniques for this important topic (as required to assess sample quality to demand another acquisition process in case of poor quality or to conduct quality-weighted fusion) are described in Sect. 1.6 for all considered vascular modalities separately.

3. Preprocessing: Typically describes low-level image processing techniques (including normalisation and a variety of enhancement techniques) to cope with varying acquisition conditions, poor contrast, noise and blur. These operations depend on the target modality and are typically even sensor specific. They may also be conducted after the next stage (RoI determination), but since they often assist in RoI determination, the order given here is the typical one.

4. Region of Interest (RoI) determination: This operation describes the process to determine the area in the sample image which is further subjected to feature extraction. In finger vein recognition, the actual finger texture has to be determined, while in palm vein recognition in most cases a rectangular central area of the palm is extracted. For hand and wrist vein recognition, respectively, RoI extraction is hardly done consistently across different methods; still, the RoI is restricted to visual data corresponding to hand tissue only. For retina recognition, the RoI is typically defined by the imaging device and is often a circle of normalised radius around the blind spot. In sclera recognition, this process is of highest importance and is called sclera segmentation, as it segments the sclera area from iris and eyelids.

5. Feature extraction: The ultimate aim of feature extraction is to produce a compact biometric identifier, i.e. the biometric template. As all imagery involving vascular biometrics contains visualised vascular structure, there are basically two options for feature extraction: First, feature extraction directly employs extracted vessel structures, relying on binary images representing these structures, skeletonised versions thereof, graph representations of the generated skeletons or using vein minutiae in the sense of vessel bifurcations or vessel endings. The second option relies on interpreting the RoI as a texture patch which is used to extract discriminating features; in many cases, key point-related techniques are employed. Deep-learning-based techniques are categorised into this second type of techniques except for those which explicitly extract vascular structure in a segmentation approach. A clear tendency may be observed: The better the quality of the samples and thus the clarity of the vessel structure, the more likely it is to see vein minutiae being used as features. In fundus images with their clear structure, vessels can be identified with high reliability; thus, vessel minutiae are used in most proposals (as fingerprint minutiae-based comparison techniques can be used). On the other hand, sclera vessels are very fine-grained and detailed structures which are difficult to explicitly extract from imagery. Therefore, in many cases, sclera features are more related to texture properties than to explicit vascular structure. Hand-based vascular biometrics are somewhat in between, so we see both strategies being applied.

6. Biometric comparison: Two different variants are often seen in literature: The first (and often more efficient) computes a distance between extracted templates and compares the found distance to the decision threshold for identifying the correct user, and the second approach applies a classifier to assign a template to the correct class (i.e. the correct user) as stored in the biometric database. This book contains a chapter on efficient template indexing and template comparison in large-scale vein-based identification systems [178].
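The first comparison variant (distance plus decision threshold) can be sketched in a few lines. This is only a minimal illustration under stated assumptions: the templates are taken to be fixed-length binary vectors, the normalised Hamming distance is one possible distance among many, and the function names, example templates and threshold value of 0.25 are hypothetical placeholders, not values taken from any of the cited systems.

```python
def hamming_distance(a, b):
    """Fraction of positions at which two equal-length binary templates differ."""
    if len(a) != len(b):
        raise ValueError("templates must have equal length")
    return sum(x != y for x, y in zip(a, b)) / len(a)


def verify(probe, reference, threshold=0.25):
    """Accept the identity claim if the distance does not exceed the threshold.

    The threshold is a placeholder; in practice it is tuned on evaluation data.
    """
    return hamming_distance(probe, reference) <= threshold


# Hypothetical 8-bit templates, for illustration only.
enrolled = [1, 0, 1, 1, 0, 0, 1, 0]
genuine = [1, 0, 1, 1, 0, 1, 1, 0]   # differs from `enrolled` in 1 of 8 bits
impostor = [0, 1, 0, 1, 1, 1, 0, 0]  # differs from `enrolled` in 6 of 8 bits

print(verify(genuine, enrolled))   # True  (distance 0.125)
print(verify(impostor, enrolled))  # False (distance 0.75)
```

Lowering the threshold reduces false accepts at the price of more false rejects, which is why the operating point is normally chosen from measured genuine and impostor score distributions.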

In most papers on biometric recognition, stages (3)–(5) of this toolchain are presented, discussed, and evaluated. Often, those papers rely on some public (or private) datasets and do not discuss sensor issues. Also, quality assessment is often left out or discussed in separate papers (see Sect. 1.6). A minority of papers discusses certain stages in isolation, as evaluation is also more difficult in this setting (e.g. manuscripts on sensor construction, as also contained in this handbook [113, 258], sample quality (see Sect. 1.6), or RoI determination (e.g. on sclera segmentation [217])). In the following, we separately discuss the recognition toolchain of the considered vascular biometric traits and provide many pointers into literature.

A discussion and comparison of the overall recognition performance of vascular biometric traits turns out to be difficult. First, no major commercial players take part in open competitions in this field (in contrast to e.g. fingerprint or face recognition), so the relation between documented recognition accuracy as achieved in these competitions and claimed performance of commercial solutions is not clear. Second, many scientific papers in the field still conduct experiments on private datasets and/or do not release the underlying software for independent verification of the results. As a consequence, many different results are reported and, depending on the used dataset and the employed algorithm, reported results sometimes differ by several orders of magnitude (among many examples, see e.g. [114, 258]). Thus, there is an urgent need for reproducible research in this field to enable a sensible assessment of vascular traits and a comparison to other biometric modalities.

3.1 Finger Vein Recognition Toolchain

An excellent recent survey covering a significant number of manuscripts in the area of finger vein recognition is [234]. Two other resources provide an overview of hand-based vascular biometrics [151, 275] (where the latter is a monograph), also including finger vein recognition, and less recent or less comprehensive surveys of finger vein recognition also exist [59, 120, 247, 250, 300] (which still contain a useful collection and description of work in the area).

A review of finger vein preprocessing techniques is provided in [114]. A selection of manuscripts dedicated to this topic is discussed in the following. Yang and Shi [288] analyse the intrinsic factors causing the degradation of finger vein images and propose a simple but effective scattering removal method to improve visibility of the vessel structure. In order to handle the enhancement problem in areas with vasculature effectively, a directional filtering method based on a family of Gabor filters is proposed. The use of Gabor filters in vessel boundary enhancement is almost omnipresent: Multichannel Gabor filters are used to emphasise vein information of varying width and orientation in images [298]. The vein information obtained at the different scales and orientations of the Gabor filters is then combined to generate an enhanced finger vein image. Grey-Level Grouping (GLG) and Circular Gabor Filters (CGF) are proposed for image enhancement [314], using GLG to reduce illumination fluctuation and improve the contrast of finger vein images, while the CGF strengthens vein ridges in the images. Haze removal techniques based on Koschmieder’s law can approximately solve the biological scattering problem observed in finger vein imagery [236]. Another, related approach is based on a Biological Optical Model (BOM [297]) specific to finger vein imaging according to the principle of light propagation in biological tissues. Based on BOM, the light scattering component is properly estimated and removed for finger vein image restoration.
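To make the idea of oriented Gabor filtering more concrete, the following NumPy sketch builds a small bank of even-symmetric Gabor kernels and combines their responses. It is not the method of any cited paper: the kernel size, `sigma`, `wavelength`, the number of orientations and the pixel-wise maximum used as combination rule are all illustrative assumptions, and only a single scale is used.

```python
import numpy as np


def gabor_kernel(size, sigma, wavelength, theta):
    """Real (even-symmetric) Gabor kernel whose carrier varies along angle theta."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    # Rotate coordinates so the cosine carrier varies along direction theta.
    xr = x * np.cos(theta) + y * np.sin(theta)
    yr = -x * np.sin(theta) + y * np.cos(theta)
    kernel = np.exp(-(xr**2 + yr**2) / (2 * sigma**2)) * np.cos(2 * np.pi * xr / wavelength)
    return kernel - kernel.mean()  # zero mean: flat regions give (almost) no response


def filter2d(image, kernel):
    """Same-size correlation with edge padding (plain NumPy, written for clarity)."""
    kh, kw = kernel.shape
    padded = np.pad(image, ((kh // 2, kh // 2), (kw // 2, kw // 2)), mode="edge")
    out = np.zeros(image.shape, dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += kernel[i, j] * padded[i:i + image.shape[0], j:j + image.shape[1]]
    return out


def gabor_enhance(image, n_orientations=8, size=15, sigma=3.0, wavelength=8.0):
    """Combine an oriented Gabor bank by a pixel-wise maximum (assumed rule)."""
    image = image.astype(float)
    responses = []
    for k in range(n_orientations):
        theta = np.pi * k / n_orientations
        # Vessels are dark valleys under NIR, so negate to make them positive.
        responses.append(-filter2d(image, gabor_kernel(size, sigma, wavelength, theta)))
    return np.max(responses, axis=0)


# Synthetic test image: bright background with one dark vertical "vessel".
image = np.full((41, 41), 200.0)
image[:, 19:22] = 50.0
enhanced = gabor_enhance(image)
print(int(np.argmax(enhanced[20])))  # column of the strongest response in row 20
```

A multi-scale variant would repeat the loop over several `sigma`/`wavelength` pairs before taking the maximum; whether a maximum, a sum or another fusion rule works best depends on the sensor and is left open here.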

Techniques for RoI determination are typically described in the context of descriptions of the entire recognition toolchain. There are hardly any papers dedicated to this issue separately. A typical example is [287], where an inter-phalangeal joint prior is used for finger vein RoI localisation and haze removal methods with the subsequent application of Gabor filters are used for improving visibility of the vascular structure. The determination of the finger boundaries using a simple \(20\,\times \,4\) mask is proposed in [139], containing two rows of \(1\) followed by two rows of \(-1\) for the upper boundary and a horizontally mirrored one for the lower boundary. This approach is further refined in [94], where the finger edges are used to fit a straight line between the detected edges. The parameters of this line are then used to perform an affine transformation which aligns the finger to the centre of the image. A slightly different method is to compute the orientation of the binarised finger RoI using second-order moments and to compensate for the orientation in rotational alignment [130].
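The moment-based orientation estimate mentioned last can be illustrated as follows. This sketch uses the standard image-moment formula for the principal axis of a binary region; the function name, the synthetic finger mask and its dimensions are assumptions made for the example and are not taken from [130].

```python
import numpy as np


def mask_orientation(mask):
    """Angle (radians) of the principal axis of a binary mask.

    The angle is measured from the x-axis (columns) towards the y-axis (rows).
    """
    ys, xs = np.nonzero(mask)
    if xs.size == 0:
        raise ValueError("mask is empty")
    x = xs - xs.mean()
    y = ys - ys.mean()
    # Central second-order moments of the foreground pixels.
    mu20 = np.mean(x * x)
    mu02 = np.mean(y * y)
    mu11 = np.mean(x * y)
    return 0.5 * np.arctan2(2.0 * mu11, mu20 - mu02)


# Synthetic "finger": an elongated rectangle tilted by 10 degrees.
angle = np.deg2rad(10.0)
yy, xx = np.mgrid[0:101, 0:201]
along = (xx - 100) * np.cos(angle) + (yy - 50) * np.sin(angle)
across = -(xx - 100) * np.sin(angle) + (yy - 50) * np.cos(angle)
finger_mask = (np.abs(along) < 80) & (np.abs(across) < 8)

estimated = np.rad2deg(mask_orientation(finger_mask))
print(round(float(estimated), 1))  # close to the 10 degree tilt of the mask
```

Rotating the image by the negative of the estimated angle (with any image rotation routine) then yields the rotational alignment described above.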

The vast majority of papers in the area of finger vein recognition covers the toolchain stages (3)–(5). The systematisation used in the following groups the proposed schemes according to the employed type of features. We start by discussing feature extraction schemes focusing on the vascular structures in the finger vein imagery; see Table 1.1 for a summarising overview of the existing approaches.

Classical techniques resulting in a binary layout of the vascular network (which is typically used as template and is subject to correlation-based template comparison employing alignment compensation) include repeated line tracking [174], maximum curvature [175], principal curvature [32], mean curvature [244] and wide line detection [94] (where the latter technique proposes a finger rotation compensating template comparison stage). A collection of these features (including the use of spectral minutiae) has also been applied to the dorsal finger side [219] and has been found to be superior to global features such as ordinal codes. Binary finger vein patterns generated using these techniques have been extracted from both the dorsal and palmar finger sides in a comparison [112].
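The correlation-based comparison with alignment compensation can be sketched as an exhaustive search over small translations. The code below is a simplified illustration, not the comparator of any cited work: the Dice-style normalisation, the search range `max_shift` and the synthetic templates are assumptions, and only translations (no rotations) are compensated.

```python
import numpy as np


def shifted_overlap_score(reference, probe, max_shift=4):
    """Best normalised overlap of two binary vein images over small translations.

    Returns a value in [0, 1]; 1 means the vein pixels coincide exactly
    inside the common window for at least one displacement.
    """
    reference = reference.astype(bool)
    probe = probe.astype(bool)
    h, w = reference.shape
    best = 0.0
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            # Common windows of the two images for this displacement.
            r = reference[max(0, dy):h + min(0, dy), max(0, dx):w + min(0, dx)]
            p = probe[max(0, -dy):h + min(0, -dy), max(0, -dx):w + min(0, -dx)]
            total = r.sum() + p.sum()
            if total:
                best = max(best, 2.0 * np.logical_and(r, p).sum() / total)
    return float(best)


# Synthetic binary vein patterns, for illustration only.
reference = np.zeros((40, 60), dtype=np.uint8)
reference[10, 5:50] = 1    # one horizontal vessel
reference[5:35, 30] = 1    # one vertical vessel
probe = np.roll(reference, (2, 3), axis=(0, 1))  # same pattern, displaced finger
other = np.zeros((40, 60), dtype=np.uint8)
other[30, 5:50] = 1        # a different pattern

print(shifted_overlap_score(reference, probe))  # 1.0
print(shifted_overlap_score(reference, other) < 0.1)  # True
```

In practice the same search is usually carried out with a cross-correlation, which is considerably faster than the explicit double loop shown here.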

The simplest possible binarisation strategy is adaptive local binarisation, which has been proposed together with a Fourier-domain computation of matching pixels from the resulting vessel structure [248]. Matched filters as well as Gabor filters with subsequent binarisation and morphological post-processing have also been suggested to generate binary vessel structure templates [130]. A repetitive scanning of the images in steps of 15 degrees for strong edges after applying a Sobel edge detector is proposed in combination with superposition of the strong edge responses and subsequent thinning [326]. A fusion of the results when applying this process to several samples leads to the final template.
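As an illustration of adaptive local binarisation, the sketch below labels a pixel as vessel when it is darker than the mean of its neighbourhood by a margin. It covers only the binarisation step, not the Fourier-domain comparison of [248]; the window `radius`, the `offset` and the synthetic image are assumed values chosen for the example.

```python
import numpy as np


def local_mean(image, radius):
    """Mean over a (2*radius+1)^2 window around each pixel, via an integral image."""
    k = 2 * radius + 1
    padded = np.pad(image.astype(float), radius, mode="edge")
    # Integral image with a leading row and column of zeros.
    integral = np.pad(padded, ((1, 0), (1, 0)), mode="constant").cumsum(0).cumsum(1)
    h, w = image.shape
    window_sum = (integral[k:k + h, k:k + w] - integral[:h, k:k + w]
                  - integral[k:k + h, :w] + integral[:h, :w])
    return window_sum / (k * k)


def adaptive_binarise(image, radius=7, offset=5.0):
    """True where a pixel is darker than its local mean by more than `offset`."""
    return image.astype(float) < local_mean(image, radius) - offset


# Synthetic image: horizontal illumination gradient plus one dark "vessel" row.
image = np.tile(np.linspace(100.0, 200.0, 60), (40, 1))
image[20, :] -= 30.0
vessels = adaptive_binarise(image)
print(int(vessels.sum()), bool(vessels[20].all()))  # 60 True
```

Because the threshold follows the local brightness, the vessel is found along the whole gradient, whereas a single global threshold would either miss its bright end or swallow the dark background.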

The more recent techniques focusing on the entire vascular structure take care of potential deformations and misalignment of the vascular network. A matched filtering at various scales is applied to the sample [76], and subsequently local and global characteristics of enhanced vein images are fused to obtain an accurate vein pattern. The extracted structure is then subjected to a geometric deformation compensating template comparison process. Also, [163] introduces a template comparison process in which a finger-shaped model and a non-rigid registration method are used to correct deformation caused by changes in finger posture. Vessel feature points are extracted based on curvature of image intensity profiles. Another approach considers two levels of vascular structures which are extracted from the orientation map-guided curvature based on the valley- or half valley-shaped cross-sectional profile [299]. After thinning, the reliable vessel branches are defined as vein backbone, which is used to align two images to overcome finger displacement effects. Actual comparison uses elastic matching between the two entire vessel patterns and the degree of overlap between the corresponding vein backbones. A local approach computing vascular patterns in corresponding localised patches instead of the entire images is proposed in [209]; template comparison is done in local patches and results are fused. The corresponding patches are identified using mated SIFT key points. Longitudinal rotation correction in both directions using a predefined angle combined with score-level fusion is proposed and successfully applied in [203].

A different approach not explicitly leading to a binary vascular network as template is the employment of a set of Spatial Curve Filters (SCFs) with variations in curvature and orientation [292]. Thus the vascular network consists of vessel curve segments. As finger vessels vary in diameters naturally, a Curve Length Field (CLF) estimation method is proposed to make weighted SCFs adaptive to vein width variations. Finally, with CLF constraints, a vein vector field is built and used to represent the vascular structure used in template comparison.

Subsequent work uses vein minutiae (vessel bifurcations and endings) to represent the vascular structure. In [293], it is proposed to extract each bifurcation point and its local vein branches, named tri-branch vein structure, from the vascular pattern. As these features are particularly well suited to identify imposter mismatches, they are used as the first stage in a serial fusion before conducting a second comparison stage using the entire vascular structure. Minutiae pairs are the basis of another feature extraction approach [148], which consists of minutiae pairing based on an SVD-based decomposition of the correlation-weighted proximity matrix. False pairs are removed based on an LBP variant applied locally, and template comparison is conducted based on the average similarity degree of the remaining pairs. A fixed-length minutiae-based template representation originating in fingerprint recognition, i.e. minutiae cylinder codes, has also been applied successfully to finger vein imagery [84].
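
To make the notion of vein minutiae concrete, the sketch below locates endings and bifurcations on a one-pixel-wide binary skeleton using the crossing number, a standard technique from fingerprint processing. It is an illustrative assumption that a comparable detector is used in the cited works; the function names are hypothetical.

```python
def crossing_number(skel, r, c):
    """Half the number of 0/1 transitions around the 8-neighbourhood of (r, c)."""
    # Neighbours in cyclic order: N, NE, E, SE, S, SW, W, NW.
    ring = [(-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1), (-1, -1)]
    vals = [skel[r + dr][c + dc] for dr, dc in ring]
    return sum(abs(vals[i] - vals[(i + 1) % 8]) for i in range(8)) // 2


def find_minutiae(skel):
    """Return (endings, bifurcations) found on interior skeleton pixels."""
    endings, bifurcations = [], []
    for r in range(1, len(skel) - 1):
        for c in range(1, len(skel[0]) - 1):
            if skel[r][c] != 1:
                continue
            cn = crossing_number(skel, r, c)
            if cn == 1:
                endings.append((r, c))       # vessel ending
            elif cn == 3:
                bifurcations.append((r, c))  # vessel bifurcation
    return endings, bifurcations


# Toy Y-shaped skeleton: two branches merging into one stem.
skeleton = [
    [0, 0, 0, 0, 0, 0, 0],
    [0, 1, 0, 0, 0, 1, 0],
    [0, 0, 1, 0, 1, 0, 0],
    [0, 0, 0, 1, 0, 0, 0],
    [0, 0, 0, 1, 0, 0, 0],
    [0, 0, 0, 1, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0],
]
endings, bifurcations = find_minutiae(skeleton)
# endings      -> [(1, 1), (1, 5), (5, 3)]
# bifurcations -> [(3, 3)]
```

In real data the skeleton usually needs cleaning (spur and gap removal) before this step, otherwise many spurious minutiae are reported.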

Finally, semantic segmentation convolutional neural networks have been used to extract binary vascular structures subsequently used in classical binary template comparison. The first documented approach uses a combination of vein pixel classifier and a shallow segmentation network [91], while subsequent approaches rely on fully fledged deep segmentation networks and deal with the issue of training data generation regarding the impact of training data quality [100] and a joint training with manually labelled and automatically generated training data [101]. This book contains a chapter extending the latter two approaches [102].

Secondly, we discuss feature extraction schemes interpreting the finger vein sample images as texture image without specific vascular properties. See Table 1.2 for a summarising overview of the existing approaches.

An approach with main emphasis on alignment conducts a fuzzy contrast enhancement algorithm as first stage with subsequent mutual information and affine transformation-based registration technique [11]. Template comparison is conducted by simple correlation assessment. LBP is among the most prominent texture-oriented feature extraction schemes, also for finger vein data. Classical LBP is applied before a fusion of the results of different fingers [290] and the determination of personalised best bits from multiple enrollment samples [289]. Another approach based on classical LBP features applies a vasculature-minutiae-based alignment as first stage [139]. In [138], a Gaussian HP filter is applied before a binarisation with LBP and LDP. Further texture-oriented feature extraction techniques include correlating Fourier phase information of two samples while omitting the high-frequency parts [157] and the development of personalised feature subsets (employing a sparse weight vector) of Pyramid Histograms of Grey, Texture and Orientation Gradients (PHGTOG) [279]. SIFT/SURF keypoints are used for direct template comparison in finger vein samples [114]. A more advanced technique, introducing a deformable finger vein recognition framework [31], extracts PCA-SIFT features and applies bidirectional deformable spatial pyramid comparison.
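
Since LBP recurs throughout this section, a minimal sketch of the classical 3x3 operator may be helpful. The bit ordering (clockwise from the top-left neighbour) and the use of a plain 256-bin histogram as template are assumptions made for illustration; the cited schemes differ in such details.

```python
def lbp_code(image, r, c):
    """8-bit LBP code of the interior pixel (r, c) from its 3x3 neighbourhood."""
    centre = image[r][c]
    # Neighbours visited clockwise, starting at the top-left pixel.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    code = 0
    for bit, (dr, dc) in enumerate(offsets):
        # A neighbour at least as bright as the centre sets its bit.
        if image[r + dr][c + dc] >= centre:
            code |= 1 << bit
    return code


def lbp_histogram(image):
    """256-bin histogram of LBP codes over all interior pixels."""
    hist = [0] * 256
    for r in range(1, len(image) - 1):
        for c in range(1, len(image[0]) - 1):
            hist[lbp_code(image, r, c)] += 1
    return hist


patch = [
    [5, 9, 1],
    [4, 6, 7],
    [2, 8, 3],
]
code = lbp_code(patch, 1, 1)   # neighbours 9, 7 and 8 exceed 6 -> bits 1, 3, 5 -> 42
hist = lbp_histogram(patch)
```

Two samples can then be compared by a histogram distance, or by the Hamming distance between per-pixel codes when the samples are aligned.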

One of the latest developments is the usage of learned binary codes. The first variant [78] is based on multidirectional pixel difference vectors (which are basically simple co-occurrence matrices) which are mapped into low-dimensional binary codes by minimising the information loss between original codes and learned vectors and by conducting a Fisher discriminant analysis (the between-class variation of the local binary features is maximised and the within-class variation of the local binary features is minimised). Each finger vein image is represented as a histogram feature by clustering and pooling these binary codes. A second variant [280] is based on a subject relation graph which captures correlations among subjects. Based on this graph, binary templates are transformed in an optimisation process, in which the distance between templates from different subjects is maximised and templates provide maximal information about subjects.

The topic of learned codes naturally leads to the consideration of deep learning techniques in finger vein recognition. The simplest approach is to extract features from certain layers of pretrained classification networks and to feed those features into a classifier to determine vein pattern similarity to result in a recognition scheme [40, 144]. A corresponding dual-network approach based on combining a Deep Belief Network (FBF-DBN) and a Convolutional Neural Network (CNN) and using vessel feature point image data as input is introduced in [30].

Another approach to apply traditional classification networks is to train the network with the available enrollment data of certain classes (i.e. subjects). A model of a reduced complexity, four-layered CNN classifier with fused convolutional-subsampling architecture for finger vein recognition is proposed for this [228], besides a CNN classifier of similar structure [98]. More advanced is a lightweight two-channel network [60] that has only three convolution layers for finger vein verification. A mini-RoI is extracted from the original images to better solve the displacement problem and used in a second channel of the network. Finally, a two-stream network is presented to integrate the original image and the mini-RoI. This approach, however, has significant drawbacks in case new users have to be enrolled as the networks have to be re-trained, which is not practical.

A more sensible approach is to employ fine-tuned pretrained models of VGG-16, VGG-19, and VGG-face classifiers to determine whether a pair of input images belongs to the same subject or not [89]. Thus, authors eliminated the need for training in case of new enrollment. Similarly, a recent approach [284] uses several known CNN models (namely, light CNN (LCNN), LCNN with triplet similarity loss function, and a modified version of VGG-16) to learn useful feature representations and compare the similarity between finger vein images.

Finally, we aim to discuss certain specific topics in the area of finger vein recognition. It has been suggested to incorporate user individuality, i.e. user role and user gullibility, into the traditional cost-sensitive learning model to further lower misrecognition cost in a finger vein recognition scheme [301]. A study on the individuality of finger vein templates [304] analysing large-scale datasets and corresponding imposter scores showed that at least the considered finger vein templates are sufficiently unique to distinguish one person from another in such large scale datasets. This book contains a chapter [128] on assessing the amount of discriminatory information in finger vein templates. Fusion has been considered in multiple contexts. Different feature extractions schemes have been combined in score-level fusion [114] as well as feature-level fusion [110], while the recognition scores of several fingers have also been combined [290] ([318] aims to identify the finger suited best for finger vein recognition). Multimodal fusion has been enabled by the development of dedicated sensors for this application context, see e.g. for combined fingerprint and finger vein recognition [140, 222]. A fusion of finger vein and finger image features is suggested in [130, 302], where the former technique uses the vascular finger vein structure and normalised texture which are fused into a feature image from which block-based texture is extracted, while the latter fuses the vascular structure binary features at score level with texture features extracted by Radon transform and Gabor filters. Finger vein feature comparison scores (using phase-only correlation) and finger geometry scores (using centroid contour distance) are fused in [10].
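
Score-level fusion, as mentioned above, is commonly realised by bringing the scores of the individual comparators to a common range and combining them. The sketch below uses min-max normalisation and a weighted sum; this particular rule, the score ranges and the weights are illustrative assumptions and are not claimed to be the rule used in any of the cited works.

```python
def min_max_normalise(score, lo, hi):
    """Map a raw comparison score from [lo, hi] to [0, 1]."""
    return (score - lo) / (hi - lo)


def fuse_scores(scores, ranges, weights):
    """Weighted-sum fusion of comparison scores after min-max normalisation.

    `ranges` holds an assumed (lo, hi) score range per comparator, e.g. as
    estimated on training data.
    """
    normalised = [min_max_normalise(s, lo, hi) for s, (lo, hi) in zip(scores, ranges)]
    return sum(w * n for w, n in zip(weights, normalised)) / sum(weights)


# Two hypothetical comparators with different score ranges, equally weighted.
fused = fuse_scores(scores=[0.5, 30.0], ranges=[(0.0, 1.0), (0.0, 100.0)], weights=[1.0, 1.0])
```

With equal weights this reduces to the mean of the normalised scores (about 0.4 in the example); unequal weights allow a more reliable comparator to dominate.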

A topic of current intensive research is template comparison techniques (and suited feature representations) enabling the compensation of finger rotation and finger deformation [76, 94, 163, 203, 204, 299]. Somewhat related is the consideration of multi-perspective finger vein recognition, where two [153] and multiple [205] perspectives are fused to improve recognition results of single-perspective schemes. A chapter in this handbook contains the proposal of a dedicated three-view finger vein scanner [258], while an in-depth analysis of multi-perspective fusion techniques is provided in another one [206].

3.2 Palm Vein Recognition Toolchain

Palm vein recognition techniques are reviewed in [1, 226], while [151, 275] review work on various types of hand-based vein recognition techniques including palm veins. The palm vein recognition toolchain has different requirements compared to the finger vein one, which is also expressed by different techniques being applied. In particular, finger vein sensors typically require the finger to be placed directly on the sensor (not contactless), while palm vein sensors (at least the more recent models) often facilitate a real contactless acquisition. As a consequence, the variability with respect to relative position between hand and sensor can be high, and especially the relative position of sensor plane and hand plane in 3D space may vary significantly causing at least affine changes in the textural representation of the palm vein RoI imagery. Also, RoI extraction is less straightforward compared to finger veins; however, in many cases we see techniques borrowed from palmprint recognition (i.e. extracting a central rectangular area defined by a line found by connecting inter-phalangeal joints). It has to be pointed out, though, that most public palm vein datasets do not exhibit these positional variations, so that recognition results of many techniques are quite good, but many of these results cannot be transferred to real contactless acquisition. Note that the amount of work attempting to rely on the vascular structure directly is much lower, while we see more papers applying local descriptors compared to the finger vein field; see Table 1.3 for an overview of the proposed techniques.

We start by describing approaches targeting the vascular structure. Based on an area maximisation strategy for the RoI, [154] propose a novel parameter selection scheme for Gabor filters used in extracting the vascular network. A directional filter bank involving different orientations is designed to extract the vein pattern [277]; subsequently, the Minimum Directional Code (MDC) is employed to encode the line-based vein features. The imbalance among vessel and non-vessel pixels is considered by evaluating the Directional Filtering Magnitude (DFM) and considered in the code construction to obtain better balance of the binary values. A similar idea based on 2-D Gabor filtering [141] proposes a robust directional coding technique entitled “VeinCode” allowing for compact template representation and fast comparison. The “Junction Points” (JP) set [263], which is formed by the line segments extracted from the sample data, contains position and orientation information of detected line segments and is used as feature. Finally, [9] rely on their approach of applying the Biometric Graph Matching (BGM) to graphs derived from skeletons of the vascular network. See a chapter in this book for a recent overview of this type of methodology [8].

Another group of papers applies local descriptors, obviously with the intention to achieve robustness against positional variations as described before. SIFT features are extracted from registered multiple samples after hierarchical image enhancement and feature-level fusion is applied to result in the final template [286]. Also, [133] applies SIFT to binarised patterns after enhancement, while [193] employs SIFT, SURF and Affine-SIFT as feature extraction to histogram equalised sample data. An approach related to histogram of gradients (HOG) is applied in [72, 187], where after the application of matched filters localised histograms encoding vessel directions (denoted as “histogram of vectors”) are generated as features. It is important to note that this work is based on a custom sensor device which is able to apply reflected light as well as transillumination imaging [72]. Another reflected light palm vein sensor prototype is presented in [238]. After a scaling normalisation of the RoI, [172, 173] apply LBP and LDP for local feature encoding. An improved mutual foreground LBP method is presented [108] in which the LBP extraction process is restricted to neighbourhoods of vessels only by first extracting the vascular network using the principle curvature approach. Multiscale vessel enhancement is targeted in [320, 325] which is implemented by a Hessian-phase-based approach in which the eigenvalues of the second-order derivative of the normalised palm vein images are analysed and used as features. In addition, a localised Radon transform is used as feature extraction and (successfully) compared to the “Laplacianpalm” approach (which finds an embedding that preserves local information by basically computing a local variant of PCA [266]).

Finally, a wavelet scattering approach is suggested [57] with subsequent Spectral Regression Kernel Discriminant Analysis (SRKDA) for dimensionality reduction of the generated templates. A ResNet CNN [309] is proposed for feature extraction on a custom dataset of palm vein imagery with preceding classical RoI detection.

Several authors propose to apply multimodal recognition combining palmprint and palm vein biometrics. In [79], a multispectral fusion of multiscale coefficients of image pairs acquired in different bands (e.g. VIS and NIR) is proposed. The reconstructed images are evaluated in terms of quality but unfortunately no recognition experimentation is conducted. A feature-level fusion of their techniques applied to palm vein and palmprint data is proposed in [187, 263, 266]. The mentioned ResNet approach [309] is also applied to both modalities with subsequent feature fusion.

3.3 (Dorsal) Hand Vein Recognition Toolchain

There are no specific review articles on (dorsal) hand vein recognition, but [151, 275] review work on various types of hand-based vein recognition techniques. Contrasting to the traits discussed so far, there is no commercial sensor available dedicated to acquire dorsal hand vein imagery. Besides the devices used to capture the publicly available datasets, several sensor prototypes have been constructed. For example, [35] use a hyperspectral imaging system to identify the spectral bands suited best to represent the vessel structure. Based on PCA applied to different spectral bands, authors were able to identify two bands which optimise the detection of the dorsal veins. Transillumination is compared to reflected light imaging [115] in a recognition context employing several classical recognition toolchains (for most configurations the reflected light approach was superior due to the more uniform illumination—light intensity varies more due to changing thickness of the tissue layers in transillumination). With respect to preprocessing, [316] propose a combination of high-frequency emphasis filtering and histogram equalisation, which has also been successfully applied to finger vein data [114].

Concerning feature extraction, Table 1.4 provides an overview of the existing techniques. We first discuss techniques relying on the extracted vascular structure. Lee et al. [143] use a directional filter bank involving different orientations to extract vein patterns, and the minimum directional code is employed to encode line-based vein features into a binary code. Explicit background treatment is applied similar to the techniques used in [277] for palm veins. The knuckle tips are used as key points for the image normalisation and extraction of the RoI [131]. Comparison scores are generated by a hierarchical comparison score from the four topologies of triangulation in the binarised vein structures, which are generated by Gabor filtering.

Classical vessel minutiae are used as features in [271], while [33] adds dynamic pattern tree comparison to the minutiae representation to accelerate recognition. A fixed-length minutiae-based representation originating from fingerprint biometrics, i.e. spectral minutiae [82], is applied successfully to represent dorsal hand vein minutiae in a corresponding recognition scheme. Biometric graph comparison, as already described before in the context of other vascular modalities, is also applied to graphs constructed from skeletonised dorsal hand vascular networks. Zhang et al. [310] extend the basic graph model consisting of the minutiae of the vein network and their connecting lines to a more detailed one by increasing the number of vertices, describing the profile of the vein shape more accurately. PCA features of patches around minutiae are used as templates in this approach, and thus this is an approach combining vascular structure information with local texture description. This idea is also followed in [93], however, employing different technologies: A novel shape representation methodology is proposed to describe the geometrical structure of the vascular network by integrating both local and holistic aspects and finally combined with LBP texture description. Also, [307] combine geometry and appearance methods and apply these to the Bosphorus dataset, which is presented for the first time in this work. [86] use an ICA representation of the vascular network obtained by thresholding-based binarisation and several post-processing stages.

Texture-oriented feature extraction techniques are treated subsequently. Among them, again key point-based schemes are the most prominent option. A typical toolchain description including the imaging device used, image processing methods proposed for geometric correction, region of interest extraction, image enhancement and vein pattern segmentation, and finally the application of SIFT key point extraction and comparison with several enrollment samples is described in [267]. Similarly, [150] uses contrast enhancement with subsequent application of SIFT in the comparison stage. Hierarchical key points’ selection and mismatch removal is required due to excessive key point generation caused by the enhancement procedure. SIFT with improved key point detection is proposed [262] as the NIR dorsal hand images do not contain many key points. Also, an improved comparison stage is introduced as compared to traditional SIFT key point comparison. Another approach to improve the key point detection stage is taken by [311], where key points are randomly selected and using SIFT descriptors an improved, fine-grained SIFT descriptor comparison is suggested. Alternatively, [249] conduct key point detection by Harris-Laplace and Hessian-Laplace detectors and SIFT descriptors, and corresponding comparison is applied. [270] propose a fusion of multiple sets of SIFT key points which aims at reducing information redundancies and improving the discrimination power, respectively. Different types of key points are proposed to be used by [92], namely, based on Harris corner-ness measurement, Hessian blob-ness measurement and detection of curvature extrema by operating the DoG detector on a human vision inspired image representation (so-called oriented gradient maps).

Also, other types of texture descriptors have been used. A custom acquisition device and LBP feature description is proposed in [268]. Gabor filtering using eight encoding masks is proposed [168] to extract four types of features, which are derived from the magnitude, phase, real and imaginary components of the dorsal hand vein image after Gabor filtering, respectively, and which are then concatenated into feature histograms. Block-based pattern comparison introduced with a Fisher linear discriminant adopts a “divide and conquer” strategy to alleviate the effect of noise and to enhance the discriminative power. A localised (i.e. block-based) statistical texture descriptor denoted as “Gaussian membership function” is employed in [28]. Also, classical CNN architectures have been suggested for feature extraction [144].

Dual-view acquisition has been introduced [215, 216, 315] resulting in 3D point cloud representations of hand veins. Qi et al. [215, 216] propose a 3D point cloud registration for multi-pose acquisition before the point cloud matching vein recognition process based on a kernel correlation method. In [315], both the 3D point clouds of hand veins and knuckle shape are obtained. Edges of the hand veins and knuckle shape are used as key points instead of other feature descriptors because they represent the spatial structure of hand vein patterns and significantly increase the number of key points. A kernel correlation analysis approach is used to register the point clouds.

Multimodal fusion techniques have been used, e.g. [86] use dorsal hand veins as well as palm veins while [28] fuse palmprint, palm–phalanges print and dorsal hand vein recognition. The knuckle tips have been used as key points for the image normalisation and extraction of region of interest in [131]. The comparison subsystem combines the dorsal hand vein scheme [131] and the geometrical features consisting of knuckle point perimeter distances in the acquired images.

3.4 Wrist Vein Recognition Toolchain

There are no specific review articles on wrist vein recognition, but [151, 275] review work on various types of hand-based vein recognition techniques. Overall, the literature on wrist vein recognition is sparse. A low-cost device to capture wrist vein data is introduced [195] with good results when applying standard recognition techniques to the acquired data as described subsequently. Using vascular pattern-related feature extraction, [177] propose the fusion of left and right wrist data; a classical preprocessing cascade is used and binary images resulting from local and global thresholding are fused for each hand. A fast computation of cross-correlation comparison of binary vascular structures with shift compensation is derived in [186]. Another low-cost sensor device is proposed in [221]. Experimentation with the acquired data reveals Log Gabor filtering and a sparse representation classifier to be the best of 10 considered techniques. The fixed-length spectral minutiae representation has been identified to work well on minutiae extracted from the vascular pattern [82].
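
The idea of correlating binary vascular structures while compensating for shifts can be sketched as an exhaustive search over small translations. The version below is a deliberately naive brute-force illustration and not the fast computation derived in [186]; the normalisation (twice the overlap divided by the total number of vessel pixels) and the function names are assumptions.

```python
def shifted_overlap(a, b, dy, dx):
    """Count positions where a[y][x] and b[y + dy][x + dx] are both vessel pixels."""
    rows, cols = len(a), len(a[0])
    total = 0
    for y in range(rows):
        for x in range(cols):
            yy, xx = y + dy, x + dx
            if 0 <= yy < rows and 0 <= xx < cols:
                total += a[y][x] * b[yy][xx]
    return total


def best_shift_score(a, b, max_shift=1):
    """Return (score, (dy, dx)) for the shift of b that best matches a."""
    norm = sum(map(sum, a)) + sum(map(sum, b))
    best_score, best_shift = -1.0, (0, 0)
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            score = 2.0 * shifted_overlap(a, b, dy, dx) / norm if norm else 0.0
            if score > best_score:
                best_score, best_shift = score, (dy, dx)
    return best_score, best_shift


# The probe is the reference vessel moved one pixel to the right.
reference = [[0, 1, 0, 0], [0, 1, 0, 0], [0, 1, 0, 0]]
probe = [[0, 0, 1, 0], [0, 0, 1, 0], [0, 0, 1, 0]]
score, shift = best_shift_score(reference, probe, max_shift=1)
# score -> 1.0, shift -> (0, 1)
```

The cost of this search grows with the square of the admitted shift range, which is the motivation for faster formulations.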

With respect to texture-oriented feature representation, [49] employs a preprocessing consisting of adaptive histogram equalisation and enhancement using a discrete Meyer wavelet. Subsequently, LBP is extracted from patches with subsequent BoF representation in a spatial pyramid.

3.5 Retina Recognition Toolchain

Survey-type contributions on retina recognition can be found in [97, 166] where especially the latter manuscript is a very recent one. Fundus imagery exhibits very different properties as compared to the sample data acquired from hand-related vasculature as shown in Fig. 1.4a. In particular, the vascular network is depicted with high clarity and with far more details with respect to the detailed representation of fine vessels. As the vessels are situated at the surface of the retina, illumination does not have to penetrate tissue and thus no scattering is observed. This has significant impact on the type of feature representations that are mainly used—as the vascular pattern can be extracted with high reliability, the typical features used as templates and in biometric comparisons are based on vascular minutiae. On the other hand, we hardly see texture-oriented techniques being applied. With respect to alignment, only rotational compensation needs to be considered, in case the head or the capturing instrument (in case of mobile capturing) is being rotated. Interestingly, retina recognition is not limited to the authentication of human beings. Barron et al. [15] investigate retinal identification of sheep. The influence of lighting and different human operators is assessed for a commercially available retina biometric technology for sheep identification.

As fundus imaging is used as an important diagnostic tool in (human) medicine (see Sect. 1.8), where the vascular network is mainly targeted as the entity diagnosis is based on, a significant corpus of medical literature exists on techniques to reliably extract the vessel structure (see [260] for a performance comparison of publicly available retinal blood vessel segmentation methods). A wide variety of techniques has been developed, e.g.

- Wavelet decomposition with subsequent edge location refinement [12],

- 2-D Gabor filtering and supervised classification of vessel outlines [241],

- Ridge-based vessel segmentation where the direction of the surface curvature is estimated by the Hessian matrix with additional pixel grouping [245],

- Frangi vessel enhancement in a multiresolution framework [26],

- Application of matched filters, afterwards a piecewise threshold probing for longer edge segments is conducted on the filter responses [90],

- Neural network-based pixel classification after application of edge detection and subsequent PCA [240],

- Laplace-based edge detection with thresholding applied to detected edges followed by a pixel classification step [259],

- Wavelet transform and morphological post-processing of detail sub-band coefficients [137], and

- Supervised multilevel deep segmentation networks [180].

Also, the distinction between arterial and venous vessels in the retina has been addressed in a medical context [95], which could also be exploited by using this additional label in vascular pattern comparison.

When looking at techniques for the recognition toolchain, one of the exceptions not relying on vascular minutiae is represented by an approach relying on Hill’s algorithm [25] in which fundus pixels are averaged in some neighbourhood along scan circles, typically centred around the blind spot. The resulting waveforms (extracted from the green channel) are contrast-enhanced and post-processed in Fourier space. Combining these data for different radii leads to “retina codes” as described in [67]. Another texture-oriented approach [169] applies circular Gabor filters and iterated spatial anisotropic smoothing with subsequent application of SIFT key point detection and matching. A Harris corner detector is used to detect feature points [54], and phase-only correlation is used to determine and compensate for rotation before comparing the detected feature points.
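
The scan-circle idea can be illustrated by sampling intensities along a circle around a given centre, which yields one waveform per radius. The sketch below is a strong simplification: it takes the nearest pixel instead of averaging a neighbourhood, skips contrast enhancement and Fourier post-processing, and uses a hypothetical function name and sampling density.

```python
import math


def scan_circle_waveform(image, centre, radius, n_samples=8):
    """Sample the nearest pixel at n_samples equally spaced angles on a circle."""
    cy, cx = centre
    waveform = []
    for k in range(n_samples):
        angle = 2 * math.pi * k / n_samples
        y = int(round(cy + radius * math.sin(angle)))
        x = int(round(cx + radius * math.cos(angle)))
        waveform.append(image[y][x])
    return waveform


# Synthetic 5x5 "green channel" whose value encodes the position as 10 * y + x.
green = [[10 * y + x for x in range(5)] for y in range(5)]
wave = scan_circle_waveform(green, centre=(2, 2), radius=2, n_samples=4)
# wave -> [24, 42, 20, 2]
```

Repeating the sampling for several radii and stacking the waveforms gives a fixed-size representation in the spirit of the "retina codes" mentioned above.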

All the techniques described in the following rely on an accurate determination of the vascular network as first stage. In a hybrid approach, [261] combine vascular and non-vascular features (i.e. texture–structure information) for retina-based recognition. The entire retinal vessel network is extracted, registered and finally subject to similarity assessment [85], and a strong focus on a scale, rotation and translation compensating comparison of retinal vascular network is set by [127]. In [13], an angular and radial partitioning of the vascular network is proposed where the number of vessel pixels is recorded in each partition and the comparison of the resulting feature vector is done in Fourier space. In [66], retinal vessels are detected by an unsupervised method based on direction information. The vessel structures are co-registered via a point set alignment algorithm and employed features also exploit directional information as also used for vessel segmentation. In [182], not the vessels but the regions surrounded by vessels are used and characterised as discriminating entities. Features of the regions are compared, ranging from simple statistical ones to more sophisticated characteristics in a hierarchical similarity assessment process.
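
The angular and radial partitioning described above can be sketched as counting vessel pixels in ring and sector cells around a reference point. The number of rings and sectors, the function names and the use of DFT magnitudes for comparison are assumptions made for illustration and are not details taken from [13]. One plausible reason for working in Fourier space is that the magnitudes of a discrete Fourier transform do not change under a cyclic shift of the sector counts, which is what an in-plane rotation produces.

```python
import cmath
import math


def angular_radial_features(vessel_pixels, centre, max_radius, n_rings=2, n_sectors=4):
    """Count vessel pixels per (ring, sector) cell around `centre`."""
    counts = [0] * (n_rings * n_sectors)
    cy, cx = centre
    for y, x in vessel_pixels:
        dy, dx = y - cy, x - cx
        radius = math.hypot(dy, dx)
        if radius >= max_radius:
            continue  # outside the partitioned disc
        ring = int(radius / max_radius * n_rings)
        angle = math.atan2(dy, dx) % (2 * math.pi)
        sector = min(int(angle / (2 * math.pi) * n_sectors), n_sectors - 1)
        counts[ring * n_sectors + sector] += 1
    return counts


def dft_magnitudes(values):
    """Magnitudes of the discrete Fourier transform of a short sequence."""
    n = len(values)
    return [
        abs(sum(v * cmath.exp(-2j * cmath.pi * k * i / n) for i, v in enumerate(values)))
        for k in range(n)
    ]


pixels = [(1, 1), (1, -1), (-2, -2), (-1, 1), (3, 3)]  # (y, x) vessel coordinates
features = angular_radial_features(pixels, centre=(0, 0), max_radius=4)
# features -> [1, 1, 0, 1, 0, 0, 1, 0]

inner_ring = features[:4]
rotated_ring = inner_ring[1:] + inner_ring[:1]  # same ring, rotated by one sector
```

Here `dft_magnitudes(inner_ring)` and `dft_magnitudes(rotated_ring)` agree up to rounding error, so a distance between the magnitude vectors ignores rotations by whole sectors.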

All subsequent techniques rely on the extraction of retinal minutiae, i.e. vessel bifurcations, crossings and endings, respectively. In most cases, the vascular pattern is extracted from the green channel after some preprocessing stages, with subsequent scanning of the identified vessel skeleton for minutiae [145, 191, 285] and a final minutiae comparison stage. An important skeleton post-processing stage is the elimination of spurs, breakages and short vessels as described in [61]. The pure location of minutiae is augmented by also considering relative angles to four neighbouring minutiae in [207]. Biometric Graph Matching, relying on the spatial graph connecting two vessel minutiae points by a straight line of certain length and angle, has also been applied to retinal data [134]. In [22], only minutiae points from major blood vessels are considered (to increase robustness). Features generated from these selected minutiae are invariant to rotation, translation and scaling as inherited from the applied geometric hashing. A graph-based feature points’ comparison followed by pruning of wrongly matched feature points is proposed in [190]. Pruning is done based on a Least-Median-Squares estimator that enforces an affine transformation geometric constraint.

The actual information content of retinal data has been investigated in some detail [232], with particular focus set on minutiae-type [103, 232] and vessel-representation-type templates [7], respectively.

3.6 Sclera Recognition Toolchain

An excellent survey of sclera recognition techniques published up to 2012 can be found in [44]. Sclera recognition is the most difficult vascular trait as explained subsequently. While imaging can be done with traditional cameras, even from a distance and on the move, there are distinct difficulties in the processing toolchain: (i) sclera segmentation involves very different border types and non-homogeneous texture and is thus highly non-trivial especially when considering off-angle imagery and (ii) the fine-grained nature of the vascular pattern and its movement in several layers when the eye is moving makes feature extraction difficult in case of sensitivity against these changes. As a consequence, rather sophisticated and involved techniques have been developed and the recognition accuracy, in particular under unconstrained conditions, is lower as compared to other vascular traits. Compared to other vascular traits, only a small number of research groups have published on sclera recognition. This book contains a chapter on using deep learning techniques in sclera segmentation and recognition, respectively [229].

A few papers deal with a restricted part of the recognition toolchain. As gaze detection is of high importance for subsequent segmentation and the determination of the eventual off-angle extent, [3] cover this topic based on the relative position of iris and sclera pixels. This relative position is determined on a scan line connecting the two eye corners. After pupil detection, starting from the iris centre, flesh-coloured pixels are scanned to detect eyelids. Additionally, a Harris corner detector is applied and the centroid of detected corners is considered. Fusing the information about corners and flesh-coloured pixels in a way to look for the points with largest distance to the pupil leads to the eye corners.

Also, sclera segmentation (as covered in the corresponding challenges/competitions, see Sect. 1.4) has been investigated in isolated manner. Three different feature extractors, i.e. local colour-space pixel relations in various colour spaces as used in iris segmentation, Zernike moments, and HOGs, are fused into a two-stage classifier consisting of three parallel classifiers in the first stage and a shallow neural net as second stage in [217]. Also, deep-learning-based semantic segmentation has been used by combining conditional random fields and a classical CNN segmentation strategy [170].

Subsequent papers comprise the entire sclera recognition toolchain. Crihalmeanu and Ross [37] introduce a novel algorithm for segmentation based on a normalised sclera index measure. In the stage following segmentation, line filters are used for vessel enhancement before extracting SURF key points and vessel minutiae. After multiscale elastic registration using these landmarks, direct correlation between extracted sclera areas is computed as biometric comparison.

Both [2, 4] rely on gaze detection [3] to guide the segmentation stage, which applies a classical integro-differential operator for iris boundary detection, while for the sclera–eyelid boundary the first approach relies on fusing a non-skin and low saturation map, respectively. After this fusion, which involves an erosion of the low saturation map, the convex hull is computed for the final determination of the sclera area. The second approach fuses multiple colour space skin classifiers to overcome the noise factors introduced through acquiring sclera images such as motion, blur, gaze and rotation. For coping with template rotation and distance scaling alignment, the sclera is divided into two sections and Harris corner detection is used to compute four internal sclera corners. The angles among those corners are normalised to compensate for rotation, and the area is resized to a normalised number of pixels. For feature extraction, CLAHE enhancement is followed by Gabor filtering. The down-sampled magnitude information is subjected to kernel Fisher discriminant analysis, and the resulting data are subjected to Mahalanobis cosine similarity determination for biometric template comparison. Alkassar et al. [5] set the focus on applying sclera recognition on the move at a distance by applying the methodology of [2, 4] to corresponding datasets.

Fuzzy C-means clustering sclera segmentation is proposed by [43]. For enhancement, high-frequency emphasis filtering is done, followed by discrete Meyer wavelet filtering. Dense local directional patterns are extracted subsequently and fed into a bag of features template construction.

Also, active contour techniques have been applied in the segmentation stage as follows. A sclera pixel candidate selection is done after iris and glare detection by looking for pixels which are of non-flesh type and exhibit low saturation. Refinement of sclera region boundaries is done based on Fourier active contours [322]. A binary vessel mask image is obtained after Gabor filtering of the sclera area. The extracted skeleton is used to extract data for a line descriptor (using length and angle to describe line segments). After sclera region registration using RANSAC, the line segment information is used in the template comparison process.

Again, [6] use the integro-differential operator to extract the iris boundary. After a check for sufficient sclera pixels (to detect possibly closed eyes) by determination of the number of non-skin pixels, an active contours approach is used for the detection of the sclera–eyelid boundary. For feature extraction, Harris corner and edge detections are applied and the phase of Log Gabor filtering of a patch centred around the Harris points is used as template information. For biometric comparison, alignment is conducted to the centre of the iris and by applying RANSAC to the Harris points.
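As an illustration of the low-saturation criterion used for sclera pixel candidate selection, the following minimal Python sketch marks pixels whose HSV saturation lies below a threshold. It is not the implementation of any of the cited papers: the function name `low_saturation_mask` and the threshold value of 0.25 are assumptions made for this example, and the non-flesh test, glare detection and morphological post-processing are deliberately omitted.

```python
import colorsys


def low_saturation_mask(rgb_image, max_saturation=0.25):
    """Return a boolean mask of sclera candidate pixels.

    rgb_image is a list of rows, each row a list of (r, g, b) tuples
    with 8-bit channel values. The threshold is a hypothetical value.
    """
    mask = []
    for row in rgb_image:
        mask_row = []
        for r, g, b in row:
            # colorsys expects channel values in [0, 1]
            _, saturation, _ = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            mask_row.append(saturation <= max_saturation)
        mask.append(mask_row)
    return mask


# Tiny 2x2 example: whitish pixels are kept, skin-like pixels are rejected.
image = [
    [(240, 240, 235), (200, 120, 100)],
    [(30, 20, 15), (250, 250, 250)],
]
candidates = low_saturation_mask(image)
```

In a complete toolchain, such a mask would only be a first candidate map that is combined with a non-skin map and refined afterwards, e.g. by the active contour step described above.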

Oh et al. [188] propose a multi-trait fusion based on score-level fusion of periocular and binary sclera features, respectively.
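To make the notion of score-level fusion concrete, the sketch below normalises two lists of comparison scores to the range [0, 1] and combines them by a weighted sum. This is a generic textbook-style rule and not necessarily the fusion rule used in [188]; the function names, the min-max normalisation and the equal default weight are assumptions made for this example.

```python
def min_max(scores):
    """Map scores linearly to [0, 1]; constant inputs map to 0.0."""
    lo, hi = min(scores), max(scores)
    if hi == lo:
        return [0.0 for _ in scores]
    return [(s - lo) / (hi - lo) for s in scores]


def fuse(periocular_scores, sclera_scores, weight=0.5):
    """Weighted-sum fusion of two score lists of equal length.

    weight is the (hypothetical) contribution of the periocular scores.
    """
    p = min_max(periocular_scores)
    s = min_max(sclera_scores)
    return [weight * a + (1 - weight) * b for a, b in zip(p, s)]


# Scores of three comparisons from two comparators with different ranges.
fused = fuse([1, 2, 3], [10, 30, 50])
```

Normalisation is needed here because the two comparators produce scores on different scales; without it, the comparator with the larger numeric range would dominate the sum.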

4 Datasets, Competitions and Open-Source Software

4.1 Hand-Based Vascular Traits

Finger vein recognition has been the vascular modality that has been researched most intensively in the last years, resulting in the largest set of public datasets available for experimentation and reproducible research as displayed in Table 1.5. The majority is acquired in palmar view, but, especially in more recent years, dorsal-view data have also become available. All datasets are imaged using the transillumination principle. As a significant limitation, the largest number of individuals covered by any of these datasets is 610 (THU-FVFDT), while none of the others surpasses 156 individuals. This is not enough for predicting behaviour when applied to large-scale or even medium-scale populations.

There are also “semi-public” datasets, i.e. datasets that can only be analysed in the context of a visit to the corresponding institution, including GUC45 [81], GUC-FPFV-DB [225] and GUC-Dors-FV-DB [219] (where the former two are palmar datasets and the latter is a dorsal dataset). A special case is the (large-scale) datasets of Peking University, which are only partially available, but can be interfaced by the RATE (Recognition Algorithm Test Engine; see Footnote 6), which has also been used in the series of (International) Finger Vein Recognition Contests (ICFVR/FVRC/PFVR) [281, 282, 303, 312]. This series of contests demonstrated the advances made in this field, e.g. the winner of 2017 improved the EER from 2.64 to 0.48% compared to the winner of 2016 [312].
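The EER (equal error rate) quoted for these contests is the operating point at which the false match rate equals the false non-match rate. The following Python sketch approximates it from two lists of comparison scores. It is a simplified illustration and not the evaluation protocol of RATE or of the contests: it assumes similarity scores (higher means more similar), and the function name and the toy scores are made up for this example.

```python
def equal_error_rate(genuine, impostor):
    """Approximate the EER for similarity scores.

    A comparison is accepted if its score is >= the threshold. All
    observed scores are tried as thresholds, and the point where the
    false match rate (FMR) and the false non-match rate (FNMR) are
    closest is reported as the mean of the two rates.
    """
    best_gap, eer = float("inf"), None
    for t in sorted(set(genuine) | set(impostor)):
        fnmr = sum(s < t for s in genuine) / len(genuine)
        fmr = sum(s >= t for s in impostor) / len(impostor)
        gap = abs(fmr - fnmr)
        if gap < best_gap:
            best_gap, eer = gap, (fmr + fnmr) / 2
    return eer


# Toy scores: one genuine comparison scores low, one impostor scores high.
genuine_scores = [0.9, 0.8, 0.7, 0.4]
impostor_scores = [0.1, 0.2, 0.3, 0.6]
eer = equal_error_rate(genuine_scores, impostor_scores)  # 0.25
```

With only a few scores, the two error rates rarely coincide exactly, which is why the sketch reports the mean of both rates at the closest threshold; evaluations on large score sets typically interpolate instead.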

The datasets publicly available for hand vein recognition are more diverse as shown in Table 1.6. Palmar, dorsal and wrist datasets are available, and we also find reflected light as well as transillumination imaging being applied. However, again, the maximal number of subjects covered in these datasets is 110, and thus the same limitations as with finger vein data do apply.

VeinPLUS [73] is a semi-public hand vein dataset (reflected light and transillumination, resolution of \(2784\times 1856\) pixels with RoI of \(500\times 500\) pixels). To the best of the authors’ knowledge, no public open competition has been organised in this area.

4.2 Eye-Based Vascular Traits

For retina recognition, the availability of public fundus image datasets is very limited as shown in Table 1.7. Even worse, there are only two datasets (i.e. VARIA and RIDB) which contain more than a single image per subject. The reason is that the other datasets originate from a medical background and are mostly used to investigate techniques for vessel segmentation (thus, the availability of corresponding segmentation ground truth is important). The low number of subjects (20 for RIDB) and the low number of images per subject (233 images from 139 subjects for VARIA) make the modelling of intra-class variability a challenging task. For the medical datasets, this is not possible at all based on real data; instead, intra-class variability has been simulated by introducing distortions to the images [67].

The authors are not aware of any open or public competition for retina biometrics.

For sclera-based biometrics, sclera segmentation (and recognition) competitions were organised from 2015 to 2018 (see Footnote 7) (SSBC’15 [45], SSRBC’16 [46], SSERBC’17 [48], SSBC’18 [47]) based on the SSRBC Dataset (2 eyes of 82 individuals, RGB, 4 angles) for which segmentation ground truth is being prepared. However, this dataset is not public and only training data are made available to participants of these competitions. Apart from this dataset, no dedicated sclera data are available and consequently, most experiments are conducted on the VIS UBIRIS datasets: UBIRIS v1 [201] and UBIRIS v2 [202].

Synthetic sample data has been generated for several biometric modalities including fingerprints (generated by SFinGe [160] and included as an entire synthetic dataset in FVC2004 [159]) and iris (generated from iris codes using genetic algorithms [69] or entirely synthetic [38, 327]), for example. The motivation is to generate (large-scale) realistic datasets without requiring human enrolment, thus avoiding all potential pitfalls with respect to privacy regulations and consent forms. Also, for vascular structures, synthetic generation has been discussed and some interesting results have been obtained. The general synthesis of blood vessels (more from a medical perspective) is discussed in [276] where Generative Adversarial Networks (GANs) are employed. The synthesis of fundus imagery is discussed entirely with a medical background [24, 36, 64, 75] where again the latter two papers rely on GAN technology. Within the biometric context, finger vein [87] as well as sclera [42] data synthesis has been discussed and rather realistic results have been achieved.

Open-source or free software is a scarce resource in the field of vascular biometrics, a fact that we aim to improve on with this book project. In the context of the (medical) analysis of retinal vasculature, retinal vessel extraction software based on wavelet-domain techniques has been provided: The ARIA MATLAB package based on [12] and a second MATLAB software package termed mlvessel (see Footnote 8) based on the methods described in [241].

For finger vein recognition, B. T. Ton (see Footnote 9) provides MATLAB implementations of Repeated Line Tracking [174], Maximum Curvature [175], and the Wide Line Detector [94] (see [255] for results) and a collection of related preprocessing techniques (e.g. region detection [139] and normalisation [94]). These implementations are the nucleus for both of the subsequent libraries/SDKs.

The “Biometric Vein Recognition Library” (see Footnote 10) is an open-source tool consisting of a series of plugins for bob.bio.base, IDIAP’s open-source biometric recognition platform. With respect to (finger) vein recognition, this library implements Repeated Line Tracking [174], Maximum Curvature [175] and the Wide Line Detector [94], all with the Miura method used for template comparison. For palm vein recognition (see Footnote 11), a local binary pattern-based approach is implemented.

Finally, the “PLUS OpenVein Finger- and Hand-Vein SDK” (see Footnote 12) is currently the largest open-source toolbox for vascular-related biometric recognition and is a feature extraction and template comparison/evaluation framework for finger and hand vein recognition implemented in MATLAB. A chapter in this book [116] is dedicated to a detailed description of this software.

5 Template Protection

Template protection schemes are of high relevance when it comes to the security of templates in biometric databases, especially in case of database compromise. As protection of biometric templates by classical encryption does not solve all associated security concerns (as the comparison has to be done after the decryption of templates and thus, these are again exposed to potential attackers), a large variety of template protection schemes has been developed. Typically, these techniques are categorised into Biometric Crypto Systems (BCS), which ultimately target the release of a stable cryptographic key upon presentation of a biometric trait, and Cancelable Biometrics (CB), where biometric sample or template data are subjected to a key-dependent transformation such that it is possible to revoke a template in case it has been compromised [227]. According to [99], each class of template protection schemes can be further divided into two subclasses. BCS can either be key binding (a key, which has previously been bound to the biometric features, is obtained upon presentation of the biometric trait) or key generating (the key is generated directly from the biometric features, often using informed quantisation techniques). CB (also termed feature transformation schemes) can be subdivided into salting and non-invertible transformations [99]. If an adversary gets access to the key used in the context of the salting approach, the original data can be restored by inverting the salting method. Thus, the key needs to be handled with special care and stored safely. This drawback of the salting approaches can be solved by using non-invertible transformations as they are based on the application of one-way functions which cannot be reversed. In this handbook, two chapters are devoted to template protection schemes for finger vein recognition [121, 129] and both fall into the CB category.
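The drawback of salting mentioned above can be illustrated with a toy example. The Python sketch below salts a binary template by XORing it with a key-dependent bit mask. This is only one conceivable salting transformation, chosen for brevity, and not a scheme from the cited literature; the SHA-256-based mask derivation and all names (`keystream`, `salt`, `hamming`) are assumptions made for this example.

```python
import hashlib


def keystream(key, n_bits):
    """Derive n_bits pseudo-random mask bits from a secret key (bytes)."""
    bits, counter = [], 0
    while len(bits) < n_bits:
        block = hashlib.sha256(key + counter.to_bytes(4, "big")).digest()
        for byte in block:
            bits.extend((byte >> i) & 1 for i in range(8))
        counter += 1
    return bits[:n_bits]


def salt(template, key):
    """Key-dependent transformation: XOR the template with the mask."""
    mask = keystream(key, len(template))
    return [t ^ m for t, m in zip(template, mask)]


def hamming(a, b):
    """Number of positions in which two bit lists differ."""
    return sum(x != y for x, y in zip(a, b))


enrolled = [1, 0, 1, 1, 0, 0, 1, 0] * 8   # toy 64-bit binary template
probe = list(enrolled)
probe[3] ^= 1                              # two bits differ between
probe[40] ^= 1                             # enrolment and probe sample
key = b"user-specific secret"

protected = salt(enrolled, key)
# Comparison works in the transformed domain: distances are preserved.
distance = hamming(protected, salt(probe, key))
# Anyone who knows the key can undo the transformation.
recovered = salt(protected, key)
```

Revoking a compromised template amounts to issuing a new key and re-enrolling. The last line shows the weakness discussed in the text: since XOR is its own inverse, knowledge of the key reveals the original template, which is what non-invertible transformations are meant to prevent.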

Vein-based biometrics subsumes some of the most recent biometric traits. It is therefore not surprising that template protection ideas which have been previously developed for other traits are now being applied to vascular biometric traits, without developments specific for the vascular context. For example, in case we consider vascular minutiae points as features, techniques developed for fingerprint minutiae can be readily applied, like the fuzzy vault approach or techniques relying on fixed-length feature descriptors like spectral minutiae and minutiae cylinder codes. In case binary data representing the layout of the vascular network are being used as feature data, the fuzzy commitment scheme approach is directly applicable.
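The fuzzy commitment idea for binary feature data can be sketched in a few lines of Python. In this scheme, a key is encoded with an error-correcting code, the codeword is XORed with the binary template, and only the result (helper data) and a hash of the key are stored. The sketch is a toy illustration under strong simplifications: it uses a 5-fold repetition code instead of a practical error-correcting code, a fixed short key instead of a random one, and all function names are assumptions made for this example.

```python
import hashlib

REP = 5  # each key bit is repeated REP times (toy error-correcting code)


def encode(key_bits):
    """Repetition-code encoding of the key."""
    return [b for b in key_bits for _ in range(REP)]


def decode(code_bits):
    """Majority decoding of each block of REP bits."""
    return [int(sum(code_bits[i:i + REP]) > REP // 2)
            for i in range(0, len(code_bits), REP)]


def digest(bits):
    return hashlib.sha256(bytes(bits)).hexdigest()


def commit(template, key_bits):
    """Bind the key to the template; store only helper data and key hash."""
    codeword = encode(key_bits)
    assert len(codeword) == len(template)
    helper = [c ^ t for c, t in zip(codeword, template)]
    return helper, digest(key_bits)


def verify(probe, helper, key_hash):
    """Release succeeds if the probe is close enough to the template."""
    noisy_codeword = [h ^ p for h, p in zip(helper, probe)]
    return digest(decode(noisy_codeword)) == key_hash


key_bits = [1, 0, 1, 1]
template = [1, 0, 0, 1, 1, 0, 1, 0, 0, 1, 1, 1, 0, 1, 0, 0, 0, 1, 0, 1]
helper, key_hash = commit(template, key_bits)

probe = list(template)
for i in (0, 7, 8, 19):        # at most two bit errors per 5-bit block
    probe[i] ^= 1
accepted = verify(probe, helper, key_hash)

stranger = [1 - t for t in template]   # every bit differs
rejected = verify(stranger, helper, key_hash)
```

The error-correcting code absorbs the intra-class variation between the enrolment and probe samples, so its correction capacity has to be matched to the bit error rates observed for the respective vascular feature representation.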

5.1 Hand-Based Vascular Traits