Abstract

The Sub-NUMA Clustering 4 (SNC-4) affinity mode of the Intel Xeon Phi Knights Landing introduces a new environment for parallel applications that provides a NUMA system on a single chip.

The main goal of this work is to characterize the behaviour of this system, focusing on nested parallelization of a well-known algorithm with regular and predictable memory access patterns: matrix multiplication. The study covers the effect of thread distribution across the processor on performance when using the SNC-4 affinity mode, the differences between the cache and flat modes of the MCDRAM, and the improvements due to vectorization in different data-locality scenarios.

Results show that the best thread placement is the scatter distribution, using 64 or 128 threads. Differences between the cache and flat modes of the MCDRAM are generally not significant. Optimization techniques such as padding, which improve locality, have a great impact on execution times. Vectorization proved efficient only when data locality is good, especially when the MCDRAM is used as a cache.

1 Introduction

Manycore architectures such as the Intel Xeon Phi Knights Landing (KNL) provide highly parallel environments with a large number of cores on a single chip, allowing developers to exploit parallelism in their algorithms. In the Intel Xeon Phi KNL, the second generation of Intel's manycore processors, the most interesting features are the clustering modes and the integrated on-package memory known as MCDRAM (Multi-Channel DRAM).

This work focuses on the SNC-4 (Sub-NUMA Clustering 4) mode, in which the chip is partitioned into four nodes and is treated as a NUMA system. However, this configuration differs from typical NUMA systems in latency and bandwidth, so a characterization of the KNL behaviour in its SNC-4 configuration can be useful for its users. This work also includes a brief comparison between the cache and flat modes of the MCDRAM memory and a study of the performance improvement achieved with vectorization.

To study these elements, different implementations of the classic matrix multiplication have been used, as this algorithm has properties that fit the goals of this work. It is a well-known code, and its memory access patterns are predictable, regular and easy to modify by changing the order of the loops, which provides different scenarios in terms of data locality. Note that the aim is not to optimize this code, as in [3] or [7], but to use it as a case study.

Other works have presented studies with very specific benchmarks, testing the MCDRAM in its different modes [12] or obtaining models of its behaviour [10]. Other authors have used benchmarks based on dense matrix multiplication of small dimensions [4] or have derived the roofline model using benchmarks based on sparse matrix algebra [2]. Finally, works like [6] compare performance on commonly used software. In contrast, this paper presents a general study under different conditions, such as those that arise when optimizing and parallelizing a code with regular memory access patterns like matrix multiplication.

2 Intel Xeon Phi KNL Architecture and Benchmarks

The Intel Xeon Phi is a manycore processor whose architecture is based on tiles [5]. Each tile has two cores and a shared 1 MB L2 cache. Each core has two VPUs (Vector Processing Units), compatible with the AVX-512 instructions [11], and can execute up to four threads simultaneously. With a maximum of 36 tiles, the KNL can have up to 72 cores and 144 VPUs, and can execute up to 288 threads concurrently.

This processor has other distinctive features, such as the clustering modes and the MCDRAM memory [13]. Concerning the clustering modes, three main configurations are available: All-to-all, Quadrant and SNC-4. The most interesting one is SNC-4, in which the chip behaves like a NUMA system on a single chip. Additionally, the MCDRAM is an integrated high-bandwidth memory (up to 450 GB/s) with 16 GB of capacity that has three different configurations: cache, flat and hybrid modes. In cache mode, the MCDRAM behaves as a direct-mapped L3 cache. In flat mode, the MCDRAM is configured as additional main memory. In hybrid mode, the memory is divided 50%–50% or 75%–25% between cache and flat modes, respectively. Note that with SNC-4 and flat mode there are four NUMA nodes that correspond to the cores and the main memory, and four more (of 4 GB each) that correspond to the MCDRAM.

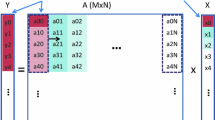

In this study, a parallel dense matrix multiplication implemented with OpenMP [1] has been used: \(C = A \times B\), with \(A, B, C \in \mathcal {M}_{n\times n}\). The matrices are located in the MCDRAM when using flat mode, and in DDR4 memory when using cache mode. The following loop nests have been studied: ijk, ikj, jik and jki. For example, ijk denotes the nest with the i index in the outermost loop, the j index in the intermediate loop, and the k index in the innermost loop. The matrix elements accessed are always \(a_{ik}, b_{kj}, c_{ij}\). The k loop cannot be parallelized directly because all its iterations update the same element \(c_{ij}\), which causes race conditions, so nested parallelization has been tested only with the ijk and jik nests.

In terms of data locality, the ikj nest should give the best performance because it accesses all the matrices by rows (the most efficient order in C, which stores arrays in row-major order). The ijk nest accesses the elements of A and C by rows and those of B by columns, so it is moderately efficient. The jik nest accesses the elements of B and C by columns and those of A by rows. Finally, the jki order should show the worst performance, as all the matrices are accessed by columns.
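As an illustration of the loop orders discussed above, the following C sketch shows the ijk and ikj nests with only the outermost loop parallelized. It is not the benchmark code used in this work: the function names, the use of double-precision elements, the flat row-major layout and the assumption that C is zero-initialized by the caller are all choices made for this example.

```c
#include <stddef.h>

/* ijk nest (hypothetical name): the inner k loop walks A along a row
 * and B down a column, while c_ij stays fixed.
 * Assumes row-major n x n matrices and that c starts at zero. */
void matmul_ijk(size_t n, const double *a, const double *b, double *c)
{
    #pragma omp parallel for
    for (size_t i = 0; i < n; i++) {
        for (size_t j = 0; j < n; j++) {
            double sum = c[i * n + j];
            for (size_t k = 0; k < n; k++)
                sum += a[i * n + k] * b[k * n + j];
            c[i * n + j] = sum;
        }
    }
}

/* ikj nest (hypothetical name): the inner j loop walks B and C along
 * rows with unit stride, and a_ik is invariant in the inner loop. */
void matmul_ikj(size_t n, const double *a, const double *b, double *c)
{
    #pragma omp parallel for
    for (size_t i = 0; i < n; i++) {
        for (size_t k = 0; k < n; k++) {
            double aik = a[i * n + k];
            for (size_t j = 0; j < n; j++)
                c[i * n + j] += aik * b[k * n + j];
        }
    }
}
```

In both functions each thread owns whole rows of C, so no two threads write the same element. The jik and jki nests follow by swapping the roles of i and j in the outer loops.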

Given the structure of the code and the organization of the cores in SNC-4 mode, a two-level parallelization is of interest. In the outermost loop, iterations are distributed among thread groups. In the second loop, iterations are shared among the threads of each group. In the innermost loop, vectorization can be applied using AVX-512 instructions. The natural distribution, given the architecture of the KNL, would be 4 groups of 64 threads, denoted 4 \(\times \) 64 from now on. This way of sharing the iterations allows different placements to be considered for the threads of each group, scattering or compacting them across the cores, while the groups themselves are always scattered.

The basic algorithm, in which all the data are stored in contiguous positions, may have performance issues because of cache replacements when using matrices of size \(n = a 2^b,\ a,b \in \mathbb {N}\), for certain values of b. To solve this problem, another version that uses padding has been implemented, in which 64 bytes (the length of a cache line) are added to the end of each row of the matrices.

The benchmarks were run on an Intel Xeon Phi KNL 7250 with 64 cores and 48 GB of DDR4 memory. The compiler used in the tests was Intel ICC 18.0.0 with the options -qopenmp, -O2, -xMIC-AVX512 and -vec-threshold0 to ensure that the AVX-512 instructions were used. Other optimization options might transform the code too much, so that the memory access patterns would no longer be the ones written in the source file. Metrics on cache misses and memory performance were obtained with Intel VTune 2018.
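The two-level scheme described above can be sketched with nested OpenMP regions. This is a minimal example under stated assumptions, not the code used in the experiments: the function name and parameters are hypothetical, elements are assumed to be double precision in row-major order, and the `proc_bind` clauses are only one possible way to request scattered groups and compact threads within a group. The actual placement also depends on settings such as `OMP_PLACES`, which the text does not specify.

```c
#include <stddef.h>
#ifdef _OPENMP
#include <omp.h>
#endif

/* Two-level ijk nest (hypothetical name): the i loop is shared among
 * `groups` outer threads, the j loop among `threads_per_group` inner
 * threads, and the k loop is vectorized. Assumes c starts at zero. */
void matmul_ijk_nested(size_t n, const double *a, const double *b, double *c,
                       int groups, int threads_per_group)
{
#ifdef _OPENMP
    omp_set_max_active_levels(2);   /* allow two nested parallel levels */
#else
    (void)groups;                   /* unused when built without OpenMP */
    (void)threads_per_group;
#endif

    /* Outer level: spread the groups as far apart as possible. */
    #pragma omp parallel for num_threads(groups) proc_bind(spread)
    for (size_t i = 0; i < n; i++) {
        /* Inner level: keep the threads of a group close together
         * (an assumption; a scattered group would use spread here). */
        #pragma omp parallel for num_threads(threads_per_group) proc_bind(close)
        for (size_t j = 0; j < n; j++) {
            double sum = c[i * n + j];
            /* Innermost loop: ask the compiler to vectorize the reduction. */
            #pragma omp simd reduction(+:sum)
            for (size_t k = 0; k < n; k++)
                sum += a[i * n + k] * b[k * n + j];
            c[i * n + j] = sum;
        }
    }
}
```

A call such as `matmul_ijk_nested(n, a, b, c, 4, 64)` would correspond to the 4 \(\times \) 64 configuration. Opening an inner parallel region on every outer iteration adds overhead, which a tuned implementation would likely try to avoid.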
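To make the effect of padding concrete, the sketch below allocates a matrix with one extra cache line per row and counts how many distinct cache sets a column walk touches. Several details are assumptions made for this example rather than facts from the text: double-precision elements, a simplified set index computed from byte offsets within the matrix (real caches index on physical addresses), and a 16-way L2 organization, which for the 1 MB cache with 64-byte lines gives 1024 sets. All function names are hypothetical.

```c
#include <stddef.h>
#include <stdlib.h>

enum { LINE_BYTES = 64 };   /* cache line length, as given in the text */

/* Row stride in elements after appending one cache line to each row. */
size_t padded_ld(size_t n)
{
    return n + LINE_BYTES / sizeof(double);
}

/* Allocate a zeroed n x n matrix with padded rows; element (i, j)
 * lives at m[i * (*ld) + j]. The caller releases it with free(). */
double *alloc_padded(size_t n, size_t *ld)
{
    *ld = padded_ld(n);
    return calloc(n * (*ld), sizeof(double));
}

/* Count the distinct cache sets touched when walking down one column
 * of a matrix with row stride ld, in a cache with num_sets sets.
 * Simplified model: set = (byte offset / line size) mod num_sets. */
size_t sets_touched_by_column(size_t n, size_t ld, size_t num_sets)
{
    unsigned char *seen = calloc(num_sets, 1);
    size_t distinct = 0;

    if (seen == NULL)
        return 0;
    for (size_t row = 0; row < n; row++) {
        size_t byte_offset = row * ld * sizeof(double);
        size_t set = (byte_offset / LINE_BYTES) % num_sets;
        if (!seen[set]) {
            seen[set] = 1;
            distinct++;
        }
    }
    free(seen);
    return distinct;
}
```

Under this model, with \(n = 4096\) and 1024 sets, an unpadded column walk keeps returning to only 2 sets, whereas the padded stride of 4104 elements spreads the same walk over all 1024 sets. This is consistent with the large reduction in L2 misses that padding is reported to give.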

3 Results

This section shows the results of the benchmarks with matrices of dimension \(n=4096\). Other sizes were also tested, with similar results.

3.1 Nested Parallelism

A brief summary of the results for the codes using nested parallelization with 4 groups of 16 threads each (4 \(\times \) 16) is shown in Tables 1 and 2, where each entry contains the execution times (in seconds) of the sequential and parallel executions and the corresponding speedup.

Padding: The programs that do not use padding show higher execution times than those that implement this technique, as it reduces the L2 cache misses by up to 98%. The ijk code shows an improvement of 97% in the sequential execution time, while jik improves by up to 98%. This shows the relevance of this kind of technique on the KNL: it drastically improves the execution times and changes the behaviour in terms of thread location and vectorization.

Thread Location: The results of the programs that do not use padding show that the scatter distribution is not always the best. A compact distribution can cause the threads of the same core or tile to share cache lines, reducing the traffic in the mesh. This also implies faster communication than sharing data with other tiles. On the other hand, a compact distribution divides the resources of each core among its threads, probably lowering performance. It can also saturate the cache buses or the interconnection mesh, degrading the execution times, as in the jik nest with the MCDRAM in flat mode.

Vectorization: The codes that do not use padding perform poorly when using vectorization. However, when padding is applied, improvements of up to 54% are obtained, showing that vectorization is only beneficial when the programs have efficient data access patterns.

MCDRAM: Using the MCDRAM in cache mode with inefficient access patterns causes significant performance problems in the vectorized programs, due to the difficulty of feeding the vector registers. Note that these performance issues do not appear in flat mode. In contrast, cache mode shows slightly better performance in parallel executions.

Best Execution: The best execution time obtained using nested parallelism was 2.66 s (51.66 GFlop/s), achieved with the jik nest, 64 groups of 2 threads with a compact distribution, the MCDRAM in cache mode, padding and vector instructions.
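The difference between the two placements can be illustrated with a deliberately simplified model that maps thread identifiers to cores. It ignores the tile and NUMA-node topology that a real OpenMP runtime takes into account, and all function names are hypothetical.

```c
/* Compact: consecutive thread ids fill every hardware thread of a core
 * before moving on to the next core. */
int compact_core(int tid, int threads_per_core)
{
    return tid / threads_per_core;
}

/* Scatter: consecutive thread ids go to consecutive cores, wrapping
 * around once every core has received a thread. */
int scatter_core(int tid, int num_cores)
{
    return tid % num_cores;
}

/* Number of cores occupied by nthreads under each placement. */
int cores_used_compact(int nthreads, int threads_per_core)
{
    return (nthreads + threads_per_core - 1) / threads_per_core;
}

int cores_used_scatter(int nthreads, int num_cores)
{
    return nthreads < num_cores ? nthreads : num_cores;
}
```

With the 64 cores and four hardware threads per core of the processor used here, 64 threads occupy 16 cores under this compact model and all 64 cores under the scatter model. This matches the trade-off described above: more sharing of caches in the first case, more resources per thread in the second.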
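The GFlop/s figures can be related to the execution times under the usual assumption, not stated explicitly in the text, that an \(n \times n\) matrix multiplication performs \(2n^3\) floating-point operations. The helper below is a hypothetical sketch of that conversion.

```c
#include <stddef.h>

/* Convert an execution time into GFlop/s, assuming 2 * n^3
 * floating-point operations for an n x n matrix multiplication. */
double gflops(size_t n, double seconds)
{
    double flops = 2.0 * (double)n * (double)n * (double)n;
    return flops / seconds / 1e9;
}
```

For \(n = 4096\) and 2.66 s this gives approximately 51.67 GFlop/s, which agrees with the reported 51.66 GFlop/s up to rounding of the measured time.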

3.2 One-Level Parallelism

A summary of the results obtained by the codes where only the outer loop is parallelized is shown in Tables 3 and 4, including the ikj and jki nests.

Padding: All loop nests benefit from this technique because of the significant reduction in the number of cache misses, up to 98%.

Thread Location: When only the outermost loop is parallelized, the scatter distribution gives the best execution times. The differences are especially noticeable in the ikj and jki cases using padding, with improvements of up to 60% and 85%, respectively.

Vectorization: The ikj nest, which has the best access pattern, benefits the most from vector instructions, which reduce the execution time by up to 63%.

MCDRAM: With one-level parallelism, cache mode usually achieves slightly better performance in parallel executions. Even so, differences between flat and cache modes are generally not significant.

Best Execution: The best time obtained using one-level parallelism was 1.17 s (117.46 GFlop/s), using the ikj order with padding, 256 threads, the MCDRAM in cache mode and AVX-512 instructions.

3.3 Comparison with a Real NUMA System

A summary of the comparison of the Intel Xeon Phi KNL with a NUMA server that consists of four Intel Xeon E5 4620 processors is shown in Table 5.

Memory Latencies: To study the effect of non-local accesses in the NUMA server and in the KNL, the numademo command [8] with the sequential STREAM benchmark [9] was executed on both machines. Results in Table 6 show that the penalties in the NUMA server range from 28% to 40%, while in the KNL these penalties are much lower. With the MCDRAM in cache mode, the differences are between 3.8% and 6.9%. In flat mode, both kinds of penalties, those related to the DDR memory and those related to the MCDRAM, are around 2% and 3%.

Padding: This optimization technique has a low impact on the Intel Xeon E5 in comparison with the KNL. In the tests, adding padding to the data improved the sequential execution times by up to 30%.

Vectorization: The Intel Xeon E5 does not support the AVX-512 vector instructions, only the AVX2 instruction set. These instructions work with 4 operands with FMA operations, so their performance is lower, improving the execution times by only up to 9%.

Thread Location: The results show that, unlike in the Intel Xeon Phi, using more NUMA nodes is not always the best option to improve performance, due to the higher penalties of remote accesses on a real NUMA server.

Best Execution: On the NUMA server, the best execution time was obtained with the ikj nest, computing the matrix multiplication in 2.20 s (62.47 GFlop/s), using 80 threads with a scatter distribution, vectorization and no padding. This performance is noticeably lower than that provided by the KNL.

4 Conclusions

In this work, the behaviour of the Intel Xeon Phi KNL has been studied in different situations, characterizing its performance in terms of thread location, MCDRAM mode, vectorization and data locality, and also comparing the SNC-4 mode of the KNL with a real NUMA system.

Generally, the most efficient thread distribution is the scatter placement, using all the NUMA nodes of the KNL and one or two threads per core. In a real NUMA system the behaviour is different and depends heavily on the algorithm, because of the higher penalties in communications between nodes.

The way the data are laid out in memory has a deeper impact on performance in the KNL than in other processors. In this case, adding padding to the data reduced the execution time by up to 98%.

The use of vector instructions has shown irregular behaviour. With low locality, vector instructions had a negative impact. In contrast, with good data locality, their use improved the execution times by up to 60%.

Differences between cache and flat modes of the MCDRAM are, generally, not significant. Flat mode seems to perform better under inefficient data access patterns, but cache mode has usually given better results on parallel codes.

References

1. Dagum, L., Menon, R.: OpenMP: an industry standard API for shared-memory programming. IEEE Comput. Sci. Eng. 5(1), 46–55 (1998)

2. Doerfler, D., et al.: Applying the roofline performance model to the Intel Xeon Phi knights landing processor. In: Taufer, M., Mohr, B., Kunkel, J.M. (eds.) ISC High Performance 2016. LNCS, vol. 9945, pp. 339–353. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46079-6_24

3. Guney, M.E., et al.: Optimizing matrix multiplication on Intel® Xeon Phi™ x200 architecture. In: 2017 IEEE 24th Symposium on Computer Arithmetic (ARITH), July 2017. https://doi.org/10.1109/ARITH.2017.19

4. Heinecke, A., Breuer, A., Bader, M., Dubey, P.: High order seismic simulations on the Intel Xeon Phi processor (knights landing). In: Kunkel, J.M., Balaji, P., Dongarra, J. (eds.) ISC High Performance 2016. LNCS, vol. 9697, pp. 343–362. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-41321-1_18

5. Jeffers, J., Reinders, J., Sodani, A.: Intel Xeon Phi Processor High Performance Programming: Knights Landing Edition. Morgan Kaufmann, San Francisco (2016)

6. Kang, J.H., Kwon, O.K., Ryu, H., Jeong, J., Lim, K.: Performance evaluation of scientific applications on Intel Xeon Phi knights landing clusters. In: 2018 International Conference on High Performance Computing & Simulation (HPCS). IEEE (2018)

7. Kim, R.: Implementing general matrix-matrix multiplication algorithm on the Intel Xeon Phi knights landing processor. Ph.D. thesis, Department of Mathematical Sciences, Seoul National University (2018)

8. Kleen, A.: A NUMA API for Linux. Novell Inc. (2005)

9. McCalpin, J.D., et al.: Memory bandwidth and machine balance in current high performance computers. IEEE Comput. Soc. Tech. Committee Comput. Archit. (TCCA) Newsl. 1995, 19–25 (1995)

10. Ramos, S., Hoefler, T.: Capability models for many core memory systems: a case-study with Xeon Phi KNL. In: 2017 IEEE International Parallel and Distributed Processing Symposium (IPDPS), pp. 297–306. IEEE (2017)

11. Reinders, J.: AVX-512 instructions. Intel Corporation (2013)

12. Rosales, C., Cazes, J., Milfeld, K., Gómez-Iglesias, A., Koesterke, L., Huang, L., Vienne, J.: A comparative study of application performance and scalability on the Intel knights landing processor. In: Taufer, M., Mohr, B., Kunkel, J.M. (eds.) ISC High Performance 2016. LNCS, vol. 9945, pp. 307–318. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46079-6_22

13. Sodani, A., et al.: Knights landing: second-generation Intel Xeon Phi product. IEEE Micro 36(2), 34–46 (2016)

Acknowledgment

This work has received financial support from the Ministerio de Economía, Industria y Competitividad within the project TIN2016-76373-P and network CAPAP-H. It was also funded by the Consellería de Cultura, Educación e Ordenación Universitaria of Xunta de Galicia (accr. 2016–2019, ED431G/08 and reference competitive group 2019–2021, ED431C 2018/19) and network R2016/045.

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Laso, R., Rivera, F.F., Cabaleiro, J.C. (2019). Influence of Architectural Features of the SNC-4 Mode of the Intel Xeon Phi KNL on Matrix Multiplication. In: Rodrigues, J., et al. Computational Science – ICCS 2019. ICCS 2019. Lecture Notes in Computer Science(), vol 11540. Springer, Cham. https://doi.org/10.1007/978-3-030-22750-0_41

DOI: https://doi.org/10.1007/978-3-030-22750-0_41

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-22749-4

Online ISBN: 978-3-030-22750-0

eBook Packages: Computer Science, Computer Science (R0)