Abstract

System security analysis has focused on technology-based attacks while paying less attention to social perspectives. As a result, social engineering is becoming an increasingly serious threat to socio-technical systems, in which humans play important roles. However, due to the interdisciplinary nature of social engineering, there is no consensus on its definition, which hinders further development of this research field. In this paper, we propose a comprehensive and fundamental ontology of social engineering, with the purpose of promoting the rapid development of this field. In particular, we first review and compare existing social engineering taxonomies in order to summarize the core concepts and boundaries of social engineering and to identify the corresponding research challenges. We then define a comprehensive social engineering ontology that embeds extensive knowledge from psychology and sociology, providing a full picture of social engineering. The ontology is built on top of existing security ontologies in order to align social engineering analysis with typical security analysis as much as possible. By formalizing the ontology in Description Logic, we provide unambiguous definitions for the core concepts of social engineering, serving as a fundamental terminology to facilitate research within this field. Finally, we evaluate our ontology on a collection of existing social engineering attacks; the results indicate that the ontology has good expressiveness.

1 Introduction

With the rapid growth of system scale, modern information systems increasingly include heterogeneous components beyond software. In particular, humans play an increasingly important role: a human can be seen as a system component that interacts with other system components (e.g., humans, software, hardware) [9]. Security analysis should therefore cover not only software security but also the security of humans in order to ensure the holistic security of systems. However, traditional security analysis has focused on technology-based attacks while paying less attention to the social perspective [6]. As a result, social engineering has become an increasingly serious threat to socio-technical systems. The well-known social engineer Kevin Mitnick has pointed out that humans have become the most vulnerable part of systems [11].

Due to the interdisciplinary nature of social engineering, there is no consensus on its definition, which hinders further development of this research field. Researchers from different fields tend to use their own terminology to describe social engineering, and even researchers in the same field may use entirely different definitions. A notable example is shoulder surfing: it is traditionally treated as a social engineering attack [3], but some researchers explicitly argue for excluding it from social engineering attacks [14]. The main reason for this disagreement is the lack of a well-defined ontology for social engineering. In particular, existing studies describe social engineering only informally in natural language, which is neither precise nor unambiguous. Without a consensus on its definition, even social engineering researchers are confused about its concepts, let alone industrial practitioners.

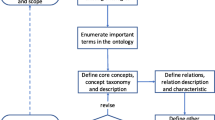

In this paper, we aim to provide a rigorous and comprehensive ontology of social engineering that forms a fundamental terminology for social engineering research. Specifically, we first review existing social engineering ontologies in detail, investigating the aspects they focus on and comparing their conceptual coverage. Based on this review, we propose a comprehensive ontology of social engineering, which offers a unified set of concepts and covers various perspectives so that it can be easily understood by researchers from different communities. The proposal is built on top of typical security requirements ontologies and embeds extensive knowledge from psychology and sociology, providing a complete picture of social engineering. We formalize the proposed ontology in Description Logic (DL) in order to provide unambiguous semantics and enable class inference, serving as a theoretical foundation for follow-up research in this field. Finally, we evaluate the coverage and correctness of our approach by using it to analyze real social engineering attacks reported in the literature.

In the remainder of this paper, we first report the findings of our literature survey in Sect. 2. Based on these findings, we present our main contribution, a comprehensive social engineering ontology, in Sect. 3. We then evaluate the expressiveness of our ontology in Sect. 4 and conclude the paper in Sect. 5.

2 Review of Existing Approaches

A number of studies have investigated the taxonomy and ontology of social engineering, each emphasizing different perspectives. In this section, we present an in-depth survey of existing taxonomies and ontologies of social engineering in order to comprehensively understand the conceptual elements of social engineering. Based on this survey, we report our findings about a number of challenges in existing studies. In particular, we focus on social engineering attacks and their categories. Other social engineering-specific concepts (e.g., human vulnerability) are also surveyed and presented.

2.1 Social Engineering Attacks

Many researchers have investigated social engineering by focusing on social engineering attacks and have proposed several taxonomies of such attacks [2–4, 8, 13, 14]. Note that social engineering attacks are variously described as attack vectors [8], attack techniques [2], or attack methods [5]. Table 1 compares the social engineering attacks covered by these studies. We cluster similar attacks into the same row (C1–C5) to present the similarities and differences among the studies. Note that the last row, C5, lists attacks that differ from each other and cannot be related to any attack in the previous rows.

Looking at these studies more closely, Harley presents a taxonomy of social engineering attacks [3] that enumerates seven attacks, including masquerading, password stealing, and spam. These attacks are summarized at a rather coarse-grained level, describing the common procedure for carrying them out. Krombholz et al. also describe attacks coarsely, e.g., shoulder surfing, baiting, and waterholing [8]. Ivaturi and Janczewski identify social engineering attacks by focusing on the particular approaches attackers use, such as pretexting, cross-site request forgery, and malware [4]. They also distinguish attacks by the medium through which they are carried out: for example, phishing, smsishing, and vishing are carried out via email, short message, and voice, respectively. Peltier summarizes a list of social engineering attacks drawn from real attack scenarios [14]. Like Ivaturi and Janczewski, he considers the media through which attacks are carried out, e.g., pop-up windows and mail attachments. Gulati enumerates social engineering attacks at a fine-grained level [2]; in particular, he distinguishes impersonation attacks by the person being impersonated, such as a technical expert or support staff. Nyamsuren and Choi discuss social engineering attacks in ubiquitous environments [13] and therefore present, in addition to typical attacks, some that are specific to such environments, e.g., signal hijacking.

Challenges. Although all these studies try to enumerate a full list of social engineering attacks, each arrives at a particular taxonomy that is inconsistent with, and sometimes conflicts with, the others. In particular, we have observed the following challenges that should be addressed.

- No Clear Definition. As Table 1 clearly shows, different studies propose quite different social engineering attacks (row C5), and none of them is complete. The most important issue is that there is no clear definition of social engineering attacks, so their scope varies from study to study. A notable example is dumpster diving. Although most studies treat it as a social engineering attack, Ivaturi and Janczewski argue that it does not involve social interaction with the victim and therefore explicitly exclude it from their taxonomy [4]. This position is reasonable, but without a clear definition we cannot determine which proposal is correct.

- Inconsistent Granularity. Different researchers characterize social engineering attacks at different levels of granularity. For instance, Peltier [14] emphasizes particular scenarios of psychological manipulation, such as third-party authorization and creating a sense of urgency, whereas other researchers [3] view such attacks at a higher level of abstraction as direct psychological manipulation.

- Incomplete Enumeration. In addition to common social engineering attacks, each study proposes unique attacks from its own perspective. For example, Nyamsuren and Choi extend dumpster diving into digital dumpster diving [13], and Ivaturi and Janczewski propose smsishing, which resembles phishing but is carried out via mobile phone messages [4]. However, such new attacks have not been summarized systematically, so none of the existing studies offers a complete enumeration of social engineering attacks. Moreover, some of the newly proposed attacks should not be classified as new attack types at all. For example, password stealing describes a possible damage caused by impersonation [3] and should not be positioned as a particular type of social engineering attack.

- Different Terminologies. Different researchers tend to name social engineering attacks using their own terminology. For example, masquerading [3] and impersonation [4] describe the same type of attack, in which attackers use fake identities. Likewise, according to their descriptions, digital dumpster diving and leftover refer to the same thing [3, 13].

2.2 Categories of Social Engineering Attacks

Researchers have proposed various categories to organize and navigate social engineering attacks, among which the most widely accepted proposal is to classify attacks as human-based or technology-based [1, 2, 5, 14]. Specifically, a social engineering attack that involves any computer-related technology is deemed a technology-based attack; otherwise, it is a human-based attack.

In addition to these simple categories, Krombholz et al. define a more comprehensive taxonomy for classifying social engineering attacks [8]. In particular, they view a social engineering attack as a scenario in which attackers perform physical actions or apply socio-psychological or computer techniques over a variety of channels. Accordingly, they classify social engineering attacks in terms of Operator, Channel, and Type. Mouton et al. emphasize the communication between attackers and victims and classify social engineering attacks into direct and indirect attacks [12]. They further divide direct attacks by mode of communication into bidirectional and unidirectional communication.

Challenges. We argue that the binary classification into human-based and technology-based attacks is too general to be useful. In particular, many social engineering attacks belong to multiple categories depending on their attributes. For example, voice of authority can reasonably be classified as psychological manipulation; however, if the attacker carries it out via an email with attachments, the same attack can also be classified as an email attachment attack. We therefore believe that a well-defined categorization of social engineering attacks is essential for understanding the ever-growing set of such attacks. To this end, we need to identify as many meaningful dimensions for characterizing social engineering attacks as possible, which is a challenging task. As reviewed above, Krombholz et al. and Mouton et al. have tentatively explored such dimensions, providing good starting points.

2.3 Vulnerability

In contrast to typical information security attacks, which exploit software vulnerabilities to carry out malicious actions, social engineering attacks target humans. Several researchers have explicitly pointed this out and presented lists of human traits and behaviors that social engineers can exploit. Harley presents seven personality traits, namely gullibility, curiosity, courtesy, greed, diffidence, thoughtlessness, and apathy [3]. Similarly, Gulati mentions seven vulnerable behaviors [2], some of which differ from Harley's, such as trust and fear of the unknown. Finally, Peltier summarizes four vulnerability factors: diffusion of responsibility, chance of ingratiation, trust relationship, and guilt [14].

Challenges. The challenges regarding the human vulnerability research are twofold.

- Enumeration of Human Vulnerability. Because human vulnerability is an interdisciplinary research topic, it requires knowledge from psychology and sociology, which is difficult for information security researchers to acquire. This explains why the studies we reviewed all arrive at different sets of human vulnerabilities. It is also one of the most important reasons hindering the development of this research field.

- Linkages between Human Vulnerability and Social Engineering Attacks. Security analysts need not only to identify human vulnerabilities but also to understand how attackers exploit them. A follow-up challenge is thus to link human vulnerabilities to the corresponding social engineering attacks.

3 A Comprehensive Ontology of Social Engineering

In this section, we present a comprehensive social engineering ontology that addresses the research challenges identified in Sect. 2. A well-defined ontology should exhibit a number of characteristics, e.g., completeness, consistency, and disjointness [15]. In addition, based on our survey, we argue that an ontology of social engineering should meet the following requirements.

1. Inconsistent understanding of social engineering is the primary challenge hindering the development of this research topic. The ontology should therefore provide an unambiguous definition of social engineering attacks that can be used to precisely distinguish them from other attacks.

2. Social engineering attacks are a particular type of security attack and have been used together with technical attacks (e.g., SQL injection) to cause more serious damage [11]. As argued by Li et al. [10], social engineering analysis should be part of a holistic system security analysis in which humans are treated as a particular type of system component. It is thus important to align the core concepts of social engineering with existing security concepts as much as possible. Such alignment can also greatly help information security analysts understand social engineering.

3. Because social engineering is an interdisciplinary research field, social engineering attacks evolve along with advances in related fields, e.g., information security, sociology, and psychology. On the one hand, the design of the ontology should appropriately take into account knowledge from those fields. On the other hand, the ontology should be extensible to incorporate emerging social engineering attacks.

4. Researchers from different communities tend to classify social engineering attacks from their own perspectives and at different levels of abstraction. The ontology should therefore support a hierarchical classification schema, helping people better understand social engineering.

Taking these requirements into account, in the rest of this section we first present and explain the core concepts and relations of social engineering in detail. We then formalize these concepts and relations in Description Logic (DL) in order to assign formal and unambiguous semantics to the proposed ontology.

3.1 Core Concepts of Social Engineering

Security ontologies have been investigated for decades, resulting in many different variants [17]. Across these proposals, four essential concepts have been universally identified: Asset, Attacker, Attack, and Vulnerability. In particular, an asset is anything that is valuable to system stakeholders; an attacker is a proactive agent who carries out attack actions to harm assets; and a vulnerability is any weakness in system assets that can be exploited by corresponding attack actions.

To align social engineering analysis with typical security analysis, we identify the core concepts and relations of social engineering based on these security concepts. From our in-depth survey of existing social engineering research, we conclude that all four security concepts can be specialized into subconcepts in the context of social engineering. Furthermore, we introduce two additional concepts to characterize social engineering attacks more comprehensively. The core concepts of social engineering and their connections with security concepts are presented in Fig. 1.

Specifically, Human is a subclass of Asset and acts as the target of social engineering attacks. We argue that targeting humans is a distinguishing feature of social engineering attacks. Vulnerability of an asset is accordingly specialized as Human Vulnerability. Unlike a software vulnerability, which is typically a defect, a human vulnerability is not always a negative characteristic. For example, desiring to be helpful is a good trait in most contexts, but it can increase the likelihood of being manipulated by social engineers. Defining human vulnerability requires additional psychological and sociological knowledge, which is detailed in the next subsection. In contrast, Attacker and Attack are straightforwardly specialized into Social Engineer and Social Engineering Attack, respectively. During our survey of social engineering studies, we observed that a social engineer can reach a human target through various means, e.g., email or telephone, each of which represents a particular attack scenario. We therefore define Attack Media as an important dimension for characterizing social engineering attacks and consider seven types of media: Email, Instant Message, Telephone, Website, Malware, Face to Face, and Physical. Finally, another distinguishing feature of social engineering attacks is that they apply particular Social Engineering Techniques to manipulate humans. Each social engineering attack can apply one or more social engineering techniques, and each technique exploits one or more human vulnerabilities. Explaining this concept requires psychological knowledge, so it is presented in the next subsection.
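To make these specializations concrete, the following is a minimal sketch that renders the core concepts of Fig. 1 as Python dataclasses. It is an illustrative assumption, not the authors' implementation (which is an OWL ontology built in Protégé). Field names such as `performed_by`, `targets`, and `exploits` are hypothetical, and the run-time checks encode only the cardinalities stated above: one or more techniques per attack, and one or more vulnerabilities per technique.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


# --- Generic security concepts shared by most security ontologies ---
@dataclass
class Vulnerability:
    name: str


@dataclass
class Asset:
    name: str
    vulnerabilities: list[Vulnerability] = field(default_factory=list)


@dataclass
class Attacker:
    name: str


@dataclass
class Attack:
    name: str
    performed_by: Attacker
    targets: Asset


# --- Social engineering specializations (hypothetical names) ---
@dataclass
class HumanVulnerability(Vulnerability):
    """A personality trait; not necessarily a defect (e.g., courtesy)."""


@dataclass
class Human(Asset):
    """The asset targeted by social engineering attacks."""


@dataclass
class SocialEngineer(Attacker):
    """An attacker who manipulates humans."""


class AttackMedia(Enum):
    EMAIL = "Email"
    INSTANT_MESSAGE = "Instant Message"
    TELEPHONE = "Telephone"
    WEBSITE = "Website"
    MALWARE = "Malware"
    FACE_TO_FACE = "Face to Face"
    PHYSICAL = "Physical"


@dataclass
class SocialEngineeringTechnique:
    name: str
    exploits: list[HumanVulnerability]

    def __post_init__(self) -> None:
        # Each technique exploits one or more human vulnerabilities.
        if not self.exploits:
            raise ValueError("a technique must exploit at least one human vulnerability")


@dataclass
class SocialEngineeringAttack(Attack):
    techniques: list[SocialEngineeringTechnique] = field(default_factory=list)
    media: Optional[AttackMedia] = None

    def __post_init__(self) -> None:
        # Enforce the specializations of the generic Attack concept.
        if not isinstance(self.targets, Human):
            raise TypeError("the target must be a Human")
        if not isinstance(self.performed_by, SocialEngineer):
            raise TypeError("the attacker must be a SocialEngineer")
        if not self.techniques:
            raise ValueError("at least one social engineering technique is required")
        if self.media is None:
            raise ValueError("an attack medium is required")


# Hypothetical usage: an impersonation call exploiting diffusion of responsibility.
diffusion = HumanVulnerability("Diffusion of Responsibility")
impersonation = SocialEngineeringTechnique("Impersonation", [diffusion])
attack = SocialEngineeringAttack(
    "Fake support call",
    SocialEngineer("attacker"),
    Human("employee", [diffusion]),
    [impersonation],
    AttackMedia.TELEPHONE,
)
```

Because each social engineering class subclasses its generic counterpart, any analysis written against `Attack` or `Asset` also accepts the social engineering variants, which mirrors the alignment goal stated in requirement 2.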

3.2 Incorporating Social and Psychological Knowledge

As an interdisciplinary topic, a social engineering ontology inevitably requires social and psychological knowledge, especially for social engineering techniques and human vulnerabilities. To this end, we survey and summarize relevant knowledge from existing social engineering studies [2, 3, 11, 14]. We also draw on psychological publications that discuss psychopathy and manipulative people [7, 16] to better understand how people are psychologically manipulated. Building on the core social engineering concepts identified above, we then define Social Engineering Technique and Human Vulnerability in detail, together with their exploit relationships. On the one hand, we define nine atomic social engineering techniques, which serve as fundamental concepts for describing various social engineering attacks. On the other hand, we have so far identified eight typical personality traits that are vulnerable to these atomic techniques and thus increase the likelihood of being manipulated by social engineers. Figure 2 shows the complete results, which are explained in detail below. Note that the elaborated concepts are by no means complete and should be continuously revised as research in psychology and sociology advances.

Social Engineering Technique. We divide social engineering techniques into two categories: direct approaches and indirect approaches. The former category contains techniques that are applied directly to humans, i.e., the social engineer interacts directly with the victim. All direct approaches aim first to bring victims into certain situations or states in which they are likely to do what the social engineer asks.

- Reverse social engineering is a means of establishing trust between a social engineer and a victim, i.e., bringing the victim into a trusting state. Typically, the social engineer creates a situation in which the victim needs help and then presents themselves as the problem solver to gain the victim's trust. After that, the social engineer can easily manipulate the victim into disclosing confidential information or performing certain actions.

- Impersonation is one of the most valuable techniques: the social engineer assumes a particular identity that affects the state of the victim. The social engineer can achieve various malicious goals by impersonating different identities, depending on the roles of those identities. For example, impersonating support staff can provide access to restricted areas, while impersonating an authoritative person can be used to ask the victim for confidential information.

- Intimidation is a manipulation strategy that aims to frighten victims into a state in which they feel they have to follow the social engineer's requests. Note that this technique can be combined with impersonation, which can significantly increase its success rate.

- Incentive is a manipulation strategy that motivates the victim to do something. For example, bribery is one of the simplest and most effective ways to implement this strategy. Like intimidation, incentive can also be combined with impersonation. In one common scenario, a social engineer pretends to be a lottery company and sends an email telling victims that they have won the jackpot and must do certain things to claim their rewards.

- Responsibility makes victims feel that they should do something in order to comply with certain laws, regulations, or social rules. For example, a social engineer may falsely tell the victim that the victim's manager has asked them to do something. More effectively, the social engineer can directly impersonate an authoritative person, i.e., combine this technique with impersonation.

- Distraction is a direct approach that diverts victims' attention so that malicious actions can be carried out without being detected. This strategy is commonly applied when several social engineers attack together.

The indirect approach category concerns techniques that do not involve direct interaction with the victim. Some researchers argue that such techniques lie outside the boundary of social engineering because victims are not manipulated through interaction [14]. In this paper, we include these techniques as social engineering techniques because they do exploit human vulnerabilities, which we argue is the essential criterion for a social engineering attack. A more formal definition is presented in the next subsection.

- Shoulder Surfing is a simple but effective approach in which a social engineer monitors the physical activity of victims in close proximity.

- Dumpster Diving means sifting through the trash of individuals or companies to find discarded items that contain sensitive information.

- Tailgating is a means of entering a restricted area by following an authorized person. Note that although this technique may not require any interaction with other people, the social engineer's behavior implies that he is also an authorized person. For this reason, some researchers classify this technique as a subclass of impersonation [4].

Human Vulnerability. In the context of social engineering, personality traits that can increase the success rate of social engineering techniques are defined as human vulnerabilities. As shown in Fig. 2, among the eight identified vulnerabilities, some are vulnerable to many social engineering techniques (e.g., gullibility), while others are related to only one particular technique (e.g., curiosity). We explain each of them below.

- Gullibility is the most exploited vulnerability. All social engineering techniques that involve deception take advantage of victims' gullibility. Because most people are by nature prone to believe what others say rather than question it, people must be specifically trained to avoid such attacks.

- Curiosity is a trait that drives people to uncover unknown things. When a social engineer sends mysterious messages to victims, this trait drives them to follow the instructions in order to find out what lies behind the message.

- Courtesy describes the fact that many people by default desire to be helpful. As a result, when they feel responsible for doing something, they tend to offer more than they should, violating the need-to-know policy.

- Greed makes a victim vulnerable to incentive techniques (e.g., bribery).

- Fear of the unknown makes people vulnerable to both intimidation and incentive techniques. On the one hand, people's fear can be significantly heightened by unknown threats; on the other hand, people are also afraid of missing out on potential monetary rewards they do not know about.

- Thoughtlessness is common in practice, as we cannot expect everyone to be thoughtful all the time. When people are thoughtless, they are more vulnerable to distraction, shoulder surfing, dumpster diving, and tailgating techniques.

- Apathy is a trait that makes people ignore things they consider not worth doing, which makes shoulder surfing, dumpster diving, and tailgating much more likely to succeed. In many cases, apathy can be attributed to management problems that lower people's morale.

- Diffusion of Responsibility describes people's reluctance to do something even when they are responsible for it (e.g., asking a stranger to show ID when he tries to enter a restricted area), because they assume that other responsible people will do it. Taking advantage of this vulnerability, social engineers can easily perform impersonation and tailgating techniques.

3.3 Formal Definition of the Social Engineering Ontology

Based on the concepts and relations of social engineering discussed above, we provide a formal definition of the proposed social engineering ontology in Description Logic (DL), shown in Table 2. Axiom_1 formally defines a social engineering attack as an attack that applies one or more social engineering techniques, targets one particular person who has at least one human vulnerability, and is performed by a social engineer through a particular type of attack media. We emphasize that the most distinguishing characteristic of a social engineering attack is that it exploits human vulnerabilities. This explains why our ontology includes shoulder surfing as a social engineering attack: it exploits people's thoughtlessness and apathy. Axiom_2–4 formally define human vulnerabilities, social engineering techniques, and attack media, respectively.
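Table 2 is not reproduced in this text. As a reading aid only, the prose description of Axiom_1 could be rendered in DL roughly as follows. The role names (applies, targets, hasVulnerability, performedBy, through) are assumptions and may differ from those used in Table 2.

```latex
\begin{aligned}
\mathit{SocialEngineeringAttack} \equiv\ & \mathit{Attack} \\
  & \sqcap\ {\geq}1\,\mathit{applies}.\mathit{SocialEngineeringTechnique} \\
  & \sqcap\ {=}1\,\mathit{targets}.(\mathit{Human} \sqcap \exists\,\mathit{hasVulnerability}.\mathit{HumanVulnerability}) \\
  & \sqcap\ \exists\,\mathit{performedBy}.\mathit{SocialEngineer} \\
  & \sqcap\ {=}1\,\mathit{through}.\mathit{AttackMedia}
\end{aligned}
```

Under this reading, interaction with the victim is not required: an attack applying shoulder surfing qualifies as long as its single target is a human with at least one human vulnerability (e.g., thoughtlessness or apathy).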

Our ontology covers the important dimensions for characterizing social engineering attacks, i.e., attack media, human vulnerability, and social engineering technique. We therefore propose to base various classification schemata of social engineering attacks on our ontology, offering a shared terminology to researchers. For example, many studies roughly classify social engineering attacks as human-based or technology-based [1, 5, 14]. However, none of these studies defines such a classification schema precisely, and in fact their classification results are inconsistent with each other. Our ontology can be used to define such classification schemata formally and unambiguously, contributing to communication and development in this research field. Axiom_5–7 in Table 3 show such formal definitions. Specifically, we define a social engineering attack as a non-technology attack if it is carried out either face to face or through physical means, while all other social engineering attacks are defined as technology-based attacks. Axiom_8–10 illustrate another example, in which we formally classify social engineering attacks as either indirect or direct. The former category includes social engineering attacks that apply shoulder surfing, dumpster diving, or tailgating techniques; all other attacks are classified as direct social engineering attacks.
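Outside a DL reasoner, the two classification schemata can be approximated with plain set checks. The sketch below assumes that an attack is characterized by its set of technique names and its single medium; it mirrors the prose descriptions of Axiom_5–7 and Axiom_8–10 rather than the exact axioms in Table 3, and the function names and labels are hypothetical.

```python
# The seven attack media of the ontology.
MEDIA = {"Email", "Instant Message", "Telephone", "Website", "Malware", "Face to Face", "Physical"}

# Media that involve no computer-related technology (Axiom_5-7, as described in the text).
NON_TECHNOLOGY_MEDIA = {"Face to Face", "Physical"}

# Techniques that involve no interaction with the victim (Axiom_8-10, as described in the text).
INDIRECT_TECHNIQUES = {"Shoulder Surfing", "Dumpster Diving", "Tailgating"}


def technology_class(media: str) -> str:
    """Non-technology if carried out face to face or physically; otherwise technology-based."""
    if media not in MEDIA:
        raise ValueError(f"unknown attack medium: {media!r}")
    return "non-technology" if media in NON_TECHNOLOGY_MEDIA else "technology-based"


def interaction_class(techniques: set[str]) -> str:
    """Indirect if any indirect technique is applied; otherwise direct."""
    return "indirect" if techniques & INDIRECT_TECHNIQUES else "direct"


def classify(media: str, techniques: set[str]) -> tuple[str, str]:
    """Classify one attack along both (independent) dimensions."""
    return technology_class(media), interaction_class(techniques)


# Hypothetical characterizations, for illustration only.
print(classify("Email", {"Impersonation", "Incentive"}))  # ('technology-based', 'direct')
print(classify("Physical", {"Dumpster Diving"}))          # ('non-technology', 'indirect')
```

Because the two schemata are evaluated independently, a single attack always receives one label from each, which is how the ontology accommodates the multi-category attacks discussed in Sect. 2.2. Note that, under this reading, an attack combining tailgating with impersonation counts as indirect.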

We have implemented our formal ontology in Protégé (Footnote 1), as shown in Fig. 3. With this implementation, we can leverage inference engines to automatically classify social engineering attacks according to particular classification schemata (such as those defined in Table 3), which is essential for understanding the ever-growing set of social engineering attacks. In line with our research objective, we plan to offer our ontology to social engineering researchers as a contribution to this research community. The ontology file is available online (Footnote 2).

4 Evaluation

In this section, we evaluate the expressiveness of our ontology by using it to characterize and classify all social engineering attacks collected during our literature review [2–4, 8, 13, 14], i.e., the attacks shown in Table 1. Each attack is classified strictly according to its original description, even though some of these descriptions may be incomplete. In this way, we aim to keep the evaluation clear and repeatable. The results of our evaluation lead us to the following observations and conclusions:

- Our ontology has good expressiveness and can characterize all the social engineering attacks we collected in terms of attack technique, human vulnerability, and attack media.

- As the table clearly shows, the three dimensions are very helpful for classifying and understanding social engineering attacks. More importantly, the results reveal which attacks actually describe the same thing and should be merged to avoid misunderstanding. For instance, Impersonation and Masquerading are the same type of attack.

- Interpreting social engineering attacks with our ontology helps identify useful categories of social engineering attacks. For instance, Phishing, Vishing, and SMSishing leverage the same social engineering techniques and exploit the same vulnerabilities, but through different attack media. In such a case, it is reasonable to introduce a parent class for these three attacks.

5 Conclusions and Future Work

In this paper, we present our work towards an unambiguous and comprehensive ontology of social engineering. Our major research objective is to promote the development of this research field, which is becoming increasingly important. In particular, we have reviewed existing social engineering ontologies in detail and identified current research challenges, which motivated us to propose a unified set of social engineering concepts that covers various perspectives. To deal with the interdisciplinary nature of social engineering, we have systematically reviewed and incorporated related psychology and sociology knowledge, and formalized the ontology using Description Logic (DL). Finally, we evaluated the coverage and correctness of our approach by using it to analyze real social engineering attacks reported in the literature. In the future, we first plan to have researchers other than the authors apply our ontology in order to evaluate its usability. We also aim to analyze industrial cases in a real-world setting to validate the effectiveness of our ontology.

References

[1] Foozy, F.M., Ahmad, R., Abdollah, M., Yusof, R., Mas’ud, M.: Generic taxonomy of social engineering attack. In: Malaysian Technical Universities International Conference on Engineering & Technology, pp. 1–7 (2011)

[2] Gulati, R.: The threat of social engineering and your defense against it. SANS Reading Room (2003)

[3] Harley, D.: Re-floating the Titanic: dealing with social engineering attacks. European Institute for Computer Antivirus Research, pp. 4–29 (1998)

[4] Ivaturi, K., Janczewski, L.: A taxonomy for social engineering attacks. In: International Conference on Information Resources Management, pp. 1–12. Centre for Information Technology, Organizations, and People (2011)

[5] Janczewski, L.J., Fu, L.: Social engineering-based attacks: model and New Zealand perspective. In: Proceedings of the 2010 International Multiconference on Computer Science and Information Technology (IMCSIT), pp. 847–853. IEEE (2010)

[6] Jürjens, J.: UMLsec: extending UML for secure systems development. In: Jézéquel, J.-M., Hussmann, H., Cook, S. (eds.) UML 2002. LNCS, vol. 2460, pp. 412–425. Springer, Heidelberg (2002). https://doi.org/10.1007/3-540-45800-X_32

[7] Kantor, M.: The psychopathy of everyday life (2006)

[8] Krombholz, K., Hobel, H., Huber, M., Weippl, E.: Advanced social engineering attacks. J. Inf. Secur. Appl. 22, 113–122 (2015)

[9] Li, T., Horkoff, J.: Dealing with security requirements for socio-technical systems: a holistic approach. In: Jarke, M., et al. (eds.) CAiSE 2014. LNCS, vol. 8484, pp. 285–300. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-07881-6_20

[10] Li, T., Horkoff, J., Mylopoulos, J.: Holistic security requirements analysis for socio-technical systems. Softw. Syst. Model. 17(4), 1253–1285 (2018)

[11] Mitnick, K.D., Simon, W.L.: The Art of Deception: Controlling the Human Element of Security. Wiley, Hoboken (2011)

[12] Mouton, F., Leenen, L., Malan, M.M., Venter, H.S.: Towards an ontological model defining the social engineering domain. In: Kimppa, K., Whitehouse, D., Kuusela, T., Phahlamohlaka, J. (eds.) HCC 2014. IAICT, vol. 431, pp. 266–279. Springer, Heidelberg (2014). https://doi.org/10.1007/978-3-662-44208-1_22

[13] Nyamsuren, E., Choi, H.-J.: Preventing social engineering in ubiquitous environment. In: Future Generation Communication and Networking (FGCN 2007), vol. 2, pp. 573–577. IEEE (2007)

[14] Peltier, T.R.: Social engineering: concepts and solutions. Inf. Secur. J. 15(5), 13 (2006)

[15] Roussey, C., Pinet, F., Kang, M.A., Corcho, O.: An introduction to ontologies and ontology engineering. In: Falquet, G., Métral, C., Teller, J., Tweed, C. (eds.) Ontologies in Urban Development Projects, vol. 1, pp. 9–38. Springer, London (2011). https://doi.org/10.1007/978-0-85729-724-2_2

[16] Simon, G.K., Foley, K.: In Sheep’s Clothing: Understanding and Dealing with Manipulative People. Tantor Media, Incorporated, Old Saybrook (2011)

[17] Souag, A., Salinesi, C., Comyn-Wattiau, I.: Ontologies for security requirements: a literature survey and classification. In: Bajec, M., Eder, J. (eds.) CAiSE 2012. LNBIP, vol. 112, pp. 61–69. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-31069-0_5

Acknowledgements

This work is supported by the National Key R&D Program of China (No. 2018YFB0804703, 2017YFC0803307), the National Natural Science Foundation of China (No. 91546111, 91646201), the International Research Cooperation Seed Fund of Beijing University of Technology (No. 2018B2), and the Basic Research Funding of Beijing University of Technology (No. 040000546318516).


Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Li, T., Ni, Y. (2019). Paving Ontological Foundation for Social Engineering Analysis. In: Giorgini, P., Weber, B. (eds) Advanced Information Systems Engineering. CAiSE 2019. Lecture Notes in Computer Science, vol. 11483. Springer, Cham. https://doi.org/10.1007/978-3-030-21290-2_16


DOI: https://doi.org/10.1007/978-3-030-21290-2_16


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-21289-6

Online ISBN: 978-3-030-21290-2

eBook Packages: Computer Science, Computer Science (R0)