Abstract

We introduce Meta-F\(^{\star }\), a tactics and metaprogramming framework for the F\(^\star \) program verifier. The main novelty of Meta-F\(^\star \) is allowing the use of tactics and metaprogramming to discharge assertions not solvable by SMT, or simply to transform them into well-behaved SMT fragments. In addition, Meta-F\(^\star \) can be used to generate verified code automatically.

Meta-F\(^\star \) is implemented as an F\(^\star \) effect, which, given the powerful effect system of F\(^{\star }\), greatly increases code reuse and even enables the lightweight verification of metaprograms. Metaprograms can be either interpreted or compiled to efficient native code that can be dynamically loaded into the F\(^\star \) type-checker and can interoperate with interpreted code. Evaluation on realistic case studies shows that Meta-F\(^\star \) provides substantial gains in proof development, efficiency, and robustness.

1 Introduction

Scripting proofs using tactics and metaprogramming has a long tradition in interactive theorem provers (ITPs), starting with Milner’s Edinburgh LCF [37]. In this lineage, properties of pure programs are specified in expressive higher-order (and often dependently typed) logics, and proofs are conducted using various imperative programming languages, starting originally with ML.

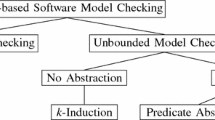

Along a different axis, program verifiers like Dafny [47], VCC [23], Why3 [33], and Liquid Haskell [59] target both pure and effectful programs, with side-effects ranging from divergence to concurrency, but provide relatively weak logics for specification (e.g., first-order logic with a few selected theories like linear arithmetic). They work primarily by computing verification conditions (VCs) from programs, usually relying on annotations such as pre- and postconditions, and encoding them to automated theorem provers (ATPs) such as satisfiability modulo theories (SMT) solvers, often providing excellent automation.

These two sub-fields have influenced one another, though the situation is somewhat asymmetric. On the one hand, most interactive provers have gained support for exploiting SMT solvers or other ATPs, providing push-button automation for certain kinds of assertions [26, 31, 43, 44, 54]. On the other hand, recognizing the importance of interactive proofs, Why3 [33] interfaces with ITPs like Coq. However, working over proof obligations translated from Why3 requires users to be familiar not only with both these systems, but also with the specifics of the translation. And beyond Why3 and the tools based on it [25], no other SMT-based program verifiers have full-fledged support for interactive proving, leading to several downsides:

Limits to expressiveness. The expressiveness of program verifiers can be limited by the ATP used. When dealing with theories that are undecidable and difficult to automate (e.g., non-linear arithmetic or separation logic), proofs in ATP-based systems may become impossible or, at best, extremely tedious.

Boilerplate. To work around this lack of automation, programmers have to construct detailed proofs by hand, often repeating many tedious yet error-prone steps, so as to provide hints to the underlying solver to discover the proof. In contrast, ITPs with metaprogramming facilities excel at expressing domain-specific automation to complete such tedious proofs.

Implicit proof context. In most program verifiers, the logical context of a proof is implicit in the program text and depends on the control flow and the pre- and postconditions of preceding computations. Unlike in interactive proof assistants, programmers have no explicit access, neither visual nor programmatic, to this context, making proof structuring and exploration extremely difficult.

In direct response to these drawbacks, we seek a system that successfully combines the convenience of an automated program verifier for the common case, while seamlessly transitioning to an interactive proving experience for those parts of a proof that are hard to automate. Towards this end, we propose Meta-F\(^\star \), a tactics and metaprogramming framework for the F\(^\star \) [1, 58] program verifier.

Highlights and Contributions of Meta-F\(^\star \)

F\(^\star \) has historically been more deeply rooted as an SMT-based program verifier. Until now, F\(^\star \) discharged VCs exclusively by calling an SMT solver (usually Z3 [28]), providing good automation for many common program verification tasks, but also exhibiting the drawbacks discussed above.

Meta-F\(^\star \) is a framework that allows F\(^\star \) users to manipulate VCs using tactics. More generally, it supports metaprogramming, allowing programmers to script the construction of programs by manipulating their syntax and customizing the way they are type-checked. This allows programmers to (1) implement custom procedures for manipulating VCs; (2) eliminate boilerplate in proofs and programs; and (3) inspect the proof state visually and manipulate it programmatically, addressing the drawbacks discussed above. SMT still plays a central role in Meta-F\(^\star \): a typical usage involves implementing tactics to transform VCs, so as to bring them into theories well-supported by SMT, without needing to (re)implement full decision procedures. Further, the generality of Meta-F\(^\star \) allows implementing non-trivial language extensions (e.g., typeclass resolution) entirely as metaprogramming libraries, without changes to the F\(^\star \) type-checker.

The technical contributions of our work include the following:

“Meta-” is just an effect (Sect. 3.1). Meta-F\(^\star \) is implemented using F\(^{\star }\)’s extensible effect system, which keeps programs and metaprograms properly isolated. Being first-class F\(^\star \) programs, metaprograms are typed, call-by-value, direct-style, higher-order functional programs, much like the original ML. Further, metaprograms can be themselves verified (to a degree, see Sect. 3.4) and metaprogrammed.

Reconciling tactics with VC generation (Sect. 4.2). In program verifiers the programmer often guides the solver towards the proof by supplying intermediate assertions. Meta-F\(^\star \) retains this style, but additionally allows assertions to be solved by tactics. To this end, a contribution of our work is extracting, from a VC, a proof state encompassing all relevant hypotheses, including those implicit in the program text.

Executing metaprograms efficiently (Sect. 5). Metaprograms are executed during type-checking. As a baseline, they can be interpreted using F\(^\star \)’s existing (but slow) abstract machine for term normalization, or a faster normalizer based on normalization by evaluation (NbE) [10, 16]. For much faster execution speed, metaprograms can also be run natively. This is achieved by combining the existing extraction mechanism of F\(^\star \) to OCaml with a new framework for safely extending the F\(^\star \) type-checker with such native code.

Examples (Sect. 2) and evaluation (Sect. 6). We evaluate Meta-F\(^\star \) on several case studies. First, we present a functional correctness proof for the Poly1305 message authentication code (MAC) [11], using a novel combination of proofs by reflection for dealing with non-linear arithmetic and SMT solving for linear arithmetic. We measure a clear gain in proof robustness: SMT-only proofs succeed only rarely (for reasonable timeouts), whereas our tactic+SMT proof is concise, never fails, and is faster. Next, we demonstrate an improvement in expressiveness, by developing a small library for proofs of heap-manipulating programs in separation logic, which was previously out-of-scope for F\(^\star \). Finally, we illustrate the ability to automatically construct verified effectful programs, by introducing a library for metaprogramming verified low-level parsers and serializers with applications to network programming, where verification is accelerated by processing the VC with tactics, and by programmatically tweaking the SMT context.

We conclude that tactics and metaprogramming can be fruitfully combined with VC generation and SMT solving to build verified programs with better, more scalable, and more robust automation.

The full version of this paper, including appendices, is available online at https://www.fstar-lang.org/papers/metafstar.

2 Meta-F\(^\star \) by Example

F\(^\star \) is a general-purpose programming language aimed at program verification. It puts together the automation of an SMT-backed deductive verification tool with the expressive power of a language with full-spectrum dependent types. Briefly, it is a functional, higher-order, effectful, dependently typed language, with syntax loosely based on OCaml. F\(^\star \) supports refinement types and Hoare-style specifications, computing VCs of computations via a type-level weakest precondition (WP) calculus packed within Dijkstra monads [57]. F\(^\star \)’s effect system is also user-extensible [1]. Using it, one can model or embed imperative programming in styles ranging from ML to C [55] and assembly [35]. After verification, F\(^\star \) programs can be extracted to efficient OCaml or F# code. A first-order fragment of F\(^\star \), called Low\(^\star \), can also be extracted to C via the KreMLin compiler [55].

This paper introduces Meta-F\(^\star \), a metaprogramming framework for F\(^\star \) that allows users to safely customize and extend F\(^\star \) in many ways. For instance, Meta-F\(^\star \) can be used to preprocess or solve proof obligations; synthesize F\(^\star \) expressions; generate top-level definitions; and resolve implicit arguments in user-defined ways, enabling non-trivial extensions. This paper primarily discusses the first two features. Technically, none of these features deeply increases the expressive power of F\(^\star \), since one could manually write, in F\(^\star \), the terms that can now be metaprogrammed. However, as we will see shortly, manually programming terms and their proofs can be so costly as to be practically infeasible.

Meta-F\(^\star \) is similar to other tactic frameworks, such as Coq’s [29] or Lean’s [30], in presenting a set of goals to the programmer, providing commands to break them down, allowing the programmer to inspect and build abstract syntax, etc. In this paper, we mostly detail the characteristics where Meta-F\(^\star \) differs from other engines.

This section presents Meta-F\(^\star \) informally, displaying its usage through case studies. We present any necessary F\(^\star \) background as needed.

2.1 Tactics for Individual Assertions and Partial Canonicalization

Non-linear arithmetic reasoning is crucially needed for the verification of optimized, low-level cryptographic primitives [18, 64], an important use case for F\(^\star \) [13] and other verification frameworks, including those that rely on SMT solving alone (e.g., Dafny [47]) as well as those that rely exclusively on tactic-based proofs (e.g., FiatCrypto [32]). While both styles have demonstrated significant successes, we make a case for a middle ground, leveraging the SMT solver for the parts of a VC where it is effective, and using tactics only where it is not.

We focus on Poly1305 [11], a widely-used cryptographic MAC that computes a series of integer multiplications and additions modulo a large prime number \(p = 2^{130} - 5\). Implementations of the Poly1305 multiplication and mod operations are carefully hand-optimized to represent 130-bit numbers in terms of smaller 32-bit or 64-bit registers, using clever tricks; proving their correctness requires reasoning about long sequences of additions and multiplications.
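
To make the limb representation concrete, the following Python sketch (an illustration only, not the verified F\(^\star \) code) assumes that a 130-bit number is stored as three limbs in radix \(2^{44}\), consistent with the constants \(2^{44}\) and \(2^{88}\) that appear in the lemma below. Reduction modulo \(p\) exploits \(2^{130} \equiv 5 \pmod p\), so bits above position 130 can be folded back in with a multiplication by 5 instead of a division. All function names are hypothetical.

```python
P = 2**130 - 5                 # the Poly1305 prime
LIMB_BITS = 44                 # assumed limb width: radix 2**44
MASK = (1 << LIMB_BITS) - 1

def to_limbs(n):
    """Split 0 <= n < 2**132 into (l0, l1, l2) with n = l0 + 2**44*l1 + 2**88*l2."""
    assert 0 <= n < 1 << (3 * LIMB_BITS)
    return (n & MASK, (n >> LIMB_BITS) & MASK, n >> (2 * LIMB_BITS))

def from_limbs(l0, l1, l2):
    """Recombine three limbs into the integer they represent."""
    return l0 + (l1 << LIMB_BITS) + (l2 << (2 * LIMB_BITS))

def reduce_mod_p(x):
    """Reduce a non-negative x modulo P without division, using 2**130 = 5 (mod P)."""
    while x >> 130:
        hi, lo = x >> 130, x & ((1 << 130) - 1)
        x = lo + 5 * hi        # hi*2**130 + lo is congruent to hi*5 + lo modulo P
    return x - P if x >= P else x
```

A modular multiplication is then `reduce_mod_p(a * b)`; the verified implementations perform the same arithmetic limb by limb, which is where the long chains of additions and multiplications come from.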

Previously: Guiding SMT Solvers by Manually Applying Lemmas. Prior proofs of correctness of Poly1305 and other cryptographic primitives using SMT-based program verifiers, including F\(^\star \) [64] and Dafny [18], use a combination of SMT automation and manual application of lemmas. On the plus side, SMT solvers are excellent at linear arithmetic, so these proofs delegate all associativity-commutativity (AC) reasoning about addition to SMT. Non-linear arithmetic in SMT solvers, even just AC-rewriting and distributivity, is, however, inefficient and unreliable, so much so that the prior efforts above (and other works too [40, 41]) simply turn off support for non-linear arithmetic in the solver, in order not to degrade verification performance across the board due to poor interaction of theories. Instead, users need to explicitly invoke lemmas.

For instance, here is a statement and proof of a lemma about Poly1305 in F\(^\star \). The property and its proof do not really matter; the lines marked “[…]” do. In this particular proof, working around the solver’s inability to effectively reason about non-linear arithmetic, the programmer has spelled out basic facts about the distributivity of multiplication over addition, by calling the library lemma […], in order to guide the solver towards the proof. (Below, […] and […] represent \(2^{44}\) and \(2^{88}\), respectively.)

Even at this relatively small scale, needing to explicitly instantiate the distributivity lemma is verbose and error prone. Even worse, the user is blind while doing so: the program text does not display the current set of available facts nor the final goal. Proofs at this level of abstraction are painfully detailed in some aspects, yet also heavily reliant on the SMT solver to fill in the aspects of the proof that are missing.

Given enough time, the solver can sometimes find a proof without the additional hints, but this is rare, context-dependent, and almost never robust. In this particular example, by varying Z3’s random seed, we find that in an isolated setting the lemma is proven automatically about 32% of the time. The numbers are much worse for more complex proofs and for contexts containing many facts, so this style quickly spirals out of control. For example, a proof of one of the main lemmas in Poly1305, […], requires 41 steps of rewriting for associativity-commutativity of multiplication and distributivity of addition and multiplication, making the proof much too long to show here.

SMT and Tactics in Meta-F\(^\star \). The listing below shows the statement and proof of […] in Meta-F\(^\star \), of which the lemma above was previously only a small part. Again, the specific property proven is not particularly relevant to our discussion. But, this time, the proof contains just two steps.

First, we call a single lemma about modular addition from F\(^\star \)’s standard library. Then, we assert an equality annotated with a tactic ([…]). Instead of being encoded as-is for the SMT solver, the assertion is preprocessed by the […] tactic. The tactic is presented with the asserted equality as its goal, in an environment containing not only all variables in scope but also hypotheses for the precondition of […] and the postcondition of the […] call (without which the assertion could not be proven). The tactic then canonicalizes the two sides of the equality, but notably only “up to” linear arithmetic conversions. Rather than fully canonicalizing the terms, the tactic merely rewrites them into a sum-of-products canonical form, leaving all the remaining work to the SMT solver, which can then easily and robustly discharge the goal using linear arithmetic only.
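
The idea of canonicalizing only into sum-of-products form can be illustrated with a small Python sketch; it is a plain recursive normalizer, not the reflective F\(^\star \) tactic. It expands an arithmetic expression by distributivity into a map from monomials to coefficients. Every product of variables (such as `a*c`) is then treated as an opaque atom, so what remains of an equality goal is linear over those atoms, which is the kind of goal an SMT solver discharges easily. The expression encoding and the names `expand` and `linear_residue` are assumptions made for illustration.

```python
from collections import defaultdict

# Expressions: an int constant, a variable name (str), or a tuple
# ("+", e1, e2) / ("*", e1, e2).

def expand(e):
    """Sum-of-products form of e: {monomial: coefficient}, where a monomial is
    a sorted tuple of variable names and () stands for the constant term."""
    if isinstance(e, int):
        return {(): e} if e else {}
    if isinstance(e, str):
        return {(e,): 1}
    op, lhs, rhs = e
    p, q = expand(lhs), expand(rhs)
    out = defaultdict(int)
    if op == "+":
        for m, c in list(p.items()) + list(q.items()):
            out[m] += c
    elif op == "*":
        for m1, c1 in p.items():          # distribute every term over every term
            for m2, c2 in q.items():
                out[tuple(sorted(m1 + m2))] += c1 * c2
    else:
        raise ValueError(f"unknown operator {op!r}")
    return {m: c for m, c in out.items() if c != 0}

def linear_residue(lhs, rhs):
    """Canonicalize both sides and return lhs - rhs, rendering each monomial as an
    opaque atom such as 'a*c'. The goal 'residue == 0' is linear in those atoms."""
    diff = defaultdict(int)
    for m, c in expand(lhs).items():
        diff[m] += c
    for m, c in expand(rhs).items():
        diff[m] -= c
    return {"*".join(m) or "1": c for m, c in diff.items() if c != 0}
```

For example, \(a \cdot (b + 1)\) and \(b \cdot a + a\) have identical canonical forms, so their residue is empty; a non-empty residue would be a linear constraint over atoms, left for the solver.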

This tactic works over terms in the commutative semiring of integers ([…]) using proof-by-reflection [12, 20, 36, 38]. Internally, it is built from a simpler tactic, […], also based on proof-by-reflection, which works over monoids and is then “stacked” on itself to build […]. The basic idea of proof-by-reflection is to reduce most of the proof burden to mechanical computation, obtaining much more efficient proofs than repeatedly applying lemmas. For […], we begin with a type for monoids, a small AST representing monoid values, and a denotation for expressions back into the monoid type.

To canonicalize an […], it is first converted to a list of operands ([…]) and then reflected back to the monoid ([…]). The process is proven correct, in the particular case of equalities, by the […] lemma.
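
A minimal Python model of this monoid reflection setup (monoid type, expression AST, denotation, flattening to a list of operands, and the denotation of such lists) is sketched below. The names `Monoid`, `Unit`, `Mult`, `Atom`, `denote`, `flatten`, and `denote_list` are hypothetical stand-ins for the F\(^\star \) definitions, and the correctness property (an expression and its flattened list have the same denotation) is only tested here, whereas in F\(^\star \) it is proven once and for all.

```python
from dataclasses import dataclass
from functools import reduce
from typing import Callable, Generic, List, TypeVar, Union

A = TypeVar("A")

@dataclass(frozen=True)
class Monoid(Generic[A]):
    unit: A
    mult: Callable[[A, A], A]

# A small AST for monoid expressions; atoms index into a list of opaque values.
@dataclass(frozen=True)
class Unit:
    pass

@dataclass(frozen=True)
class Mult:
    left: "Exp"
    right: "Exp"

@dataclass(frozen=True)
class Atom:
    index: int

Exp = Union[Unit, Mult, Atom]

def denote(m: Monoid, atoms: list, e: Exp):
    """Interpret an expression back into the monoid."""
    if isinstance(e, Unit):
        return m.unit
    if isinstance(e, Mult):
        return m.mult(denote(m, atoms, e.left), denote(m, atoms, e.right))
    return atoms[e.index]

def flatten(e: Exp) -> List[int]:
    """Canonical form: the atoms of e from left to right (units vanish)."""
    if isinstance(e, Unit):
        return []
    if isinstance(e, Mult):
        return flatten(e.left) + flatten(e.right)
    return [e.index]

def denote_list(m: Monoid, atoms: list, xs: List[int]):
    """Interpret a list of atoms as their product (the unit if the list is empty)."""
    return reduce(m.mult, (atoms[i] for i in xs), m.unit)

# Correctness property, tested rather than proven here:
#   denote(m, atoms, e) == denote_list(m, atoms, flatten(e))
# Hence equal flattenings of two expressions imply equal denotations.
```

Testing with string concatenation, a non-commutative monoid, makes it visible that only associativity and the unit laws are used.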

At this stage, if the goal is […], we require two monoidal expressions […] and […] such that […] and […]. They are constructed by the tactic […] by inspecting the syntax of the goal, using Meta-F\(^\star \)’s reflection capabilities (detailed ahead in Sect. 3.3). We have no way to prove once and for all that the expressions built by […] correctly denote the terms, but this fact can be proven automatically at each application of the tactic, by simple unification. The tactic then applies the lemma […], and the goal is changed to […]. Finally, by normalization, each side will be canonicalized by running […] and […].
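
The reification step and the final comparison can also be sketched in Python, under the simplifying assumption that term syntax is encoded as nested tuples: `("mult", t1, t2)`, `("unit",)`, and anything else as an opaque atom. Syntactically equal subterms are mapped to the same atom index, and the two sides are judged equal up to the monoid laws when their flattened operand lists coincide. The sketch does not model the unification check that the reified expressions denote the original terms; `reify` and `canon_equal` are hypothetical names.

```python
def reify(term, atoms):
    """Turn term syntax into a monoid expression. Subterms that are neither
    'mult' nor 'unit' become atoms; syntactically equal ones share an index."""
    if term == ("unit",):
        return ("Unit",)
    if isinstance(term, tuple) and len(term) == 3 and term[0] == "mult":
        return ("Mult", reify(term[1], atoms), reify(term[2], atoms))
    if term not in atoms:
        atoms.append(term)
    return ("Atom", atoms.index(term))

def flatten(e):
    """List of atom indices of a reified expression, left to right."""
    if e[0] == "Unit":
        return []
    if e[0] == "Mult":
        return flatten(e[1]) + flatten(e[2])
    return [e[1]]

def canon_equal(lhs, rhs):
    """Decide lhs == rhs up to associativity and unit laws by reifying both
    sides against a shared atom table and comparing canonical forms."""
    atoms = []
    return flatten(reify(lhs, atoms)) == flatten(reify(rhs, atoms))
```

Note that `x * y` and `y * x` are not identified by this check: reordering operands requires the commutative-monoid variant used for heaps in Sect. 2.2.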

The […] tactic follows a similar approach and resembles existing reflective tactics for other proof assistants [9, 38], except that it only canonicalizes up to linear arithmetic, as explained above. The full VC for […] contains many other facts, e.g., that […] is non-zero, so the division is well-defined, and that the postcondition does indeed hold. These obligations remain in a “skeleton” VC that is also easily proven by Z3. This proof is much easier for the programmer to write and much more robust, as detailed in Sect. 6.1. The proof of Poly1305’s other main lemma, […], is automated equally well.

Tactic Proofs Without SMT. Of course, one can verify […] in Coq, following the same conceptual proof used in Meta-F\(^\star \) but relying on tactics only. Our proof (included in the appendix) is 27 lines long, two of which use Coq’s […] tactic (similar to our […] tactic) and its […] tactic for solving formulas in Presburger arithmetic. The remaining 25 lines include steps to destruct the propositional structure of terms, to rewrite by equalities, and to enrich the context so as to enable automatic modulo rewriting (Coq does not fully automatically recognize equality modulo \(p\) as an equivalence relation compatible with arithmetic operators). While a mature proof assistant like Coq has libraries and tools to ease this kind of manipulation, it can still be verbose.

In contrast, in Meta-F\(^\star \) all of these mundane parts of a proof are simply dispatched to the SMT solver, which decides linear arithmetic efficiently, beyond the quantifier-free Presburger fragment supported by tactics like […], handles congruence closure natively, etc.

2.2 Tactics for Entire VCs and Separation Logic

A different way to invoke Meta-F\(^\star \) is over an entire VC. While the exact shape of VCs is hard to predict, users with some experience can write tactics that find and solve particular sub-assertions within a VC, or simply massage them into shapes better suited for the SMT solver. We illustrate the idea on proofs for heap-manipulating programs.

One verification method that has eluded F\(^\star \) until now is separation logic, the main reason being that the pervasive “frame rule” requires instantiating existentially quantified heap variables, which is a challenge for SMT solvers and simply too tedious for users. With Meta-F\(^\star \), one can do better. We have written a (proof-of-concept) embedding of separation logic and a tactic ([…]) that performs heap frame inference automatically.

Our approach consists of designing the WP specifications for primitive stateful actions so as to make their footprint syntactically evident. The tactic then descends through VCs until it finds an existential for heaps arising from the frame rule. Then, by solving an equality between heap expressions (which requires canonicalization, for which we use a variant of […] targeting commutative monoids), the tactic finds the frames and instantiates the existentials. Notably, as opposed to other tactic frameworks for separation logic [4, 45, 49, 51], this is all our tactic does before dispatching to the SMT solver, which can now be effective over the instantiated VC.

We now provide some detail on the framework. Below, ‘[…]’ represents the empty heap, ‘\(\bullet \)’ is the separating conjunction and ‘[…]’ is the heaplet with the single reference […] set to value […]. Our development distinguishes between a “heap” and its “memory” for technical reasons, but we will treat the two as equivalent here. Further, […] is a predicate discriminating valid heaps (as in [52]), i.e., those built from separating conjunctions of actually disjoint heaps.

We first define the type of WPs and present the WP for the frame rule:

Intuitively, […] behaves as the postcondition […] “framed” by […], i.e., […] holds when the two heaps […] and […] are disjoint and […] holds over the result value […] and the conjoined heaps. Then, […] takes a postcondition […] and initial heap […], and requires that […] can be split into disjoint subheaps […] (the footprint) and […] (the frame), such that the postcondition […], when properly framed, holds over the footprint.
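
To make these definitions concrete, here is an executable Python model in which heaps are finite dictionaries from reference names to values, the separating conjunction is the union of disjoint dictionaries, and the existential over splittings is checked by brute-force enumeration. It also includes a small-footprint WP for reading a reference, in the style described next. All names (`framed_post`, `framed_wp`, `read_wp`, `splits`) are hypothetical, and the exponential enumeration of splits is precisely the search that the tactic avoids by instantiating the existentials syntactically.

```python
from itertools import combinations

# Heaps are modeled as dicts from reference names to values; the separating
# conjunction of two heaps is only defined when their domains are disjoint.

def disjoint(h0, h1):
    return not (h0.keys() & h1.keys())

def join(h0, h1):
    """h0 • h1, assuming the two heaps are disjoint."""
    return {**h0, **h1}

def splits(h):
    """Every way of splitting h into (footprint, frame); the parts are disjoint by construction."""
    refs = list(h)
    for k in range(len(refs) + 1):
        for chosen in combinations(refs, k):
            yield ({r: h[r] for r in chosen}, {r: h[r] for r in refs if r not in chosen})

def framed_post(post, frame):
    """post framed by frame: holds of (x, h0) when h0 and frame are disjoint
    and post holds of x and the conjoined heap."""
    return lambda x, h0: disjoint(h0, frame) and post(x, join(h0, frame))

def framed_wp(wp):
    """Lift a small-footprint WP: some split of the heap into footprint and
    frame must satisfy wp on the footprint with the framed postcondition."""
    return lambda post, h: any(wp(framed_post(post, frame), fp) for fp, frame in splits(h))

def read_wp(r):
    """Small-footprint WP for reading r: the heap is exactly r |-> v, and post holds of v."""
    return lambda post, h: set(h) == {r} and post(h[r], h)
```

On the heap `{"r1": 1, "r2": 2}`, the unframed `read_wp("r1")` fails because the heap is larger than the footprint, while `framed_wp(read_wp("r1"))` succeeds by choosing `{"r2": 2}` as the frame.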

In order to provide specifications for primitive actions we start in small-footprint style. For instance, below is the WP for reading a reference:

We then insert framing wrappers around such small-footprint WPs when exposing the corresponding stateful actions to the programmer, e.g.,

To verify code written in this style, we annotate the corresponding programs to have their VCs processed by […]. For instance, for the […] function below, the tactic successfully finds the frames for the four occurrences of the frame rule and greatly reduces the solver’s work. Even in this simple example, not performing such instantiation would cause the solver to fail.

The […] tactic: (1) uses syntax inspection to unfold and traverse the goal until it reaches a […], say, the one for […]; (2) inspects […]’s first explicit argument (here […]) to compute the references the current command requires (here […]); (3) uses unification variables to build a memory expression describing the required framing of input memory (here […]) and instantiates the existentials of […] with these unification variables; (4) builds a goal that equates this memory expression with […]’s third argument (here […]); and (5) uses a commutative-monoid tactic (similar to the one in Sect. 2.1) with the heap algebra ([…], […]) to canonicalize the equality and sort the heaplets. Next, it can solve for the unification variables component-wise, instantiating […] to […] and […], and then proceed to the next […].
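
The core of steps (3) to (5) can be approximated in Python as follows, assuming that heap expressions are flat lists of heaplets `(ref, value)` whose conjunction is commutative and associative, with the empty list as unit. Canonicalization sorts the heaplets; the two unification variables for the footprint and the frame are then solved component-wise by selecting the heaplets of the references the command requires. This is a simplified stand-in for the Meta-F\(^\star \) tactic, and `canon` and `infer_frame` are hypothetical names.

```python
def canon(heaplets):
    """Commutative-monoid canonicalization of a heap expression given as a list
    of heaplets (ref, value): emp is the empty list and order is normalized."""
    return sorted(heaplets)

def infer_frame(required_refs, heap):
    """Solve  ?footprint • ?frame == heap  for the two unification variables,
    given the references that the current command requires."""
    heap = canon(heap)
    footprint = [hl for hl in heap if hl[0] in required_refs]
    frame = [hl for hl in heap if hl[0] not in required_refs]
    if {ref for ref, _ in footprint} != set(required_refs):
        return None                           # a required reference is missing
    assert canon(footprint + frame) == heap   # the heap equation now holds
    return footprint, frame
```

A read of `r1` on the heap `r2 |-> y • r1 |-> x` thus yields the footprint `[("r1", "x")]` and the frame `[("r2", "y")]`, regardless of the order in which the heaplets were written.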

In general, after frames are instantiated, the SMT solver can efficiently prove the remaining assertions, such as the obligations about heap definedness. Thus, with relatively little effort, Meta-F\(^\star \) brings a simple version of a widely used, yet previously out-of-scope, program logic (separation logic) into F\(^\star \). To the best of our knowledge, the ability to script separation logic into an SMT-based program verifier, without any primitive support, is unique.

2.3 Metaprogramming Verified Low-Level Parsers and Serializers

Above, we used Meta-F\(^\star \) to manipulate VCs for user-written code. Here, we focus instead on generating verified code automatically. We loosely refer to the previous setting as using “tactics”, and to the current one as “metaprogramming”. In most ITPs, tactics and metaprogramming are not distinguished; however, in a program verifier like F\(^\star \), where some proofs are not materialized at all (Sect. 4.1), proving VCs of existing terms is distinct from generating new terms.

Metaprogramming in F\(^\star \) involves programmatically generating a (potentially effectful) term (e.g., by constructing its syntax and instructing F\(^\star \) how to type-check it) and processing any VCs that arise via tactics. When applicable (e.g., when working in a domain-specific language), metaprogramming verified code can substantially reduce, or even eliminate, the burden of manual proofs.

We illustrate this by automating the generation of parsers and serializers from a type definition. Of course, this is a routine task in many mainstream metaprogramming frameworks (e.g., Template Haskell, camlp4, etc.). The novelty here is that we produce imperative parsers and serializers extracted to C, with proofs that they are memory safe, functionally correct, and mutually inverse. This section is slightly simplified; more detail can be found in the appendix.

We proceed in several stages. First, we program a library of pure, high-level parser and serializer combinators, proven to be (partial) mutual inverses of each other. A […] for a type […] is represented as a function possibly returning a […] along with the number of input bytes consumed. The type of a […] for a given […] contains a refinement stating that […] is an inverse of the serializer. A […] is a dependent record of a parser and an associated serializer.
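
As an illustration only, the parser and serializer types and the inverse property can be modeled in Python. In this sketch, whose names are all hypothetical, a parser returns the parsed value together with the number of bytes consumed (or `None` on failure), a package bundles a parser with its serializer, and the refinement on serializers is replaced by a property that is tested on sample values rather than proven.

```python
from typing import Any, Callable, NamedTuple, Optional, Tuple

# A parser returns the value and the number of bytes consumed, or None on failure.
Parser = Callable[[bytes], Optional[Tuple[Any, int]]]
Serializer = Callable[[Any], bytes]

class Package(NamedTuple):
    """A parser together with its associated serializer."""
    parse: Parser
    serialize: Serializer

u8 = Package(
    parse=lambda data: (data[0], 1) if data else None,
    serialize=lambda v: bytes([v]),
)

def pair(p1: Package, p2: Package) -> Package:
    """Sequence two formats: parse p1, then p2 on the remaining bytes."""
    def parse(data):
        r1 = p1.parse(data)
        if r1 is None:
            return None
        v1, n1 = r1
        r2 = p2.parse(data[n1:])
        if r2 is None:
            return None
        v2, n2 = r2
        return (v1, v2), n1 + n2

    def serialize(v):
        return p1.serialize(v[0]) + p2.serialize(v[1])

    return Package(parse, serialize)

def is_inverse_on(pkg: Package, v) -> bool:
    """Tested stand-in for the refinement on serializers: parsing the serialized
    bytes gives back v and consumes exactly those bytes."""
    out = pkg.serialize(v)
    return pkg.parse(out) == (v, len(out))
```

For instance, `pair(u8, pair(u8, u8)).parse(bytes([1, 2, 3, 4]))` returns `((1, (2, 3)), 3)`, leaving the fourth byte unconsumed.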

Basic combinators in the library include constructs for parsing and serializing base values and pairs, such as the following:

Next, we define low-level versions of these combinators, which work over mutable arrays instead of byte sequences. These combinators are coded in the Low\(^\star \) subset of F\(^\star \) (and so can be extracted to C) and are proven to both be memory-safe and respect their high-level variants. The type for low-level parsers,

, denotes an imperative function that reads from an array of bytes and returns a

, behaving as the specificational parser

. Conversely, a

writes into an array of bytes, behaving as

.
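The relationship between a low-level parser and its specification can be sketched in Python, assuming a `bytearray` as a stand-in for a mutable Low\(^\star \) buffer and a hypothetical pair-of-bytes format. The explicit bounds checks play the role that the memory-safety proof plays in Low\(^\star \), and `agrees_with_spec` checks dynamically what the F\(^\star \) type states statically: reading in place yields the same value and consumes the same number of bytes as the high-level parser.

```python
from typing import Optional, Tuple

Pair = Tuple[int, int]


def spec_parse_pair_u8(data: bytes) -> Optional[Tuple[Pair, int]]:
    """High-level specification: parse two bytes from an immutable sequence."""
    if len(data) < 2:
        return None
    return (data[0], data[1]), 2


def low_parse_pair_u8(buf: bytearray, pos: int, length: int) -> Optional[Tuple[Pair, int]]:
    """Low-level variant: read in place from buf[pos:length] without copying.

    Returns (value, new position) or None. The bounds checks stand in for
    the memory-safety guarantee a Low* version would carry as a proof.
    """
    if pos < 0 or length > len(buf) or length - pos < 2:
        return None
    return (buf[pos], buf[pos + 1]), pos + 2


def low_serialize_pair_u8(v: Pair, buf: bytearray, pos: int) -> int:
    """Write the pair into buf starting at pos; return the new position."""
    a, b = v
    if pos < 0 or len(buf) - pos < 2:
        raise IndexError("output buffer too small")
    buf[pos] = a
    buf[pos + 1] = b
    return pos + 2


def agrees_with_spec(buf: bytearray, pos: int) -> bool:
    """Dynamic analogue of the refinement: low-level result matches the spec."""
    low = low_parse_pair_u8(buf, pos, len(buf))
    spec = spec_parse_pair_u8(bytes(buf[pos:]))
    if low is None or spec is None:
        return low is None and spec is None
    (v1, new_pos), (v2, consumed) = low, spec
    return v1 == v2 and new_pos - pos == consumed
```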

Given such a library, we would like to build verified, mutually inverse, low-level parsers and serializers for specific data formats. The task is mechanical, yet overwhelmingly tedious by hand, with many auxiliary proof obligations of a predictable structure: a perfect candidate for metaprogramming.

Deriving Specifications from a Type Definition. Consider the following F\(^\star \) type, representing lists of exactly 18 pairs of bytes.

The first component of our metaprogram is

, which generates parser and serializer specifications from a type definition.

The syntax

is the way to call Meta-F\(^\star \) for code generation. Meta-F\(^\star \) will run the metaprogram

and, if successful, replace the underscore by the result. In this case, the

inspects the syntax of the

type (Sect. 3.3) and produces the package below (

and

are sequencing combinators):
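Meta-F\(^\star \) obtains the specifications by inspecting the actual syntax of the F\(^\star \) type. The Python sketch below only mimics the recursive structure of that process under a simplifying assumption: the type is given as a hand-written tuple description (a hypothetical format), and `gen_package` walks it and assembles combinators. It produces runtime parsers and serializers, not syntax or proofs.

```python
from typing import Any, Callable, NamedTuple, Optional, Tuple


class Package(NamedTuple):
    parse: Callable[[bytes], Optional[Tuple[Any, int]]]
    serialize: Callable[[Any], bytes]


def u8() -> Package:
    def parse(data: bytes):
        return (data[0], 1) if data else None
    return Package(parse, lambda x: bytes([x]))


def pair(p: Package, q: Package) -> Package:
    def parse(data: bytes):
        r1 = p.parse(data)
        if r1 is None:
            return None
        r2 = q.parse(data[r1[1]:])
        if r2 is None:
            return None
        return (r1[0], r2[0]), r1[1] + r2[1]
    return Package(parse, lambda v: p.serialize(v[0]) + q.serialize(v[1]))


def fixed_list(n: int, p: Package) -> Package:
    """Exactly n elements, each handled by p."""
    def parse(data: bytes):
        items, used = [], 0
        for _ in range(n):
            r = p.parse(data[used:])
            if r is None:
                return None
            items.append(r[0])
            used += r[1]
        return items, used

    def serialize(xs):
        if len(xs) != n:
            raise ValueError(f"expected exactly {n} elements")
        return b"".join(p.serialize(x) for x in xs)

    return Package(parse, serialize)


def gen_package(ty) -> Package:
    """Walk a (hypothetical) type description and assemble combinators."""
    tag = ty[0]
    if tag == "u8":
        return u8()
    if tag == "pair":
        return pair(gen_package(ty[1]), gen_package(ty[2]))
    if tag == "list":
        return fixed_list(ty[1], gen_package(ty[2]))
    raise ValueError(f"unsupported type constructor: {tag}")


# Lists of exactly 18 pairs of bytes, in the hypothetical description format.
SAMPLE_TYPE = ("list", 18, ("pair", ("u8",), ("u8",)))
```

With `pkg = gen_package(SAMPLE_TYPE)`, serializing 18 pairs yields 36 bytes, and parsing any shorter input fails.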

Deriving Low-Level Implementations that Match Specifications. From this pair of specifications, we can automatically generate Low\(^\star \) implementations for them:

which will produce the following low-level implementations:

For simple types like the one above, the generated code is fairly simple. However, for more complex types, using the combinator library comes with non-trivial proof obligations. For example, even for a simple enumeration,

, the parser specification is as follows:

We represent

with

and

with

. The parser first parses a “bounded” byte, with only two values. The

combinator then expects functions between the bounded byte and the datatype being parsed (

), which must be proven to be mutual inverses. This proof is conceptually easy, but for large enumerations nested deep within the structure of other types, it is notoriously hard for SMT solvers. Since the proof is inherently computational, a proof that destructs the inductive type into its cases and then normalizes is much more natural. With our metaprogram, we can produce the term and then discharge these proof obligations with a tactic on the spot, eliminating them from the final VC. We also explore simply tweaking the SMT context, again via a tactic, with good results. A quantitative evaluation is provided in Sect. 6.2.
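The enumeration case can be sketched in Python with a hypothetical two-constructor `Color` type, a bounded-byte parser, and a `synth`-style combinator that maps parse results through a conversion function (all names are assumptions, not the library's). Python cannot prove that the two conversion functions are mutual inverses, but `inverse_by_cases` checks it by enumerating every case on both sides, which mirrors the computational proof strategy described above: destruct into cases, then normalize.

```python
from enum import Enum
from typing import Callable, Optional, Tuple


class Color(Enum):  # hypothetical two-constructor enumeration
    RED = "red"
    GREEN = "green"


def parse_bounded_u8(bound: int) -> Callable[[bytes], Optional[Tuple[int, int]]]:
    """Parse one byte, failing unless it is strictly below bound."""
    def parse(data: bytes):
        if not data or data[0] >= bound:
            return None
        return data[0], 1
    return parse


def synth(parse_inner, f):
    """Map a parser's result through f (only meaningful if f is invertible)."""
    def parse(data: bytes):
        r = parse_inner(data)
        if r is None:
            return None
        v, n = r
        return f(v), n
    return parse


def byte_to_color(b: int) -> Color:
    return Color.RED if b == 0 else Color.GREEN


def color_to_byte(c: Color) -> int:
    return 0 if c is Color.RED else 1


parse_color = synth(parse_bounded_u8(2), byte_to_color)


def inverse_by_cases() -> bool:
    """Check mutual inversion by enumerating every case on both sides."""
    ok_colors = all(byte_to_color(color_to_byte(c)) is c for c in Color)
    ok_bytes = all(color_to_byte(byte_to_color(b)) == b for b in range(2))
    return ok_colors and ok_bytes
```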

3 The Design of Meta-F\(^\star \)

Having caught a glimpse of the use cases for Meta-F\(^\star \), we now turn to its design. As usual in proof assistants (such as Coq, Lean and Idris), Meta-F\(^\star \) tactics work over a set of goals and apply primitive actions to transform them, possibly solving some goals and generating new goals in the process. Since this is standard, we focus mostly on the aspects in which Meta-F\(^\star \) differs from other engines. We first describe how metaprograms are modelled as an effect (Sect. 3.1) and their runtime model (Sect. 3.2). We then detail some of Meta-F\(^\star \)’s syntax inspection and building capabilities (Sect. 3.3). Finally, we show how to perform some (lightweight) verification of metaprograms (Sect. 3.4) within F\(^\star \).

3.1 An Effect for Metaprogramming

Meta-F\(^\star \) tactics are, at their core, programs that transform the “proof state”, i.e., a set of goals that need to be solved. As in Lean [30] and Idris [22], we define a monad combining exceptions and stateful computations over a proof state, along with actions that can access internal components such as the type-checker. For this we first introduce abstract types for the proof state, goals, terms, environments, etc., together with functions to access them, some of which are shown below.

We can now define our metaprogramming monad:

. It combines F\(^\star \)’s existing effect for potential divergence (

), with exceptions and stateful computations over a

. The definition of

, shown below, is straightforward and given in F\(^\star \)’s standard library. Then, we use F\(^\star \)’s effect extension capabilities [1] in order to elevate the

monad and its actions to an effect, dubbed

.

The

declaration introduces computation types of the form

, where

is the return type and

a specification. However, until Sect. 3.4 we shall only use the derived form

, where the specification is trivial. These computation types are distinct from their underlying monadic representation type

—users cannot directly access the proof state except via the actions. The simplest actions stem from the

monad definition:

returns the current proof state and

fails with the given exception (Footnote 4). Failures can be handled using

, which resets the state on failure, including that of unification metavariables. We emphasize two points here. First, there is no “

” action. This is to forbid metaprograms from arbitrarily replacing their proof state, which would be unsound. Second, the argument to

must be thunked, since in F\(^\star \) impure un-suspended computations are evaluated before they are passed into functions.
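A minimal Python model of such a monad, assuming a proof state that is simply a tuple of goal strings, is sketched below. A metaprogram is a state-passing function returning either a success or a failure together with the resulting state; `get`, `raise_`, and `catch` follow the descriptions above, with `catch` taking a thunk and restoring the initial state on failure. There is deliberately no action that replaces the state. The `trivial` action is a toy stand-in for a primitive, all names are hypothetical rather than F\(^\star \)’s library definitions, and divergence is not modeled.

```python
from dataclasses import dataclass
from typing import Any, Callable, Tuple, Union


@dataclass(frozen=True)
class ProofState:
    goals: Tuple[str, ...]


@dataclass(frozen=True)
class Success:
    value: Any
    state: ProofState


@dataclass(frozen=True)
class Failed:
    exn: Exception
    state: ProofState


Result = Union[Success, Failed]
Tac = Callable[[ProofState], Result]  # a state-passing function


def ret(x: Any) -> Tac:
    return lambda ps: Success(x, ps)


def bind(m: Tac, k: Callable[[Any], Tac]) -> Tac:
    def run(ps: ProofState) -> Result:
        r = m(ps)
        if isinstance(r, Failed):
            return r  # propagate the failure, skipping k
        return k(r.value)(r.state)
    return run


def get() -> Tac:
    # Read-only access to the proof state; there is no `put`.
    return lambda ps: Success(ps, ps)


def raise_(e: Exception) -> Tac:
    return lambda ps: Failed(e, ps)


def catch(t: Callable[[], Tac]) -> Tac:
    """Run the thunk t; on failure, return ('error', e) in the ORIGINAL state."""
    def run(ps: ProofState) -> Result:
        r = t()(ps)
        if isinstance(r, Failed):
            return Success(("error", r.exn), ps)  # state is reset
        return Success(("ok", r.value), r.state)
    return run


def trivial() -> Tac:
    """Toy primitive: discharge the first goal if it is 'True', else fail."""
    def run(ps: ProofState) -> Result:
        if ps.goals and ps.goals[0] == "True":
            return Success(None, ProofState(ps.goals[1:]))
        return Failed(ValueError("not a trivial goal"), ps)
    return run
```

For instance, catching a computation that first discharges a goal with `trivial` and then raises returns an error tagged `"error"` together with the untouched initial proof state.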

The only aspect differentiating

from other user-defined effects is the existence of effect-specific primitive actions, which give access to the metaprogramming engine proper. We list here but a few:

All of these are given an interpretation internally by Meta-F\(^\star \). For instance,

calls into F\(^\star \)’s logical simplifier to check whether the current goal is a trivial proposition and discharges it if so, failing otherwise. The

primitive queries the type-checker to infer the type of a given term in the current environment (F\(^\star \) types are a kind of terms, hence the codomain of

is also

). This does not change the proof state; its only purpose is to return useful information to the calling metaprograms. Finally,

outputs the current proof state to the user in a pretty-printed format, in support of user interaction.

Having introduced the

effect and some basic actions, writing metaprograms is as straightforward as writing any other F\(^\star \) code. For instance, here are two metaprogram combinators. The first one repeatedly calls its argument until it fails, returning a list of all the successfully-returned values. The second one behaves similarly, but folds the results with some provided folding function.
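To suggest how these two combinators read when written in direct style, here is a Python sketch in which failures are Python exceptions and the proof state is a mutable module-level object; `catch` snapshots the goals and restores them on failure. The names (`TacticFailure`, `pop_trivial_goal`, `repeat_fold`) are hypothetical, the loop in `repeat` replaces the recursion one would write in F\(^\star \), and `repeat_fold` combines the pure `functools.reduce` with the effectful `repeat`, echoing how a pure list function can be combined with a metaprogram.

```python
from functools import reduce
from typing import Callable, List, TypeVar

A = TypeVar("A")
B = TypeVar("B")


class TacticFailure(Exception):
    pass


class ProofState:
    def __init__(self, goals: List[str]):
        self.goals = list(goals)


STATE = ProofState([])  # hypothetical ambient proof state


def catch(t: Callable[[], A]):
    """Run t; on failure restore the saved goals and return ('error', e)."""
    saved = list(STATE.goals)
    try:
        return ("ok", t())
    except TacticFailure as e:
        STATE.goals = saved
        return ("error", e)


def repeat(t: Callable[[], A]) -> List[A]:
    """Call t until it fails, collecting every successful result."""
    results: List[A] = []
    while True:
        tag, v = catch(t)
        if tag == "error":
            return results
        results.append(v)


def repeat_fold(f: Callable[[B, A], B], acc: B, t: Callable[[], A]) -> B:
    """Like repeat, but fold the collected results with f."""
    return reduce(f, repeat(t), acc)


def pop_trivial_goal() -> str:
    """Toy tactic: discharge the first goal if it is 'True', otherwise fail."""
    if not STATE.goals or STATE.goals[0] != "True":
        raise TacticFailure("no trivial goal")
    return STATE.goals.pop(0)
```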

These two small combinators illustrate a few key points of Meta-F\(^\star \). As for all other F\(^\star \) effects, metaprograms are written in applicative style, without explicit

,

, or

of computations (which are inserted under the hood). This also works across different effects:

can seamlessly combine the pure

from F\(^\star \)’s list library with a metaprogram like

. Metaprograms are also type- and effect-inferred: while

was not annotated at all, F\(^\star \) infers the polymorphic type

for it.

Note that, in a setting lacking an effect extension feature, one could embed metaprograms simply via the (properly abstracted)

monad instead of the

effect. Using an effect is simply more convenient, given that we are already working within an effectful program verifier. In what follows, with the exception of Sect. 3.4, where we describe specifications for metaprograms, there is little reliance on using an effect, so the same ideas could be applied in other settings.

3.2 Executing Meta-F\(^\star \) Metaprograms

Running metaprograms involves three steps. First, they are reified [1] into their underlying

representation, i.e., as state-passing functions. User code cannot reify metaprograms: only F\(^\star \) can do so when about to process a goal.

Second, the reified term is applied to an initial proof state, and then simply evaluated according to F\(^\star \)’s dynamic semantics, for instance using F\(^\star \)’s existing normalizer. For intensive applications, such as proofs by reflection, we provide faster alternatives (Sect. 5). In order to perform this second step, the proof state, which up until this moment exists only internally to F\(^\star \), must be embedded as a term, i.e., as abstract syntax. Here is where its abstraction pays off: since metaprograms cannot interact with a proof state except through a limited interface, it need not be deeply embedded as syntax. By simply wrapping the internal proof state into a new kind of “alien” term, and making the primitives aware of this wrapping, we can readily run the metaprogram, which safely carries its alien proof state around. This wrapping of proof states is a constant-time operation.

The third step is interpreting the primitives. They are realized by functions of similar types implemented within the F\(^\star \) type-checker, but over an internal

monad and the concrete definitions for

,

, etc. Hence, there is a translation involved on every call and return, switching between embedded representations and their concrete variants. Take

, for example, with type

. Its internal implementation, within the F\(^\star \) type-checker, has type

. When interpreting a call to it, the interpreter must unembed the arguments (which are representations of F\(^\star \) terms) into a concrete string and a concrete proof state to pass to the internal implementation of

. The situation is symmetric for the return value of the call, which must be embedded as a term.
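The unembed/embed round trip around a primitive call can be sketched in Python. The sketch assumes a toy term representation (`Const`, `App`, `Alien`) and a hypothetical string-taking primitive implemented by `log_msg_impl`; none of these are F\(^\star \)’s actual internal types or primitives. It shows the two points made above: arguments are unembedded before calling the concrete implementation and results are embedded again, while the proof state travels as an opaque “alien” wrapper created in constant time.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Tuple


@dataclass(frozen=True)
class Const:        # embedded constant, e.g. a string literal
    value: Any


@dataclass(frozen=True)
class App:          # embedded constructor application
    head: str
    args: Tuple[Any, ...]


@dataclass(frozen=True)
class Alien:        # opaque wrapper around an internal object
    payload: Any


@dataclass
class InternalProofState:  # the type-checker's concrete proof state (toy)
    goals: List[str]
    log: List[str]


def embed_string(s: str) -> Const:
    return Const(s)


def unembed_string(t: Any) -> str:
    if not (isinstance(t, Const) and isinstance(t.value, str)):
        raise TypeError("expected an embedded string")
    return t.value


def embed_ps(ps: InternalProofState) -> Alien:
    return Alien(ps)  # constant time: no deep embedding as syntax


def unembed_ps(t: Any) -> InternalProofState:
    if not isinstance(t, Alien):
        raise TypeError("expected an alien proof state")
    return t.payload


def log_msg_impl(msg: str, ps: InternalProofState) -> Tuple[None, InternalProofState]:
    """Hypothetical concrete implementation working on unembedded values."""
    ps.log.append(msg)
    return None, ps


def interpret_string_primitive(impl: Callable) -> Callable:
    """Unembed the arguments, call impl, and embed the result again."""
    def run(arg_term: Any, ps_term: Any) -> App:
        value, new_ps = impl(unembed_string(arg_term), unembed_ps(ps_term))
        return App("Success", (Const(value), embed_ps(new_ps)))
    return run
```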

3.3 Syntax Inspection, Generation, and Quotation

If metaprograms are to be reusable over different kinds of goals, they must be able to reflect on the goals they are invoked to solve. Like any metaprogramming system, Meta-F\(^\star \) offers a way to inspect and construct the syntax of F\(^\star \) terms. Our representation of terms as an inductive type, and the variants of quotations, are inspired by the ones in Idris [22] and Lean [30].

Inspecting Syntax. Internally, F\(^\star \) uses a locally-nameless representation [21] with explicit, delayed substitutions. To shield metaprograms from some of this internal bureaucracy, we expose a simplified view [61] of terms. Below we present a few constructors from the

type:

The

type provides the “one-level-deep” structure of a term: metaprograms must call

to reveal the structure of the term, one constructor at a time. The view exposes three kinds of variables: bound variables,

; named local variables

; and top-level fully qualified names,

. Bound variables and local variables are distinguished since the internal abstract syntax is locally nameless. For metaprogramming, it is usually simpler to use a fully-named representation, so we provide

and

functions that open and close binders appropriately to maintain this invariant. Since opening binders requires freshness,

has effect

(Footnote 5). As generating large pieces of syntax via the view quickly becomes tedious, we also provide some ways of quoting terms:

Static Quotations. A static quotation

is just a shorthand for statically calling the F\(^\star \) parser to convert

into the abstract syntax of F\(^\star \) terms above. For instance,

is equivalent to the following,

Dynamic Quotations. A second form of quotation is

, an effectful operation that is interpreted by F\(^\star \)’s normalizer during metaprogram evaluation. It returns the syntax of its argument at the time

is evaluated. Evaluating

substitutes all the free variables in

with their current values in the execution environment, suspends further evaluation, and returns the abstract syntax of the resulting term. For instance, evaluating

produces the abstract syntax of

.

Anti-quotations. Static quotations are useful for building big chunks of syntax concisely, but they are of limited use if we cannot combine them with existing bits of syntax. Subterms of a quotation are allowed to “escape” and be substituted by arbitrary expressions. We use the syntax

to denote an antiquoted

, where

must be an expression of type

in order for the quotation to be well-typed. For example,

creates syntax for an addition where one operand is the integer constant

and the other is the term represented by

.

Unquotation. Finally, we provide an effectful operation,

, which takes a term representation

and an expected type for it

(usually inferred from the context), and calls the F\(^\star \) type-checker to check and elaborate the term representation into a well-typed term.
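The term view and binder discipline described in this section can be approximated by a small Python sketch of a locally nameless representation: bound variables are de Bruijn indices, local variables carry names, and top-level names are fully qualified. The constructors are hypothetical and only mirror the three kinds of variables described above; a global counter supplies the fresh names needed when opening a binder, which is why opening is effectful. The helper `plus_one` imitates what an antiquotation does, splicing an existing term into syntax for an addition, with a made-up name for the addition operator.

```python
import itertools
from dataclasses import dataclass
from typing import Tuple, Union


@dataclass(frozen=True)
class BVar:   # bound variable, as a de Bruijn index
    index: int


@dataclass(frozen=True)
class Var:    # named local variable
    name: str


@dataclass(frozen=True)
class FVar:   # top-level fully qualified name
    qname: str


@dataclass(frozen=True)
class Const:
    value: int


@dataclass(frozen=True)
class App:
    fn: "Term"
    arg: "Term"


@dataclass(frozen=True)
class Abs:    # binder; its body refers to it as BVar(0)
    hint: str
    body: "Term"


Term = Union[BVar, Var, FVar, Const, App, Abs]

_fresh = itertools.count()  # opening binders needs fresh names, hence state


def subst_bvar(t: Term, depth: int, v: Var) -> Term:
    if isinstance(t, BVar):
        return v if t.index == depth else t
    if isinstance(t, App):
        return App(subst_bvar(t.fn, depth, v), subst_bvar(t.arg, depth, v))
    if isinstance(t, Abs):
        return Abs(t.hint, subst_bvar(t.body, depth + 1, v))
    return t


def abstract_var(t: Term, depth: int, name: str) -> Term:
    if isinstance(t, Var):
        return BVar(depth) if t.name == name else t
    if isinstance(t, App):
        return App(abstract_var(t.fn, depth, name), abstract_var(t.arg, depth, name))
    if isinstance(t, Abs):
        return Abs(t.hint, abstract_var(t.body, depth + 1, name))
    return t


def open_abs(t: Abs) -> Tuple[Var, Term]:
    """Replace the outermost bound variable by a fresh named variable."""
    v = Var(f"{t.hint}#{next(_fresh)}")
    return v, subst_bvar(t.body, 0, v)


def close_abs(v: Var, body: Term) -> Abs:
    """Inverse of open_abs: turn the named variable back into a binder."""
    return Abs(v.name.split("#")[0], abstract_var(body, 0, v.name))


def plus_one(t: Term) -> Term:
    """Antiquotation analogue: splice t into the syntax of `1 + _`."""
    return App(App(FVar("Hypothetical.op_Addition"), Const(1)), t)
```

Opening `Abs("x", plus_one(BVar(0)))` yields a fresh variable `v` and the body `plus_one(v)`; closing over `v` gives back the original term.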

3.4 Specifying and Verifying Metaprograms

Since we model metaprograms as a particular kind of effectful program within F\(^\star \), which is a program verifier, a natural question to ask is whether F\(^\star \) can specify and verify metaprograms. The answer is “yes, to a degree”.

To do so, we must use the WP calculus for the

effect:

-computations are given computation types of the form

, where

is the computation’s result type and

is a weakest-precondition transformer of type

=

. However, since WPs tend not to be very intuitive, we first define two variants of the

effect:

in “Hoare-style” with pre- and postconditions and

(which we have seen before), which only specifies the return type, but uses trivial pre- and postconditions. The

and

keywords below simply aid readability of pre- and postconditions—they are identity functions.

Previously, we only showed the simple type for the

primitive, namely

. In fact, in full detail and Hoare style, its type/specification is:

expressing that the primitive has no precondition, always fails with the provided exception, and does not modify the proof state. From the specifications of the primitives, and the automatically obtained Dijkstra monad, F\(^\star \) can already prove interesting properties about metaprograms. We show a few simple examples.

The following metaprogram is accepted by F\(^\star \) as it can conclude, from the type of

, that the assertion is unreachable, and hence

can have a trivial precondition (as

implies).

For

below, F\(^\star \) verifies that (given the precondition) the pattern match is exhaustive. The postcondition is also asserting that the metaprogram always succeeds without affecting the proof state, returning some unspecified goal. Calls to

must statically ensure that the goal list is not empty.

Finally, the

combinator below “splits” the goals of a proof state in two at a given index

, and focuses a different metaprogram on each. It includes a runtime check that the given

is non-negative, and raises an exception in the

effect otherwise. Afterwards, the call to the (pure)

function requires that

be statically known to be non-negative, a fact which can be proven from the specification for

and the effect definition, which defines the control flow.
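Python has no static verifier, so the sketch below can only approximate the mix of dynamic and static checks described above. The runtime test on the index raises a tactic failure, while the precondition of the pure splitting function, which F\(^\star \) discharges statically from the control flow, becomes an `assert` that the preceding check guarantees never fires. The names and the goal-list model (tactics map a list of goals to the goals that remain) are hypothetical simplifications.

```python
from typing import Callable, List, Tuple

Goals = List[str]


class TacticFailure(Exception):
    pass


def split_at(n: int, xs: Goals) -> Tuple[Goals, Goals]:
    """Pure helper. Precondition n >= 0: checked here, proven in F*."""
    assert n >= 0, "split_at requires a non-negative index"
    return xs[:n], xs[n:]


def divide(n: int, goals: Goals,
           left: Callable[[Goals], Goals],
           right: Callable[[Goals], Goals]) -> Goals:
    """Run `left` on the first n goals and `right` on the rest."""
    if n < 0:  # dynamic check: fail as a tactic, not as a crash
        raise TacticFailure("divide: negative index")
    # From here on n >= 0 holds, so split_at's precondition is met.
    first, rest = split_at(n, goals)
    return left(first) + right(rest)
```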

This enables a style of “lightweight” verification of metaprograms, where expressive invariants about their state and control-flow can be encoded. The programmer can exploit dynamic checks (

) and exceptions (

) or static ones (preconditions), or a mixture of them, as needed.

Due to type abstraction, though, the specifications of most primitives cannot provide complete detail about their behavior, and deeper specifications (such as ensuring a tactic will correctly solve a goal) can currently be neither proven nor even stated: doing so would require, at least, an internalization of the typing judgment of F\(^\star \). While this is an exciting possibility [3], we have for now only focused on verifying basic safety properties of metaprograms, which helps users detect errors early, and whose proofs the SMT solver can handle well. In principle, though, one could also write tactics to discharge the proof obligations of metaprograms.

4 Meta-F\(^\star \), Formally

We now describe the trust assumptions for Meta-F\(^\star \) (Sect. 4.1) and then how we reconcile tactics within a program verifier, where the exact shape of VCs is not given, nor known a priori by the user (Sect. 4.2).

4.1 Correctness and Trusted Computing Base (TCB)

As in any proof assistant, tactics and metaprogramming would be rather useless if they allowed users to “prove” invalid judgments, so care must be taken to ensure soundness. We begin with a taste of the specifics of F\(^\star \)’s static semantics, which influence the trust model for Meta-F\(^\star \), and then provide more detail on the TCB.

Proof Irrelevance in F\(^\star \). The following two rules for introducing and eliminating refinement types are key in F\(^\star \), as they form the basis of its proof irrelevance.

The \(\vDash \) symbol represents F\(^\star \)’s validity judgment [1] which, at a high-level, defines a proof-irrelevant, classical, higher-order logic. These validity hypotheses are usually collected by the type-checker, and then encoded to the SMT solver in bulk. Crucially, the irrelevance of validity is what permits efficient interaction with SMT solvers, since reconstructing F\(^\star \) terms from SMT proofs is unneeded.

As evidenced in the rules, validity and typing are mutually recursive, and therefore Meta-F\(^\star \) must also construct validity derivations. In the implementation, we model these validity goals as holes with a “squash” type [5, 53], where

, i.e., a refinement of

. Concretely, we model

as

using a unification variable. Meta-F\(^\star \) does not construct deep solutions to squashed goals: if they are proven valid, the variable

is simply solved by the

value

. At any point, any such irrelevant goal can be sent to the SMT solver. Relevant goals, on the other hand, cannot be sent to SMT.

Scripting the Typing Judgment. A consequence of validity proofs not being materialized is that type-checking is undecidable in F\(^\star \). For instance: does the unit value

solve the hole

? Well, only if \(\phi \) holds, a condition which no type-checker can effectively decide. This implies that the type-checker cannot, in general, rely on proof terms to reconstruct a proof. Hence, the primitives are designed to provide access to the typing judgment of F\(^\star \) directly, instead of building syntax for proof terms. One can think of F\(^\star \)’s type-checker as implementing one particular algorithmic heuristic of the typing and validity judgments, a heuristic which happens to work well in practice. For convenience, this default type-checking heuristic is also available to metaprograms: this is in fact precisely what the

primitive does. Having programmatic access to the typing judgment also provides the flexibility to tweak VC generation as needed, instead of leaving it to the default behavior of F\(^\star \). For instance, the

primitive implements T-Refine. When applied, it produces two new goals, including that the refinement actually holds. At that point, a metaprogram can run any arbitrary tactic on it, instead of letting the F\(^\star \) type-checker collect the obligation and send it to the SMT solver in bulk with others.

Trust. There are two common approaches for the correctness of tactic engines: (1) the de Bruijn criterion [6], which requires constructing full proofs (or proof terms) and checking them at the end, hence reducing trust to an independent proof-checker; and (2) the LCF style, which applies backwards reasoning while constructing validation functions at every step, reducing trust to primitive, forward-style implementations of the system’s inference rules.

As we wish to make use of SMT solvers within F\(^\star \), the first approach is not easy. Reconstructing the proofs SMT solvers produce, if any, back into a proper derivation remains a significant challenge (even despite recent progress, e.g., [17, 31]). Further, the logical encoding from F\(^\star \) to SMT and the solver itself are already part of F\(^\star \)’s TCB: shielding Meta-F\(^\star \) from them would not significantly increase the safety of the combined system.

Instead, we roughly follow the LCF approach and implement F\(^\star \)’s typing rules as the basic user-facing metaprogramming actions. However, instead of implementing the rules in forward style and using them to validate (untrusted) backwards-style tactics, we implement them directly in backwards style. That is, they run by breaking down goals into subgoals, instead of combining proven facts into new proven facts. Using LCF style makes the primitives part of the TCB. However, provided the primitives are sound, any combination of them is sound as well, and any user-provided metaprogram must be safe due to the abstraction imposed by the

effect, as discussed next.

Correct Evolutions of the Proof State. For soundness, it is imperative that tactics do not arbitrarily drop goals from the proof state, and only discharge them when they are solved, or when they can be solved by other goals tracked in the proof state. For a concrete example, consider the following program:

Here, Meta-F\(^\star \) will create an initial proof state with a single goal of the form  and begin executing the metaprogram. When applying the

primitive, the proof state transitions as shown below.

Here, a solution to the original goal has not yet been built, since it depends on the solution to the goal on the right-hand side. When it is solved with, say,

, we can solve our original goal with

. To formalize these dependencies, we say that a proof state \(\phi \) correctly evolves (via f ) to \(\psi \), denoted

. To formalize these dependencies, we say that a proof state \(\phi \) correctly evolves (via f ) to \(\psi \), denoted  , when there is a generic transformation f, called a validation, from solutions to all of \(\psi \)’s goals into correct solutions for \(\phi \)’s goals. When \(\phi \) has n goals and \(\psi \) has m goals, the validation f is a function from

, when there is a generic transformation f, called a validation, from solutions to all of \(\psi \)’s goals into correct solutions for \(\phi \)’s goals. When \(\phi \) has n goals and \(\psi \) has m goals, the validation f is a function from  into

into  . Validations may be composed, providing the transitivity of correct evolution, and if a proof state \(\phi \) correctly evolves (in any amount of steps) into a state with no more goals, then we have fully defined solutions to all of \(\phi \)’s goals. We emphasize that validations are not constructed explicitly during the execution of metaprograms. Instead we exploit unification metavariables to instantiate the solutions automatically.

. Validations may be composed, providing the transitivity of correct evolution, and if a proof state \(\phi \) correctly evolves (in any amount of steps) into a state with no more goals, then we have fully defined solutions to all of \(\phi \)’s goals. We emphasize that validations are not constructed explicitly during the execution of metaprograms. Instead we exploit unification metavariables to instantiate the solutions automatically.
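As a rough illustration of validations and their composition, the Python sketch below represents a correct evolution as a pair of goal lists together with an explicit validation from \(\mathsf{term}^m\) to \(\mathsf{term}^n\). Everything here is an assumption made for illustration: terms are plain strings, a goal is only an environment and a type, and the example (a hypothetical f : int → int obtained by an intro-like step followed by supplying 42) is not taken from Meta-F\(^\star \)'s implementation. As noted above, Meta-F\(^\star \) does not build validations explicitly; the sketch only makes the transitivity argument concrete.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

Term = str  # terms as plain strings: an assumption of this sketch

@dataclass(frozen=True)
class Goal:
    env: Tuple[Tuple[str, str], ...]  # binders (name, type)
    typ: str                          # type of the term to construct

# A validation maps solutions for the m goals of the new state
# to solutions for the n goals of the old state: term^m -> term^n.
Validation = Callable[[Tuple[Term, ...]], Tuple[Term, ...]]

@dataclass(frozen=True)
class Evolution:
    before: Tuple[Goal, ...]
    after: Tuple[Goal, ...]
    validation: Validation

def compose(first: Evolution, second: Evolution) -> Evolution:
    """Transitivity: phi evolves to psi via f, psi to chi via g, so phi to chi via f . g."""
    if first.after != second.before:
        raise ValueError("evolutions do not chain")
    return Evolution(first.before, second.after,
                     lambda sols: first.validation(second.validation(sols)))

# Hypothetical example: [ |- ?u1 : int -> int ]  ~>  [ x:int |- ?u2 : int ]
g1 = Goal((), "int -> int")
g2 = Goal((("x", "int"),), "int")
intro_step = Evolution((g1,), (g2,), lambda sols: (f"fun x -> {sols[0]}",))

# Solving ?u2 with 42 leaves no goals: this validation takes zero solutions.
exact_step = Evolution((g2,), (), lambda sols: ("42",))

whole = compose(intro_step, exact_step)
print(whole.after)           # ()
print(whole.validation(()))  # ('fun x -> 42',)
```

Composing the two steps reaches a state with no goals, so applying the composed validation to the empty tuple yields a complete solution for the original goal.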

Note that validations may construct solutions for more than one goal, i.e., their codomain is not a single term. This is required in Meta-F\(^\star \), where primitive steps may not only decompose goals into subgoals, but also combine goals. Currently, the only primitive providing this behavior is join, which finds a maximal common prefix of the environments of two irrelevant goals, reverts the “extra” binders in both goals and builds their conjunction. Combining goals using join is especially useful for sending multiple goals to the SMT solver in a single call. When two goals share common obligations, joining them before calling the SMT solver can result in a significantly faster proof.
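The behavior of join can be sketched in Python as follows. This is a minimal sketch under stated assumptions: goals are an environment of binders plus a proposition rendered as a string, binders carry a flag distinguishing hypotheses from variables, and the ASCII syntax for quantifiers, implications and conjunctions, as well as the example goals, are made up for illustration. Reverting turns each extra variable into a universal quantifier and each extra hypothesis into an implication, innermost binder first, and the resulting validation maps one solution to two.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

@dataclass(frozen=True)
class Binder:
    name: str
    ty: str
    is_hyp: bool = False  # True for a hypothesis h : P, False for a variable x : t

@dataclass(frozen=True)
class Goal:
    env: Tuple[Binder, ...]
    prop: str

def common_prefix(a: Tuple[Binder, ...], b: Tuple[Binder, ...]) -> Tuple[Binder, ...]:
    n = 0
    while n < min(len(a), len(b)) and a[n] == b[n]:
        n += 1
    return a[:n]

def revert(extra: Tuple[Binder, ...], prop: str) -> str:
    """Close prop over the extra binders, innermost (last) binder first."""
    for b in reversed(extra):
        if b.is_hyp:
            prop = f"({b.ty} ==> {prop})"
        else:
            prop = f"(forall ({b.name}:{b.ty}). {prop})"
    return prop

def join(g1: Goal, g2: Goal) -> Tuple[Goal, Callable[[Tuple[str, ...]], Tuple[str, ...]]]:
    prefix = common_prefix(g1.env, g2.env)
    p1 = revert(g1.env[len(prefix):], g1.prop)
    p2 = revert(g2.env[len(prefix):], g2.prop)
    joined = Goal(prefix, f"({p1} /\\ {p2})")

    def validation(sols: Tuple[str, ...]) -> Tuple[str, ...]:
        # One solution in, two out: the codomain is not a single term.
        # Both goals are irrelevant, so each only needs the trivial proof "()".
        return ("()", "()")

    return joined, validation

x = Binder("x", "nat")
ga = Goal((x, Binder("y", "nat"), Binder("h", "y > 0", True)), "x / y >= 0")
gb = Goal((x, Binder("z", "int")), "z * z >= 0")
joined, v = join(ga, gb)
print(joined.env)   # (Binder(name='x', ty='nat', is_hyp=False),)
print(joined.prop)  # ((forall (y:nat). (y > 0 ==> x / y >= 0)) /\ (forall (z:int). z * z >= 0))
```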

We check that every primitive action respects the correct-evolution preorder \(\preccurlyeq \). This relies on the primitives modeling F\(^\star \)’s typing rules. For example, and unsurprisingly, the following rule for typing abstractions is what justifies the intro primitive:

\[ \frac{\varGamma , x{:}t \vdash e : t'}{\varGamma \vdash \lambda (x{:}t).\, e : (x{:}t) \rightarrow t'}\;\;\text{(T-Fun)} \]

Then, for the proof state evolution above, the validation function f is the (mathematical, meta-level) function taking a term of type int (the solution for the goal created by intro) and building syntax for its abstraction over x. Further, the intro primitive respects the correct-evolution preorder by the very typing rule (T-Fun) from which it is derived. In this manner, every typing rule induces a syntax-building metaprogramming step. Our primitives come from this dual interpretation of typing rules, which ensures that logical consistency is preserved.
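The dual reading of (T-Fun) can be sketched with mutable unification metavariables, so that no validation function is ever built: an intro-like step solves ?u with an abstraction whose body is a fresh metavariable ?u', and solving ?u' later completes ?u automatically. The term and type representations (a single base type int, non-dependent arrows) and the helper names typecheck, intro, exact and zonk are assumptions of this Python sketch; they only mimic the behavior described in the text, not Meta-F\(^\star \)'s actual API.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple, Union

@dataclass
class Arrow:            # non-dependent function type dom -> cod
    dom: "Ty"
    cod: "Ty"

Ty = Union[str, Arrow]  # base types are names such as "int"

@dataclass
class Lit:
    value: int

@dataclass
class Var:
    name: str

@dataclass
class Lam:
    var: str
    ty: Ty
    body: "Tm"

@dataclass
class Meta:             # unification metavariable, solved by assignment
    name: str
    solution: Optional["Tm"] = None

Tm = Union[Lit, Var, Lam, Meta]

@dataclass
class Goal:
    env: List[Tuple[str, Ty]]
    ty: Ty
    witness: Meta       # the metavariable this goal must solve

def typecheck(env: List[Tuple[str, Ty]], t: Tm) -> Ty:
    if isinstance(t, Lit):
        return "int"
    if isinstance(t, Var):
        return dict(env)[t.name]
    if isinstance(t, Lam):  # (T-Fun): env, x:t |- e : t'  yields  env |- fun x -> e : t -> t'
        return Arrow(t.ty, typecheck(env + [(t.var, t.ty)], t.body))
    if t.solution is None:
        raise TypeError(f"unsolved metavariable {t.name}")
    return typecheck(env, t.solution)

_fresh = 0

def fresh_meta() -> Meta:
    global _fresh
    _fresh += 1
    return Meta(f"?u{_fresh}")

def intro(goal: Goal, x: str) -> Goal:
    """(T-Fun) read bottom-up: solve ?u with fun x -> ?u' and return the goal for ?u'."""
    if not isinstance(goal.ty, Arrow):
        raise TypeError("intro: goal is not a function type")
    body = fresh_meta()
    goal.witness.solution = Lam(x, goal.ty.dom, body)
    return Goal(goal.env + [(x, goal.ty.dom)], goal.ty.cod, body)

def exact(goal: Goal, t: Tm) -> None:
    if typecheck(goal.env, t) != goal.ty:
        raise TypeError("exact: solution has the wrong type")
    goal.witness.solution = t

def zonk(t: Tm) -> Tm:
    """Substitute solved metavariables to read off the final term."""
    if isinstance(t, Meta):
        return zonk(t.solution) if t.solution is not None else t
    if isinstance(t, Lam):
        return Lam(t.var, t.ty, zonk(t.body))
    return t

root = Meta("?u0")
g0 = Goal([], Arrow("int", "int"), root)
g1 = intro(g0, "x")       # new goal: [x:int |- ?u1 : int]
exact(g1, Lit(42))        # solving ?u1 also completes ?u0
print(zonk(root))         # Lam(var='x', ty='int', body=Lit(value=42))
```

Reading the rule bottom-up gives the intro step, while reading it top-down gives the Lam case of the checker; the final check that the solved term has the original goal's type mirrors the guarantee the preorder is meant to provide.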

Since the correct-evolution relation \(\preccurlyeq \) is a preorder, and every metaprogramming primitive we provide the user evolves the proof state according to \(\preccurlyeq \), it is trivially the case that the final proof state returned by a (successful) computation is a correct evolution of the initial one. That means that when the metaprogram terminates, one has indeed broken down the proof obligation correctly, and is left with a (hopefully) simpler set of obligations to fulfill. Note that since \(\preccurlyeq \) is a preorder, the metaprogramming effect of Meta-F\(^\star \) provides an interesting example of monotonic state [2].

4.2 Extracting Individual Assertions

As discussed, the logical context of a goal processed by a tactic is not always syntactically evident in the program. And, as shown by the example from Sect. 3.4, some obligations crucially depend on the control flow of the program. Hence, the proof state must include these assumptions if proving the assertion is to succeed. Below, we describe how Meta-F\(^\star \) finds proper contexts in which to prove the assertions, including control-flow information. Notably, this process is defined over logical formulae and does not depend at all on F\(^\star \)’s WP calculus or VC generator: we believe it should be applicable to any VC generator.

As seen in Sect. 2.1, the basic mechanism by which Meta-F\(^\star \) attaches a tactic to a specific sub-goal is \(\mathsf{assert}\ \phi \ \mathsf{by}\ \tau \). Our encoding of this expression is built similarly to F\(^\star \)’s existing \(\mathsf{assert}\ \phi \) construct, which is simply sugar for a pure function of type \(\phi {:}\mathsf{prop} \rightarrow \mathsf{Lemma}\ (\mathsf{requires}\ \phi )\ (\mathsf{ensures}\ \phi )\), and which essentially introduces a cut in the generated VC. That is, the term \((\mathsf{assert}\ \phi ;\ e)\) roughly produces the verification condition \(\phi \wedge (\phi \Rightarrow \mathit{VC}_e)\), where \(\mathit{VC}_e\) is the VC of e, requiring a proof of \(\phi \) at this point, and assuming \(\phi \) in the continuation. For Meta-F\(^\star \), we aim to keep this style while allowing asserted formulae to be decorated with user-provided tactics that are tasked with proving or pre-processing them. We do this in three steps.

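First, we define the following “phantom” predicate:

\[ \mathsf{with\_tactic}\ \tau \ \phi \;\triangleq \; \phi \]

Here \(\mathsf{with\_tactic}\ \tau \ \phi \) simply associates the tactic \(\tau \) with \(\phi \), and is equivalent to \(\phi \) by its definition. Next, we implement a lemma with the specification \(\mathsf{Lemma}\ (\mathsf{requires}\ (\mathsf{with\_tactic}\ \tau \ \phi ))\ (\mathsf{ensures}\ \phi )\), for any formula \(\phi \) and tactic \(\tau \), and desugar \(\mathsf{assert}\ \phi \ \mathsf{by}\ \tau \) into a call to it. This lemma is trivially provable by F\(^\star \), since \(\mathsf{with\_tactic}\ \tau \ \phi \) unfolds to \(\phi \).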
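The cut-style VCs can be sketched as a right fold over a straight-line sequence of assertions. In this Python sketch, the formula datatype, the statement encoding and the tactic name canon are hypothetical; it only mirrors the shapes \(\phi \wedge (\phi \Rightarrow \mathit{VC}_e)\) for a plain assertion and \(\mathsf{with\_tactic}\ \tau \ \phi \wedge (\phi \Rightarrow \mathit{VC}_e)\) for a tactic-decorated one, and checks that unfolding the phantom marker recovers the untagged VC.

```python
from dataclasses import dataclass
from typing import List, Tuple, Union

@dataclass(frozen=True)
class Atom:
    name: str

@dataclass(frozen=True)
class And:
    left: "F"
    right: "F"

@dataclass(frozen=True)
class Implies:
    hyp: "F"
    concl: "F"

@dataclass(frozen=True)
class WithTactic:       # phantom marker: with_tactic tac body is just body
    tactic: str
    body: "F"

F = Union[Atom, And, Implies, WithTactic]

# ("assert", phi) or ("assert_by", phi, tactic_name)
Stmt = Tuple

def vc(stmts: List[Stmt], final: F) -> F:
    """VC of `s1; ...; sn; e`, where `final` stands for VC_e."""
    goal = final
    for stmt in reversed(stmts):
        if stmt[0] == "assert":        # phi /\ (phi ==> VC)
            phi = stmt[1]
            goal = And(phi, Implies(phi, goal))
        elif stmt[0] == "assert_by":   # with_tactic tac phi /\ (phi ==> VC)
            _, phi, tac = stmt
            goal = And(WithTactic(tac, phi), Implies(phi, goal))
        else:
            raise ValueError(f"unknown statement: {stmt[0]}")
    return goal

def unfold(f: F) -> F:
    """Unfold every with_tactic marker using its definition."""
    if isinstance(f, WithTactic):
        return unfold(f.body)
    if isinstance(f, And):
        return And(unfold(f.left), unfold(f.right))
    if isinstance(f, Implies):
        return Implies(unfold(f.hyp), unfold(f.concl))
    return f

P, Q, VCe = Atom("P"), Atom("Q"), Atom("VC_e")
tagged = vc([("assert", P), ("assert_by", Q, "canon")], VCe)
print(tagged)
```

Only the left conjunct carries the marker; the copy of \(\phi \) assumed in the continuation stays untagged.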

Given this specification, the term \((\mathsf{assert}\ \phi \ \mathsf{by}\ \tau ;\ e)\) roughly produces the verification condition \(\mathsf{with\_tactic}\ \tau \ \phi \wedge (\phi \Rightarrow \mathit{VC}_e)\), with a tagged left sub-goal, and \(\phi \) as a hypothesis in the right one. Importantly, F\(^\star \) keeps the \(\mathsf{with\_tactic}\) marker uninterpreted until the VC needs to be discharged. At that point, the VC may contain several annotated subformulae. For example, suppose the VC is \(\psi \) below, where we distinguish an ambient context of variables and hypotheses \(\varDelta \):

\[ \psi \;\triangleq \; \varDelta \vdash X \Rightarrow \forall (x{:}t).\; \big (\mathsf{with\_tactic}\ \tau _1\ R \wedge (R \Rightarrow \mathit{VC}_e)\big ) \]

In order to run the tactic \(\tau _1\) on \(R\), it must first be “split out”. To do so, all logical information “visible” for \(R\) (i.e., the premises of the implications traversed and the binders introduced by quantifiers) must be included. As for any program verifier, these hypotheses include the control-flow information, postconditions, and any other logical fact that is known to be valid at the program point where the corresponding assertion appears. All of them are collected into \(\varDelta \) as the term is traversed. In this case, the VC for \(R\) is:

\[ \gamma _R \;\triangleq \; \varDelta , X, x{:}t \vdash R \]

Afterwards, this obligation is removed from the original VC by replacing it with \(\top \), leaving a “skeleton” VC \(\psi '\) with all remaining facts:

\[ \psi ' \;\triangleq \; \varDelta \vdash X \Rightarrow \forall (x{:}t).\; \big (\top \wedge (R \Rightarrow \mathit{VC}_e)\big ) \]

The validity of \(\gamma _R\) and \(\psi '\) implies that of \(\psi \). F\(^\star \) also recursively descends into \(\gamma _R\) and \(\psi '\), in case there are more \(\mathsf{with\_tactic}\) markers in them. Then, tactics are run on the split VCs (e.g., \(\tau _1\) on \(\gamma _R\)) to break them down (or solve them). All remaining goals, including the skeleton, are sent to the SMT solver.
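The splitting phase can be sketched in Python over a small formula datatype, which is an assumption of this sketch rather than F\(^\star \)'s internal representation. The hypothetical split function descends only through strictly-positive positions (both conjuncts, the conclusion of an implication, and the body of a universal quantifier), records the premises and binders it crosses, replaces each marked subformula with \(\top \) in the skeleton, and recurses into the marked formula itself. Tactics are stand-ins: any Python function from a context and a goal to the goals it leaves. Everything left over, skeleton included, is what would be sent to the SMT solver. The example VC has a premise X, a binder x:t, one marked assertion R, and the continuation R ⇒ VC_e.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple, Union

@dataclass(frozen=True)
class Atom:
    name: str

@dataclass(frozen=True)
class Top:
    pass

@dataclass(frozen=True)
class And:
    left: "F"
    right: "F"

@dataclass(frozen=True)
class Implies:
    hyp: "F"
    concl: "F"

@dataclass(frozen=True)
class Forall:
    var: str
    ty: str
    body: "F"

@dataclass(frozen=True)
class WithTactic:
    tactic: str
    body: "F"

F = Union[Atom, Top, And, Implies, Forall, WithTactic]
Ctx = Tuple[object, ...]          # hypotheses (formulas) and binders (name, type)
Goal = Tuple[Ctx, str, F]         # (context, tactic name, formula)

def split(f: F, ctx: Ctx = ()) -> Tuple[F, List[Goal]]:
    """Return the skeleton of f and the marked obligations split out of it."""
    if isinstance(f, WithTactic):
        body, inner = split(f.body, ctx)          # descend into the obligation too
        return Top(), [(ctx, f.tactic, body)] + inner
    if isinstance(f, And):
        left, gl = split(f.left, ctx)
        right, gr = split(f.right, ctx)
        return And(left, right), gl + gr
    if isinstance(f, Implies):                    # premise becomes a hypothesis
        concl, gc = split(f.concl, ctx + (f.hyp,))
        return Implies(f.hyp, concl), gc
    if isinstance(f, Forall):                     # binder enters the context
        body, gb = split(f.body, ctx + ((f.var, f.ty),))
        return Forall(f.var, f.ty, body), gb
    return f, []                                  # atoms and Top: nothing to split

Tactic = Callable[[Ctx, F], List[Tuple[Ctx, F]]]

def obligations_for_smt(vc: F, tactics: Dict[str, Tactic]) -> List[Tuple[Ctx, F]]:
    skeleton, goals = split(vc)
    remaining = [((), skeleton)]
    for ctx, tac, goal in goals:
        remaining += tactics[tac](ctx, goal)      # a tactic may solve or break down its goal
    return remaining

X, R, VCe = Atom("X"), Atom("R"), Atom("VC_e")
psi = Implies(X, Forall("x", "t", And(WithTactic("tau1", R), Implies(R, VCe))))
skeleton, goals = split(psi)
print(skeleton)
print(goals)   # [((Atom(name='X'), ('x', 't')), 'tau1', Atom(name='R'))]
```

Note that the assumption R in the continuation is copied into the skeleton unchanged; only the split-out obligation is handed to the tactic.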

Note that while the obligation to prove \(R\), in \(\gamma _R\), is preprocessed by the tactic \(\tau _1\), the assumption \(R\) for the continuation of the code, in \(\psi '\), is left as-is. This is crucial for tactics such as the canonicalizer from Sect. 2.1: if the skeleton \(\psi '\) contained an assumption for the canonicalized equality, it would not help the SMT solver show the uncanonicalized postcondition.

However, not all nodes marked with \(\mathsf{with\_tactic}\) are proof obligations. Suppose \(X\) in the previous VC was given as \(\mathsf{with\_tactic}\ \tau _2\ X\). In this case, one certainly does not want to attempt to prove \(X\), since it is a hypothesis. While it would be sound to prove it and replace it by \(\top \), doing so is useless at best, and usually irreparably affects the system. Consider asserting the tautology \((\mathsf{with\_tactic}\ \tau \ \bot ) \Rightarrow \bot \): treating the marked hypothesis as an obligation would require proving \(\bot \), so a valid assertion would be rejected.

Hence, F\(^\star \) splits such obligations only in strictly-positive positions. On all others, F\(^\star \) simply drops the \(\mathsf{with\_tactic}\) marker, e.g., by just unfolding its definition. For regular uses of the \(\mathsf{assert}\ \ldots \ \mathsf{by}\) construct, however, all occurrences are strictly-positive. It is only when (expert) users use the \(\mathsf{with\_tactic}\) marker directly that the above discussion might become relevant.
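Dropping markers outside strictly-positive positions can be sketched as a pass that keeps with_tactic only where a strictly-positive traversal would look (conjuncts, conclusions of implications, and inside other kept markers) and unfolds it everywhere else, notably in hypotheses. The datatype and function names in this Python sketch are assumptions for illustration. The example is a tautology of the form (with_tactic τ ⊥) ⇒ ⊥: after the pass no obligation remains, whereas a naive extractor that also looked inside hypotheses would ask a tactic to prove ⊥.

```python
from dataclasses import dataclass
from typing import List, Tuple, Union

@dataclass(frozen=True)
class Atom:
    name: str

@dataclass(frozen=True)
class And:
    left: "F"
    right: "F"

@dataclass(frozen=True)
class Implies:
    hyp: "F"
    concl: "F"

@dataclass(frozen=True)
class WithTactic:
    tactic: str
    body: "F"

F = Union[Atom, And, Implies, WithTactic]
FALSE = Atom("False")

def unfold_all(f: F) -> F:
    """Drop every marker: with_tactic tac p is p by definition."""
    if isinstance(f, WithTactic):
        return unfold_all(f.body)
    if isinstance(f, And):
        return And(unfold_all(f.left), unfold_all(f.right))
    if isinstance(f, Implies):
        return Implies(unfold_all(f.hyp), unfold_all(f.concl))
    return f

def keep_positive_markers(f: F) -> F:
    """Keep markers in strictly-positive positions only; unfold all others."""
    if isinstance(f, WithTactic):
        return WithTactic(f.tactic, keep_positive_markers(f.body))
    if isinstance(f, And):
        return And(keep_positive_markers(f.left), keep_positive_markers(f.right))
    if isinstance(f, Implies):
        # A premise is an assumption, not an obligation: never try to prove it.
        return Implies(unfold_all(f.hyp), keep_positive_markers(f.concl))
    return f

def marked(f: F) -> List[Tuple[str, F]]:
    """Marked subformulae found by a strictly-positive traversal."""
    if isinstance(f, WithTactic):
        return [(f.tactic, f.body)] + marked(f.body)
    if isinstance(f, And):
        return marked(f.left) + marked(f.right)
    if isinstance(f, Implies):
        return marked(f.concl)
    return []

def marked_naive(f: F) -> List[Tuple[str, F]]:
    """The rejected strategy: also treats marked hypotheses as obligations."""
    if isinstance(f, WithTactic):
        return [(f.tactic, f.body)] + marked_naive(f.body)
    if isinstance(f, (And, Implies)):
        parts = (f.left, f.right) if isinstance(f, And) else (f.hyp, f.concl)
        return [g for p in parts for g in marked_naive(p)]
    return []

taut = Implies(WithTactic("tau", FALSE), FALSE)   # (with_tactic tau False) ==> False
print(marked_naive(taut))                          # [('tau', Atom(name='False'))]: unprovable
print(marked(keep_positive_markers(taut)))         # []
```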

Formally, the soundness of this whole approach is given by the following metatheorem, which justifies the splitting out of sub-assertions, and by the correctness of evolution detailed in Sect. 4.1. The proof of Theorem 1 is straightforward, and included in the appendix. We expect an analogous property to hold in other verifiers as well (in particular, it holds for first-order logic).

Theorem 1

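Let E be a context with \(\varGamma \vdash E : prop \Rightarrow prop\), and \(\phi \) a squashed proposition such that \(\varGamma \vdash \phi : prop\). Then the following holds:

\[ \varGamma \vdash E[\top ] \;\;\wedge \;\; \varGamma , \gamma (E) \vdash \phi \quad \Longrightarrow \quad \varGamma \vdash E[\phi ] \]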

where \(\gamma (E)\) is the set of binders E introduces. If E is strictly-positive, then the reverse implication holds as well.
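For intuition, Theorem 1 can be checked exhaustively in a propositional fragment. The Python sketch below assumes contexts E built from atoms, \(\top \), \(\bot \), conjunction and implication with exactly one hole, takes \(\gamma (E)\) to be the premises of the implications whose conclusion contains the hole (a propositional stand-in for the binders E introduces), and decides validity with truth tables. This is only a finite sanity check of the statement under those assumptions, not a proof, and it ignores quantifiers and F\(^\star \)'s type theory.

```python
from dataclasses import dataclass
from itertools import product
from typing import Dict, List, Sequence, Union

@dataclass(frozen=True)
class Atom:
    name: str

@dataclass(frozen=True)
class Const:
    value: bool                     # Const(True) is Top, Const(False) is Bot

@dataclass(frozen=True)
class And:
    left: "F"
    right: "F"

@dataclass(frozen=True)
class Implies:
    hyp: "F"
    concl: "F"

@dataclass(frozen=True)
class Hole:
    pass

F = Union[Atom, Const, And, Implies, Hole]
TOP, BOT = Const(True), Const(False)

def plug(e: F, phi: F) -> F:
    """E[phi]: fill the hole of context e with phi."""
    if isinstance(e, Hole):
        return phi
    if isinstance(e, And):
        return And(plug(e.left, phi), plug(e.right, phi))
    if isinstance(e, Implies):
        return Implies(plug(e.hyp, phi), plug(e.concl, phi))
    return e

def has_hole(e: F) -> bool:
    if isinstance(e, Hole):
        return True
    if isinstance(e, And):
        return has_hole(e.left) or has_hole(e.right)
    if isinstance(e, Implies):
        return has_hole(e.hyp) or has_hole(e.concl)
    return False

def gamma(e: F) -> List[F]:
    """Premises of the implications whose conclusion contains the hole."""
    if isinstance(e, And):
        return gamma(e.left) if has_hole(e.left) else gamma(e.right)
    if isinstance(e, Implies):
        if has_hole(e.concl):
            return [e.hyp] + gamma(e.concl)
        return gamma(e.hyp)
    return []

def holds(f: F, v: Dict[str, bool]) -> bool:
    if isinstance(f, Atom):
        return v[f.name]
    if isinstance(f, Const):
        return f.value
    if isinstance(f, And):
        return holds(f.left, v) and holds(f.right, v)
    if isinstance(f, Implies):
        return (not holds(f.hyp, v)) or holds(f.concl, v)
    raise ValueError("formula still contains a hole")

def valid(f: F, names: Sequence[str]) -> bool:
    return all(holds(f, dict(zip(names, bits)))
               for bits in product([False, True], repeat=len(names)))

def premises(e: F, phi: F, names: Sequence[str]) -> bool:
    """Left side of Theorem 1: E[Top] is valid and phi is valid under gamma(E)."""
    hyps = TOP
    for h in gamma(e):
        hyps = And(hyps, h)
    return valid(plug(e, TOP), names) and valid(Implies(hyps, phi), names)

names = ["X", "R"]
X, R = Atom("X"), Atom("R")
phis = [X, R, Implies(X, R), TOP, BOT]
E_pos = Implies(X, And(Hole(), Implies(R, R)))   # strictly positive
E_neg = Implies(Hole(), BOT)                     # hole in a premise
for phi in phis:
    assert premises(E_pos, phi, names) == valid(plug(E_pos, phi), names)      # both directions
    assert not premises(E_neg, phi, names) or valid(plug(E_neg, phi), names)  # forward only
# The reverse fails for E_neg: E_neg[Bot] = Bot ==> Bot is valid, but E_neg[Top] is not.
print(valid(plug(E_neg, BOT), names), premises(E_neg, BOT, names))   # True False
```

The counterexample for the non-strictly-positive context has the same shape as the tautology discussed above, where the hole sits in a hypothesis.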

5 Executing Metaprograms Efficiently