Abstract

Assessments within the context of inquiry-based learning pose a special challenge: On the one hand, in the “Bologna degree programs,” the practice of academic assessment generally lags behind the requirement that not just teaching and learning be done in a competency-oriented manner, but assessment as well; this has an especially grave impact on issues of performance recording relating to inquiry-based learning. On the other hand, the concept of inquiry-based learning allows teaching, learning and research to be combined in multiple formats and therefore needs to be differentiated – with corresponding consequences for research-related assessment. In the face of such challenges, the first step taken in this article is to propose a model of the possible combinations of teaching, learning and research, which suggests that a distinction can be made between at least three types of inquiry-based learning: learning to understand research, practicing research and conducting independent research. In a second step, the proposed model is expanded to include assessment.

1 Competence-Oriented Assessment: Claim and Reality

Competence orientation has been a guiding idea for the design of degree programs since the beginning of the Bologna Process (Schaper 2012): Bachelor’s and master’s degree programs are modularized. A module should be designed in such a way that, in a best-case scenario, multiple courses jointly foster subject-specific and/or interdisciplinary competences, which can be covered in one final exam at the end of the module, and which can be assessed in terms of quality and level. Lurking behind the seemingly clear concept of competence, however, are different versions and models (Reinmann 2011; Tenberg 2014, p. 19 et seq.). At most, there is consensus that competences encompass several components, namely abilities, skills and attitudes (e.g. values, motives) in addition to knowledge. Although generally recognized, it is often forgotten that competences are dispositions relating to performance and, by definition, cannot be directly measured. Rather, one infers an (invisible) underlying competence from a performance, in other words from a visible achievement (Wilbers 2013, p. 302). Therefore, the common term proof of performance is quite appropriate for assessment within a higher-education context, especially as it draws attention to the non-trivial challenge that, strictly speaking, the conclusion about competence based on performance is still pending when the proof of performance is provided.

Precisely what competence-oriented assessments actually are remains largely undetermined: “Instead of testing on the content that has been taught, it is now necessary to test and assess what the learner is capable of in terms of competences at a given point of time during his/her academic studies or, respectively, at the end of study modules” (Schaper and Hilkenmeier 2013, p. 7). This description is not very enlightening, especially since even before the Bologna Process, exams did not “test content” as such; rather, they measured how well students command content, that is, what they know or how they can apply their knowledge to solve a task. An ability is likewise tied to content, meaning that any discussion of competence which suggests that content no longer plays a role is, ultimately, misleading (Ladenthin 2011). If, despite the critique of competence orientation, one goes along with the request (and there are good reasons for doing so) for assessments that measure not only knowledge but also its flexible application as well as abilities, and possibly even attitudes, then another question arises, namely: When do these components constitute a special academic competence that students should be expected to acquire in and from a course of study at a university? According to a much-cited suggestion, academic competence (a) is reflective, (b) can be explicated (as a prerequisite for reflexivity) and (c) is based on knowledge acquired through theory and empiricism; (d) it is developed primarily, though not exclusively, from the perspective of the selected discipline, (e) it is geared towards mastering novel and complex situations and problems and (f) it contributes to flexible employability in a field of activity that is near to the chosen discipline (Wick 2011, p. 5 et seq.; cf. also Schaper 2012, p. 22 et seq.).

A further requirement in the course of competence orientation is to base the design of assessments as much as possible on the expected learning outcomes that have been described as precisely as possible, and from there, to create assessment situations, tasks and forms (e.g. Bachmann 2011). Assessments must thus be coordinated with the courses in the sense of the “constructive alignment” (Biggs and Tang 2011) in such a way that, together with the goals (learning outcomes), they result as far as possible in a coherent whole. From an organizational point of view, it is expected that assessments take place at the end of each module in order to continuously monitor and evaluate knowledge and skills, and possibly attitudes, during the course of studies.

Continuous, competence-oriented and accurate assessment may sound desirable at first: It promises to relieve students of a few, all-important periods of time in which summative examinations are held (with legal consequences); it can be expected that it will widen the scope of assessment objectives, which will no longer be limited to merely querying students about fact-based knowledge; and it sounds like multiple forms of examination that will do justice to the various facets of academic competence.

However, current assessment practice within German institutions of higher learning is far from meeting these expectations. One of the big problems is the quantity of exams. Module exams certainly do remedy problems inherent in having only a few exam periods (such as the Zwischenprüfung and Abschlussprüfung, i.e. the interim exam and the final exam before graduation). Conversely, however, module exams mean that assessments are distributed throughout a course of study and, because of their large number, constitute a continuous burden. In addition, according to Bologna, one theoretically presumes that, as a general rule, module exams – by virtue of being integrated assessments – measure competences that are acquired in multiple courses. In practice, however, the types of assessment that are given are more often additive assessments, in which tasks from various courses are strung together (as in the case of written assessments); or sub-module exams, in which every course ends with an exam (Pietzonka 2014). It is therefore not uncommon for students to complete 50 to 60 assessments in the form of small final examinations during their bachelor’s degree, for example, all of which have legal consequences in that they are incorporated into the student’s overall grade.

The quantity of assessments is a burden not only on students, but also on instructors, and naturally influences the quality of assessments. The more assessments that must be planned, carried out and evaluated, the more efficient the forms of assessment must be in order to cope with that number (Franke and Handke 2012, p. 155). Especially efficient are those assessments that work with closed questions and are implemented electronically before being automatically evaluated. These forms of assessment can assess knowledge, but are not well suited – in their usual and widespread form – for measuring the required competences. In addition to written exams, assessment practice also includes term papers and presentations, depending on the discipline; there are sometimes also oral and practical exams. How these forms of assessment live up to the claim of measuring academic competence in particular remains an open question. Overall, the theoretically possible diversity faces the risk of practical impoverishment given the problems in terms of the quantity and quality of assessments.

2 Learning, Teaching, Researching – More Than One Connection

Certainly, assessments within the context of inquiry-based learning cannot be decoupled from the existing assessment situation with reference to the requirements and problems as outlined above. They can serve as a special impetus for assessment practice, however, because it is specifically inquiry-based learning or, respectively, various connections between learning, teaching and research – or as summarized by Huber (2014, p. 28), “research related” – that make academic skills development possible in the first place. To put it another way: “Towards what should a university education be oriented if not research?” (Gerdenitsch 2015, p. 89). An understanding of the diversity of these connections from the perspective of both learning and teaching is, in my view, a prerequisite for designing assessments that are suitable for measuring genuine academic achievements and assessing competence.

2.1 Diversity from the Perspective of Learning and Teaching

It has often been lamented that inquiry-based learning is losing its contours due to its frequent and varied use in higher education instruction (cf. Huber 2009, 2014). Literally speaking, it only makes sense to talk about inquiry-based learning when students do their own research and learning. This means that all phases of research, from formulating a question and researching the associated state of research, to planning a methodical design and the implementation thereof, to describing and presenting the findings, are implemented by students alone or collaboratively in a team (observable for all parties involved). Inquiry-based learning (learning by performing one’s own research) means that students learn by scrutinizing the matter and posing independently substantiated questions (asking), selecting from among various methodical options in order to answer their questions (deciding), and implementing the goals and plans that arise as a result (acting). The learning processes being activated here are productive in the sense that they not only lead to new mental structures for the learners, but also prompt those learners to produce knowledge in the form of visible artifacts (summaries of existing findings, research plans, survey instruments, presentations of results, etc.).

As early as the 1970s – the first heyday of inquiry-based learning (cf. BAK 1970) – “genetic learning” was mentioned and legitimized in addition to learning by performing one’s own research. Learning within the context of research is genetic when a research process is intellectually reconstructed and subsequently understood without producing any visible artifacts. This learning method is by no means passive, because it is inconceivable that learning could occur without students being at least mentally active. It makes more sense to call this learning method receptive (Prange 2005, p. 95), a label which implies mental activity. As a rule, receptive learning takes place by observing what one wishes to learn, insofar as someone is able to demonstrate the material; by listening, insofar as someone can present the material orally; and/or by reading, if the knowledge that one wishes to acquire is in written form. Here, the connection between research and learning is that students learn to understand research by being taught how research is possible and accomplished, what Huber (2014, p. 24) refers to as “research-oriented.”

Receptive and productive learning cannot be clearly differentiated from one another. Rather, reception and production constitute the poles of a learning continuum and are thus orientation markers showing the direction in which a learning method goes. It is possible to classify all forms of practicing learning in the middle of this continuum. Various phases of research require knowledge and skills that can be practiced, even without implementing an entire research cycle oneself or as part of a team. This knowledge and these skills are, in part, very specific to academic thinking and activity; however, they are also applicable to non-academic areas. Practice may involve how to find, read and excerpt scholarly texts and how to classify them into the landscape of academic genres. It is also possible to practice methods in the respective chosen discipline: empirical methods, or hermeneutic, historical and other methods. Even writing scholarly texts, visualizing findings or presenting scholarly content can be practiced. Practicing means that students imitate what they are shown, try out what they have just learned about and develop routines that will become part of an approach. Practicing research – and this may be heading in the direction that Huber (2014, p. 24 et seq.) referred to as “research-oriented” – is more than, and different from, learning to understand research and may (but does not have to) be a prerequisite to performing one’s own research.

If we make a distinction between three types of connections between research and learning (specifically, learning to understand research, practicing research and performing research oneself), this would require different forms of teaching or, respectively, different forms of stimulating and supporting these learning methods along the continuum between reception and production.

From the perspective of teaching, receptive learning requires that students be taught how to conduct research. This may be done directly, in that instructors explicitly present, explain and clarify – using words and pictures or multimedia – or indirectly, in that the opportunity is used to draw attention to the logic, phases and specifics of research, for example. Teaching as a means of communication is a teaching method that is mainly pursued in lectures and seminars with a high proportion of mediating activities. This teaching method is, in fact, widespread and does not have a good reputation. Nevertheless, we must distinguish between the teaching method (receptive learning) and its potential (imparting knowledge) on the one hand, and the prevalence (dominance of imparting information through too many lectures) and implementation (poor imparting of information in the form of lectures that fail to promote learning) on the other.

From the perspective of educators, by contrast, productive learning requires encouraging students in their research activities, instructing them as needed, creating contexts and resources, and supporting the process of learning through research through these (or other) means. The degree of support thereby provided may vary: Needs-based, more intensive instruction in individual phases does not necessarily mean that the character of self-research is lost, as long as the goal is maintained. Students also learn independent research by experiencing research and actively (assisting in) shaping it. Teaching as a means of supporting inquiry-based learning is common in project seminars, in (independent) projects, and possibly also in colloquia, if these are arranged along the lines of research activity.

From the teaching perspective, it is particularly clear that learning through practice has both receptive and productive aspects. In order to practice research, one needs learning environments that allow students to imitate something, which requires that something be demonstrated and thus conveyed, while at the same time students must be allowed to try things out and sometimes build up routines; this requires support and feedback. In the broadest sense, teaching activities are required here that empower students to practice research. The type of empowerment largely depends upon the phase of research being practiced. Tutorials, seminars with a high proportion of tutorials and seminars held by postgraduates are the course formats that are suited for this and already established.

2.2 Interim Conclusion: A Suggested Model

The following proposed model serves to systematize the diversity that is possible when learning, teaching and research are combined. The starting point is learning and the continuum between the poles of reception and production, on which it is possible to arrange receptive, practicing and productive learning. This means that, in connection with research, students at the institution of higher learning should be able to (a) learn to understand research, (b) practice research and (c) conduct research themselves. These forms of academic learning are by no means distinct; rather, they serve as orientation markers, not just for students, but for educators and their teaching activities as well. Academic teaching involves varying combinations of (a) conveying scholarship, (b) empowering the students to engage with scholarship and (c) supporting students’ scholarly activities (Reinmann 2013). These forms of teaching largely correspond to the three forms of connections between research and learning, whereby this distinction is also to be understood as merely accentuating (see Fig. 9.1).

3 Learning, Teaching, Researching, Assessment – More Than One Possibility

According to the conclusion in the first section of this article, academic assessment must measure whether students have knowledge of the material and methods, can apply them in complex situations, and can reflect critically upon and utilize them for many activities. However, the question of whether currently established exams are suitable for conducting such an academic assessment has scarcely been explored. To the best of my knowledge, not even a theory of assessment exists. While there are various taxonomies for teaching/learning methods, for example (cf. Baumgartner 2011), one searches in vain for a theory-based taxonomy for assessment at institutions of higher learning. Usually, various forms of assessment are merely listed without being systematically comparable. While the following sections cannot fill that gap, they nonetheless address the deficit and zero in on a well-founded system of various forms of assessment that is simultaneously oriented towards practice.

3.1 The (Missing) System of Various Forms of Assessment

If we start with the frequency and degree of familiarity of assessment at institutions of higher learning, then exams, term papers, presentations and oral exams rank first; in application-related disciplines, proof of practical achievements also ranks highly. Using the modes of representation developed by Jerome Bruner (1966), it is clear that a large percentage of university assessments are of a symbolic nature, i.e. based on language; pictorial forms of representation (iconic) may be integrated therein. It is relatively easy to classify symbolic forms of assessment into oral and written exams. Oral exams are either dialogical in the sense that questions are asked and must be answered (akin to “surveys” which may transition into oral examinations); or they are monological in the sense that students report on their knowledge (i.e. “present” it). Written exams, on the other hand, are best classified into those that require the person’s actual presence and those that can be written away from the university (in absentia). Written exams taken in person (exams) are fixed in terms of time, duration and location; written exams taken in absentia (term papers) are, as a rule, fixed only in terms of the time period and the scope.

Symbolic forms of assessment primarily assess knowledge (and the application thereof). With reference to research, one could state that, above all, they examine what knowledge students have regarding research (assessment on research). Less frequently (depending on the subject), forms of assessment are used that can be referred to as enactive because they require action in situations arranged for them. These types of assessments tend to assess skills and abilities. Thus with reference to research, they assess what students are able to do in their research (assessment in research).

Enactive forms of assessment can be classified according to whether students show their abilities by acting, or whether they do so through action sequences in the form of artifacts. The former comes down to demonstrating a skill in a situation, and therefore I have chosen the term situated for this type of assessment. The second of these means that one deduces a skill from something that was produced, which is why I refer to this form of assessment as materialized. The lack of common designations alone, as compared to symbolic forms of assessment, shows to some extent how much less familiar these forms of assessment are (in part) in the assessment practice at institutions of higher learning.

If we utilize the symbolic – enactive determination on the one hand and forms of examination established in practice on the other, we can construe the following possible system (see Fig. 9.2):

A system of this sort with only a few basic forms of assessment can have practical advantages, for example when it comes to the design of assessment rules, but also in terms of the technical modeling in current campus management systems. A wide variety of assessment variants can be designed using these basic forms of assessment as a foundation. Design criteria may include, for example:

- purpose (knowledge reproduction, knowledge application, knowledge creation),
- social form (individually, in groups),
- media use (with or without media, online or offline, text or multimedia etc.),
- resources (none, limited, open),
- conditions (e.g. field or laboratory conditions).

Without being able to go into greater detail here, the following figure provides an exemplary view of the variety of possibilities for assessment relating to the basic forms proposed here (Fig. 9.3).

Enactive forms of assessment, in which students demonstrate their abilities in research situations (e.g. conducting an interview, evaluating data) or produce research artifacts (e.g. visualizing a research design; research journal with field notes), clearly fall within the context of assessment in research. In contrast, symbolic forms of assessment, such as written exams and oral exams in the form of interviews, are generally designed in such a way that they can be relatively easily categorized as assessment on research. Term papers and presentations are also usually used in assessment practice in such a way that they serve, if anything, as assessment on research. In principle, however, they can be expanded into forms of assessment in research: Within the context of scientific courses, for example, presentations are also a research artifact, and a term paper can be further developed into a scientific article that is published (see Fig. 9.4).

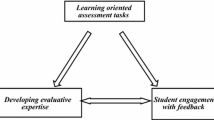

3.2 Conclusion: An Expanded Proposed Model

The model proposed above for a system of the possible diverse connections between learning, teaching and research distinguishes between (a) the goal that students learn to understand research, which requires the mediation of scholarship by educators, (b) the goal that students practice research, which requires that teaching empowers the students to engage with scholarship, and (c) the goal that students perform research themselves, which requires that teaching activities be scheduled that support students’ scholarly activities. If one wishes to extend this proposed model to include assessment, the first question to arise is whether one needs summative examinations with legal consequences for all research-related learning methods (receptive, practicing, productive). If one takes the concept of practicing learning seriously, this rules out summative assessment, because the purpose of practicing is precisely to help students reach a level where they are prepared for exams. If courses and modules pursue the goal that students practice research, one could record achievements exclusively in a formative manner and provide feedback without giving grades (formative assessment). The second question is whether there is any usefulness in linking the above system of different forms of assessment with the two other forms of research-related learning and teaching. The following figure (Fig. 9.5) makes it clear that, in theory, assessment on research and receptive forms of learning on the one hand, and assessment in research and productive learning methods on the other correspond well. In practice, however, it is difficult to unambiguously classify common forms of assessment including options for their design (cf. Fig. 9.3).

4 Research-Related Assessment: Opportunities and Limits

In principle, combining learning methods with teaching methods and course formats in education, as well as possible forms of assessment, falls within the concept of “constructive alignment.” In the meantime, it has become common knowledge that the didactic design of assessments presents a great opportunity to introduce changes in learning and teaching; this is because, above all, students learn in the manner in which they are assessed. To conclude that educators should teach as they assess and vice versa, however, also carries risks: fixation on the assessment process, a compartmentalized operationalization of learning outcomes in favor of a high practicability of assessments and a loss of education options (cf. Tremp and Eugster 2006). This is especially true when trying to coordinate learning, teaching, assessment and research, i.e. when research becomes the focus as a constitutive element of academic learning and teaching.

The present text attempts to elucidate theoretically based classification criteria on the one hand, and conditions in teaching and assessment practice (current teaching formats, basic forms of assessment) on the other in order to relate learning, teaching, research and assessment with one another. It appears necessary to me that practical conditions be taken into account in order to increase the chances that the model will be implemented. Similar attempts have already been made in the Zurich model for inquiry-based learning in the narrower sense (cf. Tremp and Hildbrand 2012), however without taking into account the different variations in research-related learning. In this context, however, one has to conclude that, in comparison to research-related learning, there has as yet been insufficient theoretical discussion regarding research-related assessment at the micro-level of didactic design (one exception: BAK 1970). The preceding explanations address this deficiency and provide a starting point for further development. The didactic design of assessments cannot solve the problem concerning the number of exams, however. Here, changes must be made to the degree program design and formulation of assessment rules (e.g. a reduction in the number of exams that have legal consequences, consciously keeping courses or modules free of exams).

References

Bachmann, H. (2011). Formulieren von Lernergebnissen – learning outcomes. In H. Bachmann (Hrsg.), Kompetenzorientierte Hochschullehre (S. 34–49). Bern: Hep-Verlag.

Baumgartner, P. (2011). Taxonomie von Unterrichtsmethoden. Ein Plädoyer für didaktische Vielfalt. Münster: Waxmann.

Biggs, J./Tang, C. (2011). Teaching for quality learning at university. Glasgow: McGraw Hill.

Bruner, J. S. (1966). Toward a theory of instruction. Cambridge, Mass.: Harvard University Press.

Bundesassistentenkonferenz (BAK) (1970/2009). Forschendes Lernen – Wissenschaftliches Prüfen. Bielefeld: UniversitätsVerlagWebler.

Franke, P./Handke, J. (2012). E-Assessment. In J. Handke/A. M. Schäfer (Hrsg.), E-Learning, E-Teaching und E-Assessment in der Hochschullehre (S. 147–208). München: Oldenbourg.

Gerdenitsch, C. (2015). Unterricht an Universitäten? Systematische Überlegungen zum intradisziplinären Transfer. In R. Egger/C. Wustmann/A. Karber (Hrsg.), Forschungsgeleitete Lehre in einem Massenstudium. Bedingungen und Möglichkeiten in den Erziehungs- und Bildungswissenschaften (S. 77–92). Berlin: Springer VS.

Huber, L. (2009). Warum Forschendes Lernen nötig und möglich ist. In L. Huber/J. Hellmer/F. Schneider (Hrsg.), Forschendes Lernen im Studium. Aktuelle Konzepte und Erfahrungen (S. 9–35). Bielefeld: UniversitätsVerlagWebler.

Huber, L. (2014). Forschungsbasiertes, Forschungsorientiertes, Forschendes Lernen: Alles dasselbe? Hochschulforschung, 1+2, 22–29.

Ladenthin, V. (2011). Kompetenzorientierung als Indiz pädagogischer Orientierungslosigkeit. Profil (Mitgliederzeitung des Deutschen Philologenverbandes), 09, 1–6.

Pietzonka, M. (2014). Die Umsetzung der Modularisierung in Bachelor- und Masterstudiengängen. Zeitschrift für Hochschulentwicklung, 9(2), 78–90.

Prange, K. (2005). Die Zeigestruktur der Erziehung. Grundriss der Operativen Pädagogik. München: Schöningh.

Reinmann, G. (2011). Kompetenz – Qualität – Assessment: Hintergrundfolie für das technologiebasierte Lernen. In M. Mühlhäuser/W. Sesink/A. Kaminski/J. Steimle (Hrsg.), Interdisziplinäre Zugänge zum technologiegestützten Lernen (S. 467–493). Münster: Waxmann.

Reinmann, G. (2013). Studientext Didaktisches Design. Retrieved 28 January 2016 from http://gabi-reinmann.de/wp-content/uploads/2013/05/Studientext_DD_Fassung2013.pdf

Schaper, N./Hilkenmeier, F. (2013). Umsetzungshilfen für kompetenzorientiertes Prüfen. HRK-Zusatzgutachten. Hochschulrektorenkonferenz Projekt nexus. Retrieved 28 January 2016 from http://www.hrk-nexus.de/fileadmin/redaktion/hrk-nexus/07-Downloads/07-03-Material/zusatzgutachten.pdf

Schaper, N. (2012). Fachgutachten zur Kompetenzorientierung in Studium und Lehre. Hochschulrektorenkonferenz. Retrieved 28 January 2016 from http://www.hrk-nexus.de/fileadmin/redaktion/hrk-nexus/07-Downloads/07-02-Publikationen/fachgutachten_kompetenzorientierung.pdf

Tenberg, R. (2014). Kompetenzorientiert studieren – didaktische Hochschulreform oder Bologna-Rhetorik? Journal of Technical Education, 1, 16–30.

Tremp, P./Eugster, B. (2006). Universitäre Bildung und Prüfungssystem – Thesen zu Leistungsnachweisen in modularisierten Studiengängen. Das Hochschulwesen, 5, 163–165.

Tremp, P./Hildbrand, T. (2012). Forschungsorientiertes Studium – universitäre Lehre: Das »Zürcher Framework« zur Verknüpfung von Lehre und Forschung. In T. Brinker/P. Tremp (Hrsg.), Einführung in die Studiengangentwicklung (S. 101–116). Bielefeld: Bertelsmann.

Wick, A. (2011). Akademisch geprägte Kompetenzentwicklung: Kompetenzorientierung in Hochschulstudiengängen. Heidelberg: HeiDOK. Retrieved 28 January 2016 from http://archiv.ub.uni-heidelberg.de/volltextserver/12001/1/Wick_Akademisch_gepraegte_Kompetenzen.pdf

Wilbers, K. (2013). Kompetenzmessung: Motor der Theorie- und Praxisentwicklung in der Berufsbildung? In S. Seufert/C. Metzger (Hrsg.), Kompetenzentwicklung in unterschiedlichen Kulturen (S. 298–321). Paderborn: Eusl.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License (http://creativecommons.org/licenses/by-nc-nd/4.0/), which permits any noncommercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence and indicate if you modified the licensed material. You do not have permission under this license to share adapted material derived from this chapter or parts of it.

The images or other third party material in this chapter are included in the chapter’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the chapter’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2019 The Author(s)

About this chapter

Cite this chapter

Reinmann, G. (2019). Assessment and Inquiry-Based Learning. In: Mieg, H.A. (eds) Inquiry-Based Learning – Undergraduate Research. Springer, Cham. https://doi.org/10.1007/978-3-030-14223-0_9

DOI: https://doi.org/10.1007/978-3-030-14223-0_9

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-14222-3

Online ISBN: 978-3-030-14223-0

eBook Packages: Education, Education (R0)