Abstract

It is well-known that querying information is difficult for domain experts, for they are not familiar with querying actual relational schemata due to the notions of primary and foreign keys and the various ways of representing and storing information in a relational database. To overcome these problems, the Abstract Relational Model and the query language, SQLP, have been proposed. They are the theoretical foundations and ensure that explicit primary and foreign keys are hidden from the user’s view and that queries can be expressed more compactly. In this paper we evaluate these theoretical advantages with user studies that compare SQLP to plain SQL as the baseline. The experiments show significant statistical evidence that SQLP indeed requires less time for understanding and authoring queries, with no loss in accuracy. Considering the positive results, we develop a method to reverse engineer legacy relational schemata into abstract relational ones.

Similar content being viewed by others

1 Introduction

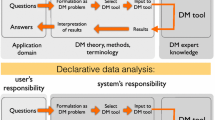

Writing and understanding queries, especially by domain experts, is well-known to have a steep learning curve. Therefore, four strategies have been proposed to alleviate this problem: either SQL is hidden behind a controlled natural language or a visual query language, or the relational or conceptual model is also shown as convenient overview of the database whilst querying in SQL. They have been shown to result in improvements over plain SQL at the database schema layer, demonstrating equal or fewer errors always in less time, and that a “good match of query language and database structure leads to better performance” [10] (see [3, 10] and references therein). A conceptual view (cf. SQL only) also enabled domain experts to invent new queries [4].

The major drawback of conceptual query languages is that most of them support only a subset of the full SQL and therefore have little uptake in industry. Querying with SQL and the relational model (RM) is one step up from the baseline of SQL on the SQL schema, but it still entails the drawback of premature resolution of identification issues (e.g., primary keys). In order to abstract away from that, the notion of referring expression types has been proposed [1, 2], which are object identifiers taken from a separate domain, \(D_\mathtt{oid}\), and included in each table in a schema as a \(\mathop {\mathtt{self}}\nolimits \) attribute. Each \(\mathop {\mathtt{self}}\nolimits \) is a single column with a functional dependency to an n-attribute (\(n \ge 1\)) identifier meaningful to the modeler. With this extension, the RM can be pushed up toward the conceptual layer into an Abstract Relational Model (ARM), which makes orthogonal those issues of identification [1, 2], and approaches a conceptual data model through lossless projections (vertical partitioning). Yet, SQL can be retained fully for data manipulation. Moreover, since each \(\mathop {\mathtt{self}}\nolimits \) is a single column, foreign key joins can always be expressed more compactly as attribute paths.

Attribute paths enable seamless path queries that do not require declaring multiple SQL joins manually. SQL with paths, SQLP (an extension of full SQL), is such a path query language (extending SQL\(^{path}\) [2]) where the foreign key joins are expressed with sequences of dot-separated attributes, which is a longstanding feature of class-based conceptual models [9]. This results in shorter queries and thus fewer chances of making mistakes, and it affords explicit navigation across the ARM to the desired elements. These are advantages in theory. It is not known whether this holds also in practice, especially regarding path query languages. In fact, while multiple path query languages have been proposed, to the best of our knowledge, only PathSQL that collapses left outer joins has been evaluated [7], claiming shorter writing time and fewer errors cf. plain SQL.

We seek to shed light on this issue with, first, a user evaluation that compares SQLP+ARM against the baseline of SQL+RM, testing for both time to write and to comprehend a query and correctness of the written queries. The main outcome of the evaluation is that working with SQLP has been shown to be statistically significantly faster, with the same level of correctness. Therefore, we have devised a novel method that transforms a legacy RM into the richer ARM. This method resolves the issues of identification automatically thanks to the referring expression type assignment inferred during the construction of the ARM, which is identity resolving, and therewith is also capable of making certain implicit constraints of the RM explicit in the ARM, such as disjointness and class subsumption (backward compatibility from SQLP\(\rightarrow \)SQL and ARM\(\rightarrow \)RM is already possible and ARM may be reconstructed in a Description Logic [2]). We also show that the space of SQLP queries remains invariant with respect to vertical partitioning, so that domain experts can use SQLP over a conceptual-like view of an ARM schema while database administrators can view the same schema as tables with many attributes.

In the remainder of the paper, we present the background and the novel method first in Sects. 2 and 3 and subsequently the user evaluation in Sect. 4. We discuss related work in Sect. 5 and conclude in Sect. 6.

2 Background

We begin by introducing the abstract relational model (ARM), which is based largely on an earlier version presented in [2], and extended where indicated below. An ARM augments the underlying domain of concrete values assumed in the relational model (RM) with an additional abstract domain of entities.

Definition 1

(Tables and Constraints). Let \(\mathsf {TAB}\), \(\mathsf {AT}\), and \(\mathsf {CD}\) be sets of table names, attribute names that includes \(\mathop {\mathtt{self}}\nolimits \), and concrete domains (data types), respectively, and let \(\mathtt{OID}\) be an abstract domain of entities (surrogates), disjoint from all concrete domains. A general relational model schema \(\varSigma \) is a set of table declarations of the formFootnote 1  , where \(T \in \mathsf {TAB}\), \(A_i \in \mathsf {AT}\), \(D_i \in (\mathsf {CD}\cup \{\mathtt{OID}\})\), and \(\varphi _j\) are constraints attached to table T. We write \({\textsc {Attrs}}(T)\) to denote \(\{A_1, \ldots , A_k\}\) and \(\textsc {Tables}(\varSigma )\) to denote all table names declared in \(\varSigma \). \(A_i\) is abstract if \(D_i\) is \(\mathtt{OID}\), and concrete otherwise, and \(\mathop {\mathtt{self}}\nolimits \) must always be abstract. In addition to attribute declarations “\((A_i ~D_i)\)”, a variety of constraints \(\varphi _j\) can occur in a table declaration of T, e.g.,

, where \(T \in \mathsf {TAB}\), \(A_i \in \mathsf {AT}\), \(D_i \in (\mathsf {CD}\cup \{\mathtt{OID}\})\), and \(\varphi _j\) are constraints attached to table T. We write \({\textsc {Attrs}}(T)\) to denote \(\{A_1, \ldots , A_k\}\) and \(\textsc {Tables}(\varSigma )\) to denote all table names declared in \(\varSigma \). \(A_i\) is abstract if \(D_i\) is \(\mathtt{OID}\), and concrete otherwise, and \(\mathop {\mathtt{self}}\nolimits \) must always be abstract. In addition to attribute declarations “\((A_i ~D_i)\)”, a variety of constraints \(\varphi _j\) can occur in a table declaration of T, e.g.,

-

1.

(primary keys) primary key (\(A_1, \ldots , A_k\))

-

2.

(foreign keys) constraint N foreign key (\(A_1, \ldots , A_k\)) references T

-

3.

(inheritance) isa \(T_1\)

-

4.

(disjointness constraints) disjoint with (\(T_1, \ldots , T_k\))

-

5.

(path functional dependencies) pathfd (\(\mathop {\mathsf {Pf}}\nolimits _1, \ldots , \mathop {\mathsf {Pf}}\nolimits _k\)) \(\rightarrow \) \(\mathop {\mathsf {Pf}}\nolimits \) \(\square \)

Primary keys and named foreign keys (“N” in item 2, above) are supported by standard SQL. Inheritance and disjointness constraints are only meaningful when \(\mathop {\mathtt{self}}\nolimits \) occurs as one of the attributes of T and each \(T_i\), and are satisfied when all (resp. no) \(\mathop {\mathtt{self}}\nolimits \)-value occurring in T occurs as a \(\mathop {\mathtt{self}}\nolimits \)-value in some (resp. all) \(T_i\) in the inheritance (resp. disjointness) cases. Path functional dependencies are a generalization of functional dependencies introduced in [13] that allow attribute paths in place of attributes. An attribute path \(\mathop {\mathsf {Pf}}\nolimits \) is either \(\mathop {\mathtt{self}}\nolimits \) or a dot-separated sequence of attribute names excluding \(\mathop {\mathtt{self}}\nolimits \), as defined in [2]. A path functional dependency is satisfied when any pair of T-tuples that agree on the value of each \(\mathop {\mathsf {Pf}}\nolimits _i\) also agree on the value of \(\mathop {\mathsf {Pf}}\nolimits \). Finally, RM and ARM are obtained by restricting how tables and constraints can be declared:

Definition 2

(RM and ARM). In RM: attributes \(A_i\) can only be declared to be concrete, and only primary and foreign key constraints are allowed. In ARM, every table has the attribute \(\mathop {\mathtt{self}}\nolimits \) declared to be its primary key. Consequently, every foreign key constraint must use a single abstract attribute. \(\square \)

Example 3

A commonly used visualization of RM schemata for a hypothetical course enrollment application is given in Fig. 1: each rectangle is a table, labelled by name, containing the attributes defined on that table. Attributes above the line in a rectangle give the primary key and directed edges between tables show foreign keys. The same visualization approach is used for a counterpart ARM schema in Fig. 2, where abstract attributes are indicated with an ‘*’. The respective definitions for, e.g., the CLASS table are as follows:

Logical Implication in Schemas. Given \(T \in \mathsf {TAB}(\varSigma )\) and constraint \(\varphi \in T\) (possibly not occurring in \(\varSigma \)), we write \(\varSigma \models (\varphi \in T)\) to say that \(\varphi \) always holds for T in any database over \(\varSigma \). For example, the requirement of foreign key constraints to be unary in ARM yields the following deduction:

in which the deduced constraint “\((A.B~D)\in T_1\)” states (with slight abuse of notation) that the attribute path “A.B” originating in \(T_1\) always ends in D. It is easy to see that the deduction above can be generalized to yield longer paths.

ARM can be formalized in a Description Logic (DL), e.g., the PTIME decidable  [11], where the problem of deciding when \(\varSigma \models (\varphi \in T)\) holds in ARM schemata can be reduced to reasoning about logical consequence [2].

[11], where the problem of deciding when \(\varSigma \models (\varphi \in T)\) holds in ARM schemata can be reduced to reasoning about logical consequence [2].

SQLP Queries. Since ARM schemata resemble RM schemata, simple revisions to the SQL standard yield SQLP: Fig. 3 shows a relationally complete fragment of the SQL query language grammar modified to allow attribute paths (definition for full SQL is analogous but beyond the limits of this paper). Example 4 illustrates the potential advantages of SQLP.

SQLP (fragment), extended from [2].

Example 4

Consider a query over the RM schema of Fig. 1, computing the names of students who have experienced being taught by professor Alan John.

The same query in SQLP over the corresponding ARM schema (Fig. 2) is:

Invariance of SQLP Under Vertical Partitioning. A hitherto unnoticed implicit benefit of ARM and SQLP, is SQLP’s invariance under vertical partitioning, which we specify explicitly and precisely here.

Definition 5

(Vertical Partition). Let \(\varSigma \) an ARM schema and

We say that an ARM schema \(\varSigma '\) is a partition of \(\varSigma \) (with respect to T) if

We say that an ARM schema \(\varSigma '\) is a partition of \(\varSigma \) (with respect to T) if

where \(T'\not \in \mathsf {TAB}(\varSigma )\). \(\square \)

Observe that vertical partitioning constitutes a lossless-join decomposition, and that every instance \( DB \) of \(\varSigma \) can be transformed to an instance \( DB '\) of \(\varSigma '\) with no loss of information. A repeated application of vertical partitioning ultimately leads to an ARM schema in which all tables are at most binary. This obtains a schema that comes very close to matching class and attribute-based conceptual models: a unary “\(\mathop {\mathtt{self}}\nolimits \)” table that may be viewed as a class, and binary tables that may in turn be viewed as attributes of the class. Our first result is that, for SQLP queries, answers are invariant with respect to such vertical partitioning:

Proposition 6

Let \(\varphi \) be a SQLP query over an ARM schema \(\varSigma \), \( DB \) an instance of \(\varSigma \) and \( DB '\) a corresponding instance of \(\varSigma '\) a partition of \(\varSigma \). Then \(\varphi ( DB )=\varphi ( DB ')\).

This feature allows users the freedom of formulating SQLP queries equivalently over a wide range of ARM schemata, as long as they are related by the “partition of” relation introduced in Definition 5. In particular, domain experts can now use SQLP over a conceptual-like view of a particular ARM schema while SQL programmers can think about the same schema in terms of tables with many attributes (this follows from the bi-directionality of Definition 5).

3 On Deriving ARM Schemata from RM Schemata

To present our second contribution, we need to introduce the notion of referring types and referring type assignments to tables in ARM that will be constructed by our algorithm together with the ARM schema. They enable one to execute SQLP queries formulated over the created ARM schema translations in [2].

Referring Expression Types and Assignments. To connect ARM and RM schemata, we use the notion of referring expression types from [2]: descriptions of how abstract \(\mathtt{OID}\) values used to identify entities in an ARM schema are represented in a corresponding RM schema. In particular, this entails a referring expression type assignment for each T in the ARM schema, denoted \({ \textsf {RET}}(T)\), with the general form given by

where \(k > 0\), and each attribute path \(\mathop {\mathsf {Pf}}\nolimits _{i,j}\) is defined on table \(T_i\). Each subexpression separated by preference indicators “;” is called a component of the \({ \textsf {RET}}\), and the “\(T_i \rightarrow \)” part its guard. The last component of the \({ \textsf {RET}}\) may not have its guard, which is then inferred to be “\(T \rightarrow \)”. Assigning a \({ \textsf {RET}}\) of this form to each table in the ARM schema, is identity resolving if naming issues are sufficiently resolved to enable translating any SQLP query over the ARM schema to an equivalent SQL query over its corresponding RM schema [2].

Example 7

The \({ \textsf {RET}}\) for the table CLASS in Example 3 is given by

stating that a class can be identified by a combination of the four values defined by the indicated paths. This yields the definition of the corresponding CLASS table in RM (see Fig. 1).

Observe that this simple kind of \({ \textsf {RET}}\) is still a strict generalization of traditional primary keys due to the possible use of the attribute paths, and that this example relies on this expressiveness. To see why more than one component might be needed, consider where \(\mathop {\mathtt{self}}\nolimits \)-values in the PROFESSOR and STUDENT tables could overlap (i.e., if professors could also be students). Were this possible, naming issues could still be resolved by choosing the \({ \textsf {RET}}\) for PROFESSOR. A professor who is also a student would then be identified by their snum-value in preference to her pnum-value. (This would also require additional attributes to be added to an RM counterpart to the PROFESSOR table declaration, attribute snum for example; see [1, 2] for details.)

for PROFESSOR. A professor who is also a student would then be identified by their snum-value in preference to her pnum-value. (This would also require additional attributes to be added to an RM counterpart to the PROFESSOR table declaration, attribute snum for example; see [1, 2] for details.)

From RM to ARM. In [2], a GenRM procedure is defined that computes an RM schema given an ARM schema and \({ \textsf {RET}}\) assignment as input. We now introduce a complementary GenARM procedure for reverse engineering RM schemata: given a RM schema \(\varSigma _1\) as input, GenARM modifies \(\varSigma _1\) to a corresponding ARM schema \(\varSigma _2\) and computes an \({ \textsf {RET}}\) for \(\varSigma _2\), for which GenRM  re-obtains \(\varSigma _1\) (up to names of re-introduced concrete attributes), and for which \({ \textsf {RET}}\) is identity resolving, which is a condition defined in [2] ensuring that any SQLP query over \(\varSigma _2\) can be mapped to an equivalent SQL query over \(\varSigma _1\).

re-obtains \(\varSigma _1\) (up to names of re-introduced concrete attributes), and for which \({ \textsf {RET}}\) is identity resolving, which is a condition defined in [2] ensuring that any SQLP query over \(\varSigma _2\) can be mapped to an equivalent SQL query over \(\varSigma _1\).

GenARM modifies \(\varSigma \) and computes \({ \textsf {RET}}\) with the use of a stack S of tables in \(\textsc {Tables}(\varSigma )\), and a pending assignment set \({ \textsf {PA}}\). The latter consists of 4-tuples \((T, \mathop {\mathsf {Pf}}\nolimits , T', A)\) that are used to incrementally compute \({ \textsf {RET}}\) as each table in S is processed. A 4-tuple asserts: the primary key of T has component \(\mathop {\mathsf {Pf}}\nolimits \circ A\), and depends via foreign key join path \(\mathop {\mathsf {Pf}}\nolimits \), on table \(T'\) having attribute A. Here, “\(\circ \)” composes attribute paths, in particular: \(\mathop {\mathsf {Pf}}\nolimits _1 \circ \mathop {\mathsf {Pf}}\nolimits _2\) denotes \(\mathop {\mathsf {Pf}}\nolimits _1\) (resp. \(\mathop {\mathsf {Pf}}\nolimits _2\)) if \(\mathop {\mathsf {Pf}}\nolimits _2\) (resp. \(\mathop {\mathsf {Pf}}\nolimits _1\)) is \(\mathop {\mathtt{self}}\nolimits \), and \(\mathop {\mathsf {Pf}}\nolimits _1 . \mathop {\mathsf {Pf}}\nolimits _2\) otherwise. GenARM is defined as follows:

Computation of S in the initialization phase of GenARM encodes a total order that ensures RETs are free of cycles. RETs not free of cycles might happen otherwise, e.g., where two RM tables had complementary foreign keys to each other from their primary keys. Regarding the conversion to an ARM schema, note the replacement of foreign key constraints with unary counterparts or with specialization constraints (lines 5.4.1, 5.4.2), the addition of disjointness constraints (line 5.3), path functional dependencies (line 6). Both are necessary to ensure RET is ultimately identity resolving in the sense outlined above.

The addition of disjointness constraints between any pair of tables with primary keys that differ in any way are justified by virtue of the fact that an input RM schema has no way of detecting when respective primary key values could co-refer otherwise. For example, if students can also be professors, then it becomes impossible to compile a SQLP query for department names of professors who are not students.

The GenARM procedure achieves the first of our main goals:

Proposition 8

Let \(\varSigma _1\) be a RM schema and \(\varSigma _2\) and R the ARM schema and referring type assignment generated by \(\textsc {GenARM}(\varSigma _1)\), respectively. Then for every SQLP query \(\varphi \) and database instance \( DB \) over \(\varSigma _2\) we have

where the functions \(\textsc {Concrete}\) and \(\textsc {SQLPtoSQL}\) map ARM instances and SQLP queries to their corresponding relational counterparts using the referring type assignment R.

Note that, for the above proposition to hold, it is essential that the referring type assignment R produced in Step 6 of GenARM is identity resolving in order to map equalities between \(\mathtt{OID}\) attributes in \(\varSigma _2\) to equalities over attributes in \(\varSigma _1\). Case analysis of the procedure shows that indeed R is identity resolving.

4 User Evaluation

The aim of the user evaluation is to ascertain whether querying with the ARM and SQLP has advantages over querying with the RM and SQL. We expect that it will take less time to construct the SQLP queries and they may also have fewer errors, for one does not have to painstakingly declare all the joins individually (recall also Example 4), which reduces the cognitive load as well as the size of the query, which may affect comprehension and authoring of queries. Two variables measure the potential difference: time taken and correctness, which lead to the following null (H) and alternative (A) statistical hypotheses:

- \(H_{t}\) :

-

: there is no difference between SQL and SQLP in the mean time taken;

- \(A_{t}\) :

-

: reading and writing in SQLP is faster than in SQL in the mean time taken;

- \(H_{c}\) :

-

: there no difference between SQL and SQLP in the mean correctness;

- \(A_{c}\) :

-

: SQLP queries have a higher level of correctness than SQL queries.

Because we assume SQLP will show an advantage over SQL, they will be assessed against the one sided alternatives, rather than the weaker two-sided option.

4.1 Experimental Design

Methods. Participants were recruited from undergraduate Computer Science major students at the University of Waterloo (UW)’s third year database class (CS348) and graduate students in Computer Science from UW’s Data Systems Lab. (The experimental design was reviewed and approved by the Human Research Ethics Committee of UW before recruitment of subjects.) The set-up of the experiment uses a cross-over design for the graduate students, and a simple comparison for the undergraduate students. Undergraduate students were treated differently, because only one-third of them turned out to have had SQL experience, whereas all graduate students had. Half of the undergraduates were randomly assigned to answer the six questions using SQLP (group \(U_p\), \(n=5\)) and the other half to answer the SQL questions (group \(U_s\), \(n=4\)). The graduate students answered all six questions using the cross-cover approach; they were randomly assigned to answer either first the SQL questions and then SQLP (group \(G_{s}\), \(n=8\)) and the other in reverse order (group \(G_{p}\), \(n=7\)). Of the undergraduates, only 2 were native English speakers (both had been randomly assigned to \(U_p\)); of the graduate students, 5 were native English speakers (4 of these had been randomly assigned to \(G_{p}\)).

The experimental protocol is as follows. All subjects were given five minutes to read instructions about the protocol and then 10 min to read instructions and examples on the use of SQL or SQLP, or both in case of the cross-over design. The subjects were not allowed to ask questions. Subsequently, each subject received each question sealed in its own envelope, noting that no student knew which query language would be required until their session began. Subjects recorded (to the nearest second) their start time on an envelope when they opened it as well as their completion time when their answer to that question was returned in the envelope. Questions Q1-Q6 (see below) were answered by all subjects in that order and no previous question could be consulted. The questions in a question set used only one of SQL or SQLP. All answers were given in hand-written form and subjects had no access to any other electronic device or information source. There was no time limit. Given the international nature of our students, the subjects also answered the question whether their first language was English or not. Upon completion of the experiment, each subject received a gift voucher.

Performance is measured on the time taken to complete each question and the correctness of each answer. Time taken is based on the self-reported time (see procedure). To measure correctness, answers for each question are independently scored by three assessors (authors WM, DT, and GW) and the scores averaged; the assessors are blinded to all aspects about the subject and the experimental conditions. The assessors agreed to score on a 4 point scale with half points allowed, where 0 meant to them completely wrong; 1: meant ‘does not solve the question but the subject has grasped the basic concepts’ (of SQL or SQLP); 2: meant ‘the answer contains mistakes, but is on the right track and joined most of the required tables correctly’; 3: meant ‘mostly correct, may only contain minor mistakes’; and 4: meant ‘solves the question completely and correctly’.

Regarding the statistical analysis, different methods can be applied depending upon whether the results of all students are combined, or separated by graduate/undergraduate. We will analyze both, as both have advantages (more data points and the cross-over insights, respectively).

Materials. Six questions were devised to cover both query comprehension and authoring: Q1-Q3 present code in SQL or SQLP and subjects have to provide a written summary of the query in English (comprehension); Q4-Q6 present a query in English and subjects have to code the query in SQL or SQLP (authoring), alike depicted in Example 4. The comprehension and authoring tasks focus on conjunctive cases involving no more than six table variables, except for Q2 that includes a not exists predicate. Authoring tasks were designed to be progressively more difficult (requiring more joins), as were comprehension questions Q1 and Q3. Figures 1 and 2, without marking abstract attributes with ‘*’, are the RM and ARM schemata used in the experiment. Details can be found in [8].

4.2 Results and Discussion

We will first present the combined results, and then the cross-over results.

Performance: all subjects combined. There are a total of 39 answers (20 for SQLP, 19 for SQL) for each performance measure with \(30 = 2\times 15\) from the graduate students (\(|G_{p}| + |G_{s}|\)) and \(9 = |U_p| + |U_s|\) from the undergraduates. The fact that each graduate student provides values for both SQLP and SQL is of little concern since the experimental design randomly assigned half of them to using SQLP first and half to using SQL first, thus balancing any order effect. Figure 4 shows the estimated values and 95% confidence intervals for the expected (or mean) performance for the correctness (left), and time taken (right). In both plots, SQL results are solid colored red, SQLP dashed blue.

Consider only the results for the comprehension questions Q1-Q3 for correctness. Looking at the SQL curve, we see increasing difficulty of these questions (as by design) in the decreasing mean values and increasing variability (interval length). The same pattern holds when performance on Q1-Q3 is measured by the expected time taken, though Q2 and Q3 are essentially indistinguishable.

Similarly, following the SQL curve for the authoring tasks given by Q4-Q6, we see increasing difficulty as measured by correctness and by time taken. For correctness, the difficulty shows as decreasing mean performance; for time taken, it shows dramatically as increasing mean time taken and as increased variability. A comparison of the SQL curves in both plots shows students taking less time and scoring more poorly on code reading (comprehension) questions and much more time but generally better scores on the code writing (authoring) questions.

More interesting are the results for the SQLP curve: except for Q1, the expected time to complete each question using SQLP is consistently lower, and typically has smaller variability than, when using SQL!

These estimates and intervals were based on standard normal theory (t-based confidence intervals), so to be conservative we also performed non-parametric two-sample Wilcoxon (or Mann-Whitney) tests comparing the sample times taken for SQL to those for SQLP for each question. The one sided test (\(H_{t}\) versus \(A_t\)) was performed giving the following p-values for each question: Q1 (\(p = 0.63\)), Q2 (\(p = 0.021\)), Q3 (\(p = 0.018\)), Q4 (\(p = 0.27\)), Q5 (\(p = 0.009\)), and Q6 (\(p = 0.03\)). Each p-value is the probability (assuming SQL and SQLP have the same distribution) of observing at least as large a difference as we did observe as measured by this test. The smaller the p-value is, the stronger the evidence against the null hypothesis (\(H_{t}\)) and in favor of the alternative (\(A_t\)). All but Q1 and Q4 would be judged to be highly statistically significant; SQLP outperforms SQL in time taken.

In contrast, no statistically significant difference between SQLP and SQL in correctness was found when the same tests were applied to the correctness scores. For correctness, the test yielded p-values for each question of Q1 (\(p = 0.90\)), Q2 (\(p = 0.63\)), Q3 (\(p = 0.097\)), Q4 (\(p = 0.41\)), Q5 (\(p = 0.07\)), and Q6 (\(p = 0.77\)). Such high probabilities mean that, as measured by this test, the data were consistent with the null hypothesis (\(H_{c}\)). If anything, in the two cases (Q3 and Q5) approaching statistical significance, the (left hand) plot shows SQLP outperforming SQL in correctness.

Finally, we consider how the performance fares with respect to English as first language or not. Figure 5 shows the confidence intervals for the mean time taken in these cases. Again, the expected time taken using SQLP is often the same or lower than when using SQL and often with less variability. Most striking are those cases where the student’s first language was not English; except for Q1, they appear to perform more quickly using SQLP than they do using SQL.

Performance: Graduate students only. The randomized cross-over design allows us to work with the difference between performance results for each student on each question and performance measure. The differencing should reduce variation between students. Figure 6 shows the differences plotted by question for each performance measure. As before, with the possible exception of Q5 (\(p = 0.00813\) for a one-sided test) where SQLP clearly outperforms SQL, there is no statistically significant difference between SQLP and SQL observed for correctness of the answers. Also as before, with the exception of Q1 SQLP significantly outperforms SQL for both comprehension and authoring in time taken with p-values (one-sided alternatives) by question: Q1 (\(p = 0.500\)), Q2 (\(p = 0.00269\)), Q3 (\(p = 0.00488\)), Q4 (\(p = 0.0661\)), Q5 (\(p = 0.00413\)), and Q6 (\(p = 0.0820\)). The last p-value is affected by the single outlying student near \(-1000\) (Q6); as the plot shows, the remaining points for Q6 would have produced a significant value.

Because graduate students used both SQL and SQLP on the same questions, we can investigate the effect on performance of the order in which the methods were used. A priori, we expect that performance will improve when students faced the same questions again, albeit via a different model (RM+SQL or ARM+SQLP). The order effect was as expected for correctness, with the exception of Q5 where SQLP produced better answers than did SQL independently of the order. The results for time taken are shown in Fig. 7: as expected, when SQL is used first, students took longer to answer than when they subsequently used SQLP (points being above the horizontal line in the left hand plot) on the same questions. In contrast, when students used SQLP first, again with Q1 as an exception, their performance with SQL was surprisingly not a marked improvement on SQLP (points were not below the horizontal line in the right hand plot). That is, SQL still took longer than SQLP even though it was used after SQLP on the same questions.

Discussion of the Results of the Experiment. The results of the experiments demonstrated that noted theoretical advantages of SQLP over SQL translated to SQLP outperforming SQL in time to completion (being consistently lower and having less variation), i.e., \(A_t\) was accepted. \(H_c\) could not be rejected, although wherever observed differences approached statistical significance, they also favoured SQLP over SQL. Also, the learning curve for SQLP appeared to be low, given that no participant knew about SQLP or ARM before they began the experiment and were given only 10 min to learn about it.

There was no statistically significant difference in performance for Q1. It was the easiest question, and as difficulty increased, the differences between SQLP and SQL often increased (recall Fig. 4, right). Therefore, examining in further detail the effects of query difficulty levels and different types of queries is a promising direction for future experimental work, as it may refine insights into the practical advantages of using SQLP over SQL.

In other experiments that vary the model (or notation thereof) or the query language, a difference in either semantic or syntactic accuracy and in time take is observed when notation is the variable [3, 6], or both when the query language abstracts away from plain SQL [7], and both when both are variables [10]. Our results on different query languages show a similar trend. The look-and-feel of the ARM diagram was made to look alike an RM one, in order to minimize the possibility that any difference observed could be attributable to the representation of the information rather than the query language. A future HCI experiment may be to devise more notations for ARM that have more or less vertical partitioning so as to examine those effects, which may then benefit also the accuracy.

5 Related Work

While many path query languages have been proposed, to the best of our knowledge, there has been only one experimental evaluation to compare it to a ‘non-path’ version. Junkkari and co-authors used PathSQL, which in their experiment showed that it reduces query writing time and have fewer errors cf. SQL [7]Footnote 2. PathSQL [12] constructs paths for queries over aggregation hierarchies as a way to represent a series of left outer joins more compactly. In contrast, SQLP’s paths can be constructed over joins in the direction of the functional dependencies.

Due to lack of other related work, we broadened the scope on conceptual queries that still relate to relational models. It has been shown that models at a higher level of abstraction have either equal or higher accuracy (fewer semantic errors) in the queries and are always formulated in less time when querying with the aid of a relational or conceptual model [6, 10]. Fewer syntax errors were observed when the aid was a textual relational model, but it was slower cf. a graphical depiction of the relational model, with no difference in accuracy of the queries [6]. It is not clear where the border lies between how detailed the graphically depicted relational model has to be to be optimal, but some parsimony seems to be favored especially for more complex SQL queries [3]. Thus, the results obtained in our experiment is in line with related, albeit different, experiments. Examining Bowen et al’s parsimonious and detailed diagrams [3], the ARM depicted in Fig. 2 may strike a good balance, but this deserves further assessment by HCI experts.

Querying at the conceptual layer has recently gained interest because of the relative popularity of Ontology-Based Data Access (OBDA). However, since SQLP is much more expressive than the queries one can use in a typical OBDA setting due to the open world assumption, OBDA techniques do not directly apply in our setting. However, note that many of the advantages of querying at the conceptual level are retained here. In particular, the ARM schema can be presented equivalently in an alternative graphical notation based on classes and features that resembles object diagrams [5] and can be formalized, e.g., in the description logic  .

.

6 Conclusions

Querying for information with the SQLP path query language and the Abstract Relational Model has been shown to be significantly faster than the baseline of SQL with the Relational Model, whilst maintaining accuracy. Thanks to the referring expression types and the lossless vertical partitioning it permits, an Abstract Relational Model can be made look like either a conceptual data model or function as a relational model. Therewith it can take advantage of both the benefit of conceptual queries and the full SQL support with the relational model within one formalism, whilst keeping an actual SQLP queries invariant in the face of such partitioning decisions. We have proposed a novel method to reverse engineer legacy relational models up to abstract relational models to facilitate its uptake, which, thanks to the automated analysis of the keys, also uncovers implicit constraints in the model, such as subsumption and disjointness.

Notes

- 1.

This is essentially the syntax for SQL’s create table commands.

- 2.

as stated in their abstract; despite efforts, we were not able to obtain the full paper.

References

Borgida, A., Toman, D., Weddell, G.: On referring expressions in query answering over first order knowledge bases. In: Proceedings of KR 2016, pp. 319–328. ACM (2016)

Borgida, A., Toman, D., Weddell, G.: On referring expressions in information systems derived from conceptual modelling. In: Comyn-Wattiau, I., Tanaka, K., Song, I.-Y., Yamamoto, S., Saeki, M. (eds.) ER 2016. LNCS, vol. 9974, pp. 183–197. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46397-1_14

Bowen, P.L., O’Farrell, R.A., Rohde, F.H.: An empirical investigation of end-user query development: the effects of improved model expressiveness vs. complexity. Info. Syst. Res. 20(4), 565–584 (2009)

Calvanese, D., Keet, C.M., Nutt, W., Rodríguez-Muro, M., Stefanoni, G.: Web-based graphical querying of databases through an ontology: the WONDER system. In: Proceedings of ACM SAC 2010, pp. 1389–1396. ACM (2010)

Jacques, J.S., Toman, D., Weddell, G.E.: Object-relational queries over \(\cal{CFDI}_\mathit{}nc\) knowledge bases: OBDA for the SQL-Literate. In: IJCAI 2016, pp. 1258–1264 (2016)

Jih, W.J., Bradbard, D.A., Snyder, C.A., Thompson, N.G.A.: The effects of relational and entity-relationship data models on query performance of end-users. Int. J. Man-Mach. Stu. 31(3), 257–267 (1989)

Junkkari, M., Vainio, J., Iltanenan, K., Arvola, P., Kari, H., Kekäläinen, J.: Path expressions in SQL: a user study on query formulation. J. DB Mgmt. 22(3), 22p (2016)

Ma, W.: On the Utility of Adding an Abstract Domain and Attribute Paths to SQL. Master’s thesis, University of Waterloo (2018)

Mylopoulos, J., Bernstein, P.A., Wong, H.K.T.: A language facility for designing database-intensive applications. ACM TODS 5(2), 185–207 (1980)

Siau, K.L., Chan, H.C., Wei, K.K.: Effects of query complexity and learning on novice user query performance with conceptual and logical database interfaces. IEEE Trans. Sys., Man Cybern. 34(2), 276–281 (2004)

Toman, D., Weddell, G.E.: On adding inverse features to the description logic \(\cal{CFD}^{\forall }_{\mathit{}nc}\). In: Proceedings of PRICAI 2014, pp. 587–599 (2014)

Vainio, J., Junkkari, M.: SQL-based semantics for path expressions over hierarchical data in relational databases. J. Info. Sci. 40(3), 293–312 (2014)

Weddell, G.: Reasoning about functional dependencies generalized for semantic data models. TODS 17(1), 32–64 (1992)

Author information

Authors and Affiliations

Corresponding authors

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Ma, W., Keet, C.M., Oldford, W., Toman, D., Weddell, G. (2018). The Utility of the Abstract Relational Model and Attribute Paths in SQL. In: Faron Zucker, C., Ghidini, C., Napoli, A., Toussaint, Y. (eds) Knowledge Engineering and Knowledge Management. EKAW 2018. Lecture Notes in Computer Science(), vol 11313. Springer, Cham. https://doi.org/10.1007/978-3-030-03667-6_13

Download citation

DOI: https://doi.org/10.1007/978-3-030-03667-6_13

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-03666-9

Online ISBN: 978-3-030-03667-6

eBook Packages: Computer ScienceComputer Science (R0)