Abstract

Pansharpening integrates a low spatial resolution (LR) multi-spectral (MS) image and a high spatial resolution panchromatic (PAN) image into a high spatial resolution multi-spectral (HRMS) image. Various pansharpening methods have been proposed, each improving on different aspects, yet each also has its own specific shortcomings. For example, methods based on component substitution (CS) tend to cause color distortion, and methods based on multi-resolution analysis (MRA) may lose some details of the PAN image. In this paper, we propose a quality boosting strategy for the pansharpened image obtained from a given method. A+ regressors learned from the pansharpened results of that method and the ground-truth HRMS images are used to overcome its shortcomings. First, the pansharpened images are produced by the ATWT-based pansharpening method. Then, the projection from the pansharpened image to the ideal ground-truth image is learned with adjusted anchored neighborhood regression (A+), and the learned A+ regressors are used to boost the quality of the pansharpened image. The experimental results demonstrate that the proposed algorithm provides superior performance in terms of both objective evaluation and subjective visual quality.

1 Introduction

Because satellite sensors trade off spatial resolution against spectral resolution, earth observation satellites usually provide multi-spectral (MS) images and panchromatic (PAN) images [1]. The MS images have higher spectral diversity across bands, but lower spatial resolution than the corresponding monochrome PAN image [2]. HRMS images are widely used in many applications, such as land-use classification, change detection, map updating and disaster monitoring [3]. To obtain HRMS images, pansharpening integrates the spatial details of the PAN image with the spectral information of the MS image.

Pansharpening algorithms based on the component substitution (CS) strategy are the most classical methods; they replace the structure component of the low spatial resolution multi-spectral (LRMS) image with the PAN image. The intensity-hue-saturation (IHS) transform [4], principal component analysis (PCA) [5] and the Gram–Schmidt (GS) transform [6] are commonly used to extract the structure component of LRMS images. Most CS-based methods are very efficient. Nevertheless, the pansharpened results may suffer from spectral distortion when the structure component of the LRMS image is not exactly equivalent to the corresponding PAN image. In contrast, MRA-based methods are built on the ARSIS concept [7], which holds that the missing spatial details of LRMS images can be obtained from the high frequencies of the PAN image. The stationary wavelet transform (SWT) [8], the à trous wavelet transform (ATWT) [9] and high-pass filtering [10] are commonly employed to extract the high frequencies of the PAN image. MRA-based methods preserve color and spatial details well. However, they easily produce spectral deformations when the algorithm parameters are set incorrectly. Pansharpening methods based on sparse representation [3, 11] and convolutional neural networks [12, 13] have become popular in recent years. These methods have proved effective and have achieved impressive pansharpened results.
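To make the MRA idea concrete, the following is a minimal sketch of à trous detail injection, not the exact ATWT-based method of [9]. It assumes the commonly used B3-spline kernel, reflective border handling, and unit injection gains; the function names `atrous_smooth`, `atwt_details` and `mra_fuse` are hypothetical, and `ms_up` is assumed to be the MS image already interpolated to the PAN grid.

```python
import numpy as np

# B3-spline low-pass kernel commonly used for the a trous transform (assumption).
B3 = np.array([1, 4, 6, 4, 1], dtype=float) / 16.0

def atrous_smooth(img, level):
    """Low-pass filter one level: the kernel is dilated with 2**level - 1 zeros ("holes")."""
    step = 2 ** level
    k = np.zeros(4 * step + 1)
    k[::step] = B3
    pad = len(k) // 2
    out = np.pad(img, pad, mode="reflect")
    # Separable filtering: columns first, then rows.
    out = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, out)
    out = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, out)
    return out

def atwt_details(pan, levels=3):
    """Sum of the wavelet planes = PAN minus its coarsest approximation."""
    approx = pan.astype(float)
    for j in range(levels):
        approx = atrous_smooth(approx, j)
    return pan - approx

def mra_fuse(ms_up, pan, levels=3):
    """Add the PAN high frequencies to every band of the upsampled MS image (H, W, B)."""
    return ms_up + atwt_details(pan, levels)[..., None]
```

With a constant PAN image the extracted details vanish and the fused image equals `ms_up`, which is a quick sanity check that the kernel sums to one.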

All the methods above bring improvements in different aspects. Meanwhile, each method has its own shortcomings, which are hard to overcome by tuning its parameters. We noticed that these shortcomings are specific to a given method, which means that they can be overcome by learning the projection from the pansharpened results of that method to the ground-truth HRMS images. Thus, we propose a quality boosting strategy for pansharpened images based on adjusted anchored neighborhood regression (A+) [14]. The pansharpened images are produced by the ATWT-based pansharpening method. The learned A+ regressors are used to estimate the residual image between the pansharpened result and the ground-truth HRMS image, and this residual image is used to enhance the quality of the pansharpened image. QuickBird satellite images are used to validate the proposed method. The experimental results show that the proposed algorithm outperforms recent traditional pansharpening algorithms in terms of both subjective and objective measures.

The rest of this paper is organized as follows. In Sect. 2, the A+ algorithm is briefly introduced. In Sect. 3, we give the general framework of the proposed algorithm. Experimental results and discussions are presented in Sect. 4. Finally, conclusions are given in Sect. 5.

2 Adjusted Anchored Neighborhood Regression (A+)

In our processing framework, the major task of A+ is to recover the HRMS images from their pansharpened versions. In this section, we briefly review A+, which combines the philosophy of neighbor embedding and sparse representation [14]. The basic assumption of neighbor embedding is that the low-dimensional nonlinear manifolds formed by low-resolution image patches and by their high-resolution counterparts have similar local geometry. A+ uses the sparse dictionary and the neighborhood of each atom in the dictionary to construct the manifold. We start the description of A+ from the stage of extracting pairs of observations.

Patch samples (or features obtained by feature extraction) and the corresponding ground-truth patch samples are collected from the training pool. A compact dictionary \( D = \left\{ {d_{1} ,d_{2} , \ldots ,d_{j} } \right\} \) is learned from the training samples by a dictionary training algorithm. The atoms of the compact dictionary serve as anchored points (AP), each of which corresponds to an A+ regressor. For each atom \( d_{j} \), the K local neighbor samples (denoted by \( S_{l,j} \)) that lie closest to \( d_{j} \) are extracted from the training pool; they densely sample the manifold on which the AP lies. For any input observation x closest to \( d_{j} \), the weight vector \( \delta \) is obtained from the local neighbor samples \( S_{l,j} \) of \( d_{j} \) by solving the optimization problem

\[ \mathop {\min }\limits_{\delta } \left\| {x - S_{l,j} \delta } \right\|_{2}^{2} + \beta \left\| \delta \right\|_{2}^{2} \tag{1} \]

where \( \beta \) is the balance term. The closed-form solution of (1) is

\[ \delta = \left( {S_{l,j}^{T} S_{l,j} + \beta {\rm I}} \right)^{ - 1} S_{l,j}^{T} x \tag{2} \]

where I is the identity matrix. A+ assumes that the image patches and their ground-truth counterparts lie on low-dimensional nonlinear manifolds with similar local geometry, so that a patch in the original feature domain can be reconstructed as a weighted average of its local neighbors using the same weights as in the observation feature domain. Therefore, the corresponding restored sample \( y \) can be recovered by

\[ y = S_{h,j} \delta \tag{3} \]

where \( S_{h,j} \) denotes the high-resolution neighbors corresponding to \( S_{l,j} \). From (2) and (3), we obtain

\[ y = P_{G}^{j} x \tag{4} \]

where the projection matrix \( P_{G}^{j} = S_{h,j} \left( {S_{l,j}^{T} S_{l,j} + \beta {\rm I}} \right)^{ - 1} S_{l,j}^{T} \). We call \( P_{G}^{j} \) the A+ regressor corresponding to the atom \( d_{j} \). We can compute \( \left\{ {P_{G}^{1} ,P_{G}^{2} , \ldots ,P_{G}^{j} } \right\} \) for all the anchored points offline.
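The projection matrix above is an ordinary ridge-regression solution, so it can be computed in a few lines. The sketch below assumes neighbors are stored one sample per column; the function name `aplus_regressor` and the toy dimensions are illustrative only.

```python
import numpy as np

def aplus_regressor(S_l, S_h, beta=0.1):
    """Return P = S_h (S_l^T S_l + beta I)^{-1} S_l^T.

    S_l: (d_l, K) observation-domain neighbors, one sample per column.
    S_h: (d_h, K) corresponding ground-truth-domain neighbors.
    """
    K = S_l.shape[1]
    gram = S_l.T @ S_l + beta * np.eye(K)
    # Solve gram @ W = S_l^T rather than forming an explicit inverse.
    W = np.linalg.solve(gram, S_l.T)   # (K, d_l)
    return S_h @ W                     # (d_h, d_l)

# Toy data (random, for illustration only).
rng = np.random.default_rng(0)
S_l = rng.standard_normal((6, 20))
S_h = rng.standard_normal((9, 20))
P = aplus_regressor(S_l, S_h, beta=0.1)

x = S_l[:, 0]   # an input observation
y = P @ x       # restored sample, a single matrix-vector product
```

Because the regressor is precomputed offline, the online cost per patch is one matrix-vector product, which is the main reason A+ is fast at test time.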

3 Boosting the Quality of Pansharpened Image

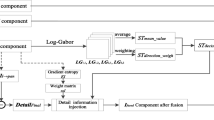

The general framework of our method is shown in Fig. 1. As with A+ in the single image super-resolution problem [14], the proposed method contains two main phases: an offline training phase and an online quality boosting phase. To better fit the quality boosting task for pansharpened images, a slight change is made to A+. Instead of the bicubic interpolation upsampling used in [14], we need a pansharpening method to generate the input images as "starting points". In the training phase, the LRMS images and HR PAN images are first fused by the ATWT-based pansharpening method, which is very efficient. Note that the proposed method is capable of collaborating with other pansharpening methods. We regress from the pansharpened image patch features to the residual image, which is then used to correct the pansharpened image and so overcome the deficiency of the ATWT-based pansharpening method. The pansharpened images and the residual difference images between the pansharpened and the ground-truth images are used as the training data, which emphasizes the error structure between them. We treat both the pansharpened images and the residual difference images patch-wise over a dense grid. For each pansharpened image patch, we compute vertical and horizontal gradient responses and concatenate them lexicographically as gradient features. PCA is used to reduce the dimension of the feature vectors while preserving 99.9% of the energy (as in A+ [14]). Thus, we obtain pairs consisting of patch-wise features \( \left\{ {v_{i} ,i = 1,2, \ldots ,N} \right\} \) extracted from the pansharpened images and patch vectors (normalized by the \( l_{2} \) norm) of the residual difference images.
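The feature extraction step can be sketched as follows. This is an illustrative simplification: it assumes central-difference gradients (`np.gradient`) rather than any specific filter bank, a stride of one pixel, and mean-centered PCA; `gradient_features` and `pca_projection` are hypothetical names.

```python
import numpy as np

def gradient_features(band, patch=3):
    """Dense patch-wise gradient features of one band, shape (N, 2 * patch * patch)."""
    gy, gx = np.gradient(band.astype(float))   # vertical (rows), horizontal (cols)
    H, W = band.shape
    feats = []
    for r in range(H - patch + 1):
        for c in range(W - patch + 1):
            feats.append(np.concatenate([
                gx[r:r + patch, c:c + patch].ravel(),
                gy[r:r + patch, c:c + patch].ravel(),
            ]))
    return np.array(feats)

def pca_projection(feats, energy=0.999):
    """Return (mean, V), where V holds the fewest principal axes keeping `energy`."""
    mean = feats.mean(axis=0)
    _, s, vt = np.linalg.svd(feats - mean, full_matrices=False)
    ratio = np.cumsum(s ** 2) / np.sum(s ** 2)
    k = min(int(np.searchsorted(ratio, energy)) + 1, len(s))
    return mean, vt[:k].T
```

Reduced features are then obtained as `(feats - mean) @ V`; the same `mean` and `V` must be reused in the online phase so that training and test features live in the same space.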

A compact dictionary is learned from \( \left\{ {v_{i} } \right\} \) by the K-SVD dictionary learning method [15]. For each anchored atom \( d_{j} \), the K local neighbor samples (denoted by \( N_{l,j} \)) that lie closest to this atom are extracted from \( \left\{ {v_{i} } \right\} \). The corresponding HR residual patches form the HR neighborhood \( N_{h,j} \). The A+ regressor for \( d_{j} \) is computed as

\[ F_{j} = N_{h,j} \left( {N_{l,j}^{T} N_{l,j} + \beta {\rm I}} \right)^{ - 1} N_{l,j}^{T} \tag{5} \]

We obtain all the A+ regressors \( \left\{ {F_{1} ,F_{2} , \ldots ,F_{j} } \right\} \) in the same way as in (5).
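The offline training loop over all anchored atoms can be sketched as below. The dictionary is assumed to be given (for example from K-SVD [15], which is not implemented here), neighbors are selected by Euclidean distance, and samples are stored one per row; `train_regressors` is a hypothetical name.

```python
import numpy as np

def train_regressors(D, V, R, K=32, beta=0.1):
    """Compute one regressor per atom.

    D: (J, d) dictionary atoms (assumed already learned).
    V: (N, d) features of pansharpened patches.
    R: (N, d_h) corresponding residual patches.
    Returns an array of shape (J, d_h, d).
    """
    regs = []
    for atom in D:
        dist = np.linalg.norm(V - atom, axis=1)
        idx = np.argsort(dist)[:K]       # K nearest training samples
        N_l = V[idx].T                   # (d, K)
        N_h = R[idx].T                   # (d_h, K)
        gram = N_l.T @ N_l + beta * np.eye(len(idx))
        regs.append(N_h @ np.linalg.solve(gram, N_l.T))
    return np.stack(regs)
```

When the feature-to-residual relation is exactly linear and the balance term is small, every regressor recovers that linear map, which gives a simple way to check an implementation on synthetic data.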

During the quality boosting phase, the features \( \left\{ {u_{i} } \right\} \) are extracted from the input ATWT-based pansharpened image using the same feature extraction method as in the training phase. For each feature \( u_{i} \), A+ searches \( D \) for the nearest AP \( d_{k} \), that is, the most correlated atom, with nearness measured by Euclidean distance. The corresponding HR residual patch \( r_{i} \) is obtained by

\[ r_{i} = F_{k} u_{i} \tag{6} \]

Then, the HR residual image \( R \) is reconstructed by averaging the overlapping patches, and the final HRMS image Y is recovered by

\[ Y = P + R \tag{7} \]

where \( P \) is the input pansharpened image.
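The online phase for a single band can be sketched as follows. The sketch assumes that the features are given in raster order of the dense patch grid with a stride of one pixel, and it omits the patch normalization; `boost_band` is a hypothetical name.

```python
import numpy as np

def boost_band(P, U, D, regs, patch=3):
    """Boost one pansharpened band.

    P: (H, W) pansharpened band.
    U: (N, d) features, one per patch in raster order (stride 1).
    D: (J, d) dictionary atoms; regs: (J, patch*patch, d) regressors.
    Returns (Y, R): boosted band and reconstructed residual image.
    """
    H, W = P.shape
    R = np.zeros((H, W))
    count = np.zeros((H, W))
    # Squared Euclidean distance between every feature and every atom.
    d2 = ((U[:, None, :] - D[None, :, :]) ** 2).sum(axis=2)
    nearest = d2.argmin(axis=1)
    i = 0
    for r in range(H - patch + 1):
        for c in range(W - patch + 1):
            res = regs[nearest[i]] @ U[i]              # residual patch vector
            R[r:r + patch, c:c + patch] += res.reshape(patch, patch)
            count[r:r + patch, c:c + patch] += 1
            i += 1
    R /= count                                         # average overlapping patches
    return P + R, R
```

Averaging the overlapping patch estimates suppresses blocking artifacts at patch borders, at the cost of a slight smoothing of the residual.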

In addition, we use a post-processing method called iterative back projection, which originates from computed tomography and was applied to super-resolution in [16], to eliminate the inconsistency between the boosted image and the input MS image:

\[ Y_{t + 1} = Y_{t} + \left( {\left( {I_{MS} - MY_{t} } \right) \uparrow s} \right) * p \tag{8} \]

We set \( Y_{0} = Y \); p is a Gaussian filter with standard deviation 1 and filter size 5; M is a down-sampling operator and \( I_{MS} \) is the input MS image of the pansharpening method; t is the iteration number; \( ( \cdot ) \uparrow s \) denotes up-sampling by a factor of s.
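Iterative back projection for one band can be sketched as below. The concrete operators are assumptions made for illustration: block averaging for the down-sampling operator M, nearest-neighbor replication for the up-sampling, and edge padding for the Gaussian filter p; all function names are hypothetical.

```python
import numpy as np

def gaussian_kernel(size=5, sigma=1.0):
    ax = np.arange(size) - (size - 1) / 2
    k = np.exp(-ax ** 2 / (2 * sigma ** 2))
    return k / k.sum()

def smooth(img, k):
    """Separable Gaussian filtering with edge padding; output has the input shape."""
    pad = len(k) // 2
    out = np.pad(img, pad, mode="edge")
    out = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, out)
    out = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, out)
    return out

def downsample(img, s):
    """Block-average down-sampling (assumed form of M); H and W must be multiples of s."""
    H, W = img.shape
    return img.reshape(H // s, s, W // s, s).mean(axis=(1, 3))

def upsample(img, s):
    """Nearest-neighbor up-sampling by a factor of s (assumption)."""
    return np.repeat(np.repeat(img, s, axis=0), s, axis=1)

def back_project(Y, I_ms, s=4, iters=10):
    """Repeatedly push the low-resolution error back into the high-resolution band."""
    k = gaussian_kernel(size=5, sigma=1.0)
    for _ in range(iters):
        err = I_ms - downsample(Y, s)
        Y = Y + smooth(upsample(err, s), k)
    return Y
```

Each iteration shrinks the mismatch between the down-sampled estimate and the input MS band, so a handful of iterations is usually enough under these operator choices.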

4 Experimental Results

We use QuickBird remote sensing images in the experiments [17], and evaluate performance by comparison with different pansharpening methods. According to Wald's protocol [18], any synthetic image should be as close as possible to the image that the corresponding sensor would acquire at the highest spatial resolution. Therefore, the experiments are carried out on degraded data sets, which are down-sampled versions of the original MS and PAN images, with the original MS images used as reference images. The objective measures reviewed in [20], namely the correlation coefficient (CC), the erreur relative globale adimensionnelle de synthèse (ERGAS), the Q4 index and the spectral angle mapper (SAM), are used to quantitatively measure the quality of the fused and boosted images.
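Three of these indices are simple to state in code. The sketch below follows their usual definitions (band-averaged Pearson correlation, mean spectral angle in degrees, and ERGAS with a resolution ratio of 4); Q4 is omitted because it requires quaternion statistics. Images are assumed to be arrays of shape (H, W, B), and the function names are hypothetical.

```python
import numpy as np

def cc(ref, fused):
    """Mean over bands of the Pearson correlation coefficient."""
    vals = [np.corrcoef(ref[..., b].ravel(), fused[..., b].ravel())[0, 1]
            for b in range(ref.shape[-1])]
    return float(np.mean(vals))

def sam_degrees(ref, fused, eps=1e-12):
    """Mean angle (degrees) between the spectral vectors of corresponding pixels."""
    dot = (ref * fused).sum(axis=-1)
    norm = np.linalg.norm(ref, axis=-1) * np.linalg.norm(fused, axis=-1)
    ang = np.arccos(np.clip(dot / (norm + eps), -1.0, 1.0))
    return float(np.degrees(ang.mean()))

def ergas(ref, fused, ratio=4):
    """100 / ratio * sqrt(mean over bands of RMSE_b^2 / mean_b^2)."""
    rmse2 = ((ref - fused) ** 2).mean(axis=(0, 1))
    mean2 = ref.mean(axis=(0, 1)) ** 2
    return float(100.0 / ratio * np.sqrt((rmse2 / mean2).mean()))
```

Higher CC is better, while lower SAM and ERGAS are better; SAM is insensitive to a per-pixel scaling of the spectrum, so it isolates spectral distortion from intensity errors.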

QuickBird is a high-resolution remote sensing satellite that provides four-band MS images with 2.88 m spatial resolution and PAN images with 0.7 m spatial resolution. In our experiments, we down-sample the 2.88 m four-band MS images and the 0.7 m PAN images by a factor of 4 to obtain the LRMS and PAN images used as input to the pansharpening methods, and the original 2.88 m MS images are used as reference images. The sizes of the LRMS and PAN images are \( 125 \times 125 \) and \( 500 \times 500 \), respectively, and the patch size is \( 3 \times 3 \). The ATWT with three decomposition levels is employed to produce the pansharpened images (the ATWT results). The number of iterations and the maximal sparsity of the K-SVD algorithm are 20 and 8, respectively. The influence of dictionary size, balance term and neighborhood size is shown in Fig. 2. The standard settings are a dictionary size of 1024, a neighborhood size of 2048 and a balance term of 0.1. Figure 2 shows that the index values improve and then stabilize as the dictionary size, balance term and neighborhood size increase. Therefore, we set the dictionary size, balance term and neighborhood size to 4096, 1 and 8192, respectively. We apply our method to the ATWT pansharpened image and compare the result with six well-known pansharpening methods: SVT [19], SWT [8], ATWT [9], GS, generalized IHS (GIHS) and the Brovey transform (BT). The GS, GIHS and BT implementations used in our experiments are taken from [20]. In GS, the LR PAN image is produced by pixel averaging of the LRMS bands. In SVT, \( \sigma^{2} \) in the Gaussian RBF kernel is set to 0.6 and the parameter \( \gamma \) of the mapped LS-SVM is set to 1, which gives the best results. In SWT, we use a three-level decomposition with Daubechies wavelet bases with six vanishing moments.

Figures 3 and 4 present two examples of the results of the proposed method and the other methods. Comparing the results in Figs. 3 and 4 visually with the corresponding reference images, we find that: (1) the results of GIHS and BT suffer from spectral distortion, although they improve the spatial resolution to some extent; (2) the SVT and GS results are blurred to some degree, with the SVT result clearer than the GS result; (3) SWT and ATWT effectively improve the spatial resolution, but their results look unnatural even though spectral information is preserved; (4) the proposed boosting method improves the spectral quality of the ATWT result, which makes the image look better while still providing high spatial resolution. Two complete sets of experimental results are shown in Figs. 3 and 4. For the other results, owing to space limitations, only the reference images, the ATWT results and the results of the proposed method are given in Fig. 5.

The quantitative evaluation results for Figs. 3, 4 and 5 are shown in Table 1, with the best result for each index in bold. CC measures the correlation between the reference image and the pansharpened image; a higher CC value means better performance. In Table 1, our method provides the highest CC values for all experimental images. SAM measures the spectral similarity between the reference image and the pansharpened image. The proposed method also provides the best SAM results except for Fig. 4, where its SAM value still improves clearly on that of the ATWT result. ERGAS provides an overall spectral quality measure of the pansharpened image by measuring its difference from the reference image, and the Q4 index jointly measures the spectral and spatial quality of the pansharpened image. The ERGAS and Q4 values of the proposed method are also the best for all experimental images. This is mainly because the proposed method learns the difference between the pansharpened image and the reference image and generates a residual image that compensates for the weaknesses of the pansharpening method and enriches the information of the pansharpened image. In our experiments, the proposed method effectively improves the spectral and spatial quality of the ATWT pansharpened image. Compared with the ATWT result, all index values improve with our method, and after boosting the ATWT pansharpened image becomes the best result among these pansharpening approaches.

5 Conclusion

In this paper, we presented a novel quality boosting algorithm for pansharpened images based on A+, which learns a set of A+ regressors mapping pansharpened MS images to HRMS residual images. The residual images are used to compensate the pansharpened image produced by a pansharpening method. The experiments, evaluated visually and quantitatively on QuickBird data against GS, GIHS, BT, ATWT, SVT and SWT, show that the proposed method not only improves spatial resolution but also effectively enhances spectral quality. Note that the proposed method can collaborate with any other existing pansharpening algorithm, and the quality of the output is expected to improve further if a more advanced pansharpening method is used as the pre-processing step.

References

1. Yuan, Q., Wei, Y., Meng, X., Shen, H., Zhang, L.: A multiscale and multidepth convolutional neural network for remote sensing imagery pan-sharpening. IEEE J. Sel. Top. Appl. Earth Observations Remote Sens. PP(99), 1–12 (2018)

2. Garzelli, A.: A review of image fusion algorithms based on the super-resolution paradigm. Remote Sens. 8(10), 797 (2016)

3. Han, C., Zhang, H., Gao, C., Jiang, C., Sang, N., Zhang, L.: A remote sensing image fusion method based on the analysis sparse model. IEEE J. Sel. Top. Appl. Earth Observations Remote Sens. 9(1), 439–453 (2016)

4. Choi, M.: A new intensity-hue-saturation fusion approach to image fusion with a tradeoff parameter. IEEE Trans. Geosci. Remote Sens. 44(6), 1672–1682 (2006)

5. Shah, V.P., Younan, N.H., King, R.L.: An efficient pansharpening method via a combined adaptive PCA approach and contourlets. IEEE Trans. Geosci. Remote Sens. 46(5), 1323–1335 (2008)

6. Laben, C.A., Brower, B.V.: Process for enhancing the spatial resolution of multispectral imagery using pan-sharpening. US Patent, Eastman Kodak Company (2000)

7. Ranchin, T., Wald, L.: Fusion of high spatial and spectral resolution images: the ARSIS concept and its implementation. Photogram. Eng. Remote Sens. 66(1), 49–61 (2000)

8. Li, S.: Multisensor remote sensing image fusion using stationary wavelet transform: effects of basis and decomposition level. Int. J. Wavelets Multiresolut. Inf. Process. 6(01), 37–50 (2008)

9. Vivone, G., Restaino, R., Mura, M.D., Licciardi, G., Chanussot, J.: Contrast and error-based fusion schemes for multispectral image pansharpening. IEEE Geosci. Remote Sens. Lett. 11(5), 930–934 (2013)

10. Ghassemian, H.: A retina based multi-resolution image-fusion. In: Proceedings of IEEE International Geoscience and Remote Sensing Symposium, vol. 2, pp. 709–711 (2001)

11. Ghamchili, M., Ghassemian, H.: Panchromatic and multispectral images fusion using sparse representation. In: Artificial Intelligence and Signal Processing Conference, pp. 80–84 (2017)

12. Yang, J., Fu, X., Hu, Y., Huang, Y., Ding, X., Paisley, J.: PanNet: A deep network architecture for pansharpening. In: IEEE International Conference on Computer Vision, pp. 1753–1761. IEEE Computer Society (2017)

13. Wei, Y., Yuan, Q., Shen, H., Zhang, L.: Boosting the accuracy of multispectral image pansharpening by learning a deep residual network. IEEE Geosci. Remote Sens. Lett. 14(10), 1795–1799 (2017)

14. Timofte, R., Smet, V.D., Gool, L.V.: A+: Adjusted anchored neighborhood regression for fast super-resolution. In: Asian Conference on Computer Vision, vol. 9006, pp. 111–126. Springer, Cham (2014)

15. Aharon, M., Elad, M., Bruckstein, A.: K-SVD: an algorithm for designing overcomplete dictionaries for sparse representation. IEEE Trans. Signal Process. 54(11), 4311–4322 (2006)

16. Yang, J., Wright, J., Huang, T.S., Ma, Y.: Image super-resolution via sparse representation. IEEE Trans. Image Process. 19(11), 2861–2873 (2010)

17. DigitalGlobe: QuickBird scene 000000185940_01_P001, Level Standard 2A, DigitalGlobe, Longmont, Colorado, 1/20/2002 (2003)

18. Wald, L., Ranchin, T., Mangolini, M.: Fusion of satellite images of different spatial resolutions: assessing the quality of resulting images. Photogram. Eng. Remote Sens. 63(6), 691–699 (1997)

19. Zheng, S., Shi, W.Z., Liu, J., Tian, J.: Remote sensing image fusion using multiscale mapped LS-SVM. IEEE Trans. Geosci. Remote Sens. 46(5), 1313–1322 (2008)

20. Vivone, G., Alparone, L., Chanussot, J., Mura, M.D., Garzelli, A., Licciardi, G.A., et al.: A critical comparison among pansharpening algorithms. IEEE Trans. Geosci. Remote Sens. 53(5), 2565–2586 (2015)

Acknowledgements

This work is supported by the National Natural Science Foundation of China (Nos. 61871210, 61102108), the Scientific Research Fund of Hunan Provincial Education Department (Nos. 16B225, YB2013B039), the Natural Science Foundation of Hunan Province (No. 2016JJ3106), the Young Talents Program of the University of South China, the Construction Program of Key Disciplines in USC (No. NHXK04), the Scientific Research Fund of Hengyang Science and Technology Bureau (No. 2015KG51), the Postgraduate Research and Innovation Project of Hunan Province in 2018, and the Postgraduate Science Fund of USC.

Copyright information

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Wang, X., Yang, B. (2018). Boosting the Quality of Pansharpened Image by Adjusted Anchored Neighborhood Regression. In: Lai, JH., et al. Pattern Recognition and Computer Vision. PRCV 2018. Lecture Notes in Computer Science(), vol 11256. Springer, Cham. https://doi.org/10.1007/978-3-030-03398-9_25

DOI: https://doi.org/10.1007/978-3-030-03398-9_25

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-03397-2

Online ISBN: 978-3-030-03398-9

eBook Packages: Computer Science, Computer Science (R0)