Abstract

We address the problem of image registration when speed is more important than accuracy. We present a series of simplifications and approximations applicable to almost any pixel-based image similarity criterion. We first sample the image at a set of sparse keypoints in a direction normal to image edges and then create a piecewise linear convex approximation of the individual contributions. We obtain a linear program for which a global optimum can be found very quickly by standard algorithms. The linear program formulation also allows for an easy addition of regularization and trust-region bounds. We have tested the approach for affine and B-spline transformation representations, but any linear model can be used. Larger deformations can be handled by multiresolution. We show that our method is much faster than pixel-based registration, with only a small loss of accuracy. In comparison to standard keypoint-based registration, our method is applicable even if individual keypoints cannot be reliably identified and matched.

This work was supported by the Czech Science Foundation project 17-15361S.

1 Introduction

Image registration [1] is one of the key image analysis tasks, especially in medical imaging. There are many scenarios in which image registration needs to be fast: consider matching preoperative and intraoperative images during surgery, interactive change detection of CT or MRI data for a busy radiologist, deformation compensation or 3D alignment of large histological slices for a busy pathologist, or processing large amounts of images from today’s high-throughput imaging methods. On the other hand, sub-pixel or even pixel-level accuracy is not always required, which gives us the possibility to trade accuracy for speed. In this work, we present such a method.

Feature-based methods (e.g. [2]) are not always suitable for biomedical images, since there are few reliable and distinguishable features (e.g. corners) and weak constraints on the deformation field. The other class of registration methods, based on minimizing a pixel-based image similarity criterion, is often slow. Luckily, it turns out that criterion evaluation can be simplified without compromising registration accuracy too much. Only a subset of the pixels can be used to evaluate the criterion [3] or its gradient [4], possibly with more weight given to pixels with a high gradient [5]. In the extreme, only edge pixels would be sampled, which leads to the idea of registering images by their segmentations, which can be done e.g. by descriptor matching [6] or region boundary matching [7].

We assume that correspondences can be found reliably between regions but not between points in their interior. We represent the criterion as a sum of contributions from a set of sampling points placed sparsely on the region boundaries, as in [8]. Our main new insight is that if the region boundary moves in the normal direction, the criterion change is piecewise linear. This allows us to formulate the optimization as a linear program (LP), which can be solved very efficiently. We present two variants of our registration method: LPSEG, based on a segmentation, and LPNOSEG, based on points of high gradient.

1.1 Other Related Work

Taylor and Bhusnurmath [9] create a global piecewise linear approximation of the similarity criterion, considering all pixels and all possible displacements, which is a lower bound of the true criterion. This is robust but slow. Ben-Ezra et al. [10] start by selecting a small set of keypoints using optical flow and then fit a motion model using linear programming. This method is capable of running at several images per second but requires the motion to be small and the motion model to be simple. Linear programming can also be used for keypoint matching [11].

2 Method

2.1 Similarity Criterion

A pixel dissimilarity measure \(\varrho \) induces an image dissimilarity criterion

where \(\varOmega \subseteq \mathbb {R}^d\) is the image domain and f and g can be intensities, texture features, or segmentation labels. This formulation directly includes criteria such as the sum of squared differences (SSD) or the sum of absolute differences (SAD), while other popular dissimilarity measures such as mutual information can be represented approximately, with \(\varrho \) depending on the images and updated occasionally. During registration, we calculate the criterion \(J_c(f, g')\) between a reference image f and a transformed version \(g'({\varvec{\mathbf x}})=\bigl (g \circ T\bigr )({\varvec{\mathbf x}})=g\bigl (T({\varvec{\mathbf x}})\bigr )\) of the moving image g. Following [8], we assume that we are given a set of M points \({\varvec{\mathbf p}}_i\) on the boundaries between regions in the image f, with normals \({\varvec{\mathbf n}}_i\). We are also given an a priori estimate \(T_0\) of T, which is locally close to linear. If \(T_0 \approx T\), then in g there is also a boundary close to a point \({\varvec{\mathbf v}}_i=T_0 ({\varvec{\mathbf p}}_i)\) with a scaled normal \({\varvec{\mathbf m}}_i= \bigl (\nabla T_0({\varvec{\mathbf p}}_i)\bigr ) {\varvec{\mathbf n}}_i\). The displacement along the boundary can be neglected, since both f and g are assumed to change only in the normal direction. Hence, the transformation T can be approximated by its normal projection \(\xi _i\) at points \({\varvec{\mathbf p}}_i\) (see Fig. 1, left)

and the criterion \(J_c\) can be approximated as a sum of contributions at \({\varvec{\mathbf p}}_i\),

The individual contributions are calculated by integration along the normals

with \(\sigma _i\) being the area corresponding to \({\varvec{\mathbf p}}_i\) and \(h_{\text {max}}\) the region width.
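The displayed equations of this subsection are missing from this copy of the text. The block below is a tentative reconstruction from the surrounding definitions only: the criterion induced by \(\varrho \), the normal projection of T, the sum of contributions, and the integral along the normal. The exact form and numbering in the original may differ, and the boldface notation is simplified.

```latex
\[
\begin{aligned}
J_c(f,g) &= \int_{\varOmega} \varrho\bigl(f(\mathbf{x}),\, g(\mathbf{x})\bigr)\,\mathrm{d}\mathbf{x}, \\
T(\mathbf{p}_i) &\approx \mathbf{v}_i + \mathbf{m}_i\,\xi_i, \\
J_c(f, g\circ T) &\approx \sum_{i=1}^{M} D_i(\xi_i), \\
D_i(\xi_i) &= \sigma_i \int_{-h_{\text{max}}}^{h_{\text{max}}}
  \varrho\Bigl(f(\mathbf{p}_i + \mathbf{n}_i h),\;
               g\bigl(T(\mathbf{p}_i + \mathbf{n}_i h)\bigr)\Bigr)\,\mathrm{d}h .
\end{aligned}
\]
```

Under this reading, each \(D_i\) depends on the unknown transformation only through the scalar shift \(\xi _i\), which is what later makes a per-point one-dimensional approximation possible.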

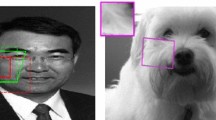

In the LPSEG method, a simple greedy strategy can provide sampling points \({\varvec{\mathbf p}}_i\) on the class boundaries and their normals [8], given a pixel- or superpixel-based segmentation. The LPNOSEG method differs in choosing points of high gradient, pruned with non-maxima suppression, and with \({\varvec{\mathbf n}}_i\) being the gradient direction. See Fig. 3ab for examples.

2.2 Piecewise Linear Approximation

Using the formula \(T({\varvec{\mathbf p}}_i+{\varvec{\mathbf n}}_i h)\approx {\varvec{\mathbf v}}_i + {\varvec{\mathbf m}}_i(\xi _i + h)\), the integral (5) can be approximated by a sum over h, sampling at 1 pixel intervals:

If \({\varvec{\mathbf p}}_i\) is at a boundary of a segmentation f, then \(f({\varvec{\mathbf p}}_i+{\varvec{\mathbf n}}_i h)\) is going to be equal to some \(f^-\) for \(h<0\) and otherwise to \(f^+\). Similarly, we assume that \(g({\varvec{\mathbf v}}_i+{\varvec{\mathbf m}}_i h)\) is equal to some \(g^-\) for \(h<\zeta _i\) and otherwise to \(g^+\), where \(\zeta _i\) is the unknown normal shift at \({\varvec{\mathbf p}}_i\) due to the difference between \(T_0\) and the true transformation.

The (continuous) contribution \(D_i(\xi _i)\) is a convolution of these step functions:

For \(|t|>h_{\text {max}}\), the contribution \(D_i(\xi _i)\) is constant and does not bring any information. This is avoided by choosing a suitable \(\xi _{\text {max}}\), ensuring that \(D_i(\xi _i)\) is only evaluated for \(|\xi _i|\le \xi _{\text {max}}\), assuming that the true shift also satisfies \(|\zeta _i|\le \xi _{\text {max}}\) and choosing \(h_{\text {max}}\ge 2\xi _{\text {max}}\).
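The step-function argument above can be checked numerically. The following is a minimal sketch, assuming a squared-difference \(\varrho \), unit area \(\sigma _i=1\), and ideal two-level profiles; the function names `step` and `contribution` are hypothetical and not taken from the original work.

```python
def step(h, edge, low, high):
    """Ideal boundary profile: `low` for h < edge, `high` otherwise."""
    return low if h < edge else high


def contribution(xi, zeta, h_max, f_vals=(0.0, 1.0), g_vals=(0.0, 1.0), sigma=1.0):
    """Discrete contribution D_i(xi): sum over h of rho(f(h), g(xi + h)).

    Assumptions: rho is the squared difference, samples are 1 pixel apart,
    the edge of f sits at h = 0 and the edge of g at the (unknown) shift zeta.
    """
    total = 0.0
    for h in range(-h_max, h_max + 1):
        f = step(h, 0, *f_vals)          # reference profile along the normal
        g = step(xi + h, zeta, *g_vals)  # moving profile, displaced by xi
        total += (f - g) ** 2
    return sigma * total


zeta, xi_max = 3, 5
h_max = 2 * xi_max                       # h_max >= 2 * xi_max, as in the text
shifts = list(range(-xi_max, xi_max + 1))
D = [contribution(xi, zeta, h_max) for xi in shifts]
print(list(zip(shifts, D)))
```

For these ideal profiles the sampled contribution equals \(|\xi -\zeta |\): it is convex, piecewise linear, and attains its minimum at the true shift, as long as the linear ramps are not cut off by \(h_{\text {max}}\).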

Since the parameters in (7) are in general not known, we shall estimate them as follows: Evaluate \(D_i(\xi )\) for all shifts \(\xi =-\xi _{\text {max}}\dots \xi _{\text {max}}\) using (6). Take the location of the minimum as the shift estimate and find the slope on each side by least-squares fitting:

and similarly for \(\hat{u}_i^-\). This is both faster and more robust than fitting all four parameters. Note that the value of \(u^0_i\) is not needed and can be dropped.
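A minimal sketch of this estimation step follows. It assumes that the fitted model is a "V" anchored at the sampled minimum, with non-negative slopes \(\hat{u}_i^+\) and \(\hat{u}_i^-\) on the right and left of \(\hat{\zeta }_i\); the function name `fit_piecewise_linear` and this sign convention are assumptions, since the original formula is not available here.

```python
def fit_piecewise_linear(shifts, D):
    """Estimate (zeta_hat, u_plus, u_minus) from sampled contributions.

    zeta_hat is the shift with the smallest sampled contribution. Each slope
    is the least-squares slope of a line through the minimum, fitted to the
    samples on one side only: u = sum(t * d) / sum(t * t), where
    t = |xi - zeta_hat| and d = D(xi) - D(zeta_hat).
    """
    k = min(range(len(D)), key=D.__getitem__)
    zeta_hat, d_min = shifts[k], D[k]

    def slope(side):
        pts = [(abs(x - zeta_hat), d - d_min)
               for x, d in zip(shifts, D) if side * (x - zeta_hat) > 0]
        denom = sum(t * t for t, _ in pts)
        # No samples on this side (minimum at the border): return a flat slope.
        return sum(t * d for t, d in pts) / denom if denom > 0 else 0.0

    return zeta_hat, slope(+1), slope(-1)


# Synthetic check: offset 7, minimum at xi = 1, slopes 2 (right) and 0.5 (left).
shifts = list(range(-5, 6))
D = [7 + 2.0 * max(x - 1, 0) + 0.5 * max(1 - x, 0) for x in shifts]
print(fit_piecewise_linear(shifts, D))
```

Because the minimum is subtracted before fitting, both slopes are non-negative by construction, so the fitted model is always convex. The offset \(u^0_i\) never enters the result, in line with the remark that it can be dropped.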

2.3 Geometric Model and Regularization

A geometrical transformation \(T:\mathbb {R}^d \rightarrow \mathbb {R}^d\) is represented as a linear combination

with some basis functions \(\varphi _j\) and N scalar coefficients \(c_j\). This includes many practically used transformation functions, such as an affine transformation, radial basis functions etc. For nonlinear (elastic) transformations, we shall use uniformly spaced B-splines [12]. The image registration problem is then:

with the data criterion \(J({\varvec{\mathbf c}})=J\bigl (T({\varvec{\mathbf c}})\bigr )\) defined by (4) and (9). A regularization R is needed because the image is usually not completely covered by the sampling points \({\varvec{\mathbf p}}_i\) and some coefficients \(c_j\) might therefore not be completely determined. We have chosen to penalize the \(\ell _1\) norms of the coefficients and their first-order finite differences along coordinate axes [13]:

2.4 Linear Program

We can now proceed to formulate the linear program, which will help us recover the optimal transformation parameters \(c_j\), given the contribution approximation parameters \(\hat{u}^+_i\), \(\hat{u}^-_i\), \(\hat{\zeta }_i\) (8). The criterion (11) is written as

where the absolute values from (12) were replaced using inequalities

where (j, k) are pairs of indices of neighboring B-spline coefficients c. Similarly, we replace the piecewise linear model (7) by

It remains to eliminate \(\xi _i\) from the above equations, using the linear relationship between the shifts \(\xi _i\) and transformation coefficients \(c_j\) from (3) and (9)

The resulting LP with approximately \((2+d)N+M\) variables (neglecting the edge cases), namely \(D_i\), \(c_j\), \(r_{jk}\) and \(s_j\), is solved using the simplex or interior point methods.
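To make the construction concrete, here is a minimal sketch of such an LP using `scipy.optimize.linprog` with the HiGHS backend, which is a stand-in for the solvers used in the experiments. Several things are assumptions: the matrix `A` (hypothetical name) collects the linear relationship \(\xi = A{\varvec{\mathbf c}}\), the piecewise linear model is encoded as \(D_i \ge \hat{u}_i^+(\xi _i - \hat{\zeta }_i)\) and \(D_i \ge \hat{u}_i^-(\hat{\zeta }_i - \xi _i)\), only the \(\ell _1\) penalty on the coefficients (variables \(s_j\)) is included, and the finite-difference variables \(r_{jk}\) are omitted for brevity.

```python
import numpy as np
from scipy.optimize import linprog


def solve_registration_lp(A, u_plus, u_minus, zeta, lam=1e-3, xi_max=10.0):
    """Minimize sum_i D_i + lam * sum_j s_j over variables [c, D, s]."""
    A = np.asarray(A, dtype=float)
    M, N = A.shape
    up = np.asarray(u_plus, dtype=float)[:, None]
    um = np.asarray(u_minus, dtype=float)[:, None]
    zeta = np.asarray(zeta, dtype=float)
    I_M, I_N = np.eye(M), np.eye(N)
    Z_MM, Z_MN, Z_NM = np.zeros((M, M)), np.zeros((M, N)), np.zeros((N, M))

    cost = np.concatenate([np.zeros(N), np.ones(M), lam * np.ones(N)])
    A_ub = np.block([
        [up * A, -I_M, Z_MN],    # u+_i (A_i c - zeta_i) <= D_i
        [-um * A, -I_M, Z_MN],   # u-_i (zeta_i - A_i c) <= D_i
        [I_N, Z_NM, -I_N],       #  c_j <= s_j
        [-I_N, Z_NM, -I_N],      # -c_j <= s_j
        [A, Z_MM, Z_MN],         # trust region:  A_i c <= xi_max
        [-A, Z_MM, Z_MN],        # trust region: -A_i c <= xi_max
    ])
    b_ub = np.concatenate([
        up[:, 0] * zeta, -um[:, 0] * zeta,
        np.zeros(2 * N), np.full(2 * M, xi_max),
    ])
    # linprog bounds default to x >= 0, so the coefficients c must be freed.
    bounds = [(None, None)] * N + [(0, None)] * (M + N)
    res = linprog(cost, A_ub=A_ub, b_ub=b_ub, bounds=bounds, method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    return res.x[:N]


# Toy problem: 2-D translation, T_0 = identity, unit normals in 8 directions.
angles = np.linspace(0.0, np.pi, 8, endpoint=False)
normals = np.stack([np.cos(angles), np.sin(angles)], axis=1)
true_shift = np.array([2.0, -1.0])
zeta = normals @ true_shift              # normal component of the true shift
c = solve_registration_lp(normals, np.ones(8), np.ones(8), zeta)
print(c)
```

In this toy setting each sampling point only constrains the translation along its own normal, yet the LP should recover the full shift because the normals span the plane. The trust-region rows illustrate how the bounds \(|\xi _i|\le \xi _{\text {max}}\) enter as additional linear inequalities.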

2.5 Iteration and Multiresolution

Iteration: When the LP is solved, the resulting sampling point positions \(T({\varvec{\mathbf p}}_i)\) are compared with the sampled positions used in (6). If more than a given percentage (e.g. \(10\,\%\)) of the points are considered unacceptable, the procedure is repeated with the initial transformation \(T_0\) replaced by T. A point \(T({\varvec{\mathbf p}}_i)\) is unacceptable if its associated shift \(\xi _i\) is close to the assumed maximum amplitude \(\xi _{\text {max}}\) (usually 5–10 pixels) or if the angular difference with \(T_0({\varvec{\mathbf p}}_i)\) is larger than a given threshold (e.g. \(60^\circ \)).
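The acceptance test can be sketched as follows. The function name, the `closeness` factor that defines "close to \(\xi _{\text {max}}\)", and the assumption that the angular differences are precomputed in degrees are all illustrative choices, not details taken from the original implementation.

```python
def needs_another_iteration(xi, angle_deg, xi_max, closeness=0.9,
                            max_angle_deg=60.0, max_bad_fraction=0.10):
    """Return True if too many sampling points are unacceptable.

    A point is counted as unacceptable if its shift magnitude reaches
    `closeness * xi_max` (hypothetical threshold) or if its angular
    difference exceeds `max_angle_deg`.
    """
    bad = sum(1 for x, a in zip(xi, angle_deg)
              if abs(x) >= closeness * xi_max or a > max_angle_deg)
    return bad > max_bad_fraction * len(xi)


shifts = [0.5] * 8 + [9.5, 9.8]          # two of ten points near xi_max = 10
angles = [5.0] * 10
print(needs_another_iteration(shifts, angles, xi_max=10.0))
```

With two of ten points near the trust-region bound, the 10 % threshold is exceeded and the sketch requests another iteration with \(T_0\) replaced by T.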

Multiresolution: At each level, the image size is reduced by a factor of two and the resulting transformation is used as the initial transformation \(T_{0}\) at the next finer level. The number of sampling points is also reduced at each level by taking every second point, unless this would leave fewer than a desired minimum number of points. The final multiresolution aspect is that we usually start with an affine transformation and progressively shift to B-spline models with more and more parameters. This is controlled by the desired knot spacing.

3 Experiments

The first example in Fig. 2 shows histological slices and their alignment by the LPSEG algorithm. For these images of \(\approx 800\times 1100\) pixels, the complete registration process takes about \(2\,\text {s}\). However, most of the time is taken by the segmentation; once sampling points are extracted, the registration itself takes only \(0.2\,\text {s}\).

Reference image with sampling points (a) and its segmentation (b) with independently extracted sampling points, segmentation overlay before registration (c). Moving image (d), its segmentation (e), and segmentation overlay after registration (f). Image overlay before registration (g), image overlay after LPSEG registration using segmentation (h) and using LPNOSEG without segmentation (i).

Images in Fig. 3 are already much larger (\(\approx 5000\times 3000\) pixels) and the running time of the LPSEG method has grown to \(36\,\text {s}\), with \(33\,\text {s}\) taken by the segmentation and only \(3\,\text {s}\) by the registration itself. The segmentation-less LPNOSEG is faster, requiring around \(20\,\text {s}\), with \(15\,\text {s}\) to identify the sampling points and \(5\,\text {s}\) for the registration itself. In this case, the registration by LPNOSEG is better (see the bottom edge of Fig. 3hi).

The graphs in the right part of Fig. 1 show the dependency of the running time and the mean registration error (measured using manually identified landmarks [8]) on the number of sampling points for the LPNOSEG algorithm. Note that the error decreases with an increasing number of sampling points and then stagnates, as further points are probably not sufficiently reliable or accurate. The running time increases with the number of points, but only slowly; the dominant part seems to be the preprocessing.

Table 1 (right) compares the GLPK and Gurobi LP solvers. Finally, the left part of Table 1 compares the speed and accuracy of our two proposed methods (LPSEG, LPNOSEG) with the alternatives mentioned in [8] on a subset of the same dataset with images of size around \(5000\times 3000\) (as in Fig. 3). FRSEG [8] (fast registration of segmented images) is similar to LPSEG but does not use the LP formulation. We see that LPSEG is fast and LPNOSEG even faster. The only other method with comparable speed is the GPU-accelerated version of RNiftyReg. At the same time, our methods are accurate, outperformed only by the slower FRSEG, which is based on the same ideas.

4 Conclusions

We have presented two very fast image registration methods based on simplifying images using segmentations (LPSEG method) and representing the similarity criterion using contributions at a set of sampling points. The novelty here is representing the problem as a linear program, allowing an efficient and robust optimization. We have also shown that segmentation can sometimes be advantageously replaced by edge-finding (LPNOSEG), with a further increase in speed. The bottleneck is currently the segmentation or edge-finding, but we hope that it can be alleviated by using a faster segmentation algorithm or a GPU implementation.

References

1. Zitová, B., Flusser, J.: Image registration methods: a survey. Image Vis. Comput. 21, 977–1000 (2003)

2. Lowe, D.: Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 60(2), 91–110 (2004)

3. Thévenaz, P.: Halton sampling for image registration based on mutual information. Sampl. Theory Signal and Image Process. 7(2), 141–171 (2008)

4. Klein, S., Staring, M., Pluim, J.P.W.: Evaluation of optimization methods for nonrigid medical image registration using mutual information and B-splines. IEEE Trans. Image Proc. 16(12), 2879–2890 (2007)

5. Sabuncu, M., Ramadge, P.: Gradient based nonuniform subsampling for information-theoretic alignment methods. In: Conference of the IEEE Engineering in Medicine and Biology Society, pp. 1683–1686 (2004)

6. Domokos, C., et al.: Nonlinear shape registration without correspondences. IEEE Trans. Pattern Anal. Mach. Intell. 34(5), 943–958 (2012)

7. Droske, M., Ring, W.: A Mumford-Shah level-set approach for geometric image registration. SIAM Appl. Math. 66(6), 2127–2148 (2005)

8. Kybic, J., et al.: Fast registration of segmented images by normal sampling. In: CVPRW: BioImage Computing Workshop, pp. 11–19, June 2015

9. Taylor, C.J., Bhusnurmath, A.: Solving image registration problems using interior point methods. In: Forsyth, D., Torr, P., Zisserman, A. (eds.) ECCV 2008. LNCS, vol. 5305, pp. 638–651. Springer, Heidelberg (2008). https://doi.org/10.1007/978-3-540-88693-8_47

10. Ben-Ezra, M.: Real-time motion analysis with linear programming. Comput. Vis. Image Underst. 78(1), 32–52 (2000)

11. Jiang, H., et al.: Matching by linear programming and successive convexification. IEEE Trans. Pattern Anal. Mach. Intell. 29(6), 959–975 (2007)

12. Unser, M.: Splines: a perfect fit for signal and image processing. IEEE Signal Processing Magazine 16(6), 22–38 (1999)

13. Glocker, B.: Dense image registration through MRFs and efficient linear programming. Med. Image Anal. 12(6), 731–741 (2008)

14. Arganda-Carreras, I., Sorzano, C.O.S., Marabini, R., Carazo, J.M., Ortiz-de-Solorzano, C., Kybic, J.: Consistent and elastic registration of histological sections using vector-spline regularization. In: Beichel, R.R., Sonka, M. (eds.) CVAMIA 2006. LNCS, vol. 4241, pp. 85–95. Springer, Heidelberg (2006). https://doi.org/10.1007/11889762_8

15. Klein, S., Staring, M., Murphy, K.: Elastix: a toolbox for intensity-based medical image registration. IEEE Trans. Med. Imaging 29(1), 196–205 (2010)

Acknowledgments

The authors acknowledge the support of the Czech Science Foundation project 17-15361S and the OP VVV project CZ.02.1.01/0.0/0.0/16_019/0000765.

© 2018 Springer Nature Switzerland AG

Cite this paper

Kybic, J., Borovec, J. (2018). Fast Registration by Boundary Sampling and Linear Programming. In: Frangi, A., Schnabel, J., Davatzikos, C., Alberola-López, C., Fichtinger, G. (eds) Medical Image Computing and Computer Assisted Intervention – MICCAI 2018. MICCAI 2018. Lecture Notes in Computer Science(), vol 11070. Springer, Cham. https://doi.org/10.1007/978-3-030-00928-1_88

DOI: https://doi.org/10.1007/978-3-030-00928-1_88

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-00927-4

Online ISBN: 978-3-030-00928-1
