Abstract

Caching in the context of expressive query languages such as SPARQL is complicated by the difficulty of detecting equivalent queries: deciding if two conjunctive queries are equivalent is NP-complete, and adding further query features makes the problem undecidable. Despite this complexity, in this paper we propose an algorithm that performs syntactic canonicalisation of SPARQL queries such that the answers for the canonicalised query will not change versus the original. We can guarantee that the canonicalisation of two queries within a core fragment of SPARQL (monotone queries with select, project, join and union) is equal if and only if the two queries are equivalent; we also support other SPARQL features but with a weaker soundness guarantee: that the (partially) canonicalised query is equivalent to the input query. Although canonicalisation is at least as hard as the equivalence problem, we show the algorithm to be practical for real-world queries taken from SPARQL endpoint logs, and further show that it detects more equivalent queries than purely syntactic methods. We also present the results of experiments over synthetic queries designed to stress-test the canonicalisation method, highlighting difficult cases.

1 Introduction

SPARQL endpoints often encounter performance problems in practice: in a survey of hundreds of public SPARQL endpoints, Buil-Aranda et al. [2] found that many such services have mixed reliability and performance, often returning errors, timeouts or partial results. This is not surprising: SPARQL is an expressive query language that encapsulates and extends the relational algebra, where even the simplified decision problem of verifying if a given solution is contained in the answers of a given SPARQL query for a given database is known to be PSpace-complete [17] (combined complexity). Furthermore, evaluating SPARQL queries may involve an exponential number of (intermediate) results. Hence, rather than aiming to efficiently support all queries over all database instances for all users, the goal is to continuously improve performance: to increase the throughput of the most common types of queries answered.

An obvious means by which to increase throughput of query processing is to re-use work done for previous queries when answering future queries by caching results. In the context of caching for SPARQL, however, there are some significant complications. While many engines may apply low-level caches to avoid, e.g., repeated index accesses, generating answers from such data can still require a lot of higher-level query processing. On the other hand, caching at the level of queries or subqueries is greatly complicated by the fact that a given abstract query can be expressed in myriad equivalent ways in SPARQL.

Addressing the latter challenge, in this paper we propose a method by which SPARQL queries can be canonicalised, where the canonicalised version of two queries \(Q_1\) and \(Q_2\) will be (syntactically) identical if \(Q_1\) and \(Q_2\) are equivalent: having the same results for any dataset. Furthermore, we say that two queries \(Q_1\) and \(Q_2\) are congruent if and only if they are equivalent modulo variable names, meaning we can rewrite the variables of \(Q_2\) in a one-to-one manner to generate a query equivalent to \(Q_1\); our proposed canonicalisation method then aims to give the same output for queries \(Q_1\) and \(Q_2\) if and only if they are congruent, which will allow us to find additional queries useful for applications such as caching.

Example 1

Consider two queries \(Q_A\) and \(Q_B\) asking for names of aunts:

Both queries are congruent: if we rewrite the variable ?n to ?z in \(Q_B\), then both queries are equivalent and will return the same results for any RDF dataset. Canonicalisation aims to rewrite both queries to the same syntactic form. \(\square \)

Our main use-case for canonicalisation is to improve caching for SPARQL endpoints: by capturing knowledge about query congruence, canonicalisation can increase the hit rate for a cache of (sub-)queries [16]. Furthermore, canonicalisation may be useful for analysis of SPARQL logs: finding repeated/congruent queries without pair-wise equivalence checks; query processing: where optimisations can be applied over canonical/normal forms; and so forth.

A fundamental challenge for canonicalising SPARQL queries is the high computational complexity that it entails. More specifically, the query equivalence problem takes two queries \(Q_1\) and \(Q_2\) and returns true if and only if they return the same answers for any database instance. In the case of SPARQL, this problem is NP-complete even when simply permitting joins (conjunctive queries). Even worse, the problem becomes undecidable when features such as projection and optional matches are combined [18]. Canonicalisation is then at least as hard as the equivalence problem, meaning it will likewise be intractable for even simple fragments and undecidable when considering the full SPARQL language.

We thus propose a canonicalisation procedure that does not change the semantics of an input query (i.e., is correct) but may miss congruent queries (i.e., is incomplete) for certain features. We deem such guarantees to be sufficient for use-cases where completeness is not a strong requirement, as in the case of caching where missing a congruent query will require re-executing the query (which would have to be done in any case). For monotone queries [19] in a core SPARQL fragment, we provide both correctness and completeness guarantees.

The procedure we propose is based on first converting SPARQL queries to a graph-based (RDF) algebraic representation. We then initially apply canonical labelling to the graph to consistently name variables, thereafter converting the graph back to a SPARQL query following a fixed syntactic ordering. The resulting query then represents the output of a baseline canonicalisation procedure for SPARQL. To support further SPARQL features such as UNION, we extend this procedure by applying normal forms and minimisation over the intermediate algebraic graph prior to its canonicalisation. Currently we focus on canonicalising SELECT queries from SPARQL 1.0. However, our canonicalisation techniques can be extended to other types of queries (ASK, CONSTRUCT, DESCRIBE) as well as the extended features of SPARQL 1.1 (including aggregation, property paths, etc.) while maintaining correctness guarantees; this is left to future work.

Extended Version: An online version of this paper provides additional definitions, proofs, and experimental results [20].

2 Preliminaries

RDF: We first introduce the RDF data model, as well as notions of isomorphism and equivalence relevant to the canonicalisation procedure discussed later.

Terms and Graphs. RDF assumes three pairwise disjoint sets of terms: IRIs: \(\mathbf {I}\), literals \(\mathbf {L}\) and blank nodes \(\mathbf {B}\). An RDF triple (s, p, o) is composed of three terms – called subject, predicate and object – where \(s \in \mathbf {I} \mathbf {B} \), \(p \in \mathbf {I} \) and \(o \in \mathbf {I} \mathbf {L} \mathbf {B} \).Footnote 1 A finite set of RDF triples is called an RDF graph \(G \subseteq \mathbf {I} \mathbf {B} \times \mathbf {I} \times \mathbf {I} \mathbf {B} \mathbf {L} \).

Isomorphism. Blank nodes are defined as existential variables [10] where two RDF graphs differing only in blank node labels are thus considered isomorphic [7]. Formally, let \(\mu : \mathbf {I} \mathbf {B} \mathbf {L} \rightarrow \mathbf {I} \mathbf {B} \mathbf {L} \) denote a mapping of RDF terms to RDF terms such that \(\mu \) is the identity on \(\mathbf {I} \mathbf {L} \) (\(\mu (x) = x\) for all \(x \in \mathbf {I} \mathbf {L} \)); we call \(\mu \) a blank node mapping; if \(\mu \) maps blank nodes to blank nodes in a one-to-one manner, we call it a blank node bijection. Let \(\mu (G)\) denote the image of an RDF graph G under \(\mu \) (applying \(\mu \) to each term in G). Two RDF graphs \(G_1\) and \(G_2\) are defined as isomorphic – denoted \(G_1 \cong G_2\) – if and only if there exists a blank node bijection \(\mu \) such that \(\mu (G_1) = G_2\). Given two RDF graphs, the problem of determining if they are isomorphic is GI-complete [11], meaning the problem is in the same complexity class as the standard graph isomorphism problem.
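To make the definition concrete, the following is a minimal brute-force sketch of the isomorphism test. It is not the method used by any canonicalisation framework cited here; it simply tries every blank node bijection. The convention that blank nodes are strings prefixed with `_:` and the function names are assumptions of this sketch.

```python
from itertools import permutations

def is_blank(term):
    # Assumption of this sketch: blank nodes are strings prefixed with "_:".
    return isinstance(term, str) and term.startswith("_:")

def blank_nodes(graph):
    return sorted({t for triple in graph for t in triple if is_blank(t)})

def apply_mapping(mu, graph):
    # Image of the graph under mu; IRIs and literals are left unchanged.
    return {tuple(mu.get(t, t) for t in triple) for triple in graph}

def isomorphic(g1, g2):
    b1, b2 = blank_nodes(g1), blank_nodes(g2)
    if len(g1) != len(g2) or len(b1) != len(b2):
        return False
    # Try every one-to-one assignment of blank nodes (factorial time).
    return any(apply_mapping(dict(zip(b1, perm)), g1) == g2
               for perm in permutations(b2))
```

The factorial enumeration is only workable for tiny graphs, which is why practical systems rely on dedicated canonical labelling algorithms instead.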

Equivalence. The equivalence relation captures the idea that two RDF graphs entail each other [10]. Two RDF graphs \(G_1\) and \(G_2\) are equivalent – denoted \(G_1 \equiv G_2\) – if and only if there exist two blank node mappings \(\mu _1\) and \(\mu _2\) such that \(\mu _1(G_1) \subseteq G_2\) and \(\mu _2(G_2) \subseteq G_1\) [8]. A graph may be equivalent to a smaller graph (due to redundancy). We thus say that an RDF graph G is lean if it does not have a proper subset \(G' \subset G\) such that \(G \equiv G'\); otherwise we say that it is non-lean. Furthermore, we can define the core of a graph G as a lean graph \(G'\) such that \(G \equiv G'\); the core of a graph is known to be unique modulo isomorphism [8]. Determining equivalence between RDF graphs is known to be NP-complete [8]. Determining if a graph G is lean is known to be coNP-complete [8]. Finally, determining if a graph \(G'\) is the core of a second graph G is known to be DP-complete [8].
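The notions of equivalence and leanness can be illustrated with a small exhaustive sketch. It enumerates all blank node mappings into the terms of the target graph, so it is exponential and meant only for toy inputs; the `_:` prefix convention and all function names are assumptions of this sketch.

```python
from itertools import product

def is_blank(term):
    # Assumption of this sketch: blank nodes are strings prefixed with "_:".
    return isinstance(term, str) and term.startswith("_:")

def apply_mapping(mu, graph):
    return {tuple(mu.get(t, t) for t in triple) for triple in graph}

def blank_node_mappings(source, target):
    """Yield every mapping from the blank nodes of source to terms of target."""
    blanks = sorted({t for tr in source for t in tr if is_blank(t)})
    terms = sorted({t for tr in target for t in tr})
    for image in product(terms, repeat=len(blanks)):
        yield dict(zip(blanks, image))

def maps_into(source, target):
    # True if some blank node mapping mu gives mu(source) as a subset of target.
    return any(apply_mapping(mu, source) <= target
               for mu in blank_node_mappings(source, target))

def equivalent(g1, g2):
    return maps_into(g1, g2) and maps_into(g2, g1)

def is_lean(graph):
    # Non-lean iff some mapping sends the graph onto a proper subset of itself.
    return not any(apply_mapping(mu, graph) < graph
                   for mu in blank_node_mappings(graph, graph))
```

For instance, a graph in which one blank node carries a subset of the edges of another is non-lean, since the first node can be mapped onto the second.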

Graph Canonicalisation. Our method for canonicalising SPARQL queries involves representing the query as an RDF graph, applying canonicalisation techniques over that graph, and mapping the canonical graph back to a SPARQL query. As such, our query canonicalisation method relies on an existing graph canonicalisation framework for RDF graphs called Blabel [12]; this framework offers a sound and complete method to canonicalise graphs with respect to isomorphism (\(\textsc {iCan}(G)\)) or equivalence (\(\textsc {eCan}(G)\)). Both methods have exponential worst-case behaviour; as discussed, the underlying problems are intractable.

SPARQL. We now provide preliminaries for the SPARQL query language [9]. For brevity, our definitions focus on SPARQL monotone queries (mqs) [19] – permitting selection (\(=,\wedge ,\vee \))Footnote 2, join, union and projection – for which we can offer sound and complete canonicalisation.

Syntax. Let \(\mathbf {V} \) denote a set of query variables disjoint with \(\mathbf {I} \mathbf {B} \mathbf {L} \). We define the abstract syntax of a SPARQL mq as follows:

1. A triple pattern t is a member of the set \(\mathbf {V} \mathbf {I} \mathbf {B} \times \mathbf {V} \mathbf {I} \times \mathbf {V} \mathbf {I} \mathbf {B} \mathbf {L} \) (i.e., an RDF triple allowing variables in any position). A triple pattern is a query pattern.

2. If both \(Q_1\) and \(Q_2\) are query patterns, then [\(Q_1\,\textsc {and}\,Q_2\)], and [\(Q_1\,\textsc {union}\,Q_2\)] are also query patterns.

3. If Q is a query pattern and V is a set of variables such that for all \(v \in V\), v appears in some triple pattern contained in Q, then \(\textsc {select}_{V}(Q)\) is a query.Footnote 3

Blank nodes in SPARQL queries are considered to be non-distinguished query variables where we will assume they have been replaced with fresh query variables. Per the final definition, we currently do not support subqueries and assume, w.l.o.g., that all queries have a projection \(\textsc {select}_{V}(Q)\).

Algebra. We will now define an algebra for such queries. A solution \(\mu \) is a partial mapping from variables in \(\mathbf {V} \) appearing in the query to constants from \(\mathbf {I} \mathbf {B} \mathbf {L} \) appearing in the data. Let \(\mathrm {dom}(\mu )\) denote the variables for which \(\mu \) is defined. We say that two mappings \(\mu _1\) and \(\mu _2\) are compatible, denoted \(\mu _1 \sim \mu _2\), when \(\mu _1(v) = \mu _2(v)\) for every \(v \in \mathrm {dom}(\mu _1) \cap \mathrm {dom}(\mu _2)\). Letting M, \(M_1\) and \(M_2\) denote sets of solutions, we define the algebra as follows:

Union is defined here in the SPARQL fashion as a union of mappings, rather than relational algebra union: the former can be applied over solution mappings with different domains, while the latter does not allow this.
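For reference, join, union and projection over sets of solutions are usually formulated in the SPARQL literature along the following lines. This is a sketch of the standard formulation under the notation introduced above, with \(\bowtie \) and \(\pi _V\) as assumed symbols, and it may differ in detail from the definitions the paper uses:

$$\begin{aligned} M_1 \bowtie M_2&= \{ \mu _1 \cup \mu _2 \mid \mu _1 \in M_1, \mu _2 \in M_2 \text { and } \mu _1 \sim \mu _2 \}\\ M_1 \cup M_2&= \{ \mu \mid \mu \in M_1 \text { or } \mu \in M_2 \}\\ \pi _V(M)&= \{ \mu ' \mid \exists \mu \in M : \mu ' \sim \mu \text { and } \mathrm {dom}(\mu ') = V \cap \mathrm {dom}(\mu ) \} \end{aligned}$$

Note that the union of two sets of solutions places no constraint on their domains, which is what allows unbound variables to appear in the results.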

Semantics. Letting Q denote an mq pattern in the abstract syntax, we denote the evaluation of Q over an RDF graph G as \(Q(G)\). Before defining \(Q(G)\), first let t denote a triple pattern; then by \(\mathbf {V} (t)\) we denote the set of variables appearing in t and by \(\mu (t)\) we denote the image of t under a solution \(\mu \). Finally, we can define \(Q(G)\) recursively as follows:
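As an illustration, a recursive evaluation of this kind can be sketched in a few lines under set semantics. The tagged-tuple encoding of queries (`"tp"`, `"and"`, `"union"`, `"select"`), the `?` prefix for variables and the function names are all assumptions of this sketch, not notation from the paper.

```python
def is_var(term):
    # Assumption of this sketch: variables are strings starting with "?".
    return term.startswith("?")

def compatible(mu1, mu2):
    # Two solutions are compatible if they agree on every shared variable.
    return all(mu2[v] == x for v, x in mu1.items() if v in mu2)

def join(m1, m2):
    return {frozenset({**dict(a), **dict(b)}.items())
            for a in m1 for b in m2 if compatible(dict(a), dict(b))}

def match(tp, triple):
    """Return the solution mapping tp onto triple, or None if there is none."""
    mu = {}
    for pat, val in zip(tp, triple):
        if is_var(pat):
            if mu.setdefault(pat, val) != val:
                return None
        elif pat != val:
            return None
    return mu

def evaluate(q, graph):
    """Evaluate a tagged-tuple query; solutions are frozensets of (var, value)."""
    op = q[0]
    if op == "tp":
        sols = (match(q[1:], t) for t in graph)
        return {frozenset(mu.items()) for mu in sols if mu is not None}
    if op == "and":
        return join(evaluate(q[1], graph), evaluate(q[2], graph))
    if op == "union":
        return evaluate(q[1], graph) | evaluate(q[2], graph)
    if op == "select":
        keep = set(q[1])
        return {frozenset((v, x) for v, x in mu if v in keep)
                for mu in evaluate(q[2], graph)}
    raise ValueError(f"unsupported operator: {op}")
```

Representing each solution as a frozen set of pairs makes duplicate elimination automatic, which matches set semantics but not the bag semantics discussed next.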

Set vs. Bag. The previous definitions assume a set semantics for query answering, meaning that no duplicate mappings are returned as solutions [17]. However, the SPARQL standard, by default, considers a bag (aka. multiset) semantics for query answering [9], where the cardinality of a solution in the results captures information about how many times the query pattern matched the underlying dataset [1]. We thus use the extended syntax \(\textsc {select}^\varDelta _{V}(Q)\), where \(\varDelta =\texttt {true}\) indicates set semantics and \(\varDelta =\texttt {false}\) indicates bag semantics.

Containment and Equivalence. Query containment asks: given two queries \(Q_1\) and \(Q_2\), does it hold that \(Q_1(G) \subseteq Q_2(G)\) for all possible RDF graphs G? If so, we say that \(Q_2\) contains \(Q_1\), which we denote by the relation \(Q_1 \sqsubseteq Q_2\). On the other hand, query equivalence asks, given two queries \(Q_1\) and \(Q_2\), does it hold that \(Q_1(G) = Q_2(G)\) for all possible RDF graphs G? In other words, \(Q_1\) and \(Q_2\) are equivalent if and only if \(Q_1\) and \(Q_2\) contain each other. If so, we say that \(Q_1 \equiv Q_2\). In this paper, we relax the equivalence notion to ignore labelling of variables; more formally, let \(\nu : \mathbf {V} \rightarrow \mathbf {V} \) be a one-to-one mapping of variables and, slightly abusing notation, let \(\nu (Q)\) denote the image of Q under \(\nu \) (rewriting variables in Q wrt. \(\nu \)); we say that \(Q_1\) and \(Q_2\) are congruent (denoted \(Q_1 \cong Q_2\)) if and only if there exists \(\nu \) such that \(Q_1 \equiv \nu (Q_2)\). An example of such query congruence was provided in Example 1.

The complexity of query containment and equivalence varies from NP-complete when just and is allowed (with triple patterns), upwards to undecidable once, e.g., projection and optional matches are added [18]. Containment and equivalence are NP-complete for the related query class of Unions of Conjunctive Queries (ucqs) [19], which allow the same features as mqs but disallow joins over unions. Interestingly, though mqs and ucqs are equivalent query classes – i.e., for any ucq there is an equivalent mq and vice-versa – containment and equivalence for mqs jump to \(\varPi _{2}^{P}\)-complete [19]. Intuitively this is because mqs are more succinct than ucqs; for example, to find a path of length n where each node is of type A or B, we can create an \(\textsc {mq} \) of size O(n), but it requires a \(\textsc {ucq} \) of size \(O(2^{n})\). We consider \(\textsc {mq}s \) since real-world SPARQL queries may arbitrarily nest joins and unions (canonicalisation will rewrite them to \(\textsc {ucq}s \)).
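The exponential gap can be observed with a small sketch of a DNF-style expansion that distributes joins over unions. The tagged-tuple encoding of patterns and the name `to_ucq` are assumptions of this sketch; the expansion itself is the textbook distribution of join over union rather than a reproduction of the paper's procedure.

```python
from itertools import product

def to_ucq(q):
    """Return a set of CQs (frozensets of triple patterns) for pattern q."""
    op = q[0]
    if op == "tp":
        return {frozenset([q[1:]])}
    if op not in ("and", "union"):
        raise ValueError(f"unsupported operator: {op}")
    left, right = to_ucq(q[1]), to_ucq(q[2])
    if op == "union":
        return left | right
    # Join distributes over union: pair every CQ on the left
    # with every CQ on the right.
    return {a | b for a, b in product(left, right)}

def typed_path(n):
    """Build an mq for a path of n nodes, each of type :A or :B."""
    def typed(i):
        return ("union", ("tp", f"?x{i}", "rdf:type", ":A"),
                         ("tp", f"?x{i}", "rdf:type", ":B"))
    q = typed(0)
    for i in range(1, n):
        q = ("and", ("and", q, ("tp", f"?x{i-1}", ":p", f"?x{i}")), typed(i))
    return q
```

Calling `to_ucq(typed_path(n))` yields one conjunctive query per combination of type choices, so the number of CQs doubles with each additional node.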

Most of the above results have been developed under set semantics. In terms of bag semantics, we can consider an analogous containment problem: that the answers of \(Q_1\) are a subbag of the answers of \(Q_2\), meaning that the multiplicity of an answer in \(Q_1\) is always less than or equal to the multiplicity of the same answer in \(Q_2\). In fact, the decidability of this problem remains an open question [4]; on the other hand, the equivalence problem is GI-complete [4], and thus in fact probably easier than the case for set semantics (assuming GI \(\ne \) NP): under bag semantics, conjunctive queries cannot have redundancy, so intuitively speaking we can test a form of isomorphism between the two queries.

3 Related Work

Various works have presented complexity results for query containment and equivalence of SPARQL [5, 13, 14, 18, 23, 24]. With respect to implementations, only one dedicated library has been released to check whether or not two SPARQL queries are equivalent: SPARQL Algebra [14]. The problem of determining equivalence of SPARQL queries can, however, be solved by reductions to related problems, where Chekol et al. [6] have used a \(\mu \)-calculus solver and an XPath-equivalence checker to implement SPARQL equivalence checks. Recently Saleem et al. [22] compared these SPARQL query containment methods using a benchmark based on real-world query logs; we use these same logs in our evaluation. These works do not deal with canonicalisation; using an equivalence checker would require quadratic pairwise checks to determine all equivalences in a set or stream of queries; hence they are impractical for a use-case such as caching.

To the best of our knowledge, little work has been done specifically on canonicalisation of SPARQL queries. In analyses of logs, some authors [3, 21] have proposed syntactic canonicalisation methods – such as normalising whitespace or using a SPARQL library to format the query – that do manage to detect some duplicates, but not more complex cases such as per Example 1. Rather the most similar work to ours (to the best of our knowledge) is the SPARQL caching system proposed by Papailiou et al. [16], which uses a canonical labelling algorithm (specifically Bliss) to assign consistent labels to variables, allowing isomorphic graph patterns to be recalled from the cache for SPARQL queries. However, their work does not consider factoring out redundancy caused by query operators (aka. minimisation), and hence they would not capture equivalences as in the case of Example 1. In general, our work focuses on canonicalisation of queries whereas the work of Papailiou et al. [16] is rather focused on caching; compared to them we capture a much broader notion of query equivalence than their approach based solely on canonical labelling of query variables. It is worth noting that we are not aware of similar methods for canonicalising SQL queries.

4 Query Canonicalisation

Our approach for canonicalising SPARQL mqs involves representing the query as an RDF graph, performing a canonicalisation of the RDF graph (including the application of algebraic rewritings, minimisation and canonical labelling), ultimately mapping the resulting graph back to a final canonical SPARQL ucq.

4.1 Representational Graph for UCQs

The mq class is closed under join and union (see \(Q_A\), Example 1). As the first query normalisation step, we will convert mq queries to ucqs of the form \(\textsf {select}^\varDelta _{V}(\textsf {union}(\{ \textsf {and}(\{ Q_1^1, \ldots Q_m^1 \}),\ldots ,\textsf {and}(\{ Q_1^k, \ldots Q_n^k \}) \}))\) following a standard DNF-style expansion (we refer to the extended version for more details [20]). The output ucq may be exponential in size. Thereafter, given such a ucq, we define its representational graph (or \(\textsc {r}\text {-graph}\) for short) as follows.

Definition 1

Let \(\beta ()\) denote a function that returns a fresh blank node and \(\beta (x)\) a function that returns a blank node unique to x. Let \(\iota (\cdot )\) denote an id function such that if \(x \in \mathbf {I} \mathbf {L} \), then \(\iota (x) = x\); otherwise if \(x \in \mathbf {V} \mathbf {B} \), then \(\iota (x) = \beta (x)\). Finally, let Q be a ucq; we define \(\textsc {r}(Q)\), the \(\textsc {r}\text {-graph}\) of Q, as follows:

- If Q is a triple pattern (s, p, o), then \(\iota (Q)\) is set as \(\beta ()\) and

$$\textsc {r}(Q) = \{ (\iota (Q),\mathtt {:s},\iota (s)), (\iota (Q),\mathtt {:p},\iota (p)), (\iota (Q),\mathtt {:o},\iota (o)), (\iota (Q),\mathtt {a},\mathtt {:TP}) \}$$

- If Q is \(\textsf {and}(\{Q_1,\ldots ,Q_n\})\), then \(\iota (Q)\) is set as \(\beta ()\) and

$$\begin{aligned} \textsc {r}(Q)&= \{ (\iota (Q),\mathtt {:arg},\iota (Q_1)),\ldots , (\iota (Q),\mathtt {:arg},\iota (Q_n)), (\iota (Q),\mathtt {a},\mathtt {:And}) \}\\&\qquad \cup \, \textsc {r}(Q_1) \cup \ldots \cup \textsc {r}(Q_n) \end{aligned}$$

- If Q is \(\textsf {union}(\{Q_1,\ldots ,Q_n\})\), then \(\iota (Q)\) is set as \(\beta ()\) and

$$\begin{aligned} \textsc {r}(Q)&= \{ (\iota (Q),\mathtt {:arg},\iota (Q_1)),\ldots , (\iota (Q),\mathtt {:arg},\iota (Q_n)), (\iota (Q),\mathtt {a},\mathtt {:Union}) \}\\&\qquad \cup \, \textsc {r}(Q_1) \cup \ldots \cup \textsc {r}(Q_n) \end{aligned}$$

- If Q is \(\textsf {select}^\varDelta _{V}(Q_1)\), then \(\iota (Q)\) is set as \(\beta ()\) and

$$\begin{aligned} \textsc {r}(Q)&= \{ (\iota (Q),\mathtt {:arg},\iota (Q_1)), (\iota (Q),\mathtt {:distinct},\varDelta ), (\iota (Q),\mathtt {a},\mathtt {:Select}) \}\\&\qquad \cup \,\{ (\iota (Q),\mathtt {:var},\iota (v)) \mid v \in V \} \cup \textsc {r}(Q_1) \end{aligned}$$

where “\(\mathtt {a}\)” abbreviates \(\mathtt {rdf:type}\) and \(\varDelta \) is a boolean datatype literal. \(\square \)
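The construction in Definition 1 can be sketched for a query already in ucq form as follows. The input encoding (a list of CQs, each a list of triple patterns), the blank node labels `_:n0`, `_:n1`, ... for operators and `_:v...` for variables, and the function name `r_graph` are assumptions of this sketch.

```python
from itertools import count

def r_graph(ucq, projected, distinct=True):
    """Build the r-graph of select_V(union({and(...), ...})) as a set of triples.

    ucq: list of CQs, each a list of triple patterns (s, p, o).
    Variables are strings starting with "?" (assumption of this sketch).
    """
    fresh = (f"_:n{i}" for i in count())   # beta(): a fresh blank node per call

    def iota(term):
        # beta(x): one blank node per variable; other terms map to themselves.
        return "_:v" + term[1:] if term.startswith("?") else term

    select, union = next(fresh), next(fresh)
    graph = {(select, "a", ":Select"), (select, ":arg", union),
             (select, ":distinct", "true" if distinct else "false"),
             (union, "a", ":Union")}
    graph |= {(select, ":var", iota(v)) for v in projected}
    for cq in ucq:
        and_node = next(fresh)
        graph |= {(union, ":arg", and_node), (and_node, "a", ":And")}
        for s, p, o in cq:
            tp = next(fresh)
            graph |= {(and_node, ":arg", tp), (tp, "a", ":TP"),
                      (tp, ":s", iota(s)), (tp, ":p", iota(p)), (tp, ":o", iota(o))}
    return graph
```

Because every operator and triple pattern receives its own blank node, the same variable blank node is shared by all triple patterns that mention it, which is what later lets graph canonicalisation abstract away variable names.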

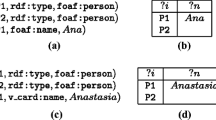

Example 2. Here we provide an example of the \(\textsc {r}\text {-graph}\) for queries \(Q_A\) and \(Q_B\) in Example 1: the \(\textsc {r}\text {-graph}\) has the same structure for both queries assuming that a ucq normal form is applied beforehand (to \(Q_A\) in particular). For clarity, we embed the types of nodes into the nodes themselves.

Due to the application of ucq normal forms, we have a projection, over a union, over a set of joins, where each join involves one or more triple patterns. \(\square \)

We also define the inverse \(\textsc {r}^{-}(\cdot )\), mapping an \(\textsc {r}\text {-graph}\) back to a ucq query, such that \(\textsc {r}^{-}(\textsc {r}(Q))\) is congruent to Q [20].

4.2 Projection with Union

Unlike the relational algebra, SPARQL mqs allow unions of query patterns whose sets of variables are not equal. This may give rise to existential variables, which in turn can lead to further equivalences that must be considered [19].

Example 3

Returning to Example 1, consider a query \(Q_C \equiv Q_B\), a minor variant of \(Q_B\) using different non-projected variables in the union:

Such unions are permitted in SPARQL. Likewise we could rename both occurrences of ?a on the left of the union in \(Q_C\) without changing the solutions since ?a is not projected. Any correspondences between non-projected variables across a union are thus syntactic and do not affect the semantics of the query. \(\square \)

We thus distinguish the blank node representing every non-projected variable in each cq of the \(\textsc {r}\text {-graph}\) produced previously. Letting G denote \(\textsc {r}(Q)\), we define the cq roots of G as \(\mathsf {cq}(G) = \{ y \mid (y,\mathtt {a},\mathtt {:And}) \in G \}\). Given a term r and a graph G, we define G[r] as the sub-graph of G rooted in r, defined recursively as \(G[r]_0 = \{ (s,p,o) \in G \mid s = r \}\), \(G[r]_i = \{ (s,p,o) \in G \mid \exists x,y : (x,y,s) \in G[r]_{i-1} \} \cup G[r]_{i-1}\), with \(G[r] = G[r]_n\) such that \(G[r]_n = G[r]_{n+1}\) (the fixpoint).

We denote the blank nodes representing variables in G by \(\mathsf {var}(G) = \{ v \in \mathbf {B} \mid \exists s, p : (s,p,v) \in G \text { and } p \in \{ \mathtt {:s}, \mathtt {:p}, \mathtt {:o} \} \}\), and we denote the blank nodes representing unprojected variables in G by \(\mathsf {uvar}(G) = \{ v \in \mathsf {var}(G) \mid \not \exists s : (s,\mathtt {:var},v) \in G \}\). Finally we denote the blank nodes representing projected variables in G by \(\mathsf {pvar}(G) = \mathsf {var}(G) \setminus \mathsf {uvar}(G)\). We can now define how variables are distinguished.

Definition 2

Let G denote \(\textsc {r}(Q)\) for a ucq Q. We define the variable distinguishing function \(\textsc {d}(G)\) as follows. If there does not exist a blank node x such that \((x,\mathtt {a},\mathtt {:Union}) \in G\), then \(\textsc {d}(G) = G\). Otherwise if such a blank node exists, we define \(\textsc {d}(G) = \{ (s,p,\delta (o)) \mid (s,p,o) \in G \}\), where \(\delta (o) = o\) if \(o \not \in \mathsf {uvar}(G)\); otherwise \(\delta (o) = \beta (r,o)\) such that \(r \in \mathsf {cq}(G)\) and \((s,p,o) \in G[r]\). \(\square \)

In other words, \(\textsc {d}(G)\) creates a fresh blank node for each non-projected variable appearing in the representation of a cq in G as previously motivated.
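A minimal sketch of the rooted sub-graph \(G[r]\) and of the distinguishing step is given below. It assumes an \(\textsc {r}\text {-graph}\) in ucq form whose terms are all strings, with blank nodes prefixed by `_:`; the label scheme `variable@root` for the fresh blank nodes and the function names are assumptions of this sketch.

```python
def rooted_subgraph(graph, root):
    """Return G[r]: all triples reachable from root via subject -> object."""
    sub = {t for t in graph if t[0] == root}
    while True:
        reached = {o for _, _, o in sub}
        grown = sub | {t for t in graph if t[0] in reached}
        if grown == sub:            # fixpoint reached
            return sub
        sub = grown

def distinguish(graph):
    """Sketch of d(G): give each CQ its own copy of every unprojected variable."""
    if not any(p == "a" and o == ":Union" for _, p, o in graph):
        return set(graph)
    variables = {o for _, p, o in graph
                 if p in {":s", ":p", ":o"} and o.startswith("_:")}
    projected = {o for _, p, o in graph if p == ":var"}
    unprojected = variables - projected
    owner = {}                      # triple -> CQ root whose sub-graph holds it
    for root in [s for s, p, o in graph if p == "a" and o == ":And"]:
        for triple in rooted_subgraph(graph, root):
            owner[triple] = root

    def delta(triple):
        s, p, o = triple
        if o in unprojected:
            # beta(r, o): a blank node unique to the pair (CQ root, variable).
            return (s, p, f"{o}@{owner[triple][2:]}")
        return triple

    return {delta(t) for t in graph}
```

After this step, two CQs that happened to reuse the same name for a non-projected variable no longer share a blank node, while projected variables remain shared across the union.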

4.3 Minimisation

Under set semantics, ucqs may contain redundancy whereby, for the purposes of canonicalisation, we will apply minimisation to remove redundant triple patterns while maintaining query equivalence. After applying ucq normalisation, the \(\textsc {r}\text {-graph}\) now represents a ucq of the form \(\textsf {select}^\varDelta _{V}(\textsf {union}(\{ Q_1, \ldots , Q_n \}))\), with each \(Q_1, \ldots , Q_n\) being a cq and V being the set of projected variables. Under set semantics, we then first remove intra-cq redundancy from the individual cqs; thereafter we remove inter-cq redundancy from the overall ucq.

Bag Semantics. We briefly note that if projection with bag semantics is selected, the ucq can only contain one (syntactic) form of redundancy: exact duplicate triple patterns in the same cq. Any other form of redundancy mentioned previously – be it intra-cq or inter-cq redundancy – will affect the multiplicity of results [4]. Hence if bag semantics is selected, we do not apply any redundancy elimination other than removing duplicate triple patterns in cqs.

Set-Semantics/CQs. We now minimise the individual cqs of the \(\textsc {r}\text {-graph}\) by computing the core of the sub-graph induced by each cq independently. But before computing the core, we must ground projected variables to avoid their removal during minimisation. Along these lines, let G denote an \(\textsc {r}\text {-graph}\) \(\textsc {d}(\textsc {r}(Q))\) of Q. We define the grounding of projected variables as follows: \(L(G) = \{ (s,p,\lambda (o)) \mid (s,p,o) \in G \}\), where if o denotes a projected variable, \(\lambda (o) = \mathtt {:o}\) for \(\mathtt {:o}\) a fresh IRI computed for o; otherwise \(\lambda (o) = o\). We assume for brevity that variable IRIs created by \(\lambda \) can be distinguished from other IRIs. Finally, let \(\mathsf {core}(G)\) denote the core of G. We can then minimise each cq as follows.

Definition 3

Let G denote \(\textsc {d}(\textsc {r}(Q))\). We define the cq-minimisation of G as \(\textsc {c}(G) = \{ \mathsf {core}(L(G[x])) \mid x \in \mathsf {cq}(G) \}\). We call \(C \in \textsc {c}(G)\) a CQ core. \(\square \)
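The effect of grounding followed by core computation can be sketched directly over the triple patterns of a single cq, rather than over its \(\textsc {r}\text {-graph}\). The sketch below is an exhaustive search and is not the core algorithm of any cited framework: projected variables are held fixed (playing the role of the fresh IRIs), and the query is repeatedly folded onto a proper subset of itself until no such folding exists. The `?` prefix for variables and the name `minimise_cq` are assumptions.

```python
from itertools import product

def minimise_cq(cq, projected):
    """Return a core of cq (a set of triple patterns), keeping projected variables fixed."""
    cq = set(cq)
    while True:
        # Only unprojected variables may be remapped; projected ones act as constants.
        free = sorted({t for tp in cq for t in tp
                       if t.startswith("?") and t not in projected})
        targets = sorted({t for tp in cq for t in tp})
        for image in product(targets, repeat=len(free)):
            mu = dict(zip(free, image))
            folded = {tuple(mu.get(t, t) for t in tp) for tp in cq}
            if folded < cq:         # a proper subset: some pattern was redundant
                cq = folded
                break
        else:
            return cq               # no mapping shrinks the query: it is lean
```

Holding projected variables fixed is what prevents the minimisation from discarding patterns that bind an output variable.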

Example 4

Consider the following query, \(Q_D\):

This query is congruent to the previous queries \(Q_A\), \(Q_B\), \(Q_C\). After applying ucq normal forms, we end up with the following \(\textsc {r}\text {-graph}\) for \(Q_D\):

We then replace the blank node for the projected variable \(\mathtt {?z}\) with a fresh IRI, and compute the core of the sub-graph for each cq (the graph induced by the cq node with type \(\mathtt {:And}\) and any node reachable from that node in the directed \(\textsc {r}\text {-graph}\)). Figure 1 depicts the sub-\(\textsc {r}\text {-graph}\) representing the third cq (omitting the \(\mathtt {:And}\)-typed root node for clarity since it will not affect computing the core). The dashed sub-graph will be removed from the core per the map: \(\{\) _:vx3/_:vd3, _:t35/_:t32, _:t33/_:t32, _:vp3/\(\mathtt {:sister}\), _:ve3/_:vy3, _:t34/_:t36, _:vf3/\(\mathtt {:vz}\), ...\(\}\), with the other nodes mapped to themselves. Observe that the projected variable \(\mathtt {:vz}\) is now an IRI, and hence it cannot be removed from the graph.

If we consider applying this core computation over all three conjunctive queries, we would end up with an \(\textsc {r}\text {-graph}\) corresponding to the following query:

We see that the projected variable is preserved in all cqs. However, we are still left with (inter-cq) redundancy between the first and third cqs. \(\square \)

Set Semantics/UCQs. After minimising individual cqs, we may still be left with a union containing redundant cqs as highlighted by Example 4. Hence we must now apply a higher-level minimisation of redundant cqs. While it may be tempting to simply compute the core of the entire \(\textsc {r}\text {-graph}\) – as would work for Example 4 and, indeed, as would also work for unions in the relational algebra – unfortunately SPARQL union again raises some non-trivial complications [19].

Example 5

Consider the following (unusual) query:

If we were to compute the core over the \(\textsc {r}\text {-graph}\) for the entire ucq, we would remove the second cq as follows:

This would leave us with the following query:

But this has changed the query semantics where we lose non-cousin values. \(\square \)

Instead, we must check containment between pairs of cqs [19]. Let \(Q = \textsf {select}_{V}(\textsf {union}(\{ Q_1, \ldots , Q_n \}))\) denote the ucq under analysis. We need to remove from Q:

1. all \(Q_i\) (\(1 \le i \le n\)) such that there exists \(Q_j\) (\(1 \le j < i \le n\)) such that \(\textsf {select}_{V}(Q_i) \equiv \textsf {select}_{V}(Q_j)\); and

2. all \(Q_i\) (\(1 \le i \le n\)) where there exists \(Q_j\) (\(1 \le j \le n\)) such that \(\textsf {select}_{V}(Q_i) \sqsubset \textsf {select}_{V}(Q_j)\) (i.e., proper containment where \(\textsf {select}_{V}(Q_i) \not \equiv \textsf {select}_{V}(Q_j)\)).

The former condition removes all but one cq from each group of equivalent cqs while the latter condition removes all cqs that are properly contained in another cq. With respect to SPARQL union, note that these definitions apply to cases where cqs have different variables. More explicitly, let \(V_1, \ldots , V_n\) denote the projected variables appearing in \(Q_1, \ldots , Q_n\), respectively. Observe that \(\textsf {select}_{V_i}(Q_i) \sqsubseteq \textsf {select}_{V_j}(Q_j)\) can only hold if \(V_i = V_j\): assume without loss of generality that \(v \in V_i \setminus V_j\), where v is then always unbound in the solutions for \(Q_j\), while \(Q_i\) creates mappings \(\mu \) with \(v \in \mathrm {dom}(\mu )\) that can never appear in the solutions for \(Q_j\).Footnote 4

To implement condition (1), let us first assume that all cqs contain all projection variables such that no unbounds can be returned. Note that in the previous step we have computed the cores of cqs in \(\textsc {c}(G)\) and hence it is sufficient to check for isomorphism between them; we can thus take the current \(\textsc {r}\text {-graph}\) \(G_i\) for each \(Q_i\) and apply iso-canonicalisation of \(G_i\) [12], removing any other \(Q_j\) (\(j > i\)) whose \(G_j\) is isomorphic. Thereafter, to implement condition (2), we can check if there exists a blank node mapping \(\mu \) such that \(\mu (G_j) \subseteq G_i\), for \(i \ne j\) (which is equivalent to checking simple entailment: \(G_i \models G_j\) [8]).

Now we drop the assumption that all cqs contain all variables in V, meaning that we can generate unbounds. To resolve such cases, we can partition \(\{ Q_1 , \ldots , Q_n \}\) into various sets of cqs based on the projected variables they contain, and then apply equivalence and containment checks in each part.

Definition 4

Let \(\textsc {c}(G) = \{ C_1, \ldots , C_n \}\) denote the cq cores of \(G = \textsc {d}(\textsc {r}(Q))\). A cq core \(C_i\) is in \(\textsc {e}(G)\) iff \(C_i \in \textsc {c}(G)\) and there does not exist a cq core \(C_j \in \textsc {c}(G)\) (\(i \ne j\)) such that: \(\mathsf {pvar}(C_i)\) = \(\mathsf {pvar}(C_j)\); and \(C_i \cong C_j\) with \(j < i\) or \(C_j \models C_i\). \(\square \)
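The partition-then-prune idea behind Definition 4 can be sketched in a few lines of Python. This is a minimal illustration rather than the QCan implementation: it assumes a hypothetical encoding where a cq is a frozenset of string triples, variables start with "?", and equivalence is tested by mutual containment (a brute-force homomorphism search) instead of core computation followed by isomorphism checks.

```python
def is_var(term):
    # Hypothetical convention: variables are strings starting with "?".
    return term.startswith("?")

def homomorphism_exists(source, target, fixed):
    """True if the non-fixed variables of `source` can be mapped so that
    every triple of `source` lands in `target`; constants and the
    variables in `fixed` (the projected ones) must map to themselves."""
    source = sorted(source)

    def extend(index, mapping):
        if index == len(source):
            return True
        for candidate in target:
            new_mapping = dict(mapping)
            ok = True
            for s_term, t_term in zip(source[index], candidate):
                if is_var(s_term) and s_term not in fixed:
                    if new_mapping.setdefault(s_term, t_term) != t_term:
                        ok = False
                        break
                elif s_term != t_term:
                    ok = False
                    break
            if ok and extend(index + 1, new_mapping):
                return True
        return False

    return extend(0, {})

def contained_in(cq_i, cq_j, pvars):
    # Q_i is contained in Q_j iff Q_j maps homomorphically into Q_i.
    return homomorphism_exists(cq_j, cq_i, pvars)

def minimise_ucq(cqs):
    """cqs: list of (triples, projected_vars) pairs. Only cqs with the
    same projected variables are compared with each other."""
    kept = []
    for i, (triples_i, pvars_i) in enumerate(cqs):
        redundant = False
        for j, (triples_j, pvars_j) in enumerate(cqs):
            if i == j or pvars_i != pvars_j:
                continue
            i_in_j = contained_in(triples_i, triples_j, pvars_i)
            j_in_i = contained_in(triples_j, triples_i, pvars_i)
            # Drop Q_i if properly contained in Q_j, or if equivalent
            # to an earlier Q_j (keeping one cq per equivalence group).
            if i_in_j and (not j_in_i or j < i):
                redundant = True
                break
        if not redundant:
            kept.append((triples_i, pvars_i))
    return kept

px = frozenset({"?x"})
cqs = [
    (frozenset({("?x", ":p", "?y")}), px),
    (frozenset({("?x", ":p", "?y"), ("?x", ":p", "?z")}), px),  # equivalent to the first
    (frozenset({("?x", ":p", "?y"), ("?y", ":q", ":c")}), px),  # properly contained in the first
    (frozenset({("?w", ":p", "?y")}), frozenset({"?w"})),       # different projected variable
]
minimised = minimise_ucq(cqs)
```

In this toy input only the first and last cqs survive: the second is equivalent to the first, the third is properly contained in it, and the fourth is never compared because it projects a different variable.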

Definition 5

Let \(\textsc {e}(G) = \{ C_1, \ldots , C_n \}\) denote the minimal cq cores of \(G = \textsc {d}(\textsc {r}(Q))\). Let \(P = \{ (s,p,o) \in G \mid \exists (s,\mathtt {a},\mathtt {:Select}) \in G \}\) and \(U = \{ (s,p,o) \in G \mid \exists (s,\mathtt {a},\mathtt {:Union}) \in G\text {, and }p = \mathtt {:arg}\text { implies }\exists C \in \textsc {e}(G) : \{ o \} = \mathsf {cq}(C)\}\). We define the minimisation of G as \(\textsc {m}(G) = \bigcup _{G' \in \textsc {e}(G)} L^{-}(G') \cup P \cup U\), where \(L^{-}(G')\) denotes the replacement of variable IRIs with their original blank nodes. \(\square \)

The result is an \(\textsc {r}\text {-graph}\) representing a redundancy-free ucq.

4.4 Canonical Labelling and Query Generation

We take the minimal \(\textsc {r}\text {-graph}\) \(\textsc {e}(G)\) generated by the previous methods and apply the iso-canonicalisation method \(\textsc {iCan}(\textsc {e}(G))\) to generate canonical labels for the blank nodes in \(\textsc {e}(G)\); having normalised the ucq algebra and removed redundancy, applying this process will finally abstract away the naming of variables in the original query from the \(\textsc {r}\text {-graph}\). Then we are left to map from the \(\textsc {r}\text {-graph}\) back to a query, which we do by applying \(\textsc {r}^{-}(\textsc {iCan}(\textsc {e}(G)))\); in \(\textsc {r}^{-}(\cdot )\), we lexicographically order the triple patterns in each cq, the cqs in each ucq, and the variables in the projection. The result is the final canonicalised ucq in SPARQL syntax. Soundness and completeness results for mqs are given in the extended version [20].
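To make the effect of canonical labelling and lexicographic ordering concrete, the following Python sketch canonicalises a single small cq by brute force. It is not the iso-canonicalisation algorithm of [12]: it simply tries every renaming of the variables to the hypothetical labels ?v0, ?v1, ... and keeps the lexicographically smallest result, which takes factorial time and is only meant for toy inputs. The triple encoding and the prefixed names (e.g., :knows) are assumptions made for the example.

```python
from itertools import permutations

def canonical_cq(triples, projected):
    """Return (sorted body, sorted projection) under the variable
    renaming that yields the lexicographically smallest result."""
    variables = sorted({t for triple in triples for t in triple
                        if t.startswith("?")})
    best = None
    for order in permutations(variables):
        renaming = {var: f"?v{n}" for n, var in enumerate(order)}
        body = sorted(tuple(renaming.get(t, t) for t in triple)
                      for triple in triples)
        head = sorted(renaming[v] for v in projected)
        candidate = (body, head)
        if best is None or candidate < best:
            best = candidate
    return best

def to_sparql(canonical):
    """Serialise a canonical cq; triple patterns and projected
    variables are already in lexicographic order."""
    body, head = canonical
    patterns = " . ".join(" ".join(triple) for triple in body)
    projection = " ".join(head)
    return f"SELECT DISTINCT {projection} WHERE {{ {patterns} }}"

# Two queries that differ only in variable names and pattern order.
q1 = canonical_cq({("?a", ":knows", "?b"), ("?b", ":name", "?n")}, {"?n"})
q2 = canonical_cq({("?y", ":name", "?z"), ("?x", ":knows", "?y")}, {"?z"})
# Same body as q1, but projecting a different variable.
q3 = canonical_cq({("?a", ":knows", "?b"), ("?b", ":name", "?n")}, {"?a"})
```

Because both q1 and q2 range over the same set of relabelled candidates, they produce the same canonical form and hence the same query string, while q3 differs.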

4.5 Other Features

We can represent other (non-mq) features of SPARQL (e.g., filters, optional) as an \(\textsc {r}\text {-graph}\) in an analogous manner to that presented here; thereafter, we can apply canonical labelling over that graph without affecting the semantics of the underlying query. However, we must be cautious with ucq rewriting and minimisation techniques. Currently, in queries with non-ucq features, we detect subqueries that are ucqs (i.e., use only join and union) and apply normalisation only on those ucq subqueries, considering any variable also used outside the ucq as a virtual projected variable. Combined with canonical labelling, this provides a cautious (i.e., sound but incomplete) canonicalisation of non-mq queries.

4.6 Implementation

We implement the described canonicalisation procedure using two main libraries: Jena for parsing and executing SPARQL queries; and Blabel for computing the core of RDF graphs and applying canonical labelling. The containment checks over cqs are implemented using SPARQL ASK queries (with Jena). In the following, we refer to our system as QCan: Query Canonicalisation. Source code is available at https://github.com/RittoShadow/QCan, while a simple online demo can be found at http://qcan.dcc.uchile.cl/.
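The reduction of a cq containment check to an ASK query can be illustrated as follows. This Python sketch only builds the two strings involved and does not call Jena; the freezing scheme (turning ?x into the IRI <urn:var:x>) and the function names are assumptions for illustration. To test whether \(Q_i\) is contained in \(Q_j\), one would load the frozen graph of \(Q_i\) as data and evaluate the ASK query built from \(Q_j\) over it.

```python
def freeze(term):
    # Hypothetical scheme: the variable ?x becomes the IRI <urn:var:x>;
    # constants are returned unchanged.
    return f"<urn:var:{term[1:]}>" if term.startswith("?") else term

def frozen_graph(cq):
    """N-Triples text for cq with every variable frozen to an IRI."""
    return "\n".join(f"{freeze(s)} {freeze(p)} {freeze(o)} ."
                     for s, p, o in sorted(cq))

def ask_query(cq, projected):
    """ASK query for cq: projected variables are frozen (so they must
    match themselves), all other variables stay free."""
    def term(t):
        return freeze(t) if t in projected else t
    body = " . ".join(f"{term(s)} {term(p)} {term(o)}"
                      for s, p, o in sorted(cq))
    return f"ASK WHERE {{ {body} }}"

cq = {("?x", "<http://ex.org/p>", "?y")}
data = frozen_graph(cq)
query = ask_query(cq, {"?x"})
```

The ASK query returns true exactly when the free variables of \(Q_j\) can be mapped into the frozen copy of \(Q_i\), which is the homomorphism condition for containment under set semantics.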

5 Evaluation

We now evaluate the proposed canonicalisation procedure for monotone SPARQL queries. In particular, the main research questions to be empirically assessed are as follows. RQ1: How does the canonicalisation procedure perform in terms of runtime? RQ2: How many additional duplicate queries can the canonicalisation process expect to find versus baseline syntactic methods in a real-world setting? To address these questions, we present two experimental settings. In the first setting, we apply our canonicalisation method over queries from the Linked SPARQL Queries (LSQ) dataset [21], which contains queries taken from the logs of four public SPARQL endpoints. In the second setting, we create a benchmark of more difficult synthetic queries designed to stress-test the process. All experiments were run on a single machine with two Intel Xeon E5-2609 V3 CPUs and 32 GB of RAM running Debian v.7.11.

5.1 Real-World Setting

In the first setting, we perform experiments over queries from endpoint logs taken from the LSQ dataset [21], where we extract the unique strings for SELECT queries that could be parsed successfully by Jena (i.e., that were syntactically valid), resulting in 768,618 queries (see the extended version [20] for details). Over these queries, we then run three experiments with increasingly complete and expensive canonicalisation, as follows.

- Syntactic: We pass the query through the Jena SPARQL parser and serialiser, parsing the query into an abstract algebra and then writing the algebraic query back to a SPARQL query.

- QCan-Label: We parse the query, applying canonical labelling to the query variables and reordering triple patterns according to the order of the canonical labels.

- QCan-Full: We apply the entire canonicalisation procedure, including parsing, labelling, ucq rewriting, minimisation, etc.

We can now address our research questions.

(RQ1:) Per Table 1, canonicalising with QCan-Label is 127 times slower than the baseline Syntactic method, while QCan-Full is 365 times slower than Syntactic and 2.7 times slower than QCan-Label; however, even for the slowest method QCan-Full, the mean canonicalisation time per query is a relatively modest 100 ms. In more detail, Fig. 2 provides boxplots for the runtimes over the queries; we see that most queries under the Syntactic canonicalisation generally take around 0.1–0.3 ms, while most queries under QCan-Label and QCan-Full take 10–100 ms. We did, however, find queries requiring longer: approximately 2.5 s in isolated worst cases for QCan-Full.

(RQ2:) Canonicalising with QCan-Label finds 2.7 times more duplicates than the baseline Syntactic method. On the other hand, canonicalising with QCan-Full finds no more duplicates than QCan-Label: we believe that this observation can be explained by the relatively low ratio of true mq queries in the logs [20], and the improbability of finding redundant patterns in real queries. The largest set of duplicate queries found was 12 in the case of Syntactic and 40 in the case of QCan-Label and QCan-Full.

5.2 Synthetic Setting

Many queries found in the LSQ dataset are quite simple to canonicalise. In order to see how the proposed canonicalisation methods perform for more complex queries, we propose two categories of synthetic query: the first category is designed to test the canonicalisation of cqs, particularly the canonical labelling and intra-cq minimisation steps; the second category is designed to test the canonicalisation of ucqs, particularly the ucq rewriting and inter-cq minimisation steps. Both aim at testing performance rather than duplicates found.

Synthetic CQ Setting. In order to test the minimisation of cqs, we select difficult cases for the canonical labelling and core computation of graphs [12]. More specifically, we select the following three (undirected) graph schemas:

- 2D grids: For \(k \ge 2\), the k-2D-grid contains \(k^2\) nodes, each with a coordinate \((x,y) \in \mathbb {N}_{1\ldots k}^2\), where nodes with distance one are connected; the result is a graph with \(2(k^2 - k)\) edges.

- 3D grids: For \(k \ge 2\), the k-3D-grid contains \(k^3\) nodes, each with a coordinate \((x,y,z) \in \mathbb {N}_{1\ldots k}^3\), where nodes with distance one are connected; the result is a graph with \(3(k^3 - k^2)\) edges.

- Miyazaki: This class of graphs was designed by Miyazaki [15] to enforce a worst-case exponential behaviour in Nauty-style canonical labelling algorithms. For k, each graph has 20k nodes and 30k edges.

To create cqs from these graphs, we represent each edge in the undirected graph by a pair of triple patterns \((v_i,\mathtt {:p},v_j)\), \((v_j,\mathtt {:p},v_i)\), with \(v_i,v_j \in \mathbf {V} \) and \(\mathtt {:p}\) a fixed IRI for all edges. In order to ensure that the canonicalisation involves cq minimisation, we enclose the graph pattern in a SELECT DISTINCT v query, which provides the most challenging case for canonicalisation: applying set semantics and projecting (and thus “fixing”) a single query variable v. We then run the QCan-Full configuration, which for cqs involves computing the core of the \(\textsc {r}\text {-graph}\) and applying canonical labelling. Note that under minimisation, 2D-Grid and 3D-Grid graphs collapse down to a core with a single undirected edge, while Miyazaki graphs collapse down to a core with a 3-cycle.
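As an illustration of how such grid queries can be built, the following Python sketch generates the edges of a k-grid in two or three dimensions and wraps the resulting pairs of triple patterns in a SELECT DISTINCT query. The variable naming scheme and the choice of the corner node as the projected variable are assumptions made here; the text above does not state which variable is projected.

```python
from itertools import product

def grid_edges(k, dims):
    """Undirected edges of the k-grid with `dims` dimensions: nodes are
    coordinate tuples in 1..k, connected when at distance one."""
    edges = set()
    for node in product(range(1, k + 1), repeat=dims):
        for axis in range(dims):
            if node[axis] < k:
                neighbour = node[:axis] + (node[axis] + 1,) + node[axis + 1:]
                edges.add((node, neighbour))
    return edges

def grid_query(k, dims, predicate=":p"):
    """SELECT DISTINCT query with two triple patterns per grid edge."""
    def var(node):
        # Hypothetical naming: node (1, 2) becomes ?v1_2.
        return "?v" + "_".join(map(str, node))

    patterns = []
    for a, b in sorted(grid_edges(k, dims)):
        patterns.append(f"{var(a)} {predicate} {var(b)}")
        patterns.append(f"{var(b)} {predicate} {var(a)}")
    body = " . ".join(patterns)
    projected = var((1,) * dims)  # assumption: project the corner node
    return f"SELECT DISTINCT {projected} WHERE {{ {body} }}"

query_2d = grid_query(2, 2)
```

The edge counts produced by grid_edges agree with the formulas \(2(k^2 - k)\) and \(3(k^3 - k^2)\) given for the two grid schemas, and each edge contributes two triple patterns to the query.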

In Fig. 3 we present the runtimes of the canonicalisation procedure, where we highlight that the y-axis is presented in log scale. We see that instances of 2D-Grid for \(k\le 10\) can be canonicalised in under a second. Beyond that, canonicalisation runtimes lengthen to seconds, minutes and even hours.

Synthetic MQ Setting. To stress-test the performance of the canonicalisation procedure for ucqs, we also performed tests creating mqs in CNF (joins of unions) of the form \((t_{1,1} \cup \ldots \cup t_{1,n})\bowtie \ldots \bowtie (t_{m,1} \cup \ldots \cup t_{m,n})\), where m is the number of joins, n is the number of unions, and \(t_{i,j}\) is a triple pattern sampled (with replacement) from a k-clique of triples with a fixed predicate (such that \(k = m + n\)). Each such query will be rewritten to a query of size \(O(n^m)\). Detailed results are available in the extended paper [20]; in summary, QCan-Full succeeds up to \(m = 4\), \(n = 8\), taking about 7.4 h, or \(m=8\), \(n=2\), taking 3 min; for values of \(m=8\), \(n=4\) and beyond, canonicalisation fails.
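The \(O(n^m)\) blow-up can be seen directly by expanding a join of unions into a union of joins. The Python sketch below generates a random CNF query in the style described above and rewrites it by taking one triple pattern from each union in every possible way. The encoding of the k-clique as directed triples between distinct variables, the seed, and the function names are assumptions made for illustration.

```python
import random
from itertools import product

def clique_triples(k, predicate=":p"):
    """Assumed encoding of a k-clique: one triple pattern per ordered
    pair of distinct variables, all with the same predicate."""
    return [(f"?v{i}", predicate, f"?v{j}")
            for i in range(k) for j in range(k) if i != j]

def random_cnf(m, n, seed=0):
    """m unions of n triple patterns, sampled with replacement."""
    rng = random.Random(seed)
    pool = clique_triples(m + n)
    return [rng.choices(pool, k=n) for _ in range(m)]

def cnf_to_ucq(cnf):
    """Distribute joins over unions: each cq picks one triple pattern
    from every union. Duplicate cqs collapse in the resulting set."""
    return {frozenset(choice) for choice in product(*cnf)}

# With pairwise distinct patterns, all n^m combinations are distinct.
distinct = [[("?a", ":p", "?b"), ("?b", ":p", "?a")],
            [("?a", ":p", "?c"), ("?c", ":p", "?a")],
            [("?b", ":p", "?c"), ("?c", ":p", "?b")]]
ucq = cnf_to_ucq(distinct)
sampled = cnf_to_ucq(random_cnf(m=3, n=2))
```

For \(m = 3\) and \(n = 2\), the rewriting yields at most \(2^3 = 8\) cqs; the bound is reached when all sampled patterns are distinct, while repeated samples can produce fewer.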

6 Conclusions

This paper describes a method for canonicalising SPARQL (1.0) queries considering both set and bag semantics. This canonicalisation procedure – which is sound for all queries and complete for monotone queries – obviates the need to perform pairwise containment/equivalence checks in a list/stream of queries and rather allows for using standard indexing techniques to find congruent queries. The main use-cases we foresee are query caching, optimisation and log analysis.

Our method is based on (1) representing the SPARQL query as an RDF graph, over which are applied (2) algebraic ucq rewritings, (3 – in the case of set semantics) intra-cq and inter-cq normalisation, (4) canonical labelling of variables and ordering of query syntax, before finally (5) converting the graph back to a canonical SPARQL query. As such, by representing the query as a graph, our method leverages existing graph canonicalisation frameworks [12].

Though the worst-case complexity of the algorithm is doubly-exponential, experiments show that canonicalisation is feasible for a large collection of real-world SPARQL queries taken from endpoint logs. Furthermore, we show that the number of duplicates detected more than doubles over baseline syntactic methods. In more challenging experiments involving synthetic settings, however, we quickly start to encounter doubly-exponential behaviour, where the canonicalisation method starts to reach its practical limits. Still, our experiments for real-world queries suggest that such difficult cases do not arise often in practice.

In future work, we plan to extend our methods to consider other query features of SPARQL (1.1), such as subqueries, property paths, negation, and so forth; we also intend to further investigate the popular OPTIONAL operator.

Notes

1. We use, e.g., \(\mathbf {I} \mathbf {B} \mathbf {L} \) as a shortcut for \(\mathbf {I} \cup \mathbf {B} \cup \mathbf {L} \).

2. This is expressed by placing constants in triple patterns.

3. Note that SELECT * is equivalent to returning all variables (or omitting the feature).

4. We assume that cqs without variables may generate an empty mapping (\(\{\mu \}\) with \(\mathrm {dom}(\mu ) = \emptyset \)) if the cq is contained in the data, or no mapping (\(\{\}\)) otherwise. This means we will not remove such cqs (unless they are precisely equal to another cq) as they will generate a tuple of unbounds in the results if and only if the data match.

References

1. Angles, R., Gutierrez, C.: The multiset semantics of SPARQL patterns. In: Groth, P., et al. (eds.) ISWC 2016. LNCS, vol. 9981, pp. 20–36. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46523-4_2

2. Buil-Aranda, C., Hogan, A., Umbrich, J., Vandenbussche, P.-Y.: SPARQL web-querying infrastructure: ready for action? In: Alani, H., et al. (eds.) ISWC 2013. LNCS, vol. 8219, pp. 277–293. Springer, Heidelberg (2013). https://doi.org/10.1007/978-3-642-41338-4_18

3. Arias Gallego, M., Fernández, J.D., Martínez-Prieto, M.A., de la Fuente, P.: An empirical study of real-world SPARQL queries. In: Usage Analysis and the Web of Data (USEWOD) (2011)

4. Chaudhuri, S., Vardi, M.Y.: Optimization of real conjunctive queries. In: Principles of Database Systems (PODS), pp. 59–70. ACM Press (1993)

5. Chekol, M.W., Euzenat, J., Genevès, P., Layaïda, N.: SPARQL query containment under SHI axioms. In: AAAI Conference on Artificial Intelligence (2012)

6. Chekol, M.W., Euzenat, J., Genevès, P., Layaïda, N.: Evaluating and benchmarking SPARQL query containment solvers. In: Alani, H., et al. (eds.) ISWC 2013. LNCS, vol. 8219, pp. 408–423. Springer, Heidelberg (2013). https://doi.org/10.1007/978-3-642-41338-4_26

7. Cyganiak, R., Wood, D., Lanthaler, M.: RDF 1.1 Concepts and Abstract Syntax. W3C Recommendation, February 2014. http://www.w3.org/TR/rdf11-concepts/

8. Gutierrez, C., Hurtado, C.A., Mendelzon, A.O., Pérez, J.: Foundations of semantic web databases. J. Comput. Syst. Sci. 77(3), 520–541 (2011)

9. Harris, S., Seaborne, A., Prud’hommeaux, E.: SPARQL 1.1 Query Language. W3C Recommendation, March 2013. http://www.w3.org/TR/sparql11-query/

10. Hayes, P., Patel-Schneider, P.F.: RDF 1.1 Semantics. W3C Recommendation, February 2014. http://www.w3.org/TR/rdf11-mt/

11. Hogan, A.: Skolemising blank nodes while preserving isomorphism. In: World Wide Web Conference (WWW), pp. 430–440. ACM (2015)

12. Hogan, A.: Canonical forms for isomorphic and equivalent RDF graphs: algorithms for leaning and labelling blank nodes. ACM TWeb 11(4), 22:1–22:62 (2017)

13. Kaminski, M., Kostylev, E.V.: Beyond well-designed SPARQL. In: International Conference on Database Theory (ICDT), pp. 5:1–5:18 (2016)

14. Letelier, A., Pérez, J., Pichler, R., Skritek, S.: Static analysis and optimization of semantic web queries. ACM Trans. Database Syst. 38(4), 25:1–25:45 (2013)

15. Miyazaki, T.: The complexity of McKay’s canonical labeling algorithm. In: Groups and Computation, II, pp. 239–256 (1997)

16. Papailiou, N., Tsoumakos, D., Karras, P., Koziris, N.: Graph-aware, workload-adaptive SPARQL query caching. In: ACM SIGMOD International Conference on Management of Data, pp. 1777–1792. ACM (2015)

17. Pérez, J., Arenas, M., Gutierrez, C.: Semantics and complexity of SPARQL. ACM Trans. Database Syst. 34(3), 16:1–16:45 (2009)

18. Pichler, R., Skritek, S.: Containment and equivalence of well-designed SPARQL. In: Principles of Database Systems (PODS), pp. 39–50 (2014)

19. Sagiv, Y., Yannakakis, M.: Equivalences among relational expressions with the union and difference operators. J. ACM 27(4), 633–655 (1980)

20. Salas, J., Hogan, A.: Canonicalisation of monotone SPARQL queries. Technical report. http://aidanhogan.com/qcan/extended.pdf

21. Saleem, M., Ali, M.I., Hogan, A., Mehmood, Q., Ngomo, A.-C.N.: LSQ: the linked SPARQL queries dataset. In: Arenas, M., et al. (eds.) ISWC 2015. LNCS, vol. 9367, pp. 261–269. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-25010-6_15

22. Saleem, M., Stadler, C., Mehmood, Q., Lehmann, J., Ngomo, A.N.: SQCFramework: SPARQL query containment benchmark generation framework. In: Knowledge Capture Conference (K-CAP), pp. 28:1–28:8 (2017)

23. Schmidt, M., Meier, M., Lausen, G.: Foundations of SPARQL query optimization. In: International Conference on Database Theory (ICDT), pp. 4–33. ACM (2010)

24. Theoharis, Y., Christophides, V., Karvounarakis, G.: Benchmarking database representations of RDF/S stores. In: Gil, Y., Motta, E., Benjamins, V.R., Musen, M.A. (eds.) ISWC 2005. LNCS, vol. 3729, pp. 685–701. Springer, Heidelberg (2005). https://doi.org/10.1007/11574620_49

Acknowledgements

The work was supported by the Millennium Institute for Foundational Research on Data (IMFD) and by Fondecyt Grant No. 1181896.

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Salas, J., Hogan, A. (2018). Canonicalisation of Monotone SPARQL Queries. In: Vrandečić, D., et al. The Semantic Web – ISWC 2018. ISWC 2018. Lecture Notes in Computer Science, vol 11136. Springer, Cham. https://doi.org/10.1007/978-3-030-00671-6_35

DOI: https://doi.org/10.1007/978-3-030-00671-6_35

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-00670-9

Online ISBN: 978-3-030-00671-6

eBook Packages: Computer Science (R0)