Abstract

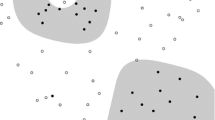

In this paper we address the problem of estimating the parameters of a Gaussian mixture model. Although the EM (Expectation-Maximization) algorithm yields the maximum-likelihood solution, it requires careful initialization of the parameters, and the optimal number of kernels in the mixture may not be known beforehand. We propose a criterion, based on the entropy of the pdf (probability density function) associated with each kernel, to measure the quality of a given mixture model. We propose two different methods for estimating Shannon entropy and present a modification of the classical EM algorithm that finds the optimal number of kernels in the mixture. We test our algorithm on probability density estimation, pattern recognition, and color image segmentation.
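The abstract does not spell out the entropy criterion, so the following is only a minimal sketch of one plausible reading, not the authors' method. It relies on a standard fact: among all densities with a given variance, the Gaussian has the largest differential entropy. If a nonparametric entropy estimate of the data assigned to a kernel falls well below the Gaussian entropy for the same variance, that kernel is probably covering non-Gaussian (for example, multimodal) data. The function names, the 1-D setting, the leave-one-out Parzen estimator, and the Silverman bandwidth are all assumptions made for illustration.

```python
import numpy as np

def gaussian_entropy(x):
    """Entropy (nats) of a 1-D Gaussian whose variance equals the sample variance of x."""
    return 0.5 * np.log(2.0 * np.pi * np.e * np.var(x, ddof=1))

def parzen_entropy(x):
    """Leave-one-out Parzen-window estimate of Shannon entropy (nats) for 1-D data.

    Assumption: Gaussian window with Silverman's rule-of-thumb bandwidth.
    """
    n = x.size
    h = 1.06 * np.std(x, ddof=1) * n ** (-0.2)
    diff = (x[:, None] - x[None, :]) / h
    k = np.exp(-0.5 * diff ** 2) / (h * np.sqrt(2.0 * np.pi))
    np.fill_diagonal(k, 0.0)          # leave each point out of its own density estimate
    p = k.sum(axis=1) / (n - 1)
    return -np.mean(np.log(p))

def entropy_gap(x):
    """Hypothetical quality score: Gaussian entropy minus estimated entropy.

    Near zero suggests the data look Gaussian; clearly positive suggests they do not.
    """
    return gaussian_entropy(x) - parzen_entropy(x)

rng = np.random.default_rng(0)
unimodal = rng.normal(0.0, 1.0, size=1000)
bimodal = np.concatenate([rng.normal(-4.0, 1.0, 500), rng.normal(4.0, 1.0, 500)])

gap_unimodal = entropy_gap(unimodal)  # data that one kernel can describe
gap_bimodal = entropy_gap(bimodal)    # data that one kernel describes poorly
print(f"gap (unimodal): {gap_unimodal:.3f}")
print(f"gap (bimodal):  {gap_bimodal:.3f}")
```

In a model-selection loop, a score like this could flag the kernel with the largest gap as a candidate for splitting. How the paper actually modifies EM is not stated in the abstract.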

Keywords

- Covariance Matrix

- Gaussian Mixture Model

- Entropy Estimation

- Probability Density Estimation

- Color Image Segmentation

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

Copyright information

© 2006 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Peñalver, A., Escolano, F., Sáez, J.M. (2006). Two Entropy-Based Methods for Learning Unsupervised Gaussian Mixture Models. In: Yeung, DY., Kwok, J.T., Fred, A., Roli, F., de Ridder, D. (eds) Structural, Syntactic, and Statistical Pattern Recognition. SSPR /SPR 2006. Lecture Notes in Computer Science, vol 4109. Springer, Berlin, Heidelberg. https://doi.org/10.1007/11815921_71

DOI: https://doi.org/10.1007/11815921_71

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-37236-3

Online ISBN: 978-3-540-37241-7

eBook Packages: Computer Science, Computer Science (R0)