Abstract

Spectral filter arrays are emerging as a multispectral imaging technology that could benefit several applications. Although several instantiations have been prototyped and commercialized, only a few raw datasets are yet available to serve research and to help evaluate and design suitable image processing algorithms. This document presents a freely available database of spectral filter array images that combine visible and near infra-red information.

1 Introduction

Multispectral imaging (MSI) has proven beneficial to a great variety of applications, but its use in general computer vision has been limited by the complexity of the imaging set-up, calibration and specific imaging pipelines. Spectral filter array (SFA) technology [9] seems to provide an adequate solution to overcome this limitation. Indeed, increasing the number of spectral bands in filter arrays, along with using a high-resolution sensor, could lead to a small, efficient and affordable solution for single-shot MSI. In addition, SFA was developed around an imaging pipeline very similar to that of color filter arrays (CFA), which is rather well understood and already implemented in most solutions. In this sense, SFA provides a conceptual solution that could be exploited in actual vision systems in a relatively straightforward manner.

We consider that SFA technology may soon be used on a large scale. On the one hand, the SFA concept has been developed to a great extent using data from simulations, in particular on demosaicing [11, 12, 14, 26, 27, 29], but also on other aspects [8, 17, 21, 24, 28]. On the other hand, recent practical work on optical filters [1, 15, 16, 30], in parallel with the development of SFA camera prototypes in the visible electromagnetic range [5], in the near infra-red (NIR) [2] and in combined visible and NIR [7, 25], has led to the commercialization of solutions (e.g. IMEC [3], SILIOS [23], PIXELTEQ [18]). Furthermore, several cameras include custom filter arrays that are in-between CFA and SFA (e.g. Jia et al. [4] and Monno et al. [13]). What remains is to validate the simulations with real data and to adapt the imaging framework before stating that this solution is ready for practical applications. We help to address the first point by providing experimental data that can serve to validate simulations.

Through this article, we provide a freely accessible database of SFA images. The spectral calibration of the camera and the illuminant used during acquisition are provided along with several SFA raw images of various scenes. These data can be used as benchmarks for future works by the research community, and could lead to further development on SFA technology.

In the following sections, we first describe the camera design in terms of spectral sensitivity, spatial arrangement and hardware. We then present the method used to construct our SFA database, including the experimental setup and the illuminant used. Finally, we provide a first benchmark that exploits the data: a visualization framework to display the multispectral data as an sRGB representation. To conclude, we outline the potential use of the proposed database in research and discuss future work.

Fig. 1. (a) Joint spectral characteristics of the optical filters and sensor of the camera used to acquire the database images [25]. (b) Spatial distribution of the filters over the sensor, following the method of Miao et al. [10]. (c) Camera designed at the Le2i laboratory, composed of an FPGA board and an attached sensor board holding the detector array.

2 Camera Design

Building on our previous work [9, 25], we designed and developed a proof-of-concept prototype SFA imaging system that achieves multispectral snapshot capabilities. The camera setup is based on a commercial sensor coupled with a hybrid filter array for recovering visible and NIR information. The commercial sensor is from the manufacturer e2v [22]. The associated spectral filter array is manufactured and hybridized by Silios Technologies [23]. The relative spectral sensitivities of the camera cover the electromagnetic spectrum from 380 nm to 1100 nm. The spectral characterization of the camera is fully described in the related paper [25]. The resulting characteristics of this vision system are shown in Fig. 1. Building on this work, we provide a set of data intended to support further practical investigation.

3 Database Description

We captured 18 scenes composed of several categories of objects: metallic objects, biological objects, spatially homogeneous or heterogeneous objects, spectrally homogeneous or heterogeneous objects, objects showing specular reflections, translucent materials, objects containing industrial pigments or art pigments, clothes, etc. For the dataset, we fixed a single exposure time, a single aperture and a single illuminant to limit the multiple-parameter dependence problems that could arise when analyzing multispectral images.

In practice, data are retrieved from our camera through an Ethernet connection linking the FPGA board (Zedboard, see Fig. 1(c)) and a PC. The FPGA holds a mezzanine card, which is an electronic interface to the SFA sensor (the electronic design was initially developed by Lapray et al. [6]). Information concerning the hardware, such as the optics, the electronics and the exposure times, is given in Table 2. A simulated D65 source was chosen to illuminate the scene (see Fig. 2). Each object was small enough to fit in a region where the illumination was assumed to be sufficiently uniform; we will see later, however, that the illumination is far from flat.

A pre-processing step is necessary before any use of the produced images. This processing consists of a dark correction and a downsampling; it is described in Thomas et al. [25]. All the images provided with this document are pre-processed accordingly and ready to use. The mosaiced images of the database are shown in Fig. 3. The entire database can be freely downloaded at http://chic.u-bourgogne.fr. The zip file is organised according to Table 1.

4 Obtention of Color Images

Before performing any visualization, it is necessary to reconstruct the full-resolution color image from the sampled spectral mosaiced data.

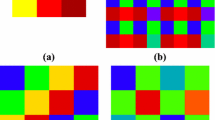

Since the information acquired with the SFA method is intrinsically sparse over the full image resolution, we need a means to reconstruct the full spatial information of the spectral image. Here, the demosaicing algorithm of Miao et al. [10] is employed. This method is a natural choice of benchmark because the spatial arrangement of our filters (see Fig. 1(b)) was specifically selected following this method. There are 8 channels in the camera design, thus 8 independent images are produced from one mosaiced image. An example of a demosaiced image is shown in Fig. 4. These images are stored in a multiband TIFF file in the database. Note that we have not implemented any devignetting correction on the data; vignetting is therefore visible in some images.

Fig. 4. Example of a demosaiced image from the database. The interpolation is performed with the binary tree algorithm of Miao et al. [10], so that channels 1 to 8 are reconstructed at the full spatial resolution of the image.
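To make the demosaicing step concrete, the following minimal sketch splits a mosaiced frame into 8 sparse channel planes and fills the missing samples by averaging same-channel neighbours. It is not the binary tree algorithm of Miao et al. [10], only a simple baseline of the kind the benchmark could be compared against. The 2 x 4 layout, the function names and the random input frame are assumptions made for illustration; they do not describe the actual filter arrangement or files of the database.

```python
import numpy as np

def split_mosaic(raw, pattern):
    """Split a mosaiced image into sparse per-channel planes.

    raw: (H, W) mosaiced image; pattern: small integer array giving the
    channel index of each pixel of the periodic filter layout.
    Returns (values, masks), both shaped (n_channels, H, W).
    """
    h, w = raw.shape
    ph, pw = pattern.shape
    # Tile the periodic layout over the sensor, then crop to the image size.
    tiled = np.tile(pattern, (h // ph + 1, w // pw + 1))[:h, :w]
    n_channels = int(pattern.max()) + 1
    masks = np.stack([tiled == c for c in range(n_channels)])
    values = np.where(masks, raw[None, :, :], 0.0)
    return values, masks

def demosaic_average(raw, pattern):
    """Fill missing samples with the mean of same-channel neighbours."""
    values, masks = split_mosaic(np.asarray(raw, dtype=float), pattern)
    _, h, w = values.shape
    ry, rx = pattern.shape[0] - 1, pattern.shape[1] - 1
    pad = ((0, 0), (ry, ry), (rx, rx))
    padded_v = np.pad(values, pad)
    padded_m = np.pad(masks.astype(float), pad)
    total = np.zeros_like(values)
    count = np.zeros_like(values)
    # Sum values and sample counts over a (2*ry+1) x (2*rx+1) window. For an
    # image at least as large as the pattern, this window always contains at
    # least one sample of every channel.
    for dy in range(2 * ry + 1):
        for dx in range(2 * rx + 1):
            total += padded_v[:, dy:dy + h, dx:dx + w]
            count += padded_m[:, dy:dy + h, dx:dx + w]
    estimate = total / count
    # Keep the measured samples untouched.
    return np.where(masks, values, estimate)

# Hypothetical 2 x 4 layout with 8 channels (NOT the camera's real layout).
pattern = np.arange(8).reshape(2, 4)
raw = np.random.default_rng(0).uniform(0, 1, (64, 96))  # placeholder frame
cube = demosaic_average(raw, pattern)                   # shape (8, 64, 96)
```

The averaging window is deliberately crude: it blurs edges and ignores inter-channel correlation, which is precisely what more elaborate SFA demosaicing methods try to improve on.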

The color version of these images, shown in Fig. 5, is obtained by fitting a linear color transform from the 8 bands to CIEXYZ values, which are then converted to sRGB values. The linear model is based on the reflectance measurements of the GretagMacbeth ColorChecker in the visible and NIR, shown in Fig. 6.

The model is defined by a matrix M that transforms the color values C of the object into sensor values S, as in Eq. 1:

\[ S = M\,C \quad \Rightarrow \quad C = M^{+} S \qquad (1) \]

where \(M^+\) is the generalized inverse of M (here, the Moore-Penrose pseudo-inverse), computed from the data obtained by integrating the GretagMacbeth reflectance spectra and the illumination over the sensor sensitivities and over the CIE 1931 2-degree standard observer, according to the CIE recommendations. To this end, all data are re-sampled at 10 nm using linear interpolation, and the normalization factor k is computed according to \(\bar{y}(\lambda )\) and the normalized illumination of Fig. 2. The data are provided in Table 3.
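The fitting procedure described above can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: the function names are hypothetical, the spectra are random placeholders rather than the values of Table 3, and M is estimated here in the least-squares sense from the 24 chart patches before being pseudo-inverted.

```python
import numpy as np

def resample(wl_src, values, wl_dst):
    """Linearly interpolate a spectrum onto a common wavelength grid."""
    return np.interp(wl_dst, wl_src, values)

def integrate(reflectances, illuminant, responses, k=1.0):
    """Discrete integral of reflectance x illuminant x response.

    reflectances: (n_patches, n_wl), illuminant: (n_wl,),
    responses: (n_resp, n_wl). Returns an array (n_resp, n_patches).
    """
    return k * responses @ (reflectances * illuminant).T

def fit_model(C, S):
    """Least-squares M such that S ~= M C, together with its pseudo-inverse."""
    M = S @ np.linalg.pinv(C)
    return M, np.linalg.pinv(M)

# Placeholder spectra on a 10 nm grid (random values, NOT the published data).
rng = np.random.default_rng(0)
wl = np.arange(380, 1101, 10)
n_wl = wl.size
reflectances = rng.uniform(0.05, 0.95, (24, n_wl))  # 24 chart patches
illuminant = rng.uniform(0.5, 1.0, n_wl)            # stand-in for the source
cmf = rng.uniform(0.0, 1.0, (3, n_wl))              # stand-in for x-bar, y-bar, z-bar
sensitivities = rng.uniform(0.0, 1.0, (8, n_wl))    # stand-in for the 8 bands

# Normalization so that a perfect white reflector gives Y = 100.
k = 100.0 / np.sum(cmf[1] * illuminant)
C = integrate(reflectances, illuminant, cmf, k)          # XYZ values, (3, 24)
S = integrate(reflectances, illuminant, sensitivities)   # sensor values, (8, 24)

M, M_pinv = fit_model(C, S)
C_est = M_pinv @ S   # estimated XYZ values for the 24 patches, (3, 24)
```

With real data, the same `M_pinv` would be applied to the 8 demosaiced values of every pixel to obtain its CIEXYZ estimate.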

The CIEXYZ values are then transformed into sRGB following the standard formulation, and only an implicit gamut mapping, i.e. a clipping, is performed. Although for a three-band sensor the Luther-Ives condition may not be satisfied and a linear transform would probably not be sufficient, in our case of multispectral values the colorimetric error is very small. Note, however, that even though we incorporated the NIR part in the color characterization, the sensitivity of the sensor in the NIR domain affects the accuracy of the color reconstruction due to some amount of metamerism. Indeed, as shown by Sadeghipoor et al. [19, 20], the NIR contribution to the signal is a source of noise for color accuracy. Our database may also help to evaluate the processing needed to correct these data.
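For reference, the XYZ-to-sRGB conversion with clipping mentioned at the start of this paragraph can be sketched as below. The matrix and transfer function are the standard sRGB (D65) formulation; the function name is hypothetical, and the sketch assumes XYZ values scaled so that Y = 1 for white (values scaled to Y = 100 should be divided by 100 first).

```python
import numpy as np

# Standard XYZ (D65) to linear sRGB matrix.
XYZ_TO_SRGB = np.array([
    [ 3.2406, -1.5372, -0.4986],
    [-0.9689,  1.8758,  0.0415],
    [ 0.0557, -0.2040,  1.0570],
])

def xyz_to_srgb(xyz):
    """Convert XYZ (Y scaled to [0, 1], shape (..., 3)) to sRGB in [0, 1]."""
    linear = np.asarray(xyz, dtype=float) @ XYZ_TO_SRGB.T
    # Implicit gamut mapping: out-of-gamut values are simply clipped.
    linear = np.clip(linear, 0.0, 1.0)
    # sRGB transfer function (linear segment near black, power law elsewhere).
    return np.where(linear <= 0.0031308,
                    12.92 * linear,
                    1.055 * np.power(linear, 1.0 / 2.4) - 0.055)
```

Because the last axis holds the three tristimulus values, the function can be applied directly to a full image of shape (H, W, 3).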

5 Conclusion

We acquired a database of SFA images with a prototype sensor sensitive in the visible and NIR parts of the electromagnetic spectrum. A great variety of objects has been captured, and the acquisition parameters, such as the scene illumination, have been measured. In addition, the colorimetric transform used to generate color images is provided. A benchmark demosaicing performed with the most established demosaicing method for SFA is also given. These data may serve for the evaluation of state-of-the-art demosaicing and color reconstruction methods, as well as for further development and proof of concept in this field.

References

1. Eichenholz, J.M., Dougherty, J.: Ultracompact fully integrated megapixel multispectral imager. In: SPIE OPTO: Integrated Optoelectronic Devices, p. 721814. International Society for Optics and Photonics (2009)

2. Geelen, B., Blanch, C., Gonzalez, P., Tack, N., Lambrechts, A.: A tiny VIS-NIR snapshot multispectral camera. In: SPIE OPTO, p. 937414. International Society for Optics and Photonics (2015)

3. IMEC: Hyperspectral-imaging. http://www2.imec.be

4. Jia, J., Barnard, K.J., Hirakawa, K.: Fourier spectral filter array for optimal multispectral imaging. IEEE Trans. Image Process. 25(4), 1530–1543 (2016)

5. Kiku, D., Monno, Y., Tanaka, M., Okutomi, M.: Simultaneous capturing of RGB and additional band images using hybrid color filter array. In: Proceedings of SPIE, vol. 9023, pp. 90230V–90230V-9 (2014). http://dx.doi.org/10.1117/12.2039396

6. Lapray, P.J., Heyrman, B., Ginhac, D.: Hardware-based smart camera for recovering high dynamic range video from multiple exposures. Opt. Eng. 53(10), 102110 (2014). http://dx.doi.org/10.1117/1.OE.53.10.102110

7. Lapray, P.J., Thomas, J.B., Gouton, P.: A multispectral acquisition system using MSFAs. In: Color and Imaging Conference 2014, pp. 97–102 (2014). http://www.ingentaconnect.com/content/ist/cic/2014/00002014/00002014/art00016

8. Lapray, P.J., Thomas, J.B., Gouton, P., Ruichek, Y.: Energy balance in spectral filter array camera design. J. Eur. Opt. Soc. Rapid Pub. 13(1), 1 (2017). http://dx.doi.org/10.1186/s41476-016-0031-7

9. Lapray, P.J., Wang, X., Thomas, J.B., Gouton, P.: Multispectral filter arrays: recent advances and practical implementation. Sensors 14(11), 21626 (2014). http://www.mdpi.com/1424-8220/14/11/21626

10. Miao, L., Qi, H., Ramanath, R., Snyder, W.E.: Binary tree-based generic demosaicking algorithm for multispectral filter arrays. IEEE Trans. Image Process. 15(11), 3550–3558 (2006)

11. Mihoubi, S., Losson, O., Mathon, B., Macaire, L.: Multispectral demosaicing using intensity-based spectral correlation. In: The fifth International Conference on Image Processing Theory, Tools and Applications, IPTA 2015, Orléans (2015). https://hal.archives-ouvertes.fr/hal-01196983

12. Mihoubi, S., Losson, O., Mathon, B., Macaire, L.: Multispectral demosaicing using intensity in edge-sensing and iterative difference-based methods. In: SITIS, COMI 2016, Naples, December 2016

13. Monno, Y., Kikuchi, S., Tanaka, M., Okutomi, M.: A practical one-shot multispectral imaging system using a single image sensor. IEEE Trans. Image Process. 24(10), 3048–3059 (2015)

14. Monno, Y., Tanaka, M., Okutomi, M.: N-to-sRGB mapping for single-sensor multispectral imaging. In: 2015 IEEE International Conference on Computer Vision Workshop (ICCVW), pp. 66–73, December 2015

15. Park, H., Crozier, K.B.: Multispectral imaging with vertical silicon nanowires. Sci. Rep. 3, 2460 (2013)

16. Park, H., Dan, Y., Seo, K., Yu, Y.J., Duane, P.K., Wober, M., Crozier, K.B.: Vertical silicon nanowire photodetectors: spectral sensitivity via nanowire radius. In: CLEO: Science and Innovations, p. CTh3L-5. Optical Society of America (2013)

17. Péguillet, H., Thomas, J.B., Ruichek, Y., Gouton, P.: Energy balance in single exposure multispectral sensors. In: CVCS 2013 (2013)

18. PIXELTEQ: Micro-patterned optical filters. https://pixelteq.com/

19. Sadeghipoor, Z., Lu, Y.M., Süsstrunk, S.: Correlation-based joint acquisition and demosaicing of visible and near-infrared images. In: 2011 18th IEEE International Conference on Image Processing (ICIP), pp. 3165–3168, September 2011

20. Sadeghipoor, Z., Thomas, J.B., Süsstrunk, S.: Demultiplexing visible and near-infrared information in single-sensor multispectral imaging. In: Color and Imaging Conference 2016, (24), 1 (2016). http://www.ingentaconnect.com/content/ist/cic/2016

21. Shrestha, R., Hardeberg, J.Y., Khan, R.: Spatial arrangement of color filter array for multispectral image acquisition. In: Proceedings of SPIE, vol. 7875, pp. 787503–787503-9 (2011). http://dx.doi.org/10.1117/12.872253

22. e2v Technologies: Ev76c661 BW and colour CMOS sensor (2009). http://www.e2v.com/products-and-services/high-performance-imaging-solutions/imaging-solutions-cmos-ccd-emccd/, www.e2v.com

23. SILIOS Technologies: Micro-optics supplier. http://www.silios.com/

24. Thomas, J.: Illuminant estimation from uncalibrated multispectral images. In: Colour and Visual Computing Symposium, CVCS 2015, Gjovik, 25–26 August 2015, pp. 1–6. IEEE (2015). http://dx.doi.org/10.1109/CVCS.2015.7274900

25. Thomas, J.B., Lapray, P.J., Gouton, P., Clerc, C.: Spectral characterization of a prototype SFA camera for joint visible and NIR acquisition. Sensors 16(7), 993 (2016). http://www.mdpi.com/1424-8220/16/7/993

26. Wang, C., Wang, X., Hardeberg, J.Y.: A linear interpolation algorithm for spectral filter array demosaicking. In: Elmoataz, A., Lezoray, O., Nouboud, F., Mammass, D. (eds.) ICISP 2014. LNCS, vol. 8509, pp. 151–160. Springer, Cham (2014). doi:10.1007/978-3-319-07998-1_18

27. Wang, X., Thomas, J.B., Hardeberg, J.Y., Gouton, P.: Discrete wavelet transform based multispectral filter array demosaicking. In: Colour and Visual Computing Symposium (CVCS 2013), pp. 1–6 (2013)

28. Wang, X., Green, P.J., Thomas, J.-B., Hardeberg, J.Y., Gouton, P.: Evaluation of the colorimetric performance of single-sensor image acquisition systems employing colour and multispectral filter array. In: Trémeau, A., Schettini, R., Tominaga, S. (eds.) CCIW 2015. LNCS, vol. 9016, pp. 181–191. Springer, Cham (2015). doi:10.1007/978-3-319-15979-9_18

29. Wang, X., Thomas, J.B., Hardeberg, J.Y., Gouton, P.: Median filtering in multispectral filter array demosaicking. In: Proceedings of SPIE (EI), vol. 8660, pp. 86600E–86600E-10 (2013). http://dx.doi.org/10.1117/12.2005256

30. Yi, D., Kong, L., Wang, J., Zhao, F.: Fabrication of multispectral imaging technology driven MEMS-based micro-arrayed multichannel optical filter mosaic. In: SPIE MOEMS-MEMS, p. 792711. International Society for Optics and Photonics (2011)

© 2017 Springer International Publishing AG

Cite this paper

Lapray, PJ., Thomas, JB., Gouton, P. (2017). A Database of Spectral Filter Array Images that Combine Visible and NIR. In: Bianco, S., Schettini, R., Trémeau, A., Tominaga, S. (eds) Computational Color Imaging. CCIW 2017. Lecture Notes in Computer Science(), vol 10213. Springer, Cham. https://doi.org/10.1007/978-3-319-56010-6_16

DOI: https://doi.org/10.1007/978-3-319-56010-6_16

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-56009-0

Online ISBN: 978-3-319-56010-6

eBook Packages: Computer Science (R0)