Abstract

Ultrasound-based interventions require experience and good hand-eye coordination. Especially for non-experts, correctly guiding a handheld probe towards a target, and staying there, poses a remarkable challenge. We augment a commercial vision-based instrument guidance system with haptic feedback to keep operators on target. A user study shows significant improvements across deviation, time, and ease-of-use when coupling standard ultrasound imaging with visual feedback, haptic feedback, or both.


1 Introduction

The use of ultrasound for interventional guidance has expanded significantly over the past decade. With research showing that ultrasound guidance improves patient outcomes in procedures such as central vein catheterizations and peripheral nerve blocks [3, 7], the relevant professional certification organizations began recommending ultrasound guidance as the gold standard of care, e.g. [1, 2]. Some ultrasound training is now provided in medical school, but often solely involves the visualization and identification of anatomical structures – a very necessary skill, but not the only one required [11].

Simultaneous visualization of targets and instruments (usually needles) with a single 2D probe is a significant challenge. The difficulty of maintaining alignment (between probe, instrument, and target) is a major reason for extended intervention duration [4]. Furthermore, if target or needle visualization is lost due to probe slippage or tipping, the user has no direct feedback to find them again. Prior work has shown that bimanual tasks are difficult if the effects of the movements of both hands are not visible in the workspace; when visual alignment is lacking, users must rely on their proprioception, which has an error of up to 5 cm in position and 10° in orientation at the hands [9]. This is a particular challenge for novice or infrequent ultrasound users, as this error is on the order of the range of unintended motion during ultrasound scans. Clinical accuracy limits (e.g. for deep biopsies of lesions) are greater than 10 mm in diameter. With US beam thickness at depth easily exceeding 2 cm, continuous correct visualization of target and needle and a steady probe position are critical challenges. Deviations smaller than 10 mm practically cannot be confirmed by US alone. One study [13] found that the second most common error of anesthesiology novices during needle block placement (occurring in 27 % of cases) was unintentional probe movement.

One solution to this problem is to provide corrective guidance to the user. Prior work in haptic guidance used vibrotactile displays effectively in tasks where visual load is high [12]. The guiding vibrations can free up cognitive resources for more critical task aspects. Combined visual and haptic feedback has been shown to decrease error [10] and reaction time [16] compared with visual feedback alone, and has been shown to be most effective in tasks with a high cognitive load [6].

Handheld ultrasound scanning systems with visual guidance or actuated feedback do exist [8], but are either limited to just initial visual positioning guidance when using camera-based local tracking [15], or offer active position feedback only for a small range of motion and require external tracking [5].

To improve this situation, we propose a method for intuitive, always-available, direct probe guidance relative to a clinical target, with no change to standard workflows. The innovation we describe here is Plane Assist: ungrounded haptic (tactile) feedback signaling which direction the user should move to bring the ultrasound imaging plane into alignment with the target. Ergonomically, such feedback helps to avoid information overload while allowing for full situational awareness, making it particularly useful for less experienced operators.

2 Vision-Based Guidance System and Haptic Feedback

Image guidance provides the user with information that helps align instruments, targets, and possibly imaging probes, facilitating successful instrument handling relative to anatomical targets. This guidance information can be provided visually, haptically, or auditorily. In this study we consider visual guidance, haptic guidance, and their combination for ultrasound-based interventions.

2.1 Visual Guidance

For visual guidance, we use a Clear Guide ONE (Clear Guide Medical, Inc., Baltimore MD; Fig. 1), which adds instrument guidance capabilities to regular ultrasound machines for needle-based interventions. Instrument and ultrasound probe tracking is based on computer vision, using wide-spectrum stereo cameras mounted on a standard clinical ultrasound transducer [14]. Instrument guidance is displayed as a dynamic overlay on live ultrasound imaging.

Fiducial markers are attached to the patient's skin in the cameras' field of view to permit dynamic target tracking. The operator initially defines a target by tapping on the live ultrasound image. If the cameras observe a marker during this target definition, further visual tracking of that marker allows continuous 6-DoF localization of the probe. This target tracking enhances the operator's ability to maintain probe alignment with a chosen target. During the intervention, (inadvertent) deviations from this reference pose relative to the target, or vice versa in the case of actual anatomical target motion, are tracked, and guidance to the target is indicated through audio and on-screen visual cues (needle lines, moving target circles, and targeting crosshairs; Fig. 1).

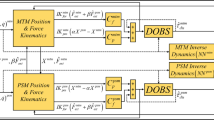

From an initial target pose \(^{US}P\) in the ultrasound (US) coordinate frame and the camera/ultrasound calibration transformation matrix \(^{C}T^{US}\), one determines the pose of the target in the original camera frame (C):

\[ {}^{C}P = {}^{C}T^{US}\, {}^{US}P \]

In a subsequent frame, where the same marker is observed in the new camera coordinate frame (C, t), one finds the transformation between the two camera frames (\(^{C,t}T^{C}\)) by simple rigid registration of the two marker corner point sets. The target is then found in the new ultrasound frame (US, t):

\[ {}^{US,t}P = {}^{US,t}T^{C,t}\, {}^{C,t}T^{C}\, {}^{C}P \]
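The text specifies only "simple rigid registration" of the marker corner point sets. A standard choice for this step is the SVD-based least-squares fit (Kabsch/Horn method); the sketch below assumes that method and numpy, and the function name `rigid_register` is hypothetical. It is not the Clear Guide ONE implementation. Passing the corners observed in frame C as `src` and the same corners observed in frame (C, t) as `dst` yields \(^{C,t}T^{C}\) as a 4×4 homogeneous matrix.

```python
import numpy as np

def rigid_register(src: np.ndarray, dst: np.ndarray) -> np.ndarray:
    """Least-squares rigid transform (Kabsch/Horn, via SVD) mapping src onto dst.

    src, dst: (N, 3) arrays of corresponding marker corner points, N >= 3,
              not all collinear (hypothetical helper for illustration).
    Returns a 4x4 homogeneous matrix T such that dst_i ~= R @ src_i + t.
    """
    src_c = src.mean(axis=0)
    dst_c = dst.mean(axis=0)
    # 3x3 cross-covariance of the centered point sets
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Force det(R) = +1 so the result is a rotation, not a reflection
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    D = np.diag([1.0, 1.0, d])
    R = Vt.T @ D @ U.T
    t = dst_c - R @ src_c
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T
```

The determinant correction keeps the result a proper rotation; it also makes the fit well defined for a flat marker, whose coplanar corners leave one singular value of the cross-covariance at zero.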

Noting that the ultrasound and camera frames are fixed relative to each other (\(^{US,t}T^{C,t} =\, ^{US}T^{C}\)), and expanding, we get the continuously updated target position in the ultrasound frame:

\[ {}^{US,t}P = {}^{US}T^{C}\, {}^{C,t}T^{C}\, {}^{C}T^{US}\, {}^{US}P \]
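The expanded chain can be evaluated in a few lines. This is a sketch assuming 4×4 homogeneous matrices, numpy, and the convention that \(^{A}T^{B}\) maps coordinates from frame B into frame A; the function and argument names are hypothetical. The fixed calibration \(^{C}T^{US}\) is inverted once to obtain \(^{US}T^{C}\).

```python
import numpy as np

def update_target(p_us, T_c_us, T_ct_c):
    """Return ^{US,t}P = ^{US}T^{C} @ ^{C,t}T^{C} @ ^{C}T^{US} @ ^{US}P.

    p_us   : initial target position ^{US}P (3-vector, mm)
    T_c_us : camera/US calibration ^{C}T^{US} (4x4, maps US -> C)
    T_ct_c : camera motion ^{C,t}T^{C} (4x4, maps C -> (C, t)),
             e.g. obtained by registering the marker corner points
    """
    T_us_c = np.linalg.inv(T_c_us)                       # ^{US}T^{C}, constant over time
    p_h = np.append(np.asarray(p_us, dtype=float), 1.0)  # homogeneous coordinates
    return (T_us_c @ T_ct_c @ T_c_us @ p_h)[:3]
```

When the camera has not moved (\(^{C,t}T^{C}\) equal to the identity), the chain collapses to \(^{US}P\), as expected. If the US frame's z-axis is taken to be normal to the image plane (an assumption about the frame convention), the z-component of the result is the target's out-of-plane offset.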

This information can be used for both visual and haptic (see below) feedback.

2.2 Haptic Guidance

To add haptic cues to this system, two C-2 tactors (Engineering Acoustics, Inc., Casselberry, FL) were embedded in a silicone band attached to the ultrasound probe, as shown in Fig. 2. Each tactor is 3 cm wide and 0.8 cm tall, and has a mass of 17 g. The haptic feedback band adds 65 g of mass and 2.5 cm of thickness to the ultrasound probe. The tactors were located on the sides of the probe to provide feedback that corrects unintentional probe tilting. Although other degrees of freedom (e.g. probe translation) also cause misalignment between the US plane and the target, we focus this initial implementation on tilting because our pilot study showed that tilting is one of the largest contributors to error between the US plane and the target.

Haptic feedback is provided to the user if the target location is farther than 2 mm from the ultrasound plane. This \(\pm 2\) mm deadband thus corresponds to different amounts of probe tilt for different target depths (see Note 1). The tactor on the side corresponding to the direction of tilt vibrates with an amplitude proportional to the amount of deviation.
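A minimal sketch of this rule follows, under the ideal plane geometry of Note 1 and assuming that the target's out-of-plane offset is the z-component of \(^{US,t}P\). The gain, the clamping of the amplitude to [0, 1], and the left/right sign convention are hypothetical; the text specifies only the 2 mm deadband and the proportional amplitude. The helper `tilt_for_deadband` makes the depth dependence explicit: for pure tilt by an angle θ about the transducer's long axis at the skin, a target at depth d leaves the image plane by d·sin θ, so the deadband is reached at θ = arcsin(2 mm / d).

```python
import math

DEADBAND_MM = 2.0  # from the text: feedback only when |deviation| > 2 mm

def tilt_for_deadband(depth_mm: float, deadband_mm: float = DEADBAND_MM) -> float:
    """Tilt (degrees) at which a target at depth_mm leaves the deadband.

    Assumes ideal geometry and pure tilt about the transducer's long axis,
    so the out-of-plane offset is depth * sin(tilt).
    """
    if depth_mm <= deadband_mm:
        raise ValueError("target depth must exceed the deadband")
    return math.degrees(math.asin(deadband_mm / depth_mm))

def tactor_command(target_us, gain_per_mm=0.1, deadband_mm=DEADBAND_MM):
    """Map the target position in the current US frame to (side, amplitude).

    target_us  : (x, y, z) in mm; z is the out-of-plane (elevational) offset.
    gain_per_mm: hypothetical gain; amplitude is clamped to [0, 1].
    Returns (None, 0.0) while the target is inside the deadband.
    """
    deviation = target_us[2]
    if abs(deviation) <= deadband_mm:
        return None, 0.0
    # Hypothetical convention for which side corresponds to which tilt direction
    side = "left" if deviation > 0 else "right"
    amplitude = min(1.0, gain_per_mm * abs(deviation))
    return side, amplitude
```

For the 3 cm and 12 cm extremes of the target depths used in the study, `tilt_for_deadband` gives roughly 3.8° and 0.95° of tilt, respectively, which illustrates why a fixed distance threshold is stricter in angle for deep targets.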

3 Experimental Methods

We performed a user study to test the effectiveness of haptic feedback in reducing unintended probe motion during a needle insertion task. All procedures were approved by the Stanford University Institutional Review Board. Eight right-handed novice non-medical students were recruited for the study (five male, three female, 22–43 years old). Novice subjects were used as an approximate representation of medical residents’ skills to evaluate the effect of both visual and haptic feedback on the performance of inexperienced users and to assess the efficacy of this system for use in training. (Other studies indicate that the system shows the greatest benefit with non-expert operators.)

3.1 Experiment Set-Up

In the study, the participants used the ultrasound probe to image a synthetic homogeneous gelatin phantom (Fig. 2(b)) with surface-attached markers for probe pose tracking. After target definition, the participants used the instrument guidance of the Clear Guide system to bring a needle into the ultrasound plane and align its trajectory with the target. After alignment, they inserted the needle into the phantom until reaching the target, and the experimenter recorded the success or failure of each trial. Success was determined by observing the needle on the ultrasound image: if it intersected the target, the trial was a success, otherwise a failure. The system continuously recorded the target position in ultrasound coordinates (\(^{US,t}P\)) for all trials.

Fig. 2. (a) Ultrasound probe, augmented with cameras for visual tracking of probe and needle, and a tactor band for providing haptic feedback. (b) Participant performing a needle insertion trial into a gelatin phantom using visual needle and target guidance on the screen, and haptic target guidance through the tactor band.

3.2 Guidance Conditions

Each participant was asked to complete sixteen needle insertion trials. At the beginning of each trial, the experimenter selected one of four pre-specified target locations ranging from 3 cm to 12 cm in depth. When the experimenter defined a target location on the screen, the system saved the current position and orientation of the ultrasound probe as the reference pose.

During each trial, the system determines the current position and orientation of the ultrasound probe and calculates its deviation from the reference pose. Once the current probe/target deviation is computed, the operator is informed of the required repositioning through two forms of feedback: (1) Standard visual feedback (graphic overlays on the live US stream shown on-screen) indicates the current target location as estimated by visual tracking and the probe motion necessary to re-visualize the target in the US viewport. The needle guidance is also displayed as blue/green lines on the live imaging stream. (2) Haptic feedback is presented as vibration on either side of the probe to indicate the direction of probe tilt from its reference pose. The participants were instructed to tilt the probe away from the vibration to correct for the unintended motion.

Each participant completed four trials under each of four feedback conditions: no feedback (standard US imaging with no additional guidance), visual feedback only, both visual and haptic feedback, and haptic feedback only. The conditions and target locations were randomized and distributed across all sixteen trials to mitigate learning effects and differences in difficulty between target locations. Participants received each feedback and target location pair once.
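The text does not detail the randomization procedure. One simple way to satisfy the stated constraint (each feedback condition paired with each target location exactly once) is to shuffle the full 4 × 4 factorial design for each participant, as sketched below. The condition and target labels are hypothetical placeholders, and a plain shuffle does not balance trial order across participants; a Latin-square arrangement would be one way to add such counterbalancing.

```python
import itertools
import random

CONDITIONS = ["none", "visual", "visual+haptic", "haptic"]
TARGETS = ["T1", "T2", "T3", "T4"]  # hypothetical labels for the four target depths

def trial_schedule(seed=None):
    """Return 16 (condition, target) pairs, each pair exactly once, in random order."""
    pairs = list(itertools.product(CONDITIONS, TARGETS))
    rng = random.Random(seed)  # per-participant seed for reproducibility
    rng.shuffle(pairs)
    return pairs
```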

4 Results

In our analysis, we define the amount of probe deviation as the perpendicular distance between the ultrasound plane and the target location at the depth of the target. In the no-feedback condition, participants had an uncorrected probe deviation larger than 2 mm for longer than half of the trial time in 40 % of the trials. This deviation caused these trials to be failures as the needle did not hit the original 3D target location. This poor performance highlights the prevalence of unintended probe motion and the need for providing feedback to guide the user. We focus the remainder of our analysis on the comparison of the effectiveness of the visual and haptic feedback, and do not include the results from the no-feedback condition in our statistical analysis.
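Both quantities used in this paragraph can be computed directly from the logged target positions. The sketch below, with hypothetical function names, computes the point-to-plane distance and the fraction of a trial spent outside the 2 mm deadband. It assumes uniformly sampled deviations, so that the fraction of samples stands in for the fraction of trial time.

```python
import math

def plane_distance(p, plane_point, normal):
    """Perpendicular distance from point p to the plane through plane_point with the given normal."""
    norm = math.sqrt(sum(n * n for n in normal))
    return abs(sum((pi - qi) * ni for pi, qi, ni in zip(p, plane_point, normal))) / norm

def fraction_outside(deviations_mm, deadband_mm=2.0):
    """Fraction of samples whose |deviation| exceeds the deadband (assumes uniform sampling)."""
    if not deviations_mm:
        return 0.0
    return sum(abs(d) > deadband_mm for d in deviations_mm) / len(deviations_mm)
```

In the US frame itself the image plane passes through the origin with normal along z (under the frame convention assumed above), so the distance reduces to the magnitude of the target's z-coordinate. A trial of the kind counted in the no-feedback condition would have `fraction_outside(...) > 0.5`.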

The probe deviation was averaged in each trial. A three-way ANOVA was run on the average deviation with participant, condition, and target location as factors (Fig. 3(a)). Feedback condition and target location were found to be significant factors (\(p<0.001\)). No significant difference was found between the average probe deviations across participants (\(p>0.1\)). A multiple-comparison test between the three feedback conditions indicated that the average probe deviation for the visual-feedback-only condition (\(1.12 \pm 0.62\) mm) was significantly greater than that for the conditions with both haptic and visual feedback (\(0.80 \pm 0.38\) mm; \(p<0.01\)) and with haptic feedback only (\(0.87 \pm 0.48\) mm; \(p<0.05\)).

Additionally, the time it took participants to correct probe deviations larger than the 2 mm deadband was averaged in each trial. A three-way ANOVA was run on the average correction time with participant, condition, and target location as factors (Fig. 3(b)). Feedback condition was found to be a significant factor (\(p<0.0005\)). No significant difference was found between the average correction times across participants or target locations (\(p>0.4\)). A multiple-comparison test between the three feedback conditions indicated that the average probe correction time for the visual-feedback-only condition (\(2.15 \pm 2.40\) s) was significantly greater than that for the conditions with both haptic and visual feedback (\(0.61 \pm 0.36\) s; \(p<0.0005\)) and with haptic feedback only (\(0.77 \pm 0.59\) s; \(p<0.005\)). These results indicate that the addition of haptic feedback resulted in less undesired motion of the probe and allowed participants to correct deviations more quickly.
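The text does not state how individual correction episodes were delimited. The sketch below assumes that an episode starts at the first sample whose deviation exceeds the 2 mm deadband and ends at the first subsequent sample back inside it; the function names, and the choice to discard an episode still open when the trial ends, are assumptions.

```python
def correction_times(t_s, deviations_mm, deadband_mm=2.0):
    """Durations (s) of excursions beyond the deadband, measured from exit to re-entry."""
    times = []
    start = None
    for t, d in zip(t_s, deviations_mm):
        outside = abs(d) > deadband_mm
        if outside and start is None:
            start = t                  # excursion begins
        elif not outside and start is not None:
            times.append(t - start)    # back inside the deadband
            start = None
    return times                       # an excursion still open at the end is dropped

def mean_correction_time(t_s, deviations_mm, deadband_mm=2.0):
    """Per-trial average correction time, or None if the probe never left the deadband."""
    times = correction_times(t_s, deviations_mm, deadband_mm)
    return sum(times) / len(times) if times else None
```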

Several participants indicated that the haptic feedback was especially beneficial because of the high visual-cognitive load of the needle alignment portion of the task. The participants were asked to rate the difficulty of the experimental conditions on a five-point Likert scale. The difficulty ratings (Fig. 4) support our other findings. The condition including both haptic and visual feedback was rated as significantly easier (\(2.75\pm 0.76\)) than the conditions with visual feedback only (\(3.38\pm 0.92\); \(p<0.05\)) and haptic feedback only (\(3.5\pm 0.46\); \(p<0.01\)).

5 Conclusion

We described a method to add haptic feedback to a commercial, vision-based navigation system for ultrasound-guided interventions. In addition to conventional on-screen cues (target indicators, needle guides, etc.), two vibrating pads on either side of a standard handheld transducer indicate deviations from the plane containing a locked target. A user study was performed under simulated conditions which highlight the central problems of clinical ultrasound imaging – namely difficult visualization of intended targets, and distraction caused by task focusing and information overload, both of which contribute to inadvertent target-alignment loss. Participants executed a dummy needle-targeting task, while probe deviation from the target plane, reversion time to return to plane, and perceived targeting difficulty were measured.

The experimental results clearly show (1) that both visual and haptic feedback are extremely helpful at least in supporting inexperienced or overwhelmed operators, and (2) that adding haptic feedback (presumably because of its intuitiveness and independent sensation modality) improves performance over both static and dynamic visual feedback. The considered metrics map directly to clinical precision (in the case of probe deviation) or efficacy of the feedback method (in the case of reversion time). Since the addition of haptic feedback resulted in significant improvement for novice users, the system shows promise for use in training.

Although this system was implemented using a Clear Guide ONE, the haptic feedback can in principle be implemented with any navigated ultrasound guidance system. In the future, it would be interesting to examine the benefits of haptic feedback in a clinical study, across a large cohort of diversely-skilled operators, while directly measuring the intervention outcome (instrument placement accuracy). Future prototypes would be improved by including haptic feedback for additional degrees of freedom such as translation and rotation of the probe.

Notes

1. Note that we ignore the effects of ultrasound beam physics resulting in varying resolution cell widths (beam thickness), and instead consider the ideal geometry.

References

[1] Emergency ultrasound guidelines. Ann. Emerg. Med. 53(4), 550–570 (2009)

[2] Revised statement on recommendations for use of real-time ultrasound guidance for placement of central venous catheters. Bull. Am. Coll. Surg. 96(2), 36–37 (2011)

[3] Antonakakis, J.G., Ting, P.H., Sites, B.: Ultrasound-guided regional anesthesia for peripheral nerve blocks: an evidence-based outcome review. Anesthesiol. Clin. 29(2), 179–191 (2011)

[4] Banovac, F., Wilson, E., Zhang, H., Cleary, K.: Needle biopsy of anatomically unfavorable liver lesions with an electromagnetic navigation assist device in a computed tomography environment. J. Vasc. Interv. Radiol. 17(10), 1671–1675 (2006)

[5] Becker, B.C., Maclachlan, R.A., Hager, G.D., Riviere, C.N.: Handheld micromanipulation with vision-based virtual fixtures. In: IEEE International Conference on Robotics and Automation (ICRA), pp. 4127–4132 (2011)

[6] Burke, J.L., Prewett, M.S., Gray, A.A., Yang, L., Stilson, F.R., Coovert, M.D., Elliot, L.R., Redden, E.: Comparing the effects of visual-auditory and visual-tactile feedback on user performance: a meta-analysis. In: Proceedings of the 8th International Conference on Multimodal Interfaces, pp. 108–117. ACM (2006)

[7] Cavanna, L., Mordenti, P., Bertè, R., Palladino, M.A., Biasini, C., Anselmi, E., Seghini, P., Vecchia, S., Civardi, G., Di Nunzio, C.: Ultrasound guidance reduces pneumothorax rate and improves safety of thoracentesis in malignant pleural effusion: report on 445 consecutive patients with advanced cancer. World J. Surg. Oncol. 12(1), 1 (2014)

[8] Courreges, F., Vieyres, P., Istepanian, R.: Advances in robotic tele-echography services: the OTELO system. In: 26th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, IEMBS 2004, vol. 2, pp. 5371–5374. IEEE (2004)

[9] Gilbertson, M.W., Anthony, B.W.: Ergonomic control strategies for a handheld force-controlled ultrasound probe. In: 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 1284–1291. IEEE (2012)

[10] Oakley, I., McGee, M.R., Brewster, S., Gray, P.: Putting the feel in 'look and feel'. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, CHI 2000, pp. 415–422. ACM (2000)

[11] Shapiro, R.S., Ko, P.P., Jacobson, S.: A pilot project to study the use of ultrasonography for teaching physical examination to medical students. Comput. Biol. Med. 32(6), 403–409 (2002)

[12] Sigrist, R., Rauter, G., Riener, R., Wolf, P.: Augmented visual, auditory, haptic, and multimodal feedback in motor learning: a review. Psychon. Bull. Rev. 20(1), 21–53 (2013)

[13] Sites, B.D., Spence, B.C., Gallagher, J.D., Wiley, C.W., Bertrand, M.L., Blike, G.T.: Characterizing novice behavior associated with learning ultrasound-guided peripheral regional anesthesia. Reg. Anesth. Pain Med. 32(2), 107–115 (2007)

[14] Stolka, P.J., Wang, X.L., Hager, G.D., Boctor, E.M.: Navigation with local sensors in handheld 3D ultrasound: initial in-vivo experience. In: SPIE Medical Imaging, p. 79681J. International Society for Optics and Photonics (2011)

[15] Sun, S.Y., Gilbertson, M., Anthony, B.W.: Computer-guided ultrasound probe realignment by optical tracking. In: 2013 IEEE 10th International Symposium on Biomedical Imaging (ISBI), pp. 21–24. IEEE (2013)

[16] Van Erp, J.B., Van Veen, H.A.: Vibrotactile in-vehicle navigation system. Transp. Res. Part F: Traffic Psychol. Behav. 7(4), 247–256 (2004)


Copyright information

© 2016 Springer International Publishing AG

About this paper

Cite this paper

Culbertson, H., Walker, J.M., Raitor, M., Okamura, A.M., Stolka, P.J. (2016). Plane Assist: The Influence of Haptics on Ultrasound-Based Needle Guidance. In: Ourselin, S., Joskowicz, L., Sabuncu, M., Unal, G., Wells, W. (eds) Medical Image Computing and Computer-Assisted Intervention – MICCAI 2016. MICCAI 2016. Lecture Notes in Computer Science, vol 9900. Springer, Cham. https://doi.org/10.1007/978-3-319-46720-7_43


DOI: https://doi.org/10.1007/978-3-319-46720-7_43


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-46719-1

Online ISBN: 978-3-319-46720-7

eBook Packages: Computer Science, Computer Science (R0)