Abstract

In this paper, we propose a novel multiplanar autoregressive (AR) model to exploit the correlation in cross-dimensional planes of a similar patch group collected in an image, which has long been neglected by previous AR models. On that basis, we present a joint multiplanar AR and low-rank based approach (MARLow) for image completion from random sampling, which exploits the nonlocal self-similarity within natural images more effectively. Specifically, the multiplanar AR model constrains the local stationarity in different cross-sections of the patch group, while the low-rank minimization captures the intrinsic coherence of nonlocal patches. The proposed approach can be readily extended to multichannel images (e.g. color images) by simultaneously considering the correlation in different channels. Experimental results demonstrate that the proposed approach significantly outperforms state-of-the-art methods, even if the pixel missing rate is as high as 90 %.

1 Introduction

Image restoration aims to recover original images from their low-quality observations, whose degradations are mostly caused by defects of capturing devices or error-prone channels. It is one of the most important techniques in image/video processing and low-level computer vision. In our work, we focus on image completion from random sampling, which has attracted considerable attention [5, 10–12, 15, 22, 24, 26]. The problem is to recover the original image from a degraded observation whose missing pixels are randomly distributed. This is a typical ill-posed problem, and different kinds of image priors have been employed to solve it.

One of the most commonly used image priors is the nonlocal prior [1], also known as the self-similarity property of natural images. This prior reflects the fact that similar contents are frequently repeated across the whole image, which can be well utilized in image completion. A classic way to exploit it is to collect groups of similar patches and process each group jointly, because similar degraded patches contain complementary information for each other. According to the manipulation scheme applied to the patch group, there are generally two kinds of methods in the literature:

Cube-based methods stack similar patches directly, and then manipulate the data cube. The well-known denoising method Block-Matching and 3D filtering (BM3D) [6] is one of the most representative cube-based methods, which performs a 1D transform on each dimension of the data cube. The idea has been widely studied, and many extensions have been presented [16, 24]. These methods perform a global optimization on the data cube, neglecting the local structures inside the cube. Also, they process the data cube along each dimension, failing to consider the correlation that exists in cross-dimensional planes of the data cube. In this paper, we propose a multiplanar autoregressive (AR) model to address these problems. Specifically, the multiplanar AR model constrains the local stationarity in different sections of the data cube. Nonetheless, the multiplanar AR model is not good at smoothing the intrinsic structure of similar patches.

Matrix-based methods stretch similar patches into vectors, which are spliced to form a data matrix. Two popular approaches, sparse coding and low-rank minimization, can be applied to such matrices. For sparse coding, the sparse coefficients of each vector in the matrix should be similar. This amounts to restricting the number of nonzero rows of the sparse coefficient matrix [17, 28]. Zhang et al. [23] presented a group-based sparse representation method, which regards similar patch groups as its basic units. For low-rank minimization, since the data matrix is constructed by similar vectors, the rank of its underlying clean matrix to be recovered should be low. By minimizing the rank of the matrix, inessential contents (e.g. the noise) of the matrix can be eliminated [7, 12]. However, in image completion from random samples, such methods may excessively smooth the result, since they only consider the correlation of pixels at the same location of different patches. Also, unlike stacking similar patches directly, representing image patches by vectors shatters the local information stored in image patches.

Based on these analyses, the two kinds of methods appear to be complementary. Thus, motivated by combining the merits of cube-based and matrix-based methods, we present a joint multiplanar autoregressive and low-rank approach (MARLow) for image completion (Fig. 1). Instead of performing a global optimization on the data cube grouped by similar patches, we propose the concept of the multiplanar autoregressive model to exploit the local stationarity on different cross-sections of the data cube. Meanwhile, we jointly consider the matrix grouped by stretched similar patches, in which the intrinsic content of similar patches can be well recovered by low-rank minimization. In summary, our contributions lie in three aspects:

- We propose the concept of the multiplanar autoregressive model to characterize the local stationarity of cross-dimensional planes in the patch group.

- We present a joint multiplanar autoregressive and low-rank approach (MARLow) for image completion from random sampling, along with an efficient alternating optimization method.

- We extend our method to multichannel images by simultaneously considering the correlation in different channels, presenting encouraging performance.

Fig. 1. The framework of the proposed image completion method MARLow. After obtaining an initialization of the input image, similar patches are collected. Then, the joint multiplanar autoregressive and low-rank approach is applied on grouped patches. After all patches are processed, overlapped patches are aggregated into a new intermediate image, which can be used as the input for the next iteration.

2 Related Work

In this section, we briefly review and discuss the existing literature that closely relates to the proposed method, including approaches associated with the autoregressive model and low-rank minimization.

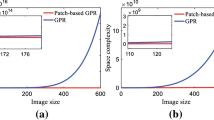

Autoregressive model. The autoregressive (AR) model has been extensively studied over the last decades. The AR model represents a pixel as a linear combination of its supporting pixels, usually its known neighboring pixels. Based on the assumption that natural images have the property of local stationarity, pixels in a local area share the same AR parameters, i.e. the weight for each neighbor. AR parameters are often estimated from the low-resolution image [14, 25]. Dong et al. proposed a nonlocal AR model [8] using nonlocal pixels as supporting pixels, which is taken as a data fidelity constraint. The 3DAR model has been proposed to detect and interpolate the missing data in video sequences [9, 13]. Since video sequences have the property of temporal smoothness, the AR model can be extended to the temporal dimension by combining the local statistics in a single frame. Different from the approaches mentioned above, we focus on different cross-sections of the data cube grouped by similar nonlocal patches in a single image and constrain the local stationarity inside different planes in the data cube simultaneously (Fig. 2).

Low-rank minimization. As a commonly used tool in image completion, low-rank minimization aims to minimize the rank of an input corrupted matrix. It can be used for recovering/completing the intrinsic content of a degraded, potentially low-rank matrix. The original low-rank minimization problem is NP-hard, and cannot be solved efficiently. Candès and Recht [3] proposed to relax the problem by using the nuclear norm of the matrix, i.e. the sum of singular values, which has been widely used in low-rank minimization problems since then. As proposed in [7, 12], similar patches in images/videos are collected to form a potentially low-rank matrix. Then, the nuclear norm of the matrix is minimized. Zhang et al. further presented the truncated nuclear norm [22], minimizing the sum of small singular values. Ono et al. [18] proposed the block nuclear norm, leading to a suitable characterization of the texture component. Low-rank minimization can also be applied to tensor completion. Liu et al. [15] regarded the whole input degraded color image as a potentially low-rank tensor, and defined the trace norm of tensors by extending the nuclear norm of matrices. However, most general natural images are not potentially low-rank. Thus, Chen et al. [4] attempted to recover the tensor while simultaneously capturing its underlying structure. In our work, we apply the nuclear norm of matrices, and we use the singular value thresholding (SVT) method [2] to solve the low-rank minimization problem. Jointly combined with our multiplanar model (as elaborated in Sect. 3), our method produces encouraging image completion results.

3 The Proposed Image Completion Method

As discussed in previous sections, cube-based methods and matrix-based methods have their drawbacks, and they complement each other in some sense. In this section, we introduce the proposed multiplanar AR model to utilize information from cross-sections of the data cube grouped by similar patches. Moreover, combined with low-rank minimization, we present the joint multiplanar autoregressive and low-rank approach (MARLow) for image completion. At the end of this section, we extend the proposed method to multichannel images. For an input degraded image, we first conduct a simple interpolation-based initialization on it (see Fig. 1), to provide enough information for patch grouping.

3.1 Multiplanar AR Model

Considering a reference patch of size \(n\times n\), we collect its similar nonlocal patches. For a data cube grouped by similar patches, we observe its different cross-sections (cross-dimensional planes). As shown in Fig. 2(a), different cross-sections of the data cube possess local stationarity. Since AR models can measure the local stationarity of image signals, we naturally extend the conventional AR model to the multiplanar AR model to measure cross-dimensional planes.
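The grouping step can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the function name `group_similar_patches`, the squared Euclidean patch distance, and the local search radius are all assumptions, since the text only states that similar nonlocal patches are collected for each reference patch.

```python
import numpy as np

def group_similar_patches(img, ref_yx, n=8, num=64, radius=20):
    """Collect the `num` patches most similar to the reference patch.

    Returns (cube, coords): cube has shape (num, n, n), sorted by increasing
    distance, so cube[0] equals the reference patch. The distance measure and
    the search window are illustrative assumptions.
    """
    H, W = img.shape
    y0, x0 = ref_yx
    ref = img[y0:y0 + n, x0:x0 + n]
    candidates = []
    for y in range(max(0, y0 - radius), min(H - n, y0 + radius) + 1):
        for x in range(max(0, x0 - radius), min(W - n, x0 + radius) + 1):
            d = np.sum((img[y:y + n, x:x + n] - ref) ** 2)
            candidates.append((d, y, x))
    candidates.sort(key=lambda t: t[0])          # closest patches first
    coords = [(y, x) for _, y, x in candidates[:num]]
    cube = np.stack([img[y:y + n, x:x + n] for y, x in coords])
    return cube, coords
```

Stacking the patches along the first axis yields the data cube whose cross-sections are examined in this section; vectorizing each patch instead yields the matrix used by the low-rank term.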

Generally, the conventional AR model is defined as

\[ X(i,j) = \sum_{(m,n)\in \mathcal{N}} X(i+m,j+n)\,\varphi(m,n) + \sigma(i,j), \tag{1} \]

where X(i, j) represents the pixel located at (i, j). \(X(i+m,j+n)\) is the supporting pixel with spatial offset (m, n), while \(\varphi (m,n)\) is the corresponding AR parameter. \(\mathcal {N}\) is the set of supporting pixels’ offsets and \(\sigma (i,j)\) is the noise.
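To illustrate how the parameters of the conventional AR model above can be estimated under the local stationarity assumption, the following sketch fits one shared set of weights to all interior pixels of a window by least squares. The 8-neighbour support set `OFFSETS` and the function name `fit_ar_2d` are hypothetical choices made only for this example.

```python
import numpy as np

# Hypothetical support set N: the 8 nearest neighbours (not fixed by the text).
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]

def fit_ar_2d(X, offsets=OFFSETS):
    """Least-squares estimate of the AR weights phi(m, n) over a window X."""
    r = max(max(abs(m), abs(n)) for m, n in offsets)
    H, W = X.shape
    # Pixels that have all their supporting pixels inside the window.
    target = X[r:H - r, r:W - r].ravel()
    # One column per offset: the supporting pixel X(i+m, j+n) of each target.
    A = np.stack(
        [X[r + m:H - r + m, r + n:W - r + n].ravel() for m, n in offsets],
        axis=1,
    )
    phi, *_ = np.linalg.lstsq(A, target, rcond=None)
    return phi, A, target
```

On a perfectly linear intensity ramp, every pixel is an exact linear combination of its neighbours, so the fitted model reproduces the targets with zero residual.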

Fig. 2. (a) Different cross-sections of a data cube grouped by similar patches also possess local stationarity, which can be well processed by AR models. (b) White dots represent pixels (i.e. small rectangles in \(6\times 6\) image patches). Black arrows connect the center pixel of a multiplanar AR model with its supporting pixels.

In our work, the multiplanar AR model consists of supporting pixels from different cross-dimensional planes (as illustrated in Fig. 2(b)). For a data cube grouped by similar patches of an image patch located at i, the multiplanar AR model of pixel \(X_i(j,k,l)\) with offset (j, k, l) in the data cube is defined as

\[ X_i(j,k,l) = \sum_{m\in \mathcal{N}_1}\sum_{(p,q)\in \mathcal{N}_2} Y_i(j+m,k+p,l+q)\,\varphi_i(m,p,q) + \sigma_i(j,k,l), \tag{2} \]

where \(\mathcal {N}_1\) represents the set of supporting pixels’ planar offsets and \(\mathcal {N}_2\) represents the set of supporting pixels’ spatial offsets (assuming the order of the multiplanar AR model \(N_{order} = |\mathcal {N}_1|\times |\mathcal {N}_2|\)). \(Y_i(j + m,k + p,l + q)\) is the supporting pixel with offset (m, p, q) in the data cube and \(\varphi _i (m,p,q)\) is the corresponding AR parameter. \(\sigma _i(j,k,l)\) is the noise. Y is the initialization of the input image X. The reason we use Y here is that it is difficult to find enough known pixels to support the multiplanar AR model under high pixel missing rate.

For an \(n\times n\) patch, assuming N patches are collected, the aforementioned multiplanar AR model can be transformed into a matrix form, that is,

\[ X_i = T_i(Y)\,\varphi_i + \sigma_i, \tag{3} \]

where \(X_i\in \mathbb {R}^{(n^2\times N)\times 1}\) is a vector containing all modeled pixels and \(\sigma_i\) is the corresponding noise vector. \(T_i(\cdot )\) represents the operation that extracts supporting pixels for \(X_i\). Each row of \(T_i(Y)\in \mathbb {R}^{(n^2\times N)\times N_{order}}\) contains the values of the supporting pixels of one pixel and \(\varphi _i\in \mathbb {R}^{N_{order}\times 1}\) is the multiplanar AR parameter vector.
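The extraction operator \(T_i(\cdot)\) can be sketched as below. This is an illustration under stated assumptions, not the authors' code: the offset sets `N1` and `N2`, the edge-replicating border handling, and the name `support_matrix` are all hypothetical. Each row of the result collects the supporting pixels of one pixel of the cube, and each column corresponds to one offset \((m,p,q)\).

```python
import numpy as np

N1 = [-1, 0, 1]                           # hypothetical planar offsets m
N2 = [(-1, 0), (1, 0), (0, -1), (0, 1)]   # hypothetical spatial offsets (p, q)

def support_matrix(Y_cube, n1=N1, n2=N2):
    """Build T(Y) with shape (J*K*L, |N1|*|N2|) from a cube of shape (J, K, L)."""
    r = max(max(abs(m) for m in n1),
            max(max(abs(p), abs(q)) for p, q in n2))
    padded = np.pad(Y_cube, r, mode="edge")   # assumption: replicate borders
    J, K, L = Y_cube.shape
    cols = []
    for m in n1:
        for p, q in n2:
            # shifted[j, k, l] == Y[j + m, k + p, l + q] away from the borders
            shifted = padded[r + m:r + m + J, r + p:r + p + K, r + q:r + q + L]
            cols.append(shifted.ravel())
    return np.stack(cols, axis=1)
```

With this matrix, the model prediction for all pixels of the cube is simply `support_matrix(Y_cube) @ phi`, where `phi` has one entry per offset.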

Thus, the optimization problem for \(X_i\) and \(\varphi _i\) can be formulated as follows,

\[ \arg\min_{X_i,\varphi_i} \Vert X_i - T_i(Y)\varphi_i\Vert_F^2, \tag{4} \]

where \(\Vert \cdot \Vert _F\) is the Frobenius norm. In order to enhance the stability of the solution, we introduce Tikhonov regularization. Specifically, a regularization term is included in the minimization problem, forming the following regularized least-squares problem

\[ \arg\min_{X_i,\varphi_i} \Vert X_i - T_i(Y)\varphi_i\Vert_F^2 + \Vert \varGamma \varphi_i\Vert_F^2, \tag{5} \]

where \(\varGamma = \alpha I\) and I is an identity matrix.

3.2 MARLow

Since the multiplanar AR model is designed to constrain a pixel with its supporting pixels on different cross-sections of the patch group, it can deal more effectively with local image structures. For instance, assume there is an edge in an image that is severely degraded, with only a few known pixels on it. After collecting similar patches, low-rank minimization or other matrix-based methods may regard the remaining pixels as noise and remove them. However, with the multiplanar AR model, these pixels can be used to constrain each other and strengthen the underlying edge. Nevertheless, AR models are not suitable for smoothing the intrinsic structure, while low-rank minimization methods specialize in it. So we propose to combine the multiplanar AR model with low-rank minimization (MARLow) as follows,

\[ \arg\min_{X_i,\varphi_i} \Vert X_i - T_i(Y)\varphi_i\Vert_F^2 + \Vert \varGamma \varphi_i\Vert_F^2 + \mu \left( \Vert R_i(X) - R_i(Y)\Vert_F^2 + \Vert R_i(X)\Vert_* \right), \tag{6} \]

where the last part is the low-rank minimization term restricting the fidelity while minimizing the nuclear norm (i.e. \(\Vert \cdot \Vert _*\)) of the data matrix. \(R_i(\cdot )\) is an extraction operation that extracts similar patches of the patch located at i. \(R_i(X) = [X_{i_1},X_{i_2},...,X_{i_N}]\in \mathbb {R}^{n^2\times N}\) is the similar patch group of the reference patch \(X_{i_1}\), and \(R_i(Y) = [Y_{i_1},Y_{i_2},...,Y_{i_N}]\in \mathbb {R}^{n^2\times N}\) represents the corresponding patch group extracted from Y.

Figure 3 presents the completion results by using only low-rank without the multiplanar AR model, and by MARLow. From the figure, we can see that MARLow can effectively connect fractured edges.

3.3 Multichannel Image Completion

For multichannel images, instead of applying the straightforward separate procedure (i.e. processing different channels separately and combining the results afterward), we present an alternative scheme that processes different channels simultaneously. At first, we collect similar patches of size \(n\times n\times h\) (where h represents the number of channels) in a multichannel image. After that, each patch group is processed by simultaneously considering all channels. Specifically, the collected patches can be formed into h data cubes by stacking slices (of size \(n\times n\times 1\)) in the corresponding channel of different patches. For the multiplanar AR model, the minimization problem in Eq. (5) turns into

\[ \arg\min_{X_i,\varphi_i} \sum_{c=1}^{h} \left( \Vert X_i^{c} - T_i(Y^{c})\varphi_i^{c}\Vert_F^2 + \Vert \varGamma \varphi_i^{c}\Vert_F^2 \right), \tag{7} \]

where the superscript c denotes the c-th channel.

For low-rank minimization, N collected patches are formed into a potentially low-rank data matrix of size \((n^2\times h)\times N\) by representing each patch as a vector.
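The two arrangements of a multichannel patch group described above can be sketched as follows. The array layout `(N, n, n, h)` and the function name `multichannel_views` are assumptions made for illustration only; the text fixes just the sizes of the resulting cubes and matrix.

```python
import numpy as np

def multichannel_views(patches):
    """Rearrange N patches of size n x n x h, given as an (N, n, n, h) array.

    Returns:
      cubes  -- shape (h, N, n, n): one data cube per channel (for the AR term)
      matrix -- shape (h * n * n, N): one vectorized patch per column
                (for the low-rank term)
    """
    N, n, _, h = patches.shape
    cubes = np.moveaxis(patches, -1, 0)
    # Vectorize every channel slice, then stack the h slices of each patch
    # on top of each other so that each column holds one complete patch.
    matrix = cubes.reshape(h, N, n * n).transpose(0, 2, 1).reshape(h * n * n, N)
    return cubes, matrix
```

For example, with \(5\times 5\times 3\) patches and \(N = 75\), as used for color images in Sect. 5, the matrix has 75 rows and 75 columns.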

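Taking an RGB image as an example, in patch grouping, we search for similar patches using reference patches of size \(n\times n \times 3\). The multichannel image completion problem can be solved by minimizing

\[ \arg\min_{X_i,\varphi_i} \sum_{c\in \{R,G,B\}} \left( \Vert X_i^{c} - T_i(Y^{c})\varphi_i^{c}\Vert_F^2 + \Vert \varGamma \varphi_i^{c}\Vert_F^2 \right) + \mu \left( \Vert R_i(X) - R_i(Y)\Vert_F^2 + \Vert R_i(X)\Vert_* \right), \tag{8} \]

where

\[ R_i(X) = \begin{bmatrix} R_i(X^{R}) \\ R_i(X^{G}) \\ R_i(X^{B}) \end{bmatrix}, \qquad R_i(Y) = \begin{bmatrix} R_i(Y^{R}) \\ R_i(Y^{G}) \\ R_i(Y^{B}) \end{bmatrix}. \]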

The notation follows the preceding definitions. By utilizing the information in multichannel images, the patch grouping can be more precise. Furthermore, the information in different channels is complementary and jointly constrains the completion result. Figure 4 illustrates the difference between processing different channels separately and simultaneously (with 80 % pixels missing). Compared with the separate procedure, the multichannel image completion approach can significantly improve the performance of our method. Thus, in Sect. 5, we do not apply methods dedicated to gray-scale image completion channel by channel to obtain color image completion results, since such a comparison may be unfair. Instead, we compare our multichannel image completion method with other state-of-the-art color image completion methods.

4 Optimization

In this section, we present an alternating minimization algorithm to solve the minimization problems in Eqs. (6) and (8). Take Eq. (6) as an example. We address the variables \(X_i\) and \(\varphi _i\) separately and present an efficient optimization algorithm.

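When fixing \(X_i\), the problem turns into

\[ \arg\min_{\varphi_i} \Vert X_i - T_i(Y)\varphi_i\Vert_F^2 + \Vert \varGamma \varphi_i\Vert_F^2, \tag{9} \]

which is a standard regularized linear least-squares problem, and can be solved by ridge regression. The closed-form solution is given by

\[ \hat{\varphi}_i = \left( \hat{Y}^T\hat{Y} + \varGamma^T\varGamma \right)^{-1}\hat{Y}^T X_i, \tag{10} \]

where \(\hat{Y} = {T_i}{(Y)}\).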
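The ridge regression update for \(\varphi_i\) can be sketched as follows, assuming \(\varGamma = \alpha I\) as defined in Sect. 3.1. The function name `update_phi` is hypothetical, and solving the normal equations with `np.linalg.solve` (rather than forming an explicit inverse) is an implementation choice of this sketch.

```python
import numpy as np

def update_phi(Y_hat, x_vec, alpha=np.sqrt(10)):
    """Ridge solution (Y^T Y + alpha^2 I)^{-1} Y^T x, i.e. Gamma = alpha * I.

    Y_hat : (num_pixels, order) matrix of supporting pixels, T(Y)
    x_vec : (num_pixels,) vector of modeled pixels
    """
    order = Y_hat.shape[1]
    gram = Y_hat.T @ Y_hat + (alpha ** 2) * np.eye(order)
    return np.linalg.solve(gram, Y_hat.T @ x_vec)
```

The default `alpha` mirrors the value \(\alpha = \sqrt{10}\) reported in Sect. 5. The regularization keeps the system well conditioned even when the columns of `Y_hat` are nearly collinear, which is common in smooth image regions.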

With \(\varphi _i\) fixed, the problem for updating \(X_i\) becomes

\[ \arg\min_{X_i} \Vert X_i - T_i(Y)\varphi_i\Vert_F^2 + \mu \left( \Vert R_i(X) - R_i(Y)\Vert_F^2 + \Vert R_i(X)\Vert_* \right). \tag{11} \]

Here we notice that \(X_i\) and \(R_i(X)\) contain the same elements. Their only difference is the arrangement: \(X_i\) is a vector and \(R_i(X)\) is a matrix. Since we use the Frobenius norm here, the value of the norm does not change if we reshape the vector into a matrix. So we reshape \(X_i\) into a matrix \(M_i\) corresponding to \(R_i(X)\) (in this way, \(R_i(X)\) does not need to be reshaped, and it can be represented by \(M_i\) directly). The vector \(T_i(Y)\cdot \varphi _i\) is also reshaped into a matrix, represented by \(Y_{1_i}\). By denoting \(Y_{2_i} = R_i(Y)\), we can get the simplified version of Eq. (11):

\[ \arg\min_{M_i} \Vert M_i - Y_{1_i}\Vert_F^2 + \mu \left( \Vert M_i - Y_{2_i}\Vert_F^2 + \Vert M_i\Vert_* \right). \tag{12} \]

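It is a modified low-rank minimization problem and can be transformed into the following form

\[ \arg\min_{M_i} \Vert M_i - Y_i'\Vert_F^2 + \lambda \Vert M_i\Vert_*, \tag{13} \]

where \(Y_i'=(1-\lambda )Y_{1_i}+\lambda Y_{2_i}\) and \(\lambda = \mu /(\mu +1)\). The problem now turns into a standard low-rank minimization problem [2]. Its closed-form solution is given as

\[ M_i = U S_\tau (\varSigma ) V^T, \tag{14} \]

where \(U\varSigma V^T\) is the singular value decomposition of \(Y_i'\) and \(S_\tau (\cdot )\) represents the soft shrinkage process.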
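The update of the patch matrix can be sketched as below. The sketch assumes the objective \(\Vert M_i - Y_{1_i}\Vert_F^2 + \mu (\Vert M_i - Y_{2_i}\Vert_F^2 + \Vert M_i\Vert_*)\), which is our reading of the definitions of \(Y_i'\) and \(\lambda\): completing the square gives \((1+\mu )\Vert M_i - Y_i'\Vert_F^2 + \mu \Vert M_i\Vert_*\) plus a constant, and dividing by \(1+\mu\) leaves \(\Vert M_i - Y_i'\Vert_F^2 + \lambda \Vert M_i\Vert_*\). Under this scaling the soft-shrinkage threshold is \(\tau = \lambda /2\); this value and the function names are assumptions of the sketch, not statements from the text.

```python
import numpy as np

def svt(A, tau):
    """Singular value thresholding: soft-shrink the singular values of A by tau."""
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    return (U * np.maximum(s - tau, 0.0)) @ Vt

def update_patch_matrix(Y1, Y2, mu=10.0):
    """Minimize ||M - Y1||_F^2 + mu * (||M - Y2||_F^2 + ||M||_*) over M.

    Y1 : AR prediction T(Y) @ phi reshaped to the patch-matrix layout
    Y2 : patch matrix R(Y) extracted from the current estimate
    """
    lam = mu / (mu + 1.0)
    Y_blend = (1.0 - lam) * Y1 + lam * Y2
    # Assumed scaling: ||M - Y'||_F^2 + lam * ||M||_*  ->  threshold lam / 2.
    return svt(Y_blend, lam / 2.0)
```

With \(\mu = 10\) (the value reported in Sect. 5), \(\lambda = 10/11\), so the blended matrix stays close to \(R_i(Y)\) while still being pulled toward the AR prediction.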

With the input randomly sampled image Y and the mask matrix \(M_{mask}\) indicating known pixels (0s for missing pixels and 1s for known pixels), our alternating minimization algorithm for image completion from random sampling can be summarized in Algorithm 1.
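Since the listing of Algorithm 1 is not reproduced here, the following sketch only illustrates one plausible form of the aggregation step described in the caption of Fig. 1, in which overlapped patches are merged into an intermediate image. Averaging the overlaps and re-imposing the known pixels through the mask are assumptions of this sketch, and the function name `aggregate` is hypothetical.

```python
import numpy as np

def aggregate(patches, coords, shape, Y, mask):
    """Merge processed patches into an image and keep the known pixels of Y.

    patches : iterable of (n, n) arrays
    coords  : top-left (row, col) of each patch
    Y, mask : observed image and known-pixel mask (1 = known, 0 = missing)
    """
    acc = np.zeros(shape)
    cnt = np.zeros(shape)
    for P, (y, x) in zip(patches, coords):
        n = P.shape[0]
        acc[y:y + n, x:x + n] += P
        cnt[y:y + n, x:x + n] += 1
    # Average where patches overlap; fall back to Y where no patch landed.
    est = np.where(cnt > 0, acc / np.maximum(cnt, 1), Y)
    # Assumption: known samples are trusted and written back unchanged.
    return mask * Y + (1 - mask) * est
```

The returned image can then serve as the input of the next iteration, as indicated in Fig. 1.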

5 Experimental Results

Experimental results of compared methods are all generated by the original authors’ code, with the parameters manually optimized. Both objective and subjective comparisons are provided for a comprehensive evaluation of our work. Peak Signal to Noise Ratio (PSNR) and the structural similarity (SSIM) index are used to evaluate the objective image quality. In our implementation, unless otherwise stated, the size of each image patch is set to \(8\times 8\) (\(5\times 5\times 3\) in color images) with four-pixel (one-pixel in color images) overlap. The number of similar patches is set to \(N = 64\) for gray-scale images and \(N = 75\) for color images. Other parameters in our algorithm are empirically set to \(\alpha = \sqrt{10}\), \(\mu = 10\). Please see the electronic version for better visualization of the subjective comparisons. More results can be found in the supplementary materials.
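For reference, PSNR can be computed as in the sketch below. The peak value of 255 assumes 8-bit images, which the text does not state explicitly, and the function name `psnr` is our own.

```python
import numpy as np

def psnr(reference, estimate, peak=255.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    diff = np.asarray(reference, dtype=float) - np.asarray(estimate, dtype=float)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")   # identical images
    return 10.0 * np.log10(peak ** 2 / mse)
```

Because PSNR is logarithmic in the mean squared error, a gain of about 1 dB corresponds to a reduction of the mean squared error by roughly 20 %.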

5.1 Gray-Scale Image Completion

For gray-scale images, we compare our method with state-of-the-art gray-scale image completion methods BPFA [28], BNN [18], ISDSB [10], and JSM [24]. Table 1 shows PSNR/SSIM results of different methods on test images with 80 % pixels missing. From Table 1, the proposed method achieves the highest PSNR and SSIM in all cases, which demonstrates the effectiveness of our method. Specifically, the improvement in PSNR is 1.06 dB and that in SSIM is 0.0148 on average compared with the second best algorithm (i.e. JSM).

Figure 5 compares the visual quality of completion results for test images (with 80 % pixels missing). From Fig. 5, ISDSB and BNN successfully recover the boundaries of the image, but fail to restore rich details. BPFA performs better completion on image details. Nonetheless, there is considerable noise along edges recovered by BPFA. At first glance, the completion results of JSM and our method are both of high quality. However, on closer inspection, isolated noise can be observed on image details (such as structures on Lena’s hair and her hat) in the result generated by JSM. JSM also cannot recover tiny structures. Our method presents the best visual quality, especially on image details and edges.

5.2 Color Image Completion

We compare our method with state-of-the-art color image completion methods FoE [19], BPFA [28], GSR [23] and ST-NLTV [5]. Table 2 lists PSNR/SSIM results of different methods on color images with 80 % and 90 % pixels missing. It is clear that the proposed method achieves the highest PSNR/SSIM in all cases. Compared with gray-scale images, our image completion method performs even better on color images judging from the average PSNR and SSIM. The proposed method outperforms the second best method (i.e. BPFA) by 2.78 dB on PSNR and 0.0288 on SSIM. Note that, when tested on image Woman with 90 % pixels missing, the PSNR and SSIM improvements achieved by our method over BPFA are 5.92 dB and 0.0539, respectively.

Figure 6 shows the visual quality of color image completion results for test images (with 90 % pixels missing). All compared methods perform well on flat regions. However, FoE and ST-NLTV cannot restore fine details. GSR is better at recovering details, but it generates noticeable artifacts around edges and fails to connect fractured edges. BPFA produces sharper edges, but its performance under higher missing rates is not satisfactory. The result of our method is of the best visual quality, especially under higher missing rates.

We also compare our method with state-of-the-art low-rank matrix/tensor completion based methods TNNR [22], LRTC [15] and STDC [4]. Since these methods regard the whole image as a potentially low-rank matrix, the input image should have strong correlations between its columns or rows. Thus, to be fair, we also test this kind of image to evaluate the performance of our method. From Fig. 7, TNNR and LRTC tend to erase tiny objects of the image, such as the colorful items (see the close-ups in Fig. 7). STDC introduces noticeable noise into the whole image. The proposed method presents not only accurate completion on sharp edges, but also high-quality textures, exhibiting the best visual quality.

5.3 Text Removal

Text removal is one of the classic cases of image restoration. The purpose of text removal is to recover the original image from a degraded version by removing the text mask. We have compared our method with four state-of-the-art algorithms: KR [21], FoE [20], JSM [24] and BPFA [28]. Our experimental settings for text removal are the same as those in color image restoration. Table 3 shows the PSNR and SSIM results of different methods. Figure 8 presents a visual comparison of different approaches, which further illustrates the effectiveness of our method.

5.4 Image Interpolation

The proposed method can also be applied to basic image processing problems, such as image interpolation. In fact, image interpolation can be regarded as a special case of image restoration from random samples in which the locations of the known/missing pixels are fixed. Since our method is designed to deal with image restoration from random samples, we do not utilize this feature in our current implementation. Even so, we evaluate the performance of the proposed method with respect to image interpolation by comparing with other state-of-the-art interpolation methods. The compared methods include the AR model based interpolation algorithms NEDI [14] and SAI [25], and the directional cubic convolution interpolation method DCC [27]. Objective results are given in Table 4 and subjective comparisons are shown in Fig. 9, showing that the proposed method is competitive with other methods.
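The relation between the two settings can be made concrete with known-pixel masks (1 for known, 0 for missing, following the convention of Sect. 4). The sketch below is illustrative only: the function names, the random seed, and the upscaling factor of 2 are hypothetical and are not taken from the experiments.

```python
import numpy as np

def random_mask(shape, missing_rate, seed=0):
    """Known-pixel mask for random sampling: each pixel is dropped independently."""
    rng = np.random.default_rng(seed)
    return (rng.random(shape) >= missing_rate).astype(np.uint8)

def lattice_mask(shape, factor=2):
    """Known-pixel mask for interpolation: known samples lie on a regular grid."""
    mask = np.zeros(shape, dtype=np.uint8)
    mask[::factor, ::factor] = 1
    return mask
```

With `factor=2`, one pixel in four is known, so interpolation by a factor of 2 corresponds to a fixed-pattern completion problem with 75 % of the pixels missing.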

6 Conclusion

In this work, we introduce the new concept of the multiplanar model, which exploits the cross-dimensional correlation in similar patches collected in a single image. Moreover, a joint multiplanar autoregressive and low-rank approach for image completion from random sampling is presented, along with an alternating optimization algorithm. Our image completion method can be extended to multichannel images by utilizing the correlation in different channels. Extensive experiments on different applications have demonstrated the effectiveness of our method. Future work includes extensions to other applications, such as video completion and hyperspectral imaging. We are also interested in adaptively choosing the size of the processed image patch, since it might improve the completion result.

References

1. Buades, A., Coll, B., Morel, J.M.: A review of image denoising algorithms, with a new one. Multiscale Model. Simul. 4(2), 490–530 (2005)

2. Cai, J.F., Candès, E.J., Shen, Z.: A singular value thresholding algorithm for matrix completion. SIAM J. Optim. 20(4), 1956–1982 (2010)

3. Candès, E., Recht, B.: Exact matrix completion via convex optimization. Found. Comput. Math. 9(6), 717–772 (2009). http://dx.doi.org/10.1007/s10208-009-9045-5

4. Chen, Y.L., Hsu, C.T., Liao, H.Y.: Simultaneous tensor decomposition and completion using factor priors. IEEE Trans. Pattern Anal. Mach. Intell. 36(3), 577–591 (2014)

5. Chierchia, G., Pustelnik, N., Pesquet-Popescu, B., Pesquet, J.C.: A nonlocal structure tensor-based approach for multicomponent image recovery problems. IEEE Trans. Image Process. 23(12), 5531–5544 (2014)

6. Dabov, K., Foi, A., Katkovnik, V., Egiazarian, K.: Image denoising by sparse 3-D transform-domain collaborative filtering. IEEE Trans. Image Process. 16(8), 2080–2095 (2007)

7. Dong, W., Shi, G., Li, X.: Nonlocal image restoration with bilateral variance estimation: a low-rank approach. IEEE Trans. Image Process. 22(2), 700–711 (2013)

8. Dong, W., Zhang, L., Lukac, R., Shi, G.: Sparse representation based image interpolation with nonlocal autoregressive modeling. IEEE Trans. Image Process. 22(4), 1382–1394 (2013)

9. Goh, W., Chong, M., Kalra, S., Krishnan, D.: Bi-directional 3D auto-regressive model approach to motion picture restoration. In: IEEE International Conference on Acoustics, Speech, and Signal Processing, vol. 4, pp. 2275–2278, May 1996

10. He, L., Wang, Y.: Iterative support detection-based split Bregman method for wavelet frame-based image inpainting. IEEE Trans. Image Process. 23(12), 5470–5485 (2014)

11. Heide, F., Heidrich, W., Wetzstein, G.: Fast and flexible convolutional sparse coding. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 5135–5143, June 2015

12. Ji, H., Liu, C., Shen, Z., Xu, Y.: Robust video denoising using low rank matrix completion. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 1791–1798, June 2010

13. Kokaram, A., Rayner, P.: Detection and interpolation of replacement noise in motion picture sequences using 3D autoregressive modelling. In: IEEE International Symposium on Circuits and Systems, vol. 3, pp. 21–24, May 1994

14. Li, X., Orchard, M.: New edge-directed interpolation. IEEE Trans. Image Process. 10(10), 1521–1527 (2001)

15. Liu, J., Musialski, P., Wonka, P., Ye, J.: Tensor completion for estimating missing values in visual data. In: IEEE International Conference on Computer Vision, pp. 2114–2121, September 2009

16. Maggioni, M., Boracchi, G., Foi, A., Egiazarian, K.: Video denoising, deblocking, and enhancement through separable 4-D nonlocal spatiotemporal transforms. IEEE Trans. Image Process. 21(9), 3952–3966 (2012)

17. Mairal, J., Bach, F., Ponce, J., Sapiro, G., Zisserman, A.: Non-local sparse models for image restoration. In: IEEE International Conference on Computer Vision, pp. 2272–2279, September 2009

18. Ono, S., Miyata, T., Yamada, I.: Cartoon-texture image decomposition using blockwise low-rank texture characterization. IEEE Trans. Image Process. 23(3), 1128–1142 (2014)

19. Roth, S., Black, M.: Fields of experts: a framework for learning image priors. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), vol. 2, pp. 860–867, June 2005

20. Roth, S., Black, M.J.: Fields of experts. Int. J. Comput. Vis. 82(2), 205–229 (2009)

21. Takeda, H., Farsiu, S., Milanfar, P.: Robust kernel regression for restoration and reconstruction of images from sparse noisy data. In: IEEE International Conference on Image Processing, pp. 1257–1260 (2006)

22. Zhang, D., Hu, Y., Ye, J., Li, X., He, X.: Matrix completion by truncated nuclear norm regularization. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 2192–2199, June 2012

23. Zhang, J., Zhao, D., Gao, W.: Group-based sparse representation for image restoration. IEEE Trans. Image Process. 23(8), 3336–3351 (2014)

24. Zhang, J., Zhao, D., Xiong, R., Ma, S., Gao, W.: Image restoration using joint statistical modeling in a space-transform domain. IEEE Trans. Circ. Syst. Video Technol. 24(6), 915–928 (2014)

25. Zhang, X., Wu, X.: Image interpolation by adaptive 2-D autoregressive modeling and soft-decision estimation. IEEE Trans. Image Process. 17(6), 887–896 (2008)

26. Zhang, Z., Ely, G., Aeron, S., Hao, N., Kilmer, M.: Novel methods for multilinear data completion and de-noising based on tensor-SVD. In: 2014 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 3842–3849, June 2014

27. Zhou, D., Shen, X., Dong, W.: Image zooming using directional cubic convolution interpolation. IET Image Process. 6(6), 627–634 (2012)

28. Zhou, M., Chen, H., Paisley, J., Ren, L., Li, L., Xing, Z., Dunson, D., Sapiro, G., Carin, L.: Nonparametric Bayesian dictionary learning for analysis of noisy and incomplete images. IEEE Trans. Image Process. 21(1), 130–144 (2012)

Acknowledgements

This work was supported by National High-tech Technology R&D Program (863 Program) of China under Grant 2014AA015205, National Natural Science Foundation of China under contract No. 61472011 and Beijing Natural Science Foundation under contract No. 4142021.

Copyright information

© 2016 Springer International Publishing AG

About this paper

Cite this paper

Li, M., Liu, J., Xiong, Z., Sun, X., Guo, Z. (2016). MARLow: A Joint Multiplanar Autoregressive and Low-Rank Approach for Image Completion. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds) Computer Vision – ECCV 2016. ECCV 2016. Lecture Notes in Computer Science, vol 9911. Springer, Cham. https://doi.org/10.1007/978-3-319-46478-7_50

DOI: https://doi.org/10.1007/978-3-319-46478-7_50

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-46477-0

Online ISBN: 978-3-319-46478-7

eBook Packages: Computer Science, Computer Science (R0)