Abstract

Models of groundwater systems help to integrate knowledge about the natural and human system covering different spatial and temporal scales, often from multiple disciplines, in order to address a range of issues of concern to various stakeholders. A model is simply a tool to express what we think we know. Uncertainty, due to lack of knowledge or natural variability, means that there are always alternative models that may need to be considered. This chapter provides an overview of uncertainty in models and in the definition of a problem to model, highlights approaches to communicating and using predictions of uncertain outcomes and summarises commonly used methods to explore uncertainty in groundwater management predictions. It is intended to raise awareness of how alternative models and hence uncertainty can be explored in order to facilitate the integration of these techniques with groundwater management.

You have full access to this open access chapter, Download chapter PDF

Similar content being viewed by others

Keywords

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

“Elementary, ” said he. “It is one of those instances where the reasoner can produce an effect which seems remarkable to his neighbour, because the latter has missed the one little point which is the basis of the deduction.” – Sherlock Holmes in “The Crooked Man”, The Memoirs of Sherlock Holmes (1893)

“How often have I said to you that when you have eliminated the impossible, whatever remains, however improbable, must be the truth?” – Sherlock Holmes, The Sign of the Four, ch. 6 (1890)

“You know my methods, Watson. There was not one of them which I did not apply to the inquiry. And it ended by my discovering traces, but very different ones from those which I had expected.” – Sherlock Holmes in “The Crooked Man”, The Memoirs of Sherlock Holmes (1893, Doubleday p. 416)’

The issue of exploring uncertainty in model -based prediction can be described through three quotes by Sherlock Holmes. Firstly, any particular model may fail to capture a crucial characteristic of a problem. Hence, prediction needs to involve exploration of ‘alternative ’ models in the hope that one may include the one little point which is important for obtaining a sufficiently accurate prediction. Secondly, because the scientific method cannot prove correctness, prediction of uncertain outcomes needs to focus on eliminating the impossible and incorrect. Thirdly, it is often necessary to use multiple methods – because groundwater management involves a hidden and poorly characterized subsurface, there is no definite way of determining which of many methods will provide the necessary information . Although the world of all possible methods are only within reach of experts like Sherlock Holmes, anybody who deals with prediction of uncertain outcomes will benefit from becoming aware of the approaches available and the principles underlying them. This is the purpose of this chapter.

Addressing uncertainty is an indispensable part of prediction. Groundwater management faces uncertainty on many fronts, in understanding the behaviour of the groundwater system, anticipating possible future climatic, economic or geopolitical conditions, prioritising objectives, all combining to add ambiguity in the evaluation of management options. Focussing on the first, it is apparent that scientific research has achieved relative success in reducing this uncertainty, culminating in the ability to approximate the behaviour of a groundwater system using a ‘model ’. There are, however, limits to the ability of science. Far from being all-knowing, there will always be recognised and unrecognised unknowns that mean that a model will always be a simplification of reality, and the predictions it makes will always be uncertain (Hunt and Welter 2010; Guillaume et al. 2012).

A model is simply a tool to encapsulate and transparently express what we think we know and illuminate what we do not (Doherty 2011). It is only as good as the conceptualization that was put in it, and when misused can easily result in ‘garbage-in’ producing ‘garbage-out’. In interpreting results, the end-user should only weight their assessment of a model’s prediction by the confidence they have in the model itself. Given the open system being modelled, an end-user and modeller likely will have sufficient confidence in a family of possible models, each of which makes a different prediction (Barnett et al. 2012). Creating an ensemble of predictions of an uncertain outcome ultimately amounts to using many models and their associated confidence to produce a probability distribution, a confidence interval, or simply a set of scenarios which are believed to have utility for the modelling purpose.

This chapter provides an overview of commonly used methods to explore uncertainty in groundwater management predictions. Their common element is that they help produce ‘alternative ’ models in which the end-user may have sufficient confidence, even though there may be other models which appear better. The presentation is aimed at end-users of groundwater management predictions, including managers and water users, to help them become aware of the methods available to generate alternative model s and hence relate to prediction uncertainty. It may therefore also be of use to modellers to help explain how a technique helps address uncertainty. It is generally accepted amongst modellers that stakeholders ultimately decide whether the accuracy of a prediction is acceptable (Refsgaard and Henriksen 2004). Evaluating prediction accuracy requires understanding how it was produced. In a modelling context, this means being able to critique the reasons why a given model (s) was selected. We encourage all end-users, particularly groundwater managers , to be aware of the different reasoning underlying these methods. We do not expect the reader of this chapter to become an expert. We focus primarily on uncertainty in groundwater models, as a fundamental tool for expressing uncertainty in groundwater management. However, many of the methods can be used with other types of models. We expect the reader may gain an understanding of how modellers can ‘dance’ with a model to explore alternatives . They may be better prepared to participate in judging the value of the information that was put in the modelling, and hence enhance their confidence in the predictions of uncertain outcomes produced. We hope this will consequently help dispel the magical aura and unassailable authority that model predictions often seem to carry, while giving a language for relating the uncertainty that surrounds all predictions.

In order to describe methods to explore uncertainty in a groundwater model , the chapter initially sets the scene by discussing the construction of a clear modelling problem definition , and options for using and communicating predictions of uncertain outcomes. The methods covered include creating alternative model s with different input values and different structures (in terms of both conceptualisation and implementation). Other methods select different parameters using statistical properties of data as well as fitting observations of (multiple) predicted variables , or by aiming to test a hypothesis, or estimate the importance of variables. We finish with methods to anticipate surprise by supporting adaptation and exploring the ‘known unknowns’ of Hunt and Welter (2010). For each method, the general principles tend to be broadly applicable to other types of models, but are illustrated with cases specific to groundwater modelling .

2 Starting from a Clear Problem Definition

The methods described later in the chapter assume that the scope of the problem has been defined. In particular, this means that there are clear predictions to make (Barnett et al. 2012). Models are a simplification of reality, and therefore do not represent all aspects of reality, but modelling needs to adequately capture the salient behaviour of the system of interest for a given purpose (Jakeman et al. 2006). Knowing how predictions will be used should directly inform the modelling approach because it forms the basis for deciding which simplifications and simulation processes are required in the model , and which can be omitted. For example, prediction of groundwater head is a fundamentally different (and easier) problem than prediction of groundwater transport.

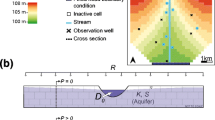

Once a clear set of predictions is identified, the problem is expressed in modelling terms. A model , by definition, is a simplification of a system. As shown in Fig. 28.1, it produces outputs from given inputs , such as rainfall and pumping . The response of the model to these inputs can be modified by changing the value of so-called parameters , such as properties of the modelled aquifer . We need to know what inputs are needed and what outputs are expected. An alternative view of a model is the XLRM framework (Lempert et al. 2003). An end-user may be interested in investigating the effect of different exogenous uncertainties (X) and policy levers (L), and will be expecting that the relationship (R) captured by a model and its parameters will produce certain measures (M) to evaluate them by.

Figure 28.2 shows a more detailed example of the use of a groundwater model as part of a broader integrated model aimed at assessing the impacts of changes in climate conditions and water allocation policies on surface and groundwater-dependent economic and ecological systems (Jakeman et al. 2014). At the core of the integrated model is the hydrological component. The hydrological model takes historical climate data, or future climate projections, as inputs and produces estimates of natural surface-water flows and groundwater levels as outputs . A Water Extraction Limits Model uses the estimated water availability and selected water policy options to calculate the allocations available to landholders. A Farm Decision Model then calculates actual water usage and farm profit based on the pre-extraction water availability , crop characteristics and the modelled decision-making behaviour of landholders. The landholder behaviour is simulated by a social model, which considers levels of compliance and adoption of various land management practices. Finally, the model uses post-extraction surface water flows and groundwater levels to assess ecological impacts.

Diagram of an integrated model (Adapted from Jakeman et al. 2014)

Problem definition s may however be uncertain. They may be affected by constraints on the modelling exercise, such as on cost, time, availability and quality of data and expertise, not all of which may be apparent from the start. Different users may have different conflicting objectives or contradictory understandings of the problem (Brugnach et al. 2008). There may be linguistic ambiguity, with multiple conflicting interpretations of a statement, particularly where people of different professional or disciplinary backgrounds are involved. Even if a problem statement seems qualitatively quite clear, it may be difficult to translate it into quantitative terms. For example, a groundwater well may be considered unusable or “dry” before the bore itself becomes dry (e.g. if the water level falls below the pump intake or if the remaining saturated thickness is insufficient to meet a water need). Moreover, as more is learned, the predictions required may also evolve. Preliminary results might show that other scenarios or policies need to be considered, or it might become apparent that an accurate prediction is simply not possible given the available knowledge, but alternative predictions might still be possible.

In each case, failure to address uncertainty may result in useless predictions. We will limit our discussion of uncertainty in problem definition to a few basic principles:

-

Modellers and stakeholders need to work together to define a problem, in a manner cognisant of the uncertainty involved. All parties should avoid oversimplification of defining the problem; guidelines on this issue are available elsewhere (e.g. Johnson 2008; Voinov and Bousquet 2010)

-

Modellers and stakeholders need to actively seek out different perspectives of the problem. Casting a wide net for views will help ensure that they encompass not only those views important now, but also those that may become important later. This may involve drawing on multidisciplinary teams, considering different parts of a system or seeking out contrasting world views (van Asselt and Rotmans 2002). For example, creating policy and administering may have different requirements. Modelling techniques allow for multiple objectives to be included (e.g. Reed and Minsker 2004; Mantoglou and Kourakos 2007), so it is better to avoid narrowing down prematurely.

-

Be prepared to iterate – do not expect it to be correct the first time. Even as the modelling exercise progresses, remain open to the potential for the problem definition and conceptual models to change dramatically (Bredehoeft 2005). This corresponds to a Bayesian view of the world, wherein data are used to progressively update prior understanding.

In traditional management literature, decision processes are considered to have three main stages: identifying a problem, developing possible courses of action, and selecting a course of action (Janssen 1992). However, it is also possible that in highly complex and deeply uncertain problems, the definition of the problem may be dependent on one’s idea for solving it (Rittel and Webber 1973). Feasible objectives of groundwater management can be limited by practical constraints and uncertainties in how a system will respond to different management interventions. For example, the objective of groundwater management can be restoring groundwater storage to a specified level, or improving groundwater storage relative to the current level. We may find the uncertainty is too high to allow us to predict the actual groundwater storage, but we can predict the direction of change (e.g. improve from current) with higher level of certainty. This finding may trigger us to reconsider what type of management objectives are likely to be achievable given uncertainties and thus what indicators /predictions we want to include in the models.

Therefore, in the context of modelling for decision making, an iterative discovery method designed for co-development of management targets (reflected by the indicators /predictions and model produces) and interventions (reflected by the drivers and scenarios used in the model) can be useful for exploring feasible management targets under deep uncertainties (Fu et al. 2015). As shown in Fig. 28.3, the method starts by evaluating a scenario describing the current best solution, for instance the current groundwater policy . Using visualizations of the solution and its impacts, the user is prompted to identify desirable or undesirable outcomes of the current best scenario. This provides the starting point for three cycles, focusing on model assumptions, alternative groundwater management interventions, and management targets such as maintaining or improving or restoring groundwater storage or the health of groundwater dependent ecosystem s. The outcome of this method is a list of management targets that can and cannot be achieved, the potential interventions that correspond to these targets, and the assumptions and uncertainties associated with these interventions. These outcomes can then be used as inputs for trade-off or cost-benefit analysis of different interventions to select a suitable course of action.

The iterative discovery method. Starting from the current best scenario, potential desirable and undesirable outcomes are identified which prompt the three cycles (assumption, intervention and management target) in order to identify achievable and specific management targets and alternative interventions under uncertainty (From Fu et al. 2015)

This iterative discovery method highlights the importance of using models for capacity building in groundwater decision making under uncertainties. Rather than simply providing ‘the’ answer, the method and models are used to build a knowledge partnership between modelers and decision makers . This kind of method is therefore most useful to analysts preparing recommendations rather than decision makers receiving them.

3 Communicating and Using Predictions of Uncertain Outcomes

The selection of methods to explore uncertainty in predictions depends not just on the problem, but also on how predictions will be used. This in turn depends on why uncertainty needs to be addressed. Notwithstanding earlier discussion, there may be times when uncertainty does not need to be addressed in detail, such as if a wrong prediction will have no impact, impacts are entirely reversible at little cost, or adverse effects of omission can be effectively handled by other aspects of decision making such as engineering safety factors. By way of contrast, we can identify six reasons that uncertainty in predictions may need to be addressed:

-

(a)

Testing whether a conclusion may be wrong. Model predictions form the basis for expecting a result – a conclusion that might later turn out to be wrong. In groundwater management terms, this might include that a project satisfies regulatory requirements on impacts to groundwater, that an extraction limit will prevent drawdown, or that a contaminant will not reach a well. The simplest means of communicating uncertainty in this case is to present a model (s) that cannot be rejected as implausible and in which a conclusion is not guaranteed, for example, describing a potential preferential flow pathway that would be consistent with collected data.

-

(b)

Identifying plans that are robust given uncertainty . Predictions are frequently used within a planning or decision-making framework. Decision-making frameworks based on the concept of robustness aim to identify plans that perform well in a set of models that includes potential unfavourable conditions. The “min-max” and “min-max regret” optimisation methods find a single ‘robust’ solution that respectively provides the best performance in the worst model scenario, or minimises regret if the future turns out to be described by a different model than expected (Kouvelis and Yu 1997). Extensions to this concept include considering multiple solutions that are close to the best one or considering more than just the worst case (Kalai et al. 2012). Optimisation under uncertainty also includes a number of methods that use sets of models with names such as ‘chance constraints’, ‘stochastic programming’, and ‘probabilistic ranking’ (Wagner and Gorelick 1989; Gorelick 1990; Chan 1993; Morgan et al. 1993; Bayer et al. 2008). For example, Feyen and Gorelick (2004) ensure that water-table elevations in sensitive wetland areas are not excessively lowered by the withdrawal of groundwater by verifying that constraints on hydraulic head are satisfied by all model realisations in a ‘stack’ of alternative model s . Communication of uncertainty consists of describing the characteristics of models over which a plan has been tested.

-

(c)

Identifying uncertain factors that have the greatest influence. The field of sensitivity analysis examines “how the variation in the output of a model … can be apportioned … to different sources of variation” (Saltelli et al. 2004; Matott et al. 2009; Saltelli and Annoni 2010). This is typically done by comparing outputs of large sets of alternative model s with known differences and calculating statistics developed for the purpose (e.g. Doherty and Hunt 2009). This can help to identify dominant and insignificant drivers of system behaviour, e.g. comparing the effect of pumping in multiple wells on drawdown or comparing the effect of parameters on a prediction. Understanding uncertainty in dominant drivers will facilitate associated reductions in uncertainty in model outputs. Communication focuses on providing a ranking of factors, often with some quantitative measure of significance. Note that a ranking will likely itself be uncertain because estimates of uncertainty are themselves uncertain, in which case it may be necessary to test whether the resulting conclusion may be wrong (See item a in this list).

-

(d)

Prompting changes to models or knowledge. Model uncertainty is related to a model ’s limitations. Understanding the source and nature of that uncertainty can help improve modelling . This includes lack of knowledge, variability and contradiction (Refsgaard et al. 2007; Brugnach et al. 2008; Guillaume et al. 2012). Identifying a knowledge gap may prompt changes that could reduce uncertainty in predictions, such as collecting additional monitoring data. Not all uncertainties are equal – identifying important sources of uncertainty and knowledge gaps in models helps to prioritise research efforts (Fu and Guillaume 2014). Understanding the causes of variability may allow them to be explicitly modelled. Identifying the existence of contradictory views may allow the design of experiments to resolve the debate. For example: model construction itself is a means of dealing with uncertainty, as “the model-construction process organizes and formalizes potential conceptual models of a ground water system” (Hunt and Welter 2010). The field of identifiability analysis aims “to expose inadequacies in the data or suggest improvements in the model structure” (Matott et al. 2009). Data acquisition planning aims to inform what data should be collected (Beven 1993; James and Gorelick 1994; Dausman et al. 2010; Fienen et al. 2010, 2011). Each of these involves exploring and improving the state of inherently imperfect models. Communication of uncertain predictions focuses on its implications for later analyses, or on helping to justify why changes to a model have been made.

-

(e)

Providing quantitative estimates of uncertainty to other users. The ‘need’ to provide an estimate of uncertainty is among the most commonly cited reason for using techniques to explore uncertainty in predictions. As the previous four points indicate, the need reflects a larger context, where uncertainty is a means to a decision-making end. In many cases, information about uncertainty can be communicated and used without necessarily expressing it in quantitative form. A more quantitative characterization of uncertainty may however be used in other processes, such as for risk management and decision theory (Freeze et al. 1990), and may be required by law in some countries as part of cost-benefit analyses or impact assessments. It may also be necessary to pass uncertainty information on to users without knowing how they will use it. In these cases, it is considered good practice to present “the modeller’s estimate of the representative uncertainty given what is known about the system, the type of prediction(s), and the modeller’s experience with the model and model calibration ” (Hunt 2012).

Estimates of uncertainty can be represented at various levels of detail, as illustrated in Fig. 28.4 (Walker et al. 2003; Guillaume et al. 2010). For a given source of uncertainty, there may only be enough information to represent it as bounds or scenarios, rather than probabilities. For example, it might be more appropriate to use best-case and worst-case scenarios (Renard 2007; Paté-Cornell 1996). Where there are many sources of uncertainty, they may need to be represented at multiple different levels, for example variability of rainfall as a distribution, future prices of irrigated crops as bounds, and possible groundwater policies or irrigator pumping patterns as scenarios (Guillaume et al. 2012). Uncertainties that are known at a high level of detail can also be represented at lower levels of detail. For example, probabilities can be represented not just as probability distributions or cumulative distribution functions, but also using means and standard-deviations, confidence intervals and an ensemble of samples from a distribution.

Fig. 28.4 In all cases, the consumers of the uncertainty estimate become the primary focus of how best to relate estimates to others. A groundwater scientist cannot expect that those needing to use the estimates will understand the academic terms and metrics (Hunt 2012). Therefore, translation of estimates into formats of direct use to the decision-making process should be used when possible (e.g. Hunt et al. 2001). Care needs to be taken when communicating estimates of uncertainty, particularly in the case of probabilities. Interpretation of probabilities tends to be biased, such that it is better to communicate them as frequencies, even if the probability refers to the likelihood of a one-off event (Anderson 1998). Rather than providing tables of probabilities, they may be better visualised (Barnett et al. 2012) by using maps or graphs. Where possible, expressing probability with its consequence allows it to be interpreted in terms of risk, reliability or probabilities of exceedance (Paté-Cornell 1996), and therefore provides a closer tie to its implications. Crucially, because of the potential for unrecognised unknowns, presentation of uncertain predictions should avoid stating uncertainty estimates in isolation. It is preferable to instead list the specific aspects of uncertainty that have been considered in producing an estimate, with the understanding that some may have been overlooked (Hunt and Welter 2010; Roy 2010; Guillaume et al. 2012). There is an extensive literature on the presentation and interpretation of uncertainty estimates (Wardekker et al. 2008; Kloprogge et al. 2007), even in the case of scenarios (Alcamo 2008).

-

(f)

Passing on qualitative information about uncertainty . In a strict theoretical sense, the presence of unknowable model structure error means that true uncertainty cannot be characterized (e.g. Beven 2009), and true quantitative estimates are unattainable. Moreover, where a prediction is used only as a scenario to prompt discussion, a qualitative approach may be sufficient. The emphasis in this case may be on how the prediction was produced, and the limitations involved in doing so. For example, modelling of limits to growth was deliberately aimed to open a debate (Meadows et al. 1972), and uncertainty primarily needs to be addressed to convince the audience to take the arguments made by the model seriously. One way of approaching this is through quality assurance of the modelling process and its constituent assumptions (Refsgaard et al. 2005; Guillaume 2011). Another is to include qualitative judgements about the information and how it is produced (Funtowicz and Ravetz 1990; Kloprogge et al. 2011; Van Der Sluijs et al. 2005).

4 Methods for Generating Alternative Models

The preceding section described multiple ways of using uncertainty information . Alternative model s often form an important construct within them for expressing uncertainty, where the uncertainty is represented by using a combination, or ensemble, of model realisations. Each model realisation can consist of different parameter values, inputs and/or model structures, as described in Fig. 28.1. The remainder of this chapter briefly presents a variety of methods for generating alternative model s . Each section describes how the method produces models and key underlying assumptions with reference to examples. The methods are summarised in Box 28.1.

Box 28.1 Types of Methods for Generating Alternative Models

-

28.4.1

Models with different input values

-

28.4.2

Models with different ‘structures’

-

Models with different conceptualisations

-

Models with different mathematical and computational implementations

-

-

28.4.3

Models with different parameter values

-

Geostatistics : models satisfying statistical properties of data

-

Parameter estimation : sampling models that fit data

-

Multi-objective parameter estimation : sampling models that fit contrasting data

-

Hypothesis testing : searching for models that fit data and satisfy a hypothesis

-

Sensitivity analysis : selecting models to understand influence of drivers

-

-

28.4.4

Models to anticipate surprise

-

Models to support adaptation

-

Models that explore the unknown

-

4.1 Models with Different Input Values

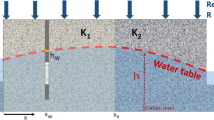

Model results depend on the inputs of sources, sinks and system properties and initial and boundary conditions. Hydraulic heads are given as initial and boundary conditions at the start of the modelled period and at boundaries of the modelled aquifer , such as water levels in lakes, rivers or the ocean. Flows are given as conditions to capture inflows or outflows, whether above ground (e.g. pumping , streamflow or rainfall), or under-ground to and from outside the model area (e.g. regional groundwater flow).

Inputs and boundary conditions are approximate, can be expected to contain errors, and can be expected to change over time. Alternative model s can therefore be created by changing the values of these inputs . We give some examples, but any model input could be altered. Values can be set based on expectations in the future (e.g. sea level rise, development of irrigation ). Values can be randomly sampled from a feasible range of distribution in what is referred to as a Monte Carlo procedure. To capture historical variability , observations can be sampled from existing time series (e.g. Guillaume et al. 2012). Time series can be generated by using a statistical model, for example a weather generator. Outputs can be used from other studies, for example climate scenarios. Groundwater models can also be coupled or integrated with other models, such as ones that models surface water flows and levels (e.g. Graham and Butts 2005; Kollet and Maxwell 2006; Brunner and Simmons 2012), water flows and temperature (Hunt et al. 2013), or irrigator decision making and pumping (Hanson et al. 2010; Guillaume et al. 2012). Corrections to time series can also be made by using parameters that can be estimated along with other parts of the model (Vrugt et al. 2008).

These methods assume that it is sufficiently easy to modify the data used in the modelling software, and that input scenarios chosen are meaningful. It is not cost-effective or useful to produce many scenarios unless there is a clear way of summarising and understanding them, whether as a statistical distribution of a phenomenon, or as standalone scenarios.

4.2 Models with Different ‘Structures’

As discussed with reference to Fig. 28.1, modellers distinguish the model proper from its parameters and inputs . The model proper is referred to as its ‘structure’, and can differ both in how it is conceptualised, which processes are included or excluded, and how it is implemented in mathematical and computational terms (Gupta et al. 2012).

4.2.1 Models with Different Conceptualisations

A model ’s conceptualisation includes both its physical structure, such as the layout of an aquifer or catchment, and process structure, including recharge , aquifer flow and discharge mechanisms (Gupta et al. 2012). In groundwater flow modelling , physical structure tends to be a greater issue because the subsurface environment is observed by sampling, which is necessarily incomplete. Surprises in conceptualisation of the physical structure have included (Bredehoeft 2005) flow of brine within salt, faster flow through unknown factures, lack of evidence of whether a fault is or is not permeable, and lack of understanding of the connection of surface and groundwater, at surface seeps or in river bed. Although groundwater processes are generally well understood, there may still be unanticipated recharge events, unexpected effects of land subsidence, and overlooked chemical reactions.

There are several ways to provide diverse model conceptualisations, each with their own assumptions. A simple approach is to use a set of models pre-determined by hydrogeologists and modellers. However, it cannot be assumed that it is possible to identify all possibilities (Bredehoeft 2005).

An alternative is to approach modelling iteratively, building on previous effort (Haitjema 1995, p. 245; Bredehoeft 2005). This involves starting from an initial simple model , then using a stepwise process to identify limitations and refining models to include additional processes or physical structures. For example, there might be changes in system properties such as subsidence due to potential changes in human operations, such that indirect factors that influence pumping (e.g. economic considerations) should be included as part of a model rather than as a separate input (e.g. Hanson et al. 2010).

Using all possible models can however be overwhelming. Professional judgement or statistical criteria (e.g. Singh et al. 2010) can be used to rank the models or filter some out to know where to focus. However, this risks eliminating models that might turn out to better represent the unknowable future, so it is worth treating such a decision as provisional, and keeping an open mind about returning to the models excluded.

Fortunately for decision-making , it is often not necessary for the model to describe precisely what is occurring in the groundwater system. Instead, conservative estimates can be used that can inform decisions regarding margins of safety (e.g. in Bredehoeft 1983; Tiedeman and Gorelick 1993). In practice, the best one can hope for is to identify models that bracket the true value (Doherty 2011), from which safety factors can be derived from model results even if deliberately over- and under-estimated (Guillaume et al. 2012). This approach however assumes that there is a known bad thing to avoid (Freeze et al. 1990) and costs of being overly conservative are formally recognized.

4.2.2 Models with Different Computational Implementations

Modelling requires that conceptualisations be made explicit in mathematical and computational form. Achieving this level of precision typically requires additional assumptions, regarding spatial variability , equations and their computational solution (Gupta et al. 2012).

Most numerical groundwater models discretize space into piecewise-constant quantities with a nodal grid or mesh. This discretization process raises the question of appropriate scale, and how the trade-off of computational burden and model resolution is decided. It is also possible to vary the resolution, and to use a combination of fine and coarse resolutions (Mehl et al. 2006). No grid or set of elements will fully capture a conceptual model, so the trade-off is subjective in that a modeller and end-user have discretion to select a variety of alternative scales based on practical considerations, such as computational cost. The objective of the model is of primary importance; models used to determine regional trends in groundwater level will require a different resolution than those used to evaluate the local flow of a contaminant.

4.3 Models with Different Parameter Values

Parameters play a key role in easily generating alternative model s . In the context of groundwater modelling , Bredehoeft (2005) observed that “in many cases hydrogeologists were not sufficiently informed to imagine what is the entire set of possible conceptual models.” In most cases, detailed properties of specific groundwater systems would be even more difficult to specify a priori. Instead, the modeller uses a general structure and defines parameters that when varied will encompass a variety of specific system properties. Multiple sets of parameter values can then be specified, or can be estimated or constrained by observations from the field. A strength of these methods is that hydrogeologists’ knowledge of the broad scale system, and its effect on local scale properties that result, can be tested and evaluated; and vice-versa, hydrogeologists’ knowledge can be used to evaluate the quality of data.

4.3.1 Geostatistics : Models Satisfying Statistical Properties of Data

Geostatistics provides a systematic means of using statistical properties of observed spatial data to generate alternative conceptualisations of physical properties. It interpolates given data points while satisfying observed heterogeneity and connectivity, which is particularly important for flow of contaminants (Renard 2007).

Data are used to generate a statistical model of heterogeneity (Marsily et al. 2005). The statistical model is in most cases a ‘variogram ’, which captures the probabilistic degree of dependence between any two points in space (Delhomme 1979). More advanced techniques, like multiple-point statistics , adopt a richer model of heterogeneity where the relationship between complex patterns of points is enclosed in a so called training image (e.g. Mariethoz and Caers 2014), complementing data with additional geological ‘soft’ knowledge (Strebelle 2002; Hu and Chugunova 2008; Meerschman et al. 2013). Both these geostatistical methods are stochastic, in the sense that once a model of heterogeneity is selected (variogram or training image), an infinite number of equally probable realisations of geological heterogeneity can be generated, allowing the exploration of the corresponding uncertainties.

Four realisations of a multiple-point statistics conditional simulation are illustrated in Fig. 28.5, together with observed data points. Noting that a sand channel is observed at points 2, 4 and 3, one might ask: what is the probability that the points are connected by the same sand channel? In three of the four realisations presented here the three points belong to the same sand channel (realisations #1, #2, and #4) while in the other (realisation #3) the point 2 belongs to a different sand channel. The statistical techniques by which these realisations are generated allow probabilities to be calculated, given a sufficiently large number of realisations, if necessary assumptions are satisfied.

Conditioning data and four realizations of a multiple-point statistics simulation of a sand channel system (The training image used for the simulation is taken from Strebelle 2002)

Geostatistical methods require dedicated tools and training to be used. They can require significant computer time, and depending on complexity of the problem, not all physical relationships in structure can yet be captured by theory. In addition, the choice of the model of heterogeneity in itself represents a source of uncertainty , closely related to the conceptualization of the geological model (see Sect. 28.4.2.1). Notwithstanding these drawbacks, geostatistical techniques are expanding from the mining and oil industries into the groundwater sector as they represent an important tool to explore uncertainty-related problems.

4.3.2 Parameter Estimation: Sampling Models That Fit Data

Parameters within a model can be easily manipulated, yielding a family of model realisations that can be explored, each with the same site geometry or structure (Barnett et al. 2012). So, for example, many models with different hydraulic conductivity and storativity in parts of an aquifer might fit the data relatively well, even if the overall structure of the aquifer is kept constant.

However, groundwater models typically carry sufficient resolution to represent hydraulic conductivity and storativity at a fine spatial and temporal scale, and it is often not possible to directly estimate parameters at that level of detail (Barnett et al. 2012), due to computational difficulties or expense of data collection. Instead, parameters are estimated for ‘zones’ or ‘pilot points’ from which all the more detailed parameters are calculated. The use of zones, also known as parameter lumping, involves subdividing the model based on geological boundaries or other reasons into regions that will be given the same hydraulic properties. Although this approach is conceptually simple, disadvantages include that it can be difficult to define such zones ahead of time, it may become apparent that geological properties do vary within a zone, and the abrupt changes in hydraulic properties at the edges of zones may not seem natural. Moreover, such a traditional zonation approach can reduce the effectiveness of the model to extract information from the field data in ways that cannot be quantified (Hunt et al. 2007; Doherty and Hunt 2010a). The pilot points approach involves setting parameter values at a fixed set of points and then interpolating across the model, using some of the geostatistical techniques described above (de Marsily 1978; Doherty 2003; Doherty et al. 2010a). This results in a more automated process generating a smooth variation of hydraulic properties.

Values of parameters can be estimated with a number of different approaches. A first aim is to identify a single model that best fits the observed data and the soft-knowledge of the system, to form a single construct for decision-making purposes. This initially involves a process of trial and error, trying different parameter values to progressively minimise the difference between the model outputs and data. This history matching is then typically automated using formal nonlinear regression methods, which automate the trial and error testing of parameters to minimise an ‘objective function’ that provides a measure of difference between model outputs and data. A variety of approaches are available for the design of the optimisation algorithm (Duan et al. 1992; Vrugt et al. 2003) parameterization approach (Doherty and Hunt 2010b), and selection of objective functions (Renard 2007; Schoups and Vrugt 2010; Bennett et al. 2013). Problems can prevent automated parameter estimation from consistently identifying a unique set of parameters (Sorooshian and Gupta 1983; Doherty and Hunt 2010b; Barnett et al. 2012). Most notably, all models simplify the world and leverage additional soft-knowledge to ensure the complexity of the model does not exceed the information available in the data (Jakeman and Hornberger 1993; Moore and Doherty 2005; Hunt et al. 2007; Barnett et al. 2012). This allows a single set of parameters to be identified, in a process known as regularisation (Moore and Doherty 2006), whether done ad hoc as in trial and error history matching, or with advanced algorithms (Hunt et al. 2007).

The second type of method does not seek to identify a single best parameter set but instead identifies realisations, or a set of models, that fit the data well enough by either statistical or less formal ‘acceptable performance’ criteria. Statistical criteria make assumptions about the distribution of errors (Schoups and Vrugt 2010), which, if correct, allow estimation of probability distributions of parameters . All models are in principle retained, but for a given output (e.g. hydraulic head at a point in time and space), models that yield extreme output values can be ignored. For example, by accepting that one in every 100 identical predictions could be wrong, a 99 % confidence interval can be calculated for the model output. Depending on the mathematical form of the model, ‘linear methods’ can be used to provide quick estimates (e.g. Doherty et al. 2010b). Even computationally more demanding techniques (e.g. when few parameters are used, Markov Chain Monte Carlo , Keating et al. 2010; Laloy and Vrugt 2012) are still approximate in that all estimates of uncertainty will be lacking in some regard (Barnett et al. 2012).

Approaches that use less formal ‘acceptable performance’ criteria can be quite diverse. Set membership methods identify parameters when the error in data is assumed to be bounded (Walter and Piet-Lahanier 1990). Generalised Likelihood Uncertainty Estimation (GLUE) extends this idea by defining limits of acceptability (Beven 2006, 2009) against which randomly sampled models are tested. Similarly Null Space Monte Carlo (Tonkin and Doherty 2009) uses theory about parameter estimation to randomly sample other parameters that satisfy a minimum performance requirement.

Many of these techniques that rely on random sampling may require a long time or many computers to run. This is particularly the case if the model is slow (takes longer than a few minutes), or if the method requires a large number of model runs (i.e. highly parameterized models). In these cases, it may be advantageous to use a ‘surrogate model’ (e.g. Keating et al. 2010; Doherty and Christensen 2011; Asher et al. 2015). A surrogate model uses a smaller number of model runs to then mathematically approximate a complex model using a simpler function. They therefore run faster and allow the more complex techniques to still be used.

4.3.3 Multi-objective Parameter Estimation: Sampling Models That Fit Contrasting Data

The methods discussed in the preceding section can be extended to evaluate models against multiple types of data. As models are necessarily a simplification of reality, even if a model fits one type of data well, such as a local pumping test, it may not make accurate predictions of other outputs , such as regional flows. It is known that information about hydraulic head alone does not allow both recharge and transmissivity to be simultaneously estimated in some conditions (Haitjema 1995, 2006). It is therefore desirable to use a variety of data sources to determine in which alternative model s we might have sufficient confidence (Kim et al. 1999; Schoups et al. 2005; Hunt et al. 2006; Renard 2007). Groundwater models in particular can potentially predict a number of different outputs, for which data can often be obtained. Flow data can be compared to predicted spring flows and leakage to and from a river. Temperature data can be compared to temperature resulting from mixing, e.g. of surface water and groundwater . Salinity or concentrations of some contaminants measured in the field can be compared to predicted concentrations of these substances. Use of tracer substances, either introduced or naturally occurring in the aquifer , can be compared to predicted flow paths, travel time and groundwater age (time since water entered the aquifer). Recent ecohydrological tracers such as viruses (e.g. Hunt et al. 2014) allow characterization of very short time of travel (<3 years) – ages not well characterized by traditional tracers.

It can also commonly occur that a model with a single parameter set is not able to simulate every prediction equally well. Instead, there is a trade-off between fitting different datasets that may or may not inform parameters important for prediction, and the prediction of interest. Therefore, it is recognized that multiple alternative model s may be required to provide better predictions for when there is more than one prediction of interest (Moore and Doherty 2005).

Where the uncertainty in predictions is too great, models can be used to optimize data collection to cost-effectively reduce the uncertainty associated with a given prediction. For example, existing models can be used to estimate the effect of establishing a new monitoring borehole at particular locations (Dausman et al. 2010), though results may be affected by the existing assumptions in the models used (Fienen et al. 2011). New data collection often consists of extensions of existing head and flux monitoring networks, but can also encompass estimates of model parameters obtained from dedicated tests, such as from soil properties of geological drilling logs, lab tests and from measuring the response in groundwater level during aquifer tests (Illman et al. 2008).

4.3.4 Hypothesis Testing: Searching for Models That Fit Data and Satisfy a Hypothesis

Alternative model s can be explicitly identified that seek to test a hypothesis, rather than just focussing on fitting data, as is the case with all the previous methods to obtain models with different parameters . The idea is that communicating uncertainty will involve assessing the probability that something bad might happen (Freeze et al. 1990; Doherty 2011), for example, a contaminant reaches the drinking water well, or an ecosystem dies from lack of water. Knowing this ahead of time, we can explicitly search for a plausible model that might return such a prediction.

One approach is to define criteria by which to test whether alternative model s are acceptable and to find the model that is closest to meeting the hypothesis, for example the model where the contaminant comes closest to the well, or the ecosystem has the least volume of water possible. This can be achieved by making conservative (yet defensible) simplifying assumptions, or by expressing the relevant criteria mathematically and using ‘constrained optimisation’ tools.

Yet, a priori determinations of what is plausible can artificially limit the range of alternative model s evaluated. Moore et al. (2010) remove this limitation by expressing the problem as a trade-off of predicted value against fit to the observed data, which in turn allows the user to determine the level of acceptable uncertainty (Fig. 28.6). Rather than explicitly defining acceptable performance criteria, “Pareto front” graphs are drawn showing the intrinsic trade-off of the prediction reaching a societally relevant threshold against the fit given by existing data for the system simulated. This makes it easy to relate uncertainty in terms of the prediction of interest, and can be constructed for multiple possible hypotheses and levels of acceptable criteria.

Pareto front defining trade-off between objective function (lower numbers indicate better fit) and predicted particle travel time (From Moore et al. 2010)

4.3.5 Sensitivity Analysis: Selecting Models to Understand Influence of Drivers

Purpose-designed methods are also available where the aim is to efficiently identify uncertain drivers – those that have the greatest influence. These techniques are commonly referred to as ‘sensitivity analysis ’ (Saltelli et al. 2004). These techniques may be based on local perturbations, where they only provide information about the specific model and set of parameters that is perturbed. Local sensitivity information may not reflect the sensitivity over the full range of plausible parameters. Therefore, sensitivity methods can also be global, which provides information about a broader sample of values the different sources of variation can take. Sensitivity analysis can either provide information about the effect of a factor keeping all others constant (Saltelli and Annoni 2010), or total effect of a factor with interactions with other factors (e.g. global sensitivity statistics such as Morris, Sobol, FAST).

4.4 Models to Anticipate Surprise

It is inevitable in all environmental modelling that there might be ‘unknown unknowns ’ and therefore surprises (Bredehoeft 2005; Hunt and Welter 2010). Model structures are unlikely to serendipitously include processes or structures that modellers do not know exist. Models that are calibrated by using existing data are tuned to reflect processes that can be identified from that data. Predictions of flow of contaminants could be completely underestimated if fractures exist that were not explicitly incorporated into the model . We discuss two approaches to creating models that help deal with this surprise : models to support adaptation , and models to explore the unknown.

4.4.1 Models to Support Adaptation

In principle, surprise can be dealt with by adaptive management . Rather than expecting modelling to anticipate all uncertainty , management plans remain open to change and plans for an iterative modelling and management process (Bredehoeft 2005). In the context of groundwater , models are still crucial to this process. Due to slow response times, when a change is detected, it may already be too late to do anything about it (Bredehoeft and Durbin 2009). Even if action is taken immediately, impacts may still worsen before they improve. It is therefore essential to try to anticipate the delays that might occur within a system. Model scenarios can also help to predict “sell-by” dates at which current plans might be expected to fail, to help plan adaptive pathways (Haasnoot et al. 2013). As discussed earlier, models can be used to help plan the monitoring needed to detect unexpected changes with an understanding of the time until impact. In parallel, model scenarios can be created to evaluate how future options might be curtailed as a consequence of short-term choices (Wong and Rosenhead 2000). Methods exist to allow model parameters to be efficiently updated given new data, and to detect when the data does not fit the current model (e.g. Cheng et al. 2011). This is particularly relevant where models are used operationally, such as in mine dewatering and water supply.

4.4.2 Models to Explore the Unknown

Potential surprises can also be anticipated by placing fewer restrictions on what is considered possible, and using the model prediction as a discussion point. Considering a larger set of models helps inform adaptation by discussing “what we do if this situation did occur?” This is even possible if no data are available.

This can be thought of as vulnerability analysis , identifying model properties in which negative outcomes occur. For example, Nazemi et al. (2013) identify changes in climate that would result in water scarcity problems, deferring the judgement as to whether those climate changes could occur. Scenario discovery (Bryant and Lempert 2010) randomly samples a large number of parameters and then identifies the values of parameters for which the negative outcome might occur. Break-even analysis identifies models at tipping point s, for example, the infiltration rate or hydraulic conductivity at which managed aquifer recharge using basin infiltration is uneconomical (Frey and Patil 2002). Similar techniques have been applied to identify the circumstances in which two management options are equivalent, i.e. the point at which a different option becomes superior (Ravalico et al. 2009).

5 Conclusions

This chapter discussed a variety of methods for generating alternative model s in order to explore uncertainty in predictions that can be applied to integrated groundwater management . The methods used depend on how the problem is defined, resources available, and how it is intended that the predictions of uncertain outcomes are used. Although many of these methods require hydrogeological, mathematical and computational expertise, together they provide a broad toolbox for identifying a more encompassing view of what might happen. Stakeholders are more likely to be forewarned with a range of plausible alternatives that they may have to face, which, in turn, can facilitate better decision making.

References

Alcamo J (ed) (2008) Environmental futures: the practice of environmental scenario analysis. Elsevier, Amsterdam

Anderson JL (1998) Embracing uncertainty: the interface of Bayesian statistics and cognitive psychology. Conserv Ecol 2(1):2

Asher MJ, Croke BFW, Jakeman AJ, Peeters LJM (2015) A review of surrogate models and their application to groundwater modeling. Water Resour Res 51(8). doi:10.1002/2015WR016967

Barnett B, Townley R, Post V, Evans R, Hunt RJ, Peeters L, Richardson S, Werner AD, Knapton A, Boronkay A (2012) Australian groundwater modelling guidelines. Report no 82. National Water Commission, Canberra

Bayer P, Bürger CM, Finkel M (2008) Computationally efficient stochastic optimization using multiple realizations. Adv Water Resour 31(2):399–417. doi:10.1016/j.advwatres.2007.09.004

Bennett ND, Croke BFW, Guariso G, Guillaume JHA, Hamilton SH, Jakeman AJ, Marsili-Libelli S, Newham LTH, Norton JP, Perrin C, Pierce SA, Robson B, Seppelt R, Voinov AA, Fath BD, Andreassian V (2013) Characterising performance of environmental models. Environ Model Software 40:1–20. doi:10.1016/j.envsoft.2012.09.011

Beven K (1993) Prophecy, reality and uncertainty in distributed hydrological modeling. Adv Water Resour 16:41–51. doi:10.1016/0309-1708(93)90028-E

Beven K (2006) A manifesto for the equifinality thesis. J Hydrol 320(1–2):18–36. doi:10.1016/j.jhydrol.2005.07.007

Beven KJ (2009) Environmental modelling: an uncertain future? Routledge, New York

Bredehoeft JD (1983) Ground water: a review. Rev Geophys 21(3):760–765. doi:10.1029/RG021i003p00760

Bredehoeft J (2005) The conceptualization model problem – surprise. Hydrogeol J 13(1):37–46. doi:10.1007/s10040-004-0430-5

Bredehoeft J, Durbin T (2009) Ground water development – the time to full capture problem. Ground Water 47(4):506–514. doi:10.1111/j.1745-6584.2008.00538.x

Brugnach M, Dewulf A, Pahl-Wostl C, Taillieu T (2008) Toward a relational concept of uncertainty: about knowing too little, knowing too differently, and accepting not to know. Ecol Soc 13(2):30

Brunner P, Simmons CT (2012) HydroGeoSphere: a fully integrated, physically based hydrological model. Ground Water 50(2):170–176. doi:10.1111/j.1745-6584.2011.00882.x

Bryant BP, Lempert RJ (2010) Thinking inside the box: a participatory, computer-assisted approach to scenario discovery. Technol Forecast Soc Chang 77:34–49. doi:10.1016/j.techfore.2009.08.002

Chan N (1993) Robustness of the multiple realization method for stochastic hydraulic aquifer management. Water Resour Res 29(9):3159–3167. doi:10.1029/93wr01410

Cheng W-C, Putti M, Kendall DR, Yeh WWG (2011) A real-time groundwater management model using data assimilation. Water Resour Res 47(6). doi:10.1029/2010wr009770

Dausman AM, Doherty J, Langevin CD, Sukop MC (2010) Quantifying data worth toward reducing predictive uncertainty. Ground Water 48(5):729–740. doi:10.1111/j.1745-6584.2010.00679.x

de Marsily G (1978) De l’identification des systèmes hydro-géologiques. Centre d’Informatique Géologique, Ecole des Mines de Paris, Paris

Delhomme JP (1979) Spatial variability and uncertainty in groundwater flow parameters: a geostatistical approach. Water Resour Res 15(2):269–280. doi:10.1029/WR015i002p00269

Doherty J (2003) Ground water model calibration using pilot points and regularization. Ground Water 41(2):170–177. doi:10.1111/j.1745-6584.2003.tb02580.x

Doherty J (2011) Modeling: picture perfect or abstract art? Ground Water 49(4):455–455. doi:10.1111/j.1745-6584.2011.00812.x

Doherty J, Christensen S (2011) Use of paired simple and complex models to reduce predictive bias and quantify uncertainty. Water Resour Res 47(12), W12534. doi:10.1029/2011WR010763

Doherty J, Hunt RJ (2009) Two statistics for evaluating parameter identifiability and error reduction. J Hydrol 366(1–4):119–127. doi:10.1016/j.jhydrol.2008.12.018

Doherty J, Hunt RJ (2010a) Response to comment on: Two statistics for evaluating parameter identifiability and error reduction. J Hydrol. doi:10.1016/j.jhydrol.2009.10.012

Doherty JE, Hunt RJ (2010b) Approaches to highly parameterized inversion: A guide to using PEST for groundwater-model calibration. U.S. Geological Survey Scientific Investigations Report 2010-5169, 60 p. http://pubs.usgs.gov/sir/2010/5169/

Doherty JE, Fienen MF, Hunt RJ (2010a) Approaches to highly parameterized inversion: Pilot-point theory, guidelines, and research directions. U.S. Geological Survey Scientific Investigations Report 2010-5168, 36 p. http://pubs.usgs.gov/sir/2010/5168/

Doherty JE, Hunt RJ, Tonkin MJ (2010b) Approaches to highly parameterized inversion: A guide to using PEST for model-parameter and predictive-uncertainty analysis. U.S. Geological Survey Scientific Investigations Report 2010-5211, 71 p. http://pubs.usgs.gov/sir/2010/5211/

Duan Q, Sorooshian S, Gupta V (1992) Effective and efficient global optimization for conceptual rainfall-runoff models. Water Resour Res 28(4):1015–1031. doi:10.1029/91wr02985

Feyen L, Gorelick SM (2004) Reliable groundwater management in hydroecologically sensitive areas. Water Resour Res 40(7), W07408. doi:10.1029/2003wr003003

Fienen MN, Doherty JE, Hunt RJ, Reeves HW (2010) Using prediction uncertainty analysis to design hydrologic monitoring networks – Example applications from the Great Lakes Water Availability Pilot Project. U.S. Geological Survey Scientific Investigations Report 2010-5159, 44 p. http://pubs.usgs.gov/sir/2010/5159/

Fienen MN, Hunt RJ, Doherty JE, Reeves HW (2011) Using models for the optimization of hydrologic monitoring. U.S. Geological Survey Fact Sheet 2011-3014, 6 p. http://pubs.usgs.gov/fs/2011/3014/

Freeze RA, Massmann J, Smith L, Sperling T, James B (1990) Hydrogeological decision analysis: 1. A framework. Ground Water 28(5):738–766. doi:10.1111/j.1745-6584.1990.tb01989.x

Frey CH, Patil SR (2002) Identification and review of sensitivity analysis methods. Risk Anal 22:553–578. doi:10.1111/0272-4332.00039

Fu B, Guillaume JHA (2014) Assessing certainty and uncertainty in riparian habitat suitability models by identifying parameters with extreme outputs. Environ Model Software 60:277–289

Fu B, Guillaume JHA, Jakeman AJ (2015) An iterative method for discovering feasible management interventions and targets conjointly using uncertainty visualizations. Environ Modell Softw 71:159–73. doi:10.1016/j.envsoft.2015.05.017

Funtowicz SO, Ravetz JR (1990) Uncertainty and quality in science for policy. Springer, Dordrecht

Gorelick S (1990) Large scale nonlinear deterministic and stochastic optimization: formulations involving simulation of subsurface contamination. Math Program 48(1–3):19–39. doi:10.1007/bf01582250

Graham DN, Butts MB (2005) Flexible, integrated watershed modelling with MIKE SHE. In: Singh VP, Frevert DK (eds) Watershed models. CRC Press, Boca Raton, pp 245–272. ISBN:0849336090

Guillaume JHA (2011) A risk-based tool for documenting and auditing the modelling process. In: Chan F, Marinova D, Anderssen RS (eds) MODSIM2011, 19th international congress on modelling and simulation. Modelling and Simulation Society of Australia and New Zealand, December 2011, pp 1652–1658. ISBN: 978-0-9872143-1-7. www.mssanz.org.au/modsim2011/I9/guillaume.pdf. Accessed 14 Jan 2014

Guillaume JHA, Pierce SA, Jakeman AJ (2010) Managing uncertainty in determining sustainable aquifer yield. Paper presented at the National Groundwater Conference 2010, Canberra. http://www.groundwater2010.com/documents/GuillaumeJoseph_000.pdf. Accessed 14 Jan 2014

Guillaume JHA, Qureshi ME, Jakeman AJ (2012) A structured analysis of uncertainty surrounding modeled impacts of groundwater extraction rules. Hydrogeol J 205(5):915–932. doi:10.1007/s10040-012-0864-0

Gupta HV, Clark MP, Vrugt JA, Abramowitz G, Ye M (2012) Towards a comprehensive assessment of model structural adequacy. Water Resour Res 48(8), W08301. doi:10.1029/2011wr011044

Haasnoot M, Kwakkel JH, Walker WE, ter Maat J (2013) Dynamic adaptive policy pathways: a method for crafting robust decisions for a deeply uncertain world. Glob Environ Chang 23(2):485–498. doi:10.1016/j.gloenvcha.2012.12.006

Haitjema HM (1995) Analytic element modeling of groundwater flow. Academic Press, San Diego, p 394

Haitjema H (2006) The role of hand calculations in ground water flow modeling. Ground Water 44(6):786–791. doi:10.1111/j.1745-6584.2006.00189.x

Hanson RT, Schmid W, Faunt CC, Lockwood B (2010) Simulation and analysis of conjunctive use with MODFLOW’s farm process. Ground Water 48(5):674–689. doi:10.1111/j.1745-6584.2010.00730.x

Hu LY, Chugunova T (2008) Multiple-point geostatistics for modeling subsurface heterogeneity: a comprehensive review. Water Resour Res 44(11), W11413. doi:10.1029/2008wr006993

Hunt RJ (2012) Uncertainty. In: Australian groundwater modelling guidelines. Waterlines report series No 82, National Water Commission, Canberra, pp 92–105. ISBN: 978-1-921853-91-3

Hunt RJ, Welter DE (2010) Taking account of “Unknown Unknowns”. Ground Water 48(4):477. doi:10.1111/j.1745-6584.2010.00681.x

Hunt RJ, Steuer JJ, Mansor MTC, Bullen TD (2001) Delineating a recharge area for a spring using numerical modeling, Monte Carlo techniques, and geochemical investigation. Ground Water 39(5):702–712. doi:10.1111/j.1745-6584.2001.tb02360.x

Hunt RJ, Feinstein DT, Pint CD, Anderson MP (2006) The importance of diverse data types to calibrate a watershed model of the Trout Lake Basin, Northern Wisconsin, USA. J Hydrol 321(1–4):286–296. doi:10.1016/j.jhydrol.2005.08.005

Hunt RJ, Doherty J, Tonkin MJ (2007) Are models too simple? Arguments for increased parameterization. Ground Water 45(3):254–261. doi:10.1111/j.1745-6584.2007.00316.x

Hunt RJ, Walker JF, Selbig WR, Westenbroek SM, Regan RS (2013) Simulation of climate-change effects on streamflow, lake water budgets, and stream temperature using GSFLOW and SNTEMP, Trout Lake Watershed, Wisconsin. U.S. Geological Survey Scientific Investigations Report 2013-5159, 118 p. http://pubs.usgs.gov/sir/2013/5159/

Hunt RJ, Borchardt MA, Bradbury KR (2014) Viruses as groundwater tracers: using ecohydrology to characterize short travel times in aquifers. Ground Water 52(2):187–193. doi:10.1111/gwat.12158

Illman WA, Craig AJ, Liu X (2008) Practical issues in imaging hydraulic conductivity through hydraulic tomography. Ground Water 46(1):120–132. doi:10.1111/j.1745-6584.2007.00374.x

Jakeman AJ, Hornberger GM (1993) How much complexity is warranted in a rainfall-runoff model? Water Resour Res 29(8):2637–2649. doi:10.1029/93wr00877

Jakeman AJ, Letcher RA, Norton JP (2006) Ten iterative steps in development and evaluation of environmental models. Environ Model Software 21(5):602–614. doi:10.1016/j.envsoft.2006.01.004

Jakeman A, Kelly R, Ticehurst J, Blakers R, Croke B, Curtis A, Fu B, Sawah S, Gardner A, Guillaume J, Hartley M, Holley C, Hutchings P, Pannell D, Ross A, Sharp E, Sinclair D, Wilson A (2014) Modelling for managing the complex issue of catchment-scale surface and groundwater allocation. In: Obaidat MS, Filipe J, Kacprzyk J, Pina N (eds) Simulation and modeling methodologies, technologies and applications, vol 256. Springer, pp 25–41. doi:10.1007/978-3-319-03581-9_2

James BR, Gorelick SM (1994) When enough is enough: the worth of monitoring data in aquifer remediation design. Water Resour Res 30(12):3499–3513. doi:10.1029/94wr01972

Janssen R (1992) Multiobjective decision support for environmental management. Kluwer, Dordrecht, 232 p

Johnson MS (2008) Public participation and perceptions of watershed modeling. Soc Nat Res 22(1):79–87. doi:10.1080/08941920802220347

Kalai R, Lamboray C, Vanderpooten D (2012) Lexicographic α-robustness: an alternative to min-max criteria. Eur J Oper Res 220(3):722–728. doi:10.1016/j.ejor.2012.01.056

Keating EH, Doherty J, Vrugt JA, Kang Q (2010) Optimization and uncertainty assessment of strongly nonlinear groundwater models with high parameter dimensionality. Water Resour Res 46(10), W10517. doi:10.1029/2009wr008584

Kim K, Anderson MP, Bowser CJ (1999) Model calibration with multiple targets: a case study. Ground Water 37(3):345–351. doi:10.1111/j.1745-6584.1999.tb01110.x

Kloprogge P, Sluijs JP, Wardekker JA (2007) Uncertainty communication: issues and good practice. Copernicus Institute for Sustainable Development and Innovation, Utrecht University, Utrecht

Kloprogge P, Van Der Sluijs JP, Petersen AC (2011) A method for the analysis of assumptions in model-based environmental assessments. Environ Model Software 26(3):289–301. doi:10.1016/j.envsoft.2009.06.009

Kollet SJ, Maxwell RM (2006) Integrated surface–groundwater flow modeling: a free-surface overland flow boundary condition in a parallel groundwater flow model. Adv Water Resour 29(7):945–958. doi:10.1016/j.advwatres.2005.08.006

Kouvelis P, Yu G (1997) Robust discrete optimization and its applications. Kluwer, New York

Laloy E, Vrugt JA (2012) High-dimensional posterior exploration of hydrologic models using multiple-try DREAM(ZS) and high-performance computing. Water Resour Res 48(1), W01526. doi:10.1029/2011wr010608

Lempert RJ, Popper SW, Bankes SC (2003) Shaping the next one hundred years: new methods for quantitative, long-term policy analysis. Rand Corporation, Santa Monica

Mantoglou A, Kourakos G (2007) Optimal groundwater remediation under uncertainty using multi-objective optimization. Water Res Manag 21(5):835–847. doi:10.1007/s11269-006-9109-0

Mariethoz G, Caers J (2014) Multiple-point geostatistics: stochastic modelling with training images. Wiley-Blackwell, Chichester, 400 p, (in press)

Marsily G, Delay F, Gonçalvès J, Renard P, Teles V, Violette S (2005) Dealing with spatial heterogeneity. Hydrogeol J 13(1):161–183. doi:10.1007/s10040-004-0432-3

Matott LS, Babendreier JE, Purucker ST (2009) Evaluating uncertainty in integrated environmental models: a review of concepts and tools. Water Resour Res 45(6):W06421. doi:10.1029/2008wr007301

Meadows DH, Meadows DL, Randers J, Behrens WW III (1972) The limits to growth: a report for the Club of Rome’s project on the predicament of mankind. Potomac Associates, New York

Meerschman E, Pirot G, Mariethoz G, Straubhaar J, Van Meirvenne M, Renard P (2013) A practical guide to performing multiple-point statistical simulations with the Direct Sampling algorithm. Comput Geosci 52:307–324. doi:10.1016/j.cageo.2012.09.019

Mehl S, Hill MC, Leake SA (2006) Comparison of local grid refinement methods for MODFLOW. Ground Water 44(6):792–796. doi:10.1111/j.1745-6584.2006.00192.x

Moore C, Doherty J (2005) Role of the calibration process in reducing model predictive error. Water Resour Res 41(5):W05020. doi:10.1029/2004wr003501

Moore C, Doherty J (2006) The cost of uniqueness in groundwater model calibration. Adv Water Resour 29(4):605–623. doi:10.1016/j.advwatres.2005.07.003

Moore C, Wöhling T, Doherty J (2010) Efficient regularization and uncertainty analysis using a global optimization methodology. Water Resour Res 46(8):W08527. doi:10.1029/2009wr008627

Morgan DR, Eheart JW, Valocchi AJ (1993) Aquifer remediation design under uncertainty using a new chance constrained programming technique. Water Resour Res 29(3):551–561. doi:10.1029/92wr02130

Nazemi A, Wheater HS, Chun KP, Elshorbagy A (2013) A stochastic reconstruction framework for analysis of water resource system vulnerability to climate-induced changes in river flow regime. Water Resour Res 49(1):291–305. doi:10.1029/2012wr012755

Paté-Cornell ME (1996) Uncertainties in risk analysis: six levels of treatment. Reliab Eng Syst Saf 54(2–3):95–111. doi:10.1016/S0951-8320(96)00067-1

Ravalico JK, Maier HR, Dandy GC (2009) Sensitivity analysis for decision-making using the MORE method – a Pareto approach. Reliab Eng Syst Saf 94(7):1229–1237. doi:10.1016/j.ress.2009.01.009

Reed PM, Minsker BS (2004) Striking the balance: long-term groundwater monitoring design for conflicting objectives. J Water Resour Plan Manag 130(2):140–149. doi:10.1061/(ASCE)0733-9496(2004)130:2(140)

Refsgaard JC, Henriksen HJ (2004) Modelling guidelines – terminology and guiding principles. Adv Water Resour 27(1):71–82. doi:10.1016/j.advwatres.2003.08.006

Refsgaard JC, Henriksen HJ, Harrar WG, Scholten H, Kassahun A (2005) Quality assurance in model based water management – review of existing practice and outline of new approaches. Environ Model Software 20(10):1201–1215. doi:10.1016/j.envsoft.2004.07.006

Refsgaard JC, van der Sluijs JP, Højberg AL, Vanrolleghem PA (2007) Uncertainty in the environmental modelling process – a framework and guidance. Environ Model Software 22(11):1543–1556. doi:10.1016/j.envsoft.2007.02.004

Renard P (2007) Stochastic hydrogeology: what professionals really need? Ground Water 45(5):531–541. doi:10.1111/j.1745-6584.2007.00340.x

Rittel HJ, Webber M (1973) Dilemmas in a general theory of planning. Pol Sci 4:155–169

Roy B (2010) To better respond to the robustness concern in decision aiding: four proposals based on a twofold observation. In: Zopounidis C, Pardalos PM (eds) Handbook of multicriteria analysis, vol 103. Springer, Berlin/Heidelberg, pp 3–24. doi:10.1007/978-3-540-92828-7_1

Saltelli A, Annoni P (2010) How to avoid a perfunctory sensitivity analysis. Environ Model Software 25(12):1508–1517. doi:10.1016/j.envsoft.2010.04.012

Saltelli A, Chan K, Scott E (2004) Sensitivity analysis. Wiley, New York

Schoups G, Vrugt JA (2010) A formal likelihood function for parameter and predictive inference of hydrologic models with correlated, heteroscedastic, and non-Gaussian errors. Water Resour Res 46(10):1–17. doi:10.1029/2009wr008933

Schoups G, Lee Addams C, Gorelick SM (2005) Multi-objective calibration of a surface water-groundwater flow model in an irrigated agricultural region: Yaqui Valley, Sonora, Mexico. Hydrol Earth Syst Sci 9(5):549–568. doi:10.5194/hess-9-549-2005

Singh A, Mishra S, Ruskauff G (2010) Model averaging techniques for quantifying conceptual model uncertainty. Ground Water 48(5):701–715. doi:10.1111/j.1745-6584.2009.00642.x

Sorooshian S, Gupta VK (1983) Automatic calibration of conceptual rainfall-runoff models: the question of parameter observability and uniqueness. Water Resour Res 19(1):260–268. doi:10.1029/WR019i001p00260

Strebelle S (2002) Conditional simulation of complex geological structures using multiple-point statistics. Math Geol 34(1):1–21. doi:10.1023/a:1014009426274

Tiedeman C, Gorelick SM (1993) Analysis of uncertainty in optimal groundwater contaminant capture design. Water Resour Res 29(7):2139–2153. doi:10.1029/93wr00546

Tonkin M, Doherty J (2009) Calibration-constrained Monte Carlo analysis of highly parameterized models using subspace techniques. Water Resour Res 45(12). doi:10.1029/2007wr006678

van Asselt MBA, Rotmans J (2002) Uncertainty in integrated assessment modelling. Clim Change 54(1):75–105. doi:10.1023/A:1015783803445

van der Sluijs JP, Craye M, Funtowicz S, Kloprogge P, Ravetz J, Risbey J (2005) Combining quantitative and qualitative measures of uncertainty in model-based environmental assessment: the NUSAP system. Risk Anal 25(2):481–492. doi:10.1111/j.1539-6924.2005.00604.x

Voinov A, Bousquet F (2010) Modelling with stakeholders. Environ Model Software 25(11):1268–1281. doi:10.1016/j.envsoft.2010.03.007

Vrugt JA, Gupta HV, Bastidas LA, Bouten W, Sorooshian S (2003) Effective and efficient algorithm for multiobjective optimization of hydrologic models. Water Resour Res 39(8). doi:10.1029/2002wr001746

Vrugt JA, ter Braak CJF, Clark MP, Hyman JM, Robinson BA (2008) Treatment of input uncertainty in hydrologic modeling: doing hydrology backward with Markov chain Monte Carlo simulation. Water Resour Res 44(12):W00B09. doi:10.1029/2007wr006720

Wagner BJ, Gorelick SM (1989) Reliable aquifer remediation in the presence of spatially variable hydraulic conductivity: from data to design. Water Resour Res 25(10):2211–2225. doi:10.1029/WR025i010p02211

Walker WE, Harremoës P, Rotmans J, van der Sluijs JP, van Asselt MBA, Janssen P, von Krauss MPK (2003) Defining uncertainty: a conceptual basis for uncertainty management in model-based decision support. Integr Assess 4(1):5–17. doi:10.1076/iaij.4.1.5.16466

Walter E, Piet-Lahanier H (1990) Estimation of parameter bounds from bounded-error data: a survey. Math Comp Simul 32(5–6):449–468. doi:10.1016/0378-4754(90)90002-Z

Wardekker JA, van der Sluijs JP, Janssen PHM, Kloprogge P, Petersen AC (2008) Uncertainty communication in environmental assessments: views from the Dutch science-policy interface. Environ Sci Pol 11(7):627–641. doi:10.1016/j.envsci.2008.05.005

Wong HY, Rosenhead J (2000) A rigorous definition of robustness analysis. J Oper Res Soc 51(2):176–182. doi:10.2307/254258

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Open Access This chapter is distributed under the terms of the Creative Commons Attribution-Noncommercial 2.5 License (http://creativecommons.org/licenses/by-nc/2.5/) which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

The images or other third party material in this chapter are included in the work’s Creative Commons license, unless indicated otherwise in the credit line; if such material is not included in the work’s Creative Commons license and the respective action is not permitted by statutory regulation, users will need to obtain permission from the license holder to duplicate, adapt or reproduce the material.

Copyright information

© 2016 The Author(s)

About this chapter

Cite this chapter

Guillaume, J.H.A., Hunt, R.J., Comunian, A., Blakers, R.S., Fu, B. (2016). Methods for Exploring Uncertainty in Groundwater Management Predictions. In: Jakeman, A.J., Barreteau, O., Hunt, R.J., Rinaudo, JD., Ross, A. (eds) Integrated Groundwater Management. Springer, Cham. https://doi.org/10.1007/978-3-319-23576-9_28

Download citation

DOI: https://doi.org/10.1007/978-3-319-23576-9_28

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-23575-2

Online ISBN: 978-3-319-23576-9

eBook Packages: Earth and Environmental ScienceEarth and Environmental Science (R0)