Abstract

Acronyms are an idiosyncratic part of our everyday vocabulary. Research in word processing has used acronyms as a tool to answer fundamental questions such as the nature of the word superiority effect (WSE) or which is the best way to account for word-reading processes. In this study, acronym naming was assessed by looking at the influence that a number of variables known to affect mainstream word processing has had in acronym naming. The nature of the effect of these factors on acronym naming was examined using a multilevel regression analysis. First, 146 acronyms were described in terms of their age of acquisition, bigram and trigram frequencies, imageability, number of orthographic neighbors, frequency, orthographic and phonological length, print-to-pronunciation patterns, and voicing characteristics. Naming times were influenced by lexical and sublexical factors, indicating that acronym naming is a complex process affected by more variables than those previously considered.

Similar content being viewed by others

Introduction

Acronyms represent a significant and idiosyncratic part of our everyday vocabulary. The demands of a highly technical society have dramatically increased the proportion of acronyms encountered in everyday language. Acronyms are nowadays regularly found in scientific and nonscientific journals (e.g., DNA, EEG, CD-ROM, DVD, radar, sonar, VAT, CPI, OXO, NATO, NHS, etc.) and are actively used in text messages and e-mail communications (e.g., lol, MYOB, BW, etc.). The practice of abbreviating complex words is not new (e.g., INRI is an acronym that dates back to Roman times); however, their use has been relatively sparse until the second world war, when the formation of new acronyms escalated, since they were a convenient way of accelerating and encrypting communication. As an indication of the breathtaking expansion of acronyms in the language, the first edition (1960) of the Acronyms, Initialisms and Abbreviations Dictionary (AIAD) comprised 12,000 headwords, while the 16th edition (1992) included more than 520,000 headwords. The AIAD dictionary has been recognized as one of the most important books of reference by the American Library Association (1985), and its 43rd edition has just been made available to the public in June 2010. Strictly speaking, the term acronym refers to pronounceable abbreviations formed with the initial letters of a compound term, while initialism is the name for the same type of abbreviations that are “unpronounceable.” Despite this original distinction, the label initialism is rarely used, while acronym has extended its meaning to pronounceable and unpronounceable abbreviations. It is in this extended sense that the term acronym is going to be used here.

A distinctive characteristic of acronyms is that their configuration does not obey orthographic and/or phonological rules. They are often formed by a sequence of illegal letter strings that can become highly familiar to the language user (e.g., ABC, BBC, CNN, FBI, fm, HIV, KFC, pm, TV, USB, etc.). Due to this peculiar illegality, acronyms have recently been used in the study of two influential models of reading aloud: the triangle model and the dual-route cascade model (Laszlo & Federmeier, 2007b). An important discrepancy between these two models lies in the relative relevance given to the frequency of the word in contrast to its regularity when it is read aloud. One of the models under investigation in Laszlo and Federmeier (2007b) was the connectionist triangle model (Harm & Seidenberg, 2004; Seidenberg & McClelland, 1989). The model proposes a single processing system for reading all known words, irrespective of their frequency and regularity, and all unknown/novel words. This is achieved by means of a learning mechanism that extracts the statistically more reliable (frequent) spelling–sound relationships in English. Importantly, orthographic and/or phonological rules are redundant in the model, and therefore, they have not been specifically implemented. The other model investigated is the nonconnectionist dual-route cascade model (Coltheart, Curtis, Atkins, & Haller, 1993; Coltheart, Rastle, Perry, Langdon, & Ziegler, 2001). It proposes two reading routes or procedures: a lexical route and a nonlexical route. The lexical route entails direct connections between the mental representations of the written form of the word and the spoken form of the word and, also, detoured connections between written and spoken word forms with their corresponding conceptual representations in the semantic system. The nonlexical route converts letters into sounds applying the orthographic and phonologic rules of the language. The latter route is indispensable for reading novel words and nonwords, since no mental representation for them has been formed. Nonlexical processing will also give the correct pronunciation of regular words, although this is not the only reading pathway available to them. Correct reading of irregular words, however, needs to be accomplished via the lexical route, since these words do not stick to the pronunciation rules of the language.

Laszlo and Federmeier (2007b) tested these models by looking at the differential N400 repetition effect found for words (hat) and pseudowords (e.g., dawk) but not for orthographically illegal nonwords (mdtp). They argued that according to the dual-route model, the sensitivity of the N400 component to the repetition of legal letter strings, for both words and pseudowords (Deacon, Dynowska, Ritter, & Grose-Fifer, 2004; Rugg, 1990), could only reflect the performance of the nonlexical pathway, since this is the only route available for reading novel items such as the pseudowords. In consequence, no repetition effects in the N400 should be observed when acronyms are read, since their irregularity precludes the use of the nonlexical route. It is important to note that it is not clear how the predicted and reported absence of repetition effects for illegal letter strings fits into the argument, since illegal letter strings also make use of the nonlexical pathway. Connectionist models, alternatively, would predict repetition effects in the N400 for words, pseudowords, and acronyms, since the same process underpins the recognition of any type of letter string. Laszlo and Federmeier (2007b) found N400 repetition effects for words, pseudowords, and acronyms, but not for illegal nonwords. They concluded that this outcome could be accommodated only by the connectionist account for oral reading. However, Laszlo and Federmeier (2007b) failed to notice that pseudowords—in particular, pseudohomophones and those pseudowords extracted from high-frequency words—can generate activation in the lexical pathway (Coltheart, 2007). The lexical route will not produce the correct reading of pseudowords but can be, nevertheless, stimulated. Taking this into account, their results can be perfectly explained by the dual-route model through the activation of the lexical route by words, acronyms, and pseudowords. This explanation also reconciles better with the lexico-semantic processing found to be associated with the N400 component (Sheehan, Namy, & Mills, 2007; van Elk, van Schie, & Bekkering, 2010). Equivalent N400 amplitudes were found for words and acronyms in a subsequent study (Laszlo & Federmeier, 2008) in which the N400 sentence anomaly paradigm was used. The authors concluded that this pattern of results is not reconcilable with the dual-route model.

Acronyms have also played an important part in the investigation of the word superiority effect (WSE). Gibson, Bishop, Schiff, and Smith (1964), for example, investigated the relative contribution that meaningfulness and pronounceability had in the WSE. They devised two experimental conditions: one formed by meaningful but unpronounceable trigrams (these were all acronyms), and the other by meaningless but pronounceable trigrams (these were all pseudowords). They showed an advantage for acronyms in word recognition memory and recall, suggesting that meaning, rather than pronounceability, had a more powerful influence in these processes. Similar results were reported by Henderson (1974), who also manipulated meaning and pronounceability using acronyms and pseudowords. He found that participants were faster at judging pairs of items as being the same (e.g., FBI–FBI; BLI–BLI) or different (e.g., FBI–IMB; BLI–LSF) if a meaningful item or acronym was in the pair. A number of later studies have replicated the influence of meaning in the WSE, using acronyms in their experimental sets (Laszlo & Federmeier, 2007a; Noice & Hock, 1987; Staller & Lappin, 1981).

In sum, acronyms have been an integral part of experimental manipulations in a number of studies of word recognition and reading (Gibson et al., 1964; Henderson, 1974). The main reason for the use of acronyms has been their unusual combination of meaning and pronunciation, especially because the latter does not obey the standard spelling-to-sound correspondences of the language in use. The orthographic irregularity of acronyms, thus, has been paired with that of illegal letter strings, while their meaning and familiarity have been considered as equivalent to that of other words in the language. Although their meaning and peculiar pronounceability are indeed acronym characteristics, these might have been overemphasized to the detriment of other factors also known to be relevant in oral reading and word recognition processes. First, for example, not all acronyms comprise only consonants or all vowels, and those that do can be read by the application of a particular rule (i.e., letter naming). This rule might make acronyms somehow “regular” and different from other illegal letter strings. Second, acronyms tend to be items that are acquired during adulthood, and there is abundant evidence showing that late learned words are processed slower than early acquired words (for reviews, see Johnston & Barry, 2006; Juhasz, 2005). Third, acronyms are related to a more restricted number of familiar meanings than are conventional words, and words with few meanings tend to be processed more slowly than words with many meanings (Azuma & Van Orden, 1997; Ferraro & Hansen, 2002; Hino & Lupker, 1996; Klepousniotou & Baum, 2007). Another important difference is that orthographic and phonological length is often uncorrelated in acronyms. In contrast to conventional words, an acronym can often be orthographically short but phonologically long (e.g., HIV has only three letters but five sounds, ai-ch-eye-v-ee ). Finally, the number of orthographic neighbors associated to acronyms is generally much lower than those found in standard words. Orthographic neighborhood refers to all the words that can be formed by changing one letter from a target word while keeping constant the rest. Evidence shows that words with few orthographic neighbors take longer to be recognized (Alameda & Cuetos, 2000; Andrews, 1992; Perea, Acha, & Fraga, 2008; Whitney & Lavidor, 2005). All these properties (e.g., a late age of acquisition, short letter length, low number of meanings, etc.) make acronyms a very idiosyncratic material, possibly more than ever thought. More important, sets of acronyms and familiar words merely matched in letter length might not be easily comparable, and results from previous studies (Laszlo & Federmeier, 2007a, 2007b, 2008) could have been confounded with a number of uncontrolled variables.

Here, the authors present an investigation of 146 acronyms in relation to their orthographic illegality, peculiar pronunciation and six other lexico-semantic characteristics. Acronyms have generally been viewed as some kind of irregular word or even as a sort of “nonword with meaning.” However, the question of whether acronyms are processed as irregular words has never been tested. In order to address this question, the authors contrasted acronym-naming times against a number of lexical and semantic factors known to be relevant when mainstream words are read and manifestly overlooked in previous studies involving acronyms. The study is important since acronyms appear to be an effective material in the investigation of word recognition and reading aloud. Interestingly, in most word recognition and naming studies in which no acronyms but conventional words are used, a careful selection of the material is carried out to ensure that only the factor under investigation varies, while intercorrelated variables are controlled for. Normative data have proven useful in these studies of word recognition and production, yet there is a complete absence of norms for acronyms. This is in spite of the fact that acronyms are not only useful material to facilitate the experimental manipulations in word-processing research, but also a topic of scientific inquiry. Thus, a number of studies (Besner, Davelaar, Alcott, & Parry, 1984; Coltheart, 1978) have been concerned with the lexicality of acronyms, and attempts have been made to clarify whether acronyms enjoy the cognitive status of a word or a nonword. In the latest of these studies, Brysbaert, Speybroeck, and Vanderelst (2009) found that acronyms produced an associative priming effect equivalent to that generated by conventional words, and importantly, this effect was independent of case presentation. Brysbaert et al. (2009) concluded that acronyms are lexicalized items integrated in our mental lexicon.

In recognition of the growing interest of acronyms in psycholinguistic research and the imperative need of normative data for this type of stimuli, the authors present here an investigation of the lexico-semantic properties of 146 acronyms and their relationship with acronym-naming speed. The present norms will provide researchers with an inclusive database to enable appropriate experimental control in future research. The factors considered were age of acquisition (AoA), bigram frequency, trigram frequency, imageability, number of orthographic neighbors, number of letters, number of phonemes, number of syllables, acronyms’ print-to-pronunciation pattern, word frequency, word familiarity, and voicing. These norms will benefit research in acronyms and word reading in healthy and clinical populations. The authors start by describing the acronym characteristics considered in the present study in alphabetic order. Then the data collection for the norms and the acronym study are presented.

A secondary aim is to investigate the nature of acronym reading by inspecting how they are influenced by the factors included in the norms. The fact that acronyms are orthographically illegal does not necessarily mean that they are processed as irregular words. A major proportion of acronyms are pronounced by naming each constituent letter aloud, which endows acronyms with some kind of regularity that is a long way away from the sporadic grapheme-to-phoneme correspondences characteristic of irregular words. The potential regularity or irregularity of acronyms will be tested by contrasting the impact that a series of factors has on acronym naming and recognition speed and accuracy. Thus, for example, reduced or no AoA effects have been found when regular words are named. Robust AoA effects in acronym reading will indicate similarities between those processes governing acronym naming and irregular word naming. The factors under investigation, along with their specific predictions, are described below.

Acronym characteristics: What can they tell us?

The selection of acronym properties included was guided by those factors that have been shown to affect single-word processing (e.g., reading words aloud, distinguishing real words from invented words, or naming objects). Main findings related to each of the variables selected are briefly reviewed next, along with explicit hypotheses regarding their influence in acronym-naming times and accuracy. The selected variables are presented in alphabetic order.

Age of acquisition

AoA refers to the moment in time in which words, objects, and faces are first learned. Differences in order of learning or AoA have been shown to affect processing times, accuracy, amplitude of ERP components, eye fixation durations, and spatially distinctive brain regions (Cuetos, Barbón, Urrutia, & Dominguez, 2009; Ellis, Burani, Izura, Bromiley, & Venneri, 2006; Gilhooly & Logie, 1982; Juhasz & Rayner, 2006; Morrison & Ellis, 1995, 2000; Pérez, 2007; Weekes, Chan, & Tan, 2008). Evidence shows that early acquired material has an advantage over late acquired material in terms of processing time, accuracy, and resistance to brain damage (see reviews in Johnston & Barry, 2006; Juhasz, 2005).

Ratings have been the most common way of measuring AoA. Here, participants are asked to estimate, on 7-point or 9-point scales, the age at which they believe they learned a list of words. Although these estimations might seem too subjective, they have been shown to correlate highly with objective AoA values (Carroll & White, 1973; Gilhooly & Gilhooly, 1980; Pérez, 2007).

The relevance of the AoA effect in cognitive processes lies in the wide range of tasks, languages, and population samples influenced by it. Thus, AoA effects have been reported in lexical decision, word and object naming, word associate generation, semantic categorization, object and face recognition, written word production, and repetition priming (Barry, Johnston, & Wood, 2006; Bonin, 2005; Brysbaert, Van Wijnendaele, & De Deyne, 2000; Catling, Dent, & Williamson, 2008; Gerhand & Barry, 1999; Holmes, Fitch, & Ellis, 2006; J. Monaghan & Ellis, 2002; Richards & Ellis, 2008). Also, evidence shows that AoA influences performance of healthy and brain-damaged participants, bilingual speakers, and monolingual speakers of a variety of languages such as English, Chinese, Dutch, French, Icelandic, Italian, Spanish, and Turkish, among others (Alija & Cuetos, 2006; Bonin, Barry, Meot, & Chalard, 2004; Izura & Ellis, 2002; Liu, Hao, Shu, Tan, & Weekes, 2008; Menenti & Burani, 2007; Pind & Tryggvadottir, 2002; Raman, 2006).

The arbitrary mappings hypothesis is one of the current explanations for the AoA effect. According to this hypothesis, AoA is the result of arbitrary connections created between two representations in the learning process. Object naming is a good example of this type of unpredictable links, because there is no information in the shape or intrinsic meaning of the object that could possible predict its name. Conversely, when the mapping established between representations is consistent, AoA effects would not be noticeable, since late acquired material will benefit from the regularities extracted from the early acquired material. Research carried out on object and word naming supports the arbitrary mappings hypothesis, showing larger AoA effects in object than word naming, since the nature of the connections between orthography and phonology is more or less consistent in alphabetic languages (Brysbaert & Ghyselinck, 2006; Ghyselinck, Lewis, & Brysbaert, 2004).

The arbitrary mappings account for AoA effects allows the investigation of the assumed irregularity of acronyms. Thus, if acronym processing is similar to that of irregular words, AoA effects will be observed in acronym-naming times. However, if letter naming can be taken as a rule that confers acronyms with some kind of regularity, no AoA effects will be observable.

Bigram and trigram frequency

Bigram and trigram frequencies refer to the frequency at which a pair of letters or sets of three letters appear together in written words of any given length. Thus, from a word formed from n letters, n−1 bigrams and n−2 trigrams can be formed. Bigram and trigram frequencies are sublexical measures of what is known as orthographic redundancy or orthographic familiarity (Andrews, 1992; Graves, Desai, Humphries, Seidenberg, & Binder, 2010).

Anisfeld (1964) proposed bigram and trigram frequencies as an alternative explanation to the consistency effects found in word processing. He argued that it could be that consistent words are processed more efficiently not because of their “consistent pronounceability” but because they are formed by letters with higher bigram and trigram frequencies than are inconsistent words.

Bigram frequency has been reported to affect tasks involving word recognition (Conrad, Carreiras, Tamm, & Jacobs, 2009; Owsowitz, 1953; Rice & Robinson, 1975; Westbury & Buchanan, 2002). The effect of bigram frequency in these studies was such that words with low bigram frequencies facilitated recognition, whereas words formed by letters with high bigram frequencies were somehow slowed down.

As a consequence of the reported significance of bigram frequency in word recognition, many researchers in word naming have considered orthographic familiarity (bigram and/or trigram frequencies) as a relevant factor to have under control. However, the few studies that have investigated the influence of bigram frequency in word naming have reported no effects (Andrews, 1992; Bowey, 1990; Strain & Herdman, 1999).

Available evidence indicates a general absence of bigram and/or trigram frequency effects in standard word naming, but effects have been reported in word recognition. If acronym processing is similar to the processing of any other word in the language, bigram or trigram frequency effects are not predicted in acronym-naming speed.

Imageability

Imageability refers to the ease with which a word evokes a mental image (Paivio, Yuille, & Madigan, 1968). The lexical relevance of imageability emerged in the 1960s as an interpretation of the superiority of concrete over abstract nouns. This was supported by the fact that concrete words were rated as more imageable than abstract words (Paivio, 1965). Subsequent research has shown that highly imageable words are better recognized and memorised than low imageable words in tasks of lexical decision and cued and free recall (Balota, Cortese, Sergent-Marshall, Spieler, & Yap, 2004; Kennet, McGuire, Willis, & Schaie, 2000; Paivio 1965). The dual-code hypothesis (Paivio, 1971, 1991) accounts for the imageability effect, arguing that abstract words activate verbal codes, while concrete words activate verbal and imagery codes. The hypothesis states that the assistance of the imagery system facilitates the processing of concrete words.

A number of studies have also shown that high-imageable words are consistently better named by patients with a phonological impairment but some preservation of their reading ability (Hirsh & Ellis, 1994; Tree, Perfect, Hirsh, & Copstick, 2001; Weekes & Raman, 2008). Patients with better accuracy at naming abstract words also occur, although these cases have been reported less frequently (Papagno, Capasso, Zerboni, & Miceli, 2007; Reilly, Grossman, & McCawley, 2006). The influence of imageability in unimpaired oral reading, however, is uncertain. Strain, Patterson, and Seidenberg (1995) argued that the imageability influence shown in patients implies a relationship with reading. In Experiments 2 and 3, they found significant interactions between imageability and consistency for low-frequency words. This meant that significant longer times were required to read low-imageability and inconsistent words. In their view, translation from orthography to phonology is fast and efficient for words with regular/consistent spelling patterns (regardless of their frequency or imageability values) because orthography-to-phonology correspondences are assisted by the regular/consistent connections established by high-frequency words. However, low-frequency inconsistent words (e.g., dread, mischief) generate slow naming times, because neither the regularity of the word nor its frequency can aid in their pronunciation. As a consequence, the intervention of semantic information facilitates the reading processes of those inconsistent and low-frequency words with richer semantic representations or high imageability.

However, other studies (Gerhand, 1998; J. Monaghan & Ellis, 2002) have failed to observe imageability effects in word naming once AoA has been taken into account (J. Monaghan & Ellis, 2002).

Most acronyms can be considered inconsistent, and often they are also low frequency. Thus, imageability effects should be observable when acronyms are read and recognized, assuming that semantic intervention is necessary at the time of word/acronym recognition and low-frequency and inconsistent word reading.

Number of orthographic neighbors or neighborhood size (N)

The role of lexical similarity in the process of word recognition and naming has been the subject of extensive investigation. One of the fundamental questions under examination is how the system distinguishes the word to be recognized (e.g., word) from a set of similar candidates (e.g., ward, wore, warm, war). One way in which the lexical similarity of a word has been operationalized is counting the number of words formed by changing one letter from the given word while keeping constant the position and identity of the rest of the letters (Coltheart, Davelaar, Jonasson, & Besner, 1977). For example, the word peace produces four neighbors: peach, pence, pease , and place. It is often referred to as N, and it is the more commonly used measure in studies of lexical similarity. A common finding in word naming is that words with high N are named faster than words with low N (Andrews, 1989, 1992; Mathey, 2001; Sears, Hino, & Lupker, 1999).

A further concern, of relevance to the present study, relates to the locus from which the N-effect emerges. Andrews (1989) proposed an early origin, suggesting that the N-effect is a product of the interaction between letter and lexical units (neighbor words receive and feedback activation from and to their constituent letters, increasing the activation of the target letters and accelerating in this way the recognition of the correct word).

The word’s orthographic body is a structural characteristic of words that correlates with word rhyme, and N and has led to the suggestion of a late locus for the N-effect. In English, a great proportion of neighbors result from changing the first letter of the word. As a consequence, high-N words tend to share their orthographic body, and in addition, this orthographic body usually rhymes. This relationship between N, orthography, and phonology introduces the possibility that N-effects might be the consequence of phonological, rather than orthographic, computation. Adelman and Brown (2007) tested this hypothesis by analyzing the results from four existing megastudies of word recognition in English (Balota et al., 2000; Balota & Spieler, 1998; Spieler & Balota, 1997; Seidenberg & Waters, 1989). They conducted a series of regression analyses in which they included phonographic neighborhood, which refers to the number of words formed by changing one letter and phoneme from a given word, as a predictor variable. Other variables included in their analysis were word frequency, orthographic neighborhood size, first phoneme, number of letters, word regularity, number of friends, number of enemies, and rime consistency ratio. The results showed a significant facilitation of number of phonographic neighbors over and above the effects of regularity and rime consistency. Number of orthographic neighbors did not reliably predict reaction times in any of the four sets analyzed (apart from a small impact in the Seidenberg and Waters’s, 1989, data). Adelman and Brown (2007) concluded that neighborhood effects cannot be accounted for by orthographic processing only; instead, the conversion of print to sound is the more likely source of the effect.

In relation to acronym naming, N-effects are predicted only if they emerge from the early processing of their constituent letters. In contrast, if the N-effects derive from phonological similarity or from the interaction between orthography and phonology, the impact of N in acronym naming would be reduced or absent, since for most acronyms, the translation from letters into sounds will not correspond to that of its neighboring words in terms of single phonemes or rhyme units (e.g., as in EEG, leg, peg, beg, egg).

Orthographical and phonological length

Word length measured in terms of its orthographical (number of letters) or its phonological (number of syllables or phonemes) aspects shows a positive correlation with word-naming and recognition times (Balota et al., 2004; Hudson & Bergman, 1985). Phonological and orthographic measures of word length are also strongly intercorrelated in mainstream words, since increasing the number of syllables or phonemes inevitably increases the number of letters. Slower reaction times for words with many letters are a common finding in oral reading (Balota et al., 2004; Forster & Chambers, 1973; Frederiksen & Kroll, 1976; Spieler & Balota, 1997; Ziegler, Perry, Jacobs, & Braun, 2001). In addition, Balota et al. (2004) also observed an interaction between letter length and word frequency, with a greater influence of letter length over low-frequency words. However, null effects of letter length when skilled readers name words have also been reported (Bijeljac-Babic, Millogo, Farioli, & Grainger, 2004; Weekes, 1997).

A number of studies have also shown an influence of the number of syllables in oral reading times and accuracy. Number of syllables, like number of letters, also interacts with word frequency, with more pronounced length effects reported for multisyllabic low-frequency words (Ferrand, 2000; Jared & Seidenberg, 1990). Theoretically, length effects have been conceptualized as indicators of serial processing. Taking the dual-route model as the theoretical framework, the reported interaction between word length and frequency could be explained as the result of the rapid, parallel processing of high-frequency words via the lexical pathway (irrespective of word length) but the slow processing of low-frequency words by the same lexical route. The slowness in the lexical processing of low-frequency words makes the activity of the sublexical route more apparent, showing facilitation when short words are processed (Balota et al., 2004; Coltheart et al., 2001).

Number of letters and syllables were calculated for the acronyms included in the present study. The correlation between these variables was predicted to be low since, often, acronyms are short in number of letters but long in number of syllables (e.g., BBC, DVD, etc.). The disparity between letter and syllable length would help to reveal the relative contribution of orthographic and phonological length in acronym reading. In addition, since many acronyms are pronounced by naming each of the constituent letters aloud, a linear length effect was intuitively predicted in acronym-naming times.

Print-to-pronunciation patterns: Typicality and ambiguity

The spelling system of modern English is the result of a complex and rich language history that has produced a distinctive way of translating letters into sounds. The classification of the spelling regularities and, therefore, also inconsistencies, along with the examination of their influence on reading, has been profusely studied (Coltheart et al., 2001; Rastle & Coltheart, 1999; P. Monaghan & Ellis, 2010; Strain, Patterson, & Seidenberg, 2002; Zorzi, Houghton, & Butterworth, 1998). The difficulty of this enterprise is reflected in the fact that establishing the best classification method still is a bone of contention.

Venezky (1970) was one of the first to study the letter-to-sound patterns in English. He grouped the written representation of sounds into graphemes (letter or combination of letters equivalent to one sound) and established two types of grapheme-to-phoneme correspondences: major for those occurring with higher frequency and minor for those occurring with lower frequency. As an illustrative example of Venezky’s taxonomy, the pronunciation of ea as in seal was described as a major correspondence, while the pronunciations for ea in steak or bread were minor correspondences. Adhering to Venezky’s classification, Coltheart (1978) proposed a ruled-based mechanism for coding phonological information, known as the grapheme-to-phoneme correspondences (GPC) system. The application of the rules governing major correspondences, or the GPC system, allows the correct pronunciation of all the English regular words. However, a different but parallel lexical mechanism is required to allow for correct pronunciation of irregular words (those whose graphemes are converted to phonemic correspondences not embedded in the GPC system). The lexical and sublexical GPC mechanisms (also referred as routes) will produce the correct pronunciation for all regular words and nonwords. However, these two routes generate conflicting pronunciations for irregular words. The resolution of the conflict takes time, and this slows down responses. A common finding supporting the existence of these two routes for reading is that regular words are processed faster and more accurately than irregular words (Baron & Strawson, 1976; Gough & Cosky, 1977; Parkin, 1982; Stanovich & Bauer, 1978; Waters & Seidenberg, 1985).

An alternative word-reading account is based on the amount of features shared by the words in the vocabulary. Glushko (1979) showed that the pronunciation of a nonword could be achieved through a mechanism based on features shared with known words. According to Glushko, the most important characteristic when letters are translated into sounds is the consistency of the pronunciation of words with similar spelling. For example, the word body ade, as in wade, is pronounced in the same way in all similarly spelled words (e.g., bade and fade) and is, hence, described as consistent. In contrast, save is pronounced differently from have and is, therefore, an example of an inconsistent word. In Experiments 1 and 2, Glushko demonstrated that pseudowords created from words with irregular pronunciations (such as heaf from the irregular word deaf) were named slower than pseudowords based on words with regular spelling-to-sound correspondences (e.g., hean from dean). Glushko argued that the longer production latency for heaf over hean was the result of the eaf ending stemming from a group of exception words (e.g., deaf, leaf).

Glushko’s Experiment 3 indicated that words with regular grapheme–phoneme correspondences but inconsistent word bodies were named slower than regular words with consistent word bodies. Glushko argued that consistent words are named faster because the activation of neighboring nodes facilitates their processing. Cortese and Simpson (2000) and Jared (2002) also varied GPC regularity and word body consistency orthogonally in tests of word naming. Both studies indicated that consistency had an impact on production latency over and above any effects of regularity, as well as on the number of errors made by participants. These findings support the position that a hard and fast rule system might be insufficient for the conversion of words from print to sound. A rule system such as the grapheme–phoneme correspondences can only split words into two halves—those that follow the rules and those that violate them.

The problem of how the cognitive system deals with the translation of letters into sounds in English is complex and open to debate. Pronunciation of acronyms, however, might be less limited by the idiosyncrasies of the English language than are mainstream words. Neither of the two classification systems reviewed can be employed satisfactorily with acronyms. This is because the majority of the acronyms would be classified as inconsistent (e.g., in EEG, the word body -eg is common to leg, beg, and Meg, but the pronunciation is very different) and irregular (the application of GPC rules to acronym reading would produce either incorrect or impossible responses (e.g., HIV and BBC, respectively). However, most acronyms would be pronounced correctly by applying a simple rule: naming its letters.

Two features have been taken into account at the time of classifying the pronunciation of acronyms: pronunciation typicality and ambiguity. Acronyms named by spelling aloud each of their letters (e.g., DVD) have been classified as typically pronounced acronyms, while acronyms named following the spelling-to-sound correspondences of the language (e.g., DOS) have been classified as atypically pronounced acronyms. In addition, acronyms formed entirely by consonants or vowels (e.g., CNN, AOA) have an unambiguous pronunciation, naming each of its letters aloud, and have been considered as unambiguous. Acronyms containing a mixture of consonants and vowels have the potential of a “word-like” pronunciation (e.g., SARS, ROM).

However, this pronunciation potential is not always fulfilled (e.g., HIV, ISP), and that is why these acronyms have been classified as ambiguous. The combination of these features, pronunciation typicality and pronunciation ambiguity, provides three different types of acronym pronunciations: (1) ambiguous and typical (e.g., HIV), (2) ambiguous and atypical (e.g., ROM), and (3) typical and unambiguous (DVD). The definition of unambiguous pronunciation prevents the existence of atypical and unambiguous acronyms.

Word frequency and word familiarity

Word frequency refers to the number of times an individual encounters or uses a particular word. The intuition that frequency of occurrence could have an influence in word processing was first supported by Howes and Solomon’s (1951) findings, and its importance in word processing has been extensively demonstrated ever since. High-frequency words are recognized, produced, and recalled faster and with greater accuracy than low-frequency words (Connine, Mullinex, Shernoff, & Yelen, 1990; Oldfield & Wingfield, 1965; Whaley, 1978; Yonelinas, 2002).

Two main procedures have been employed to measure word frequency: statistical and rated estimations. Statistical valuations of frequency derived from corpora of written language have been commonly considered the objective measure of frequency. However, it has been observed that frequency norms generated from corpus of printed frequency might not be truly representative of the language in use (Brysbaert & New, 2009; Gernsbacher, 1984). This is because written language is edited, more diverse than spoken language, and fixed to the linguistic style of its time. Other sources of criticism come from the sample bias associated to statistical estimations. This bias is more pronounced in small corpuses where low-frequency words, in particular, lose discriminatory power (Burgress & Livesay, 1998; Zevin & Seidenberg, 2002). Brysbaert and New conducted a study looking at traditional and more contemporary frequency norms. They found that the bias for low-frequency words represents a concern only on corpuses sized below 16 million words. Brysbaert and New compared the predictive power of word frequency as obtained from six different frequency norms on word recognition times (as available from Balota et al., 2004). They showed that norms available from Internet discussion groups (Hyperspace Analogue to Language (HAL); Lund & Burgress, 1996) and subtitles (SUBTLEXus; Brysbaert & New, 2009) showed the highest correlations with word-processing variables.

The biases found in word frequency counts have prompted some researchers to study word recognition processes using frequency ratings (often in addition to written frequency measures: Balota et al., 2004; Connine et al., 1990; Gernsbacher, 1984). In order to obtain frequency ratings, participants are asked to estimate how many times they encounter and/or use a particular word. This measure of frequency is normally considered to be subjective and is often used interchangeably with the concept of word familiarity. In this study, a rated estimation of the subjective frequency/familiarity of a list of acronyms is presented along with a printed frequency measure for each acronym. Frequency corpuses tend to underrate the frequency of acronyms because they either avoid the inclusion of abbreviations (Zeno, Ivens, Millard, & Duvvuri, 1995) or are based on language samples where acronyms are scarcely represented (e.g. from subtitles SUBTLEXus). For this reason, acronyms’ printed frequency was calculated using three Internet search engines (www.altavista.com; www.google.co.uk; www.bing.com), as suggested by Blair, Urland, and Ma’s (2002) method. That is, each acronym was entered into the search function, and the number of hits returned was recorded as the measure of the acronym frequency. The validity of this method was provided by Blair et al. They compared frequency estimations based on two commonly used corpuses (i.e., the Kučera & Francis [1967] corpus and the Celex database [Baayen, Piepenbrock, & Gulikers, 1995]) with frequency calculations based on the number of hits returned by four Internet search engines (i.e., Alta Vista, Northern Light, Excite, and Yahoo). Frequencies from the search engines were collected at two points in time, with an interval of 6 months between them. Results showed high correlations between the frequency values provided by corpuses of written text and those generated by the search engines (e.g., Alta Vista frequencies correlated .81 with Kučera and Francis and .76 with Celex [Baayen et al., 1995]) and high test–retest reliabilities (r = .92). These correlations were based in a word sample of 382 words.

In the present study three different search engines were used in order to provide an indication of reliability. In addition, a rated estimation of each acronym subjective frequency/familiarity was also collected.

The importance researchers have assigned to word frequency is reflected in the fact that most models of word processing and word learning have incorporated word frequency in their operating architectures (Coltheart, 2001; Harm & Seidenberg, 2004; P. Monaghan & Ellis, 2010). Frequency effects in word naming tend to interact with word regularity and/or consistency (Ellis & Monaghan, 2002; Jared & Seidenberg, 1990; J. Monaghan & Ellis, 2002; Weekes, Castles, & Davies, 2006). This means that reading times are particularly slow and inaccurate for low-frequency inconsistent and/or irregular words. Considering the orthographic inconsistency/irregularity of acronyms and assuming that acronym naming exploits the same reading system as that used when mainstream words are named, large frequency effects are predicted in acronym-naming times and accuracy.

Word’s initial sound

A number of studies have shown that the acoustic characteristics of the word’s first phoneme influence the accuracy of voice key measurements. This is because voice keys are not reliable at detecting the acoustic onset of a word (Rastle & Davis, 2002). Rastle and Davis investigated the effects of onset complexity on reading times as captured by two different types of voice keys. The simple threshold voice key recorded the moment at which an amplitude value exceeded a predetermined threshold, and the integrative voice key was sensitive to the amplitude and, also, to the duration of the signal. Onset complexity had two levels that were operationalized as (1) words with two-phoneme onsets (e.g., /s/ followed by /p/ or /t/, as in spat or step) and (2) words with just one phoneme onset (e.g., /s/ as in sat). Results showed that the simple threshold voice key was triggered at the onset of voicing, which did not coincide with the real word’s onset, since all the words used started with the voiceless phoneme /s/.

In order to address voice key issues, some studies of word naming enter the characteristics of the initial phoneme of the words into their regression analyses. The procedure requires the transformation of each phonetic feature into a dummy variable that is then considered in the analyses (Balota et al., 2004; Treiman, Mullennix, Bijeljac-Babic, & Richmond-Welty, 1995). However, taking into account the phonetic features of the first phoneme of a word might not be enough, since voice key biases have been reported to emerge not only from the initial phoneme, but also from other consonants and vowels in the acoustic onset (Kessler, Treiman, & Mullennix, 2002; Rastle & Davis, 2002). Taking initial phoneme features plus complex consonant onsets into account requires adding an important number of variables (i.e., from 10 onward). These added variables do not pose a problem in multiple regression analyses comprising large number of stimuli (e.g., 2,428 words in Balota et al. [2004] and 1,329 words in Treiman et al. [1995]). However, ten or more new variables could be an excessive addition of factors in studies with a relatively small number of different stimuli.

In the present study, the aim was to investigate the characteristics of 146 acronyms. In order to keep a reasonable ratio of predictors and observations and in light of the results reported by Rastle and Davis (2002), the present study considered one of the phonetic characteristics of the acoustic onset: voicing. Thus, the sonority associated to the first phoneme of the acronyms (voiced or voiceless) is provided.

Norms

Method

Participants

One hundred twenty English native speakers, 34 males and 86 females, participated in the compilation of these norms. Each of the factors to be estimated—rated frequency, imageability, and AoA–was rated by a set of 40 participants. Participants were volunteers from Swansea University with a mean age of 24 years (range, 18–37). They all had normal or corrected-to-normal vision. None participated in the estimation of more than one factor, and all received course credit for their participation.

Materials

A total of 269 acronyms were initially selected from the Oxford English Dictionary (2009) and from the Acronyms, Initialisms and Abbreviations Dictionary (Mossman, 1994). Acronyms were gathered if they were intuitively thought to be relatively familiar, and an effort was made to select acronyms from a diversity of domains, such as science, technology, business, industry, jargon, medicine, etc. The set of 269 acronyms originally chosen was randomized. The randomized list was subsequently split into two questionnaires of approximately equal lengths (131 and 138) for administration to participants. A randomised set of 20 acronyms were present in both lists to allow an assessment of reliability. This procedure increased the sizes of the lists to be rated to 141 and 148 acronyms each. Twenty acronyms were printed per page, in the same randomized order for the estimation of rated frequency or word familiarity, AoA, and imageability.

Care was taken to make sure that the selected acronym definitions (from Oxford English Dictionary and the Acronyms, Initialisms and Abbreviations Dictionary) corresponded to the more dominant meaning available to the participants tested in the present study. In order to accomplish this, a word association task was devised. Twenty participants (3 male, 17 female), none of whom had participated in any other acronym-related task and with a mean age of 21 years (SD = 1.997), were presented with each of the 269 acronyms using E-Prime (Schneider, Eschman, & Zuccolotto, 2002). They were instructed to say aloud the first thing that came to their mind in response to the acronym presented onscreen. A microphone placed approximately 10 cm away from the participant detected his/her vocal response. Then, the participant could type the word he/she had just said. Participant responses were then placed into five broad categories: semantic, orthographic, phonological, compound, and erratic. Semantic responses included those referring to the full term for the acronym, as well as semantic-related information (e.g., BBC–television). In order to establish the dominance of the acronym definition, only the semantic associations were taken into account. The full term listed here is the sense of the acronym that elicited the majority of semantic association responses.

The present database comprises 146 out of the original 269 acronyms. One hundred sixteen acronyms were excluded because they were reported to be unknown by more than 50% of the participants who completed the AoA questionnaire. A further 7 acronyms were deleted because they were unknown to more than 50% of the participants who completed the association task.

Acronyms were not included if they consisted of fewer than three letters (BA), contained lowercase letters (kJ), used numerical characters (4WD), or formed a mainstream word (AIDS).

Database variables

The list of 146 acronyms is presented in the Appendix, along with their definitions, the percentage of participants who gave an associated response semantically related to the definition provided, and their values for AoA, bigram and trigram frequencies, imageability, number of orthographic neighbors, number of letters, syllables, and phonemes, print-to-pronunciation patterns, rated frequency, printed frequencies, and voicing.

Procedure

Age of acquisition

The 141 and 148 acronym lists were presented to two groups of 20 participants (8 male, 32 female; mean age = 25 years, SD = 1.86Footnote 1), who were asked to estimate when they first had learned each of the acronyms in the lists by writing down the estimated age in a box located beside each acronym. This method has been used successfully in the past (Ghyselinck, De Moor, & Brysbaert, 2000; Izura, Hernandez-Muñoz, & Ellis, 2005). The method has greater flexibility to provide late age ranges, and this was thought particularly useful for generating AoA values of a material that might be learned relatively late. One hundred acronyms were presented per page in five equal columns. The estimated reliability (Cronbach’s alpha) for the group was .93. Since the ratio of male and female participants was considerably different, the average ratings for male and female were submitted to a t-test analysis. No significant differences were found, t(139) = −1.27, p > .1.

Bigram and trigram frequency

Bigram and trigram frequency values were obtained from the MCWord, an orthographic word form database (Medler & Binder, 2005). The unrestricted bigram and frequency values were used here. This measure simply counts the number of times that any bigram or trigram appears in the CELEX database (Baayen et al., 1995).

Imageability

Two groups of 20 participants (14 male, 26 female; mean age = 23 years, SD = 1.52) were presented with one of two lists of acronyms and were asked to estimate the imageability of each acronym on a 7-point scale. One list consisted of 141 acronyms, the other listed 148, and each was presented in a randomized order. The instructions and scale, adapted from Paivio et al. (1968) required participants to indicate the ease with which each of the acronyms evoked a mental image. Numbers in the scale were labeled to inform participants of the different degrees of image-evoking difficulty. These ranged from 1 (image aroused after long delay/not at all) to 7 (image aroused immediately). Twenty acronyms were presented per page. Twenty acronyms were included for rating by both of the groups of participants, and these ratings were correlated to assess interrater reliability. The internal reliability for the group, using Cronbach’s alpha, was .94. Since the ratio of male and female participants was different, the average ratings for male and female ratings were submitted to a t-test analysis. Ratings were significantly different, t(139) = 5.17, p < .001, with the females’ ratings being higher in imageability than the males’ ratings.

Number of orthographic neighbors

The number of orthographic neighbors was calculated by counting the number of words that differed in one letter with the target acronym while preserving the identity and position of the rest of the letters in the acronym. The calculation was based on the words listed in the CELEX database (Baayen et al., 1995). Where a word generated in this way was listed in the database more than once (e.g., as a verb and a noun), this was counted as only one neighbor.

Orthographic and phonological length

The length of each acronym was considered in terms of number of letters, number of syllables, and number of phonemes.

Printed frequency

Printed frequency estimates were generated following the procedure used by Blair et al. (2002). The number of hits returned by the Internet search engines (Google, Bing, and AltaVista) were computed as indexes of word frequency. All were advance searches restricted to the English language. The value presented here is the log transformation of the number of hits returned for each acronym.

Rated frequency/word familiarity

The two randomized lists of acronyms (141 and 148 items long, respectively) were each presented to a group of 20 participants for frequency rating (10 male, 30 female; mean age = 25 years, SD = 2.04). Each page consisted of 20 acronyms to be rated on how frequently they were used or encountered. Ratings were made using a 7-point Likert scale ranging from 1 (rarely/never) through to 7 (more than twice daily). Each page was headed with the same instructions detailing that responses were to be made by circling the appropriate number and that the full range of the scale could be used if it was felt appropriate. One page of acronyms was presented as part of both versions of the questionnaire. Interrater reliability (Cronbach’s alpha) was .91. Since the ratio of male and female participants was different, the average ratings for male and female ratings were submitted to a t-test analysis. No significant differences were found, t(139) = −.698, p > .1.

Results and discussion

The ratings collected were collapsed across lists for AoA, frequency, and imageability estimations. Descriptive statistics for each of the continuous variables considered in this study are shown in Table 1. The variable related to the voicing of the acronym’s initial sound was dichotomized in voiced (n = 116) or voiceless (n = 30) and was considered, therefore, as a categorical variable. Similarly, three additional categorical variables were created to account for the acronym print-to-pronunciation pattern. These were unambiguous pronunciation (n = 85), ambiguous but typically pronounced acronyms (n = 48), and ambiguous and atypically pronounced acronym (n = 13).

Acronyms and all the normative values are presented alphabetically in the Appendix. The correlation matrix for all the continuous variables considered in this study is shown in Table 2. To ensure that the significance of the correlations reported was meaningful and valid, data were appropriately transformed to deal with skewed distributions. Thus, a logarithm transformation was applied to the printed frequency values obtained from the Google, Bing, and AltaVista search engines and also to rated frequency, number of syllables, number of phonemes, number of letters, and imageability. One unit was added before the logarithm transformation was applied to number of orthographic neighbors, bigram frequency, and trigram frequency. AoA ratings were normally distributed.

Some of the correlations in Table 2 are of particular importance. Interestingly, the number of letters shows a negative correlation with the number of syllables and the number of phonemes. Thus, shorter acronyms require more syllables and phonemes when pronounced (e.g., naming each letter aloud). It is also worth noting that the three acronym printed frequencies (from Google, Bing, and AltaVista) correlate significantly with rated frequency and are also highly intercorrelated, indicating a high level of reliability. However, they do not show the same pattern of correlations with the number of syllables, the number of orthographic neighbors, and imageability. All three printed frequencies correlate positively with the number of letters and negatively with the number of phonemes, meaning that high-frequency acronyms tend to have more letters but fewer phonemes. In addition, and in contrast to what is normally found with mainstream words, none of the printed frequencies showed a significant correlation with AoA. This lack of correlation is unusual in studies using common words (see Zevin & Seidenberg, 2002, 2004). This atypical relationship might reflect the fact that a number of newly introduced acronyms refer to technological devices, programs, organizations, and so forth that are becoming part of everyday live and language (e.g., DVD, GPS). The recent introduction of some of these acronyms means that they are learned late in life, despite their high frequency of appearance in print. AoA ratings showed significant and negative correlations with imageability and rated frequency, meaning that the later acquired the acronym, the lower its imageability and perceived frequency. These inverse relations of AoA with imageability and rated frequency have typically been found in studies using mainstream words (Morrison, Chappell, & Ellis, 1997; Stadthagen-Gonzalez & Davis, 2006). A linear correlation was found between rated frequencies and AoA ( r = −.18, p < .05; see Table 2), suggesting that the printed frequency estimations used in the present study overrated the perceived frequency of some acronyms—in particular, those at the higher end in the AoA scale. Thus, a number of late acquired acronyms appeared with greater printed than rated frequencies (e.g., PSP [play station personal], TFT [Thin Film Transition], MBA [Masters in Business Administration]).

It is also interesting to note that the number of orthographic neighbors correlates positively with the number of letters but negatively with the number of phonemes. That is, the more letters and fewer phonemes in the acronym, the greater the number of neighbors. This correlation departs from the correlations reported with mainstream words (see Adelman & Brown, 2007; Balota et al., 2004) and indicates that acronyms pronounced following grapheme-to-phoneme correspondences (e.g., those that have a few number of phonemes) tend to have a higher number of orthographic neighbors.

Word-naming experiment

Method

Participants

Twenty students from Swansea University with a mean age of 20 years (range, 18–24 years) participated in this experiment. None of them had collaborated in the collection of acronym associative responses, AoA, imageability, or frequency ratings, and they had not been involved in the completion of the acronym association task. The 15 female and 5 male participants were all native speakers of English, were nondyslexic, and had normal or corrected-to-normal vision. Course credit was offered as a reward for participation.

Procedure

Participants named the 146 acronyms with complete database entries for frequency, AoA, imageability, number of orthographic neighbors, and orthographic and phonological acronym length. Acronyms were presented one at a time in black capital letters on a white screen (19-in. monitor) in size 12, Times New Roman font. Each trial started with a fixation cross that appeared in the middle of the screen for 1,500 ms. Then an acronym appeared in the middle of the screen and remained there until the participant made a response. Participant responses were detected by a highly sensitive microphone (approximately 10 cm away from the participant’s mouth) attached to the computer. Activation of the microphone triggered the presentation of the next fixation cross. Trials were randomized for each participant. This was controlled by E-Prime (version 1.0.1, Psychology Software Tools, 1999) using a Dell computer with an Intel Pentium 4 1.5-GHz processor. The experimenter noted all the errors. In addition, the experimental sessions were audio recorded for further inspection of accuracy in the data. Following the completion of the naming task, participants were given a list with all the acronyms they had been asked to read and were required to indicate next to each acronym whether they knew it or not.

Results

Although the major purpose of this study was not to investigate the influence of acronym knowledge on acronym naming, it was thought interesting to examine participants’ accuracy when naming known and unknown acronyms. Once the acronym-naming task was finished, participants noted the acronyms they knew and those they did not know. The numbers of known and unknown acronyms were used to classify correct and incorrect responses in a two (known, unknown) by three (unambiguous, ambiguous typical, and ambiguous atypical) contingency table. Table 3 shows the percentage of correct and incorrect responses in each of the categories created.

Four Friedman’s ANOVAs were carried out with acronym’s print-to-pronunciation pattern as a between-subjects variable and number of responses as the dependent variable. The four analyses corresponded to the orthogonal manipulation of response accuracy (correct, incorrect) and acronym knowledge (known, unknown). Potential differences between the three types of acronyms (unambiguous, ambiguous typical, and ambiguous atypical) were examined in each of these four Friedman tests. Correct responses to unambiguous, ambiguous typical, and ambiguous atypical acronyms were not significantly different when the acronyms were known to the participants, χ 2(2) = 0.86, p > .1, or when the acronyms were unknown, χ 2(2) = 0.86, p > .1. However, significant differences among the three types of acronyms were detected for incorrect responses to known acronyms, χ 2(2) = 12.88, p < .001. This difference was further inspected using Wilcoxon tests. Bonferroni correction was applied, and, therefore, effects are reported at α/3 (i.e., .0167) level of significance. A significant difference was found between the errors produced when ambiguous typical and ambiguous atypical acronyms were named, T = 0, p < .01, r = −.36. The difference between erroneous responses to unambiguous and ambiguous atypical acronyms known to the participant approached significance, T = 6, p = .025, r = −.23. No significant differences were found between incorrect responses to unambiguous and ambiguous typical acronyms known to the participants. Finally, a main effect of acronym’s type was found for incorrect responses to unknown acronyms, χ 2(2) = 11.47, p < .01. Further inspection of this effect using Wilcoxon tests (Bonferroni correction applied at α/3 level of significance) showed a significant difference between ambiguous typical and ambiguous atypical acronyms, T = 0, p ≤ .016, r = −.29, and between unambiguous and ambiguous atypical acronyms, T = 0, p ≤ .016, r = −.23.

Thus, the results show that more errors occurred when ambiguous and atypical acronyms were read than when any of the other two types of acronyms were read. Interestingly, this higher error rate occurred when the acronym was known and when the acronym was unknown. The specific difficulty encountered by the participants when naming ambiguous atypical acronyms is likely to emerge from the shift in pronunciation patterns, since the orthographic configurations of ambiguous atypical acronyms and ambiguous typical acronyms are thought to be the same.

Reaction time analyses

Participant errors (2.12%), voice key malfunctions (3.94%), and response times that were 2.5 standard deviation above or below the mean (1.13%) were removed from the analyses of reaction times. Correlations between harmonic means of response times, percentage accuracy, and each of the numerical variables considered in this study are presented in Table 4.Footnote 2

Acronym-naming times show a negative correlation with number of orthographic neighbors, imageability, and all the frequency measures considered here (rated and printed), indicating that highly imageable and high-frequency acronyms with a high number of orthographic neighbors were named faster than low-imageability and low-frequency acronyms with a low number of orthographic neighbors. Reaction time correlations with N, imageability and frequency are also characteristically found in word-naming studies (Barca, Burani, & Arduino, 2002; Morrison & Ellis, 2000). Similarly, and in line with other word-naming studies (Balota et al., 2004), number of letters shows a correlation with acronym-naming times and accuracy, meaning that long acronyms were named slower and with more errors. In contrast to what has been found in other word-naming studies (Balota et al., 2004; Morrison & Ellis, 2000), the number of syllables and the number of phonemes showed negative correlations with accuracy, indicating that phonologically long words produced smaller numbers of errors.

Having looked at the relationships between the dependent variables (naming times and accuracy) and independent variables (number of letters, number of syllables, number of phonemes, number of orthographic neighbors, imageability, rated frequency, printed frequencies, AoA, bigram frequency, and trigram frequency), the predictive power of each independent factor was examined. The particular technique used here to analyze the data is known as the multilevel or hierarchical model (Miles & Shevlin, 2001). Multilevel models are linear regressions in which variation of groups can be modeled at different levels (Gelman & Hill, 2007). For the purpose of this study, the data were structured hierarchically with a three-level hierarchy: one corresponding to the participants, and the other two to the predictor variables. One of the advantages of this model over classical regression is that it allows an examination of the predictive power of independent variables while accounting for systematic unexplained variation among the group of participants. For the purpose of all the analyses reported here, acronym-naming times were log transformed to reduce skew. The software used in all analyses was SPSS (16.0).

The three measures of acronym printed frequency were examined first in order to select the measure with greater predictive power for final analyses. Thus, the logarithm transformations of the printed frequencies as derived from the Google, Bing, and AltaVista search engines were compared. The three measures provided a significant change in the proportion of variance explained when included in the last step of the multilevel model (Altavista, ΔR 2 = .004; Google, ΔR 2 = .002; Bing, ΔR 2 = .003). The log transformation of the printed frequencies derived from the AltaVista search engine accounted for the greater proportion of variance, and therefore, this was the measure selected for subsequent analyses.

A series of four multilevel regression analyses was carried out as the result of alternating the submission of only one of the measures of phonological word length (number of syllables or number of phonemes) and one of the letter frequencies (bigram or trigram frequencies). Acronym’s print-to-pronunciation pattern, number of letters, number of phonemes, number of orthographic neighbors, imageability, rated frequency, and AoA were entered as predictors in all the analyses. The curvilinear relationships of two predictors (i.e., imageability and number of letters) with reaction times violated the regression assumption of linearity. The quadratic term of imageability and number of letters was introduced into the analysis as a procedure that tackles this problem (Kline, 2005). In these cases, variable Y (i.e., reaction times) is regressed on both X (i.e., imageability) and X 2 (i.e., imageability2). The presence of the squared variable adds a curvature to the regression line, and its regression coefficient indicates the influence of the quadratic aspect of imageability on reaction times.

The four analyses carried out yielded very similar results. A summary of the results from the analyses that accounted for the greatest proportion of the variance can be seen in Table 5.

In order to ensure that multicollinearity did not add noise in the precision of the estimations, the condition number (k), and the variance inflation factor (VIF) were examined in each of the four analyses. VIF values were within a tolerable range (ranging from 1.13 to 7.99), and the condition number k (ranging from 8.21 in one analysis to 13.71 in another analysis) indicated the presence of medium but not potentially harmful collinearity (k > 30).

Four potential interactions were also assessed. These were acronym’s print-to-pronunciation characteristics with word frequency (printed and rated), with AoA, and also with imageability. An interaction term was created by centering the continuous variables (printed and rated frequency, AoA, and imageability) and multiplying the result by each of the dummy variables representing acronym print-to-pronunciation characteristics.

In order to introduce the three types of acronym print-to-pronunciation patterns (unambiguous, ambiguous typical, ambiguous atypical) into the analyses, two of the dummy variables, ambiguous typical and ambiguous atypical, were included in the analyses, while unambiguous acronyms worked as the reference category. Both dummy variables were entered in step 2 of each analysis so the results could be meaningfully compared with the reference category.

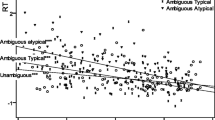

The analysis explaining the greatest percentage of the variance associated to acronym naming times included bigram frequency and number of syllables as predictor variables (see Table 5). Consistent main effects were found across the analyses for voicing, number of letters, printed and letter frequency, AoA, and letter frequency (bigram or trigram). The main effect of number of orthographic neighbors was significant only when the bigram frequency was in the analyses. The number of phonemes emerged as significant predictor in the analysis with trigram frequency and approached significance in the analysis with bigram frequency. Imageability did not emerge as a significant predictor in any of the analyses. In terms of interactions, the printed frequency showed significant interaction in all the analyses with both types of ambiguous acronyms (typical and atypical). AoA and imageability also showed an interaction in all the analyses with ambiguous typical acronyms. Finally, the interaction between rated frequency and ambiguous typical acronyms approached significance in all but one analysis. In order to inspect the nature of these interactions a bit further, a regression line was fitted for each type of acronym in terms of their reaction times and printed frequency (see Fig. 1), AoA (see Fig. 2), and imageability (see Fig. 3). Thus, in relation to acronyms’ frequency, high-frequency typical acronyms (ambiguous or unambiguous) were named faster than low-frequency typical acronyms. However, high-frequency atypical acronyms were named slower than low-frequency atypical acronyms. The same interaction pattern was revealed when rated, instead of printed, frequency was used.

Another interaction observed in all analyses was between AoA and ambiguous typical acronyms. Again, a regression line for each acronym type was plotted against their naming times and AoA values (see Fig. 2). Early acquired typical acronyms (ambiguous and unambiguous) were named faster than late acquired typical acronyms. However, the slope for atypical acronyms shows an inverse relation between reaction times and AoA, with slower RTs for early acquired acronyms.

Finally, the interaction between imageability and ambiguous but typically pronounced acronyms is depicted in Fig. 3. High-imageability acronyms were named faster than low-imageability acronyms. The imageability effect was stronger for typically pronounced acronyms (ambiguous or unambiguous) than for atypically pronounced acronyms.

Another series of multilevel regression analyses were carried out in order to assess the individual contribution of each predictor variable over and above the other factors. The procedure was the same as explained above, with the addition of a fourth step in the regression analysis in which the variable under consideration was assessed.Footnote 3 Results are shown in Table 6.

Errors analyses

Four logistic multilevel hierarchical analyses were conducted with accuracy as the dependent variable. The multilevel technique allowed taking into account the accuracy of each participant for each acronym, and therefore, accuracy was registered as a dummy variable (correct responses coded as 0, incorrect responses as 1). As in the analyses of reaction times, data was structured hierarchically with a three-level hierarchy: one corresponding to the participants, and the other two to the predictor variables. The main effects of voicing and number of orthographic neighbors were found significant across the four analyses. The main effects of imageability and rated frequency approached significance across all the analyses. The main effect of printed acronym frequency was found in one of the analysis only (i.e., with the number of syllables and bigram frequency included; see Table 7), and bigram frequency had an effect only in one out of the four analyses (i.e., with the number of phonemes in). None of the interactions was significant, although the interaction between rated frequency and ambiguous atypical acronym pronunciation approached significance in two out of the four analyses. A summary of the results from one of the analyses can be seen in Table 7.

General discussion

One of the aims of the present study was to investigate the processing features of acronyms by conducting a detailed examination of acronyms’ characteristics and an evaluation of the manner in which they intercorrelate.

The study started collecting values for acronyms in a series of selected variables. Thus, questionnaires were created to rate acronyms in terms of their frequency of occurrence, AoA, and imageability. Acronyms voicing and phonological and orthographic length were computed by hand, while number of orthographic neighbors were extracted from a program based on the CELEX database (Baayen et al., 1995). Bigram and trigram frequencies were also considered and derived from the MCWord, an orthographic word form database (Medler & Binder, 2005). Print-to-pronunciation patterns in acronyms were divided into three categories: unambiguous pronunciation pattern (e.g., BBC), ambiguous but typical pronunciation pattern (e.g. ,HIV), and ambiguous atypical pronunciation pattern (e.g., SCUBA). Acronyms’ print-to-pronunciation patterns were considered as a further variable of interest in the study.

The way in which acronym characteristics were correlated resembled, to a certain extent, the correlations reported among standard words. For example, AoA correlated negatively with imageability and rated frequency, meaning that early acquired acronyms were more imageable and familiar (Morrison et al., 1997; Stadthagen-Gonzalez & Davis, 2006). However, some correlations conflicted with what is normally found with mainstream words. For example, the negative correlations found between letter length and syllable length and, between letter length and number of phonemes show an inverse relationship between orthographic and phonological length not present in mainstream words. For the acronyms studied here, as orthographic length increased, phonological length decreased. This is possibly the result of the variety of print-to-pronunciation patterns observed in acronyms. Short acronyms tend to be pronounced naming each of their constituent letters (e.g., DVD), but long acronyms are more likely to include vowels and be pronounced following grapheme-to-phoneme correspondences (e.g., SCUBA).

Other peculiar relationships are the positive correlations of orthographic length with printed frequencies and with the number of orthographic neighbors but the negative correlations of phonological length in terms of the number of phonemes with printed frequencies and with the number of orthographic neighbors. This means, the more letters and fewer phonemes in the acronym, the higher its frequency and N. Longer acronyms are more likely to be formed by a mixture of consonants and vowels. These structures are more likely to be akin to other words and, therefore, produce a high number of orthographic neighbors. In addition, vowels require fewer phonemes to be named aloud than consonants (e.g., /ae/ for a vs. /eich/ for h).

The results of the second step of the hierarchical multilevel analysis of reaction times showed that ambiguous atypical acronyms were read significantly slower (760 ms) than unambiguous acronyms (689 ms). This difference should be interpreted with caution, due to the low amount of ambiguous atypical acronyms present in the study. However, the difference could be the result of a contextual effect. That is, in the context of naming lists of acronyms, participants found it particularly difficult to produce those acronyms whose pronunciation is atypical for acronyms, albeit common for mainstream words. This account is supported by the fact that naming times did not differ for ambiguous typical (679 ms) and unambiguous (689 ms) acronyms by definition pronounced in a typical acronym manner.

The contribution of the selected set of predictor variables and interactions on acronym naming times were examined in the third step of the analyses. Acronyms initial sound, number of letters, printed and rated word frequency, AoA, and letter frequencies (bigram and trigram) successfully predicted naming times in all the analyses carried out. The number of orthographic neighbors emerged as a significant predictor only when the bigram frequency was in the analyses. Imageability interacted with typically pronounced acronyms, indicating that its influence was stronger in this type of acronyms than in atypically pronounced acronyms.

As was predicted, number of letters affected acronym-naming times reflecting the general serial nature of acronym naming. From the phonological measures of word length, only number of phonemes had an influence on reaction times. Bigram frequency affected reaction times and accuracy, while trigram frequency made a significant contribution to naming times only. Studies of standard word naming have struggled to find bigram frequency effects once other variables such as N, onsets, and rimes are taken into account (Andrews, 1992; Bowey, 1990; Strain & Herdman, 1999). The fact that a particular variable does not show an effect on a particular behavior (e.g., reaction time or accuracy) does not mean that the processes associated to that behavior are free from its influence. Although bigram frequency effects are not commonly found in measures of word-naming performance, its influence has been detected when measuring brain activity (Binder, Medler, Westbury, Liebenthal, & Buchanan, 2006; Hauk, Davis, Ford, Pulvermüller & Marslen-Wilson, 2006; Hauk, Patterson et al., 2006). The number of orthographic neighbors (N) exerted an influence in acronym-naming times (analyses with bigram frequency) and accuracy. This result can only support Andrews’s (1989) proposal of an early origin for the N-effect as a product of the interaction between letter and lexical units. This is because the translation from letters to sounds in acronyms does not correspond, in the great majority of the cases, to that of the neighboring words in relation to single phonemes or rhyme units (e.g., EEG and leg, peg, beg, egg).