Abstract

According to traditional linguistic theories, the construction of complex meanings relies firmly on syntactic structure-building operations. Recently, however, new models have been proposed in which semantics is viewed as being partly autonomous from syntax. In this paper, we discuss some of the developmental implications of syntax-based and autonomous models of semantics. We review event-related brain potential (ERP) studies on semantic processing in infants and toddlers, focusing on experiments reporting modulations of N400 amplitudes using visual or auditory stimuli and different temporal structures of trials. Our review suggests that infants can relate or integrate semantic information from temporally overlapping stimuli across modalities by 6 months of age. The ability to relate or integrate semantic information over time, within and across modalities, emerges by 9 months. The capacity to relate or integrate information from spoken words in sequences and sentences appears by 18 months. We also review behavioral and ERP studies showing that grammatical and syntactic processing skills develop only later, between 18 and 32 months. These results provide preliminary evidence for the availability of some semantic processes prior to the full developmental emergence of syntax: non-syntactic meaning-building operations are available to infants, albeit in restricted ways, months before the abstract machinery of grammar is in place. We discuss this hypothesis in light of research on early language acquisition and human brain development.

Introduction

Our ability to speak and understand language rests on our brain’s capacity to combine words into phrases, and phrases into sentences and larger units of discourse. A rich tradition in linguistics (Chomsky, 1965; Montague, 1970; Partee, 1973) has developed theories of natural language syntax and semantics that posit composition operations precisely designed to capture this capacity. Given two or more lexical items (e.g., “red” and “apple”), composition yields the syntactic and semantic structures that correspond to arranging these items in an order permitted by the grammar of the language (e.g., in English, “red apple”). The nature and scope of composition can vary across theories. In Compositional Semantics, composition is a logico-syntactic operation (Heim & Kratzer, 1998) applying to formal representations of expressions, and not directly to meanings. However, composition has definite and predictable consequences for how such formal representations are to be interpreted semantically: the rules that determine how these representations are composed correspond one-to-one to the rules that determine how the results of composition should be interpreted. This correspondence is known as the Principle of Compositionality (Partee, 1975, 1995; Partee, ter Meulen, & Wall, 1990). Within Generative Grammar, specifically in the Minimalist Program (Chomsky, 1995), syntactic composition amounts to Merge: a recursive set-formation operation on pairs of syntactic objects (i.e., words or phrases) whose outputs (unordered sets of syntactic objects) may then be interpreted phonologically and semantically (see Bouchard, 1995, for a minimalist view of syntax-semantics correspondence rules). In spite of important differences, in all these theories semantic interpretation depends entirely on syntactic composition: “syntax proposes and semantics disposes” (Crain & Steedman, 1985).
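The description of Merge above, as recursive set formation over pairs of syntactic objects with unordered outputs, can be illustrated with a minimal sketch. The function name `merge` and the use of Python strings and `frozenset` are our own illustrative assumptions; this is not an implementation of any particular minimalist formalism, and it ignores labeling and interpretation.

```python
def merge(a, b):
    """Return the unordered set {a, b}.

    a and b are syntactic objects: words (here, plain strings, an
    illustrative assumption) or the outputs of earlier calls to merge.
    """
    return frozenset({a, b})


# Recursion: the output of one application is the input to the next.
red_apple = merge("red", "apple")
the_red_apple = merge("the", red_apple)

# The output is unordered: the order of the arguments does not matter.
print(merge("apple", "red") == red_apple)  # prints True
```

The sketch makes one point only: linear order is not part of the output, so word order must be settled elsewhere, when the structure is interpreted phonologically.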

In recent years, theories and models have been developed that stand in partial contrast with the syntax-based analyses of composition from Generative Grammar and Compositional Semantics. Three research programs are particularly relevant here. One is Jackendoff’s (1999, 2007) Parallel Architecture approach to grammar and language processing, which postulates independent generative operations in phonology, syntax, and semantics, linked via interface rules. Meaning composition may involve operations that are lexical-semantic or conceptual-semantic in nature, and may not be reflected in the syntax or be fully constrained by it (see also Culicover & Jackendoff, 2005).

The second relevant theoretical development is the Late Assignment of Syntax Theory (LAST) by Townsend and Bever (2001) and, more generally, Analysis-by-Synthesis (A×S) models of speech or language processing (Bever & Poeppel, 2010; Poeppel & Monahan, 2011). In LAST, initial representations of phrases or sentences (e.g., specifying thematic or grammatical roles) are derived based on lexical, statistical, and other cues immediately available from the input. The grammar component then uses these initial representations to internally generate a syntactic structure, which is finally compared to the actual input string (Analysis-by-Synthesis). In this type of account, composition, and some other semantic operations, may be carried out before a syntactic structure is eventually assigned to the input, thus reversing the traditional order of operations.

The third recent development is vector-based semantics, in which the meaning of each expression (e.g., a word) is represented as a vector encoding information (e.g., distributional, conceptual, sensory-motor) associated with the expression, and where composition is modeled via algebraic operations on vectors corresponding to different expressions (Baroni, 2013; Clarke, 2012; Mitchell & Lapata, 2010). In this framework, composition is constrained by syntax, but may involve semantic operations on vectors that do not have a clear syntactic counterpart – for example, priming or preactivation of vector elements across words in a sentence (Erk, 2012).
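To make the idea of composition as an algebraic operation on vectors concrete, the following minimal sketch shows two simple operations of the kind discussed in this literature: element-wise addition and element-wise multiplication. The three-dimensional vectors for “red” and “apple” are invented toy values, and the function names are our own assumptions; actual models use high-dimensional vectors estimated from corpora or other data, and typically richer composition functions.

```python
def compose_additive(u, v):
    """Compose two meaning vectors by element-wise addition."""
    return [a + b for a, b in zip(u, v)]


def compose_multiplicative(u, v):
    """Compose two meaning vectors by element-wise multiplication."""
    return [a * b for a, b in zip(u, v)]


# Toy vectors (hypothetical values, chosen only for illustration).
red = [1.0, 0.0, 2.0]
apple = [3.0, 4.0, 0.5]

print(compose_additive(red, apple))        # prints [4.0, 4.0, 2.5]
print(compose_multiplicative(red, apple))  # prints [3.0, 0.0, 1.0]
```

Both operations are commutative, so neither is sensitive to word order. This is one simple sense in which vector operations of this kind need not have a syntactic counterpart.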

Together, these proposals point to a common insight: in at least some instances, semantic processing is not reducible to syntax. We refer to this thesis as Autonomous Semantics (or AS; Culicover & Jackendoff, 2005, 2006; Jackendoff, 2002; for a cognitive neuroscience perspective and a review of the evidence, see Baggio, 2018).

Autonomous semantics: Conceptual preliminaries

How can semantics operate autonomously from syntax? And what semantic processes are available to a system with limited syntactic processing capacity? Here, we address these issues from a developmental perspective: what semantic processes are available to infants and toddlers before they have acquired the fundamentals of the grammar of the target language? Answering this question will require a review of the experimental literature and a preliminary conceptual analysis of the problem.

Let us introduce working definitions of the concepts “syntax” and “semantics.” By “semantics,” we mean a (compositional) system for structuring meanings beyond single words, i.e., beyond lexical and referential semantics. By “syntax” or “grammar,” we mean a system of constraints on the placement of syntactic words in phrases and sentences. Each syntactic word is labeled as belonging to a specific syntactic category (noun, verb, adjective, etc.). Syntactic words are different from phonological words (e.g., in English, a sequence of phonemes including at least one stressed syllable and one full vowel) and from lexical words (i.e., a lexeme, a unit of meaning shared by morphologically related words; for discussion, see Di Sciullo & Williams, 1987; Dixon & Aikhenvald, 2002). Not all syntactic words are phonological words, or vice versa; that is why this distinction is paramount, also from a developmental stance. For example, clitics are syntactic words, but they are not phonological words: we’ll, it’s, and don’t are each a single phonological word and each a pair of syntactic words. Properties of the speech stream (e.g., prosody, pauses, etc.) can assist the child in discovering phonological words (via segmentation), but the syntactic representation of speech also requires labeling words as nouns, verbs, etc. These basic linguistic concepts may serve as a compass for navigating the maze of empirical data on child language acquisition (see section Early language acquisition: Syntax and semantics).

Equipped with this minimal conceptual apparatus, we may now proceed to fine-tune the Autonomous Semantics thesis. The view that lexical and referential semantics do not depend on syntax is of little interest here: It seems uncontroversial that infants acquire the meaning and reference of some words before they master the grammar, in the technical sense above, of the target language. What is more contentious is whether infants can relate or integrate semantic information before they possess enough syntax for it to fully constrain semantic composition. The issue is whether there is evidence for non-syntactic precursors of meaning composition: early-emerging operations that allow children to combine meanings before they are able to represent the syntactic structure of phrases and sentences. Jackendoff (2007) discusses forms of “asyntactic integration,” in the context of language evolution, development, processing, and acquired disorders, when comprehenders may exploit “a coarse-grained interface directly from phonology to semantics without syntactic intervention” (p. 19). Our goal is to identify early forms of asyntactic integration in child development, and evaluate the experimental evidence for or against them. Computational and experimental work suggests that several forms of semantic processing – independent of syntax and intermediate between pure lexical activation and full-blown composition – are possible (see, among others, Lavigne et al., 2012, 2016, and Michalon & Baggio, 2019); these range from complex forms of priming (e.g., pattern priming) to the assignment of grammatical roles (e.g., subject and object) or thematic roles to the arguments of verbal predicates. These processes may support early speech comprehension in infants and toddlers, before the machinery of syntax is in place and before it can fully constrain composition.

Autonomous semantics: Developmental implications

What are the neurodevelopmental implications of the contrast between syntax-based and autonomous models of semantic processing? The traditional notion that complex meanings result from the application of syntactic operations suggests a developmental model where the capacity to perform those operations arises and becomes functional early on during infancy. If interpretation relies entirely on syntactic structure-building, as implied by the traditional view of syntax-semantics relations, then the child’s ability to compose lexical meanings requires a prior capacity to build syntactic structures:

(H1) The formal dependence of phrasal and sentential semantics on syntax in the theory of grammar is reflected in corresponding patterns of neurodevelopmental dependence or precedence of syntactic and semantic processing abilities.

Importantly, H1 is neither a strict logical consequence of the traditional view, nor is it the only developmental hypothesis suggested by it. However, it is a parsimonious way of extracting a developmental constraint from modern theories of grammar. Moreover, H1, or equivalent, is actively being explored empirically in psycholinguistics. Friederici (2005), for example, presents a neurodevelopmental model of language acquisition in which the capacity to activate and process the meaning of words in context, or “lexical semantics,” arises between 12 and 14 months of age. Semantic processes at the phrase and sentence levels would be established only later, between 30 and 36 months of age, when local “phrase structure building operations” are available to the child (Friederici, 2005, 2006; see Friederici, 2017, for recent developments of the model). On this view, the semantic processes that are operative before the infant can build phrase structures would be limited to (context-sensitive) activation of lexical semantic information (e.g., word meaning); in contrast, semantic composition becomes available only later, driven and supported by syntactic structure building operations.

Complex meanings, at all levels of linguistic structure, from words to discourse, in children and adults, are composed via morphological and syntactic operations, plus an array of additional operations (e.g., inference) that are not reflected in the grammar (for examples and discussions, see Baggio, Stenning, & van Lambalgen, 2016; Baggio, van Lambalgen, & Hagoort, 2012; and Pylkkänen & McElree, 2006). There appears to be little disagreement on this general point. However, it is unclear what operations are available in the first 2 to 3 years of life that would account for the child’s ability to combine words or concepts during productive and receptive communication, and in other non-communicative tasks. We consider one hypothesis alternative to H1:

(H2) Non-syntactic operations (either semantic or shared with other cognitive domains) are available to the infant for building complex meanings, albeit in restricted ways, early on in development, before the full machinery of grammar is available.

Here, we refer to these operations as non-syntactic precursors of semantic composition, and we review experiments on semantic processing in infants and toddlers, primarily using event-related brain potentials (ERPs) or fields (ERFs), in search of evidence for or against H1 or H2. Several studies have reported modulations of specific ERP or ERF components, in particular the N400 or its neuromagnetic counterpart. Our review will focus on experiments reporting modulations of the N400 amplitude in young children, in response to various stimulus or task manipulations (see section on Development of the N400 component and effect). We first discuss the antecedent conditions of the N400 in adults (Antecedent conditions of the N400), and we summarize current thinking on its functional interpretation (Functional accounts of the N400). In addition, we establish a tentative link between the N400 and Autonomous Semantic processes (“asyntactic integration”). Next, we turn to cognitive development, focusing on two main areas: (i) early language development, including the acquisition of word meaning and grammar (Early language acquisition: Syntax and semantics), and (ii) the maturation of cortical networks underlying the generation of the N400 component (Maturation of fronto-temporal N400 generators). We then systematically review ERP studies reporting N400 effects in children (Development of the N400 component and effect), and we conclude with a discussion of H1-H2 in light of the reviewed studies (Meaning before grammar).

Signatures of asyntactic integration: The N400

A number of ERP components are affected by semantic properties of stimuli, including the P600 and post-N400 negativities (for discussion, see Swaab et al., 2012). However, the N400 has proven a reliable neural dependent measure of core semantic processes, from lexical access to contextual integration, across the lifespan (Kutas & Federmeier, 2011). These specific processes do not affect other ERP components, such as the P600, and they are often difficult to study in real time using behavioral (e.g., eye tracking) or neuroimaging techniques (e.g., fMRI), in particular in young children.

Antecedent conditions of the N400

The N400 was first observed by Kutas and Hillyard (1980) in an ERP study in which the semantic congruency of words in context was manipulated:

(1) He spread warm bread with butter/socks.

The incongruent word “socks,” compared to “butter,” produced a larger negative-going deflection in the ERP that peaked at about 400 ms from word onset. Every lexical word generates an N400 component. The N400 effect is the difference in amplitude of N400 components between two conditions. The amplitude of the N400 component is among the most robust and widely used dependent measures in the cognitive neuroscience of language (Kutas & Federmeier, 2011). Here, we focus on three main results.

First, the N400 amplitude may be modulated by shifts in the degree of semantic relatedness between the eliciting word and the context: the N400 is a graded response to continuously-varying properties of stimuli, not an “all-or-none” reaction to semantic anomalies or violations (Hagoort & Brown, 1994; Kutas & Hillyard, 1984). Second, the context that modulates the N400’s amplitude at the eliciting word may be a word, or a word list, a sentence, a discourse, or non-linguistic material, such as gestures, pictures, and movies. The elicitation and modulation of the N400 do not require that the context is in any way syntactically organized. In this sense, the N400 is a plausible candidate ERP signature of asyntactic integration. The effects of semantic relatedness between a prime and a target on the amplitude of the N400 at the target are a clear example of this (Lau, Phillips, & Poeppel, 2008). But when the context is structured, its logical and syntactic forms may affect the N400’s amplitude and the perceived plausibility of the input (see Nieuwland & Kuperberg, 2008, for N400 effects of negation; see Urbach & Kutas, 2010, for effects of quantifier structure; see Baggio, Choma, van Lambalgen, & Hagoort, 2010; Ferretti, Kutas, & McRae, 2007; Kuperberg, Choi, Cohn, Paczynski, & Jackendoff, 2010, for effects of argument or event structure). Third, the N400 effect is sensitive to semantic relations set up by discourse, even when they override stored knowledge (Nieuwland & van Berkum, 2006; van Berkum, Hagoort, & Brown, 1999; van Berkum, Zwitserlood, Hagoort, & Brown, 2003). Together, these findings show that the amplitude of the N400 component is an inverse function of the relative strength of semantic relations between the eliciting word and its context. These relations are either activated from memory or established on-line (Baggio, 2018; Hagoort, Baggio, & Willems, 2009).
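The distinction drawn above between the N400 component and the N400 effect (the difference in N400 amplitude between two conditions) can be made concrete with a minimal sketch. It assumes that each condition has already been averaged into a single waveform sampled at the same time points, and it quantifies amplitude as the mean voltage in a 300–500 ms window. The window, the function names, and the toy waveforms are illustrative assumptions on our part, not values taken from the studies reviewed here.

```python
def mean_amplitude(times_ms, amplitudes_uv, start_ms, end_ms):
    """Mean voltage (in microvolts) of samples within [start_ms, end_ms]."""
    window = [a for t, a in zip(times_ms, amplitudes_uv)
              if start_ms <= t <= end_ms]
    if not window:
        raise ValueError("no samples fall inside the requested window")
    return sum(window) / len(window)


def n400_effect(times_ms, incongruent_uv, congruent_uv,
                start_ms=300, end_ms=500):
    """Difference in mean amplitude: incongruent minus congruent.

    The default 300-500 ms window is an assumption made for illustration.
    """
    return (mean_amplitude(times_ms, incongruent_uv, start_ms, end_ms)
            - mean_amplitude(times_ms, congruent_uv, start_ms, end_ms))


# Toy averaged waveforms (hypothetical values, in microvolts).
times = [200, 300, 400, 500, 600]
congruent = [0.0, -1.0, -2.0, -1.0, 0.0]    # e.g., "butter" in example (1)
incongruent = [0.0, -3.0, -6.0, -3.0, 0.0]  # e.g., "socks" in example (1)

effect = n400_effect(times, incongruent, congruent)
# A negative value means the incongruent condition is more negative-going.
```

Both toy waveforms contain a negative deflection, in line with the observation that every lexical word generates an N400 component; the effect is only the difference between them.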

Functional accounts of the N400

Two main functional accounts of the N400 have been developed and often contrasted. On one type of theory, the N400 reflects the ease of activating (accessing or retrieving) lexical and semantic information associated with the eliciting word: the context would provide semantic “cues” that facilitate those processes, and the N400 amplitude may be reduced in proportion to that (Kutas & Federmeier, 2011; Lau et al., 2008). This theory would explain N400 effects of priming or preactivation and contextual expectancy, but it is not clear how it would explain plausibility effects and more generally modulations of the N400 by logical form, event structure, and discourse (see above). On a different type of theory, the N400 reflects instead the costs of integrating a word’s meaning into the ongoing sentence or discourse model: the context would provide information that facilitates this process, and the N400 amplitude would decrease accordingly (Brown & Hagoort, 1993; Hagoort, Hald, Bastiaansen, & Petersson, 2004). This account explains N400 effects of manipulations of logical form, event structure, discourse relations, and pragmatics, but it does not easily explain N400 priming or preactivation effects. It then seems that the activation and integration views are complementary, yet fundamentally limited and unable to explain the full range of occurrences of the N400.

Recently, a third type of functional account has emerged, that aims to reconcile the strengths of the activation and integration views and to overcome their respective weaknesses. Baggio and Hagoort (2011) proposed a unified theory of the N400 based on a neurocognitive model of semantic processing in the brain. The model posits that each lexical word, whether auditory or visual, gives rise to a “cycle” of neural activation in left perisylvian cortex: beginning around 250 ms from word onset, in the posterior middle and superior temporal gyri (pMSTG); recruiting the middle and anterior segments of the inferior frontal gyrus (IFG, BA 45/47) around 300 ms; and finally re-engaging the pMSTG around 400 ms. Each of these three phases corresponds to a specific functional event: the access and initial activation of lexical meanings through the pMSTG (~250 ms); the generation of dynamic indices (i.e., “tokens”) for those meanings in the IFG (~300 ms); and top-down binding of information in pMSTG, under the influence of IFG (~400 ms). The N400 wave is a manifestation of one processing cycle. “Binding” is the result of the interplay of a bottom-up analytic mechanism, by which lexical concepts are combined into complex structures, recruiting the left anterior temporal lobe rapidly (LATL, ~250 ms; Bemis & Pylkkänen, 2011), and a top-down synthetic mechanism, where elements of lexical meanings, if preactivated by the context, are related or integrated before the corresponding words are given as input, i.e., before bottom-up binding may take place. 
In this model: (a) semantics is autonomous (AS) and cannot be reduced to syntax-driven composition; (b) processing follows the principle of Analysis-by-Synthesis (A×S; representations are derived partly top-down, imposing constraints on syntactic and semantic analyses of the input); (c) the model fits with algebraic accounts of lexical semantics: operations such as composition and preactivation may be applied in parallel on vectorial representations of words (Erk, 2012; for further details and discussion, see Baggio, 2018).

There is growing support for the notion that the N400 has multiple generators, contributing differently, and in different time frames, to lexical semantic activation and integration (Lau, Namyst, Fogel, & Delgado, 2016; Nieuwland et al., 2019). A multi-lab ERP study (N=334) by Nieuwland et al. (2019) has provided compelling evidence that the N400 reflects both the predictability (in an earlier time frame) and the plausibility (in a later, partly overlapping time frame) of words in context. This finding settles the activation-versus-integration dispute in favor of hybrid, multi-process, multiple-generators models of the N400, such as the cycle model outlined here (Baggio, 2012, 2018; Baggio & Hagoort, 2011; see also Lau et al., 2016; Newman, Forbes, & Connolly, 2012). We will use this conclusion in our review of ERP studies of semantic processing in infants and toddlers, but we will not assume that the N400 component or effect always reflects lexical activation and top-down binding from its very first instances in development. Rather, we will use published reports of the N400 effect to trace the developmental origins of asyntactic integration: the processes that allow infants to relate or integrate meanings (e.g., of a word and context), before the elements of syntax that enable fully productive composition have been acquired.

On language acquisition and brain development

In this section, we present key facts about early language acquisition and human brain development. These facts provide a minimal backdrop against which one can interpret the results of ERP studies in infants and toddlers (see Development of the N400 component and effect), and relative to which one can evaluate the plausibility of hypotheses H1-H2 (see Meaning before grammar).

Early language acquisition: Syntax and semantics

Language acquisition does not begin from either syntax or semantics: to gain access to the form and content of utterances, the infant has to “crack the speech code” first (Kuhl, 2004). In the first months of life, infants track sequential statistics, typically transition probabilities, across levels of linguistic structure, and use those statistics to categorize speech sounds and extract phonological words from speech (for a review of statistical learning in early language acquisition, see Romberg & Saffran, 2010). These processes allow the child to gradually bootstrap the syntax and semantics of the target language, first building representations of lexical and syntactic words, and eventually learning to combine such representations on-line. The emergence of syntax and semantics occurs largely in parallel. Yet, a few key developmental facts deserve close attention, and may bear directly on the contrast between H1 and H2.
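As an aside on the sequential statistics mentioned above, the transition probability from one unit to the next can be estimated as the number of times the pair occurs in sequence divided by the number of times the first unit occurs with any successor. The sketch below applies this to a toy syllable stream. The nonsense words “bidaku” and “padoti” and the function name are hypothetical, chosen only for illustration; the sketch is not a model of infant learning.

```python
from collections import Counter


def transition_probabilities(syllables):
    """Estimate P(next = y | current = x) for each adjacent pair (x, y)."""
    pair_counts = Counter(zip(syllables, syllables[1:]))
    # Count each syllable only where it has a successor (all but the last).
    first_counts = Counter(syllables[:-1])
    return {(x, y): count / first_counts[x]
            for (x, y), count in pair_counts.items()}


# Toy stream built from two hypothetical words, "bidaku" and "padoti".
stream = "bi da ku pa do ti bi da ku bi da ku pa do ti".split()
tp = transition_probabilities(stream)

print(tp[("bi", "da")])  # within a word: prints 1.0
print(tp[("ku", "bi")])  # across a word boundary: lower than 1.0
```

In this toy stream, transitions inside a word are fully predictable, whereas transitions across word boundaries are not. Dips of this kind are the sort of cue that could support the extraction of phonological words from continuous speech.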

One such key fact is that lexical words emerge earlier than syntactic words. This suggests that operations on lexical words may emerge or apply earlier than operations on syntactic words (Pinker, 1984, was already aware of this possibility, and discussed it at length; e.g., pp. 118, 138 ff.). If correct, this may lend some plausibility to H2. Let us examine what it would take for infants to build lexical versus syntactic representations of words, given that they already possess phonological representations of words, i.e., that their ability to extract phonological words from continuous speech is fully operative at 9 months of age, or earlier. Building lexical semantic representations of words requires that concepts or referents are associated to words or utterances. Six months after birth, language-guided fixations indicate that infants understand the meaning of a few words uttered by caregivers (Bergelson & Aslin, 2017; Bergelson & Swingley, 2012, 2015) or by unfamiliar individuals (Bergelson & Swingley, 2018; Tincoff & Jusczyk, 1999, 2012). In addition, 6-month-olds appreciate semantic relations between early-learned words (Bergelson & Aslin, 2017). These findings do not show that composition or asyntactic integration are present at 6 months. However, they do indicate that the lexical material upon which such processes operate is available early on in infancy.

The same cannot be said about syntactic words. Several studies have provided evidence for the emergence of “precursors of syntax” already in the first few weeks and months of life (Benavides-Varela & Gervain, 2017; Benavides-Varela & Mehler, 2015; Christophe et al., 2008; Gervain et al., 2008; Gervain & Werker, 2013; Gómez & Gerken, 1999; Jusczyk et al., 1992; Marcus et al., 1999; Saffran & Wilson, 2003). Infants are able to track statistical or phonological properties of words and phrases that correlate with syntactic structure. This is an important finding, but it does not follow that infants can represent syntactic structure as such. That would require (as per the definitions in Autonomous Semantics: Conceptual preliminaries) that words are labeled syntactically (as N, V, Det etc.) or that grammatical relations are established between words (subject, object etc.). Studies reporting word order effects in newborns (Benavides-Varela & Gervain, 2017), or showing that young infants learn word order in artificial grammars (Marcus et al., 1999), or that 7- to 8-month-olds show a preference for the native language’s word order (e.g., Gervain et al., 2008; Gervain & Werker, 2013), do not imply that infants can represent words as syntactic objects. Yet, this is strictly required by syntax-driven composition: without syntactic labels (Det, N etc.), there can be no phrase structure rules or constraints that can guide composition in the ways envisaged by linguistic theory and by H1. Therefore, these studies do not support H1, and do not contradict H2. Research shows that infants’ sensitivity to word order, in the correct technical sense (i.e., order of labeled syntactic objects), emerges later than some studies on young infants would suggest: around 19 months (see the eye-tracking study by Franck et al., 2013). Infants understand two-word instructions already at 14–16 months (Hirsh-Pasek & Golinkoff, 1999; Huttenlocher, 1974; Sachs & Truswell, 1978).
Slobin (1999) has observed “that infants barely over a year in age, often with no productive word combinations, can comprehend combinations of words,” and “Yet there is nothing in such findings that forces one to endow the child with syntactic parsing or a hierarchical sentence structure.” One possibility here may be that, although there exist early “precursors of syntax” (processes tracking regularities in the input that correlate with syntactic structures), syntax itself (constraints on syntactic words) develops later than asyntactic integration, i.e., the kind of operations on lexical meanings sufficient to understand simple utterances. This picture is largely consistent with H2, but the exact nature of the relevant semantic operations remains to be clarified (more below).

What does it take to build syntactic representations of words? As noted by Aslin and Newport (2014), the child is “confronted with the tasks of (a) discovering how many grammatical categories there are in the natural language spoken by the infant’s parents and (b) correctly assigning words to the appropriate category.” Distributional learning plays a key role here. This differs from statistical learning, since what is being tracked is not sequence statistics, but rather word co-occurrence statistics (Mintz et al., 2002; Mintz, 2003). Distributional learning kicks off around 12 months of age, and even then word categories are formed only if distributional cues correlate with phonological and semantic cues: that is, distributional cues are necessary, but not sufficient, for category learning (Gómez & Lakusta, 2004; Lany & Saffran, 2010, 2011). The earliest category to form is that of nouns (N), beginning at 12 months or later (Waxman & Markow, 1995). One-year-olds do not yet possess the category of determiners (Det) and other functors. It is only around 14–16 months of age that infants begin to appreciate the syntactic role that determiners play in NPs, e.g., that of introducing a noun (Höhle et al., 2004; Kedar et al., 2006). Infants store individual function words and use them to segment adjacent words already at 7 months of age (Höhle & Weissenborn, 2003; Shi, 2014; Shi, Werker, & Cutler, 2006). But categories of function words (e.g., Det, Pro etc.) emerge only later, based on stored representations of individual functors. Christophe et al. (2008) have argued that “these results suggest that infants within their second year of life are already figuring out what the categories of functional items are in their language. The next step for them is to exploit the function words to infer the syntactic categories of neighboring content words.” On this view, 14-month-olds, and perhaps older children, do not yet possess syntactic categories for function or content words.
From this it follows that 1-year-olds cannot yet perform syntax-driven composition, though they already understand some word combinations (see above). This would suggest that the infant’s early capacity to process meanings does not fully rely on syntax (H2).

Studies of semantic processing in infants and toddlers, using implicit measures such as the amplitude of the N400 or other ERP components, may provide information on the semantic operations available to children in the first 2 years of life. Below, we discuss the maturation of the brain networks underlying the N400 component.

Maturation of fronto-temporal N400 generators

Among language-related ERP components, the N400 is relatively well understood, including in terms of its cortical generators. Localization studies of other ERP components, such as the (E)LAN and P600, have implicated the left frontal operculum, anterior STG, and the basal ganglia (Friederici & Kotz, 2003; Friederici, Wang, Herrmann, Maess, & Oertel, 2000; Kotz, Frisch, von Cramon, & Friederici, 2003). These areas are distinct from the neural sources of the N400, which is consistent with the relative autonomy of syntax and semantics. The N400 is generated in regions of the temporal lobe, in particular medial (the hippocampus and parahippocampal cortex) and lateral regions (pMSTG and ATL), as shown by M/EEG studies (Dale et al., 2000; Halgren et al., 2002; Helenius, Salmelin, Service, & Connolly, 1998; Marinkovic et al., 2003; Simos, Basile, & Papanicolaou, 1997; van Petten & Luka, 2006). However, fMRI studies, using the same stimulus types that modulate the N400 amplitude in ERPs, such as semantic incongruities, have found activations of LIFG (BA 45/47) instead of temporal cortex (Hagoort et al., 2004). It may not come as a surprise that M/EEG and fMRI can produce different results, both in this case and in general. Evoked M/EEG responses tend to reflect processes that are phase-locked or time-locked to the onsets of stimuli, whereas the BOLD signal in fMRI tracks neural events that exhibit greater intertrial variability or last longer (Liljeström, Hultén, Parkkonen, & Salmelin, 2009). The cycle model hypothesizes that currents are injected in compact waves in the left pMSTG, corresponding to the N400’s onset (~250 ms, currents from auditory or visual cortices) and peak (~400 ms, feed-back currents from LIFG) (Baggio, 2012; Baggio, 2018; Baggio & Hagoort, 2011). This would explain why activity in pMSTG is best captured in MEG or EEG recordings. In addition, in this model, LIFG has dual top-down binding and maintenance functions.
Accordingly, compared to temporal regions, LIFG is more active continuously and over longer time periods, which is consistent with the self-sustaining activation profiles of neurons in PFC (e.g., Curtis & D’Esposito, 2003; Durstewitz, Seamans, & Sejnowski, 2000; Miller, 2000). This would explain why fMRI shows stronger or even the strongest responses in LIFG in semantic processing experiments (Hagoort et al., 2009). The two main cortical generators of the N400, therefore, likely make different functional contributions to semantics (pMSTG is involved in activating lexical meanings; LIFG is engaged in binding meanings together) and have different activation time courses (pMSTG neurons show stronger responses shortly after inputs are received from the sensory systems or LIFG; LIFG neurons show persistent activity that remains stable or rises over time).

Human developmental neuroanatomy has firmly established that maturational trajectories differ across cortical regions. Some brain structures, such as the PFC, grow comparatively slowly and over prolonged time intervals. This enables experience and environmental interactions to finely tune neuronal connections, as is required by the acquisition of complex skills or behaviors that are not rigidly pre-wired. Maturational rises, as evidenced by synaptogenesis and glucose uptake peaks, occur in the first few months of life in sensory, motor, temporal, and parietal cortices, and only later, from 6–8 months of age, in (pre)frontal regions (Johnson, 2001). This suggests that the left temporal generators of the N400 (pMSTG and ATL) possibly mature faster and before its left frontal generators do (LIFG). There is evidence of five resting-state networks at birth: primary visual cortex; motor and somatosensory cortex; temporal (or auditory) and parietal cortices; posterior lateral and midline parietal cortex and the cerebellum; and the medial and lateral anterior PFC (Fransson et al., 2007; Fransson et al., 2009). However, there is no evidence that the LIFG is functionally integrated into any of these networks in newborns. Studies measuring age-related changes in gray matter density show that higher-order association areas, such as the PFC, mature after sensory-motor areas (Gogtay et al., 2004). However, the temporal cortex may be an important exception: the evidence points to a maturational trajectory that closely follows that of perceptual systems. That is to be expected, since the temporal lobe hosts convergence zones that support the construction of poly-modal (eventually amodal) representations of objects and events (Damasio, 1989; Mesulam, 2008), and it implements the hierarchical, deep, multi-layer architecture that connects sensory areas to the hippocampal system, thus enabling encoding of episodic memories. 
Recent work further shows that the temporal lobe plays a major causal role in the spontaneous organization of the infant’s brain, by producing and propagating the “instructional signals” that drive maturation in other key areas (Arichi et al., 2017). The child’s brain, therefore, builds itself “from the inside out,” or rather from the center to the periphery – the “center” includes temporal regions that subserve semantic processing later in life (Hickok & Poeppel, 2004, 2007).

This picture is further substantiated by studies of white matter connectivity in infants. The semantic system in the brain relies heavily on a set of ventral white matter pathways that connect the occipito-temporal cortex to the anterior temporal lobe (the inferior longitudinal fasciculus; ILF), the posterior temporal lobe (pMSTG) to IFG (the extreme capsule and inferior fronto-occipital fasciculus; EmC/IFOF), and the anterior temporal lobe (ATL) to IFG (via the uncinate fasciculus; UF) (Catani, Jones, Donato, & Ffytche, 2003; Catani & Thiebaut de Schotten, 2008, 2012; Dick & Tremblay, 2012; Makris & Pandya, 2009; Martino, Brogna, Robles, Vergani, & Duffau, 2010). This ventral network subserves language comprehension in adults and in older children (Hickok & Poeppel, 2004, 2007; Saur et al., 2008), and evidence suggests it implements the kind of relational semantic processes manifested by the N400: for example, it is known that stimulating ventral perisylvian white matter (EmC/IFOF) induces semantic paraphasia (the substitution of words that should be produced in a task with semantically related words), while phonological paraphasia (the substitution of words with phonologically related words) occurs after stimulation of dorsal tracts, such as the arcuate fasciculus (AF) (e.g., see Duffau et al., 2005; Mandonnet, Nouet, Gatignol, Capelle, & Duffau, 2007; Matsumoto et al., 2004; Moritz-Gasser, Herbet, & Duffau, 2013). The ventral perisylvian pathways mature before the dorsal AF tract, but the gap narrows during the first months of life (Dubois et al., 2016). These results suggest that, during early infancy, semantic processing and acquisition rely on systems that localize in the lateral temporal cortex (pMSTG and ATL), interfaced with sensory-motor systems and with the hippocampal complexes. 
As development unfolds, parietal and frontal cortices, including LIFG, mature and connect to other regions, such as the pMSTG and ATL, becoming increasingly more engaged in semantics and in speech processing. MEG and fMRI studies have shown that initially only temporal and right frontal activations are found in response to speech in babies, while LIFG activity increases later, between 6 and 12 months of age (Dehaene-Lambertz, Dehaene, & Hertz-Pannier, 2002; Imada et al., 2006). At 8 months, perceptual binding effects are also first observed (e.g., γ band responses to illusory Kanizsa figures, similar to those found in adults) (Csibra, Davis, Spratling, & Johnson, 2000). Top-down binding processes in several perceptual and cognitive domains emerge between 6 and 12 months, possibly as a consequence of maturation and integration of PFC, including IFG, into the broader network of cortical systems that support complex perceptual and cognitive tasks. These binding processes may be involved in early semantic processing of speech and may be reflected in modulations of the N400’s amplitude.

Interim summary: Early development of syntax and semantics

The search for structure and meaning in speech signals begins early on in infancy, and largely simultaneously. To achieve this, however, children must first pierce through the barrier of continuous speech, segmenting it into relevant phonological units. Once this process gets going, syntax and semantics develop in parallel, but not at the same pace. Infants store phonological and lexical semantic representations of many words before they can categorize and process these words syntactically. Nouns are a prime example: infants understand the meaning of many common nouns (Bergelson & Swingley, 2012) before they begin to form the grammatical category Noun (Waxman & Markow, 1995). Moreover, 1-year-olds understand new multi-word utterances before most syntactic categories are fully formed (Harris, 1982; Hirsh-Pasek & Golinkoff, 1999; Huttenlocher, 1974; Sachs & Truswell, 1978; Slobin, 1999). This understanding could not be based on syntax-driven composition, because that requires precisely the grammatical categories (and the constraints on the arrangement of such categories in phrases and sentences) that 1-year-olds lack, or are only starting to acquire. Early asyntactic comprehension processes are likely supported by early-developing temporal lobe structures, enabling direct mappings of sound to meaning (Hickok & Poeppel, 2004, 2007) and increasingly, with the maturation and functional integration of LIFG within the speech and language network, binding of information across modalities or over time. Below, we review ERP studies on semantic processing in infants and toddlers, with a focus on N400 research, with the aim of clarifying the nature of early asyntactic comprehension processes.

Development of the N400 component and effect

M/EEG experiments have yielded new insights into semantic processing in infants and toddlers. This line of work has produced some evidence for the availability of semantic processes prior to the developmental emergence of syntax. Many of these experiments use ERPs, such as the N400, as direct, implicit measures of semantic processing: that is the focus of the present review. As suggested above, the N400 is likely to reflect a cycle of cortical processing, beginning with activity in pMSTG (~250 ms) marking the access of lexical meanings, followed by engagement of the middle and anterior portions of the (L)IFG (~300 ms), and again by activation of pMSTG (~400 ms), under the top-down influence of IFG, resulting in binding of lexical information into a representation of the semantic context (Baggio & Hagoort, 2011; Hagoort et al., 2009). Baggio (2018) argues that the sequence of processes manifested by the N400 comprises predictive, top-down, and context-sensitive unification of semantic information within and across modalities. In adults and older children, top-down binding proceeds in parallel and in interaction with bottom-up, syntax-driven composition, which would not be reflected by the N400. On this view, top-down semantic binding and bottom-up syntax-driven composition are independent processes. As a consequence, in infants and toddlers, top-down contextual binding of semantic information may be available before grammatical processing skills are acquired. This theory predicts early instances of the N400 effect, before bottom-up syntax-driven composition is available, i.e., before infants can compose syntactic words into syntactic phrases and sentences, in the sense defined in Early language acquisition: Syntax and semantics.

Methodology

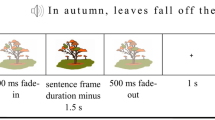

We carried out a literature review by searching for published peer-reviewed articles in several on-line databases (PubMed, Google Scholar, and Science Direct), using different combinations of search terms and strings to cover the following areas: N400; semantic priming; semantic processing; lexical processing; sentence processing. In all cases, the search strings also included the terms “infants,” “toddlers,” and “children.” Only articles published in English in peer-reviewed scientific journals, describing original research, were selected for this review. The articles had to report MEG/EEG studies in infants or toddlers (0–36 months of age), in which the N400 was the main dependent measure of interest, irrespective of whether N400 effects were actually reported: null results were also included. Given the variety of paradigms and tasks used in this field, we adopted a qualitative approach. We classified studies based on (a) the stimuli used (grouped by presentation modality and temporal structure of the trials) and (b) the age of children. Here, we first review studies in six broad thematic areas with relevance for theories of language and cognitive development. The selected studies are shown, according to the above two-way classification, in Table 1 and Fig. 1, and they are further discussed in the sections below. Constraints on a possible meta-analysis are considered in the section On meta-analyses of the N400 effect in infant studies.

Fig. 1 Timeline of reported M/EEG effects in infants and young children between 3 and 24 months of age (see Table 1 and main text for studies with older children)

The N400 and action sequences

Understanding a sequence of connected actions (e.g., grasping and holding a fork, then using it to collect food) and anticipating its outcome (bringing the fork to one’s mouth to eat) is among the first cognitive tasks, performed by infants, that require integration of semantic information (e.g., about the identity of the objects involved, such as the fork and food, and about the intentions and goals of the agent). Reid et al. (2009) presented, to 7- and 9-month-old infants, action sequences as unimodal (visual) series of pictures that had either an expected outcome (the fork and food reach the actor’s mouth) or an unexpected outcome (they reach the actor’s forehead). Infants at 9 months showed an N400 effect (for unexpected vs. expected outcomes) similar to the incongruent action sequence N400 effect observed in adults. An N400 effect was not seen in 7-month-olds (Reid et al., 2009) or in 5-month-olds (Michel, Kaduk, Ní Choisdealbha, & Reid, 2017). Instead, a positive slow wave, possibly reflecting attention processes, was observed in response to unexpected action outcomes in younger infants. Using the same paradigm, Kaduk et al. (2016) also found an N400 effect to unexpected outcomes in 9-month-old children. The amplitude of the N400 was positively correlated with the infants’ language comprehension scores at 9 months and with language production scores at 18 months, as measured using the Swedish Early Communicative Development Inventory (SECDI; Eriksson, Westerlund, & Berglund, 2002). These N400 results are fully consistent with eye-tracking data, showing that 6-month-olds anticipate the outcomes of some human goal-directed actions (e.g., feeding with a spoon), and that this capacity is further refined in the course of the first 2 years of life (Elsner, Bakker, Rohlfing, & Gredebäck, 2014; Kochukhova & Gredebäck, 2010).
These data demonstrate that infants can integrate the different elements that constitute a goal-directed action, and that initial elements in a sequence constrain processing of downstream elements, as is also the case in speech processing and in other instances of context-sensitive information integration.

The N400 and early word-learning skills

Experiments in infants suggest that semantic processing is initially not fully reliant on grammatical competence or on a large vocabulary. Yet, semantic processes are tightly integrated with lexical learning, as is shown by studies reporting rapid learning effects on the N400 (e.g., Borovsky, Kutas, & Elman, 2010, 2012; Mestres-Missé, Rodriguez-Fornells, & Münte, 2007). For example, in an ERP study, Friedrich and Friederici (2011) presented 6-month-old infants with object-word pairs that they either had or had not been exposed to in a crossmodal learning phase. They found modulations of the N400 component during training, following five to eight presentations of the stimuli, and a smaller N400-like negativity for incongruous (untrained) versus congruous (trained) pairings, 1 day after training. The presence of an N400 effect during training suggests that infants map labels to objects rapidly, as adults do, and that they retain these mappings to some extent. Less stable ERP signals 1 day after training indicate weaker consolidation, or weaker encoding, at 6 months: these capacities emerge later, during the second year of life (Borgström, Torkildsen, & Lindgren, 2015a; Friedrich & Friederici, 2008). An ERP experiment conducted on 3-month-olds showed no modulations of the N400 following training or testing with object pictures and spoken word pairs (Friedrich & Friederici, 2017). Although that study found a word recognition effect on the N200-500 component, it is safe to assume that infants, at this young age, cannot yet form robust associations of words and meanings. Junge, Cutler, and Hagoort (2012) trained 9-month-old children on pairings of common nouns (e.g., “cat”) with pictures of the objects they denote, using images of the same or of different exemplars over successive trials. They then presented infants with congruous (trained) or incongruous (untrained) pairings.
They found an N400 effect in the contrast between conditions, whose amplitude was positively correlated with the number of words and utterances understood by infants at 9 months, assessed using the Dutch version of the MacArthur-Bates Communicative Development Inventory (CDI).

Evidence of early word-learning skills has also been provided by behavioral and eye-tracking studies, with the earliest effects found in 6-month-old infants (Bergelson & Aslin, 2017; Bergelson & Swingley, 2012). Two visual-fixation habituation studies by Shi and Werker (2001, 2003) reported that 6-month-old children prefer lexical words over grammatical words. Taken together with ERP data, these results show that, from 6 months onward, infants can integrate semantic information (such as relations between words and possible referents) from different modalities, primarily visual and auditory, based on limited exposure to the relevant stimuli. Moreover, the effects of cross-modal learning of relations between words and referents are manifested on-line during word processing by modulations of the N400 amplitude.

Early N400 effects and subsequent language development

Several studies have investigated the relationships between early semantic processing and later expressive language development, using early instances of the N400 effect as predictors of subsequent language skills. Friedrich and Friederici (2006) retrospectively analyzed ERPs from 19-month-old children, divided into two groups after a language test at 30 months: an age-adequate expressive language ability group and a low ability group. Language development was assessed using the SETK-2 test for German, which includes receptive tests (comprehension of words and sentences) and expressive tests (elicited production of words and sentences). Children with subsequent age-adequate expressive language skills displayed an N400 effect when presented with incongruous words or legal pseudowords, following a picture of a single object (to which congruent words could refer), while children with lower expressive language skills did not show an N400 effect. In children with larger productive vocabularies, recent results show a linear reduction of the N400 amplitude during learning of new names (pseudowords) of given pictured objects, while children with smaller productive vocabularies display a decrease in the N400 amplitude, but only at the end of the training phase (Borgström et al., 2015a). Torkildsen et al. (2009) found that 20-month-olds with larger productive vocabularies can recognize new words after just three presentations of those words in picture contexts, whereas five presentations are needed to reveal recognition effects in low producers. High producers showed an N200-400 repetition effect for real and new words, whereas low producers displayed an effect for real words only. Borgström et al. (2015b) presented 20- and 24-month-olds with visual object shapes and object parts in an object-word mapping task.
In 20-month-olds, the N400 effect for words, primed by object shapes, was correlated with vocabulary size at that age, and was predictive of vocabulary size at 24 months. These ERP experiments suggest that the N400 is an early neural marker of developmental processes correlated with vocabulary expansion and the emergence and growth of expressive language skills.

The N400 and semantic categorization

An extended or expanding vocabulary could support the ability of children to organize lexical meanings and concepts by categories. Rämä, Sirri, and Serres (2013) presented 18- and 24-month-old children with semantically related word pairs (e.g., glass-bottle) and unrelated word pairs (e.g., jacket-bottle). In an auditory word-word priming task, an N400 effect of incongruity was found over right parietal-occipital electrode sites in 24-month-old toddlers and in 18-month-olds with high word production skills. These results indicate that 2-year-old children represent and track on-line semantic relations between words, a process that also underlies adult language comprehension (Baggio, 2018). The relevant relations here are not (strictly) semantic associations, but involve categorial relations (e.g., glass and bottle belong to the category of liquid containers). In adults, tracking of multiple types of semantic relations between words in sentences or discourse, in addition to associative and categorial relations, is manifested by the N400 effect – e.g., semantic relations that depend on logical form, argument structure, event structure etc. (see section Antecedent conditions of the N400 above; Kutas & Federmeier, 2011; Baggio, 2018).

Research has provided some evidence of semantic categorization in the second year of life (Friedrich & Friederici, 2005a, 2005b, 2005c, 2010; Torkildsen et al., 2006; Rämä et al., 2013), despite the fact that children’s system for categorizing information is still developing under the influence of many factors, e.g., vocabulary size (Borgström et al., 2015a, 2015b; Friedrich & Friederici, 2010; Rämä et al., 2013; Torkildsen et al., 2008), brain maturation (Kuhl & Rivera-Gaxiola, 2008), and language experience (i.e., in bilinguals vs. monolinguals). A study by Torkildsen et al. (2006) reports incongruity effects in ERPs in children younger than 2 years. This study shows that 20-month-old toddlers differentiate between congruent stimuli versus within-category violations (e.g., a picture of a dog followed by a meowing sound). Furthermore, the results indicate that children at this age have a “semantically graded” lexicon, in which categorically related concepts (e.g., dog/cat) are better connected together than unrelated items (dog/car) (Torkildsen et al., 2006). The capacity to track or bind information based on semantic relations seems relatively advanced by age 24 months, when the N400 in toddlers is, in some respects, quite similar to that of adults (Torkildsen, Syversen, Simonsen, Moen, & Lindgren, 2007). One recent study indicates that, already at 18 months of age, infants are sensitive to semantic relations between words (Sirri & Rämä, 2015). An ERP study by Friedrich and Friederici (2005a) showed that 19- and 24-month-old children are able to integrate semantic information in subject-verb-object sentences, and they recognize semantic violations at a noun with respect to the preceding verb. Their study suggests that, by 2 years of age, children represent aspects of the semantics of verbs, including some compositional restrictions they entail (for an experiment with 30-month-olds, see Silva-Pereyra et al., 2005a). 
Research using the intermodal preferential looking paradigm, in which infants look at named actions, shows that 15-month-olds do not yet understand verbs; 18-month-olds look at both typical and atypical targets, as if “they accepted all verb-argument mappings, independent of their probability in the real world” (Meints, Plunkett, & Harris, 2008, p. 453); 24-month-olds show a preference for typical targets; and 3-year-olds (much like adults) also accept atypical targets. This study points to 18 months of age as an important stage in the development of compositional semantics, as Meints et al. (2008) also note: “this stage could be seen as a more general, precursory stage in the development of verb knowledge in which the argument slot can be filled with any potential argument, be it a likely patient or not”. An intermodal preferential looking experiment by Arias-Trejo and Plunkett (2009) similarly shows that sensitivity to thematic relations between lexical items emerges around age 24 months. In summary, ERP measures show effects of semantic (e.g., categorial) relations already at 18 months, whereas behavioral studies point to the emergence of sensitivity to thematic relations around 24 months of age.

The N400 and semantic priming

Priming is defined as a change in ability to recall a target item as a result of a stimulus (“prime”) previously presented (Schacter & Buckner, 1998; Tulving & Schacter, 1990). The early priming paradigms used word-stem- or word-fragment-completion tasks. In primed word-stem-completion studies, participants are given a list of words to read. After several minutes to 2 hours, they are asked to complete the stem of the words as quickly as possible (e.g., “ele______” completed by “phant”). Typically, participants would generate words that had been read or heard before, implying a priming effect of active word memory traces on the completions. Semantic priming more specifically refers to faster responses to the target item (e.g., the picture of a roof) when it is preceded by a semantically related prime (e.g., a house) rather than an unrelated prime. The context for semantic priming may be multimodal in nature, with prime and target items being delivered to different sensory modalities (e.g., vision vs. hearing). The ability of infants to process (serially presented) inputs across sensory modalities is well documented. Before children can relate or integrate the meaning of words (when presented in close succession, as in lexical semantic priming), they are sensitive to the effects of affective primes, such as maternal language (Moon, Cooper, & Fifer, 1993), or facial displays of emotions, or some other bodily expressions (Grossmann, Striano, & Friederici, 2006). Rajhans, Jessen, Missana, and Grossmann (2016) presented 8-month-olds with a bodily expression that was either happy or fearful, followed by a facial expression which was congruent (i.e., happy bodily expression + smiling face) or incongruent (fearful bodily expression + smiling face). Congruent stimuli were associated with modulations of a negative-going ERP component in the ~400- to 600-ms time window relative to the onset of face stimuli. 
Semantic priming experiments have reported modulations of the N400, specifically, at 19 months (Friedrich & Friederici, 2005b), 14 months (Friedrich & Friederici, 2005c), or 12 months of age, depending on the child’s expressive language skills (Friedrich & Friederici, 2010): in these studies, auditory words were used as stimuli, typically following a picture (Table 1). However, N400 effects have also been observed in infants before the age of one, using non-verbal stimuli. Asano et al. (2015) presented visual stimuli (e.g., a round shape) to 11-month-old infants, followed by a new auditory word that either matched the shape sound-symbolically (e.g., “moma”) or not (e.g., “kipi”). Matching words evoked stronger γ-band responses shortly after word onset. An N400 was elicited by mismatching words, suggestive of semantic activation. Priming or preactivation between stimuli, whether these are temporally overlapping or not, and whether they are delivered in the same or in different modalities, therefore modulates the N400 effect already in the first year of life (Table 1, Fig. 1). There is evidence from behavioral studies that infants can relate or integrate lexical meanings, but this evidence is reported at later ages compared to related ERP experiments. For example, Arias-Trejo and Plunkett (2009) found that 21-month-olds, but not 18-month-olds, were sensitive to lexical-semantic priming. Similarly, Styles and Plunkett (2009) reported that 24-month-olds, but not 18-month-olds, looked more at target images when presented with related versus unrelated word pairs.

The N400, semantics, and social signaling

Complex meanings may be primarily conveyed by speech, yet communication typically occurs through a combination of signaling methods, including symbolic (e.g., linguistic expressions), indexical (pointing), and iconic methods (e.g., manual or facial gestures, intonation etc.; Clark, 1996). We may then ask how the child’s capacity to comprehend (linguistic) signals in a social setting develops, and how it interacts with the growth of the semantic system. Grossmann et al. (2006) recorded ERPs while 7-month-old infants processed congruent versus incongruent facial expressions and voice prosody information. They found an N400 incongruency effect, suggesting that the capacity to integrate the different sources of information that constitute social communicative signals emerges early on in infancy. Further, 8-month-old infants can distinguish among different facial emotions and bodily expressions, indicating that they are sensitive and responsive to the emotional context provided by the overt behaviors of others (Rajhans et al., 2016). Parise and Csibra (2012) showed that 9-month-olds are sensitive to the identity of the source of linguistic signals: an N400 effect was observed when the referent of a spoken word did not match the object that appeared from behind an occluder. The effect was larger if the word was spoken by the child’s mother than by the experimenter.

Social interaction is crucial also when infants are learning new lexical semantic information. Hirotani, Stets, Striano, and Friederici (2009) investigated learning of novel associations between unfamiliar objects and words by 18- to 21-month-old children. Presentations of words and objects that had not been associated during the training phase resulted in a stronger N400-like response between 200 and 600 ms from word onset, independent of the social condition of learning: with joint attention (where the experimenter looked at the infant, established eye contact, and accompanied speech production with positive facial and vocal expressions), or without joint attention (no eye contact and a neutral tone of voice). However, only in the joint attention condition did the incongruent pairs also elicit a later (~800–1,200 ms) and more widely distributed negative-going effect. This finding is compatible with studies reporting modulations of the N400 as a result of training or exposure (see above), but it also suggests that novel information acquired in different (social) conditions is either stored, retrieved, or used differently by children. Research has also indicated that even young infants expect that signals convey meaning and that specific types of signals from adults are evidence that they are being addressed or invited into communication (for a theory and discussion, see Csibra & Gergely, 2009, 2011). Other experiments show that children can track the reliability and the familiarity of sources of information about language and the world (e.g., see Tummeltshammer, Wu, Sobel, & Kirkham, 2014; Zmyj, Buttelmann, Carpenter, & Daum, 2010). Increased θ-band activity has been found when 11-month-old infants can anticipate that lexical or semantic information (e.g., the name of a new object) will be provided, especially if the source is a speaker of the infant’s native language (Begus, Gliga, & Southgate, 2016). 
These results show that social interaction provides a gating mechanism that helps the infant focus, in the process of learning words and meanings, on specific kinds of communicative or speech signals, or on signals that display certain preferred characteristics (Kuhl, 2007; Vouloumanos & Werker, 2004). Newer accounts of early word learning are exploring the possibility that communication is essential for language acquisition, not only because it provides a setting in which learning unfolds, but also because it defines the computational problem (coordination and information sharing) that the child gradually solves by acquiring a language (Yurovsky, 2017).

On meta-analyses of the N400 effect in infant studies

We close this section with a technical note. We evaluated the possibility of carrying out a meta-analysis on data from the studies selected for the present review. Meta-analytic techniques have gained traction recently in cognitive science as means of summarizing large and complex bodies of knowledge in a principled manner. Among these methods, a prominent place is occupied by analyses aimed at determining the presence and the magnitude of potential publication bias in a particular literature (Rothstein, Sutton, & Borenstein, 2006; Sutton, Duval, Tweedie, Abrams, & Jones, 2000).
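To make concrete what a meta-analytic summary involves, the following minimal sketch pools standardized effect sizes under a random-effects model, using the DerSimonian-Laird moment estimator of between-study variance (τ²). The choice of estimator, the function name `random_effects_pool`, and the input values are illustrative assumptions: they are not taken from any study reviewed here, and this is not the procedure used by the authors cited in this section.

```python
import math
from typing import List, Tuple


def random_effects_pool(effects: List[float],
                        variances: List[float]) -> Tuple[float, float, float]:
    """Pool effect sizes with the DerSimonian-Laird estimator.

    effects:   per-study effect sizes (e.g., standardized mean differences)
    variances: per-study sampling variances (must be positive)
    Returns (pooled_effect, standard_error, tau_squared).
    """
    k = len(effects)
    if k != len(variances) or k < 2:
        raise ValueError("need at least two studies with matching variances")
    if any(v <= 0 for v in variances):
        raise ValueError("sampling variances must be positive")

    # Fixed-effect (inverse-variance) weights and pooled estimate.
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w

    # Cochran's Q measures dispersion of effects around the fixed estimate.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w

    # Between-study variance, truncated at zero.
    tau2 = max(0.0, (q - (k - 1)) / c)

    # Random-effects weights add tau2 to each study's sampling variance.
    w_star = [1.0 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    se = math.sqrt(1.0 / sum(w_star))
    return pooled, se, tau2


# Hypothetical input: two equally precise studies with very different effects.
pooled, se, tau2 = random_effects_pool([0.0, 1.0], [0.01, 0.01])
print(f"pooled d = {pooled:.2f}, SE = {se:.2f}, tau = {math.sqrt(tau2):.2f}")
```

When study effects diverge more than their sampling variances can explain, τ² grows and the pooled standard error widens, which is one reason heterogeneous literatures yield imprecise summaries.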

We set out to explore the possibility of performing a meta-analytic assessment of the relevant N400 literature. It should be noted that the nature of the N400 effect, as an object of scientific research, challenges the assumption of conceptual comparability underlying meta-analyses. As discussed above, the N400 component does not reflect a single cognitive process, but likely a set or sequence of such processes, which encompass lexical and semantic activation and forms of contextual integration or binding (see section Functional accounts of the N400). Moreover, researchers have employed a number of different heuristics to identify N400 components or effects, varying in the target time interval or channel localization. This is especially true in studies with infants and children, where ERP components can vary in onset, latency, duration, and topographical distribution across participants, age groups, and experimental conditions. The challenge for a meta-analysis then is to use a set of inclusion criteria that maximizes the comparability of the reported N400 effects, by choosing studies that use similar experimental stimuli and tasks. We considered all and only M/EEG studies where the N400 effect or its neuromagnetic counterpart:

i. was elicited by incongruent versus congruent stimuli, consisting of word-picture pairs presented in the auditory and visual modalities, respectively;

ii. occurred within the 200- to 1,200-ms time interval from stimulus onset;

iii. was found in non-adult participants between 9 and 36 months of age.

These criteria led to the identification of eight published studies (Asano et al., 2015; Borgström et al., 2015a; Borgström et al., 2015b; Friedrich & Friederici, 2004; Hirotani et al., 2009; Junge et al., 2012; Torkildsen et al., 2006; Travis et al., 2011) that together include over 250 children. The degree of detail with which statistical analyses were reported is uneven across studies. Non-significant results, if reported, were usually not accompanied by statistics. This would render meta-analytic estimates of the true effect substantially biased: at best, they may be understood as upper-bound estimates of the true effects, on the assumption that no publication bias has occurred. The experiments in these articles, moreover, vary across a number of important dimensions, such as the placement, configuration, and number of recording sites, the time window used for the statistical analysis, and other factors related to experimental setups (see Coll, 2018, for similar considerations relating to ERP meta-analyses in a different literature). A recent simulation-based study of the different techniques available for estimating publication bias identified effect heterogeneity as one main factor hindering the reliability of such methods (Renkewitz & Keiner, 2018). Assuming a heterogeneity of τ = 0.3 (which has been argued to be a realistic estimate of heterogeneity in meta-analyses in psychology: Stanley, Carter, & Doucouliagos, 2018; van Erp, Verhagen, Grasman, & Wagenmakers, 2017), in a set of ten experimental studies with an underlying real effect between d = 0 and d = 0.5, the majority of detection techniques display a power below 0.4 for diverse publication bias scenarios (Renkewitz & Keiner, 2018), which is below the conventional value of 0.8 adopted in psychological research. Therefore, the small sample sizes (participant N) in the studies selected here, and the small number of studies included in the selection, coupled with the methodological issues just discussed, suggest that the field is not yet ripe for valid application of meta-analytic methods.
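The concern that selective reporting turns pooled estimates into upper bounds can be illustrated with a minimal simulation sketch. It is not the procedure used by Renkewitz and Keiner (2018), and it does not use data from the studies listed above. Only the heterogeneity value τ = 0.3 is taken from the text; the mean true effect (0.25, chosen within the d = 0 to 0.5 range), the sample size per study, the normal approximation to the standard error of d, and the rule that only significant positive results are "published" are all assumptions made for illustration.

```python
import math
import random
import statistics


def simulate_studies(k, mu, tau, n_per_study, rng):
    """Draw k observed effect sizes under a simple random-effects model."""
    # Rough normal approximation to the standard error of d (an assumption).
    se = math.sqrt(2.0 / n_per_study)
    observed = []
    for _ in range(k):
        theta = rng.gauss(mu, tau)             # study-specific true effect
        observed.append(rng.gauss(theta, se))  # add sampling error
    return observed, se


def published_only(effects, se, z_crit=1.96):
    """Hypothetical selection rule: keep only significant positive effects."""
    return [d for d in effects if d / se > z_crit]


rng = random.Random(2018)  # fixed seed so the sketch is reproducible
effects, se = simulate_studies(k=5000, mu=0.25, tau=0.3, n_per_study=30, rng=rng)
published = published_only(effects, se)

mean_all = statistics.mean(effects)
mean_published = statistics.mean(published)

print(f"studies simulated: {len(effects)}, 'published': {len(published)}")
print(f"mean effect, all studies:       {mean_all:.2f}")
print(f"mean effect, 'published' only:  {mean_published:.2f}")
```

Because the selection rule keeps only effects above a fixed threshold, the mean of the "published" subset necessarily exceeds the mean of all simulated studies. How large the gap is depends entirely on the assumed parameters, so the printed values should not be read as estimates for the infant N400 literature.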

Meaning before grammar

The studies reviewed here point to the early developmental emergence of a number of cognitive operations, revealed by the N400 component and effect, supporting forms of semantic processing in infancy and early childhood. Some of these operations emerge already during the first year of life, and there is evidence that, by the end of the second year, toddlers can relate and integrate aspects of semantic information, delivered either simultaneously or sequentially from multiple sources and modalities, such as speech or other stimulus types. It is premature to draw any definite conclusions from these data. Specifically, it would be untimely to try to adjudicate between H1 and H2 on that basis alone. However, the ERP experiments reviewed here, along with the behavioral studies discussed in the section Early language acquisition: Syntax and semantics, do shift the evidence base slightly in favor of H2: N400 effects show that non-syntactic operations are available to the child early on in development, and are used in processing semantic information before the resources of grammar are fully available, and before syntax can fully constrain meaning composition. A dedicated research program is needed to assess more thoroughly the plausibility of H2 versus H1 and other hypotheses, using longitudinal designs and dependent measures beyond M/EEG, eye movements, and overt behavior. In addition, it is important to show exactly how the processes identified here – forms of context-driven relational and integrative semantic processing – can actually support speech comprehension in early childhood, using both experimental and computational methods. Below we synthesize the results of the N400 experiments reviewed here, also to clarify the current empirical basis on which such a research program could be developed.

Early semantic processing: Towards a developmental timeline

ERP studies in infants and toddlers may be classified along two dimensions (Fig. 1): (a) the type of stimuli used, depending on sensory modality and temporal structure of trials, and (b) the mean age of the group of children in which the N400 effect was (not) observed. The great diversity of experimental paradigms and stimulus types used, and the lack of longitudinal studies, prevent us from making any finer-grained distinctions in the present classification. Moreover, direct comparisons with adult N400 effects may not always be possible, due to the lack of data from adult samples in the vast majority of ERP studies reviewed here. However, the approach adopted here is sufficient to draw some preliminary conclusions.

Children are sensitive to semantic relations between overlapping or coincident multimodal stimuli already during the first year of life (Fig. 1, Table 1). Infants may be tested reliably on non-verbal semantic tasks very early on, with the earliest signs of an N400 effect occurring in the first months of life. This is consistent with the idea that infants rely on non-verbal, specifically visual, communication and interaction channels early on (e.g., facial expression, voice prosody, and gestures), and increasingly on verbal communication as language develops (Sheehan, Namy, & Mills, 2007). Further, infants display associative learning between stimuli (e.g., a pseudoword and an object) from 6 months of age, or possibly earlier (Friedrich & Friederici, 2011, 2017; Grossman et al., 2006). Multimodal processing of overlapping or coincident stimuli is a commonly used paradigm to test semantic processing in infancy. This paradigm is sufficient to reliably elicit N400 effects in young infants (9 months or younger; Friedrich, Wilhelm, Born, & Friederici, 2015; Junge et al., 2012). As suggested by the spread of these studies across the age axis (Fig. 1, overlapping or coincident multimodal stimuli; in blue or green), this type of paradigm does not seem to be sensitive enough to reveal age differences in the development of semantic processing skills.

ERP experiments using sequentially presented unimodal visual stimuli (Fig. 1, red) show no evidence of N400 effects at 5 or 7 months of age, and the first effects are seen at 9 months. N400 effects to sequential multimodal audio-visual stimuli (Fig. 1, orange) also emerge at 9 months, whereas N400 effects to unimodal stimuli (auditory words; Fig. 1, light blue) are first reported at 18 months of age. To our knowledge, there are no reports of null effects (e.g., absent N400 effects) for sequential unimodal auditory (word) stimuli before age 18 months, or for sequential multimodal stimuli (audio-visual) before age 9 months, in contrast with reported null effects for unimodal visual stimuli before 9 months. Whether this is due to a lack of attempted experiments or rather to a file drawer problem (i.e., to unpublished null results), it invites some caution in interpreting any positive results in the context of the construction of a developmental timeline: these findings suggest that the capacity to relate or integrate sequentially presented semantic information across the auditory and visual modalities, and within the auditory modality (words), emerges at the latest at 9 and 18 months, respectively.

There is evidence of the occurrence of N400 effects in sentence contexts only at later ages, from 19 months on, and later at 24 and 30 months (see above; see Fig. 1, purple). Lexical semantic processing of auditory words in a sentence context is similar in some respects to processing spoken word sequences in priming paradigms. In both cases, the early stimuli (e.g., the first words in a sentence or the prime) can preactivate lexical (semantic) representations of downstream stimuli, thus facilitating processing and reducing N400 amplitudes. For example, Sirri and Rämä (2015) and Friedrich and Friederici (2005a) found N400 effects with auditory word sequences and sentences at 18 and 19 months, respectively. Our remark above on lack of (reported) experiments on younger infants applies here, too. However, several studies point to age 18 months, at the latest, as the point in development at which children are able to relate or integrate semantic information within or across modalities, and over time, as required by natural speech and sentence processing.

In summary, N400 research in infants and toddlers provides evidence for three critical points in development: (i) the emergence of the capacity to relate or integrate semantic information from simultaneous stimuli across modalities, at 6 months of age at the latest; (ii) the emergence of the capacity to relate or integrate stimuli over time, within and across modalities, at 9 months of age at the latest; and (iii) the emergence of the capacity to relate or integrate auditory words in sequences and in sentences, at 18 months of age at the latest. The attested functional links between the N400 effect and relational semantic processing, particularly in older children and adults, allow us to propose that occurrences of the N400 in infants and toddlers are evidence that forms of relational semantic processing are operative in infancy and toddlerhood. This hypothesis is in accord with the neurodevelopmental results discussed in the section Maturation of fronto-temporal N400 generators, suggesting that, at 6 months of age, structures of the temporal lobes have reached a maturation stage sufficient to support integration of information across modalities, if stimuli are presented simultaneously or in close temporal succession. Integration and binding of information over time, within or across modalities, require instead support from PFC regions, which are increasingly engaged in this type of process starting from 6 months onward. These considerations, together with the ERP results reviewed here, suggest that, by 18 months of age at the latest, children are able to relate and integrate, within and across modalities, semantic information conveyed by temporally extended sequences of stimuli, crucially including auditory words in sentences. The implications of this provisional conclusion for language acquisition are discussed below.

ERPs and the development of morphological and syntactic processing

Our review so far has focused primarily on ERP studies of early semantic processing. It is useful to compare those findings with ERP research on early grammatical processes as well as with knowledge of early language acquisition obtained from behavioral and observational studies (see Early language acquisition: Syntax and semantics). First, it should be noted that no experiments have been carried out on infants or toddlers to directly address syntactic or semantic composition specifically. As is the case for the N400 studies above, therefore, ERP studies on syntax and morphology in young children may only speak indirectly to the issue of the neural precursors of meaning composition. Secondly, known ERP components are unlikely to tell the whole story about semantic and syntactic processing in the brain: for example, MEG studies have revealed activity in the anterior temporal lobe, related to conceptual processing (including forms of semantic combination), around 200–250 ms from word onset, thus before the N400’s peak (Bemis & Pylkkänen, 2011); in addition, early ERP components, such as the auditory MMN, can also be modulated by grammatical errors (e.g., see Herrmann, Maess, Hasting, & Friederici, 2009; Pulvermüller, Shtyrov, Hasting, & Carlyon, 2008; Shtyrov, 2010). These signatures of fast syntactic processing have not yet been carefully investigated in infants and young children, and they seem difficult to reconcile with current knowledge of the phonological MMN: specifically, several of the “syntactic MMN” effects described in the literature (e.g., at 130–150 ms, in Pulvermüller et al., 2008, and 100–180 ms in Herrmann et al., 2009) paradoxically precede the MMN elicited during phonological analyses of speech (250–280 ms; see Kujala, Alho, Service, Ilmoniemi, & Connolly, 2004; Tavabi, Obleser, Dobel, & Pantev, 2007). Nonetheless, the existence of (yet undetected) early brain responses to syntactic or semantic features of linguistic stimuli is a logical possibility. Finally, the dichotomous view of the N400 as a “pure index” of lexical semantic processing and of the LAN and P600 as “pure indices” of syntactic processing is currently debated (see Bornkessel-Schlesewsky & Schlesewsky, 2019; Bornkessel-Schlesewsky, Staub, & Schlesewsky, 2016). As suggested above (Functional accounts of the N400), the N400 likely reflects a predictive, top-down, context-sensitive semantic mechanism, and the same, mutatis mutandis, could be argued about the role of the P600 in syntactic or grammatical processing (for a model in the framework of Analysis-by-Synthesis, see Baggio, 2018, and Michalon & Baggio, 2019). Therefore, the N400 and P600 should not be understood either as signatures of bottom-up semantic or syntactic composition, or as the only signatures of semantic and syntactic processing more generally.