Abstract

In our daily life, we often encounter situations in which different features of several multidimensional objects must be perceived simultaneously. There are two types of environments of this kind: environments with multidimensional objects that have unique feature associations, and environments with multidimensional objects that have mixed feature associations. Recently, we (Goldfarb & Treisman, 2013) described the association effect, suggesting that the latter type causes behavioral perception difficulties. In the present study, we investigated this effect further by examining whether the effect is determined via a feedforward visual path or via a high-order task demand component. To test this question, in Experiment 1 a set of multidimensional objects was presented while we manipulated the letter case of a target feature, thus creating objects that were visually different but semantically equivalent in terms of their identity. Similarly, in Experiment 2 artificial groups with different physical properties were created according to the task demands. The results indicated that the association effect is determined by the task demands, which create the group of reference. The importance of high-order task demand components in the association effect is further discussed, as well as the possible role of the neural synchrony of object files in explaining this effect.

In our daily life, we often encounter situations in which different features of several multidimensional items must be perceived simultaneously. When organizing a party, one might need to perceive whether the number of plates on the table matches the number of glasses. This type of comparison reflects a within-class or within-category comparison (i.e., a comparison within the “shape” feature). Another type of comparison may require a between-category comparison. For example, when organizing a Christmas party, the decorator can check whether there are more red items or more ornaments. In this example, one needs to compare a certain shape feature to a certain color feature. Although these types of comparisons are frequently performed in daily life, the factors that govern between-category comparisons are not entirely clear.

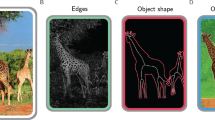

In Goldfarb and Treisman (2013), we demonstrated an important factor that governs the perception of between-category features: whether the target feature associations are unique or mixed. In that study, participants were asked to compare the number of “X”s with the number of red items (participants had to decide whether they saw more red objects, more “X”s, or the same numbers of “reds” and “X”s). The experiments had two important conditions: (a) Unique/consistent feature associations, in which the relevant shape (e.g., “X”) and the relevant color (e.g., red) were consistently paired; this condition was created when all of the red items were paired with all of the “X”s or when the “X”s were never red (e.g., in the Christmas party example above, when none of the ornaments are red; see Fig. 1a and b). (b) Mixed/inconsistent color associations, in which the relevant shape (e.g., “X”) and the relevant color (e.g., red) were paired inconsistently; this condition was created, for example, when some of the “X”s were red but others were not (in other words, in the Christmas party example, this condition fits a situation in which some ornaments are red but others are not; see Fig. 1c). The study revealed an association effect: response times (RTs) were slower in the mixed color association condition than in the unique color association condition.
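The distinction between the two conditions can be made concrete with a short sketch. The code below is only an illustration of the definitions given above, not the procedure used to build the original stimuli; the function name `association_type`, the `(shape, color)` tuple representation, and the example displays are all assumptions introduced here. A display counts as unique when the locations of the target shape and the locations of the target color either never coincide or coincide completely, and as mixed when they coincide only partially.

```python
from typing import List, Tuple

Item = Tuple[str, str]  # (shape, color), one tuple per display location


def association_type(items: List[Item],
                     target_shape: str = "X",
                     target_color: str = "red") -> str:
    """Label a display 'unique' or 'mixed' for the two target features.

    Hypothetical helper: it only encodes the verbal definitions in the text.
    """
    shape_locs = {i for i, (shape, _) in enumerate(items) if shape == target_shape}
    color_locs = {i for i, (_, color) in enumerate(items) if color == target_color}
    overlap = shape_locs & color_locs
    # Unique: the two targets never share a location, or always share one.
    if not overlap or shape_locs == color_locs:
        return "unique"
    # Mixed: only some of the target locations are shared.
    return "mixed"


# Made-up displays (not the published stimuli).
never_paired = [("X", "green"), ("O", "red"), ("X", "green"), ("T", "blue"), ("O", "red")]
always_paired = [("X", "red"), ("O", "green"), ("X", "red"), ("T", "blue"), ("O", "green")]
partly_paired = [("X", "green"), ("O", "blue"), ("X", "red"), ("T", "blue"), ("O", "red")]

print(association_type(never_paired))   # unique
print(association_type(always_paired))  # unique
print(association_type(partly_paired))  # mixed
```

Representing each feature by its set of locations mirrors the way the conditions are described in the text, where what matters is whether the two targets occupy the same positions.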

Fig. 1 Examples of the unique association condition and the mixed association condition. From “Counting Multidimensional Objects: Implications for the Neural-Synchrony Theory,” by L. Goldfarb and A. Treisman, 2013, Psychological Science, 24, pp. 266–271. Copyright 2013 by the Association for Psychological Science. Adapted with permission.

In Goldfarb and Treisman (2013), we suggested that one possible way of explaining this association effect is within the object file and the synchrony assumptions. The object file theory is one of the theories that attempts to explain how multifeature objects are perceived (Kahneman, Treisman, & Gibbs, 1992). The theory suggests that, because in the early perception stage the different features of objects are represented separately in the brain, a rebinding process must occur. According to the theory, this is an effortful process in which a temporary object file is created for each relevant feature and location of a single object. Specifically, the theory suggests that features activate the long-term stored knowledge of their categories or types, which are represented as nodes in a recognition network. After this activation, the relevant information is attached to each location in the temporary episodic representation (object files). Hommel and Colzato (2009) suggested that the reattachment of features to the corresponding temporary object file may be accomplished by temporal neural firing (Engel & Singer, 2001; von der Malsburg, 1999). Accordingly, features that share the same location have a common neural synchrony. For example, in order to represent a red circle among green triangles, the neurons that encode the color red fire at the same rate as the neurons that encode the circle shape.

This synchrony of object file theory actually predicts the behavioral association effect. Remember that the task is to compare the number of “X”s to the number of red objects. As can be seen in Fig. 1, in the unique color association, the relevant shape is consistently associated with the relevant color. In such a condition, the firing rate associated with the task-relevant shape, “X,” can be synchronized with the relevant locations (e.g., Locations 1 and 3). In the example shown in Fig. 1a, the “X” shape and Locations 1 and 3 share a common synchrony that we can label SCa (“Synchrony Correlation a”). In addition, firing associated with the task-relevant color, red, can be synchronized with other locations (e.g., Locations 2 and 5; i.e., they share a common synchrony that we can label SCb). Figure 1b presents another case in which a consistent or unique association is created. Here, all “X”s (the shape target) are printed in red (the color target). This means that the two target features always share the same location, allowing both red objects and “X”s to be synchronized. On the other hand, the mixed color–shape association in Fig. 1c is theoretically hypothesized as one that cannot be synchronized at the same time. In this condition, the features characterizing different elements occur independently of each other. As can be seen in Fig. 1c, in the mixed color condition, the firing rate associated with Locations 3 and 5 can be synchronized with the task-relevant color red. However, a problem arises when the task-relevant shape “X” also has to be synchronized, since the “X” shape is associated with Location 3 (but not with Location 5). Hence, it would not be possible to simultaneously synchronize the color red and the shape “X” via their locations (since some of the “X” locations have already been used by the synchrony value of the color red). In Goldfarb and Treisman (2013), we suggested that although these kinds of strings are impossible to synchronize simultaneously, they can be synchronized one after the other (i.e., first the color can be synchronized with its location, and then in another representation the shape can be synchronized with its location). In each new synchronization, new object files must be created, and this might result in the RT cost that is observed in this condition.

In the present study, we attempted to further investigate the association effect, by examining whether the effect is governed by a low-level perceptual aspect of the stimuli or by a high-order task demand component. This question is important for understanding how multidimensional objects are perceived and how between-object comparisons can be performed. In addition, the different outcomes have different implications for different feature integration theories, as will be discussed in the General Discussion section.

Two possibilities are that the association effect reported in Goldfarb and Treisman (2013) is restricted to a simple, low-level visual property of the display or is governed mainly by the feedforward sweep of information processing (Lamme & Roelfsema, 2000). It has been well documented that in a sequential display, binding between the physical features of a stimulus can occur automatically, and when objects are presented sequentially, repeated items that share only some of their physical features are perceived most slowly (e.g., if the observer perceives a red “X,” then in the following trial it will be hardest to perceive a red “O” or a green “X”; see, e.g., Hommel, 1998, 2004). Part of this effect has been explained by the fast, feedforward connection in memory (Lamme & Roelfsema, 2000) between the physical features of an object, and the consequent difficulty of rebinding partly overlapping features (e.g., Hommel & Colzato, 2009). Hence, if the current association effect involves serial processing, this could explain why it would be hard to perceive a mixed association on the physical level.

Similarly, the association effect could be governed by the physical aspect of the stimuli, if physically different items within the feature category cannot share the same synchrony value. It has been suggested that in the initial processing stage, each feature is automatically processed in a unique feature map, and that this is based on the stimuli’s low-level physical characteristics (e.g., Treisman, 2006; Treisman & Gelade, 1980; Treisman & Schmidt, 1982). For example, the different shapes might be stored in the shape map and different colors in the color map. In order to physically distinguish different instances within a single map (such as different shapes), the different instances cannot share the same synchrony value (i.e., if the physical shapes “X” and “O” or “A” and “a” need to be physically distinguished, they cannot have the same synchrony value). Hence, it can be assumed that the synchrony between maps is pre-formed, on the basis of the synchrony values on those early-stage “physical” maps (in which each item within a map must be physically distinguished). Consequently, in this case, the association effect between the different maps (i.e., color and shape) would be governed by the synchrony value that is given to the physical aspect of the stimuli within a map (i.e., the physical shapes “X” and “O” or “A” and “a” within the shape map).

An alternative possibility is that the association effect is governed by high-order task demand components. It has been well documented that top-down control and the specific task demands can alter and organize our perception (Corbetta, Miezin, Dobmeyer, Shulman, & Petersen, 1991; Treisman, 1969; Wolfe, 1994). In addition, according to the object file theory, a single physical feature can activate many kinds of knowledge in long-term memory, and an object file is created by information fed by long-term stored knowledge of the relevant categories. Hence, if the association effect is governed by high-order task demand components, then targets that are determined by the task in hand as belonging to the same group (regardless of their visual physical identity) act as a single group, and consequently can share the same color. For example, if we think of the association effect in terms of the synchrony of object files, then physically different items can share the same value if they are tagged as members of the same group, and consequently all those members can share the same synchrony value. Note that according to this possibility, the synchrony is performed on the identity tag that is determined by the task demand. In the present study, we aimed to investigate those options.

Experiment 1

In this experiment, participants were required to compare the number of “A/a”s (regardless of case) to the number of red objects. Two critical conditions were created: one with mixed physical associations but unique task demand associations, and one with the reverse associations (see Fig. 2). This setting allowed us to examine whether the association effect is governed by a high-order task demand component or whether it is restricted to low-level physical attributes of the stimuli. If the first possibility is correct, then it would be easier to represent the “task demand unique/physical mixed” condition (Fig. 2a) than the “task demand mixed/physical unique” condition (Fig. 2b). On the other hand, if the second possibility is correct, then an opposite pattern would emerge.

Fig. 2 Examples of the different stimuli in Exp. 1 and possible synchronization solutions for those stimuli. (2a) A stimulus whose associations are unique at the task demand level (all target letters are red). However, at the physical level the associations are mixed (the red item group contains two physically different letters). (2b) A stimulus whose associations are mixed at the task demand level (not all target letters are red). However, at the physical level the associations are unique (each physically different letter has a unique color). (2c & 2d) The regular conditions. In the stimulus in panel 2c, the associations are unique at both the physical and task demand levels (each physically different letter has a unique color, and the reds are never the target letters). In the stimulus in panel 2d, the associations are mixed at both the physical and task demand levels (one A is red and one A is green).
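The four stimulus types in Experiment 1 cross two levels of description, and a small sketch may help to keep them apart. The code below is an illustrative reading of the caption above, not the authors' stimulus-generation procedure: the function names, the tuple representation, and the example strings are assumptions. At the task demand level, “A” and “a” are treated as one target group and compared with red; at the physical level, a display is treated as unique only if every distinct letter shape has its own color and no color is shared by different letter shapes.

```python
from typing import Dict, FrozenSet, List, Set, Tuple

Item = Tuple[str, str]  # (letter, color), one tuple per string position


def task_level(items: List[Item],
               target_letters: FrozenSet[str] = frozenset({"A", "a"}),
               target_color: str = "red") -> str:
    """Association type when the target letters are treated as one group."""
    letter_locs = {i for i, (letter, _) in enumerate(items) if letter in target_letters}
    color_locs = {i for i, (_, color) in enumerate(items) if color == target_color}
    overlap = letter_locs & color_locs
    return "unique" if (not overlap or letter_locs == color_locs) else "mixed"


def physical_level(items: List[Item]) -> str:
    """Association type when each letter shape is treated separately.

    Assumption: 'unique' means a one-to-one mapping between shapes and colors.
    """
    colors_of: Dict[str, Set[str]] = {}
    letters_of: Dict[str, Set[str]] = {}
    for letter, color in items:
        colors_of.setdefault(letter, set()).add(color)
        letters_of.setdefault(color, set()).add(letter)
    one_to_one = (all(len(c) == 1 for c in colors_of.values())
                  and all(len(l) == 1 for l in letters_of.values()))
    return "unique" if one_to_one else "mixed"


# Made-up strings loosely modeled on the four panels (not the published stimuli).
panel_2a = [("A", "red"), ("X", "green"), ("a", "red"), ("X", "green"), ("T", "blue")]
panel_2b = [("A", "red"), ("a", "green"), ("X", "blue"), ("A", "red"), ("X", "blue")]
panel_2c = [("A", "green"), ("X", "red"), ("a", "blue"), ("X", "red"), ("A", "green")]
panel_2d = [("A", "red"), ("X", "blue"), ("A", "green"), ("a", "green"), ("X", "red")]

for name, display in [("2a", panel_2a), ("2b", panel_2b), ("2c", panel_2c), ("2d", panel_2d)]:
    print(name, "task:", task_level(display), "physical:", physical_level(display))
```

Under these assumptions, the 2a-like string is unique at the task demand level but mixed at the physical level, and the 2b-like string shows the reverse pattern, which is the dissociation the experiment relies on.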

Method

Participants

A group of 12 undergraduate students from Haifa University, with normal or corrected-to-normal vision, participated in the experiment in partial fulfillment of course requirements.

Stimuli

The strings presented consisted of the target letters “A” (uppercase) and “a” (lowercase) and one or two of the distractor letters “X” and “T.” Each letter was colored either in the target color, red, or in one of the distractor colors, green and blue; a string contained one or two distractor colors. Each stimulus display consisted of a five-letter string. In each string, the target features (“A/a” and red) appeared two or three times, and one of the letter targets was “a” (lowercase). The size of each letter was approximately 1.2°, and the string appeared in the center of a white screen.

Similar to the method of Experiment 1 in Goldfarb and Treisman (2013), in the regular unique/constant color–shape association condition, the “A/a”s and the red objects never shared a common location (i.e., the “A/a”s were never printed in red). In the regular mixed color–shape association condition, when “more A/a” was the required response, one of the two “A”s was printed in red and the other was not. When “more red” or “same” was the required response, both target letters (“A/a”) and nontarget letters were printed in red. To create the physical mixed/semantically unique condition, both “A” and “a” were colored red (i.e., the red item group contained two physically different letters). To create the physical unique/semantically mixed condition, the letter “a” was colored in a “unique” color (not sharing visual features with the other letter shapes), and the capital A was colored in a different “unique” color. Within these constraints, the order of the letters and their color was randomized within each string in each display.

Procedure

The experiment was programmed in E-Prime 2.0. A Compaq computer with an Intel Core i7-2600 central processor was used to present the stimuli and collect the data. Stimuli were presented on a Samsung 22-in. monitor while participants sat about 60 cm from the screen. A keyboard on which the participants entered their responses was placed on a table next to the screen. Each participant was tested individually. Stickers with the labels “same,” “more A/a,” and “more red” were pasted on the keyboard keys “g,” “h,” and “j,” respectively. Participants were instructed to compare the number of “A/a”s to the number of red objects and told that the possible responses were “more red,” “more A/a,” or “same.” The participants were asked to respond as quickly as possible but to avoid mistakes. Each trial started with a white display appearing for 1,000 ms, followed by the letter string. The letter strings were chosen randomly for each participant. The items disappeared when the participants responded, and then the next trial began. In the case of an inaccurate response, the word “incorrect” appeared on the screen for 1,000 ms. The computer registered the participant’s responses as well as the RT, in milliseconds, from the string onset to the participant’s response. Before the beginning of the experimental block, participants were given 12 practice trials, regardless of how many errors they made, and then they performed a block of 80 experimental trials.
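The response rule described above is simple enough to state as a short sketch. The experiment itself was run in E-Prime, so the Python below is not the original script; it only illustrates how the correct response for a string could be derived and mapped onto the three labeled keys. The names `correct_response` and `RESPONSE_KEYS` and the example strings are assumptions made for this illustration.

```python
from typing import FrozenSet, List, Tuple

Item = Tuple[str, str]  # (letter, color), one tuple per string position

# Key assignment as described in the procedure.
RESPONSE_KEYS = {"same": "g", "more A/a": "h", "more red": "j"}


def correct_response(items: List[Item],
                     target_letters: FrozenSet[str] = frozenset({"A", "a"}),
                     target_color: str = "red") -> str:
    """Compare the count of target letters with the count of red items."""
    n_letters = sum(1 for letter, _ in items if letter in target_letters)
    n_red = sum(1 for _, color in items if color == target_color)
    if n_red > n_letters:
        return "more red"
    if n_letters > n_red:
        return "more A/a"
    return "same"


# Made-up strings (not the published stimuli).
string_1 = [("A", "red"), ("a", "green"), ("X", "blue"), ("A", "red"), ("X", "blue")]
string_2 = [("A", "green"), ("X", "red"), ("a", "blue"), ("X", "red"), ("T", "red")]
string_3 = [("A", "red"), ("X", "green"), ("a", "red"), ("X", "green"), ("T", "blue")]

for s in (string_1, string_2, string_3):
    answer = correct_response(s)
    print(answer, "->", RESPONSE_KEYS[answer])
```

Note that the count is taken over letters regardless of case, which is what makes “A” and “a” a single group at the task demand level.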

Results and discussion

For the correct trials, the mean RT was calculated for each participant in each condition. A two-way analysis of variance was applied to these data with two within-participants factors: (a) Matching between the physical and task demand levels—matched (the regular condition) and not matched (the condition of interest); and (b) Association Type—unique and mixed associations. A significant main effect was found for association type, F(1, 11) = 20.85, MSE = 83,652, p < .05. Further analysis revealed a significant association effect (unique vs. mixed associations) in the regular condition (in which the physical and task demand levels were matched), t(11) = 2.17, p < .05. This replicated the previous findings of Goldfarb and Treisman (2013). In addition, in the condition of interest (in which the physical and task demand levels were mismatched), another significant association effect was found, t(11) = 2.86, p < .01. Most importantly, this effect suggested a significantly slower RT in the task demand mixed (but physically unique) association than in the task demand unique (but physically mixed) association condition. See Table 1 for the mean RTs in the different conditions. These results suggest that the association effect of multidimensional objects is not determined simply by the physical characteristics of the stimuli. Rather, the task demand determines what a group is, and only in that classification does the association effect take place.
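The first analysis step described above (a mean RT per participant and condition, computed over correct trials only) and a follow-up comparison between two conditions can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' analysis code: the source does not say which software was used, the follow-up comparison is assumed here to be a paired t test because both factors were manipulated within participants, and the trial records in the example are arbitrary numbers chosen for readability, not data from the study.

```python
import math
from collections import defaultdict
from statistics import mean, stdev
from typing import Dict, Iterable, Tuple

Trial = Tuple[str, str, float, bool]  # (participant, condition, rt_ms, correct)


def condition_means(trials: Iterable[Trial]) -> Dict[Tuple[str, str], float]:
    """Mean RT of correct trials for each (participant, condition) cell."""
    cells = defaultdict(list)
    for participant, condition, rt_ms, correct in trials:
        if correct:  # error trials are excluded from the RT means
            cells[(participant, condition)].append(rt_ms)
    return {cell: mean(rts) for cell, rts in cells.items()}


def paired_t(means: Dict[Tuple[str, str], float],
             cond_a: str, cond_b: str) -> Tuple[float, int]:
    """Paired t statistic and degrees of freedom for cond_a minus cond_b."""
    participants = sorted({p for p, _ in means})
    diffs = [means[(p, cond_a)] - means[(p, cond_b)] for p in participants]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


# Arbitrary illustrative records (NOT the study's data).
toy_trials = [
    ("p1", "mixed", 1100.0, True), ("p1", "mixed", 9999.0, False), ("p1", "unique", 1000.0, True),
    ("p2", "mixed", 1200.0, True), ("p2", "unique", 1000.0, True),
    ("p3", "mixed", 1300.0, True), ("p3", "unique", 1000.0, True),
]

toy_means = condition_means(toy_trials)
t_value, df = paired_t(toy_means, "mixed", "unique")
print(f"t({df}) = {t_value:.2f}")
```

With 12 participants, as in Experiment 1, such a comparison has 11 degrees of freedom, which matches the t(11) values reported above.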

Experiment 2

Experiment 1 revealed that the association effect for multidimensional objects is determined by higher characteristics of the stimuli that determine the group of reference. In Experiment 1, semantic identity determined the group of reference. Hence, Experiment 2 was designed to demonstrate that this effect is not restricted to a semantic association, but can be governed by any characteristic that determines a group of reference. Therefore, in the second experiment we verified that the same effect reported in Experiment 1 remained even when the group of reference was an artificial one (i.e., the letters “A” and “B” were considered the target group). Hence, in the present experiment participants were asked to compare the number of A/Bs to the number of red items.

Method

The method of Experiment 2 was similar to that of Experiment 1, with the following changes. The letter “B” replaced the letter “a” in all of the stimuli described in Experiment 1, so that participants were instructed to compare the number of “A/B”s to the number of red objects. Stickers with the labels “same,” “more A/B,” and “more red” were pasted on the keys “g,” “h,” and “j,” respectively. Overall, ten participants took part in this experiment.

Results and discussion

For the correct trials, the mean RT was calculated for each participant in each condition. As in Experiment 1, an analysis of variance was applied to these data with two within-participants factors: (a) Matching between the physical and task demand levels: matched (the regular condition) and not matched (the condition of interest); and (b) Association Type: unique associations and mixed associations. This analysis revealed a significant main effect for matching between the physical and task demand levels, F(1, 9) = 8.82, MSE = 176,594, p < .05. In addition, a significant main effect was found for association type (unique vs. mixed associations), F(1, 9) = 8.83, MSE = 317,032, p < .05. As in Experiment 1, a further analysis revealed a significant association effect (unique vs. mixed associations) in the regular condition, t(9) = 4.64, p < .01, and in the condition of interest, in which RTs in the semantically mixed (but physically unique) association condition were slower than in the semantically unique (but physically mixed) association condition, t(9) = 1.83, p < .05. (See Table 1 for the mean RTs in the different conditions.) The present results indicate once again that the association effect is governed by higher-order task demand components.

General discussion

The results of the experiments replicated the previous association effect reported by Goldfarb and Treisman (2013). Most importantly, the results also indicate a significantly slower RT for the physically unique/task-relevant mixed association condition than for the task-relevant unique/physically mixed association condition.

As we noted in the introduction, the association effect could theoretically be determined by the physical characteristics of the stimuli (see, e.g., Fig. 2b) or via the feedforward sweep of information processing (as in the sequential case; e.g., Colzato, Raffone, & Hommel, 2006; Hommel & Colzato, 2009). If the association effect were indeed governed by the lower-level physical aspects of the stimuli, then objects with different visual shapes that shared the same color would create the mixed association condition, regardless of the task demands. However, the present results support the notion that the association effect is governed by a high-order task demand component. Accordingly, top-down control—and, specifically, the task demand—alters the organization of the group of reference. In other words, the targets determined by the task in hand as belonging to the same group (regardless of their visual identity) acted as a single group, and consequently could share the same color.

How can this behavioral finding be incorporated within the existing perceptual theories? In Goldfarb and Treisman (2013), we suggested that the synchrony of object files can explain the association effect. We noted the assumptions of synchrony theory, postulating that the same spatial location cannot serve mixed targets. For example, in the “regular” mixed color–shape association, it is not possible to simultaneously synchronize two features (e.g., the color and the shape) via their locations, since some of the relevant locations of certain features (e.g., color) are already being used by the synchrony value of the other relevant feature (e.g., shape). The present results add to the previously discussed framework and suggest that if the synchrony is indeed responsible for the regular association effect, then the synchrony itself is not performed on the “basic” information provided by initial maps. In other words, the synchrony is conducted at a late perceptual stage in which the object file tags are already initiated (i.e., after the attention system has tagged each element as a target group, according to the task demands). Although object tags are clearly fed to some degree by the lower visual maps, top-down feeding based on the task must also have an important role, since a single physical shape can have many tags (e.g., the shape of a rose can be tagged as a “rose,” but also as a “flower”). Hence, the perceiver’s goal (to find roses or to find any kind of flower) must influence this tagging procedure.

It is important to note that the synchrony of object files is not the only possible explanation of how features integrate with one another. For example, it has been suggested that integrated objects could be represented in a topographical saliency map (e.g., Koch & Ullman, 1985). In these maps, the fewer features an object shares with other locations, the more salient the location of the object. However, on the basis of those assumptions, it is not clear why in the association effect only partial feature sharing would damage perception, whereas constant sharing or constant separation does not. In addition, it is not clear how the task demand affects this saliency and alters the saliency of physically unique associations so that they become harder to perceive (as in the present experiment). Another important feature perception theory is the Boolean map theory (e.g., Huang, Treisman, & Pashler, 2007). According to this theory, at an early perception stage, each instance of a feature is represented in a separate map, and these maps cannot be accessed simultaneously. According to this theory, multiple locations of identical features (i.e., many reds) are represented in a single feature map (i.e., the “red” map). These multiple identical features can be accessed simultaneously via each map. One possible theoretical option that can arise from this kind of representation is that when the number of a certain instance needs to be compared to the number of another, counting can be conducted in each map separately. This can be done independently of the existence of other locations filled with other features in the separate feature maps. Hence, it is not clear why a location that is shared by two targets represented in different maps would cause the observed effect and why the effect is not observed at the physical level (as is shown in the present study). 
However, although the effects described in this study are not directly predicted by these alternative models, it is possible that in the future, with additional assumptions, these models might be able to accommodate the present behavioral effects.

Finally, we would note that the present study describes behavioral effects in the context of the perception of complex multidimensional objects. Although the processes involved in feature integration have been extensively studied over the past years, only a few studies have directly addressed the unique perceptual problems involved in such complex perception. The lack of sufficient research in this field is in contrast to the complex natural scenes in our daily life. Specifically, natural scenes often contain repetitions of several dimensions or mixed associations, and comparisons between features are often needed. Hence, an important step in developing natural perceptual models will be to behaviorally identify the difficulties that these situations create. The present study is one step in this direction, and the more studies that are conducted in this field, the better we will be able to understand the human perceptual system.

References

Colzato, L. S., Raffone, A., & Hommel, B. (2006). What do we learn from binding features? Evidence for multilevel feature integration. Journal of Experimental Psychology: Human Perception and Performance, 32, 705–716. doi:10.1037/0096-1523.32.3.705

Corbetta, M., Miezin, F. M., Dobmeyer, S., Shulman, G. L., & Petersen, S. E. (1991). Selective and divided attention during visual discriminations of shape, color, and speed: Functional anatomy by positron emission tomography. Journal of Neuroscience, 11, 2383–2402.

Engel, A. K., & Singer, W. (2001). Temporal binding and the neural correlates of sensory awareness. Trends in Cognitive Sciences, 5, 16–25. doi:10.1016/S1364-6613(00)01568-0

Goldfarb, L., & Treisman, A. (2013). Counting multidimensional objects: Implications for the neural-synchrony theory. Psychological Science, 24, 266–271. doi:10.1177/0956797612459761

Hommel, B. (1998). Event files: Evidence for automatic integration of stimulus–response episodes. Visual Cognition, 5, 183–216. doi:10.1080/713756773

Hommel, B. (2004). Event files: Feature binding in and across perception and action. Trends in Cognitive Sciences, 8, 494–500. doi:10.1016/j.tics.2004.08.007

Hommel, B., & Colzato, L. S. (2009). When an object is more than a binding of its features: Evidence for two mechanisms of visual feature integration. Visual Cognition, 17, 120–140. doi:10.1080/13506280802349787

Huang, L., Treisman, A., & Pashler, H. (2007). Characterizing the limits of human visual awareness. Science, 317, 823–825. doi:10.1126/science.1143515

Kahneman, D., Treisman, A., & Gibbs, B. J. (1992). The reviewing of object files: Object-specific integration of information. Cognitive Psychology, 24, 175–219. doi:10.1016/0010-0285(92)90007-O

Koch, C., & Ullman, S. (1985). Shifts in selective visual attention: Towards the underlying neural circuitry. Human Neurobiology, 4, 219–227.

Lamme, V. A. F., & Roelfsema, P. R. (2000). The distinct modes of vision offered by feedforward and recurrent processing. Trends in Neurosciences, 23, 571–579. doi:10.1016/S0166-2236(00)01657-X

Treisman, A. M. (1969). Strategies and models of selective attention. Psychological Review, 76, 282–299. doi:10.1037/h0027242

Treisman, A. (2006). How the deployment of attention determines what we see. Visual Cognition, 14, 411–443. doi:10.1080/13506280500195250

Treisman, A. M., & Gelade, G. (1980). A feature-integration theory of attention. Cognitive Psychology, 12, 97–136. doi:10.1016/0010-0285(80)90005-5

Treisman, A., & Schmidt, H. (1982). Illusory conjunctions in the perception of objects. Cognitive Psychology, 14, 107–141. doi:10.1016/0010-0285(82)90006-8

von der Malsburg, C. (1999). The what and why of binding: The modeler’s perspective. Neuron, 24, 95–104, 111–125.

Wolfe, J. M. (1994). Guided Search 2.0: A revised model of visual search. Psychonomic Bulletin & Review, 1, 202–238. doi:10.3758/BF03200774

Cite this article

Goldfarb, L., Sabah, K. Multidimensional representation of objects—The influence of task demands. Psychon Bull Rev 23, 405–411 (2016). https://doi.org/10.3758/s13423-015-0894-4

DOI: https://doi.org/10.3758/s13423-015-0894-4