Abstract

The efficacy of working-memory training is a topic of considerable debate, with some studies showing transfer to measures such as fluid intelligence while others do not. We report the results of a study designed to examine two forms of working-memory training, one using a spatial n-back and the other a verbal complex span. Thirty-one undergraduates completed 4 weeks of n-back training and 32 completed 4 weeks of verbal complex span training. We also included two active control groups. One group trained on a non-adaptive version of n-back and the other trained on a real-time strategy video game. All participants completed pre- and post-training measures of a large battery of transfer tasks used to create composite measures of short-term and working memory in both verbal and visuo-spatial domains as well as verbal reasoning and fluid intelligence. We found clear evidence only for near transfer from the spatial n-back training to new forms of n-back, and this was the case for both adaptive and non-adaptive n-back.

Working memory is of great interest across psychology. Defined as the ability to simultaneously store and process information in the service of a goal (Baddeley, 1992; Miyake & Shah, 1999), working memory is frequently measured by performance on computerized tasks where one must remember a series of items while performing accurately on an interleaved secondary task such as reading a sentence or solving arithmetic problems (Daneman & Carpenter, 1980; Turner & Engle, 1989). What makes the construct so central is its ability to predict performance on a wide array of complex cognitive tasks such as reading and language comprehension (Daneman & Carpenter, 1980; Daneman & Merikle, 1996), reasoning (Kyllonen & Christal, 1990), and mathematical (LeFevre, DeStefano, Coleman, & Shanahan, 2005) performance as well as measures of fluid intelligence (Kane, Conway, Hambrick, & Engle, 2007). Additionally, poor working-memory performance is seen in disorders such as attention-deficit hyperactivity disorder (ADHD; Barkley, 1997), schizophrenia (Goldman-Rakic, 1994), and learning disabilities (Swanson, 2006). Therefore, a natural question is whether improvements in working-memory capacity will lead to improved performance on complex tasks.

The broader question of whether cognitive enhancement can be achieved through the training of basic cognitive tasks is an old one (Thorndike & Woodworth, 1901), but the 21st century has seen a growing number of studies suggesting that such training regimens can be effective, especially when working memory is the target. Klingberg, Forssberg, and Westerberg (2002) reported that the use of a computerized working-memory program in children with ADHD showed transferable improvements to working memory as well as a measure of fluid intelligence. One feature deemed critical for success was the adaptive nature of their program, i.e. the difficulty was constantly adjusted for each individual based on their current performance so they would always be challenged but not frustrated. Participants run on a non-adaptive version of the program did not show the same benefits (Klingberg, Forssberg, & Westerberg, 2002; Klingberg et al., 2005). More startling and more controversial was a report by Jaeggi, Buschkuehl, Jonides, and Perrig (2008) demonstrating the effects of working-memory training in college students. Here working memory was trained using an adaptive dual n-back paradigm and transfer was seen to measures of fluid intelligence thus raising the possibility that working-memory capacity could be increased in an already high functioning sample and show generalizable benefits. Chein and Morrison (2010) also reported transferable improvements following a different form of working-memory training based on complex span tasks similar to those commonly used to measure working-memory capacity. They reported transfer to both working and short-term memory as well as to the Stroop task and a reading comprehension measure. However, they did not find transfer of training to fluid intelligence.

These data were met with both excitement (Sternberg, 2008) and considerable skepticism. A meta-analysis of working-memory training studies found little support for transfer to other cognitive abilities (Melby-Lervåg & Hulme, 2013), while Shipstead, Redick, and Engle (2012) highlighted a number of methodological weaknesses with many of the initial studies including (1) inadequate measurement, i.e. using a single task to measure a construct such as fluid intelligence, (2) conflation of working memory with short-term memory, and (3) inadequate control groups. There have been a growing number of studies addressing these methodological concerns. However, the question of whether working-memory training is effective and the extent to which it shows transfer to measures of fluid intelligence and cognitive control is still unclear, with some studies reporting evidence of such transfer (Jaeggi, Buschkuehl, Shah, & Jonides, 2014; Jaušovec & Jaušovec, 2012; Rudebeck et al., 2012; Stephenson & Halpern, 2013) while others do not (Chooi & Thompson, 2012; Redick et al., 2013; Schwarb, Nail, & Schumacher, 2015; Sprenger et al., 2013; Thompson et al., 2013). Therefore, important questions that still have no clear answer are what is being trained in working-memory training and what are the mechanisms through which transfer, especially far transfer, can be achieved?

Jaeggi and colleagues have proposed that working-memory training may be viewed as analogous to basic physical conditioning where working memory plays a role similar to the cardiovascular system (Jaeggi et al., 2011). Improving cardiovascular fitness benefits performance on a wide array of sports. Therefore, working-memory training may be viewed as improving the efficiency of key cognitive processes such as attentional control or inhibitory processing that are involved across multiple areas of cognition. Von Bastian and Oberauer (2014) have proposed two routes working-memory improvements could take. The first is that training may improve the capacity of working memory such that after training individuals can keep a larger amount of information active. If working-memory training increases capacity, then wide transfer across different tasks would be predicted (von Bastian & Oberauer, 2014). However, theoretically, capacity-based changes should be domain specific, i.e. improvements in verbal capacity transfer to tasks using verbal working memory and visuo-spatial improvements transfer to visuo-spatial tasks. The second route is that training induces increased working-memory efficiency due to knowledge and skills learned during training. An example would be participants developing specific strategies such as chunking to improve on the training task. Transfer would then be more specific to tasks where participants could apply these strategies. However, a third possibility could simply be that some participants become more motivated or exert more effort on cognitive tasks after working-memory training, especially if they hold a strong belief in the efficacy of the training. Such a motivational shift might manifest itself in improved performance across transfer tasks when transfer of training would not be predicted by mechanistic or strategic changes with training.

Another important question is: are the mechanisms responsible for transfer of training necessarily the same in different working-memory training paradigms? Working memory is involved in many different tasks; therefore, it would be possible to develop different training regimes with one showing transfer due to changes in cognitive architectural parameters such as capacity while another leads to more skill-based improvements in working-memory efficacy such as active strategies developed by participants to maximize task performance (Kieras, Meyer, Mueller, & Seymour, 1999). To date, two tasks have been most frequently used for training working memory, the n-back (e.g., Jaeggi et al., 2008) and the complex span (e.g., Chein & Morrison, 2010). Briefly, in the n-back task, participants see or hear a series of stimuli and for each stimulus must judge whether it is the same stimulus seen n items back. In the dual n-back, participants perform a visual and auditory n-back simultaneously. In complex span tasks, participants must remember a series of stimuli (e.g., letters) with a processing task such as judging the veracity of a mathematical statement interleaved between each item. Both tasks are deemed working-memory tasks because they require the simultaneous processing and storage of information and both predict variance in fluid intelligence scores (Kane, Conway, Miura, & Colflesh, 2007). Both tasks would appear to require selective attention, attentional control (Engle, 2002), storage of items in primary memory and retrieval from secondary memory (Unsworth & Engle, 2007). However, a recent meta-analysis of studies measuring the relationship between the n-back and complex span found only a weak relationship between the two tasks and concluded they tap different components of working memory (Redick & Lindsey, 2013). Therefore, training-based improvements may rely more on processes specific to the particular training task.
For example, the n-back clearly involves a great deal of updating information while span tasks require shifting between two unrelated tasks. Both may require inhibiting no longer relevant items, but in the n-back this is required constantly within trials while for span tasks inhibition is needed between trials. Performance on a working-memory task may improve with training due to any mixture of reasons such as (1) increased capacity of short-term store/primary memory, (2) improved executive function which may involve better attentional focus or the suppression of irrelevant information or both, or (3) other task-specific processes such as updating or shifting.

A third important consideration for researchers attempting to demonstrate the efficacy of any form of training is the choice of control group. Since a pre- to post-training increase on a particular task could be due simply to practice effects, a comparison group is needed to demonstrate effects due to the training intervention. While some studies have employed a simple no-contact control group in which participants are simply tested on the transfer measures twice with no other interaction with experimenters, an active control group in which participants undergo some form of intervention is preferred. However, recently a number of concerns about active control groups have been raised including participant awareness that they are in the control group and the role of differential expectancies of both the participants in a particular intervention and the laboratory assistants testing them (Boot, Blakely, & Simons, 2011; Boot, Simons, Stothart, & Stutts, 2013). As Boot et al. (2011) have noted, placebo effects are a big concern for researchers studying cognitive training as participant expectancies and effort alone may improve performance.

Finally, a growing concern has been raised with the limitations of the standard analysis approach of null hypothesis significance testing (NHST) when evaluating the evidence of transfer of training (Dougherty, Hamovitz, & Tidwell, 2016; Sprenger et al., 2013). While a number of studies as noted above have reported no evidence for transfer effects from working-memory training, most have only reported their results using NHST, which does not allow an adequate assessment of the extent to which the data collected support the null hypothesis, i.e. that transfer was not present. However, the use of Bayesian analysis does allow for an estimate of the evidence in favor of the null hypothesis (Rouder, Morey, Speckman, & Province, 2012). Sprenger et al. (2013) included Bayesian analyses in their studies of working-memory training and reported evidence in favor of the null. Dougherty et al. (2016) conducted a meta-analysis of working-memory training studies using a Bayesian approach and found that studies using a passive control group were more likely to favor the alternative hypothesis that training was effective, while studies employing active control groups were more likely to support the null. Therefore, in our study, we report our results using both traditional NHST and Bayesian analyses.

In the current study, we took a novel approach to investigating the possible sources of transferable improvement resulting from training working memory by both comparing the effects of two different forms of working-memory training, n-back and complex span, on the same set of transfer measures as well as utilizing two different active control groups. Both n-back training and complex span training have been proposed as having domain general transfer effects upon working memory although typically the training protocols include both verbal and spatial variants of the training task. In the case of the n-back, single n-back training in which only spatial or only verbal stimuli are used has been reported as being as effective as the dual n-back (combining verbal and spatial) on measures of spatial reasoning (Jaeggi, Studer-Luethi, Buschkuehl, Su, Jonides, & Perrig, 2010). However, most studies of complex span training have incorporated both spatial and verbal variants into the training protocol, making it difficult to ascertain whether transfer depends on separate improvements in verbal and spatial short-term memory capacity or the possible executive components involved in performing a complex span task. Therefore, we chose to isolate each form of working-memory training to one domain in order to better compare whether any possible transfer effects were isolated to the domain trained or generalized across verbal and visuo-spatial domains.

In addition to comparing two different forms of working-memory training, we included two active control groups. The first has been used in prior studies of working-memory training in which participants practice a non-adaptive version of the n-back task (Klingberg et al., 2002, 2005). We also had this group complete fewer training sessions (12 compared to 20) so that this group lacked both the adaptive component and the number of training sessions initially reported as important for transfer to occur (Jaeggi et al., 2008). However, as noted above, this form of control group can be criticized for failing to control expectancy effects, as the less challenging and repetitive nature of the non-adaptive n-back task might be less motivating for participants and might lead some participants to become suspicious that they were in a control condition. Therefore, we also included a second active control group in which participants learned and practiced a cognitively demanding real-time strategy (RTS) video game, Starcraft. Real-time strategy games would appear to place a demand on working memory as players must maintain information over time in the service of complex goals. However, previous research has found no effects in younger college-aged adults of playing RTS games on working memory and fluid intelligence measures such as the 2-back task, Operation Span, and Raven’s Matrices for either expert video-gamers compared to non-gamers or novices who were trained on RTS games (Boot et al., 2008). Additionally, a recent meta-analysis of the effects of playing video games on cognition reported little to no improvement of executive function including measures of intelligence and working memory as a result of playing video games (Powers, Brooks, Aldrich, Palladino, & Alfieri, 2013).
Therefore, in this group we expected the participants to be more engaged and have greater expectations for transfer, but past research has not found evidence of transfer to many of the tasks used in our pre-test post-test battery.

We designed transfer to range from very near, i.e., the same task used in training with different content to far transfer such as reasoning. This was done with the goal of trying to isolate possible improvements in short-term memory, executive function, and working-memory capacity and their contributions to any observed transfer to measures of complex cognition such as reasoning, fluid intelligence, and mathematical performance. We collected multiple measures of each construct so that evidence of transfer would not rely upon a single task and when possible tried to choose tasks that had shown prior evidence of being sensitive to some form of training intervention. We included verbal and visuo-spatial variants of our short-term memory, working memory, and reasoning transfer measures. This allowed us to test the claim that the effects of working-memory training are domain general. In addition to reporting our results using the standard NHST, we also computed the Bayes Factor assessing the extent to which the evidence favored the alternative or the null hypothesis. Finally, we measured individual differences in personality as well as self-reported motivation, and beliefs in the efficacy of the training as these have been proposed as one source of variance in why some studies see transfer effects while others do not (Jaeggi et al., 2014). We tested whether these variables predicted either the amount of improvement on the training task or the degree of transfer observed.

Based on the hypothesized possible mechanisms of working-memory training described above, we developed the following set of predictions.

1) If a particular form of working-memory training has its effects primarily on short-term capacity, then transfer should be domain specific and include at least the measures of short-term memory and working memory within the domain (verbal or spatial) trained.

2) If a particular form of working-memory training improves processes specific to the structure of the training task, then we would predict task based transfer (e.g., specific to span tasks or to n-back tasks) across verbal and non-verbal domains.

3) If a form of training generally benefits executive processes such as attentional capacity or inhibitory function, then we predict wider transfer across working-memory tasks in both domains as well as improvements on our executive function transfer tasks.

4) If improvements over training are accomplished primarily through the development of specific strategies, then we predict a narrow pattern of transfer related to that strategy such as specific improvements on tasks using stimuli specific to a strategy (such as using chunking to remember letters or creating a shape to remember a series of locations).

Certainly we would add the caveat that these hypotheses are not meant to be exhaustive or even mutually exclusive. It may be that a particular working-memory training regimen affects both short-term storage and the ability to dual-task, and transfer is seen both to short-term memory in one domain and span tasks in both domains. However, given the general murkiness surrounding the possible mechanisms of transfer, the extent of transfer and the degree to which different working-memory training paradigms are equivalent, we viewed a comparison between the patterns of transfer related to each form of training as a necessary first step to understanding the nature of transfer within each paradigm.

Method

Participants

A total of 132 College of Idaho students were recruited by campus flyers advertising a “brain training” study. They were paid US$10 an hour with a US$50 completion bonus with an average total compensation of US$300. After agreeing to participate in the study, participants were initially randomly assigned to one of the four conditions. Participants who were assigned to the video game group were asked during pre-testing how often a week they played video games and if they had played real-time strategy (RTS) games before. If an individual indicated that he or she had played RTS games on a weekly basis, that participant was reassigned to one of the three other groups. This was done simply to ensure that we did not have participants who were already skilled players in our RTS group. Only four participants were reassigned based on these criteria (one to the spatial n-back, one to verbal complex span, and two to the non-adaptive n-back). In our final sample, 34 participants were assigned to the spatial n-back training with three participants dropping out. Thirty-five participants were assigned to the verbal complex span training with three dropouts, 33 were assigned to the video game training with seven dropouts, and 30 were assigned to the non-adaptive n-back group with three dropouts. We based our planned sample sizes on the medium effect size (Cohen’s d = .65) reported by Jaeggi et al. (2008) using a similar sample size. The demographics for the final sample are shown in Table 1.
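The group sizes reported above can be cross-checked with a few lines of arithmetic. The sketch below uses only the assignment and dropout counts stated in this paragraph; the dictionary names are illustrative and not part of the study materials.

```python
# Assignment and dropout counts as stated in the Participants paragraph.
assigned = {
    "spatial n-back": 34,
    "verbal complex span": 35,
    "RTS video game": 33,
    "non-adaptive n-back": 30,
}
dropouts = {
    "spatial n-back": 3,
    "verbal complex span": 3,
    "RTS video game": 7,
    "non-adaptive n-back": 3,
}

# Completers per group = assigned minus dropouts.
completed = {group: assigned[group] - dropouts[group] for group in assigned}

total_assigned = sum(assigned.values())
total_completed = sum(completed.values())

for group, n in completed.items():
    print(f"{group}: {n} completed of {assigned[group]} assigned")
print(f"total: {total_completed} completed of {total_assigned} recruited")
```

The assigned counts sum to the 132 recruited students, and the completer counts for the two training groups (31 and 32) agree with the figures given in the Abstract.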

Materials

Training

For each of our working-memory training regimens, we preserved each type of training in its original form both in terms of the length and the number of sessions rather than trying to alter one to fit the other. This resulted in a difference of approximately 10 min per session with the Verbal Span group having slightly longer sessions. Similarly for the real-time strategy game group we used a training procedure similar to that reported by Boot et al. (2008). In this we erred on the side of faithfully replicating the procedures that had or in the latter case had not resulted in transfer rather than altering these procedures to exactly match each other. For the non-adaptive n-back group, we chose to have participants complete fewer sessions than the working-memory training groups so that this group not only lacked an adaptive element of training, but also had less exposure to the task, i.e., a lower dosage of training.

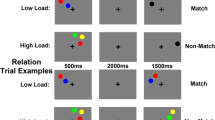

Adaptive spatial n-back

To train visuo-spatial working memory, we used the spatial n-back procedure described by Jaeggi et al. (2010). In this task, participants saw a series of blue squares appear in eight different possible locations based on a 3 × 3 grid excluding the center square. For each square, they were to determine whether the current location was the same as the location n-back by pressing the “y” key. If the location did not match the location n-back, no response was made. Each stimulus appeared for 500 ms with an inter-stimulus interval of 2,500 ms. If participants made fewer than three errors during a block, the n-back level was increased by one. If participants made more than five errors during a block, the level was decreased by one. Each training session consisted of 15 blocks with each block consisting of 20+n trials and lasted approximately 20 min. Participants were scheduled for five sessions each week for 4 weeks for a total of 20 sessions.
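The adaptive rule described above can be written as a short sketch. The function names are hypothetical, and two details are assumptions rather than statements from the text: the level is held constant when a block contains three to five errors, and the level never drops below 1.

```python
def next_n_level(current_n: int, errors: int, min_n: int = 1) -> int:
    """Return the n-back level for the next block.

    Fewer than three errors raises the level by one; more than five
    errors lowers it by one. Holding the level for 3-5 errors and the
    floor of min_n are assumptions, not stated in the text.
    """
    if errors < 3:
        return current_n + 1
    if errors > 5:
        return max(min_n, current_n - 1)
    return current_n


def trials_in_block(n: int) -> int:
    """Each block consists of 20 + n trials."""
    return 20 + n


def stimulus_time_seconds(n: int, blocks: int = 15,
                          stimulus_ms: int = 500, isi_ms: int = 2500) -> float:
    """Stimulus-plus-interval time for a session held at a constant level n."""
    return blocks * trials_in_block(n) * (stimulus_ms + isi_ms) / 1000


# Example: error counts across five blocks, starting at 2-back.
level = 2
for errors in [1, 2, 4, 6, 0]:
    level = next_n_level(level, errors)
print(level)
```

At a constant 2-back level, `stimulus_time_seconds(2)` gives 990 s (16.5 min), which is in line with the reported session length of approximately 20 min.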

Verbal complex span

To train verbal working memory, we used the verbal complex span task developed by Chein and Morrison (2010). In this task, participants were asked to remember a series of letters presented one at a time for 1 s. However, between each letter, participants completed 4 s of a lexical decision task, therefore engaging in simultaneous storage of the previous letters and processing of unrelated material. At recall, participants were shown a 4 × 4 array of letters and reported the sequence by clicking on the letters in the same order they were presented. There was feedback on both recall accuracy as well as performance on the lexical decision task (% correct). Each training session began with a 4-item list. If a participant remembered all the letters in the correct sequence while maintaining a level of 75 % correct on the lexical decision task for two consecutive trials, then the number of items in the sequence was increased by one. If participants performed two incorrect trials in a row, the number was decreased by one. Similar to Chein and Morrison (2010), participants performed 30 trials of the task each training session which lasted between 30 and 40 min. Participants were scheduled for five sessions each week for 4 weeks for a total of 20 sessions.
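The list-length adjustment described above amounts to a two-up, two-down staircase, sketched below. The class name and fields are hypothetical. Several details are assumptions because the text does not specify them: a trial counts as incorrect whenever recall is imperfect or lexical-decision accuracy falls below 75 %, the streak counters reset after each change in list length, and the list length has a floor of one item.

```python
from dataclasses import dataclass


@dataclass
class SpanStaircase:
    """Two-up/two-down staircase for the complex span list length."""
    list_length: int = 4      # each session begins with a 4-item list
    min_length: int = 1       # assumed floor, not stated in the text
    _successes: int = 0       # consecutive successful trials
    _failures: int = 0        # consecutive unsuccessful trials

    def update(self, recall_correct: bool, lexical_accuracy: float) -> int:
        """Record one trial and return the list length for the next trial."""
        # Assumed definition of a successful trial: perfect serial recall
        # together with at least 75 % correct on the lexical decisions.
        success = recall_correct and lexical_accuracy >= 0.75
        if success:
            self._successes += 1
            self._failures = 0
        else:
            self._failures += 1
            self._successes = 0

        if self._successes == 2:
            self.list_length += 1
            self._successes = 0
        elif self._failures == 2:
            self.list_length = max(self.min_length, self.list_length - 1)
            self._failures = 0
        return self.list_length


# Example: two successes raise the length to 5, two failures lower it to 4.
staircase = SpanStaircase()
history = [
    staircase.update(True, 0.90),
    staircase.update(True, 0.80),
    staircase.update(False, 0.90),
    staircase.update(True, 0.50),
]
print(history)
```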

Real-time strategy game training

Participants in this group were trained on Blizzard's 1998 RTS game “Starcraft: Brood War”. We adopted a training protocol similar to those used by Boot et al. (2008) and Basak et al. (2008). Training occurred across 4 weeks and consisted of tutorial missions and matches against a computer opponent. The participants trained for 1.5 h/session, three times a week. Each session provided time for 3–6 games. During the first week participants played as the “Terran” race, as the “Protoss” in the second, “Zerg” in the third, with the final week being dedicated to randomized matches of all three. Aside from the tutorial missions, participants played a different map each week. During the fourth week, players were randomly assigned to a different map for each game.

Non-adaptive spatial n-back

This group was trained on the same n-back task as the adaptive group with all the same task parameters with the subtraction of the adaptive component that increased or decreased the n-back level based on participant performance. Instead this group alternated between seven blocks of 2-back training and seven blocks of 3-back training with a single block of 4-back training at the end of each session. These participants completed 12 approximately 20-min sessions of 15 blocks each over a 4-week period.

Transfer tasks

N-back

We used two variants of the n-back task to test for specific transfer in n-back performance.

- Verbal n-back: This task was a verbal analogue of the n-back training task. Instead of locations, participants were given a continuous stream of block letters presented in the center of the screen. Eight letters were used: C, G, Q, T, H, K, P, and W. Participants were instructed to press the “y” key if the letter was the same as the letter n-back, otherwise they were not to respond. There was an initial practice block of a 2-back task with 12 trials with three hits. This was followed by three blocks of 2-back judgments, three blocks of 3-back judgments, and three blocks of 4-back judgments. Each block consisted of 20 stimulus presentations in addition to the first 2, 3, or 4 stimuli needed to establish a pattern. In each block, there were six target trials (hits) and 14 non-target trials. The dependent measure was the total number of hits minus false alarms.

- Object n-back: This task was identical in construction to the verbal n-back except that instead of letters, the stimuli were eight random polygons.
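The dependent measure for both n-back transfer tasks, hits minus false alarms, can be illustrated with a minimal sketch. The function names and the example sequence are hypothetical; a response is represented as `True` when the participant pressed the key on that stimulus.

```python
def nback_targets(stimuli, n):
    """Mark each position whose stimulus matches the one n positions back."""
    return [i >= n and stimuli[i] == stimuli[i - n] for i in range(len(stimuli))]


def hits_minus_false_alarms(stimuli, responses, n):
    """Score one block: key presses on targets minus key presses on non-targets."""
    targets = nback_targets(stimuli, n)
    hits = sum(t and r for t, r in zip(targets, responses))
    false_alarms = sum((not t) and r for t, r in zip(targets, responses))
    return hits - false_alarms


# Hypothetical 2-back fragment: targets fall at positions 2, 3, and 6.
stimuli = list("CGCGQTQ")
responses = [False, False, True, False, True, False, True]
score = hits_minus_false_alarms(stimuli, responses, n=2)
print(score)  # 2 hits - 1 false alarm = 1
```

Because no response is required on non-target trials, misses and correct rejections do not enter this score directly; only key presses are counted.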

Speed of processing

We used simple choice reaction time tasks to measure speed of processing using verbal and visuo-spatial stimuli. In the verbal domain, the Animal and Word tasks were used. In the visuo-spatial, the Dot Judgment and Array Matching tasks were used (Chen et al. 2007). All consisted of 20 trials and used the “z” key for left responses and the “/” key for right side responses with an equal number of correct “left” and “right” responses. All tasks had a short practice block of five trials. Both accuracy and reaction time were measured for each of these tasks.

- Animal task: Participants were shown two words on the left and right of the screen and asked to decide as quickly and accurately as possible which of the words was an animal.

- Word task: Participants were shown two strings of letters and asked to decide as quickly and accurately as possible which letter string was an English word.

Dot judgment

Participants saw three dots on the screen, a central white dot and a blue and a red dot and were asked to decide as quickly and accurately as possible which of the colored dots was the closest to the white dot.

Array matching

Participants saw three arrays of shapes, one array at the top of the screen and two choice arrays on the bottom left and right. Participants were asked to decide which of the choice arrays would be the best match to the top array based on color, number, or shape. For example, if the sample array was a set of four blue circles and the choice arrays were a set of two red squares and a set of three blue triangles, the blue triangles would constitute the best match.
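One way to read the matching rule is that the correct choice is the array sharing more attributes (color, number, shape) with the sample. The sketch below encodes that reading as an assumption; the type and function names are hypothetical, and the left/right placement of the two choices in the example is arbitrary.

```python
from typing import NamedTuple


class ShapeArray(NamedTuple):
    color: str
    number: int
    shape: str


def shared_features(a: ShapeArray, b: ShapeArray) -> int:
    """Count how many of color, number, and shape two arrays have in common."""
    return sum(x == y for x, y in zip(a, b))


def best_match(sample: ShapeArray, left: ShapeArray, right: ShapeArray) -> str:
    """Return which choice array shares more attributes with the sample."""
    left_score = shared_features(sample, left)
    right_score = shared_features(sample, right)
    if left_score == right_score:
        raise ValueError("choice arrays match the sample equally well")
    return "left" if left_score > right_score else "right"


# The example from the text: four blue circles as the sample.
sample = ShapeArray("blue", 4, "circle")
left = ShapeArray("red", 2, "square")
right = ShapeArray("blue", 3, "triangle")
answer = best_match(sample, left, right)
print(answer)  # right: the blue triangles share the sample's color
```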

Short-term memory

To measure short-term storage, we used two tasks to measure verbal storage (letter span and digit span) and two tasks to measure visuo-spatial storage (arrow span and circle span). All four tasks consisted of 20 trials with lengths of three items in a series up to 12 with two trials at each length and a short block of four practice trials with 2-, 3-, and 4-item trials. The score for each task was the number of trials correctly answered.

- Digit span: Participants were shown a series of digits presented one at a time for 1,500 ms with an ISI of 500 ms and were asked to remember the sequence of digits in their presented order. At test, participants saw a number pad with the digits 1–9 as well as a series of boxes presented in a horizontal line at the bottom of the screen. The box that was colored green indicated the position of the digit in the number series that was to be recalled. Participants then used the mouse to click on the number that was presented in that position.

- Letter span: This task was identical to the Digit Span except that instead of digits, the letters B, F, H, J, L, N, P, R, and X were used.

- Arrow span: In this task, participants were asked to remember a series of locations pointed to by a sequence of arrows that appeared one at a time in a 5 × 4 grid for 1,500 ms followed by a 500-ms ISI. At test, participants saw a set of black checks in the grid marking all but one of the locations to which the blue arrows pointed and were asked to click on the missing location.

- Circle span: In this task, participants saw a set of 25 circles randomly distributed on the screen. During each trial, a series of circles turned red one at a time for 1,500 ms with a 500-ms ISI. At test, participants saw the same cloud with all the circles that turned red filled in with black except one and they were asked to click on the missing circle with the mouse.

Working memory

We used three measures of verbal working-memory span and three measures of visuo-spatial working-memory span.

-

Reading span: In this task participants were asked to remember a sequence of two to sevwn letters. Between each letter presentation they were asked to read a sentence and judge whether the sentence made sense. At test, participants were shown a grid of letters and had to click on the letters that were presented in the order that they were presented. Participants completed 15 trials. We used the absolute score calculated as the sum of all correctly recalled sets as described in Unsworth, Heitz, Schrock, and Engle (2005).

-

Operation span: In this task, participants were again asked to remember a series of two to seven letters. In between letter presentations, participants were asked to solve a simple mathematical problem and then asked to click on the letters seen in the order presented at the end of each trial. Participants completed 15 trials and the absolute score was used (Unsworth et al., 2005).

-

Letter-number span: In this task, participants were asked to remember a series of alternating letters and numbers with the instruction that they would then be asked to recall the sequence reorganized with the digits first in numerical order followed by the letters in alphabetical order. Participants’ memory for the sequence was tested by asking them to identify one member of the reordered sequence. Participants were cued to report the nth item in the correctly ordered sequence and then to click on the identity of that item using a visual keypad presented on the screen consisting of either the digits 1–9 or the letters B, F, H, J, L, N, P, R, or X. There were four practice trials consisting of sequence lengths of from two to four items. The experimental block consisted of 20 trials with two trials of each length between three and 12 items with the trial lengths presented in random order. The dependent measure was the total number of items correctly recalled.

-

Symmetry span: In this task, participants were asked to remember a sequence of two to five locations presented one at a time. Between each location presentation, participants were shown a shape and asked to decide whether it was symmetrical or not. At the end of each trial, participants were asked to indicate the locations seen in order using a 16-location array. Participants completed 12 trials. The score was thus the total number of correct items in the correct position (Unsworth et al., 2005).

-

Rotation span: Participants were asked to memorize a series of locations indicated by black Xs appearing one at a time for 1,500 ms on a 5 × 4 grid. Between each X, participants were shown two figures (letters chosen from the Thai alphabet), one upright and the other rotated by 60° or 120°. The participant had to judge whether the rotated figure was identical to or a mirror image of the upright figure while remembering the previously presented locations. At test, participants were asked to click on all the locations in which a black X had appeared in the order that they were presented. Participants completed three blocks of four trials consisting of 2, 3, 4, and 5 items for a total of 12 trials. The dependent measure was the sum of the number of locations correctly recalled in their proper sequence.

-

Alignment span: Participants were shown a series of red dots appearing on a 4 × 5 grid. Each red dot was presented with two white dots for 1,500 ms. Participants judged whether the accompanying white dots formed a straight line with the red dot while simultaneously remembering the locations of each of the previous red dots (Hale et al., 2011). At test, a grid containing all the red dots in the current sequence but one appeared and the participants clicked on the location of the missing dot. Participants completed a practice block of four trials and a block of 20 trials consisting of two trials of three to 12 items each. The dependent measure was the sum of trials correctly completed.

Executive function

We used two measures of executive processing.

-

Attention network task: In this task, developed by Fan et al. (2002), participants performed a standard flanker task in which they indicated whether a central arrow was pointing to the right or left. The three flanking arrows on each side were either compatible or incompatible with the central arrow. In addition, the arrows appeared at either the top or bottom of the screen. Participants either were uncued as to when a trial would start, received a centered cue that alerted them to the beginning of the next trial, or received an informative spatial cue, appearing at either the top or bottom of the screen, that allowed them to orient to the location of the next stimulus. This resulted in three measures of attention: Alerting Attention (AA), the difference between average response time (RT) on trials with no cue and trials with a center cue; Orienting Attention (OA), the difference between trials cued with a central cue and trials cued with a spatial cue; and Executive Attention (EA), the difference between RT on trials with incongruent flankers and trials with compatible flankers.
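The three difference scores described above can be illustrated with a minimal sketch. The condition labels, the function name, and the response times below are hypothetical and chosen only for illustration; the sketch also treats cue and flanker conditions as separate lists, whereas in the actual task they are crossed within trials.

```python
from statistics import mean

def ant_network_scores(rts):
    """Compute AA, OA, and EA from RTs (ms) grouped by condition.

    `rts` maps a condition label to a list of response times.
    The labels are illustrative, not taken from the original software.
    """
    no_cue = mean(rts["no_cue"])
    center = mean(rts["center_cue"])
    spatial = mean(rts["spatial_cue"])
    incongruent = mean(rts["incongruent"])
    congruent = mean(rts["congruent"])
    return {
        "AA": no_cue - center,          # alerting: no cue minus center cue
        "OA": center - spatial,         # orienting: center cue minus spatial cue
        "EA": incongruent - congruent,  # executive: incongruent minus compatible
    }

# Made-up response times for a single participant
example_rts = {
    "no_cue": [560, 580],
    "center_cue": [520, 540],
    "spatial_cue": [480, 500],
    "incongruent": [600, 640],
    "congruent": [500, 520],
}
scores = ant_network_scores(example_rts)
print(scores)
```

Larger values on each score indicate a larger RT cost (or benefit) associated with the corresponding attentional network.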

-

Simon task (Simon, Acosta, Mewaldt, & Speidel, 1976): In this task, participants saw an arrow pointing to the left or right side of the screen. Their job was to indicate which direction the arrow pointed by pressing the “l” key if the arrow pointed right and the “a” key if it pointed left. Participants first completed a block of 36 neutral trials where the arrow was presented in the center of the screen. The remaining two blocks consisted of 120 mixed spatially compatible and incompatible trials where the arrow appeared on the left or right side of the screen. For compatible trials, the arrow appeared on the same side of the screen that it was pointing to (e.g., a right-pointing arrow appeared on the right of the screen), while for incompatible trials, the arrow appeared on the side of the screen opposite to the direction it was pointing. One of the mixed blocks consisted of 25 % incompatible trials and the other of 75 % incompatible trials. The order of these two blocks was randomized across participants.

Reasoning

To measure possible transfer to reasoning abilities, we used two verbal reasoning tasks from the ETS Kit of Factor-Referenced Tests (Ekstrom, French, & Harman, 1976), the Nonsense Syllogisms and Inference Tests, as well as two reasoning measures in the visuo-spatial domain, the Raven’s Progressive Matrices (RPM; Raven, Raven, & Court, 1998) and the Cattell Culture Fair Tests (Cattell, 1961). For the ETS and Cattell measures, two existing forms were counterbalanced across pre- and post-test administrations. For the RPM, the items were split into odd and even sets, which were also counterbalanced across testing times.

-

Inference test: Participants were given one or two statements similar to those seen in newspaper articles and then a set of possible inferences based on those statements. Participants were instructed to choose the only inference that could be drawn from the given statements without assuming any additional information. There were ten items for which 6 min were given. The dependent measure was the number of correct items minus the number of incorrect items.

-

Nonsense syllogisms test: In this test, participants were given 15 sets of syllogisms and instructed to evaluate whether the conclusion followed logically from the premises. The dependent measure was the number of correct minus incorrect items completed within 4 min.

-

Raven’s: We used a computerized version of the Raven’s Standard Progressive Matrices test separated into two forms of 30 items. Participants saw a 3 × 3 matrix of shapes presented with the last shape missing and chose the item out of a set of six to eight choices that best completes the pattern both across the rows and columns. Participants were given 15 min to complete the task. The dependent measure was the total number of items answered correctly.

-

Cattell: This is a timed paper and pencil measure of non-verbal intelligence consisting of four tests, Series (13 items), Classifications (14 items), Matrices (13 items), and Conditions (ten items). There are parallel forms, A and B, which were counterbalanced at pre- and post-test across participants. The dependent measure was the total number of items correct on each test and then summed across tests.

Mathematical problem-solving measures

To test the possibility of far transfer to tasks measured in real-world settings, we measured pre- and post-test performance of basic mathematical and arithmetic aptitudes also drawn from the ETS kit.

-

Mathematical aptitude test: This test consisted of 15 basic algebraic word problems in which participants had to solve for a single variable and identify the correct answer out of five choices. Participants were given 10 min. The dependent measure was the correct number of responses minus the number of incorrect responses.

-

Arithmetic aptitude test: In this test, participants were asked to solve 15 simple arithmetic word problems by choosing the correct alternative out of five possible responses. Ten minutes were given and the dependent measure was the number of correct items minus the number of incorrect items.

Survey measures

In addition to surveying participants on their levels of motivation, strategies during training and beliefs about the efficacy of the training, we also collected a set of individual difference measures that we felt may help us distinguish between more and less motivated participants in our samples.

-

Need for Cognition Questionnaire (Cacioppo & Petty, 1982): This 18-item questionnaire measures how motivated individuals are to engage in challenging activities.

-

The Short Grit Scale (Duckworth & Quinn, 2009): This eight-item questionnaire measures trait-based perseverance and passion for long-term goals.

-

The Brief Self-Control Scale (Tangney, Baumeister & Boone, 2004): This is a 13-item measure of self-reported self-control with items such as: “I am self-indulgent at times,” and “I never allow myself to lose control.”

-

The Big Five Inventory (BFI) (John, Donahue, & Kentle, 1991): This is a short 44-item inventory that measures the personality traits of Extraversion, Agreeableness, Conscientiousness, Neuroticism, and Openness.

-

The Perceived Stress Scale (Cohen, Kamarck, & Mermelstein, 1983): This is a ten-item self-report questionnaire that measures a person’s evaluation of the stressfulness of the situations in the past month of his or her life.

-

The Life Orientations Test (Revised) (Scheier, Carver & Bridges, 1994): This ten-item survey measures trait-based optimism.

-

The Adult Hope Scale (Snyder et al., 1991): This consists of 12 items designed to measure how hopeful an individual typically is.

-

The Barratt Impulsiveness Scale, Version 11 (Patton et al., 1995): The BIS-11 is one of the most frequently used self-report instruments for impulsivity and consists of 30 questions. It is designed to give an overall measure of general impulsiveness as well as three subscales of attentional, motor, and non-planning impulsiveness.

-

Participant motivation and strategies: After post-testing, participants completed a laboratory-based measure asking them a series of open-ended questions on the nature of their training experience, focusing on: (1) what strategies they used to improve their performance on the training task, (2) how motivated they were during training and how challenging they found the tasks, and (3) whether they felt that the training had improved their cognition and whether they noticed any specific improvements on any of the post-test tasks. These questions are reproduced in the Appendix.

Procedure

All participants completed a week of pre-testing with approximately 60–90 min of testing each day. Pre-testing included an initial measurement of all the transfer tasks as well as the personality measures. The following week participants began their training regimens. The week after training, participants had a week of post-testing in which we took a second measure of all the transfer tasks. Participants also completed our motivation and strategy questionnaire on the last day of post-testing.

Results

Training results

All four groups showed improved performance with practice. The dependent measure for the spatial n-back training was the highest n-back level achieved in each session. Figure 1a shows the average amount of improvement across the 20 sessions where the mean n-back level increased from 3.6 to 4.5, F (19, 570) = 2.8, p < .001, ηp² = .09. This is a smaller training improvement than reported by Jaeggi et al. (2010) where participants were achieving an average n-back level of close to 7-back by the end of a similar training regime. A closer examination of our data revealed considerable variability in the maximum n-back level achieved during training and how well that level was maintained across the 20 sessions. Of our 31 participants, three peaked at a maximum level of 4-back, six at 5-back, 12 at 6-back, seven at 7-back, one at 8-back, and two at 9-back. The average maximum level reached during training at any point was 6.1 with an average gain of 2.5 levels (from lowest n-back level to highest). However, we did see a great deal of fluctuation for most participants in n-back level achieved from session to session.
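As a quick consistency check, the distribution of peak levels reported above can be summed to recover the sample size and the average maximum level. The sketch below uses only the counts stated in the text; the variable names are illustrative, and the degrees-of-freedom lines assume a one-way repeated-measures ANOVA over the 20 sessions, which the text does not state explicitly.

```python
# Peak n-back level -> number of participants, as reported in the text
peak_counts = {4: 3, 5: 6, 6: 12, 7: 7, 8: 1, 9: 2}

n_participants = sum(peak_counts.values())
mean_peak = sum(level * n for level, n in peak_counts.items()) / n_participants

# Assumed design: one-way repeated-measures ANOVA with session as the factor
n_sessions = 20
df_effect = n_sessions - 1
df_error = (n_participants - 1) * (n_sessions - 1)

print(n_participants, round(mean_peak, 1), df_effect, df_error)
```

Under these assumptions the counts agree with the reported sample of 31, the average maximum of 6.1, and the degrees of freedom in F (19, 570).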

We performed a similar analysis for the verbal span group using the highest level (i.e., the longest string of letters correctly reported) achieved in a session as the dependent variable. We found the average number of letters retrieved went from 6.6 to 9.9 across 20 sessions of practice, F (19, 589) = 13.8, p < .001, ηp² = .31. These training data are shown in Fig. 1b.

The dependent measure for the video game group was developed using the participants’ game scores. To keep track of each participant’s progress, we recorded the scores following each game with the total score consisting of the player’s performance with their units, structures, and resources. Performance within the units’ sub-score was determined by how many units were created, how many were lost in battle, and how many enemy units the player’s army killed. Performance within the structures’ sub-score was calculated similarly to the units category (built, razed, lost), and the resources’ sub-score was determined by totaling the amount of resources collected (the amount of resources a player spends is also tracked). This score ranged from 700 to 123,580 points at Session 1 and from 34,896 to 305,840 points by Session 12. These data are shown in Fig. 1c. Again we saw significant improvements in game performance with training as well as considerable variability, F (1, 25) = 40.4, p < .001, ηp² = .62. Only one individual showed no or negative improvement, with a high score of 123,580 on the first day and notably worse performance, an average score of 46,000, across the remaining days of training. Although we initially screened based on self-reported RTS gaming experience, it is possible this individual misrepresented her previous experience or just scored exceptionally well the first day and then showed no improvement across the remaining sessions. Therefore, we dropped this participant from further analysis. All other participants demonstrated positive improvements in game score across training sessions.

Finally, we also compared the accuracy data for the first versus the last block of the non-adaptive n-back control training to ensure that participants were performing well on the task throughout training. We found a significant improvement in accuracy from the first session (M = .85) to the last (M = .92), F (1, 25) = 12.8, p < .001, ηp² = .33.

Personality measures and training improvements

First, we tested whether there were any significant differences between our four groups on any of the personality measures. The means and standard deviations for each group are shown in Table 2. The only difference between any of the groups that approached significance was on the Adult Hope Scale, F (3, 116) = 2.59, p = .056, ηp² = .07.

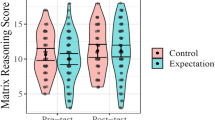

To test whether any of the personality measures would help predict working-memory training improvements, we took the difference in performance between the first and last sessions of training and converted this into a z-score so that spatial n-back and verbal complex span improvements were on the same scale. We then tested for any correlations between personality and improvement and found that only self-reported stress, r (63) = −.26, p = .04, and neuroticism, r (63) = −.25, p = .05, showed any relationship with training improvements. The scatterplots of these relationships are shown in Fig. 2.
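The rescaling step can be sketched as follows. This is a minimal illustration that assumes the z-scores were computed separately within each training group before pooling (the text does not say so explicitly); the function names and all data values are made up.

```python
from statistics import mean, stdev

def zscores(values):
    """Standardize values to mean 0 and (sample) SD 1."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]

def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5

# Made-up first-to-last-session improvements on each task's own scale
nback_gain = [0.5, 1.0, 1.5, 2.0]   # n-back levels
span_gain = [2.0, 3.0, 4.0, 5.0]    # letters recalled

# Assumption: standardize within group, then pool the two groups
z_gain = zscores(nback_gain) + zscores(span_gain)

# Made-up perceived-stress scores in the same participant order
stress = [20, 18, 15, 12, 22, 17, 16, 11]
r = pearson_r(z_gain, stress)
print(round(r, 2))
```

Standardizing first means that a gain of one n-back level and a gain of one letter are not treated as equivalent; each is expressed relative to the spread of gains in its own group.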

Transfer data

The descriptive statistics for all tasks broken down by group and testing time are presented in Table 3. To examine possible transfer, we were interested in creating composite gain scores similar to those used in previous studies (Redick et al., 2013) based on: (1) General N-back performance, (2) Speed of Processing, (3) Verbal Short-term Memory, (4) Visuo-spatial Short-term Memory, (5) Verbal Working Memory, (6) Visuo-spatial Working Memory, (7) Executive Function, (8) Verbal Reasoning, (9) Visuo-spatial Reasoning, and (10) Math Performance as described above in the Methods section. This entailed first computing a standardized gain score for each measure by dividing the pre- to post-test gain for each participant by the standard deviation of the pre-test score for all participants, then averaging the gain scores for each task entered into the composite.
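The composite computation described above can be sketched in a few lines. This is an illustration only: the function names and scores are hypothetical, and the use of the sample standard deviation (n − 1 denominator) is an assumption, since the text does not specify which form was used.

```python
from statistics import mean, stdev

def standardized_gains(pre, post):
    """Per-participant gain divided by the pre-test SD across all participants."""
    sd_pre = stdev(pre)  # assumption: sample SD
    return [(b - a) / sd_pre for a, b in zip(pre, post)]

def composite_gain(tasks):
    """Average the standardized gains across the tasks in one composite.

    `tasks` is a list of (pre_scores, post_scores) pairs, with participants
    in the same order in every list.
    """
    per_task = [standardized_gains(pre, post) for pre, post in tasks]
    return [mean(gains) for gains in zip(*per_task)]

# Made-up scores for three participants on two tasks of one composite
task_a = ([10, 12, 14], [12, 12, 18])   # pre-test SD = 2
task_b = ([20, 24, 28], [24, 28, 28])   # pre-test SD = 4
composite = composite_gain([task_a, task_b])
print(composite)
```

Dividing by the pre-test standard deviation puts tasks with different raw scales on a common metric before they are averaged.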

To ensure that the measures chosen for each construct were in fact related in our dataset, we computed the correlations between tasks at pre-test across all four groups. This correlation matrix is shown in Table 4. All tasks were significantly correlated with at least one other measure in their proposed construct with two exceptions. The first was for the Visuo-spatial Short-Term Memory construct in which the Arrow Span and Circle Span scores were not significantly correlated at pre-test. This was likely due to the poor test-retest reliability shown by the Arrow Span task (r = −.12, p = .26 across groups) as compared to the Circle Span (r = .45, p <.001). One possibility is that some participants initially misunderstood that they were to indicate the squares the arrows were pointing to as opposed to the locations where the arrows appeared. However, as the program only recorded the accuracy of the response and not the actual location, we could not test this possibility directly. We can only infer this or some other initial difficulty with the task from the greater variability and larger range of the Arrow Span task at pre-test. Therefore, although Arrow and Circle Span were correlated at post-test, r = .59, p < .001, we decided against combining the two measures into a composite and report the results for each task separately. The second was the lack of any correlation between the AA, OA, or EA sub-scores on the ANT and the Simon task at either pre- or post-test. Therefore, we did not combine these measures and examined the effect of group separately for each score.

The remaining final composites were then analyzed using ANOVA with group as a between-subjects factor. We also computed the Bayes factor (BF01) comparing the null model to the alternative model using the Bayesian ANOVA provided in the JASP statistical package (JASP Team, 2016). BF01 quantifies the strength of evidence favoring one model over the other as represented by the relative probability of the observed data under the two models with a BF01 >1 as evidence favoring the null, a BF01<1 favoring the alternative, and a BF01=1 as equivocal evidence with neither model more probable than the other. As noted by Sprenger et al. (2013), a BF01 < .05 (odds of 1/20) indicates positive support for the alternative hypothesis while a BF01 > 3 is taken as positive support for the null with values >10 indicating strong support for the null (Kass & Raftery, 1995). The results of the analyses on the composite variables are presented in Table 5 while the task level results for which we also included the Bayes factor comparing the null to the interaction model (Group × Session) are given in Table 6. Only two composites showed a significant effect of group. For the remaining composites, the Bayes factors were all >3 indicating support for the null. The composites showing strong support for the alternative were Verbal short-term memory (VSTM) and the General N-back. We then examined each of the component tasks within each composite (Letter and Digit Span for the VSTM) (Verbal and Object n-back for the General N-back) using mixed factorial ANOVAs with group as the between-subjects factor and testing time (pre- vs. post-test) as a within-subjects factor. 
We also graphically examined the pattern of the group pre- to post-test changes in performance to determine whether any significant interaction provided more or less ideal evidence for positive transfer as described by Redick (2015) in which pre-test performance would be similar across groups and all groups would show improvement at post-test, but the critical training group(s) would show a significantly larger post-test improvement across related tasks. Only one of our composites met these criteria. For the Verbal Short-term Memory tasks, only the Letter span showed a significant group × testing time interaction, F (3, 111) = 2.8, p = .05, ηp² = .07. However, a visual inspection of the Letter span data suggested this interaction was driven by a decrease from pre- to post-test for the Non-adaptive n-back group. While the verbal complex span group did show an increase in performance from pre- to post-test, simple comparisons of the pre- to post-test improvement of the verbal training group with the gains of the RTS group (t = .68, p = .5) and the Spatial n-back group (t = −.88, p = .39) were not significant. The only significant difference was between the verbal training group and the Non-adaptive n-back group (t = 2.7, p = .01). However, given the large difference in pre-test performance between the two groups and the subsequent decline in performance for the Non-adaptive group, regression towards the mean cannot be ruled out as an explanation of the group differences seen. These data are shown in Fig. 3. For the n-back transfer tasks, we found a significant interaction for the Verbal n-back task, F (3, 112) = 5.6, p < .001, ηp² = .13, but not for the Object n-back task, F (3, 112) = 2.2, p = .10, ηp² = .05.
A graphical examination revealed the same pattern for both tasks where overall all groups improved their performance from pre- to post-test (with the exception of the RTS group on the verbal n-back) with the spatial n-back adaptive and non-adaptive training groups showing even larger increases than the RTS and verbal span training groups. These data are shown in Fig. 4.
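The Bayes factor thresholds described earlier in this section can be summarized as a small lookup. The function name is hypothetical, and the "weak" labels for values between the stated cut-offs are our own shorthand rather than terms used in the sources cited; the cut-offs themselves (1, 3, 10, and .05) are those given in the text.

```python
def interpret_bf01(bf01):
    """Map a BF01 value onto the evidence categories described in the text."""
    if bf01 > 10:
        return "strong support for the null"
    if bf01 > 3:
        return "positive support for the null"
    if bf01 > 1:
        return "weakly favors the null"         # label is an assumption
    if bf01 == 1:
        return "equivocal"
    if bf01 < 0.05:
        return "positive support for the alternative"
    return "weakly favors the alternative"      # label is an assumption

# Made-up BF01 values for illustration
for value in (12.0, 4.2, 1.0, 0.3, 0.01):
    print(value, "->", interpret_bf01(value))
```

Note that the categories are asymmetric as described: support for the null is graded at 3 and 10, whereas positive support for the alternative is only declared below .05.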

Similar to Colom et al. (2013) and Redick et al. (2013), we conducted a post hoc power analysis (G*Power; Faul, Lang, & Buchner, 2007) to ensure that our sample size, which we had based on previous reports of working-memory transfer (e.g., Jaeggi et al., 2008), was large enough to detect significant Group × Testing time interactions in our transfer measures. Using the average correlation between our pre- and post-test measurements (average r = .55), our power to detect a medium (Cohen’s d = .5) or a large effect (Cohen’s d = .8) was over .99, and using our lowest correlation, r = .14 (ANT), power was .94.

Survey data

Personality measures and transfer

We tested for any relationships between the personality measures gathered and the amount of transfer seen for the n-back training group on the verbal and visual n-back tasks as well as the improvements seen for the complex span group on the letter span task. However, unlike the training data, we found no personality measures that were related to transfer in our samples.

Strategies reported for working-memory training tasks

For verbal complex span, all of our participants reported some sort of chunking strategy for remembering the letters with the most popular being the assignment of a word to each letter and creating sentences with those words. Twenty-four of our participants explicitly reported this strategy while the rest of our participants reported either chunking the letters into people’s initials or acronyms. Only one participant reported an explicitly visual strategy in which words were assigned to the letters and then combined into a visual image.

For the n-back training group, there was a greater diversity of strategies reported. The most common strategy was to use the locations where the stimuli appeared to draw shapes or patterns in the mind’s eye. Twelve of our participants reported using this method either alone or in combination with counting the number of squares that had appeared as well as tapping or tracing out a corresponding grid on their leg or on the table. Eleven participants reported counting either as the sole strategy or in combination with tapping or drawing shapes. Three participants reported assigning a noise or musical note to each location and trying to keep track of the sequence that way. Finally, seven participants did not report a clear strategy or simply wrote they had no strategy. Amongst our best performers there was no one strategy that predominated.

Motivation and beliefs about training

We also collected open-ended responses to the following questions: (1) how motivated the participant was, (2) how challenging they found the training intervention, and (3) the extent to which they felt it had improved their cognitive function. Participant responses were coded onto a 5-point scale with 1 indicating lack of motivation, challenge, or improvement and 5 indicating a great deal of motivation, challenge, or improvement. Two individuals independently rated participants’ answers blind to group membership with an inter-rater reliability of .90. On items where there was disagreement, the average of the two ratings was used as the final value. Overall participants reported being fairly motivated with an average score of 3.8 out of 5. We found group differences both in reported motivation, F (3, 114) = 2.96, p = .04, ηp² = .08, and in how challenging the training was perceived to be, F (3, 114) = 16.9, p < .001, ηp² = .32, with the non-adaptive n-back group reporting being less motivated and challenged. However, there was no significant difference in the degree of improvement participants reported in their cognitive functioning, F (3, 114) = 1.74, p = .16, ηp² = .05, and a majority of participants in each group endorsed recommending the training they participated in to others. These data are reported in Table 7.

Discussion

In this experiment we attempted to compare the results of two forms of working-memory training on the same set of transfer measures and using the same two active control groups. By doing so, we had hoped to elucidate the possible mechanisms by which training on two different working-memory tasks can show transfer to other working-memory measures as well as possibly improve performance on higher-level cognitive tasks such as fluid intelligence and reasoning. However, our data yielded strong evidence only for transfer of training in the case of the spatial n-back to other variants of the n-back task while at the composite level, we found positive support for no transfer of training to measures of speed of processing, working memory, reasoning, and mathematical performance using the Bayes factor. We will discuss our results for each type of working-memory training and how these results relate to previous work before moving to the larger picture of working-memory training and transfer.

We begin with the verbal complex span training as we were surprised to see no evidence for transfer to either verbal short-term memory or span tasks in spite of the robust training gains in which our participants showed improvements equivalent to or greater than previous studies (36 % increase compared to the 25 % increase reported in Chein & Morrison (2010)). A number of laboratories have demonstrated transfer from complex span training to other span-based measures (Chein & Morrison, 2010; Harrison et al., 2013; Richmond, Wolk, Chein, & Olson, 2014; von Bastian & Oberauer, 2013). However, it may be that such transfer requires variability in training (Barnett & Ceci, 2002; Schmidt & Bjork, 1992) as most other studies using this form of working-memory training have used at least two variants of the tasks. This appears to be unlike n-back training in which equivalent effects have been reported for using a single or dual n-back paradigm (Jaeggi et al., 2010). Another possibility is that this form of working-memory training is more effective in fostering transfer when using spatial materials, although Gibson et al. (2011) reported equal transfer for groups of ADHD participants training on verbal or spatial versions of CogMed with each training program consisting of multiple training tasks. Gibson and colleagues (2013) also have reported that lowering the accuracy threshold for the items to be recalled from 100 % (as in our study) to 80 % can increase the amount of transfer seen. Therefore, it appears that unlike the n-back task, training using a lone complex span task is not effective in producing transfer.

Within our verbal span group, there was clear evidence of an almost universal strategy during training. All but one of our participants described using some form of chunking to help them remember the series of letters. However, while our verbal span training group did show a pre- to post-test increase on the Letter span task, the only group comparison that was significant was to a group showing a pre- to post-test decrease in performance, and there was no evidence of transfer to any other transfer task using letters such as the letter-number span or the letter n-back. It may be that the gains seen during the verbal complex span training were due at least in part to participants becoming more efficient at the lexical decision task which allowed them to better attend to the letters and apply chunking strategies in that specific context which then did not generalize to other span tasks even those using letters as stimuli. Therefore, it appears that single domain training using a span task is unlikely to yield any transfer, and the broader transfer seen in other studies may rely on training with multiple complex span tasks which allows participants to attend to the shared task structure and learn to shift between task demands more efficiently.

In contrast to the complex span training, our spatial n-back group did show clear evidence for transfer of training to letter and object variants of the n-back task compared to our complex span and video game control group. These results are similar to Sprenger et al. (2013), who reported only finding evidence for transfer to tasks similar in task structure or stimuli to the training tasks. Their participants who had the n-back task as part of their training showed improvement on the n-back, but not on other working-memory measures. Although a number of our participants reported using strategies such as mentally tracing shapes to help keep track of the sequence of locations, we observed no measurable transfer to either of the visuo-spatial short-term memory tasks which involved keeping track of locations or to the three visuo-spatial working-memory tasks (Symmetry, Rotation, or Alignment spans). Other research has also reported no evidence of transfer of training to short-term memory or working-memory measures (Lilienthal, Tamez, Shelton, Myerson, & Hale, 2013; Schwarb et al., 2015; Sprenger et al., 2013). Therefore, training on the n-back would not seem to increase the capacity of working memory and the transfer we observed did not appear to be related to the self-reported strategies used during training. We also saw a similar improvement on the letter and object n-back transfer tasks for the non-adaptive n-back group, which raises two possibilities: (1) participants in both n-back groups improved on a cognitive process common to n-back tasks, or (2) the similarity in task structures led to an increased confidence or expectation that they could perform well on the task. In terms of cognitive processing, participants may have improved at familiarity-based responding, which has been shown to be a key component of n-back performance (Kane et al., 2007).
This was supported by our data, indicating that the primary locus of improvement was in the reduction of the number of false alarms as opposed to an increase in the number of hits. Unfortunately, we did not systematically manipulate the type of lures in our n-back paradigms to test whether our n-back groups would be less susceptible to near lures. Other research groups have also suggested that the ability to inhibit irrelevant information in working memory may be the locus of n-back training (Jaeggi et al., 2014; Schwarb et al., 2015). In terms of our study, improvements in inhibitory processing could fit our n-back data though we did not find any evidence for transferable improvement to the Simon task or the ANT. Sprenger et al. (2013) also did not find transfer to Stroop or antisaccade and only reported evidence for transfer to a flanker task when the training regimen included a flanker-like task. Therefore, while transfer of training was observed, we cannot rule out task-related expectancies, i.e., participants expect to improve on tasks similar to those used at training as the source.

While we were able to observe transfer to other n-back tasks, we were unable to replicate the transfer of training reported by some to other measures of fluid intelligence and reasoning (Jaeggi et al., 2014; Jaušovec & Jaušovec, 2012; Rudebeck et al., 2012; Stephenson & Halpern, 2013). In this, our results mirror those of other researchers who have also been unable to demonstrate transfer to reasoning and fluid ability tasks from working-memory training (Chooi & Thompson, 2012; Colom et al., 2013; Harrison et al., 2013; Heinzel et al., 2014; Redick et al., 2013; Schwarb, Nail, & Schumacher, 2015; Sprenger et al., 2013; Thompson et al., 2013; von Bastian & Eschen, 2015). One possible reason for the lack of far transfer to the reasoning measures in our study is that the training improvements seen in our spatial n-back group were smaller than earlier reports (e.g., Jaeggi et al., 2010). Correlations between the amount of training improvement and the amount of transfer observed have been viewed by some researchers as evidence that transfer is moderated by training gains (Jaeggi et al., 2011). However, such responder analyses (i.e., individuals showing the most gains during training have larger transfer) have recently been criticized as logically insufficient and unusable as either support for or against the effectiveness of any particular training program (Tidwell et al., 2014). In addition, two recent meta-analyses of working-memory training have not found the rate of improvement over training to be a significant predictor of transfer (Au et al., 2015; Karbach & Verhaeghen, 2014).

A second possibility is participants’ motivation for participating. Jaeggi et al. (2014) proposed that whether a study finds transfer to fluid intelligence may depend on how intrinsically motivated the participants are and that paying participants may undermine intrinsic motivation. We did not rely upon unpaid volunteers, but we did measure our participants’ reported levels of motivation and whether they believed the training had improved their cognitive performance. Overall, they reported feeling motivated and believed that they had benefited cognitively from the training. However, we did not measure how motivated our participants were at the beginning of the study, their reasons for participating in the study, or whether the degree of challenge or interest they had in their training task changed across the training.

Personality differences have also been raised as a factor that affects transfer of training (Jaeggi et al., 2014). Individuals who volunteer and persist in a multi-week training intervention with little to no financial incentive may be systematically different in either personality or cognitive abilities from those who participate for compensation. Jaeggi et al. (2014) found that volunteers self-reported more cognitive failures and described these participants as having “some self-perceived deficit that may have influenced their interest in improving their memory and cognitive performance in the first place” (Jaeggi et al., 2014). While we did measure a number of personality and individual differences that we thought might contribute to training improvements and possible transfer, similar to previous studies, the few correlations seen between training and personality variables were small and underpowered. Studer-Luethi et al. (2012) reported weak correlations between neuroticism and training performance (r = −.24) and between conscientiousness and training performance (r = .28). We did see a similar relationship between neuroticism and training (r = −.25) as well as between self-reported stress and training (r = −.26). However, we did not see any relationships between training and conscientiousness or related variables such as need for cognition, grit, or self-control, nor did we see evidence of a relationship between personality and the near transfer observed.
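To illustrate why correlations of this size are hard to detect, the following Python sketch estimates the sample size needed to detect a given correlation using the Fisher z approximation. The two-sided α of .05 and the target power of .80 are assumptions chosen for illustration, not values taken from our analyses, and the function name is hypothetical.

```python
import math
from statistics import NormalDist


def n_for_correlation(r, alpha=0.05, power=0.80):
    """Approximate n needed to detect a correlation r (two-sided, Fisher z)."""
    z_alpha = NormalDist().inv_cdf(1.0 - alpha / 2.0)  # critical z for alpha
    z_beta = NormalDist().inv_cdf(power)               # z for the target power
    # Fisher z: atanh(r) has standard error 1 / sqrt(n - 3)
    return math.ceil(((z_alpha + z_beta) / math.atanh(abs(r))) ** 2 + 3)


# Correlations discussed in the text (assumed alpha and power as above)
for r in (-0.24, 0.28, -0.25, -0.26):
    print(f"r = {r:+.2f}: n of about {n_for_correlation(r)}")
```

Under these assumptions, each of the correlations above would require roughly a hundred or more participants within a single training group to be detected reliably.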

We will conclude by discussing two challenges for our study and future studies of working-memory training: what constitutes an appropriate control group and the problem of small effect sizes. We embarked on a fairly large training study confident, based on initial reports, that we would observe substantial transfer of training. We used an active control group similar to those used in previous studies, intended to control for the basic task structure and materials of one of our working-memory training groups while omitting the adaptive component of training that was believed to be important for transfer (Klingberg et al., 2005). Instead, our non-adaptive n-back group showed the same evidence of improvement on the transfer n-back tasks as our n-back training group. This is consistent with very recent research suggesting that neither the length nor number of training sessions (Au et al., 2015) nor an adaptive element to training is necessary for transfer (Karbach & Verhaeghen, 2014; Vartanian et al., 2013; von Bastian & Eschen, 2015). However, this does leave open the question of what is being trained and how best to control for placebo effects if factors such as training duration do not affect transfer. We also used an RTS video game group as a second active control group to control for possible differences in engagement and expectancies for improvement between our first control and our training groups. Our first control group can be criticized as being not a “control” group but rather a training group, given the similar transfer seen. Our second control group could also be criticized as possibly being more of a training group, even though prior studies do not support that interpretation: Boot et al. found no transferable improvement after 21 h of training (although see Basak et al., 2008 for evidence of transfer in older adults). Glass, Maddox, and Love (2013) reported improvements in tasks measuring cognitive flexibility in a group trained on Starcraft, but only after 40 h of carefully managed play emphasizing maintained awareness of resources and rapid switching. A second group played Starcraft for the same 40 h, but without these specific demands, and showed no improvement. Additionally, video game training would not control for task-specific expectancies for improvement such as those possibly seen in our non-adaptive n-back control group. Given that the choice of control group has been shown to affect the observation of transfer across studies (Au et al., 2015; Melby-Lervåg & Hulme, 2013), the question of what constitutes an appropriate active control merits greater attention in the field.

A second major concern is that recent estimates of effect sizes, especially for far transfer to reasoning and fluid intelligence, appear to be much smaller than those reported in the earliest working-memory studies. Therefore, perhaps our study did not have sufficient power to detect a training-related change in fluid intelligence. This seems possible given the results of recent meta-analyses (Au et al., 2015; Karbach & Verhaeghen, 2014; Melby-Lervåg & Hulme, 2013) reporting small effect sizes for far transfer. Based on these estimates, our current study would only have had a power of .81 to detect a group difference in Ravens (r = .8) using a small effect size (f = .10), and we would have needed a sample size of 176 participants to achieve power of .95. Karbach and Verhaeghen (2014) have proposed that sample sizes of over 300 participants are necessary to achieve 80% power and concluded that most studies to date have been underpowered. If this is correct, then power is a problem not only for the current study, but for most research conducted on working-memory training and transfer and the conclusions drawn from it.
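As a rough check on the sample-size figure above, the following Python sketch computes power for a group by time interaction under the noncentrality convention that G*Power (Faul et al., 2007) uses for repeated-measures, within-between designs. The design values are assumptions rather than details stated in this section: four groups, two measurement occasions (pre and post), α = .05, and no sphericity correction. All function and parameter names are hypothetical.

```python
import math


def _beta_cf(a, b, x, max_iter=500, eps=1e-13):
    """Continued fraction for the incomplete beta function (modified Lentz)."""
    tiny = 1e-300
    c = 1.0
    d = 1.0 - (a + b) * x / (a + 1.0)
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        even = m * (b - m) * x / ((a + 2 * m - 1) * (a + 2 * m))
        odd = -(a + m) * (a + b + m) * x / ((a + 2 * m) * (a + 2 * m + 1))
        for term in (even, odd):
            d = 1.0 + term * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + term / c
            c = c if abs(c) > tiny else tiny
            h *= d * c
        if abs(d * c - 1.0) < eps:  # last (odd) step changed h negligibly
            break
    return h


def reg_inc_beta(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_front = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                 + a * math.log(x) + b * math.log1p(-x))
    front = math.exp(log_front)
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _beta_cf(a, b, x) / a
    return 1.0 - front * _beta_cf(b, a, 1.0 - x) / b


def f_cdf(x, df1, df2, lam=0.0):
    """CDF of the F distribution; lam > 0 gives the noncentral F
    as a Poisson(lam / 2) mixture (adequate for modest lam)."""
    if x <= 0.0:
        return 0.0
    y = df1 * x / (df1 * x + df2)
    weight = math.exp(-lam / 2.0)
    total = 0.0
    for j in range(300):
        total += weight * reg_inc_beta(df1 / 2.0 + j, df2 / 2.0, y)
        weight *= (lam / 2.0) / (j + 1)
        if weight == 0.0:
            break
    return total


def f_critical(alpha, df1, df2):
    """Critical F value found by bisection on the central F CDF."""
    lo, hi = 0.0, 1000.0
    for _ in range(200):
        mid = (lo + hi) / 2.0
        if f_cdf(mid, df1, df2) < 1.0 - alpha:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


def rm_interaction_power(n_total, f, groups, measurements, rho, alpha=0.05):
    """Power for the group x time interaction (assumed G*Power convention)."""
    lam = f ** 2 * n_total * measurements / (1.0 - rho)  # noncentrality
    df1 = (groups - 1) * (measurements - 1)
    df2 = (n_total - groups) * (measurements - 1)
    return 1.0 - f_cdf(f_critical(alpha, df1, df2), df1, df2, lam)


# Assumed design: 4 groups, pre/post, f = .10, r = .8, N = 176
print(round(rm_interaction_power(176, 0.10, 4, 2, 0.8), 3))
```

The printed value can be compared with the power of .95 cited for 176 participants. Because the noncentrality parameter scales with 1 / (1 − r), the result is highly sensitive to the assumed pre-post correlation.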

Working-memory training and transfer remains a contentious area of research, with some arguing that the evidence for far transfer is clear (Au et al., 2015; Jaeggi et al., 2014), while others remain skeptical (Haier, 2014; Redick, Melby-Lervåg, Hulme, & Grovak, 2014; Shipstead, Redick, & Engle, 2012). Certainly the results from our study and many others would suggest that the mechanisms underlying transfer are still not well understood. In our study, it does not appear that working-memory capacity was increased directly or that the efficiency of working memory was improved through the development and practice of different strategies. Instead, our clearest evidence of transfer was from training on a spatial n-back, both adaptive and non-adaptive, to non-spatial n-back tasks. The use of Bayesian analyses was helpful in evaluating the extent to which our dataset favored the null hypothesis. In the cases of speed of processing and verbal working memory, the odds were greater than 10:1 in favor of the null model, while more modest odds of approximately 3:1 in favor of the null were seen for visuo-spatial reasoning and working memory.

Conclusion

Our understanding of working-memory training continues to evolve at a rapid pace, although key questions about the origins and breadth of transfer remain. Given the large effect sizes reported in the earlier literature, we expected to observe far transfer in our study. However, it may be that such transfer is most easily found in certain subsets of the population and that the effect size is much smaller than originally reported. Both possibilities merit serious consideration in future working-memory research and have important implications for the utility of working-memory training in institutional settings such as schools (e.g., Rode, Robson, Purviance, Geary, & Mayr, 2014).

References

Au, J., Sheehan, E., Tsai, N., Duncan, G. J., Buschkuehl, M., & Jaeggi, S. M. (2015). Improving fluid intelligence with training on working memory: a meta-analysis. Psychonomic Bulletin & Review, 22, 366–377.

Baddeley, A. (1992). Working memory. Science, 255, 556–559.

Barkley, R. A. (1997). Behavioral inhibition, sustained attention, and executive functions: Constructing a unifying theory of ADHD. Psychological Bulletin, 121, 65–94. doi:10.1037/0033-2909.121.1.65

Barnett, S. M., & Ceci, S. J. (2002). When and where do we apply what we learn? A taxonomy for far transfer. Psychological Bulletin, 128, 612–637.

Basak, C., Boot, W. R., Voss, M. W., & Kramer, A. F. (2008). Can training in a real-time strategy video game attenuate cognitive decline in older adults? Psychology and Aging, 23, 765–777. doi:10.1037/a0013494

Boot, W. R., Kramer, A. F., Simons, D. J., Fabiani, M., & Gratton, G. (2008). The effects of video game playing on attention, memory, and executive control. Acta Psychologica, 129, 387–398.

Boot, W. R., Blakely, D. P., & Simons, D. J. (2011). Do action video games improve perception and cognition? Frontiers in Psychology, 2, 226. doi:10.3389/fpsyg.2011.00226

Boot, W. R., Simons, D. J., Stothart, C., & Stutts, C. (2013). The pervasive problem with placebos in psychology: Why active control groups are not sufficient to rule out placebo effects. Perspectives on Psychological Science, 8, 445–454. doi:10.1177/1745691613491271

Cacioppo, J. T., & Petty, R. E. (1982). The need for cognition. Journal of Personality and Social Psychology, 42, 116–131.

Cattell, R. B. (1961). The Culture Free Intelligence Test, Scale 3. Champaign, IL: Institute for Personality and Ability Testing.

Chen, J., Hale, S., & Myerson, J. (2007). Predicting the size of individual and group differences on speeded cognitive tasks. Psychonomic Bulletin & Review, 14, 534–541. doi:10.3758/bf03194103

Chein, J. M., & Morrison, A. B. (2010). Expanding the mind’s workspace: Training and transfer effects with a complex working memory span task. Psychonomic Bulletin & Review, 17, 193–199.

Chooi, W.-T., & Thompson, L. A. (2012). Working memory training does not improve intelligence in healthy young adults. Intelligence, 40, 531–542.

Colom, R., Román, F. J., Abad, F. J., Chun Shi, P., Privado, J., Froufe, M., Escorial, S., Martínez, K., Burgaleta, M., Quiroga, M. A., Karama, S., Haier, R. J., Thompson, P. M., & Jaeggi, S. M. (2013). Adaptive n-back training does not improve fluid intelligence at the construct level: Gains on individual tests suggest that training may enhance visuospatial processing. Intelligence, 41, 712–727.

Cohen, S., Kamarck, T., & Mermelstein, R. (1983). A global measure of perceived stress. Journal of Health and Social Behavior, 24, 385–396.

Daneman, M., & Carpenter, P. A. (1980). Individual differences in working memory and reading. Journal of Verbal Learning and Verbal Behavior, 19, 450–466.

Daneman, M., & Merikle, P. M. (1996). Working memory and language comprehension: A meta-analysis. Psychonomic Bulletin & Review, 3, 422–433.

Dougherty, M. R., Hamovitz, T., & Tidwell, J. W. (2016). Reevaluating the effectiveness of the n-back training on transfer through the Bayesian lens: Support for the null. Psychonomic Bulletin & Review, 23, 306–316.

Duckworth, A. L., & Quinn, P. D. (2009). Development and validation of the Short Grit Scale (GRIT–S). Journal of Personality Assessment, 91, 166–174.

Ekstrom, R. B., French, J. W., Harman, H. H., & Dermen, D. (1976). Manual for kit of factor-referenced cognitive tests. Princeton, NJ: Educational Testing Service.

Engle, R. W. (2002). Working memory capacity as executive attention. Current Directions in Psychological Science, 11, 19–23.

Fan, J., McCandliss, B. D., Sommer, T., Raz, A., & Posner, M. I. (2002). Testing the efficiency and independence of attentional networks. Journal of Cognitive Neuroscience, 14, 340–347.

Faul, F., Erdfelder, E., Lang, A. G., & Buchner, A. (2007). G*Power 3: A flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behavior Research Methods, 39, 175–191.

Gibson, B. S., Gondoli, D. M., Johnson, A. C., Steeger, C. M., Dobrzenski, B. A., & Morrissey, R. A. (2011). Component analysis of verbal versus spatial working memory training in adolescents with ADHD: A randomized, controlled trial. Child Neuropsychology: A Journal on Normal and Abnormal Development in Childhood and Adolescence, 17(6), 546–563. doi:10.1080/09297049.2010.551186

Gibson, B. S., Gondoli, D. M., Kronenberger, W. G., Johnson, A. C., Steeger, C. M., & Morrissey, R. A. (2013). Exploration of an adaptive training regimen that can target the secondary memory component of working memory capacity. Memory & Cognition, 41, 726–737.

Glass, B. D., Maddox, W. T., & Love, B. C. (2013). Real-time strategy game training: Emergence of a cognitive flexibility trait. PLOS One, 8(8), e70350.

Goldman-Rakic, P. S. (1994). Working memory dysfunction in schizophrenia. Journal of Neuropsychiatry and Clinical Neurosciences, 6(4), 348–357.

Haier, R. J. (2014). Increased intelligence is a myth (so far). Frontiers in Systems Neuroscience, 8, 34. doi:10.3389/fnsys.2014.00034

Hale, S., Rose, N. S., Myerson, J., Strube, M. J., Sommers, M., Tye-Murray, N., & Spehar, B. (2011). The structure of working memory abilities across the adult life span. Psychology and Aging, 26, 92–110. doi:10.1037/a0021483