Abstract

The hypotheses that memories are ordered according to time and that contiguity is central to learning have recently reemerged in the human memory literature. This article reviews some of the key empirical findings behind this revival and some of the evidence against it, and finds the evidence for temporal organization unconvincing. A central problem is that, as many memory experiments are done, they have a prospective, as well as a retrospective, component. That is, if subjects can anticipate how they will be tested, they encode the to-be-remembered material in a way that they believe will facilitate performance on the anticipated test. Experiments that avoid this confounding factor have shown little or no evidence of organization by contiguity.

The idea that memory is organized according to time—functionally if not anatomically—is an old one. At a meeting of the Royal Society in 1682, Robert Hooke proposed that a person’s every thought is recorded in a tiny packet at the lead end of a growing chain of memory traces in the brain. Older memories are further back along the chain, although their order becomes jumbled as new memories push them aside in an outwardly growing spiral (Hintzman, 2003; Hooke, 1705/1969). The neuropsychologist T. Ribot (1882) used a similar idea to explain why, in amnesia, more recent memories are the most likely to be lost and the last to recover. The biologist Richard Semon proposed that “engrams” are laid down sequentially in “chronological strata” (Schacter, Eich, & Tulving, 1978; Semon, 1923). Similarly, Koffka (1935) invoked the concept of the “trace column,” which allowed application of Gestalt laws of perceptual organization to events distributed in time. Hypnotic age regression was interpreted by Reiff and Scheerer (1959) as moving back along the trace column. And Murdock (1974) proposed a “conveyor belt” model of short-term memory, in which memory traces continually recede into the past.

A temporally organized memory seems consistent with the intuition that one’s personal past consists of instances, episodes, and eras of one’s life, all of which were laid out in time. The temporal-organization hypothesis also fits naturally with the principle of learning by contiguity, which dates back at least to Aristotle. James (1890) argued that association by contiguity was a fundamental principle of brain physiology—a view that was reinforced by Pavlov’s (1927) objective experiments on conditioning in animals.

The decline of contiguity and temporal organization

Ironically, perhaps, time was not kind to the general hypothesis that memory is organized by time or to the principle of contiguity. More than 80 years ago, Thorndike (1931, 1932) had his students listen passively as he read a series of unrelated sentences aloud. He then tested for cued recall, and found that the end of a sentence would cue recall of the beginning of the same sentence, but not the beginning of the sentence that followed, even though it had come immediately after the cue. In paired-associate learning, Thorndike found no evidence that associations were learned between items in different pairs, even after they had co-occurred 24 times. Thorndike proposed that something he called “belongingness” was far more important than contiguity in forming associations, and speculated that contiguity by itself might be completely ineffective.

The Gestalt theorists viewed association as secondary to the concept of organization. A memory trace was seen as a configural, interactive representation that subsumed the components of the remembered experience (Köhler, 1941). Experiments using both visual and verbal materials showed that associations between elements were learned much better if the elements were presented as a unified whole than if they were seen side by side (Asch, 1969). According to Asch, “What is most important about contiguity is that it is a condition of the emergence of relations” (p. 98). Research on mnemonic techniques also supported the Gestalt hypothesis. Bower (1970) found that in order for visual imagery to aid paired-associate learning, the imagined objects needed to interact. Imaging to-be-associated objects in a side-by-side relation did not measurably enhance recall over a nonimagery control.

Two articles by Glenberg and Bradley (1979; Bradley & Glenberg, 1983) asked whether mental contiguity, by itself, makes any contribution to learning. In several experiments, subjects were required to rehearse pairs of words from one to 24 times while performing an unrelated digit-recall task. On a subsequent test, associative learning of the word pairs proved to be poor and showed no tendency to increase with the number of joint rehearsals. Bradley and Glenberg (1983) concluded that forming a word–word association requires processing the relations between the words—the more relations, the better the association—and does not depend on the amount of cognitive capacity devoted to processing the words or on how long they are held simultaneously in awareness.

A major effect of the so-called “cognitive revolution” of the mid-20th century was to take the explanation of human learning and memory away from the vicissitudes of the environment and hand it to the mind’s penchant for top-down organization. Contiguity by itself was not seen as sufficient to cause learning. To the extent that it played a role, this role was to facilitate the discovery—or deliberate construction—of meaningful relations between ideas held simultaneously in working memory. Contiguity was downgraded even in the study of animal learning, in which experiments showed that the mere co-occurrence of Pavlovian conditioned (CS) and unconditioned (US) stimuli was unimportant. For a CS to be effective, it must predict the time, place, and quality of the US, and not be redundant with a stimulus that already does so (Rescorla & Wagner, 1972).

Friedman (1993) conducted a thorough review of the laboratory and real-life literature on human memory for time, including some of the studies that will figure in the following discussion. He concluded that one’s sense of a temporally ordered personal past is a reconstruction based on associative information, relative order information, and a vague sense of an event’s recency, or distance into the past. “The sense of an absolute chronology in our lives is an illusion, a thin veneer on the more basic substance of coincidence, locations in recurrent patterns, and independent sequences of meaningfully related events” (pp. 61–62). If our personal memories seem to be temporally organized, that does not reflect an inherent property of the memory system; it is because the experiences recorded in memory were, in fact, distributed in time.

The resurrection of contiguity and temporal organization

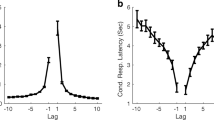

More recently, the concepts of temporal organization and contiguity have reemerged in the literature on human memory. The impetus seems to have been an article by Kahana (1996) reporting an extensive reanalysis of a trove of free-recall data from Murdock (1962). As is typical of free-recall studies, Murdock’s (1962) experiments were done by presenting list after list of words to a subject, each time testing for recall of as many words as the subject could come up with from the just-presented list. A key statistic reported by Kahana was the lag-CRP function: the conditional probability that recall of an item from a given input position was followed by recall of an item a given distance (or lag) away from it in the study list, in either the forward (+) or backward (–) direction. In an N-item list, lags can range from –(N – 1) to (N – 1), although usually only the center section (e.g., from –5 to +5) of the lag-CRP function is graphed. Figure 1, which I have replotted from Farrell and Lewandowsky (2008), is an exception.
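The computation behind a lag-CRP function can be made concrete with a minimal sketch. It assumes the opportunity-corrected form that is commonly used: each observed transition is counted at its lag, and is divided by the number of times a transition at that lag was still possible (i.e., the target item had not yet been recalled). The function name `lag_crp`, the input format, and the handling of repetitions and intrusions (the chain of transitions is simply broken) are illustrative assumptions, not a description of Kahana's (1996) analysis code.

```python
from collections import Counter

def lag_crp(recalls, list_length):
    """Pooled lag-CRP: {lag: observed transitions / possible transitions}.

    recalls: list of trials; each trial lists 1-based study positions in
    output order. Repetitions and out-of-range values (intrusions) are
    skipped and break the transition chain (an assumption of this sketch).
    """
    actual = Counter()    # transitions actually made at each lag
    possible = Counter()  # transitions that were available at each lag
    for trial in recalls:
        recalled = set()
        prev = None
        for pos in trial:
            if not 1 <= pos <= list_length or pos in recalled:
                prev = None
                continue
            if prev is not None:
                actual[pos - prev] += 1
                # Every not-yet-recalled item was a possible next response.
                for cand in range(1, list_length + 1):
                    if cand not in recalled:
                        possible[cand - prev] += 1
            recalled.add(pos)
            prev = pos
    return {lag: actual[lag] / possible[lag] for lag in sorted(possible)}

# One hypothetical trial from a five-item list, recalled in the order 1, 2, 3, 5, 4.
crp = lag_crp([[1, 2, 3, 5, 4]], list_length=5)
for lag, p in crp.items():
    print(f"lag {lag:+d}: {p:.2f}")
```

In this toy trial a lag of +1 was available three times and taken twice, so its conditional probability is 2/3; real analyses pool such counts over many lists and subjects.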

Fig. 1 Lag-CRP functions computed from 14 data sets, replotted from Fig. 2 of Farrell and Lewandowsky (2008). Data are restricted to correct-recall transitions from the first two output positions, and extreme lags have been outside-justified, as though all lists were of length 10. From “Empirical and Theoretical Limits on Lag-Recency in Free Recall,” by S. Farrell & S. Lewandowsky, 2008, Psychonomic Bulletin & Review, 15, p. 1240. Copyright 2008 by the Psychonomic Society

Although the lag-CRP plots from different individuals and experiments vary, they tend to be sharply peaked in the middle and biased in the forward direction, showing a tendency for nearby words to be recalled together, roughly in the order in which they were studied, as is illustrated in Fig. 1. A subtle confounding arises in these plots, however, from the nonuniform shape of the classic free-recall serial-position curve. Because most recalls come from near the beginning or the end of the list, medium-lag transitions—to or from the low central “asymptote”—would be relatively infrequent, even if the recall order were random. For example, if a subject recalls only the first and last three words of a ten-item list, lags of ±3 or ±4 would necessarily have frequencies of zero in the scoring of that subject’s data. This artifact helps explain why the complete lag-CRP function turns upward when it is plotted out to the extremes, as was documented by Farrell and Lewandowsky (2008) and can be seen in Fig. 1. The artifact also contributes to the peak in the center, although the peak is usually too high to be explained in this way (M. Kahana, personal communication, March 2013). Nor can it explain why transitions are biased in the positive direction, so there is little reason to doubt that the lag-CRP peak represents a real phenomenon.
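The ten-item example can be checked by enumeration. The sketch below lists every lag that can arise between two distinct recalled positions, whatever the output order, and reports which lags can never occur. The helper name `feasible_lags` is hypothetical.

```python
def feasible_lags(recalled_positions):
    """All lags that can occur between any two distinct recalled positions."""
    return {b - a
            for a in recalled_positions
            for b in recalled_positions
            if a != b}

# First three and last three positions of a ten-item list.
lags = feasible_lags([1, 2, 3, 8, 9, 10])
missing = [lag for lag in range(-9, 10) if lag != 0 and lag not in lags]
print(missing)  # [-4, -3, 3, 4]
```

Because only lags of ±1, ±2, and ±5 through ±9 are attainable for such a subject, the zero frequencies at ±3 and ±4 say nothing about how recall order is determined.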

The resurrection of contiguity as a theoretical concept derives primarily from attempts to explain the peaked shape of lag-CRP functions. Various versions of the theory exist (e.g., Howard & Kahana, 2002; Howard, Shankar, Aue, & Criss, 2015), but they all invoke a concept of temporal context, which is assumed to change in a regular way with the passage of time. When a word is studied, it is assumed to be associated with the prevailing context. When the next word is studied, it is not associated directly with the preceding word, but with the context. Because the temporal context changes relatively slowly, successive words tend to be associated with common contextual elements. If a word is recalled during testing, the context retrieved by that word mediates the activation of other words that were its neighbors during study. This process is assumed to be automatic or “obligatory.” Thus, because contextual change is driven by time, memory retrieval will be chronologically organized.
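The central intuition, that slowly drifting context makes neighboring items share contextual elements, can be illustrated with a toy sketch. It is not an implementation of any published model. It assumes that each studied item contributes its own orthogonal unit vector to the context, that the old context is scaled by a factor `rho` and the new input by `beta`, and that `rho` is chosen so the context keeps unit length. All names and parameter values are illustrative assumptions.

```python
import math

def study_contexts(n_items, beta=0.5):
    """Context state at the moment each of n_items is studied.

    Toy drift rule (an assumption of this sketch):
        context <- rho * context + beta * item_input
    where item_input is a unit vector unique to the item and
    rho = sqrt(1 - beta**2) keeps the context at unit length.
    """
    rho = math.sqrt(1.0 - beta ** 2)
    context = [1.0] + [0.0] * n_items  # start state, orthogonal to all items
    contexts = []
    for i in range(1, n_items + 1):
        context = [rho * x for x in context]  # old context fades
        context[i] += beta                    # current item is mixed in
        contexts.append(context)
    return contexts

def similarity(a, b):
    """Dot product of two context vectors (both have unit length here)."""
    return sum(x * y for x, y in zip(a, b))

ctx = study_contexts(10)
for lag in range(0, 5):
    print(lag, round(similarity(ctx[4], ctx[4 + lag]), 3))
```

Under these assumptions the overlap between the contexts of two items falls off as `rho` raised to the absolute lag, which is the sense in which nearby items are linked through shared context. The toy is symmetric in lag and makes no attempt to capture the forward bias of observed lag-CRP functions.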

The following discussion is limited to the issue of whether studies of memory retrieval reveal an automatic effect of temporal contiguity. Other topics that are often discussed under the rubric of “temporal organization” (e.g., recency effects, serial-position curves, and the role of temporal discrimination in recall) will be addressed only to the extent that they seem directly relevant to this question. Nor will I have much to say about different versions of temporal context theory or their formal implementations. The sole question is whether the available empirical evidence supports the key assumption that this theory shares with those that were mentioned in the opening paragraph: Is memory inherently organized by temporal contiguity?

Empirical evidence

Immediate free recall

Any memory experiment conducted in such a way that subjects can anticipate how they will be tested involves both prospective and retrospective memory processes. This has been understood for some time (e.g., Kay & Poulton, 1951), although it is often ignored. Atkinson and Shiffrin (1968) drew a now well-known distinction between the unvarying features of the memory system and its control processes, which “are selected, constructed, and used at the option of the subject and may vary dramatically from one task to another even though superficially the tasks may appear very similar” (p. 90). An intelligent subject faced with a difficult task will try to encode the to-be-remembered material in a way that he or she thinks will facilitate performance in that particular task. Free recall is difficult, as is evidenced by the many errors of omission, and the task by its nature allows subjects great leeway in how they adapt to its demands. In most investigations of free recall, the same subjects study and recall list after list, sometimes returning for multiple sessions or even for multiple experiments.

How do subjects learn to cope with free recall? Reitman (1970) obtained thinking-aloud protocols from a subject who was learning the task. He summarized the protocols as follows:

We find that much of the subject’s time is spent in working out and testing various coding and retrieval strategies. As he acquires experience with the task, he becomes sensitive to list length and begins to differentiate among the parts of the list. He notes that if he can judge when a list is within six or seven items of the end, he can try to retain the last few items by constant repetition of the final sequence that is presented. Gradually, he learns to switch to such a procedure at that point. Within the main body of the list, he experiments over trials with a variety of coding strategies. . . . The subject finds some items easier to recall than others, and words presented in subsequent lists sometimes remind him of items in previous lists he was unable to recall. He thus obtains a certain amount of feedback on his performance as the experiment proceeds, and he spends part of his time using that feedback to locate and try to improve inadequate strategies. (p. 475)

Reitman (1970) commented further:

If different subjects choose different strategies, or an individual changes from one strategy to another, then trying to induce the structure of a general decoupled memory component from data aggregated over such variations would appear on its face a difficult task. There is no more reason to expect such an effort to succeed than to expect, say, that we could induce the hardware or software organization of some unknown computer from an examination of its outputs aggregated over different unknown programs. (p. 479)

The quotes above come from a chapter based on a talk Reitman gave at a conference on modeling memory. His comments may not have resonated well with readers of that volume. I have found no evidence that the chapter has ever been cited.

Healey and Kahana (2014) performed an exhaustive analysis of data from 126 subjects, averaged over 16 free-recall lists each, in an attempt to objectively identify and characterize individual differences in memory search strategies. They concluded that although there were differences in the serial positions at which subjects initiated recall, features such as the serial-position effect and the peaked lag-CRP function were remarkably consistent. Although the individual subjects’ data showed considerable differences in the heights of the lag function’s peak, that feature appeared to always be present. The authors concluded that, although it was possible that different subjects converged on essentially the same strategy, it was more likely that the lag-CRP function reflects a fundamental, strategy-independent characteristic of the memory system.

However, there is another way to learn what strategies subjects use, and that is to ask them. In Experiment 1 of Delaney and Knowles (2005), subjects studied and were tested on four successive free-recall lists and then were asked to describe the strategies they had used on the different lists. The reported strategies were categorized as shallow, intermediate, or deep. The authors reported that the vast majority of the subjects began on the first list with a shallow strategy, such as rehearsal, but many switched to a deeper strategy by the fourth list. Deeper strategies included making up sentences, constructing a story, and creating interactive images. In their Experiment 2, Delaney and Knowles manipulated encoding strategies by asking some subjects to rehearse, and others to make up a story. Recall performance by the subjects instructed to make up stories was almost 60% higher than that of those who were told to rehearse.

Integrating the words into a kind of story, and surreptitiously using it to recall the words, would help a resourceful subject cope with several problems that are inherent in free-recall experiments: It would give the list an integrative structure, help separate the present list from earlier lists, and lessen the burden of remembering which words have already been recalled, to avoid repetition. A narrative would be constructed “on the fly,” as the words came into working memory, and at recall it would tend to be reconstructed in the same order. This idea is consistent with Laming’s (2006) observation that the order in which words are recalled can be predicted from the pattern of rehearsals during study. It seems likely that a strategy such as this is one cause of the peaked lag-CRP function.

It is obvious that a narrative technique would also be effective in serial recall. Bower and Clark (1969) had subjects study 12 successive lists of ten words each. Some subjects were told to construct a narrative to aid in recalling the list, and the control subjects were left to their own devices. All subjects were given as much time as they wanted for study, and all performed near ceiling on immediate recall. However, following the 12th list, they were asked to recall each list when given the first word as a cue. Median recall on this test was 93% for the narrative subjects, versus 13% for the controls.

Two experiments by Von Wright (1977; Von Wright & Meretoja, 1975) investigated task-specific transfer effects. Subjects were tested on recognition memory, serial recall, or free recall after they had been led to expect either a recognition, serial-recall, or free-recall test (a 3 × 3 design). Generally, subjects performed better if they got the expected test, but there was one exception: The best preparation for free recall was not the expectation of free recall, but of serial recall. It has been noted that the lag-CRP functions from serial recall and free recall are similar (Bhatarah, Ward, & Tan, 2008; Klein, Addis, & Kahana, 2005). This evidence points to some kind of seriation technique—such as making up a sentence or a story—as an effective way to do free recall. Most well-practiced subjects are likely to have settled on such a strategy by the time they have studied and recalled several lists, and free-recall subjects are typically given plenty of practice.

If the temporal organization that has been observed in free recall derives from a task-appropriate strategy, then subjects who do not expect free recall should not show that organization. Nairne, Cogdill, and Lehman (2013) performed such an experiment, although they did so for a different purpose. Subjects rated individual words for relevance to one of two scenarios and then were surprised by a test for free recall. As was indicated by the temporal factor measure (Sederberg, Miller, Howard, & Kahana, 2010), temporal organization was not significantly different from chance, in either scenario condition. This result is very hard to explain on the hypothesis that temporal order in free recall reflects an automatic or obligatory process.

Final free recall

One assumption of temporal context theory is that the same processes at work over short intervals of time, such as those that separate items within a list, also extend over longer intervals. Thus, if context mediates the association of two words within a list, it should do the same—although more weakly—for words in adjacent lists. The underlying mechanism is said to be scale-invariant.

Howard, Youker, and Venkatadass (2008) applied this logic to lag-CRP effects in final free recall. Subjects studied and recalled 48 lists, and then were given an unexpected final free-recall test on which they were asked to recall as many words as possible in any order from all of the preceding lists. Of interest were those cases in which the recall of one word was followed by recall of a word from a different list. The across-list lag-CRP function for these transitions displayed a peaked shape resembling that for within-list transitions—that is, jumps to nearby lists were more common than those between lists that were more widely separated in time. Similar final free-recall results have been reported by Unsworth (2008). The favored interpretation is that temporal context effects are not strictly local, but can be observed over intervals that differ by several orders of magnitude.

There is, however, an alternative explanation of the finding. At the time of the final test, each of the lists had already been recalled. In immediate free recall, as has been often observed, subjects sometimes mistakenly recall items from earlier lists; and as has been documented by Zaromb et al. (2006), these intrusions tend to be graded in recency, such that the most likely source is the immediately preceding list. As I have argued elsewhere (Hintzman, 2011), one cannot plausibly assume that memory encoding is turned off during the test phase of an experiment. Thus the initial recall experience for each list will be encoded as it unfolds, including intrusions from earlier lists. And not all such intrusions may be observable, because words incorrectly recalled from an earlier list may be edited out during testing (see the earlier quote from Reitman, 1970). If final free recall tends to follow the trajectory of initial recall, it should include transitions to nearby lists just as a matter of course.

Autobiographical memory

Moreton and Ward (2010) looked for time-scale invariance over longer intervals, by constructing an analogue of the lag-CRP function for autobiographical memories. Three different groups of undergraduates were given 8 min to recall as many personal experiences as they could, from either the last 5 weeks, the last 5 months, or the last 5 years, writing down a brief summary of each memory. Despite the different instructions, the subjects in each of the three groups came up with an average of about 20 memories. Following the recall period, each subject estimated a date for each remembered event. Using these dates, the researchers sorted the memories into bins of five successive weeks, five successive months, or five successive years (relative retention intervals, or RRIs), depending on the instructed time interval. They found no statistically reliable differences in RRI transitions across the three instruction groups, and all three displayed a lag function that was peaked at a lag of 0 (i.e., transitions within the same RRI). Such lag-0 transitions were significantly more common than would be expected if each subject had recalled the same memories in random order.
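A random-order baseline of this kind can be sketched as a shuffle test: count the lag-0 (same-bin) transitions in a subject's output, then recount after repeatedly shuffling the same memories. The function names, the number of shuffles, and the example data are hypothetical, and the procedure is an assumption about how such a baseline could be built rather than a reconstruction of Moreton and Ward's analysis.

```python
import random

def same_bin_transitions(bins):
    """Number of successive recalls that fall in the same time bin (lag 0)."""
    return sum(1 for a, b in zip(bins, bins[1:]) if a == b)

def shuffle_baseline(bins, n_shuffles=2000, seed=0):
    """Compare observed lag-0 transitions with randomly reordered recall.

    Returns (observed count, mean count under shuffling, proportion of
    shuffles with a count at least as large as the observed one).
    """
    rng = random.Random(seed)
    observed = same_bin_transitions(bins)
    shuffled = list(bins)
    total = 0
    at_least = 0
    for _ in range(n_shuffles):
        rng.shuffle(shuffled)
        count = same_bin_transitions(shuffled)
        total += count
        at_least += count >= observed
    return observed, total / n_shuffles, at_least / n_shuffles

# Hypothetical output order: the time bin assigned to each recalled memory.
example_bins = [1, 1, 1, 2, 2, 2, 3, 3, 3, 4, 4, 4]
observed, chance_mean, p_value = shuffle_baseline(example_bins)
print(observed, round(chance_mean, 2), p_value)
```

A baseline of this sort shows only that output order is nonrandom with respect to the time bins; it cannot by itself identify what produced the clustering.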

Moreton and Ward (2010) concluded that they had observed time-scale similarity in contiguity effects over much longer intervals than had been reported earlier, and that their results were consistent with temporal contiguity theory. However, they admitted almost in passing that the “analysis by time necessarily confounds time with other semantic factors that shape retrieval” (p. 514). This caution deserves to be emphasized, because human memory is content-addressable. If you recall one event from your past, it is likely to remind you of another event that you experienced with the same companions, or in the same location, or engaged in the same activity, and so on—and in everyday life, such factors tend to change with the passage of time. Subjective reports show that people deliberately use such cues when they search autobiographical memory (see Reiser, Black, & Kalamarides, 1986; Williams & Hollan, 1981). Thus, semantic factors alone could explain Moreton and Ward’s results.

If temporal contiguity theory is right, memory for one event should trigger memory of a contiguous event even if there is no other relationship between them. Television commercials should be associated with the programs with which they aired, and also with each other; unrelated news stories should be interconnected just because you learned of them at about the same time; phone calls should be associated with the activities they interrupted; and routine “time sharing” among tasks should produce a virtual spaghetti-plate of irrelevant connections. There seems to be little empirical information on this matter, but in Wagenaar’s (1986) study of his own autobiographical memory, he concluded that there was no tendency for the recall of one memory to prime another memory from the same day, except when the two memories were related.

Cued recall

In a typical paired-associate experiment, the subject studies pairs of words that appear side by side on the screen of a computer, and is then tested with one member of each pair as the cue to recall the other. This task entails different cognitive demands than free recall. Finley and Benjamin (2012) showed that subjects who expect such a cued-recall test do much better on it than do subjects who expect free recall, and vice versa. The researchers also showed that these differences were related to test-appropriate changes in the strategies adopted by their subjects as they became better at one of the tasks. It would seem, therefore, that a good test of contiguity theory would be to look for evidence of organization by contiguity in paired-associate learning. Davis, Geller, Rizzuto, and Kahana (2008) reported such a result. In two experiments, when subjects recalled a list item other than the target word, there was a strong tendency for the intrusion to come from a pair that had been nearby during study. The distribution of errors appeared similar to the typical lag-CRP function for transitions in free recall.

The experimental procedure used by Davis et al. (2008), however, was highly unusual. Presentation of the words was auditory, one word at a time—12 pairs in one experiment, and 18 pairs in another. A 2-s interval separated the onsets of the A and B members of a pair, and a 3-s interval separated the onset of each B term from the following A term. Moreover, testing could be either forward (A to B) or backward (B to A). Subjects did not know the direction of recall for a given pair at study, or even at test. The only clue to the “correct” direction of recall lay in the time intervals that had been experienced during study. It is hard to imagine how a subject would attempt to cope with the demands of this procedure, which resembles the probe-cue task of Waugh and Norman (1965) as much as it does standard paired-associate learning.

Subjects in the Davis et al. (2008) experiments studied and were tested on 11 to 16 different lists. It seems likely that at least some of them would have tried to use a seriation strategy of some kind—especially if they had prior experience in free or serial recall. The authors argued that their subjects would not have used a strategy of learning interpair associations, because “such a strategy would have been counterproductive insofar as it would have induced high levels of associative interference between pairs” (p. 67). Given the procedure, the question is how subjects could have avoided forming such associations.

Davis et al. (2008) did not say why they adopted this unusual methodology. If temporal context theory is right, it should be possible to demonstrate temporally graded intrusions after subjects have studied pairs in the usual side-by-side manner. Failure to find such a result would be good evidence that temporal context theory is wrong. It would also be consistent with the paired-associates observations of Thorndike (1931, 1932) that were described earlier.

Spacing and order judgments

If successive events are automatically connected to each other, either directly or through a shared temporal context, one would expect subjects to have at least an inkling that they happened at about the same time. In three experiments (Hintzman & Block, 1973; Hintzman, Summers, & Block, 1975), subjects studied a long list of words for a later memory test, and then, unexpectedly, were asked to judge how far apart certain pairs of events had been in the list. The spacings of the pairs ranged from 0 other items (corresponding to a lag of 1) up to 25 other items. The types of pairs tested were the same word (i.e., the item was repeated), associatively related words, unrelated words, and homographs in contexts that biased either the same or different meanings. Spacing judgments tracked the actual spacings to various degrees in all conditions, except for the ones that involved unrelated words. Figure 2 shows typical results from one of the experiments. In no case was the slope of the curve for unrelated pairs reliably different from zero. The flat functions—displaying no ability to discriminate a spacing of 0 from longer spacings when the words were unrelated—are hard to reconcile with the hypothesis that memory is organized by temporal contiguity.

The theoretical interpretation of these results is worth considering further. Hintzman and colleagues (Hintzman & Block, 1973; Hintzman et al., 1975) proposed that the second of two related events reminded the subject of the first, and that the reminding experience included an implicit judgment of how long ago the first event had occurred. The configural trace of the reminding experience, retrieved on the final test, would thereby include a clue to the temporal separation of the events. Other researchers took this interpretation further, to include order information. Logically, one could remember that A and B were about a minute apart without knowing which was first and which was second. But if A reminding one of B is subjectively different from B reminding one of A, the experience stored at the time of the second event should provide a clue to their order. Confirming this prediction, judgments of the orders of verbal items in a previous list are more accurate when the items are related than when they are unrelated (Tzeng & Cotton, 1980; Winograd & Soloway, 1985). According to Friedman (1993), such “order codes” for related events contribute to the illusion that memory is chronologically ordered.

Recognition memory

Schwartz, Howard, Jing, and Kahana (2005) looked for order effects in recognition memory for stimuli that had been downloaded from a travel-photograph website. Their subjects studied six different 64-picture lists, each followed by an old–new recognition test. The researchers compared recognition in two conditions: one in which the test items were preceded by the same pictures that had preceded them during study (adjacent), and one in which they were preceded by old items that had appeared more than ten positions away (remote). Adjacent items were recognized slightly but significantly better than remote items. This finding supports a role for temporal contiguity in recognition memory, but there is reason to doubt that contiguity by itself produced the result.

Consider again our explanation of the spacing judgment data in Fig. 2. Subjects were able to judge spacing only when the first and second items were meaningfully related, and these judgments were most accurate when the related items were temporally contiguous. Our account of the result assumes that when the subject notices that items are related, the experience is encoded in a configural trace. Recognition performance appears to benefit from such configural information. In the Hintzman, Summers, and Block (1975) study, related items seen at a spacing of 0 were recognized better than those in the other conditions, and a similar result had been reported earlier by Jacoby and Hendricks (1973). Experimenters often implicitly assume that items in a randomly ordered list are “unrelated,” and when the items are words sampled from a large word pool, this may not be too far from the truth; but travel scenes tend to fall into preferred categories, such as landscapes, buildings, and bodies of water. Schwartz et al. (2005) say nothing about how their stimuli were selected, but three of the five photos shown in their Fig. 1 are water scenes, and two include boats. This strongly suggests that successive pictures in their study were sometimes related, in ways that an attentive subject would notice.

Fig. 2 Mean spacing judgments from Exp. 1 of Hintzman, Summers, and Block (1975). As was also true in their two other experiments, the slope for unrelated words was not significantly different from zero. From “Spacing Judgments as an Index of Study-Phase Retrieval,” by D. L. Hintzman, J. J. Summers, & R. A. Block, 1975, Journal of Experimental Psychology: Human Learning and Memory, 1, p. 34. Copyright 1975 by the American Psychological Association.

Position judgments

If memory is temporally organized, judgments of when an event occurred should display a simple generalization gradient along the temporal dimension. Hintzman, Block, and Summers (1973) had subjects study multiple (two to four) lists of words. Each list was followed by a recognition memory test on a small subset of words from the middle of that list. Following the last list, subjects were given an unexpected test that required judgments of list membership and within-list position on the remaining (untested) words. Although judgments of list membership displayed a temporal gradient, judgments of within-list position did not. If an item was assigned to the wrong list, it tended to be assigned to the correct list position, even if that violated temporal contiguity. For example, if a word from near the end of List 2 was judged to have occurred in List 3, it tended to be placed near the end instead of the beginning. Also under incidental memory conditions, but using shorter lists and a different judgment task, Nairne (1991) found comparable results, from which he concluded that list and within-list position are independent memory dimensions.

Hintzman, Block, and Summers (1973) did not assume strict independence, but rejected the hypothesis that memory is chronologically ordered, proposing instead that items are associated with mental context. Such context might include posture, boredom, anticipation of the end of the list or of the experiment, and the subjects’ free-ranging “internal monologue” about strategies, distractions, and so on (Bower, 1972). If such mental context is associated with list items and retrieved on a test, it could provide useful clues both to a test item’s list membership and to its within-list position. An advantage of this hypothesis is that it is consistent with a more general theory of source judgments proposed by Johnson, Hashtroudi, and Lindsay (1993). Although this cognitive-context view is similar to temporal context theory in some respects, it does not assume that context change is driven primarily by time or that memory is chronologically organized.

Recency judgments

In the running recency judgment task, subjects go through a long list of items that includes test trials. In some studies, the subject gives a numerical judgment of how many items back in the list the test item was when it was last encountered (Hinrichs & Buschke, 1968); in others, the subject chooses the more recent of two test items (Yntema & Trask, 1963). Results based on both techniques show that apparent recency follows a roughly logarithmic function, with trace ages becoming less and less discriminable as the remembered events drift into the past. Lockhart (1969) found that forced-choice data could be predicted from the distributions of numerical recency judgments.
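To make the loss of discriminability concrete, the following minimal sketch assumes that apparent recency is exactly the natural logarithm of the lag. That is an idealization of the "roughly logarithmic" function described above, and the function names are illustrative rather than taken from any of the cited studies.

```python
import math


def apparent_recency(lag: int) -> float:
    """Idealized apparent recency: the log of the lag (number of items back).

    The exact log form is an assumption made for illustration only.
    """
    if lag < 1:
        raise ValueError("lag must be at least 1")
    return math.log(lag)


def discriminability(lag_a: int, lag_b: int) -> float:
    """Separation of two trace ages on the assumed log scale."""
    return abs(apparent_recency(lag_a) - apparent_recency(lag_b))


# The same 5-item separation, placed farther and farther into the past.
for recent, old in [(1, 6), (10, 15), (50, 55)]:
    print(recent, old, round(discriminability(recent, old), 3))
```

Under this assumption, a fixed difference in lag yields a smaller and smaller separation as both events recede, whereas a fixed ratio of lags (e.g., 2 vs. 4 and 10 vs. 20) yields the same separation.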

Introspection offers little guidance to how recency judgments are made, but some experimental evidence has suggested they are determined by the passage of time, not by the number of items that filled it (Hintzman, 2004). The best explanation may be that some retrievable quality of the memory trace is correlated with its age and that subjects are attuned to that. As was argued by Friedman (1993), the fact that people have access to crude “distance” information of this kind does not imply that memory is temporally organized.

A recent article advocating one version of temporal-context theory (Howard et al., 2015) placed great emphasis on a different kind of recency judgment experiment that uses short, rapidly presented lists. The prime example is Experiment 2 of Hacker (1980). Hacker showed subjects lists of 10 to 14 consonants, at rates of 5.6 to 16.7 items/s. Each list terminated with a mask and a forced-choice test for which of two probe consonants had been more recent in the preceding list. There were four subjects, each of whom had at least three practice sessions, followed by 24 sessions of 384 lists each. Choice reaction times decreased with the recency of the probe that was chosen, whether or not it was the correct choice. The recency of the nonchosen item had no reliable effect. These results are as predicted by models that assume backward parallel scanning for the first-encountered probe. Howard et al. (2015) proposed that a backward search from the present moment is the general basis of recency judgments, including forced-choice and numerical judgments in very long lists. This ignores the prospective nature of Hacker’s experiment. Over 1,000 trials of practice gave Hacker’s subjects ample opportunity to develop special encoding and retrieval strategies to cope with a very difficult immediate-memory task.
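The qualitative pattern in Hacker's data can be illustrated with a deliberately simplified, self-terminating backward scan. The sketch below is not Hacker's (1980) model: it is serial rather than parallel, and the availability probability, base time, and per-step time are hypothetical values chosen only for illustration.

```python
import random


def backward_scan_trial(study_list, probe_a, probe_b, p_available=0.8,
                        base_ms=300.0, step_ms=20.0, rng=random):
    """Scan from the most recent item backward and stop at the first probe found.

    Returns (chosen_probe, reaction_time_ms). All parameter values are
    hypothetical and serve only to illustrate the logic.
    """
    probes = {probe_a, probe_b}
    for steps, item in enumerate(reversed(study_list), start=1):
        # A probe's trace may be unavailable, in which case the scan passes it by.
        if item in probes and rng.random() < p_available:
            return item, base_ms + step_ms * steps
    # Neither trace was found: guess, after scanning past the whole list.
    guess = rng.choice([probe_a, probe_b])
    return guess, base_ms + step_ms * (len(study_list) + 1)


consonants = list("BCDFGHJKLM")  # K is 3 items back, D is 8 items back
print(backward_scan_trial(consonants, "D", "K", p_available=1.0))
```

In this sketch, the reaction time is fixed by the position of whichever probe terminates the scan, so it varies with the recency of the chosen probe and not with that of the nonchosen one. Errors arise when the trace of the more recent probe is unavailable and the scan continues back to the older probe.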

A study by Chan et al. (2009) challenges the idea that backward scanning is obligatory in such experiments. Some of their subjects were instructed to indicate which of the test probes occurred later in the list (a recency judgment), and others were instructed to indicate which probe occurred earlier in the list. Both conditions produced data consistent with parallel scanning, but reaction times and errors for the earlier-instruction condition were virtually the mirror image of those for the later-instruction condition—as though subjects could scan either forward or backward, depending on the instructions. An advocate of temporal-organization theory might propose that subjects in the Earlier condition first scanned back to find the beginning of the list and then scanned forward from there to find one of the probes. Contrary to that idea, reaction times in the Earlier condition were generally faster than those in the Later condition, suggesting that, if anything, forward scanning may be more natural.

Chan et al. (2009) concluded that a subtle difference in instructions can bias memory scanning in either direction. However, this conclusion again ignores the prospective nature of the experiments. Different subjects served in the two conditions, so they knew before each list was presented what they would be expected to do, and they had adequate opportunity to develop encoding strategies appropriate for their anticipated task. It is unwarranted to assume that the representations of the lists were the same in both conditions, and it is certainly unwarranted to assume that conclusions drawn from an immediate-memory task such as Hacker’s (1980) can be extended to other recency judgment paradigms.

Concluding comments

Is learning by contiguity a fundamental principle of human cognition? Are memories chronologically organized? I have reviewed findings from several memory tasks that have been offered in support of these hypotheses. In every case, the allegedly favorable results are open to a plausible alternative account, and studies that minimize confounding factors have been more consistent with the null hypothesis. Complete rejection of the contiguity principle may seem implausible, given the prominence of “Hebbian learning” in skill-learning theories (e.g., Ashby, Ennis, & Spiering, 2007; Petrov, Dosher, & Lu, 2005), but skill acquisition is cumulative and slow. By contrast, the kinds of learning seen in episodic-memory experiments can occur in a single trial lasting 1–3 s. Because episodic memory records conscious experience, these processes are also partly accessible to awareness. Considering this, the widespread reluctance of memory researchers to ask for subjective reports would seem to be counterproductive.

Elsewhere (Hintzman, 2011), I have discussed several implicit assumptions that haunt research in the study of memory, even though almost everyone knows they are wrong. These assumptions are insidious, because they are unstated and therefore are seldom examined. Among them are the fallacy of process-pure study and test phases (that encoding only happens during study and retrieval only happens at test) and the fallacy of list isolation (that only the target list exists in memory, so earlier lists can be ignored). To those we might add the fallacy of purely retrospective memory. This is the assumption that subjects process to-be-remembered material in the same way, regardless of how they expect to be tested. In colloquial terms, the assumption is wrong because experimental subjects (like the students most of them are) “study to the test.”

To some extent, all of these biases may derive from the computer analogy. The read function tells a computer what data to take in and when to do it, and the fetch function tells the computer when to retrieve data and where to find it. They are completely separate operations. The computer does not discover coincidences in the data, or transform it in ways other than what it has been explicitly instructed to do. As useful as the computer analogy has been for decades in guiding memory research, it is well to remember that humans are not digital computers.

The prospective nature of many memory experiments has been a focus of the previous discussion. A savvy experimental subject will actively prepare for an anticipated test. Free recall, as it is almost always done, is a case in point: If you accept that free-recall subjects develop encoding strategies, that the strategies are often effective, and that they are likely to influence the order in which words are recalled, then you cannot use the temporal ordering of free recall to measure an automatic or obligatory process, such as the one central to temporal context theory (Howard & Kahana, 2002; Howard et al., 2015). If strategic effects and other artifacts could somehow be removed from statistics such as the lag-CRP, what remains would surely be smaller than the literature makes it appear.
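For readers unfamiliar with the statistic, the lag-CRP (Kahana, 1996) gives, for each lag, the number of recall transitions made at that lag divided by the number of times a transition at that lag was still possible. The sketch below is a minimal version under that usual definition. The function name, the input format (recall orders given as 1-based serial positions), and the handling of repetitions are assumptions made for illustration.

```python
from collections import Counter


def lag_crp(recall_sequences, list_length):
    """Conditional response probability as a function of lag.

    recall_sequences: iterable of recall orders, each a list of 1-based
    serial positions. Intrusions are assumed to have been removed already;
    repeated recalls are simply skipped (a simplification).
    """
    actual = Counter()    # transitions actually made at each lag
    possible = Counter()  # transitions that could have been made at each lag
    for recalls in recall_sequences:
        recalled = set()
        previous = None
        for position in recalls:
            if position in recalled:
                continue  # ignore repetitions
            if previous is not None:
                # Every not-yet-recalled item defines an available lag.
                for candidate in range(1, list_length + 1):
                    if candidate not in recalled:
                        possible[candidate - previous] += 1
                actual[position - previous] += 1
            recalled.add(position)
            previous = position
    return {lag: actual[lag] / possible[lag] for lag in sorted(possible)}


# A subject who recalls a three-item list in forward serial order.
print(lag_crp([[1, 2, 3]], list_length=3))
```

Note that the statistic only describes the order of output. A peak at small lags would look the same whether it was produced by an automatic contiguity process or by a deliberate strategy, such as rehearsing and recalling the words in serial order.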

The studies reviewed here that failed to support a contiguity effect are linked by a common thread, despite their very different methodologies. In Thorndike’s (1931, 1932) paired-associate experiment, subjects knew they would be tested, but not on the surreptitiously arranged pairings. In the spacing judgment experiments (Hintzman & Block, 1973; Hintzman et al., 1975), subjects knew they were in a memory experiment, but could not have anticipated what kind of memory test they would be given. In the experiments on joint rehearsal (Bradley & Glenberg, 1983; Glenberg & Bradley, 1979), subjects thought the word pairs were incidental to their primary task. Likewise, none of the subjects in Nairne, Cogdill, and Lehman’s (2013) experiment were likely to have anticipated a test of free recall on the words they were rating. In all of these cases, the prospective-memory component that is present in many memory experiments—especially when subjects study and are tested on list after list—was absent. Such absence would seem to be a crucial condition for showing that a memory process is automatic or obligatory, and not induced by a strategy.

Notes

Irrelevant between-task associations should also be formed in memory experiments in which study words are alternated with a distractor task, such as simple arithmetic problems (e.g., Bjork & Whitten, 1974).

References

Asch, S. (1969). A reformulation of the problem of associations. American Psychologist, 24, 92–102.

Ashby, F. G., Ennis, J. M., & Spiering, B. J. (2007). A neurobiological theory of automaticity in perceptual categorization. Psychological Review, 114, 632–656. doi:10.1037/0033-295X.114.3.632

Atkinson, R. C., & Shiffrin, R. M. (1968). Human memory: A proposed system and its control processes. In K. W. Spence & J. T. Spence (Eds.), The psychology of learning and motivation: Advances in research and theory (Vol. 2, pp. 89–195). New York, NY: Academic Press.

Bhatarah, P., Ward, G., & Tan, L. (2008). Examining the relationship between free recall and immediate serial recall: The serial nature of recall and the effect of test expectancy. Memory & Cognition, 36, 20–34. doi:10.3758/MC.36.1.20

Bjork, R. A., & Whitten, W. B. (1974). Recency-sensitive retrieval processes in long-term free recall. Cognitive Psychology, 6, 173–189. doi:10.1016/0010-0285(74)90009-7

Bower, G. H. (1970). Imagery as a relational organizer in associative learning. Journal of Verbal Learning and Verbal Behavior, 9, 529–533.

Bower, G. H. (1972). Stimulus-sampling theory of encoding variability. In A. W. Melton & E. Martin (Eds.), Coding processes in human memory (pp. 85–123). Washington, DC: Winston & Sons.

Bower, G. H., & Clark, M. (1969). Narrative stories as mediators for serial learning. Psychonomic Science, 14, 181–182.

Bradley, M. M., & Glenberg, A. M. (1983). Strengthening associations: Duration, attention, or relations? Journal of Verbal Learning and Verbal Behavior, 22, 650–666. doi:10.1016/S0022-5371(83)90385-7

Chan, M., Ross, B., Earle, G., & Caplan, J. B. (2009). Precise instructions determine participants’ memory search strategy in judgments of relative order in short lists. Psychonomic Bulletin & Review, 16, 945–951. doi:10.3758/PBR.16.5.945

Davis, O. C., Geller, A. S., Rizzuto, D. S., & Kahana, M. J. (2008). Temporal associative processes revealed by intrusions in paired-associate recall. Psychonomic Bulletin & Review, 15, 64–69. doi:10.3758/PBR.15.1.64

Delaney, P. F., & Knowles, M. E. (2005). Encoding strategy changes and spacing effects in the free recall of unmixed lists. Journal of Memory and Language, 52, 120–130. doi:10.1016/j.jml.2004.09.002

Farrell, S., & Lewandowsky, S. (2008). Empirical and theoretical limits on lag-recency in free recall. Psychonomic Bulletin & Review, 15, 1236–1250. doi:10.3758/PBR.15.6.1236

Finley, J. R., & Benjamin, A. S. (2012). Adaptive and qualitative changes in encoding strategy with experience: Evidence from the test-expectancy paradigm. Journal of Experimental Psychology: Learning, Memory, and Cognition, 38, 632–652. doi:10.1037/a0026215

Friedman, W. J. (1993). Memory for the time of past events. Psychological Bulletin, 113, 44–66. doi:10.1037/0033-2909.113.1.44

Glenberg, A. M., & Bradley, M. M. (1979). Mental contiguity. Journal of Experimental Psychology: Human Learning and Memory, 5, 88–97. doi:10.1037/0278-7393.5.2.88

Hacker, M. J. (1980). Speed and accuracy of recency judgments for events in short-term memory. Journal of Experimental Psychology: Human Learning and Memory, 6, 651–675. doi:10.1037/0278-7393.6.6.651

Healey, M. K., & Kahana, M. J. (2014). Is memory search governed by universal principles or idiosyncratic strategies? Journal of Experimental Psychology: General, 143, 575–596. doi:10.1037/a0033715

Hinrichs, J. V., & Buschke, H. (1968). Judgment of recency under steady-state conditions. Journal of Experimental Psychology, 78, 574–579.

Hintzman, D. L. (2003). Robert Hooke’s model of memory. Psychonomic Bulletin & Review, 10, 1–14.

Hintzman, D. L. (2004). Time versus items in judgment of recency. Memory & Cognition, 32, 1298–1304. doi:10.3758/BF03206320

Hintzman, D. L. (2011). Research strategy in the study of memory: Fads, fallacies, and the search for the “coordinates of truth.” Perspectives on Psychological Science, 6, 253–271. doi:10.1177/1745691611406924

Hintzman, D. L., & Block, R. A. (1973). Memory for the spacing of repetitions. Journal of Experimental Psychology, 99, 70–74.

Hintzman, D. L., Block, R. A., & Summers, J. J. (1973). Contextual associations and memory for serial position. Journal of Experimental Psychology, 97, 220–229.

Hintzman, D. L., Summers, J. J., & Block, R. A. (1975). Spacing judgments as an index of study-phase retrieval. Journal of Experimental Psychology: Human Learning and Memory, 1, 31–40. doi:10.1037/0278-7393.1.1.31

Hooke, R. (1969). Lectures of light. Section VII. In The posthumous works of Robert Hooke (pp. 138–148). New York, NY: Johnson Reprint Corp (Original work published 1705).

Howard, M. W., & Kahana, M. J. (2002). A distributed representation of temporal context. Journal of Mathematical Psychology, 46, 269–299. doi:10.1006/jmps.2001.1388

Howard, M. W., Shankar, K. H., Aue, W., & Criss, A. H. (2015). A distributed representation of internal time. Psychological Review, 122, 24–53.

Howard, M. W., Youker, T. E., & Venkatadass, V. S. (2008). The persistence of memory: Contiguity effects across hundreds of seconds. Psychonomic Bulletin & Review, 15, 58–63. doi:10.3758/PBR.15.1.58

Jacoby, L., & Hendricks, R. L. (1973). Recognition effects of study organization and test context. Journal of Experimental Psychology, 100, 73–82.

James, W. (1890). The principles of psychology (Vol. 1). New York, NY: Henry Holt & Co.

Johnson, M. K., Hashtroudi, S., & Lindsay, D. S. (1993). Source monitoring. Psychological Bulletin, 114, 3–28. doi:10.1037/0033-2909.114.1.3

Kahana, M. J. (1996). Associative retrieval processes in free recall. Memory & Cognition, 24, 103–109.

Kay, H., & Poulton, E. C. (1951). Anticipation in memorizing. British Journal of Psychology, 42, 34–41.

Klein, K. A., Addis, K. M., & Kahana, M. J. (2005). A comparative analysis of serial and free recall. Memory & Cognition, 33, 833–839.

Koffka, K. (1935). Principles of gestalt psychology. New York, NY: Harcourt, Brace & World.

Kohler, W. (1941). On the nature of associations. Proceedings of the American Philosophical Society, 84, 489–502.

Laming, D. (2006). Predicting free recalls. Journal of Experimental Psychology: Learning, Memory, and Cognition, 32, 1146–1163. doi:10.1037/0278-7393.32.5.1146

Lockhart, R. S. (1969). Recency discrimination predicted from absolute lag judgments. Perception & Psychophysics, 6, 42–44.

Moreton, B. J., & Ward, G. (2010). Time scale similarity and long-term memory for autobiographical events. Psychonomic Bulletin & Review, 17, 510–515. doi:10.3758/PBR.17.3.510

Murdock, B. B., Jr. (1962). The serial position effect of free recall. Journal of Experimental Psychology, 64, 482–488. doi:10.1037/h0045106

Murdock, B. B., Jr. (1974). Human memory: Theory and data. Potomac, MD: Erlbaum.

Nairne, J. S. (1991). Positional uncertainty in long-term memory. Memory & Cognition, 19, 332–340. doi:10.3758/BF03197136

Nairne, J. S., Cogdill, M., & Lehman, M. (2013). Recall dynamics after survival processing. Paper presented at the 54th Meeting of the Psychonomic Society, Toronto, ON.

Pavlov, I. (1927). Conditioned reflexes (G. V. Anrep, Trans.). London, UK: Oxford University Press.

Petrov, A. A., Dosher, B. A., & Lu, Z.-L. (2005). The dynamics of perceptual learning: An incremental reweighting model. Psychological Review, 112, 715–743. doi:10.1037/0033-295X.112.4.715

Reiff, R., & Scheerer, M. (1959). Memory and hypnotic age regression: Developmental aspects of cognitive function explored through hypnosis. New York, NY: International Universities Press.

Reiser, B. J., Black, J. B., & Kalamarides, P. (1986). Strategic memory search processes. In D. C. Rubin (Ed.), Autobiographical memory (pp. 100–121). Cambridge, UK: Cambridge University Press.

Reitman, W. (1970). What does it take to remember? In D. A. Norman (Ed.), Models of human memory (pp. 469–509). New York, NY: Academic Press.

Rescorla, R. A., & Wagner, A. R. (1972). A theory of Pavlovian conditioning: Variations in the effectiveness of reinforcement and non-reinforcement. In A. H. Black & W. F. Prokasy (Eds.), Classical conditioning: II. Current research and theory (pp. 64–99). New York, NY: Appleton-Century-Crofts.

Ribot, T. (1882). Diseases of memory. New York, NY: Appleton-Century-Crofts.

Schacter, D. L., Eich, J. E., & Tulving, E. (1978). Richard Semon’s theory of memory. Journal of Verbal Learning and Verbal Behavior, 17, 721–743.

Schwartz, G., Howard, M. W., Jing, B., & Kahana, M. J. (2005). Shadows of the past: Temporal retrieval effects in recognition memory. Psychological Science, 16, 898–904. doi:10.1111/j.1467-9280.2005.01634.x

Sederberg, P. B., Miller, J. F., Howard, M. W., & Kahana, M. J. (2010). The temporal contiguity effect predicts episodic memory performance. Memory & Cognition, 38, 689–699. doi:10.3758/MC.38.6.689

Semon, R. (1923). Mnemic psychology (B. Duffy, Trans.). London, UK: George Allen & Unwin.

Thorndike, E. L. (1931). Human learning. New York, NY: Century.

Thorndike, E. L. (1932). The fundamentals of learning. New York, NY: Teachers College.

Tzeng, O. J., & Cotton, B. (1980). A study-phase retrieval model of temporal coding. Journal of Experimental Psychology: Human Learning and Memory, 6, 705–716. doi:10.1037/0278-7393.6.6.705

Unsworth, N. (2008). Exploring the retrieval dynamics of delayed and final recall. Journal of Memory and Language, 59, 223–236.

Von Wright, J. (1977). On the development of encoding in anticipation of various tests of retention. Scandinavian Journal of Psychology, 18, 116–120.

Von Wright, J., & Meretoja, M. (1975). Encoding in anticipation of various tests of retention. Scandinavian Journal of Psychology, 16, 108–112. doi:10.1111/j.1467-9450.1975.tb00170.x

Wagenaar, W. A. (1986). My memory: A study of autobiographical memory over six years. Cognitive Psychology, 18, 225–252.

Waugh, N. C., & Norman, D. A. (1965). Primary memory. Psychological Review, 72, 89–104. doi:10.1037/h0021797

Williams, M. D., & Hollan, J. D. (1981). The process of retrieval from very long-term memory. Cognitive Science, 5, 87–119.

Winograd, E., & Soloway, R. M. (1985). Reminding as a basis for temporal judgments. Journal of Experimental Psychology: Learning, Memory, and Cognition, 11, 262–271. doi:10.1037/0278-7393.11.2.262

Yntema, D. B., & Trask, F. P. (1963). Recall as a search process. Journal of Verbal Learning and Verbal Behavior, 2, 65–74.

Zaromb, F. M., Howard, M. W., Dolan, E. D., Sirotin, Y. B., Tully, M., Wingfield, A., & Kahana, M. J. (2006). Temporal associations and prior-list intrusions in free recall. Journal of Experimental Psychology: Learning, Memory, and Cognition, 32, 792–804. doi:10.1037/0278-7393.32.4.792

Cite this article

Hintzman, D.L. Is memory organized by temporal contiguity?. Mem Cogn 44, 365–375 (2016). https://doi.org/10.3758/s13421-015-0573-8

DOI: https://doi.org/10.3758/s13421-015-0573-8