Abstract

Recent scientific literature shows that emotional cues conveyed by human vocalizations and odours are processed asymmetrically by the canine brain. In the present study, dogs were suddenly presented, while feeding, with 2-D stimuli depicting human faces expressing Ekman’s six basic emotions (i.e. anger, fear, happiness, sadness, surprise, and disgust) or a neutral expression, displayed simultaneously in the left and right visual hemifields. A bias to turn the head towards the left (right hemisphere) rather than the right side was observed with human faces expressing anger, fear, and happiness, but an opposite bias (left hemisphere) was observed with human faces expressing surprise. Furthermore, dogs displayed higher behavioural and cardiac activity in response to pictures of human faces expressing clearly arousing emotional states. Overall, the results demonstrate that dogs are sensitive to emotional cues conveyed by human faces, supporting the existence of an asymmetrical emotional modulation of the canine brain in processing basic human emotions.

The ability to recognize other individuals’ emotions plays a pivotal role in the creation and maintenance of social relationships in animals living in social groups (Nagasawa, Murai, Mogi, & Kikusui, 2011). It allows them to correctly evaluate the motivation and intentions of another individual and to adjust their behaviour accordingly during daily interactions. For humans, facial expressions constitute an important source of information, such as age, gender, direction of attention (Tsao & Livingstone, 2008), and, most importantly, the individual’s emotional state (Ekman, Friesen, & Ellsworth, 2013).

Living in close contact with humans, dogs have developed unique socio-cognitive skills that enable them to interact and communicate efficiently with humans (Lindblad-Toh et al., 2005). The recent literature reports dogs’ ability to interpret different human visual signals expressed both by body postures (e.g. pointing gestures; Soproni, Miklósi, Topál, & Csányi, 2002) and by human faces. Dogs’ peculiar sensitivity to human faces is demonstrated by a specialization of temporal cortex regions for processing them (Cuaya, Hernández-Pérez, & Concha, 2016; Dilks et al., 2015) and by evidence from behavioural observations. In particular, by looking at human faces, dogs are able to detect the direction of humans’ gaze and their attentional and emotional state (Call, Bräuer, Kaminski, & Tomasello, 2003; Müller, Schmitt, Barber, & Huber, 2015). Dogs successfully discriminate between neutral facial expressions and emotional ones (Deputte & Doll, 2011; Nagasawa et al., 2011), and, among these, they can learn to differentiate happy faces from angry faces (Müller et al., 2015).

Recent literature shows that dogs process human faces similarly to humans. They are able to discriminate familiar human faces using the global visual information of both the face and the head (Huber, Racca, Scaf, Virányi, & Range, 2013), scanning all the facial features systematically (e.g. eyes, nose and mouth; Somppi et al., 2016) and relying on configural elaboration (Pitteri, Mongillo, Carnier, Marinelli, & Huber, 2014). Moreover, dogs, like humans, focus their attention mainly on the eye region, showing impaired face identification when it is masked (Pitteri et al., 2014; Somppi et al., 2016). Interestingly, their gazing pattern across the informative regions of the face varies according to the emotion expressed. Dogs tend to look more at the forehead region of positive emotional expressions and at the mouth and the eyes of negative facial expressions (Barber, Randi, Müller, & Huber, 2016), but they avert their gaze from angry eyes (Somppi et al., 2016). The attentional bias shown toward the informative regions of human emotional faces suggests, therefore, that dogs use facial cues to encode human emotions. Furthermore, in exploring human faces (but not conspecific ones), dogs, like humans, rely more on information contained in their left visual field (Barber et al., 2016; Guo, Meints, Hall, Hall, & Mills, 2009; Ley & Bryden, 1979; Racca, Guo, Meints, & Mills, 2012). Although symmetric, the two sides of human faces differ in emotional expressivity. Previous studies employing mirrored chimeric pictures (i.e. composite pictures made up of the normal and mirror-reversed hemiface images, obtained by splitting the face down the midline) and 3-D rotated pictures of faces reported that people perceive the left hemiface as displaying stronger emotions than the right one (Lindell, 2013; Nicholls, Ellis, Clement, & Yoshino, 2004), especially for negative emotions (Borod, Haywood, & Koff, 1997; Nicholls et al., 2004; Ulrich, 1993).
Considering that the muscles of the left side of the face are mainly controlled by the contralateral hemisphere, such a difference in the emotional intensity displayed suggests a dominant role of the right hemisphere in expressing emotions (Dimberg & Petterson, 2000). Moreover, in humans, the right hemisphere also has a crucial role in the processing of emotions, since individuals with right-hemisphere lesions showed impairments in their ability to recognize others’ emotions (Bowers, Bauer, Coslett, & Heilman, 1985). A right-hemispheric asymmetry in processing human faces has also been found in dogs, which showed a left gaze bias in attending to neutral human faces (Barber et al., 2016; Guo et al., 2009; Racca et al., 2012). Nevertheless, the results on dogs’ looking bias for emotional faces are inconsistent. Whilst a left gaze bias was shown in response to all human faces regardless of the emotion expressed (Barber et al., 2016), Racca et al. (2012) observed this preference only for neutral and negative emotions, but not for positive ones. Thus, the possibility that such a preference depends on the valence of the emotion conveyed and subsequently perceived cannot be excluded. Furthermore, it remains unclear whether dogs understand the emotional message conveyed by human facial expressions and which significance and valence they attribute to it.

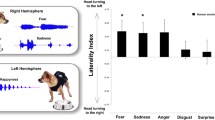

Recent studies indicate that the analysis of both the valence (lateralized behaviour) and arousal dimensions (physiological response) is a useful tool to investigate in depth the emotional functioning of the canine brain (Siniscalchi, d’Ingeo, Fornelli, & Quaranta, 2018; Siniscalchi, d’Ingeo, & Quaranta, 2016). In dogs, asymmetry in processing human emotional stimuli with different valence has been reported for olfaction (D’Aniello, Semin, Alterisio, Aria, & Scandurra, 2018; Siniscalchi et al., 2016) and audition (Siniscalchi et al., 2018). In particular, right hemisphere dominance was reported in response to human odours (e.g. veterinary sweat; Siniscalchi et al., 2011) and emotional vocalizations with a clear negative emotional valence (head turning preferentially toward the left in response to ‘fear’ and ‘sadness’ vocalizations; Siniscalchi et al., 2018). On the contrary, left hemisphere dominance was shown in the analysis of positive vocalizations (head turning preferentially toward the right in response to ‘happiness’ vocalizations; Siniscalchi et al., 2018) and during sniffing of approach-eliciting odours (collected in fear and physical stress conditions; Siniscalchi et al., 2016). Concerning visual emotional stimuli, dogs showed a bias to turn their head toward the left side (right hemisphere dominance) when presented with a potentially threatening stimulus (e.g. a snake silhouette; Siniscalchi, Sasso, Pepe, Vallortigara, & Quaranta, 2010). Overall, physiological responses support the hypothesis that dogs are sensitive to emotional cues conveyed by both human vocalizations and odours, since high cardiac activity was recorded in response to clearly arousing stimuli (Siniscalchi et al., 2016, 2018).

In the light of these reports, we presented to dogs pictures of human faces expressing the Ekman’s six basic emotions cross-culturally recognized (i.e. anger, fear, happiness, sadness, surprise, disgust; Ekman, 1993), evaluating their head-turning response (valence dimension), their physiological activity (cardiac activity) and their behaviour (arousal dimension).

Furthermore, in order to deepen the current knowledge about the mechanisms underlying dogs’ perception of human emotional faces and their similarity with those of humans, we presented dogs with two chimeric mirrored pictures of the same emotional face, comparing their responses to the right and left chimeras.

Materials and methods

Visual stimuli

Four right-handed volunteers, two men and two women, between ages and 33 years of age, were photographed while posing the six Ekman’s universal emotions (Ekman, 1993): fear, anger, happiness, surprise, sadness, and disgust. In addition, a picture of a neutral expression was taken, where subjects had to relax and look straight ahead (Moreno, Borod, Welkowitz, & Alpert, 1990).

All the facial emotional expressions were captured using a full HD digital camera (Sony Alpha 7 II ILCE-7M2K®) positioned on a tripod and centrally placed in front of the subject at a distance of about 2 m. Before being photographed, subjects were informed about the aim of the study and the procedure to be followed. They had to avoid make-up (except mascara) and to take off glasses, piercings, and earrings that could be used by dogs as a cue to discriminate the different expressions. Furthermore, an experimenter showed them a picture of the emotional facial expressions used by Schmidt and Cohn (2001), as a general reference for the expressive characteristics required. Subjects were then asked, upon oral command, to pose the different emotional facial expressions with the greatest possible intensity. The order of the oral commands was randomly assigned.

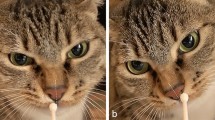

All the photographs were edited using Adobe Photoshop to homogenize the size of the stimuli and to add a uniform black background. Each face was cut along the vertical midline, separating the right and the left hemiface, following the procedure described in Moreno et al. (1990). A composite photograph (mirrored chimeric picture) was then created for each of the two hemiface pictures, consisting of the original hemiface and its mirror-reversed photograph (a right-right (R-R) or left-left (L-L) hemiface chimeric picture). As a result, two different pictures were obtained for each emotion, representing respectively the left and right hemiface expression of the same emotion (see Fig. 1). A Sencore ColorPro 5 colorimeter sensor and Sencore ColorPro 6000 software were used to calibrate the colours of the monitor to CIE Standard Illuminant D65 and to equalize the pictures’ brightness.

All the 56 visual stimuli (two pictures × seven emotions × four subjects) were then presented to four women and four men, between 23 and 62 years of age, in order to select the most significant ones. The pictures were shown as a PowerPoint slideshow in full screen mode on a monitor (Asus VG248QE®) and in a random order between subjects. Each volunteer was seated in front of the screen and had to rate on a 6-point scale (ranging between 0 and 5) the intensity of neutral, happiness, disgust, fear, anger, surprise, and sadness perceived for each facial expression shown. According to the questionnaire results, the pictures of a man and a woman were selected for the final test (see Fig. 1).

Subjects

Twenty-six domestic dogs of various breeds were recruited for this research. To be involved in the study, subjects had to satisfy several criteria: They had to live in households, to be food motivated, and not to be affected by chronic diseases. In addition, a Veterinary Behaviourist of the Department of Veterinary Medicine had to certify their health and the absence of any ocular and behavioural pathologies. Subjects had to fast for at least 8 hours before the testing session. We excluded five subjects: three dogs did not respond to any visual stimuli (i.e. did not stop feeding behaviour), and two dogs were influenced by the owner during the experiment. Hence, the final sample consisted of 21 subjects, 12 males (three neutered) and nine females (six neutered) whose ages ranged from 1 to 13 years (M = 3.90, SD = 2.83).

Experimental setup

The experiment was carried out in an isolated and dark room of the Department of Veterinary Medicine, University of Bari. A lamp was used to illuminate the room artificially and uniformly, so that no light reflections on the screens could interfere with the dogs’ perception of the visual stimuli. Two monitors (Asus VG248QE®, 24-in. FHD, 1920 × 1080; maximum brightness: 350 cd/m²) connected to a computer by an HDMI splitter were used to display the visual stimuli simultaneously. They were positioned on the two sides of a bowl containing the dogs’ favourite food, at a distance of 1.90 m and aligned with it (see Fig. 2).

In addition, two plastic panels (10 cm high, 50 cm deep) were located on the two sides of the bowl at a distance of 30 cm, to ensure the dogs’ central position during the test. Furthermore, two cameras, one recording in standard mode and the other in night mode, were used to record the dog’s behaviour during trials. They were positioned on tripods in front of the subject, at a distance of about 3 m and 3.50 m and at a height of 1.30 m and 2 m, respectively (see Fig. 2).

Procedure

Participants were randomly divided into two groups according to the gender of the presented human faces, so that each subject was presented with only female or only male pictures. The test consisted of two weekly trials in which a maximum of two different emotional face dyads were shown to each dog, until the full set of stimuli was completed (i.e. each subject was presented with all seven emotional faces).

The right-right (R-R) or left-left (L-L) hemifaces chimeric pictures of the same emotion were randomly assigned to each trial (and counterbalanced considering the whole sample), as well as the order of the emotional faces displayed.

Once in the testing room, the owner led the dog to the bowl on a loose leash, helped it to take a central position in the testing apparatus, and waited until it started to feed. The owner then let the dog off the leash and stood 2.5 m behind it. During the test, the owner had to maintain this position, looking straight at the wall ahead and avoiding any interaction with the dog. Ten seconds after the owner had taken position, the first emotional face was displayed. Visual stimuli appeared simultaneously on the two screens, where they remained for 4 seconds. The chimeric pictures of the different emotions were presented in the middle of the screen. The interstimulus interval was at least 7 seconds, but if a subject did not resume feeding within this time, the following stimulus presentation was postponed. The maximum time allowed to resume feeding was 5 minutes. Visual stimuli were presented as a PowerPoint slideshow in which the first slide, the last slide, and the slides between stimuli were uniformly black. Each of the seven emotional face dyads was displayed only once to each dog, since a high level of habituation to the stimuli was registered during the pilot test.

Two experimenters controlled the stimuli presentation from an adjacent room with the same system described in Siniscalchi et al. (2018).

Data analysis

Head-orienting response

Lateral asymmetries in the head turning response were considered since they represent an indirect parameter of the main involvement of the hemisphere contralateral to the side of the turn in processing the stimulus (Siniscalchi et al., 2010). Three different responses were evaluated: turn right, turn left, and no response, when a subject did not turn its head within 6 seconds from the picture appearance. The asymmetrical response was computed attributing a score of 1.0 for left head turning responses, −1.0 for the head turning to the right side or zero in the event of no turns of the head.
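The scoring rule above can be illustrated with a minimal Python sketch. It assumes that each dog's response to a given stimulus has already been coded as one of three labels; the label names, the helper functions, and the example responses are hypothetical and are not taken from the study.

```python
from math import sqrt
from statistics import mean, stdev

# Scores as defined in the text: left turn = 1.0, right turn = -1.0, no turn = 0.0
SCORES = {"left": 1.0, "right": -1.0, "none": 0.0}


def laterality_scores(responses):
    """Map per-dog response labels to laterality scores."""
    return [SCORES[r] for r in responses]


def group_index(responses):
    """Return (mean, SEM) of the laterality scores for one emotion category."""
    scores = laterality_scores(responses)
    sem = stdev(scores) / sqrt(len(scores))
    return mean(scores), sem


# Hypothetical responses of six dogs to one stimulus (not data from the study)
example = ["left", "left", "right", "none", "left", "left"]
index, sem = group_index(example)
print(f"laterality index = {index:.3f} (SEM = {sem:.3f})")
```

A positive group mean indicates a prevalence of left turns (right hemisphere), a negative one a prevalence of right turns (left hemisphere).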

Behavioural score

Dogs’ behaviours were video recorded continuously throughout the experiment. A total of 26 behaviours were considered, belonging to the stress behavioural category (Handelman, 2012): ears held in tension, slightly spatulate tongue, tongue way out, braced legs, tail down-tucked, panting, salivating, look away of avoidance, flattened ears, head lowered, paw lifted, lowering of the body posture, vocalization, whining, shaking of the body, running away, hiding, freezing, lips licking, yawning, splitting, blinking, seeking attention from the owner, sniffing on the ground, turn away, and height seeking posture.

Two trained observers analysed the video footage and allocated a score of 1 for each behaviour shown. The interobserver reliability was assessed by means of independent parallel coding of videotaped sessions and calculated as percentage agreement; percentage agreement was always more than 91%. Furthermore, the latency to turn the head toward the stimuli (i.e. reactivity) and the latency to resume feeding from the bowl after the appearance of the pictures were computed.
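As an illustration of the percentage-agreement measure, the following Python sketch compares two observers' codings item by item. It assumes that each observer produces one binary code per listed behaviour (1 = shown, 0 = not shown); the function name and the example codings are hypothetical.

```python
def percentage_agreement(coder_a, coder_b):
    """Percentage of coded items on which two observers assign the same code."""
    if len(coder_a) != len(coder_b):
        raise ValueError("both observers must code the same items")
    if not coder_a:
        raise ValueError("no items to compare")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return 100.0 * matches / len(coder_a)


# Hypothetical codings of ten behaviours for one trial (not data from the study)
observer_1 = [1, 0, 0, 1, 1, 0, 0, 0, 1, 0]
observer_2 = [1, 0, 0, 1, 0, 0, 0, 0, 1, 0]

print(f"agreement = {percentage_agreement(observer_1, observer_2):.1f}%")
# The behavioural score of a trial is the number of behaviours scored as shown
print("behavioural score (observer 1):", sum(observer_1))
```

Percentage agreement does not correct for chance agreement, so it is best read as a simple descriptive index.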

Cardiac activity

The heart rate response to the stimuli presentation was evaluated following the procedures and the analysis previously described in Siniscalchi et al. (2016) and Siniscalchi et al. (2018). The PC-Vetgard+™ Multiparameter wireless system, to which dogs were previously accustomed, was used to record the cardiac activity continuously during the test. The heart rate response was analysed from the appearance of the pictures for at least the following 10 seconds or until the dog resumed feeding (maximum time allowed was 5 minutes). For the analysis, a heart rate curve was obtained during a pre-test in order to calculate the basal heart rate average (HR baseline). The highest (HV) and lowest (LV) values of the heart rate registered during the test were scored. Moreover, the area delimited by the HR curve and the baseline was computed for each dog and each visual stimulus using Microsoft Excel®. The area under the curve (above baseline and under curve; AUC) and the area above the curve (under baseline and above curve; AAC) values were calculated as number of pixels using Adobe Photoshop. HR changes for each dog during presentations of different emotional faces were then analysed by comparing the different area values with the corresponding baseline.
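The two area measures can be sketched numerically as follows. This is not the pixel-counting procedure described above: the sketch swaps in trapezoidal integration of a sampled HR curve, so its areas are in bpm × s rather than pixels. The function name, sampling times, and HR values are hypothetical.

```python
def areas_relative_to_baseline(times, hr, baseline):
    """Return (AUC, AAC) for a sampled heart-rate curve.

    AUC: area between the curve and the baseline where HR is above baseline.
    AAC: area between the curve and the baseline where HR is below baseline.
    Trapezoidal rule; segments crossing the baseline are split at the crossing.
    """
    auc = 0.0
    aac = 0.0
    for i in range(len(times) - 1):
        dt = times[i + 1] - times[i]
        d0 = hr[i] - baseline
        d1 = hr[i + 1] - baseline
        if d0 >= 0 and d1 >= 0:
            auc += 0.5 * (d0 + d1) * dt
        elif d0 <= 0 and d1 <= 0:
            aac += 0.5 * (-d0 - d1) * dt
        else:
            # Opposite signs: locate the crossing by linear interpolation
            frac = d0 / (d0 - d1)  # fraction of the interval before the crossing
            first = 0.5 * abs(d0) * frac * dt
            second = 0.5 * abs(d1) * (1.0 - frac) * dt
            if d0 > 0:
                auc += first
                aac += second
            else:
                aac += first
                auc += second
    return auc, aac


# Hypothetical HR samples (bpm) at 1-s intervals with a baseline of 80 bpm
times = [0, 1, 2, 3, 4]
hr = [80, 100, 100, 80, 60]
auc, aac = areas_relative_to_baseline(times, hr, baseline=80)
print(f"AUC = {auc:.1f} bpm*s, AAC = {aac:.1f} bpm*s")
```

Keeping the two areas separate, rather than computing one signed area, mirrors the distinction drawn in the text between heart-rate increases and decreases relative to baseline.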

Statistical analysis

Head-orienting response

Given that data for percentage of responses (%Res) were not normally distributed, the analysis was conducted by means of nonparametric tests (Friedman’s ANOVA).

A binomial GLMM analysis was performed to assess the influence of emotion category, human face gender, and sex on the test variable (head-orienting response), with subjects as a random variable. To detect differences between the emotion categories, Fisher’s least significant difference (LSD) pairwise comparisons were performed. In addition, asymmetries at group level (i.e. per emotion category) were assessed via one-sample Wilcoxon signed ranks tests, to report significant deviations from zero.
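To make the one-sample test concrete, the sketch below computes a Wilcoxon signed-rank test of laterality scores against zero in plain Python. It is only an illustration under stated assumptions: zero scores (no head turn) are dropped, tied absolute values receive average ranks, and the p value comes from a normal approximation with tie correction and no continuity correction. Statistical packages may use different defaults, and the scores shown are hypothetical.

```python
from math import erfc, sqrt


def wilcoxon_one_sample(scores):
    """Signed-rank test of scores against zero.

    Returns (W_plus, z, two-sided p) using a tie-corrected normal approximation.
    """
    nonzero = [s for s in scores if s != 0]
    n = len(nonzero)
    if n == 0:
        raise ValueError("all scores are zero")
    # Rank absolute values, giving tied values their average rank
    order = sorted(range(n), key=lambda i: abs(nonzero[i]))
    ranks = [0.0] * n
    tie_term = 0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(nonzero[order[j + 1]]) == abs(nonzero[order[i]]):
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        t = j - i + 1
        tie_term += t**3 - t
        i = j + 1
    w_plus = sum(r for r, s in zip(ranks, nonzero) if s > 0)
    mean_w = n * (n + 1) / 4
    var_w = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean_w) / sqrt(var_w)
    p = erfc(abs(z) / sqrt(2))
    return w_plus, z, p


# Hypothetical scores for ten dogs: 7 left turns, 2 right turns, 1 no response
scores = [1.0] * 7 + [-1.0] * 2 + [0.0]
w_plus, z, p = wilcoxon_one_sample(scores)
print(f"W+ = {w_plus}, z = {z:.3f}, p = {p:.3f}")
```

Because the scores can only be 1.0, −1.0, or 0, every nonzero score shares the same rank, so the test statistic depends only on the counts of left and right turns.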

Latency to resume feeding, reactivity, behavioural score and cardiac activity

GLMM analyses were performed to assess the influence of emotion category, human face gender, and sex on the test variables (latency to resume feeding, reactivity, AUC, AAC, and stress behaviours), with subjects as a random variable. To detect differences between the emotion categories, Fisher’s least significant difference (LSD) pairwise comparisons were performed.

Ethics statement

The experiments were conducted according to the protocols approved by the Italian Minister for Scientific Research in accordance with EC regulations and were approved by the Department of Veterinary Medicine (University of Bari) Ethics Committee EC (Approval Number: 5/15); in addition, before the experiment began, informed consent was obtained from all the participants included in the study.

Results

Head-orienting response

Friedman’s ANOVA revealed that there was no effect of human facial expression on the percentage of response, χ2(6, N = 21) = 8.900, p = .179; average %: anger (90.4%), fear (80.9%), disgust (71.4%), sadness (71.4%), surprise (76.2%), happiness (95.2%), and neutral (71.4%).

Results for the head-orienting response to visual stimuli are shown in Fig. 3. A significant main effect of human facial expression was observed, F(6, 107) = 3.895, p = .001 (GLMM analysis). Pairwise comparisons revealed that this main effect was due to fear, anger, and happiness stimuli being significantly different from surprise (p < .001) and neutral (fear and anger vs. neutral, p < .005; happiness vs. neutral, p < .001). The analysis also revealed that disgust was significantly different from anger and happiness (p < .05). In addition, separate analyses for the different human faces revealed that for fear, anger, and happiness facial expressions, dogs consistently turned their head with the left eye leading (fear: Z = 117.000, p = .029; anger: Z = 150.000, p = .012; happiness: Z = 168.000, p = .007; one-sample Wilcoxon signed ranks test; see Fig. 3). A slight tendency to turn the head to the left side was observed for ‘sadness’ human faces, but it was not statistically significant (Z = 60.000, p = .593). On the other hand, dogs significantly turned their head to the right side in response to pictures of human ‘surprise’ emotional faces (Z = 34.000, p = .046). No statistically significant biases in the head-turning response were found for ‘disgust’ and ‘neutral’ visual stimuli (p > .05). In addition, binomial GLMM analysis revealed that the direction of the head-orienting response was not significantly influenced by human face gender, F(1, 107) = 3.820, p = .053; sex, F(1, 107) = 1.359, p = .246; or visual stimuli chimeras, F(1, 107) = 2.985, p = .087.

Head-orienting response to human faces expressing different emotions. Laterality index for the head-orienting response of each dog to visual stimuli: A score of 1.0 represents exclusive head turning to the left side and −1.0 exclusive head turning to the right side (group means with SEM are shown); asterisks indicate significant biases. *p < .05, **p < .01 (one-sample Wilcoxon signed ranks test)

Latency to resume feeding and reactivity

A significant main effect of visual emotional stimuli was identified in mean latency to resume feeding, F(6, 107) = 10.359, p < .001 (GLMM analysis; see Fig. 4a): pairwise comparisons revealed that the latency was longer for ‘anger’ than for any other emotional human face (p < .001, Fisher’s LSD). In addition, the dogs took longer to resume feeding from the bowl when they attended to the ‘fear’ stimulus than to the ‘disgust’ (p = .020) and ‘neutral’ (p = .031) stimuli. No effects of human face gender, F(1, 107) = 0.305, p = .582, or sex, F(1, 107) = 2.985, p = .087, on the latency to resume feeding were revealed.

Latency to resume feeding (a) and reactivity (b). a Latency to resume feeding from the bowl for each dog for each visual stimulus (group means with SEM are shown); asterisks indicate significant differences. *p < .05, ***p < .001, Fisher’s LSD test. b Latency time needed to turn the head toward the stimuli (i.e. reactivity) (group means with SEM are shown); asterisks indicate significant differences. *p < .05, ***p < .001, Fisher’s LSD test

Finally, GLMM analysis revealed that left-left chimeric pictures (M = 5.498, SEM = 0.323) elicited significantly longer latencies than right-right ones (M = 4.526, SEM = 0.324), F(1, 107) = 6.654, p = .011.

As for reactivity, a significant main effect of visual emotional stimuli was identified: F(6, 107) = 3.702, p = .002 (GLMM analysis; see Fig. 4b): Pairwise comparisons revealed that the reactivity was shorter for fear and anger than for any other emotional human faces (p < .05; Fisher’s LSD, fear and anger vs. neutral, p < .001). No effects of human face gender, F(1, 107) = 1.350, p = 0.248; sex, F(1, 107) = 0.158, p = .692; and visual stimuli chimeras, F(1, 107) = 0.005, p = .943, on the reactivity to respond to visual stimuli were revealed.

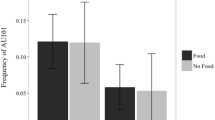

Behavioural score

As to the behavioural score, analysis of the stress behavioural category revealed a significant difference between visual stimuli, F(6, 106) = 29.074, p < .001 (GLMM analysis; see Fig. 5). Post hoc analysis revealed that dogs showed more stress-related behaviours when they attended to anger and happiness stimuli than to the other emotional faces (p < .001, Fisher’s LSD). In addition, the stress behavioural score was higher for fear than for disgust and neutral (p < .001). Pairwise comparisons also revealed that stress behaviours were higher when subjects attended to sadness and disgust stimuli than to surprise and neutral ones (sadness and disgust vs. surprise, p < .01; sadness vs. neutral, p < .001; disgust vs. neutral, p < .01).

No effects of human face gender, F(1, 106) = 0.012, p = .913, or sex, F(1, 106) = 0.301, p = .584, on scores for stress behaviours were revealed.

Finally, GLMM analysis revealed that left-left chimeric pictures (M = 4.528, SEM = 0.185) elicited significantly higher scores for stress behaviours than right-right ones (M = 3.564, SEM = 0.181), F(1, 106) = 15.495, p < .001.

Cardiac activity

Results for the cardiac activity are shown in Fig. 6. A statistically significant main effect of emotional face was observed in the overall time at which heart-rate values were higher than the basal average, AUC: F(6, 107) = 49.117, p < .001. Overall, pairwise comparisons revealed that AUC values were higher for stimuli depicting clearly arousing emotional states than for the other stimuli: fear, anger, and happiness vs. sadness, disgust, surprise, and neutral (p < .001); anger vs. happiness (p = .040); fear vs. happiness (p = .002), vs. neutral (p = .004). In addition, the overall time at which heart-rate values were higher than the basal average was higher for surprise than for disgust (p = .004) and neutral (p = .043). Similarly to the behavioural results, GLMM analysis for left-left and right-right human chimeric faces revealed that the composite pictures made up of the left hemiface elicited significantly higher AUC values than the composite pictures made up of the right hemiface (L-L pictures: M = 6,809,123.945, SEM = 178,468.906; R-R pictures: M = 5,933,745.620, SEM = 178,471.283), F(1, 107) = 12.878, p = .001. No effects of human face gender, F(1, 107) = 0.012, p = .913, or sex, F(1, 107) = 0.873, p = .352, on AUC values were revealed.

No statistically significant effects were observed in AAC values: emotion category, F(6, 107) = 0.578, p = .747; human face gender, F(1, 107) = 0.016, p = .899; sex, F(1, 107) = 0.018, p = .893; and visual stimuli chimeras, F(1, 107) = 0.627, p = .238.

Discussion

Overall, our results revealed side biases associated with left-right asymmetries in the head-orienting response to visual stimuli depicting Ekman’s basic emotional facial expressions. In particular, dogs turned their head to the left in response to anger, fear and happiness emotional faces. Given that the information presented in the lateral part of each visual hemifield is mainly analysed by the contralateral hemisphere (Siniscalchi et al., 2010), the left head-turning response suggests a right-hemispheric-dominant activity in processing these emotional stimuli. The prevalent activation of the right hemisphere in the visual analysis of anger and fear stimuli is consistent with the specialisation found in several vertebrates of right neural structures for the expression of intense emotions, including aggression, escape behaviour, and fear (Rogers & Andrew, 2002; Rogers, Vallortigara, & Andrew, 2013). Specifically, in dogs the main involvement of the right hemisphere in the analysis of arousing visual stimuli has been reported in response to ‘alarming’ black animal silhouettes (i.e. a cat silhouette, displaying a defensive threat posture, and a snake silhouette, considered as an alarming stimulus for mammals; Lobue & DeLoache, 2008; Siniscalchi et al., 2010). Furthermore, a right hemispheric bias in the analysis of stimuli with a high emotional valence has been found in the auditory and olfactory sensory modalities (Siniscalchi et al., 2016; Siniscalchi et al., 2018). In particular, regarding olfaction, a prevalent use of the right nostril (i.e. a right hemisphere activation, since the mammalian olfactory system ascends mainly ipsilaterally to the brain; Royet & Plailly, 2004) was observed during free sniffing of odours that are clearly arousing for dogs (e.g. adrenaline and veterinary sweat; Siniscalchi et al., 2011) and in sniffing at human ‘fear’ odours (i.e. sweat samples collected while humans watched a fear-eliciting video; Siniscalchi et al., 2016). Similarly, dogs showed an asymmetrical head-orienting response to the left side (right hemisphere activity) in response to playbacks of human fear emotional vocalizations (Siniscalchi et al., 2018).

Overall, our results from the arousal dimension supported the prevalent activation of the right hemisphere in the analysis of anger, fear, and happiness human faces, since tested subjects exhibited a longer latency to resume feeding and higher stress levels in response to these emotional stimuli than to the others over the experiment.

In addition, the dominant role of the right hemisphere in the analysis of anger, fear, and happiness faces is supported by a higher cardiac activity registered for these visual stimuli compared with the others. In fact, in dogs, as well as in other mammals, the right hemisphere has a greater effectiveness in the regulation of the sympathetic outflow to the heart, which is a fundamental organ for the control of the ‘fight or flight’ behavioural response (Wittling, 1995, 1997).

Thus, although humans and dogs show similarities in the perception of faces expressing emotions with a negative valence, such as anger and fear, our results about ‘happiness’ faces suggest that dogs process human smiling faces differently than humans do. One possible explanation for the involvement of the right hemisphere in the analysis of a ‘happiness’ emotional face is that, due to the absence of auditory information, the evident bared teeth with lifted lips characterizing human smiles could elicit an alerting behavioural response in dogs (right hemisphere activity). In fact, in dogs’ body communication, evident bared teeth with lips lifted and tongue retracted are a clear message to back off and are often followed by more serious aggressive behaviour (Handelman, 2012). This hypothesis is supported by recent findings demonstrating that dogs’ perception of canine and human facial expressions is based, indeed, on the composition formed by eyes, midface, and mouth (Somppi et al., 2016). An alternative hypothesis is that, given the low visual acuity in the periphery and the similarity in facial configuration between happy and angry expressions, dogs may mistake happy for angry expressions at the initial face detection stage, hence activating the right hemisphere to process negative emotions. However, differences in the latency of head turning toward different expression categories (i.e. anger and fear with respect to other emotional faces) indicate that dogs are able to detect different expressive faces presented in the peripheral visual field, suggesting that the latter hypothesis is unlikely.

The absence of a significant bias in the head turning response to ‘sadness’ visual stimuli could be explained by the fact that the functional and communicative levels of this emotion could vary in relation to different contexts in which it is produced and perceived. For example, although previous studies reported that human ‘sadness’ vocalizations are perceived as having a negative emotional valence (dogs showed a right hemisphere advantage in processing these sounds; Siniscalchi et al., 2018), Custance and Mayer (2012) demonstrated that ‘sadness’ facial expression displayed by a human pretending to cry could clearly elicit an approaching behavioural response (left hemisphere) in the receiver (namely the dog), even if they are unknown.

Regarding ‘surprise’ facial expressions, a clear right bias in dogs’ head-turning response was observed, suggesting the prevalent activation of the left hemisphere in processing these stimuli. Previous studies on humans reported that the emotion of surprise could express different levels of arousal intensity and, therefore, the emotional valence attributed to it (negative or positive) could be strictly dependent on the individual’s prior experiences (Maguire, Maguire, & Keane, 2011). One possible explanation for the involvement of dogs’ left hemisphere in the analysis of this emotion could be found in previous neuroimaging studies on humans, which showed a greater discrimination accuracy for stimuli with variable levels of arousal in the left human amygdala compared to the right one (Hardee, Thompson, & Puce, 2008; Morris et al., 1996). Furthermore, relying only on visual and not on auditory information (as previously described for ‘happiness’), dogs may have interpreted the ‘surprise’ face as a relaxed expression, which typically elicits an approaching behavioural response that is under left hemisphere control (Siniscalchi, Lusito, Vallortigara, & Quaranta, 2013). In interspecific communicative patterns, indeed, an open mouth without evident bared teeth and lifted lips is often associated with a relaxed emotional state and willingness to approach (Handelman, 2012). The latter hypothesis is supported by the evidence that, although cardiac activity increased during the presentation of ‘surprise’ stimuli, stress levels remained very low.

Regarding ‘disgust’ human emotional faces, no biases in dogs’ head-turning response were observed. This finding fits in with our previous results on dogs’ perception of human ‘disgust’ vocalizations (Siniscalchi et al., 2018) and is consistent with the hypothesis of Turcsán, Szánthó, Miklósi, and Kubinyi (2015) about the ambiguous valence that this emotion could have for the canine species. Dogs, indeed, perceive ‘disgust’ as being a less distinguishable emotion than the others, and the valence that they attribute to it could be strictly dependent on their previous experiences. In everyday life, the same object or situation could elicit different motivational and emotional states in humans and dogs. For instance, dog faeces could elicit a ‘disgust’ emotional state in the owner, while, on the contrary, they could be considered ‘attractive’ by dogs, eliciting their approaching responses.

Finally, as for the separate analysis of mirrored chimeric faces (composite pictures made up of the left-left and the right-right hemifaces), our results showed that dogs displayed a higher behavioural response and cardiac activity in response to left-left pictures compared to right-right ones. This suggests that dogs and humans show similarities in processing human emotional faces, since it has been reported that people perceive the left hemiface composite pictures as displaying stronger emotions than the right hemiface ones (Lindell, 2013; Nicholls et al., 2004). Moreover, this finding is consistent with the general hypothesis of the main involvement of the right hemisphere in expressing high-arousal emotions (Dimberg & Petterson, 2000).

Overall, our data showed that dogs displayed higher behavioural and cardiac activity in response to human face pictures expressing clear arousal emotional states, demonstrating that dogs are sensitive to emotional cues conveyed by human faces. In addition, a bias to the left in the head-orienting response (right hemisphere) was observed when they looked at human faces expressing anger, fear, and happiness, while an opposite bias (left hemisphere dominant activity) was observed in response to ‘surprise’ human faces. These findings support the existence of an asymmetrical emotional modulation of dogs’ brain to process basic human emotions. In particular, they are consistent with the valence model, since they show a right hemisphere main involvement in processing clearly arousing stimuli (negative emotions) and a left hemisphere dominant activity in processing positive emotions. Regarding ‘happiness’ faces, although dogs’ ability to differentiate happy from angry faces has been previously reported (Müller et al., 2015; Somppi et al., 2016), the prevalent use of the right hemisphere in response to the ‘happiness’ visual stimulus indicated that, in the absence of the related vocalization (‘happiness’ human vocalization elicits a clear activation of the left hemisphere in the canine brain), dogs could perceive this emotional face as a stimulus with a negative emotional valence, which points to the importance of teeth baring during human–dog interactions. Thus, the study findings highlight the importance of evaluating both the valence dimension (head-turning response) and the arousal dimension (behaviour and cardiac activity) for a deep understanding of dogs’ perception of human emotions.

References

Barber, A. L., Randi, D., Müller, C. A., & Huber, L. (2016). The processing of human emotional faces by pet and lab dogs: Evidence for lateralization and experience effects. PLOS ONE, 11(4), e0152393. https://doi.org/10.1371/journal.pone.0152393

Borod, J. C., Haywood, C. S., & Koff, E. (1997). Neuropsychological aspects of facial asymmetry during emotional expression: A review of the normal adult literature. Neuropsychology Review, 7(1), 41–60. https://doi.org/10.1007/BF02876972

Bowers, D., Bauer, R. M., Coslett, H. B., & Heilman, K. M. (1985). Processing of faces by patients with unilateral hemisphere lesions: I. Dissociation between judgments of facial affect and facial identity. Brain and Cognition, 4(3), 258–272. https://doi.org/10.1016/0278-2626(85)90020-X

Call, J., Bräuer, J., Kaminski, J., & Tomasello, M. (2003). Domestic dogs (Canis familiaris) are sensitive to the attentional state of humans. Journal of Comparative Psychology, 117(3), 257. https://doi.org/10.1037/0735-7036.117.3.257

Cuaya, L. V., Hernández-Pérez, R., & Concha, L. (2016). Our faces in the dog's brain: Functional imaging reveals temporal cortex activation during perception of human faces. PLOS ONE, 11(3), e0149431. https://doi.org/10.1371/journal.pone.0149431

Custance, D., & Mayer, J. (2012). Empathic-like responding by domestic dogs (Canis familiaris) to distress in humans: An exploratory study. Animal Cognition, 15(5), 851–859. https://doi.org/10.1007/s10071-012-0510-1

D’Aniello, B., Semin, G. R., Alterisio, A., Aria, M., & Scandurra, A. (2018). Interspecies transmission of emotional information via chemosignals: From humans to dogs (Canis lupus familiaris). Animal Cognition, 21(1), 67–78. https://doi.org/10.1007/s10071-017-1139-x

Deputte, B. L., & Doll, A. (2011). Do dogs understand human facial expressions? Journal of Veterinary Behavior: Clinical Applications and Research, 6(1), 78–79. https://doi.org/10.1016/j.jveb.2010.09.013

Dilks, D. D., Cook, P., Weiller, S. K., Berns, H. P., Spivak, M., & Berns, G. S. (2015). Awake fMRI reveals a specialized region in dog temporal cortex for face processing. PeerJ, 3, e1115. https://doi.org/10.7717/peerj.1115

Dimberg, U., & Petterson, M. (2000). Facial reactions to happy and angry facial expressions: Evidence for right hemisphere dominance. Psychophysiology, 37(5), 693–696. https://doi.org/10.1017/S0048577200990759

Ekman, P. (1993). Facial expression and emotion. American Psychologist, 48(4), 384. https://doi.org/10.1037/0003-066X.48.4.384

Ekman, P., Friesen, W. V., & Ellsworth, P. (2013). Emotion in the human face: Guidelines for research and an integration of findings. New York, NY: Elsevier.

Guo, K., Meints, K., Hall, C., Hall, S., & Mills, D. (2009). Left gaze bias in humans, rhesus monkeys and domestic dogs. Animal Cognition, 12(3), 409–418. https://doi.org/10.1007/s10071-008-0199-3

Handelman, B. (2012). Canine behavior: A photo illustrated handbook. Wenatchee, WA: Dogwise Publishing.

Hardee, J. E., Thompson, J. C., & Puce, A. (2008). The left amygdala knows fear: Laterality in the amygdala response to fearful eyes. Social Cognitive and Affective Neuroscience, 3(1), 47–54. https://doi.org/10.1093/scan/nsn001

Huber, L., Racca, A., Scaf, B., Virányi, Z., & Range, F. (2013). Discrimination of familiar human faces in dogs (Canis familiaris). Learning and Motivation, 44(4), 258–269. https://doi.org/10.1016/j.lmot.2013.04.005

Ley, R. G., & Bryden, M. P. (1979). Hemispheric differences in processing emotions and faces. Brain and Language, 7(1), 127–138. https://doi.org/10.1016/0093-934X(79)90010-5

Lindblad-Toh, K., Wade, C. M., Mikkelsen, T. S., Karlsson, E. K., Jaffe, D. B., Kamal, M., … Mauceli, E. (2005). Genome sequence, comparative analysis and haplotype structure of the domestic dog. Nature, 438(7069), 803. https://doi.org/10.1038/nature04338

Lindell, A. K. (2013). Continuities in emotion lateralization in human and non-human primates. Frontiers in Human Neuroscience, 7, 464. https://doi.org/10.3389/fnhum.2013.00464

LoBue, V., & DeLoache, J. S. (2008). Detecting the snake in the grass: Attention to fear-relevant stimuli by adults and young children. Psychological Science, 19(3), 284–289. https://doi.org/10.1111/j.1467-9280.2008.02081.x

Maguire, R., Maguire, P., & Keane, M. T. (2011). Making sense of surprise: An investigation of the factors influencing surprise judgments. Journal of Experimental Psychology: Learning, Memory, and Cognition, 37(1), 176. https://doi.org/10.1037/a0021609

Moreno, C. R., Borod, J. C., Welkowitz, J., & Alpert, M. (1990). Lateralization for the expression and perception of facial emotion as a function of age. Neuropsychologia, 28(2), 199–209. https://doi.org/10.1016/0028-3932(90)90101-S

Morris, J. S., Frith, C. D., Perrett, D. I., Rowland, D., Young, A. W., Calder, A. J., & Dolan, R. J. (1996). A differential neural response in the human amygdala to fearful and happy facial expressions. Nature, 383(6603), 812. https://doi.org/10.1038/383812a0

Müller, C. A., Schmitt, K., Barber, A. L., & Huber, L. (2015). Dogs can discriminate emotional expressions of human faces. Current Biology, 25(5), 601–605. https://doi.org/10.1016/j.cub.2014.12.055

Nagasawa, M., Murai, K., Mogi, K., & Kikusui, T. (2011). Dogs can discriminate human smiling faces from blank expressions. Animal Cognition, 14(4), 525–533. https://doi.org/10.1007/s10071-011-0386-5

Nicholls, M. E., Ellis, B. E., Clement, J. G., & Yoshino, M. (2004). Detecting hemifacial asymmetries in emotional expression with three-dimensional computerized image analysis. Proceedings of the Royal Society B: Biological Sciences, 271(1540), 663. https://doi.org/10.1098/rspb.2003.2660

Pitteri, E., Mongillo, P., Carnier, P., Marinelli, L., & Huber, L. (2014). Part-based and configural processing of owner’s face in dogs. PLOS ONE, 9(9), e108176. https://doi.org/10.1371/journal.pone.0108176

Racca, A., Guo, K., Meints, K., & Mills, D. S. (2012). Reading faces: Differential lateral gaze bias in processing canine and human facial expressions in dogs and 4-year-old children. PLOS ONE, 7(4), e36076. https://doi.org/10.1371/journal.pone.0036076

Rogers, L. J., & Andrew, R. (Eds.). (2002). Comparative vertebrate lateralization. Cambridge, UK: Cambridge University Press.

Rogers, L. J., Vallortigara, G., & Andrew, R. J. (2013). Divided brains: The biology and behaviour of brain asymmetries. Cambridge, UK: Cambridge University Press.

Royet, J. P., & Plailly, J. (2004). Lateralization of olfactory processes. Chemical Senses, 29(8), 731–745. https://doi.org/10.1093/chemse/bjh067

Schmidt, K. L., & Cohn, J. F. (2001). Human facial expressions as adaptations: Evolutionary questions in facial expression research. American Journal of Physical Anthropology, 116(S33), 3–24. https://doi.org/10.1002/ajpa.2001

Siniscalchi, M., d’Ingeo, S., Fornelli, S., & Quaranta, A. (2018). Lateralized behavior and cardiac activity of dogs in response to human emotional vocalizations. Scientific Reports, 8(1), 77. https://doi.org/10.1038/s41598-017-18417-4

Siniscalchi, M., d’Ingeo, S., & Quaranta, A. (2016). The dog nose “KNOWS” fear: Asymmetric nostril use during sniffing at canine and human emotional stimuli. Behavioural Brain Research, 304, 34–41. https://doi.org/10.1016/j.bbr.2016.02.011

Siniscalchi, M., Lusito, R., Vallortigara, G., & Quaranta, A. (2013). Seeing left- or right-asymmetric tail wagging produces different emotional responses in dogs. Current Biology, 23(22), 2279–2282. https://doi.org/10.1016/j.cub.2013.09.027

Siniscalchi, M., Sasso, R., Pepe, A. M., Dimatteo, S., Vallortigara, G., & Quaranta, A. (2011). Sniffing with the right nostril: Lateralization of response to odour stimuli by dogs. Animal Behaviour, 82(2), 399–404. https://doi.org/10.1016/j.anbehav.2011.05.020

Siniscalchi, M., Sasso, R., Pepe, A. M., Vallortigara, G., & Quaranta, A. (2010). Dogs turn left to emotional stimuli. Behavioural Brain Research, 208(2), 516–521. https://doi.org/10.1016/j.bbr.2009.12.042

Somppi, S., Törnqvist, H., Kujala, M. V., Hänninen, L., Krause, C. M., & Vainio, O. (2016). Dogs evaluate threatening facial expressions by their biological validity–Evidence from gazing patterns. PLOS ONE, 11(1), e0143047. https://doi.org/10.1371/journal.pone.0143047

Soproni, K., Miklósi, Á., Topál, J., & Csányi, V. (2002). Dogs’ (Canis familiaris) responsiveness to human pointing gestures. Journal of Comparative Psychology, 116(1), 27. https://doi.org/10.1037/0735-7036.116.1.27

Tsao, D. Y., & Livingstone, M. S. (2008). Mechanisms of face perception. Annual Review of Neuroscience, 31, 411–437. https://doi.org/10.1146/annurev.neuro.30.051606.094238

Turcsán, B., Szánthó, F., Miklósi, Á., & Kubinyi, E. (2015). Fetching what the owner prefers? Dogs recognize disgust and happiness in human behaviour. Animal Cognition, 18(1), 83–94. https://doi.org/10.1007/s10071-014-0779-3

Ulrich, G. (1993). Asymmetries of expressive facial movements during experimentally induced positive vs. negative mood states: A video-analytical study. Cognition & Emotion, 7(5), 393–405. https://doi.org/10.1080/02699939308409195

Wittling, W. (1995). Brain asymmetry in the control of autonomic-physiologic activity. In R. J. Davidson & K. Hugdahl (Eds.), Brain asymmetry (pp. 305–357). Cambridge, MA: MIT Press.

Wittling, W. (1997). The right hemisphere and the human stress response. Acta Physiologica Scandinavica: Supplementum, 640, 55–59.

Cite this article

Siniscalchi, M., d’Ingeo, S. & Quaranta, A. Orienting asymmetries and physiological reactivity in dogs’ response to human emotional faces. Learn Behav 46, 574–585 (2018). https://doi.org/10.3758/s13420-018-0325-2

DOI: https://doi.org/10.3758/s13420-018-0325-2