Abstract

This study examined the role of visual reliability and action relevance in mutual visual-proprioceptive attraction in a virtual grasping task. Participants initially enclosed either the width or the height of a visual rectangular object with two cursors controlled by the movements of the index finger and thumb. Then, either the height or the width of this object or the distance between the fingers was judged. Judgments of the object’s size were attracted by the felt finger distance, and, vice versa, the judged finger distance was attracted by the size of the grasped object. The impact of the proprioceptive information on object judgments increased, whereas the impact of visual object information on finger judgments decreased, when the reliability of the visual stimulus was reduced. Moreover, the proprioceptive bias decreased for the action-irrelevant stimulus dimension as compared with the action-relevant stimulus dimension. These results indicate sensory integration of spatially separated sensory signals in the absence of any direct spatial or kinematic relation between them. We therefore suggest that the basic principles of sensory integration apply to the broad research field on perceptual-motor interactions as well as to many virtual interactions with external objects.

Introduction

Research suggests that, under diverse conditions, the human body and its real or potential movement affect what we visually perceive (e.g., Bhalla & Proffitt, 1999; Kirsch & Kunde, 2013; Lindemann & Bekkering, 2009; Schubö, Prinz, & Aschersleben, 2004; Witt & Sugovic, 2012; for reviews, see, e.g., Harris et al., 2015; Proffitt & Linkenauger, 2013; Thomaschke, Hopkins, & Miall, 2012; Witt, 2011). This research has sparked intense debate, and the processes that might mediate body-related effects on judgments of visual objects’ features remain poorly understood (e.g., Durgin, Klein, Spiegel, Strawser, & Williams, 2012; Firestone & Scholl, 2015; Kirsch & Kunde, 2018; Philbeck & Witt, 2015). According to a common idea, initial sensory information is assumed to be scaled in motor units (e.g., Proffitt & Linkenauger, 2013). A related approach suggests that perception and action interact on the level of rather abstract features coded in a common representational domain (e.g., Hommel, Müsseler, Aschersleben, & Prinz, 2001). Both accounts postulate a certain kind of integration of visual and body-related signals that gives rise to changes in visual perception following changes in body-related variables. However, this critical integration process is underspecified.

We recently suggested that actions’ influences on perception are constrained by the same principles that apply for the sensory integration of multimodal signals in general (Kirsch, Herbort, Ullrich, & Kunde, 2017). This approach basically holds that the effects of action on perception are a consequence of the attempt to handle noisy sensory information (cf. Ernst, 2006). To generate a robust percept, signals that originate from the same event are integrated (“object unity principle” hereafter), whereas the weight of each signal depends on its precision, and the integrated estimate achieves a higher precision than the unimodal signals alone (“reliability-based weighting principle” hereafter).
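The reliability-based weighting principle can be illustrated with a minimal sketch. It assumes the standard maximum-likelihood formulation for independent Gaussian signals (cf. Ernst & Banks, 2002), in which each signal is weighted by its inverse variance. The function name `fuse` and all numerical values are hypothetical and serve only as an illustration.

```python
def fuse(estimates, sigmas):
    """Reliability-weighted average of independent Gaussian estimates.

    Reliability is defined as inverse variance; weights are normalized
    reliabilities. Returns the fused estimate, its standard deviation,
    and the weights.
    """
    reliabilities = [1.0 / s ** 2 for s in sigmas]
    total = sum(reliabilities)
    weights = [r / total for r in reliabilities]
    fused = sum(w * x for w, x in zip(weights, estimates))
    fused_sigma = (1.0 / total) ** 0.5
    return fused, fused_sigma, weights


# Hypothetical example: vision signals 40 mm (SD 2 mm),
# proprioception signals 48 mm (SD 4 mm).
fused, fused_sigma, weights = fuse([40.0, 48.0], [2.0, 4.0])
print(fused, fused_sigma, weights)
```

In this example the more precise visual signal receives the larger weight, and the standard deviation of the fused estimate is smaller than that of either single signal, which is the gain in precision referred to above.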

One well-known outcome of the integration process is the mutual biases of body-related and visual signals when they provide slightly divergent information. For example, if observers look at and grasp an object with the index finger and thumb and a discrepancy between the visually and the haptically specified object size is introduced, the perceived size of the object is in between the visual and haptic estimates (Ernst & Banks, 2002). Thus, if an effect of a certain body state (here, finger distance) on visual object perception is due to multisensory integration, then the visual appearance of that object should, in turn, also affect the perception of the body-related state. Note that this should be so, at least in theory, for any action-related influence on visual perception because virtually all these influences are demonstrated by varying body-related variables while holding the visual input constant (i.e., by introducing a kind of cross-modal conflict).

We have previously tested this prediction in a virtual grasping paradigm (Kirsch et al., 2017). The participants repeatedly enclosed a virtual object (a rectangle) with two cursors controlled by their index finger and thumb, and we subsequently measured the perceived visual size of the rectangle and the finger–thumb distance. The visual-proprioceptive discrepancy introduced during such virtual grasping resulted in a mutual impact of visual and body-related signals: the judgments of visual object size were attracted toward the felt finger posture, and, vice versa, the judged finger distance was attracted toward the visible size of the grasped object. Importantly, this result was observed even though the rectangle was at a substantial distance from the fingers and in a different spatial plane. Moreover, we made a conceptually similar observation in a virtual reaching task. These findings are intriguing because sensory integration is well documented for situations in which multimodal signals originate from spatially close origins, such as in natural grasping (see above), whereas distances between these signals typically decrease integration (Gepshtein, Burge, Ernst, & Banks, 2005). Our results, however, indicate sensory integration between clearly spatially separated sources of information.

Some observations from tool use already suggest that sensory integration can occur in spite of a spatial separation of multimodal signals under certain conditions. When participants in a cursor-control task are asked to perform arm movements on a horizontal plane while misaligned visual feedback (i.e., a cursor) of the movement direction is displayed in the fronto-parallel plane, the judgment of the felt hand direction shifts toward the seen cursor direction and, vice versa, the judged cursor direction shifts toward the felt hand direction (Debats, Ernst, & Heuer, 2017a, 2017b; Rand & Heuer, 2013, 2016). Moreover, Takahashi and colleagues reported evidence for visual-haptic integration when the hand was offset with respect to the object being grasped, but only if a tool, such as pliers or tongs, was used for grasping (Takahashi, Diedrichsen, & Watt, 2009; Takahashi & Watt, 2014, 2017). In these studies, the visual illustrations of tools connected the spatial position of the hand with the spatial position of the object. In a similar vein, in the study by Helbig and Ernst (2007), visual-haptic integration was observed when participants saw their hand manipulating an object through a mirror.

The object unity principle holds that signals are integrated only if they provide information about the same external object or event (i.e., if they are redundant), as described previously. Thus, whether and to which extent signals are integrated depends on the assessment of whether these signals are causally linked to the same event (cf. Deroy, Spence, & Noppeney, 2016; Ernst & Bülthoff, 2004; Körding et al., 2007; Shams & Beierholm, 2010; see Note 1). The tool-use studies mentioned above indicate that a kinematic correspondence between motor and visual signals or some explicit cues, such as a mirror or a visible tool, can provide evidence that two signals belong together and thus should be combined even though they have distinct spatial sources. The results of our study extend these findings in that they indicate sensory integration in the absence of any kinematic relation between body movements and an external object (see Note 2) as well as in the absence of any explicit cues, such as visible tools, which bridge the spatial distance between the seen and the felt object. Our results thus potentially broaden the applicability of sensory integration to virtually all cases where body movements have consequences for distal objects. However, more research is needed to substantiate this claim.

The present study served this purpose. Using the virtual grasping task, we focused on two issues. First, we tested how the reliability of visual signals affects the mutual perceptual biases of body-related and visual signals. The reliability-based weighting principle predicts an increase of proprioceptive impact on visual estimates and a decrease of visual impact on proprioceptive estimates with a decrease in reliability of a visual signal. Second, we tested whether the perceptual biases that we previously observed vary depending on the relevance of objects’ characteristics for action. For this purpose, we included visual estimates of the stimulus dimension that was unrelated to the grasping movements in addition to the judgments of the action-relevant dimension. After, for example, the height of the visual stimulus was enclosed by the movement cursors, either the height (action-relevant feature) or the width (action-irrelevant feature) of the stimulus was judged. The rationale was as follows. For an action-relevant stimulus feature, visual and proprioceptive signals can be considered as redundant (i.e., they inform about the same event). In contrast, for an action-irrelevant feature, the proprioceptive signal is unrelated to the visual signal; that is, the signals are not redundant. Because only redundant signals can be assumed to be integrated according to the object unity principle, visual-proprioceptive integration can be expected for the action-relevant, but not (or substantially less) for the action-irrelevant dimension (see Note 3). Accordingly, the impact of the proprioceptive signal on visual perception should be reduced or even disappear for the action-irrelevant as compared with the action-relevant dimension.
Note that the inverse bias (i.e., impact of visual signals on proprioceptive perception) should persist irrespective of whether the height or the width of the object is enclosed because visual and proprioceptive signals relate to the same event in both cases (i.e., each object feature can be considered as informative for the crucial finger distance). In short, a change in the reliability of visual signals should alter the (a)symmetry of visual-proprioceptive attraction, whereas changes in action relevance should be expressed in changes of the strength of this attraction (cf. Debats et al., 2017a).
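The two predictions can be made concrete with a small sketch. It assumes a Gaussian coupling prior on the difference between the visual and the proprioceptive estimate, which is one common way to formalize partial integration (cf. Ernst, 2006); it is not a model fitted in this study. Under this assumption, each estimate shifts toward the other signal by a fraction of the conflict equal to its own variance divided by the sum of both signal variances and the prior variance. The function name and all parameter values are hypothetical.

```python
def attraction_weights(sigma_v, sigma_p, sigma_c):
    """Fractions of the visual-proprioceptive conflict by which each
    estimate shifts toward the other signal.

    sigma_v, sigma_p: standard deviations of the visual and the
        proprioceptive signal (lower SD = higher reliability).
    sigma_c: SD of the assumed coupling prior (small = strong coupling).
    Returns (visual_shift, proprioceptive_shift).
    """
    total = sigma_v ** 2 + sigma_p ** 2 + sigma_c ** 2
    return sigma_v ** 2 / total, sigma_p ** 2 / total


# Hypothetical parameter values, chosen only for illustration.
low_noise = attraction_weights(sigma_v=1.0, sigma_p=3.0, sigma_c=2.0)
high_noise = attraction_weights(sigma_v=2.0, sigma_p=3.0, sigma_c=2.0)
weak_coupling = attraction_weights(sigma_v=1.0, sigma_p=3.0, sigma_c=4.0)

print("low noise:    ", low_noise)
print("high noise:   ", high_noise)
print("weak coupling:", weak_coupling)
```

With these values, degrading the visual signal raises the shift of the visual estimate and lowers the shift of the proprioceptive estimate (a change in asymmetry), whereas weakening the coupling lowers both shifts while leaving their ratio unchanged (a change in strength).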

Method

Participants

Thirty-six right-handed participants took part in the study. The sample included 27 females and nine males (mean age = 26 years, SD = 7). Participants gave written informed consent for the procedures and received monetary compensation (16 €) or course credit for their participation. All were naive to the purpose of the experiment and had normal or corrected-to-normal vision. The sample size was determined a priori based on a pilot experiment and ensured a power of 1 − β = 0.95 for effect sizes of dz = 0.56.

Apparatus and stimuli

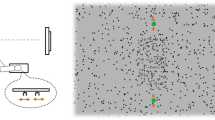

The study was performed in a dimly lit experimental room. Stimuli were displayed on a 19-in. monitor (Fujitsu Siemens P19-1) with a resolution of 1,280 × 1,024 pixels and a refresh rate of 60 Hz. One pixel of the monitor was 0.294 mm in size. Participants were seated at a distance of approximately 68 cm from the screen, with their head supported by a combined chin-and-forehead rest. The main visual stimuli were dark-gray dots (0.3 mm × 0.6 mm in size, with coordinates RGB: 36, 36, 36 in the color space; see also below), a pair of green circles (about 2.5 mm in diameter), a pair of blue lines (7 mm in length and 0.6 mm in width), and six orange lines arranged as a pair of U-shaped objects (7 mm × 11 mm × 7 mm; see Fig. 1). All stimuli were presented on a light-gray background (RGB: 128, 128, 128). The dots were randomly distributed along the defined width and height of a virtual rectangle. We refer to this target stimulus as “rectangle” hereafter.

Fig. 1 Apparatus and stimuli used in the present study. Initially, the fingers of the right hand moved the cursors (green circles) toward the opposite edges of a rectangular object consisting of small dots. Then, either the height or the width of the object or the current hand opening was judged with the left hand using a computer mouse. (Color figure online)

A finger movement device was mounted on a table and was manipulated by the index finger and thumb of the right hand. The fingers were placed on U-shaped metal plates which were interlocked so that moving one plate/finger resulted in a mirror-symmetric movement of the second plate/finger. We also bound the index and the middle fingers of the right hand together to prevent exploratory movements of the middle finger during the judgments of finger distance.

Procedure and task

The procedure consisted of two successive parts—object grabbing and perceptual judgment.

Object grabbing

At the beginning of each trial, a pair of dark-gray arrows (5 mm × 0.3 mm, RGB: 68, 68, 68) was presented. The arrows were placed vertically, one upon the other, with arrowheads oriented to each other. This was a signal to move the index finger and the thumb of the right hand, which were inserted into the finger movement device, toward each other to initiate the next trial. Then, in the majority of trials, a pair of green circles (i.e., cursors) and a rectangle appeared, and the participants had to place the circles at the opposite edges of the rectangle by moving their fingers (i.e., they were asked to virtually grab the rectangle with the visual movement cursors). The cursors always moved in the same direction as the fingers (i.e., toward each other or away from each other). The cursors disappeared when their distance to the edges was less than 5 mm, and a clicking noise was presented when this distance was less than 1 mm (i.e., when cursors reached the edges of the rectangle). Participants were instructed to maintain this finger position and to perform corrective movements when the noise disappeared (i.e., when the circles left the edges of the rectangle).

In some trials (baseline trials), a German word for “search” appeared at the middle of the screen. The task here was to move the fingers until a certain finger distance was reached, at which time a clicking noise was presented. That finger distance always corresponded to one of the finger distances adopted during virtual object grabbing. These trials were included to ensure that changes in the estimates of finger distance following virtual object manipulation were not due to a general bias toward mean finger posture (i.e., due to a central tendency effect).

Perceptual judgment

Following a period of approximately 1.5 s during which the noise was presented, the rectangle disappeared and either a pair of blue lines or orange, U-shaped objects appeared on the screen. When the blue lines appeared at the left side of the screen (~7 cm from the center), the task was to estimate the height of the rectangle. That is, participants had to adjust the distance between the lines so that it corresponded to the height of the rectangle. When the blue lines appeared at the bottom part of the screen (~7 cm from the center), the task was to estimate the width of the rectangle. When the orange objects were presented (~9 cm to the right of the screen center), the current distance between the index finger and the thumb had to be estimated. These judgments were made by pressing buttons of a computer mouse with the left hand. Pressing the left/right button led to an increase/decrease of the distance. The judgment was confirmed by pressing the middle mouse button (scroll wheel). The initial distance between the lines/objects randomly amounted to either 50% or 150% of the corresponding feature of the rectangle/actual finger distance (as measured between the inner plates of the movement device).

If participants changed the fingers’ posture of their right hand during the judgments, or if the left or the right mouse buttons were pressed during the grabbing phase, or if the middle mouse button was pressed before an estimate was made, then error feedback was presented, and the trial was repeated.

Design

There were four gain conditions. Finger movements were transformed to the movements of the cursors so that the finger distance amounted to 0.4, 0.8, 1.2, or 1.6 of the distance between the cursors. This ensured that a given rectangle feature (i.e., height or width) was associated with a finger distance during grabbing corresponding to 40%, 80%, 120%, or 160% of that feature. Two rectangles were used (3.7 cm × 4.3 cm and 4.3 cm × 3.7 cm) that consisted of either 69 (low-noise condition) or 12 (high-noise condition) dots randomly distributed within the defined rectangle area. Cursors moved either vertically or horizontally, that is, either along the height or along the width of the rectangle. Also, the type of judgment (rectangle’s width, rectangle’s height, finger distance) varied as mentioned. There were thus five main factors: gain (four levels), rectangle (two levels), visual noise (two levels), cursor orientation (two levels), and type of judgment (three levels), resulting in 96 experimental conditions. In addition, we included eight baseline conditions in which judgments of finger distance were required after no visual object was virtually grabbed. Here, the adopted finger postures corresponded to those required for grabbing each side of both rectangles (i.e., 3.7 cm and 4.3 cm) using the four gains.

The main experiment included 416 trials (four repetitions of each condition) distributed over eight blocks. The order of conditions was random. Before the main experiment started, participants performed 14 practice trials that were not included in the analyses.
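The design arithmetic can be checked with a short sketch that enumerates the factor combinations described above. The variable names, the condition labels, and the (width, height) ordering of the rectangle dimensions are assumptions made for illustration only.

```python
from itertools import product

gains = (0.4, 0.8, 1.2, 1.6)
rectangles = ((3.7, 4.3), (4.3, 3.7))  # cm; (width, height) order is assumed
noise_levels = ("low", "high")
cursor_orientations = ("vertical", "horizontal")
judgments = ("height", "width", "finger")
repetitions = 4

# Full crossing of the five main factors.
experimental = list(
    product(gains, rectangles, noise_levels, cursor_orientations, judgments)
)
# Baseline: each gain combined with each rectangle side length (cm).
baseline = list(product(gains, (3.7, 4.3)))
n_trials = repetitions * (len(experimental) + len(baseline))


def finger_distance_cm(gain, rectangle, orientation):
    """Finger distance adopted when the cursors enclose the rectangle:
    the gain multiplied by the enclosed rectangle feature."""
    width, height = rectangle
    enclosed = height if orientation == "vertical" else width
    return gain * enclosed


print(len(experimental), len(baseline), n_trials)
print(finger_distance_cm(0.4, (3.7, 4.3), "vertical"))
```

The enumeration yields 96 experimental and eight baseline conditions, which with four repetitions gives the 416 trials of the main experiment.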

Data analysis

For each trial, a constant error was computed as the difference between the estimated and the actual spatial distance. By definition, positive values reflect overestimation; negative values indicate underestimation. Then, mean constant-error values were calculated for each participant and each experimental condition. To measure the impact of the gain variation on judgment behavior, we performed linear regression analyses (cf. Debats et al., 2017b). The gain was used as a predictor variable and mean constant-error values as the dependent variable. These analyses were performed separately for each participant and for each combination of the remaining factors. The resulting slope parameters (i.e., unstandardized regression coefficients, “B”) indicating the magnitude of biases (and hence the strength of intersensory coupling) were then statistically analyzed using analyses of variance (ANOVAs) and a priori t tests. The data of rectangle and of finger judgments were analyzed separately. The raw data are publicly available (https://osf.io/uh2nj/).
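The slope computation can be sketched as follows for a single participant and a single condition. This is a minimal illustration of an ordinary least-squares slope, not the analysis script used for the study; the function names and the mean constant-error values are hypothetical.

```python
from statistics import mean


def constant_error(estimated, actual):
    """Positive values indicate overestimation, negative values
    underestimation."""
    return estimated - actual


def regression_slope(xs, ys):
    """Unstandardized slope B of an ordinary least-squares regression
    of ys on xs."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return sxy / sxx


gains = [0.4, 0.8, 1.2, 1.6]
# Hypothetical mean constant errors of one participant in one condition,
# one value per gain.
mean_errors = [1.0, 2.1, 2.9, 4.0]

b = regression_slope(gains, mean_errors)
print(b)
```

A positive slope means that the judgments grow with the gain, that is, with the finger distance adopted during grabbing; a slope near zero means that the gain manipulation left the judgments unaffected.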

Results

Mean constant-error values for each of the main experimental conditions are shown in Fig. 2a. An increase in gain was generally associated with an increase in the rectangle estimates and with a decrease in the judged finger distance, as expected. This replicates our previous results. More importantly, the magnitude of the biases varied as a function of the match between the judged stimulus dimension and the relevance of this dimension for action: the factor gain had a stronger impact on rectangle judgments when the judged dimension was relevant for the grabbing action than when it was not relevant. Moreover, the gain had more impact on rectangle judgments and less impact on finger judgments in the high-noise condition as compared with the low-noise condition.

These observations are supported by the results of the regression analyses. Figure 2b shows mean slope coefficients of the different experimental conditions when regressing the judgment data on the four gains. Except for the baseline conditions (see below), all coefficients were significantly different from zero, all ps < .04. An ANOVA, including these values as a dependent measure, and rectangle, visual noise, cursor orientation, and type of judgment (only height and width) as within-participants factors, revealed a significant main effect for the factor visual noise, F(1, 35) = 6.87, p = .013, ηp2 = .164, and a significant interaction between cursor orientation and type of judgment, F(1, 35) = 6.04, p = .019, ηp2 = .147 (other ps > .139). The slope was lower for the vertical than for the horizontal cursor orientation when the width was judged, t(35) = 2.26, p = .030, and vice versa when the height judgment was required, t(35) = 2.03, p = .050. The slope of the height judgments was larger than the slope of the width judgments when the cursors moved vertically, t(35) = 2.05, p = .048, and vice versa when the cursors moved horizontally, t(35) = 1.61, p = .116.

An ANOVA including the coefficients of finger judgments and rectangle, visual noise and cursor orientation as within-participants factors revealed significant main effects for visual noise, F(1, 35) = 5.25, p = .028, ηp2 = .130, and cursor orientation, F(1, 35) = 7.03, p = .012, ηp2 = .167, and a significant interaction between rectangle and cursor orientation, F(1, 35) = 11.64, p = .002, ηp2 = .250. In addition to the predicted effect of visual noise, we observed larger gain effects (i.e., slopes) in the finger judgments for the vertical than for the horizontal cursor orientation (cf. Fig. 2b). Also, the wider rectangle was associated with a more negative slope when the cursor moved horizontally (M = −12.1) than when it moved vertically (M = −11.4), whereas the opposite was true for the narrower rectangle (with mean values of −9.9 and −13.5). We do not discuss these side effects further.
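The reported effect sizes follow from the F values and their degrees of freedom. The sketch below uses the standard conversion from F to partial eta squared; the function name is hypothetical, and the F values are those reported above.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic:
    (F * df_effect) / (F * df_effect + df_error)."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F values reported in the Results, all with df = (1, 35).
reported_f = {
    "rectangle judgments, visual noise": 6.87,
    "rectangle judgments, orientation x judgment": 6.04,
    "finger judgments, visual noise": 5.25,
    "finger judgments, cursor orientation": 7.03,
    "finger judgments, rectangle x orientation": 11.64,
}

effect_sizes = {
    label: round(partial_eta_squared(f, 1, 35), 3)
    for label, f in reported_f.items()
}
for label, eta in effect_sizes.items():
    print(f"{label}: {eta:.3f}")
```

The recomputed values agree with the reported effect sizes to three decimal places.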

When no rectangle was presented (i.e., in the baseline trials), mean slope coefficients of finger judgments amounted to −.4 and 1.3 for the finger distances corresponding to the grabbing of the wider and the narrower rectangle, respectively. Neither was significantly different from zero, t(35) = .25, p = .806, and t(35) = .68, p = .503, respectively. Thus, the observed biases in the finger judgments following virtual grabbing were not due to a tendency toward a mean finger distance.

As shown in Fig. 2, participants generally tended to overestimate the size of the rectangle, and this bias varied to some extent depending on the type of judgment, cursor orientation, and visual noise. Moreover, finger distances were underestimated on average. These tendencies could have several origins. For example, a space filled with a number of distinct elements usually appears larger than when it is empty (e.g., Collier & Lawson, 2016). Accordingly, the extent of the rectangle should appear larger than the distance between the lines by means of which the judgment was made. As a consequence, the distance between the lines is adjusted to a larger rectangle. Moreover, the observed underestimation of finger distances could be related to the known changes in proprioceptively sensed limb position during visual occlusion (e.g., Wann & Ibrahim, 1992). A detailed discussion of these and related factors that do not relate to the introduced visual-proprioceptive discrepancy (i.e., gain) is beyond the scope of the present study.

Discussion

We examined the role of visual reliability and action relevance in perceptual attraction between proprioceptive and visual signals in a virtual grasping task. Participants initially enclosed either the height or the width of a visual object with two manually controlled cursors. Then, they judged either the height or the width of the object, or the distance between their fingers. Finger movements were transformed into cursor movements by different gains so that the same object was grabbed with different finger openings. The judgments of the object’s size were generally attracted by the felt finger posture, and, vice versa, judged finger distance was generally attracted by the size of the grasped object. However, the impact of the finger distance on rectangle judgments was substantially smaller than the impact of the object size on finger judgments. This observation is in line with previous reports (e.g., Kirsch et al., 2017; Rand & Heuer, 2013) and indicates that visual signals received more weight than the proprioceptive information under the present task conditions. The visual input thus appears to have been generally more reliable in spite of the implemented degradation of the visual stimulus, its short presentation, and proprioceptive information being available during perceptual judgments.

Importantly, this asymmetry of the perceptual attraction depended on the quality of visual information: The impact of proprioceptive information on visual object judgments increased while the impact of visual object information on proprioceptive judgments decreased when the visual stimulus was more degraded. Moreover, the proprioceptive bias in object judgments was reduced when the action-irrelevant as compared with the action-relevant stimulus dimension was judged. This indicates that the strength of sensory integration was smaller for the action-irrelevant than for the action-relevant dimension.

This predicted outcome corresponds well to the core principles of sensory integration, according to which multimodal signals are integrated when they provide information about the same environmental property, taking into account the precision of each signal. Several factors such as spatial or temporal correspondences or causal beliefs about the task can inform about whether signals relate to a common source (see, e.g., Bresciani et al., 2005; Gepshtein et al., 2005; see also Deroy et al., 2016, for a review). In the present virtual grasping task, there was a high spatial and temporal similarity between the finger movements and their visual counterparts (i.e., cursors). Moreover, participants consistently received auditory feedback indicating a virtual touching of object edges. Thus, it was rather obvious that the finger movements referred to the visual target object in spite of their spatial distance.

However, the proprioceptive signal was potentially informative only for the stimulus dimension being virtually grasped, but not for its action-irrelevant dimension. Nonetheless, an indication of sensory integration was also observed for the action-irrelevant stimulus dimension, although it was less pronounced than for the action-relevant dimension. In other words, finger movements affected the size of the target object in addition to their predicted impact on the object’s shape. This suggests that participants partly erroneously combined signals that did not relate to the same environmental feature (but that related to the same object). The reason for this outcome is not yet clear. One possibility is that it is rather difficult to isolate two features of a single object, such as its height and width, as potentially independent information sources, at least as compared with separate objects. This would be consistent with a more general version of the object unity principle than we proposed. In particular, sensory signals from one modality could be combined with signals from another modality as long as they belong to the same object and irrespective of whether they inform about the same object feature. Alternatively, processes other than causal inference, such as a response bias, could be responsible for this specific outcome.

In the research on the link between perception and action, the finding of a mutual impact of both is not new (e.g., Grosjean, Zwickel, & Prinz, 2009; Schubö et al., 2004; Zwickel, Grosjean, & Prinz, 2010a, 2010b). Both repulsive (or “contrast”) and attraction (or “assimilation”) biases have been reported, and factors such as “feature overlap,” “functional relation,” “concurrency,” “ambiguity,” and “planning versus control” have been assumed to determine the direction of the effect (see Thomaschke et al., 2012; Zwickel & Prinz, 2012, for reviews). However, this issue is still not resolved (cf. Kirsch & Kunde, 2014). Interestingly, contrast effects have often been reported when stimuli and actions were functionally unrelated and merely overlapped in time. In contrast, when stimuli and actions were functionally related, assimilation effects have often been observed (cf. Zwickel & Prinz, 2012). This pattern of results fits well into the multisensory perspective suggested here. Contrast phenomena can be expected when perceptual and body-related signals are assumed to be unrelated and thus should be kept separate. Attraction biases, in contrast, indicate a sensory coupling of signals that are assumed to belong together. Thus, the direction of an effect (as well as its magnitude) could mainly be determined by causal inference processes assessing the extent of the relation between visual and body-related events. Task-related factors, such as those mentioned above, could then be construed as cues providing evidence in favor of or against “object unity.” This view is not at odds with the common coding approach that has often been used to explain repulsive and attraction phenomena (see the Introduction; see also Note 4), and it provides a new perspective on these phenomena that may foster their deeper understanding.

In sum, the present study provides strong clues for the integration of proprioceptive signals and visual object information in spite of a clear spatial separation between their origins. This makes the basic principles of multisensory integration potentially applicable to many daily interactions with the world, including, but potentially not limited to, using virtual tools.

Author note

This research was supported by Grant KI 1620/3-1 awarded to W. Kirsch by the German Research Council (DFG). The raw data have been made publicly available via the Open Science Framework and can be accessed at https://osf.io/uh2nj/.

Notes

1. Such causal inference processes can be construed as expectations (priors in Bayesian terms) which determine the probability that two signals refer to the same object and thus the magnitude of integration (e.g., Ernst, 2006, 2007; Roach, Heron, & McGraw, 2006). Accordingly, the outcome of this process varies on a continuum from a complete fusion of the signals into a single percept to partial integration and a complete independence.

2. Note that in our study (Kirsch, Herbort, Ullrich, & Kunde, 2017), finger movements were accompanied by movements of visual cursors as in several previous tool-use studies. However, in contrast to the latter studies, our participants additionally manipulated a static object, and then the perception of this object was measured. Accordingly, while there was a strong kinematic correspondence between body movements and cursor movements, there was no kinematic relation between body movements and the critical object being manipulated in our study.

3. This logic is also in line with findings indicating that intersensory discrepancies alter the perceived shape of an object (see, e.g., Welch & Warren, 1980). For example, if a manually explored rectangle is optically distorted and looks like a square, its perceived shape is in between the haptic and the visual shape (Helbig & Ernst, 2007; cf. also Rock & Victor, 1964). Note that the term “object unity principle” is somewhat misleading in the present context because it apparently implies sensory integration of signals that inform about different object features (i.e., of nonredundant signals) when both belong to the same object (see also Discussion section).

4. For example, contrast effects in visual judgments are assumed to arise if a feature integrated in an action plan (e.g., spatial feature “left” during planning a movement to the left) is less available for a concurrent perceptual task (e.g., Hommel, 2004; for a similar suggestion, see Schubö et al., 2004; Zwickel et al., 2010a). Assimilation effects, in contrast, should follow a disintegration of features (i.e., a dissolving of binding) according to this line of thought (Zwickel & Prinz, 2012).

References

Bhalla, M., & Proffitt, D. R. (1999). Visual–motor recalibration in geographical slant perception. Journal of Experimental Psychology: Human Perception and Performance, 25(4), 1076–1096.

Bresciani, J. P., Ernst, M. O., Drewing, K., Bouyer, G., Maury, V., & Kheddar, A. (2005). Feeling what you hear: Auditory signals can modulate tactile tap perception. Experimental Brain Research, 162, 172–180.

Collier, E. S., & Lawson, R. (2016). Defining filled and empty space: Reassessing the filled space illusion for active touch and vision. Experimental Brain Research, 234, 2697–2708.

Debats, N. B., Ernst, M. O., & Heuer, H. (2017a). Kinematic cross-correlation induces sensory integration across separate objects. European Journal of Neuroscience, 46, 2826–2834.

Debats, N. B., Ernst, M. O., & Heuer, H. (2017b). Perceptual attraction in tool-use: Evidence for a reliability-based weighting mechanism. Journal of Neurophysiology, 117, 1569–1580.

Deroy, O., Spence, C., & Noppeney, U. (2016). Metacognition in multisensory perception. Trends in Cognitive Sciences, 20(10), 736–747.

Durgin, F. H., Klein, B., Spiegel, A., Strawser, C. J., & Williams, M. (2012). The social psychology of perception experiments: Hills, backpacks, glucose, and the problem of generalizability. Journal of Experimental Psychology: Human Perception and Performance, 38, 1582–1595.

Ernst, M. O. (2006). A Bayesian view on multimodal cue integration. In G. Knoblich, I. M. Thornton, M. Grosjean, & M. Shiffrar (Eds.), Human body perception from the inside out (pp. 105–131). New York, NY: Oxford University Press.

Ernst, M. O. (2007). Learning to integrate arbitrary signals from vision and touch. Journal of Vision, 7, 1–14.

Ernst, M. O., & Banks, M. S. (2002). Humans integrate visual and haptic information in a statistically optimal fashion. Nature, 415(24), 429–433.

Ernst, M. O., & Bülthoff, H. H. (2004). Merging the senses into a robust percept. Trends in Cognitive Sciences, 8(4), 162–169.

Firestone, C., & Scholl, B. J. (2015). Cognition does not affect perception: Evaluating the evidence for “top-down” effects. Behavioral and Brain Sciences, 1–72. Advance online publication. doi:https://doi.org/10.1017/S0140525X15000965

Gepshtein, S., Burge, J., Ernst, M. O., & Banks, M. S. (2005). The combination of vision and touch depends on spatial proximity. Journal of Vision, 5, 1013–1023.

Grosjean, M., Zwickel, J., & Prinz, W. (2009). Acting while perceiving: Assimilation precedes contrast. Psychological Research, 73(1), 3–13.

Harris, L. R., Carnevale, M. J., D’Amour, S., Fraser, L. E., Harrar, V., Hoover, A. E. N., Mander, C., & Pritchett, L. M. (2015). How our body influences our perception of the world. Frontiers in Psychology, 6, 819. doi:https://doi.org/10.3389/fpsyg.2015.00819

Helbig, H. B., & Ernst, M. O. (2007). Knowledge about a common source can promote visual–haptic integration. Perception, 36, 1523–1533.

Hommel, B. (2004). Event files: Feature binding in and across perception and action. Trends in Cognitive Sciences, 8(11), 494–500.

Hommel, B., Müsseler, J., Aschersleben, G., & Prinz, W. (2001). The theory of event coding (TEC): A framework for perception and action planning. Behavioral and Brain Sciences, 24, 849–937.

Kirsch, W., Herbort, O., Ullrich, B., & Kunde, W. (2017). On the origin of body-related influences on visual perception. Journal of Experimental Psychology: Human Perception and Performance, 43(6), 1222–1237.

Kirsch, W., & Kunde, W. (2013). Visual near space is scaled to parameters of current action plans. Journal of Experimental Psychology: Human Perception and Performance, 39(5), 1313–1325.

Kirsch, W., & Kunde, W. (2014). Impact of planned movement direction on judgments of visual locations. Psychological Research, 78, 705–720.

Kirsch, W., & Kunde, W. (2018). The paddle effect in the Pong task is not due to blocking ability of the observer. Journal of Experimental Psychology: Human Perception and Performance, 44(11), 1799–1804.

Körding, K. P., Beierholm, U., Ma, W. J., Quartz, S., Tenenbaum, J. B., & Shams, L. (2007). Causal inference in multisensory perception. PLOS ONE, 2, e943. doi:https://doi.org/10.1371/journal.pone.0000943

Lindemann, O., & Bekkering, H. (2009). Object manipulation and motion perception: Evidence of an influence of action planning on visual processing. Journal of Experimental Psychology: Human Perception and Performance, 35, 1062–1071.

Philbeck, J. W., & Witt, J. K. (2015). Action-specific influences on perception and postperceptual processes: Present controversies and future directions. Psychological Bulletin, 141, 1120–1144.

Proffitt, D. R., & Linkenauger, S. A. (2013). Perception viewed as a phenotypic expression. In W. Prinz (Ed.), Tutorials in action science (pp. 171–197). Cambridge, MA: MIT Press.

Rand, M. K., & Heuer, H. (2013). Implicit and explicit representations of hand position in tool use. PLOS ONE, 8(7), e68471. doi:https://doi.org/10.1371/journal.pone.0068471

Rand, M. K., & Heuer, H. (2016). Effects of reliability and global context on explicit and implicit measures of sensed hand position in cursor control tasks. Frontiers in Psychology, 6, 2056. doi:https://doi.org/10.3389/fpsyg.2015.02056

Roach, N. W., Heron, J., & McGraw, P. V. (2006). Resolving multisensory conflict: A strategy for balancing the costs and benefits of audio-visual integration. Proceedings Biological Sciences, 273, 2159–2168.

Rock, I., & Victor, J. (1964). Vision and touch: An experimentally created conflict between the two senses. Science, 143, 594–596.

Schubö, A., Prinz, W., & Aschersleben, G. (2004). Perceiving while acting: Action affects perception. Psychological Research, 68, 208–215.

Shams, L., & Beierholm, U. R. (2010). Causal inference in perception. Trends in Cognitive Sciences, 14(9), 425–432.

Takahashi, C., Diedrichsen, J., & Watt, S. J. (2009). Integration of vision and haptics during tool use. Journal of Vision, 9, 3–13.

Takahashi, C., & Watt, S. J. (2014). Visual-haptic integration with pliers and tongs: Signal “weights” take account of changes in haptic sensitivity caused by different tools. Frontiers in Psychology, 5, 109. doi:https://doi.org/10.3389/fpsyg.2014.00109

Takahashi, C., & Watt, S. J. (2017). Optimal visual-haptic integration with articulated tools. Experimental Brain Research, 235, 1361–1373.

Thomaschke, R., Hopkins, B., & Miall, R. C. (2012). The planning and control model (PCM) of motorvisual priming: Reconciling motorvisual impairment and facilitation effects. Psychological Review, 119(2), 388–407.

Wann, J. P., & Ibrahim, S. F. (1992). Does limb proprioception drift? Experimental Brain Research, 91, 162–166.

Welch, R. B., & Warren, D. H. (1980). Immediate perceptual response to intersensory discrepancy. Psychological Bulletin, 88, 638–667.

Witt, J. K. (2011). Action’s effect on perception. Current Directions in Psychological Science, 20, 201–206.

Witt, J. K., & Sugovic, M. (2012). Does ease to block a ball affect perceived ball speed? Examination of alternative hypotheses. Journal of Experimental Psychology: Human Perception and Performance, 38, 1202–1214.

Zwickel, J., Grosjean, M., & Prinz, W. (2010a). On interference effects in concurrent perception and action. Psychological Research, 74, 152–171.

Zwickel, J., Grosjean, M., & Prinz, W. (2010b). What part of an action interferes with ongoing perception? Acta Psychologica, 134, 403–409.

Zwickel, J., & Prinz, W. (2012). Assimilation and contrast: The two sides of specific interference between action and perception. Psychological Research, 76(2), 171–182.

Cite this article

Kirsch, W., Kunde, W. On perceptual biases in virtual object manipulation: Signal reliability and action relevance matter. Atten Percept Psychophys 81, 2881–2889 (2019). https://doi.org/10.3758/s13414-019-01783-8

DOI: https://doi.org/10.3758/s13414-019-01783-8