Abstract

Perceptual control theory (PCT) views behavior as being organized around the control of perceptual variables. Thus, from a PCT perspective, understanding behavior is largely a matter of determining the perceptions that organisms control—the perceptions that are the basis of the observed behavior. This task is complicated by the fact that very often the perceptions that seem to be the obvious basis of some behavior are not. This problem is illustrated using a simple pursuit-tracking task in which the goal was to keep a cursor vertically aligned with a target set at various horizontal distances from the cursor. The “obvious” perceptual basis of the behavior in this task is the vertical distance between cursor and target. But a control model suggests that a better description of the perceptual basis of the behavior is the angle between cursor and target. The experiment shows how a control model can be used to carry out the test for the controlled variable, a control-theory-based approach to distinguishing the actual from the apparent perceptual basis of any behavior.


Perceptual control theory (PCT) assumes that the behavior of organisms is organized around the control of perceptual variables (Marken, 1982; Powers, 2005). Thus, from a PCT perspective, understanding behavior is largely a matter of discovering the perceptions that an organism controls, which is equivalent to determining the perceptual basis of the organism’s behavior. The perceptions that an organism controls are called controlled variables. So, understanding the perceptual basis of behavior is a matter of identifying the controlled variables around which behavior is organized.

What are you doing?

The idea of trying to understand behavior in terms of the perceptions that an organism controls may seem somewhat strange, but it is actually something we do quite often in everyday life. In particular, we are doing it when we see people doing something and ask ourselves what they are doing. When you think about it, this is an odd question to ask when the behavior we are asking about is happening right before our eyes. But the question rarely strikes us as odd because we know we are asking, not about the behavior we can see but, rather, about the purpose of that behavior—what these people are trying to accomplish—which is not easy to see at all. The reason for this, according to PCT, is that purpose is a perception in the brains of those doing the behavior.

The concept of purpose as perception can be illustrated by considering the purpose of your opponent’s behavior in a game such as chess. The behavior that is easy to see is each of the opponent’s moves. The behavior that is hard to see is the purpose of these moves. It is difficult to see the opponent’s purpose because it is a perception that the opponent is producing for him- or herself. In the chess game, it is a perception of the relationship between the pieces on the board. The problem of determining the opponent’s purpose results from the fact that there are many different ways to perceive the same set of relationships. For example, the relationship between pieces on the board that results from the opponent’s move could be seen as a threat of capture, as solidifying the opponent’s “control of the center,” or as setting a trap. The purpose of the opponent’s move is to produce one (or more) of these perceptions; you just don’t know which.

The process of acting to produce a particular perception, such as a particular relationship between pieces on the chess board, is called control (Marken, 1990). Like purpose, control involves producing preselected perceptions, and doing so in the face of unpredictable disturbances, such as the moves that you make in response to those of your opponent. Thus, determining the purpose of behavior is equivalent to determining the perceptions that the person is controlling. It is difficult to determine what these perceptions are because they exist only in the brain of the person doing the controlling.

Jumping to conclusions

The difficulty of determining a person’s purposes—the perceptions they control—does not stop us from jumping to conclusions about what those purposes are. This may result from the apparently innate inclination of humans (and, possibly, some nonhuman primates) to understand behavior in terms of inferences about its purpose (Heider & Simmel, 1944; Premack & Woodruff, 1978). The tendency to jump to conclusions about the purpose of behavior can be a particular problem for psychologists who are trying to understand the nature of purposeful behavior (Marken, 1992). This is because a correct understanding of any particular example of purposeful behavior requires that the actual purpose of that behavior be accurately identified (Marken, 2002).

The problem of jumping to conclusions about the purpose of behavior can be illustrated using a simple pursuit-tracking task, like that shown in Fig. 1. In this task the participant is asked to keep a cursor, c, aligned with a moving target, t. The subject sees just the purple oval target and green oval cursor, both moving in a vertical path on the computer screen. The cursor moves as a result of the participant’s mouse movements, q.o; the target moves as a result of time variations in a computer-generated disturbance, d. This is a control task in which the participant’s purpose—the perception to be controlled—seems obvious. It is a perception of zero distance between cursor and target: t − c = 0. This is certainly the assumption made in most studies of tracking (Jagacinski & Flach, 2002). However, this assumption may be wrong; other aspects of the relationship between target and cursor might be the perception being controlled in this task. In order to see why this might be the case, it is necessary to look at a model of the behavior in this tracking task.

PCT model of purposeful behavior

Figure 2 is a diagram of the basic PCT model of the behavior in a pursuit-tracking task. The participant in this task is viewed as a control system controlling a perceptual representation of the distance between target and cursor (t − c). This distance, called q.i in the diagram, is the input to the control system. A perceptual input function, I, transforms q.i into a perceptual signal, p, which is compared to a reference signal, r, that specifies the desired state of that perception. The comparison is performed by a comparator, C, which continuously computes the difference between p and r, r − p. This difference is a time-varying error signal, e, that drives the participant’s outputs, q.o, via the output function, O. These outputs have a feedback effect, via q.i and p, on the error signal that is the cause of those outputs.

The behavioral organization diagrammed in Fig. 2 represents a closed-loop system in which inputs cause outputs while, at the same time, outputs cause inputs. When the effect of outputs is to reduce the error that is causing those outputs, the system in Fig. 2 is a negative feedback control system. Such a system controls in the sense that it keeps a perception, p, close to the reference signal, r, protected from disturbance, d (Powers, 2005). In the pursuit-tracking task, this system will keep its perception of the difference between target and cursor close to the reference signal value (assumed to be zero), protected from disturbance, which is the changing position of the target.

Control of perception

The fact that a control system, like that shown in Fig. 2, controls a perception means that you cannot really tell what it is doing—its purpose—by looking at its visible behavior (see Note 1). In a pursuit-tracking task, the visible behavior of the participant consists of the movements of the mouse, q.o, as well as a measure of the average deviation of the cursor from the target. If mouse movements keep the cursor near the target, so that the average deviation between cursor and target is small, an observer is likely to conclude that the participant’s purpose is to control t − c. But since it is actually a perception that is being controlled, there are other possibilities, as is illustrated in Fig. 3.

Figure 3 shows two possible perceptions that might be under control in the pursuit-tracking task. The upper diagram in Fig. 3 shows the perceptual function, I, computing a perception, p1, that is proportional to the difference between t and c:

$$p_1 = k\,(t - c) \qquad (1)$$

This is the perception that an observer is likely to conclude is the one being controlled in the pursuit-tracking task.

The lower diagram in Fig. 3 shows another possibility. In this case, the perceptual function, I, computes a perception, p2, that is proportional to the angular separation between t and c. This angle depends on both the vertical distance between target and cursor, t − c, and the horizontal distance between target and cursor, s. The perceptual function is assumed to carry out the equivalent of computing the arctangent of the ratio of these two sides of the right triangle connecting target and cursor:

$$p_2 = \arctan\!\left(\frac{t - c}{s}\right) \qquad (2)$$
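
The two candidate perceptual functions can be written as small Python functions. This is a sketch that assumes k = 1 in Eq. 1 and an angle measured in radians in Eq. 2; the function names and the two separations used (50 and 980 pixels) are illustrative. Holding the vertical gap t − c fixed while changing only s alters p2 but leaves p1 unchanged, which is the property the test for the controlled variable exploits.

```python
import math

def perceive_distance(t, c, k=1.0):
    """Eq. 1: perception proportional to the vertical target-cursor gap."""
    return k * (t - c)

def perceive_angle(t, c, s):
    """Eq. 2: target-cursor angle in radians; assumes s > 0 (pixels)."""
    return math.atan((t - c) / s)

# Same 50-pixel vertical gap viewed at two (hypothetical) separations.
for s in (50, 980):
    print(s, perceive_distance(150, 100), perceive_angle(150, 100, s))
```

At s = 50 the angle is 45 degrees (π/4 rad); at s = 980 it is about 2.9 degrees, while the distance perception is 50 in both cases.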

Testing for the controlled variable

The two perceptions, p1 and p2, that might be the basis of the purposeful behavior in the pursuit-tracking task are possible controlled variables. According to PCT, understanding the purposeful behavior in this (or any) task is largely a matter of determining which perception is actually under control: that is, determining the controlled variable. This can be done using a control-theory-based methodology called the test for the controlled variable, or TCV (Marken, 2009; Powers, 1979; Runkel, 2003).

The TCV is based on the fact that a variable that is under control—a controlled variable—will be protected from disturbance by the actions of a control system. In the pursuit-tracking task, the variation in target position is a disturbance to both the target–cursor distance (p1) and the target–cursor angle (p2). Typically, the only variable thought to be under control in pursuit tracking is target–cursor distance. The effect of target movement on target–cursor distance is measured in terms of RMS error—the square root of the mean squared distance between cursor and target. To the extent that RMS error is close to zero, the perception of target–cursor distance is considered to be under control. However, this result is also consistent with the possibility that the variable that is actually under control is target–cursor angle. This is because, at a fixed horizontal separation s, disturbances created by target movement affect target–cursor angle, arctan[(t − c)/s], in essentially the same way as they affect target–cursor distance, k(t − c): both grow with t − c, and the angle is nearly proportional to t − c when t − c is small relative to s.
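
As a concrete reading of the RMS definition, the sketch below computes RMS error from sampled target and cursor positions. The sample values are hypothetical.

```python
import math

def rms_error(targets, cursors):
    """Root-mean-square target-cursor deviation: sqrt(mean((t - c)**2))."""
    if len(targets) != len(cursors) or not targets:
        raise ValueError("need two non-empty sequences of equal length")
    squared = [(t - c) ** 2 for t, c in zip(targets, cursors)]
    return math.sqrt(sum(squared) / len(squared))

# Hypothetical four-sample trace; the deviations t - c are -1, 2, -2, 1.
print(rms_error([0, 10, 20, 10], [1, 8, 22, 9]))  # sqrt(10 / 4) = sqrt(2.5)
```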

The TCV starts with a hypothesis regarding the perceptual basis of a particular behavior. In the case of this pursuit-tracking task, two hypotheses can be tested simultaneously: the perceptual basis of tracking behavior—the controlled variable—is either (1) target–cursor distance or (2) target–cursor angle. These two hypotheses can be tested by applying a disturbance that would be expected to have an effect on one of these perceptions but not the other. Since, according to Eqs. 1 and 2, the horizontal separation between target and cursor, s, affects only target–cursor angle, and not target–cursor distance, variations in s should disturb target–cursor angle but not distance.

In most applications of the TCV, the controlled variable is revealed by lack of an effect of disturbances to the hypothetical controlled variable due to the compensatory actions of the participant (Runkel, 2003). Thus, it would be concluded that the participant is controlling target–cursor angle if variations in s had less than the expected effect on this variable. However, in the present case, the participant cannot compensate for the effect of variations of s on target–cursor angle. Therefore, a version of the TCV that uses computer simulation (Marken, 2005) must be used to determine the nature of the expected effect of variations in s on tracking performance, depending on whether the participant is controlling target–cursor distance or angle. The behavior of the computer simulation can then be compared to that of the human participant to see which hypothesis about the variable under control produces simulation data that give the best fit to the human data.

Mathematical basis of the computer simulation

A computer simulation of the behavior in the pursuit-tracking task is based on the PCT model diagrammed in Figs. 2 and 3. The model can be represented by a set of three equations that can be turned into computer program statements. The first equation, called the system function, describes the behavior of the system (a human, in this case) doing the tracking:

$$q_o = k_o\,(r - p) \qquad (3)$$

This equation says that variations in output (q.o; i.e., the mouse movements in a tracking task) are proportional to variations in an error signal, r − p, that is the difference between a reference specification, r, and a perception, p, of the relationship between target and cursor. The constant of proportionality, k.o, is the output gain: the amount of output produced per unit error.

The second equation, called the environment function, describes the physical relationships between system outputs and inputs:

$$q_i = k_e\,q_o + k_d\,d \qquad (4)$$

This equation says that variations in the input to the system, q.i, are proportional to the sum of the effects of variations in output, q.o, and disturbance, d. In the pursuit-tracking task q.i is the time-varying difference between target and cursor. Equation 4 says that this difference depends on a mouse movement, q.o, which determines the state of the cursor, and a time-varying disturbance, d, which determines the state of the target. The constant k.e in Eq. 4 is the feedback function that relates system output, q.o, to input, q.i, whereas k.d is the disturbance function that determines the effect of the disturbance on target movements. In the present pursuit-tracking task, both k.e and k.d are equal to 1.

Finally, the third equation defines the perceptual function that transforms the input variable, q.i, defined in the environment function equation (4), into the perceptual variable, p, used in the system function equation (3):

$$p = k_i(q_i) \qquad (5)$$

In this equation, k.i represents either of the two perceptual functions described by Eqs. 1 and 2. The computer simulation can be run with each of these different functions, to see which gives the best fit to the behavior observed in a pursuit-tracking task.

Computer simulation

A computer implementation of the equations that define the PCT model of pursuit tracking is described in the following set of pseudocode program statements:

This code assumes that one trial of a pursuit-tracking task consists of NSamples of a time-varying disturbance that determines the position of the target over the course of the trial. The code in Display 6 loops through the NSamples, setting the target position, t, to the current value of the disturbance, d[i], and setting the cursor position, c, to the current value of the output, q.o, produced by the simulated tracker. The difference between t and c in each sample interval is the input variable, q.i. The input is then transformed into a perception by a perceptual function, k.i(q.i), that produces perception p1 or p2, as defined by Eqs. 1 and 2, respectively. Finally, a new value of the output, q.o, is calculated as an increment to the current value, the size of the increment being proportional, by the gain factor k.o and a slowing factor, slow, to the difference between a reference specification, r, and the perception, p.

Program statements 6.1–6.4 implement the environment function described by Eq. 4; statement 6.5 carries out the perceptual function implied by Eqs. 1 and 2; and statement 6.6 implements the system function described by Eq. 3. The system function in statement 6.6 is implemented as a “leaky integration” in order to take into account the fact that the variables in the tracking task are changing over time.
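
The loop described above can be sketched in Python. This is a minimal reconstruction from the prose, not the author's listing, and it rests on three assumptions: (1) a hypothetical smoothed-noise disturbance stands in for the experiment's filtered noise; (2) the leaky integration takes the form q.o ← q.o + slow·(k.o·(r − p) − q.o), one common reading of the term; and (3) the output gain is negative, because with q.i = t − c and c = q.o, a positive error r − p means the cursor is above the target. The gain and slowing values are illustrative, not fitted values.

```python
import math
import random

def smoothed_noise(n, smoothing=0.01, amplitude=200.0, seed=0):
    """Hypothetical disturbance: Gaussian noise passed through two first-order
    low-pass stages, then rescaled so its largest magnitude is `amplitude`."""
    rng = random.Random(seed)
    stage1 = stage2 = 0.0
    values = []
    for _ in range(n):
        stage1 += smoothing * (rng.gauss(0.0, 1.0) - stage1)
        stage2 += smoothing * (stage1 - stage2)
        values.append(stage2)
    peak = max(abs(v) for v in values) or 1.0
    return [amplitude * v / peak for v in values]

def distance_perception(q_i, s):
    return q_i                     # Eq. 1 with k = 1; s plays no role

def angle_perception(q_i, s):
    return math.atan(q_i / s)      # Eq. 2; assumes s > 0

def simulate(d, s, perceive, k_o, slow, r=0.0):
    """Run one simulated trial and return the cursor trace."""
    q_o = 0.0
    cursor = []
    for d_i in d:                  # loop over the NSamples of the disturbance
        t = d_i                    # target position = current disturbance value
        c = q_o                    # cursor position = current output
        q_i = t - c                # input quantity
        p = perceive(q_i, s)       # perceptual function k.i
        # Leaky integration of the error r - p (system function); k_o < 0 here
        # so that the output moves the cursor toward the target.
        q_o += slow * (k_o * (r - p) - q_o)
        cursor.append(c)
    return cursor

d = smoothed_noise(2000)
for s in (100, 980):               # hypothetical separations, in pixels
    ang = simulate(d, s, angle_perception, k_o=-20000.0, slow=0.005)
    mse = sum((t - c) ** 2 for t, c in zip(d, ang)) / len(d)
    print(s, round(mse, 3))
```

With these illustrative settings the angle model's tracking error should grow as s increases, whereas the distance model produces an identical cursor trace at every s because its perception ignores s.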

Pursuit-tracking experiment

Two participants, R.M. and M.T., were tested in a computer-based pursuit-tracking task. The target and cursor appeared on the screen as shown in Fig. 1. The target moved vertically, driven by a computer-generated filtered random noise disturbance. The participant kept the cursor as closely aligned with the target as possible by moving the mouse forward or back to move the cursor up or down. The horizontal separation between target and cursor, s, was different on different trials, with the distance ranging from 0 to 980 pixels. The center frequency of the noise disturbance determined the difficulty of the task in terms of the speed of the oscillatory movements of the target; a disturbance with a low center frequency resulted in a more slowly moving target, and thus an easier task, than one with a high center frequency.

After several practice trials, the participants performed two tracking trials, one with an easy and one with a difficult disturbance, at five different horizontal separations between target and cursor, for a total of ten trials. Each trial lasted 1 min, and the trials were presented in a random order.

Comparing the control model to human behavior

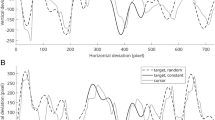

Figure 4 shows how well the behavior of the PCT model compared to that of a human participant during a segment of one trial in the pursuit-tracking task. The figure shows the cursor movements made by a human participant (labeled Human) and by the PCT model controlling p1 (the cursor–target distance, labeled Distance Control) and p2 (the cursor–target angle, labeled Angle Control) during a 15-s segment of a pursuit-tracking task. The horizontal distance between target and cursor (s) during this trial was 980 pixels (20 cm). The figure also shows movements of the target (labeled Target) during this segment of the task.

It is clear from Fig. 4 that the behavior (cursor movements) of both the distance control and angle control models closely approximates that of the human. The next step in the TCV is to determine which model provides a better fit to the human data. Because the models make different predictions about the effect of variations in horizontal target–cursor separation, s, on behavior, it is possible to determine which model is best by comparing the behavior of the models to that of the human at different values of s. Since the models control different perceptions, the model that gives the best account of the data can be considered to be controlling a perceptual variable that is most like the one controlled by the humans.

The models were tested by having the computer version of each model (the code in Display 6) perform the same tracking task as the human participants; the computer tracked the same target movements at the same horizontal separations between target and cursor, s, as did the human participants. The performance of both human participants and the models was measured as the ability to control the vertical distance between cursor and target, keeping it close to zero. The measure of control used was the ratio of the observed variance in target–cursor deviation, var(t − c), to the variance of the target, var(t), which can be considered the expected variance of t − c if the participant did nothing (so that c was constant). If control is good, var(t − c) will be very small relative to var(t), so the ratio var(t − c)/var(t) will be very small. The negative log of this ratio is taken, so that the better the control [i.e., the smaller the ratio var(t − c)/var(t)], the larger the number representing the quality of control.
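
The control measure can be computed directly from sampled target and cursor positions. This sketch assumes population variances and the natural logarithm (the text does not state the base); the four-sample trace is hypothetical.

```python
import math
from statistics import pvariance

def control_quality(target, cursor):
    """-ln[var(t - c) / var(t)]; larger values mean better control."""
    deviation = [t - c for t, c in zip(target, cursor)]
    var_dev = pvariance(deviation)
    if var_dev == 0:
        return math.inf                              # perfect tracking
    return math.log(pvariance(target) / var_dev)     # same as -ln(var_dev / var_t)

target = [0, 10, 0, -10]                                  # hypothetical trace
print(control_quality(target, [5, 5, 5, 5]))              # ratio 1, so 0.0
print(control_quality(target, [t / 2 for t in target]))   # ratio 1/4, so ln 4
```

A cursor that never moves leaves var(t − c) equal to var(t) and scores 0; a cursor that covers half of each target excursion cuts the deviation variance to a quarter and scores ln 4, about 1.386.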

Tests based on performance measures

The behavior of the distance and angle control models was fit to the human performance data by adjusting the slowing and gain parameters to get the closest fit of the model to human performance at each separation. Figure 5 shows measures of performance at different horizontal separations, s, of cursor and target for the two human participants (R.M., M.T.), as well as for the best-fitting versions of the distance and angle control models. The human performance results are shown as solid black diamonds. The performance of both humans declines as s increases. This decline is captured by the angle control model (solid blue triangles), but not by the distance control model (solid red squares). Indeed, the performance of the distance control model is nearly the same at all values of s for both participants. The performance of the distance control model even increases slightly for the trials performed by participant R.M.

The fit of the models to the human performance data can be measured in terms of the squared correlation, R², between human and model performance across the different separations, s. The R² for the fit of the distance control model to the human performance, averaged over M.T. and R.M., was .51, whereas the average R² for the fit of the angle control model to the human performance was .99. Clearly, the angle control model fits the human performance data much better than does the distance control model in terms of the decline in tracking performance with increasing s.
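
R² here is the squared Pearson correlation between paired human and model performance scores, one pair per separation. A minimal sketch, with hypothetical scores rather than the paper's data:

```python
def r_squared(xs, ys):
    """Squared Pearson correlation between paired values, e.g., human and
    model performance at each separation s."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 values")
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("R^2 is undefined when one sequence is constant")
    return sxy * sxy / (sxx * syy)

# Hypothetical performance scores at five separations (not the paper's data).
human = [3.0, 2.7, 2.3, 2.0, 1.6]
model = [3.9, 3.6, 3.2, 2.9, 2.5]    # human shifted up by a constant 0.9
print(r_squared(human, model))       # 1 up to rounding
```

R² is insensitive to a constant offset, so a model whose performance curve runs parallel to the human curve but higher on the graph can still reach an R² near 1.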

The decline in performance for the angle control model results from the fact that s is included in the calculation of the controlled variable, arctan[(t − c)/s]. Increases in s affect the controlled angle variable in a way that reduces the loop gain of the control system. Loop gain is the product of all gain factors around the control loop. In the model described by Eqs. 3–5, the gain factors are k.i (input function), k.o (output function), and k.e (feedback function), so the loop gain is proportional to the product k.i × k.o × k.e. The higher the loop gain in a control loop, the better the control (in terms of keeping the controlled variable close to the reference, r). If angle is the controlled variable, then k.i is proportional to the derivative of arctan[(t − c)/s] with respect to t − c. So,

$$k_i \propto \frac{d}{d(t - c)}\arctan\!\left(\frac{t - c}{s}\right) = \frac{s}{s^2 + (t - c)^2} \approx \frac{1}{s} \quad \text{when } |t - c| \ll s$$

Since the distance between target and cursor (t − c) during a tracking trial is typically kept small relative to s, k.i decreases roughly in inverse proportion to s as s increases, resulting in the decrease in performance of the angle control model.
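
A quick numerical check of this derivative: the function below evaluates s/(s² + (t − c)²) and compares it with a finite-difference slope of arctan[(t − c)/s]. The 5-pixel tracking error and the intermediate separations are hypothetical.

```python
import math

def angle_input_gain(deviation, s):
    """Slope of arctan(deviation / s) with respect to deviation: s / (s**2 + deviation**2)."""
    return s / (s * s + deviation * deviation)

def numerical_slope(deviation, s, h=1e-4):
    """Central-difference estimate of the same slope, as a cross-check."""
    return (math.atan((deviation + h) / s) - math.atan((deviation - h) / s)) / (2 * h)

# Hypothetical separations (pixels) with a small 5-pixel tracking error t - c.
for s in (100, 245, 490, 980):
    print(s, angle_input_gain(5.0, s), numerical_slope(5.0, s), 1.0 / s)
```

Doubling s roughly halves k.i, as expected for a gain close to 1/s.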

Improving the fit of the models to the human performance data

It is possible that the poor fit of the distance control model to the human performance data results from the fact that the perception of vertical target–cursor distance degrades with increasing horizontal separation, s. Thus, it should be possible to improve the fit of the distance control model by degrading the perception of distance with a “threshold” band. This band was placed around the value of the distance perception, t − c, such that only variations in this variable that are outside of the band are perceived. The width of this threshold band increased with increasing s. By appropriate selection of a threshold width for each value of s, it was possible to match the performance of the distance control model to that of the human participants quite well, as can be seen in the plots of the threshold distance model (the open red squares in Fig. 5).
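
One way to implement such a threshold band is as a dead zone on the distance perception. The text does not say whether the band is fixed around zero or moves with the perceived value; this sketch assumes a fixed band centered on zero, and the widths shown are hypothetical. In the fitted model, a separate width would be chosen for each value of s.

```python
def threshold_distance(q_i, width):
    """Hypothetical dead-zone distance perception: deviations inside a band of
    total width `width` centered on zero are not perceived; outside the band,
    only the part beyond the band edge is perceived."""
    half = width / 2.0
    if q_i > half:
        return q_i - half
    if q_i < -half:
        return q_i + half
    return 0.0

print([threshold_distance(x, 10.0) for x in (-12.0, -3.0, 0.0, 4.0, 12.0)])
# [-7.0, 0.0, 0.0, 0.0, 7.0]
```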

In order to capture the decline in human performance with increasing s, four parameters, representing the width of the threshold band, must be estimated for the threshold distance model. This decline is captured “automatically” by the angle control model through its inclusion of a number proportional to the value of s—the psychological value of s—in the calculation of the controlled angle perception, which reduces the loop gain with increasing s. However, the performance of the angle control model is much better than that of the humans at all values of s, as can be seen by the fact that, for both R.M. and M.T., the plot of the performance of that model as a function of s (solid blue triangles in Fig. 5) runs parallel to but is much higher on the graph than that for the human data.

The angle control model can be made to more closely approximate the human performance by adding low-pass-filtered random noise to the output of the model. The noise amplitude that produced the best fit for the angle control model was 3% of the output range. This level of noise seems to be of the correct order of magnitude, on the basis of estimates of the magnitude of neural noise levels derived from neurophysiologic measures (Miller & Troyer, 2002; Nakajima et al., 1978). The performance of the angle control model with added noise (the open blue triangles in Fig. 5; Angle Control + Noise) can be seen to fit the human performance data as well as the threshold distance model.
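
The added noise can be sketched as Gaussian noise passed through a first-order low-pass filter. This sketch assumes that "3% of the output range" refers to the peak noise magnitude; the output range, the filter constant, and the number of samples are hypothetical.

```python
import random

def filtered_noise(n, peak, smoothing=0.05, seed=0):
    """Gaussian noise passed through a first-order low-pass filter and rescaled
    so that its largest magnitude equals `peak`."""
    rng = random.Random(seed)
    state = 0.0
    raw = []
    for _ in range(n):
        state += smoothing * (rng.gauss(0.0, 1.0) - state)
        raw.append(state)
    biggest = max(abs(v) for v in raw) or 1.0
    return [peak * v / biggest for v in raw]

output_range = 1000.0                        # hypothetical output range (pixels)
noise = filtered_noise(3600, peak=0.03 * output_range)
# Inside a simulation loop the noise would perturb the output, e.g. c = q_o + noise[i].
print(max(abs(v) for v in noise))
```

Adding the noise inside the loop, rather than to the finished cursor trace, lets the simulated controller partly correct for it, as a human would.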

Since the noise level added to the angle control model was the same for all values of s, only one parameter (noise amplitude) was estimated to achieve the fit of the angle control model to the human data, whereas four parameters—the threshold widths at the different horizontal separations, s—were required to get the same fit for the threshold distance model. Also, the distance control model includes no mechanism that explains the increase in threshold width with the increase in s. Therefore, parsimony would seem to recommend a model that controls angle over one that controls distance as giving the best account of the human data in this task.

However, before concluding that angle is the controlled variable in this task, it is possible to make a more detailed comparison of the models by measuring how well they fit the detailed cursor movements made by the human participants on each trial. If the two models were equally good accounts of the behavior itself, and not just of overall tracking performance, they would be expected to do equally well at accounting for the detailed time variations in human cursor movements (shown in Fig. 4).

Tests based on model fit to detailed cursor movements

Figure 6 shows the average RMS deviations of model from human cursor movements for the two models that gave equally good fits to the human performance data—the threshold distance and angle control + noise models. The fits of the models are shown as a function of the horizontal separation of target from cursor, s. The difference in average RMS deviation of the two models from the time variations in human cursor movements is significant for both M.T. [t(4) = 3.37, p < .011] and R.M. [t(4) = 2.76, p < .025]. The results in Fig. 6 show that the angle control + noise model gives a much better fit to the human data than does the threshold distance model at all values of s, but particularly at larger values of s. This is strong evidence that angle rather than distance is the perception controlled in this tracking task: The hypothesis that distance is the perceptual basis of tracking can be rejected.
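
The text does not name the test behind t(4); four degrees of freedom is consistent with a paired t test over the five separations, which is what this sketch assumes. It computes the RMS deviation of a model's cursor trace from the human trace for one trial, and a paired t statistic over per-separation values; the input values are hypothetical, not the paper's data.

```python
import math
from statistics import mean, stdev

def rms_deviation(model_cursor, human_cursor):
    """RMS difference between model and human cursor traces for one trial."""
    return math.sqrt(mean([(m - h) ** 2 for m, h in zip(model_cursor, human_cursor)]))

def paired_t(a, b):
    """Paired t statistic and degrees of freedom for matched samples a and b."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    return mean(diffs) / (stdev(diffs) / math.sqrt(n)), n - 1

# Hypothetical per-separation RMS deviations for the two models.
threshold_model = [2.0, 3.0, 4.0, 5.0, 6.0]
angle_noise_model = [1.0, 2.0, 2.0, 3.0, 3.0]
print(paired_t(threshold_model, angle_noise_model))
```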

A close look at the time traces of the human and model cursor movements suggests why the angle control + noise and threshold distance models account for the performance data equally well (Fig. 5), whereas the angle control + noise model accounts for the detailed human cursor variation data much better than does the threshold distance model (Fig. 6). The observed decrease in the performance of the two models with increasing s, as can be seen in Fig. 5, results from different characteristics of the detailed behavior of each model. The poorer performance of the angle control + noise model with increasing s results from the fact that, as with the human, the variation of model cursor movements around the target increased as s increased, a reflection of the decreased gain of the control model with increasing s. On the other hand, the poorer performance of the threshold distance model with increasing s results from the fact that, unlike the human cursor movements, the model cursor movements remained a constant distance from the target, a distance that increased with increasing s.

Understanding behavior in terms of controlled variables

The comparison of the models controlling different perceptions suggests that angle rather than distance is likely to be the perceptual basis of behavior in the pursuit-tracking task. The purpose of the participants’ behavior (mouse movements) in this task is to keep the target–cursor angle, rather than distance, close to 0.0. Although this is an interesting and counterintuitive finding—since, as mentioned above, casual observation suggests that the participant’s purpose in this task is to control the distance between cursor and target—it may not be considered particularly significant, since tracking is not a particularly significant behavior. But this research demonstrates a methodology—the TCV—that can serve as the basis for understanding any example of purposeful behavior in terms of the perceptions that are under control. It is a methodology that differs from more familiar, traditional methodologies that do not take the purpose of behavior into account (Marken, 2013).

The approach to understanding purposeful behavior demonstrated in this research has been used to understand “real-world” examples of purposeful behavior, including the hoarding behavior of rats (Bell & Pellis, 2011), the posture control of crickets (Pellis, Gray, Gray, & Cade, 2009), the parenting behavior of chimps (Plooij, 1984), the shock avoidance behavior of rats (Powers, 1971), the object interception behavior of humans and canines (Marken, 2005; Shaffer, Krauchunas, Eddy, & McBeath, 2004; Shaffer, Marken, Dolgov, & Maynor, in press), and the self-image control behavior of college freshmen (Robertson, Goldstein, Mermel, & Musgrave, 1999). Clearly, the TCV can be used to understand purposeful behaviors that involve the control of perceptions that are more complex than distances and angles.

Notes

1. Human behavior presumably involves the control of many perceptions simultaneously. But it should be noted that not everything that a person perceives can be (or is being) controlled. Figure 2 shows that only those perceptions that are affected by the system’s output can be controlled, so only these perceptions can be the basis of observed behavior.

References

Bell, H. C., & Pellis, S. M. (2011). A cybernetic perspective on food protection in rats: Simple rules can generate complex and adaptable behaviour. Animal Behaviour, 82, 659–666.

Heider, F., & Simmel, M. (1944). An experimental study of apparent behavior. American Journal of Psychology, 57, 243–259.

Jagacinski, R., & Flach, J. (2002). Control theory for humans: Quantitative approaches to modeling performance. Mahwah, NJ: Erlbaum.

Marken, R. S. (1982). Intentional and accidental behavior: A control theory analysis. Psychological Reports, 50, 647–650.

Marken, R. S. (1990). A science of purpose. American Behavioral Scientist, 34, 6–13.

Marken, R. S. (1992). Mind readings: Experimental studies of purpose. New Canaan, CT: Benchmark.

Marken, R. S. (2002). Looking at behavior through control theory glasses. Review of General Psychology, 6, 260–270.

Marken, R. S. (2005). Optical trajectories and the informational basis of fly ball catching. Journal of Experimental Psychology: Human Perception and Performance, 31, 630–634.

Marken, R. S. (2009). You say you had a revolution: Methodological foundations of closed-loop psychology. Review of General Psychology, 13, 137–145.

Marken, R. S. (2013). Taking purpose into account in experimental psychology: Testing for controlled variables. Psychological Reports, 112, 184–201.

Miller, K. D., & Troyer, T. W. (2002). Neural noise can explain expansive, power-law nonlinearities in neural response functions. Journal of Neurophysiology, 87, 653–659.

Nakajima, H., Fukamachi, A., Isobe, I., Miyazaki, M., Shibazaki, T., & Ohye, C. (1978). Estimation of neural noise: Functional anatomy of the human thalamus. Applied Neurophysiology, 41, 193–201.

Pellis, S. M., Gray, D., Gray, D., & Cade, W. H. (2009). The judder of the cricket: The variance underlying the invariance in behaviour. International Journal of Comparative Psychology, 22, 188–205.

Plooij, F. X. (1984). The behavioral development of free-living chimpanzee babies and infants. Norwood, NJ: Ablex.

Powers, W. T. (1971). A feedback model of behavior: Application to a rat experiment. Behavioral Science, 16, 558–563.

Powers, W. T. (1979). The nature of robots: Pt. 4: Looking for controlled variables. Byte, 4, 96–118.

Powers, W. T. (2005). Behavior: The control of perception (2nd ed.). New Canaan, CT: Benchmark.

Premack, D., & Woodruff, G. (1978). Chimpanzee problem-solving: A test for comprehension. Science, 202, 532–535. doi:10.1126/science.705342

Robertson, R. J., Goldstein, D. M., Mermel, M., & Musgrave, M. (1999). Testing the self as a control system: Theoretical and methodological issues. International Journal of Human–Computer Studies, 50, 571–580.

Runkel, P. (2003). People as living things: The psychology of perceptual control. Hayward, CA: Living Control Systems.

Shaffer, D. M., Krauchunas, S. M., Eddy, M., & McBeath, M. K. (2004). How dogs navigate to catch Frisbees. Psychological Science, 15, 437–441.

Shaffer, D. M., Marken, R. S., Dolgov, I., & Maynor, A. B. (in press). Chasin’ choppers: Using unpredictable trajectories to test theories of object interception. Attention, Perception, & Psychophysics.

Author note

I acknowledge the assistance of Martin M. Taylor, who wrote the pursuit-tracking program and participated in the experiment as participant M.T.

Cite this article

Marken, R.S. Testing for controlled variables: a model-based approach to determining the perceptual basis of behavior. Atten Percept Psychophys 76, 255–263 (2014). https://doi.org/10.3758/s13414-013-0552-8

DOI: https://doi.org/10.3758/s13414-013-0552-8