Abstract

Research has investigated how sleep affects emotional memory and how emotion enhances visual processing, but these questions are typically asked by re-presenting an emotional stimulus at retrieval. For the first time, we investigate whether sleep affects neural activity during retrieval when the memory cue is a neutral context that was previously presented with either emotional or nonemotional content during encoding. Participants encoded scenes composed of a negative or neutral object on a neutral background either in the morning (preceding 12 hours awake; wake group) or evening (preceding 12 hours including a night of sleep; sleep group). At retrieval, participants viewed the backgrounds without their objects, distinguishing new backgrounds from those previously studied. Occipital activity was greater within the sleep group than the wake group specifically during the successful retrieval of neutral backgrounds that had been studied with negative (but not neutral) objects. Moreover, there was enhanced connectivity between the middle occipital gyrus and hippocampus following sleep. Within the sleep group, the percentage of REM sleep obtained correlated with activity in the middle occipital gyrus, lingual gyrus, and cuneus during the successful retrieval of neutral backgrounds previously paired with negative objects. These results confirm that emotion affects neural activity during retrieval even when the cues themselves are neutral, and demonstrate, for the first time, that this residual effect of emotion on visual activity is greater after sleep and may be maximized by REM sleep.

The retrieval of emotional memories is associated not only with limbic engagement but also with strong engagement of sensory regions (Keightley, Chiew, Anderson, & Grady, 2011; Kensinger & Schacter, 2007; Mitchell, Mather, Johnson, Raye, & Greene, 2006). The majority of this work has investigated emotional memory retrieval using emotional cues (i.e., the emotionally salient word or image that was presented during encoding; reviewed by Buchanan, 2007). Additional studies have, however, shown that the retrieval of neutral information that was once presented in an emotional context is also associated with enhanced limbic and sensory processing. For example, Smith, Henson, Dolan, and Rugg (2004) found that recognition of neutral images previously encoded in an emotional relative to neutral context was associated with enhanced activity in regions associated with episodic memory (e.g., parahippocampal cortex, hippocampus, prefrontal cortex), as well as in emotion processing regions, including the amygdala, orbitofrontal cortex, and anterior cingulate cortex (Smith et al., 2004). Maratos, Dolan, Morris, Henson, and Rugg (2001) similarly found increased activity in emotion processing regions (e.g., amygdala, insula), memory retrieval regions (e.g., hippocampus, parahippocampal gyrus), and also sensory regions (i.e., cuneus, precuneus, lingual gyrus) during the retrieval of words that had been studied in a negative relative to a neutral context (Maratos et al., 2001). These results provide evidence of residual emotion effects on neural processes, even when the retrieval cue is neutral.

There are a number of reasons why information encoded in a negatively emotional context would be associated with more sensory activity at retrieval than information encoded in a neutral context. Negative emotion enhances perceptual processing (e.g., Todd, Talmi, Schmitz, Susskind, & Anderson, 2012; Zeelenberg, Wagenmakers, & Rotteveel, 2006), and viewing emotionally salient images is associated with increased activity in the striate (Bradley et al., 2003; Padmala & Pessoa, 2008) and extrastriate visual cortex (Bradley et al., 2003; Lang et al., 1998; Sabatinelli, Bradley, Fitzsimmons, & Lang, 2005; Vuilleumier, Richardson, Armony, Driver, & Dolan, 2004). This sensory activity can be recapitulated at retrieval, with emotion enhancing the similarity between encoding and retrieval patterns in many visual processing regions, including the superior, middle, and inferior occipital gyri, cuneus, and lingual gyrus (Ritchey, Wing, LaBar, & Cabeza, 2013). This similarity between encoding and retrieval is mediated by the hippocampus, providing evidence for hippocampal–cortical interactions during retrieval (Ritchey et al., 2013).

This study assesses the novel question of whether sleep influences the degree to which sensory activity is evoked during the retrieval of neutral information that had previously been studied in an emotional context. It is well documented that sleep affects emotional reactivity, although the direction of that influence is still debated: Sleep has been shown to potentiate (e.g., Wagner, Gais, & Born, 2001), preserve (e.g., Baran, Pace-Schott, Ericson, & Spencer, 2012), and depotentiate (e.g., Cunningham et al., 2014; van der Helm et al., 2011) the affective response to emotional stimuli during retrieval. These studies typically use emotional cues during retrieval to assess this question, primarily investigating how amygdala activity and connectivity with other emotional processing regions (such as the ventromedial prefrontal cortex; Sotres-Bayon, Bush, & LeDoux, 2004) during the retrieval of emotional stimuli differs following a period of sleep relative to wake or sleep deprivation. However, it has not been examined whether sleep would also alter the neural mechanisms active during retrieval of neutral information that was previously seen in an emotional versus neutral context, nor how sleep may affect the engagement of sensory regions during the retrieval of these stimuli.

On one hand, sleep may decrease the engagement of sensory regions during the retrieval of neutral information that was previously presented with emotional relative to neutral information. The sleep-to-forget, sleep-to-remember (SFSR) hypothesis (van der Helm & Walker, 2009) proposes that sleep serves to reduce the emotional tone associated with a memory yet preserves the content of that episode. Given that negative emotion is related to increased activation of visual regions during retrieval (e.g., Keightley et al., 2011; Kensinger & Schacter, 2007; Mitchell et al., 2006) and emotional arousal enhances the similarity between neural mechanisms active during encoding and retrieval (Ritchey et al., 2013), the SFSR hypothesis might suggest a decrease in activity to neutral stimuli originally paired with emotional content following sleep. If the emotion associated with a neutral cue were dampened by sleep, this would likely result in less sensory activation.

Alternatively, given that sleep has been shown to alter associative memory networks and to restructure information in adaptive ways (e.g., Ellenbogen, Hu, Payne, Titone, & Walker, 2007; Payne, 2011; Walker & Stickgold, 2010), one might expect to observe enhanced residual effects of emotion following a period of sleep. These effects may be reflected in increased visual activity, with some work showing that synaptic potentiation in the visual cortex occurs during sleep (Aton et al., 2009), and that this strengthening of synapses may promote sleep-dependent consolidation. In addition, it has been proposed that the coordinated replay of events in the sensory cortex and hippocampus during sleep may contribute to or reflect the result of memory consolidation (Ji & Wilson, 2007). In support of this idea, Sterpenich et al. (2013) showed that cortical responses during retrieval were enhanced following the reactivation of memories during REM sleep (i.e., by re-presenting auditory cues that were paired with faces during encoding). This enhancement of visual activity in response to the faces presented during retrieval may be specific to REM sleep, as reactivation during Stage 2 sleep resulted in neither enhanced recollection nor cortical activity during retrieval (Sterpenich et al., 2013; see also Payne, 2014a, for discussion). Although a wealth of literature has shown that sleep (and particularly REM sleep) enhances emotional memory consolidation (e.g., Baran et al., 2012; Nishida, Pearsall, Buckner, & Walker, 2009; Payne et al., 2015; Payne, Stickgold, Swanberg, & Kensinger, 2008; Wagner et al., 2001), and that sleep refines and centers the emotional memory retrieval network on limbic regions (Payne & Kensinger, 2011; Sterpenich et al., 2009), when the emotional element of the scene is removed (i.e., when context is the cue), the effects of sleep on retrieval-related activity may be reflected in visual rather than limbic activity.

This study sought to determine how sleep influences neural activity during the retrieval of neutral backgrounds that had been presented with negative relative to neutral foreground objects during encoding. In other words, even though all backgrounds were neutral in valence, would the analysis of retrieval-related activity to backgrounds show any “emotional residue,” differentiating between backgrounds that were previously presented with a negative versus neutral object during encoding? If so, might this depend on whether participants sleep or stay awake during the consolidation interval? Because sleep is known to enhance V1 response potentiation (Aton, Suresh, Broussard, & Frank, 2014), and because emotional images undergo enhanced perceptual processing (e.g., Todd et al., 2012; Zeelenberg et al., 2006), we had an a priori interest in visual regions. We hypothesized that participants who slept between encoding and retrieval would show enhanced visual activity during the retrieval of neutral backgrounds that were paired with negative (but not neutral) objects during encoding, relative to those who stayed awake. We also hypothesized that this effect would be specifically linked to REM sleep, as prior work has shown that REM sleep plays an active role in emotional memory consolidation (Baran et al., 2012; Nishida et al., 2009; Payne, Chambers, & Kensinger, 2012; Wagner et al., 2001). Memory reactivation during REM sleep of auditory cues associated with emotional faces during encoding has been shown to increase cortical activity during retrieval (Sterpenich et al., 2013), providing further evidence that REM sleep transforms memories, often favoring schematization, generalization, and the integration of new memories with existing memories, primarily within corticocortical networks (Payne, 2011, 2014a, b; Walker & Stickgold, 2010). 
Finally, we hypothesized that this enhancement in visual activity during retrieval following sleep may be driven by increased connectivity between visual regions and the hippocampus (e.g., Ritchey et al., 2013), and as such, we focus on visual and medial temporal lobe (MTL) regions. Because these neutral backgrounds are presented during retrieval without the embedded negative or neutral object with which they were originally studied (counterbalanced across participants), differences in neural activity during retrieval will be attributed to differences in the type of object the backgrounds were paired with during encoding. Further, a comparison of neural activity following sleep versus wakefulness will elucidate the effects of sleep during a delay that allowed time for consolidation (hereafter referred to as “during consolidation”) on subsequent retrieval-related activity of neutral backgrounds that were previously studied with emotional versus neutral content.

Method

Participants

Participants were 47 right-handed native English speakers (18 to 34 years old, M = 22.7) with normal or corrected-to-normal vision who were tested as part of a larger study investigating the effects of sleep and cortisol on emotional memory (Bennion, Mickley Steinmetz, Kensinger, & Payne, 2014, 2015). This study includes all memory measures and models all fMRI data. Participants were screened for neurological, psychiatric, and sleep disorders, and for medications affecting the central nervous system or sleep architecture. They also were required to sleep for at least 7 hours a night and be in bed by 2:00 am for the five nights leading up to the study; participants kept a sleep log during these five nights. Informed consent was obtained in a manner approved by the Boston College Institutional Review Board.

Participants were assigned to either the sleep or wake group, which were scheduled simultaneously. Although full random assignment was not possible due to class schedules and other scheduling conflicts, we ensured that the groups did not differ in scores on the Morningness-Eveningness Questionnaire (Horne & Ostberg, 1976; p = .25), thus minimizing concerns about time of day preference effects between groups. To minimize the possibility that any differences between the sleep and wake groups were due to circadian effects, two additional groups of participants, who viewed and were tested on the stimuli after a 20-minute delay either in the morning (morning short delay; N = 23) or evening (evening short delay; N = 23), were also tested. No effects of circadian time were revealed in behavior (see Table 1), and as such, data from only the sleep and wake groups are reported here (but see Footnote 1 for comment on short delay groups).

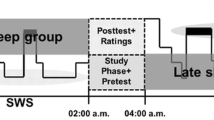

Conditions

There were 26 participants (13 female) in the sleep condition, and 21 participants (10 female) in the wake condition. Participants in these groups were matched on a number of factors, including age (p = .42) and scores on the Beck Depression Inventory (BDI; Beck & Beamesderfer, 1974; p = .69) and Beck Anxiety Inventory (BAI; Beck, Epstein, Brown, & Steer, 1988; p = .51). Participants in the wake condition viewed the stimuli in the morning (7:00–10:00 a.m.) and were tested 12 hours later following a full day of wakefulness; all participants stated that they did not nap between sessions. Participants in the sleep condition viewed the stimuli in the evening (8:00–10:00 p.m.) and were tested 12 hours later, following a full night of polysomnographic (PSG) monitored sleep in the laboratory. Sleep amount, as measured by PSG for the sleep group and self-report for the wake group, was statistically equivalent between groups the night before retrieval (sleep: M = 7.02, SD = .86; wake: M = 6.89, SD = 1.46), t(45) = .39, p = .70.

Encoding procedure

During encoding, participants studied 124 composite scenes for 3 seconds each. These scenes were composed of either a negative object or a neutral object (62 each; counterbalanced across participants) placed on a plausible neutral background. By “plausible,” we mean that either version of the scene could theoretically be observed in real life; for instance, an avenue would be a plausible neutral background for both a taxi cab (neutral) and taxi cab accident (negative). Objects had been previously rated for valence and arousal by young and older adults, with negative objects rated as highly arousing and low in valence and neutral objects rated as nonarousing and neutral in valence (see Kensinger, Garoff-Eaton, & Schacter, 2007, and Waring & Kensinger, 2009, for more detail on how these objects were rated). Participants in the present study also gave valence and arousal ratings for the objects at the end of the study on a 7-point Likert scale (1 = low; 7 = high); their ratings confirmed that negative objects were highly arousing and low in valence (arousal: M = 5.33, SD = 1.35; valence: M = 2.58, SD = 1.37) and that neutral objects were nonarousing and neutral in valence (arousal: M = 3.85, SD = 1.23; valence: M = 4.44, SD = 1.14).

To ensure that participants were actively thinking about each scene, participants indicated whether they would approach or back away from the scene if they encountered it in real life (as in Payne & Kensinger, 2011). This task was chosen because it requires participants to think about their reactions to the scenes, a type of self-referential processing that is likely to lead to deeper encoding (Symons & Johnson, 1997). Following this approach or back-away decision, a fixation cross was presented at variable interstimulus interval (ISI) durations (mean ISI = 3.87 s; range: 3–12 s) or was triggered to advance to the next trial upon participants’ fixation, as measured via eye tracking; this varied across trials. Although participants encoded stimuli outside of the scanner, the variable ISIs were designed to mimic the jitter required to isolate the hemodynamic response to each stimulus necessary in an event-related fMRI design (Dale, 1999; note that participants in the present study underwent fMRI during retrieval). Encoding occurred in two blocks of 62 images each with a short (~10–60 second) break between blocks, with negative and neutral scenes randomly intermixed within each block.

Recognition procedure

Following the 12-hour delay, participants performed an unexpected recognition task. They viewed objects and backgrounds, presented separately and one at a time for 2.5 seconds each, and indicated whether each was “old” (included in a previously studied scene) or “new” (not previously studied). On the recognition test were 124 old objects (62 negative, 62 neutral), 124 old backgrounds (62 studied with a negative object, 62 studied with a neutral object), 124 new objects (62 negative, 62 neutral), and 124 new backgrounds (by definition, all neutral). These were presented in a pseudorandom order for each participant, as determined by the program optseq (written by Doug Greve), to optimize jittering within the fMRI environment and to ensure that each particular trial type (e.g., new background) was equally likely to have been preceded by all of the other trial types. Following each recognition decision, a fixation cross was presented at variable ISI durations (mean ISI = 3.87 s, range: 3–15 s). Analyses in the current study focus on participants’ memory for backgrounds, which, at the time of retrieval, are neutral in valence (i.e., these backgrounds are presented during retrieval without the negative or neutral foreground object that they were paired with during encoding, and participants who have never seen these backgrounds before rate them as neutral).

fMRI image acquisition and preprocessing

Data were acquired on a 3.0 T Siemens Trio Scanner (Trio, Siemens Ltd., Erlangen, Germany) using a standard 12-channel head coil. The stimuli were projected from a Macintosh MacBook to a color LCD projector that projected onto a screen mounted in the magnet bore. Participants viewed the screen through a mirror located on the head coil.

Anatomical images were acquired using a high-resolution 3-D multiecho magnetization prepared rapid acquisition gradient echo sequence (MEMPRAGE; repetition time = 2,200 ms; echo times = 1.64 ms, 3.5 ms, 5.36 ms, 7.22 ms; flip angle = 7 degrees; field of view = 256 × 256 mm; acquisition matrix 256 × 256; number of slices = 176; 1 × 1 × 1-mm resolution). Coplanar and high-resolution T1-weighted localizer images were acquired. In addition, a T1-weighted inversion recovery echo-planar image was acquired for auto alignment.

Functional images were acquired via a T2*-weighted EPI sequence sensitive to the blood-oxygen level-dependent (BOLD) signal, with a repetition time of 3,000 ms, an echo time of 30 ms, and a flip angle of 85 degrees. Forty-seven interleaved axial-oblique slices (parallel to the line between the anterior and the posterior commissures) were collected in a 3 × 3 × 3-mm matrix.

Preprocessing and data analysis were completed using SPM8 (Statistical Parametric Mapping; Wellcome Department of Cognitive Neurology, London, UK). Slice-time correction was applied, and motion correction was run using SPM8's six-parameter, rigid-body transformation algorithm. The images were normalized to the Montreal Neurological Institute (MNI) template. The resultant voxel size was 3 × 3 × 3 mm, and spatial smoothing was applied with a 6-mm isotropic Gaussian kernel. Global mean intensity and motion outliers were identified using Artifact Detection Tools (ART; available at www.nitrc.org/projects/artifact_detect). Global mean intensity outliers were those scans with a global mean intensity that differed by more than 3 standard deviations from the mean. Acceptable motion parameters were set to ±5 mm for translation and ±5 degrees for rotation, and only scan runs with fewer than 10 total outliers were included in the analysis.
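The outlier criteria described above can be illustrated with a minimal Python sketch. This is not the ART implementation; the function names, the input layout (one global mean and one set of six motion parameters per scan), and the use of the sample standard deviation are assumptions made only for illustration.

```python
from statistics import mean, stdev

def flag_outlier_scans(global_means, translations_mm, rotations_deg,
                       sd_thresh=3.0, trans_limit=5.0, rot_limit=5.0):
    """Return sorted indices of scans flagged by either criterion.

    Hypothetical helper: a scan is flagged if its global mean intensity is
    more than sd_thresh SDs from the run mean, or if any translation or
    rotation parameter exceeds the stated limits.
    """
    mu = mean(global_means)
    sd = stdev(global_means)  # sample SD; assumed, the paper does not specify
    flagged = set()
    for i, g in enumerate(global_means):
        if sd > 0 and abs(g - mu) > sd_thresh * sd:
            flagged.add(i)
    for i, (t, r) in enumerate(zip(translations_mm, rotations_deg)):
        if max(abs(v) for v in t) > trans_limit or max(abs(v) for v in r) > rot_limit:
            flagged.add(i)
    return sorted(flagged)

def run_is_usable(outlier_indices, max_outliers=10):
    """A run is kept only if it has fewer than max_outliers flagged scans."""
    return len(outlier_indices) < max_outliers

# Toy run of 31 scans: scan 30 has an aberrant intensity, scan 2 moved 6 mm.
toy_means = [100.0] * 30 + [200.0]
toy_trans = [(0.0, 0.0, 0.0)] * 31
toy_trans[2] = (6.0, 0.0, 0.0)
toy_rot = [(0.0, 0.0, 0.0)] * 31
toy_flags = flag_outlier_scans(toy_means, toy_trans, toy_rot)
```

In this toy run, two scans are flagged, so the run would be retained under the fewer-than-10 rule.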

Event-related fMRI data analysis

At the first level of analysis for each subject, the regressors of interest were the time points when studied backgrounds were viewed, broken down by background memory (hits vs. misses) and object valence (whether the neutral background shown during retrieval was paired with a negative vs. neutral object during encoding). Specifically, this included hits to backgrounds paired with negative objects, misses to backgrounds paired with negative objects, hits to backgrounds paired with neutral objects, and misses to backgrounds paired with neutral objects. The first-level model also included the following regressors of no interest: Hits and misses to objects were modeled separately (hits to negative objects, misses to negative objects, hits to neutral objects, misses to neutral objects), and instances where new items were presented (false alarms and correct rejections) were modeled together. Additionally, a regressor accounting for linear drift was included. Motion parameters were not included as nuisance regressors, nor were time or dispersion derivatives included in addition to the canonical hemodynamic response function (HRF).

At the first level of analysis, two contrast analyses were run. The first contrast compared hits to misses for backgrounds paired with negative objects. The second contrast compared hits to misses for backgrounds paired with neutral objects.
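As a minimal sketch of how these two hit-versus-miss contrasts can be written as weight vectors over the four regressors of interest, consider the following Python example. The condition labels, their ordering, and the helper names are assumptions for illustration; in the full model the regressors of no interest would simply receive zero weights.

```python
# Hypothetical labels and ordering for the four regressors of interest.
CONDITIONS = ["neg_hit", "neg_miss", "neu_hit", "neu_miss"]

def contrast_vector(weights):
    """Build a weight vector over CONDITIONS; unnamed conditions get 0."""
    return [weights.get(name, 0) for name in CONDITIONS]

def apply_contrast(betas, contrast):
    """Weighted sum of per-condition parameter estimates for one voxel."""
    return sum(b * c for b, c in zip(betas, contrast))

# Contrast 1: hits > misses for backgrounds paired with negative objects.
neg_success = contrast_vector({"neg_hit": 1, "neg_miss": -1})
# Contrast 2: hits > misses for backgrounds paired with neutral objects.
neu_success = contrast_vector({"neu_hit": 1, "neu_miss": -1})

# Toy parameter estimates for a single voxel (illustrative values only).
toy_betas = [2.0, 0.5, 1.0, 1.0]
neg_effect = apply_contrast(toy_betas, neg_success)
neu_effect = apply_contrast(toy_betas, neu_success)
```

Each contrast sums to zero, so it tests a difference between hits and misses rather than activity relative to baseline.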

At the second-level group analysis, we ran an ANOVA to examine the effect of group (sleep vs. wake) on the activity associated with successful retrieval of backgrounds paired with negative objects (i.e., the first, first-level contrast noted above). Second, we ran a regression analysis, within the sleep participants, to examine the relation between REM sleep and activity associated with this successful retrieval (i.e., the effect of a REM regressor on activity in that first, first-level contrast). Third, we ran an ANOVA to examine the effect of study valence (negative vs. neutral) on the activity within the sleep group (i.e., comparing activity in the two contrast analyses that had been run at the first level). In all analyses, only regions that consist of at least nine contiguous voxels, with peak activity at p < .005, are reported in the results. This was determined by a frequently used (e.g., Cairney, Durrant, Power, & Lewis, 2014; Gutchess, Welsh, Boduroğlu, & Park, 2006; Zaki, Davis, & Ochsner, 2012) Monte Carlo simulation that takes into account the smoothness of the data and the normalized voxel size to correct for multiple comparisons at p < .05 (script downloaded from https://www2.bc.edu/~slotnics/scripts.htm; see Slotnick, Moo, Segal, & Hart, 2003).

To minimize the likelihood of reporting false-positive results, we only report activity within our a priori regions of interest within the visual system and MTL in the Results section. All regions are listed in the tables and depicted in the figures.

gPPI connectivity analysis

We used the generalized psychophysiological interactions (gPPI; http://brainmap.wisc.edu/PPI; McLaren, Ries, Xu, & Johnson, 2012) toolbox in SPM8 to examine connectivity between the occipital lobe and MTL regions during the successful retrieval of neutral backgrounds that were paired with negative versus neutral objects during encoding. For each participant, we created a single gPPI model including the following four regressors: Hits to backgrounds studied with negative objects (“negative contexts”), misses to backgrounds studied with negative objects, hits to backgrounds studied with neutral objects (“neutral contexts”), misses to backgrounds studied with neutral objects. Within each model, we looked at the following contrasts: Hits to negative contexts > Misses to negative contexts, Hits to neutral contexts > Misses to neutral contexts, Hits to negative contexts > Hits to neutral contexts, Hits to negative contexts > Baseline, Hits to neutral contexts > Baseline. To identify a seed region, we chose the middle occipital gyrus because it was significant in all three analyses that we conducted (see Tables 2a, 3, and 4a for a description of these analyses). Having hypothesized a particular role of REM sleep in enhancing visual activity during the retrieval of negative contexts following sleep, we chose a seed region centered at 30 -84 -8 (BA18) because activity here correlated with participants’ percentage of REM sleep obtained during consolidation; prior work has shown that REM sleep enhances cortical responses during retrieval and that the middle occipital gyrus (MOG) is involved in processing emotion (Sterpenich et al., 2013). We then created a volume of interest (VOI) for each subject by creating a 6-mm sphere around this voxel, and used the gPPI toolbox to extract data from each participant’s individualized activity within the 6-mm sphere and estimate functional connectivity between this region and the whole brain during retrieval. 
We focused specifically on the MTL, due to our a priori hypotheses. The significance threshold was set at p < .005 with a 9-voxel extent (correcting results to p < .05).
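To make the seed definition concrete, the sketch below enumerates the voxel centers that fall inside a sphere around the MOG peak on a 3-mm grid. It is not the gPPI toolbox's own routine. It assumes that "6-mm sphere" refers to a 6-mm radius and that the peak coordinate coincides with a voxel center; both are assumptions, and the function name is hypothetical.

```python
def sphere_voxels(center_mm, radius_mm=6.0, voxel_mm=3.0):
    """List voxel-center coordinates (mm) within radius_mm of center_mm.

    Assumes an isotropic grid with spacing voxel_mm and that center_mm
    is itself a voxel center.
    """
    steps = int(radius_mm // voxel_mm)
    cx, cy, cz = center_mm
    coords = []
    for i in range(-steps, steps + 1):
        for j in range(-steps, steps + 1):
            for k in range(-steps, steps + 1):
                dx, dy, dz = i * voxel_mm, j * voxel_mm, k * voxel_mm
                # Keep the voxel if its center lies inside the sphere.
                if dx * dx + dy * dy + dz * dz <= radius_mm ** 2:
                    coords.append((cx + dx, cy + dy, cz + dz))
    return coords

# Seed coordinate reported in the text (MNI, mm).
mog_voi = sphere_voxels((30, -84, -8))
```

Under these assumptions the volume of interest contains 33 voxels; a 6-mm diameter would yield a much smaller volume, so the interpretation of the sphere size matters.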

Results

Memory for backgrounds, by object valence and group

A mixed-effects analysis of variance, with Valence (within-subjects) and Group (between-subjects) entered as factors of interest, determined that there was a significant main effect of Valence on memory for scene backgrounds, F(1, 45) = 36.974, p < .001; ηp² = .45. Memory performance was defined as the number of correctly remembered backgrounds divided by the total number of studied backgrounds, computed separately for backgrounds presented with a negative or neutral object during encoding. “False alarm” rates to the backgrounds could not be separated by valence, as all new backgrounds presented during retrieval were neutral in valence. Therefore, behavioral memory performance reports the hit rates only. Memory was significantly better for neutral backgrounds that had been paired with neutral objects (i.e., “neutral contexts”; M ± SD: 49.5 ± 16.6 %) compared to neutral backgrounds that had been paired with negative objects (i.e., “negative contexts”; 39.4 ± 13.0 %), reflecting the typical memory trade-off effect (e.g., Kensinger et al., 2007; Payne et al., 2008; see Table 1). There was no main effect of Group on memory for backgrounds, F(1, 45) = .624, p = .43; ηp² = .014, indicating that memory for backgrounds was comparable regardless of whether participants slept (negative contexts: 40.3 ± 13.1 %; neutral contexts: 51.4 ± 15.8 %) or stayed awake (negative contexts: 38.2 ± 13.2 %; neutral contexts: 47.0 ± 17.5 %) during the consolidation interval. Similarly, there was no Valence × Group interaction, F(1, 45) = .436, p = .47; ηp² = .012, indicating that the effect of Valence was comparable regardless of whether participants slept or stayed awake during the consolidation interval.
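The hit-rate definition above, and the standard relation between an F statistic and partial eta squared, ηp² = (F × df_effect) / (F × df_effect + df_error), can be sketched in Python as follows. The function names and the example hit count are hypothetical; only the F value and degrees of freedom for the Valence effect are taken from the text.

```python
def hit_rate(n_hits, n_studied):
    """Proportion of studied backgrounds correctly called 'old'."""
    return n_hits / n_studied

def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its dfs."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# Hypothetical participant: 31 hits out of the 62 backgrounds of one type.
example_rate = hit_rate(31, 62)

# Main effect of Valence reported above: F(1, 45) = 36.974.
valence_eta = partial_eta_squared(36.974, 1, 45)
```

Rounded to two decimals, the value recovered for the Valence effect matches the reported effect size of .45.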

fMRI results

All fMRI analyses focus on activity and/or connectivity during the successful retrieval (hits minus misses) of neutral background scenes, as a function of whether they had been previously paired with negative objects versus neutral objects during encoding. As mentioned, whether each background was encoded with a negative or a neutral object was counterbalanced across participants at encoding. Analyses examined how this study history influenced successful retrieval processes and the effect of group (sleep, wake) on those processes.

Activity during successful retrieval (hit > miss) of negative encoding contexts

Comparison of activity between the sleep and wake groups

Numerous clusters within the occipital lobe were more active for the sleep group than for the wake group during the successful retrieval of negative contexts (i.e., neutral backgrounds that had been paired with negative objects during encoding; see Footnote 1). These regions included two clusters within the middle occipital gyrus [-38 -92 -2 (BA18); 40 -82 -2 (BA19, spanning BA18)], three within the lingual gyrus [30 -72 -10 (BA18); -18 -88 -8 (BA17); 22 -66 -6 (BA19)], and one in the inferior occipital gyrus [-40 -74 -6 (BA19); see Fig. 1]. There were no regions within the occipital lobe that were more active for the wake group than for the sleep group, but rather, activity in the parahippocampal gyrus [-38 -36 -8 (BA37)] and hippocampus [34 -46 6] showed this distinction. For a full comparison of activity between the sleep and wake groups, see Table 2a, b.

Fig. 1. Visual activity was greater for the sleep group than wake group during successful retrieval of neutral backgrounds that were paired with negative objects during encoding. These regions included two clusters within the middle occipital gyrus [-38 -92 -2 (BA18); 40 -82 -2 (BA19, spanning BA18)], three within the lingual gyrus [30 -72 -10 (BA18); -18 -88 -8 (BA17); 22 -66 -6 (BA19)], and one in the inferior occipital gyrus [-40 -74 -6 (BA19)].

Effects of REM sleep on successful retrieval activity

Within the sleep group, participants’ REM sleep percentage was correlated with activity in several occipital regions during successful retrieval of negative contexts, including the lingual gyrus [6 -74 -4 (BA18)], middle occipital gyrus [30 -84 -8 (BA18)], and three clusters within the cuneus [4 -92 6 (BA18); 30 -90 24 (BA19); 20 -100 -2 (BA18); see Fig. 2]. Importantly, REM sleep was the only stage of sleep to correlate with activity in any visual region. REM sleep percentage was also correlated with activity in the parahippocampal gyrus [-12 -30 -8 (BA30)] (see Footnote 2). See Table 3 for a full list of regions in which successful retrieval-related activity to negative contexts correlated with REM sleep. During the retrieval of neutral contexts, participants’ REM sleep percentage was correlated with activity in the caudate [-26 -44 16; 26 -42 12], thalamus [2 -30 6], and cerebellum [6 -48 -28], but no visual regions.

Fig. 2. The percentage of REM sleep obtained by sleep group participants during consolidation was correlated with activity in several occipital regions, including the lingual gyrus [6 -74 -4 (BA18)], middle occipital gyrus [30 -84 -8 (BA18)], and three clusters within the cuneus [20 -100 -2 (BA18); 4 -92 6 (BA18); 30 -90 24 (BA19)] during the retrieval of neutral backgrounds paired with negative objects during encoding. Some of these regions partially overlapped with (in green) those that showed greater activity during successful retrieval of “negative” backgrounds for the sleep group than wake group (i.e., overlap with results shown in Fig. 1) or (in yellow) those that showed greater activity in the sleep group for “negative” compared to “neutral” backgrounds (i.e., overlap with results shown in Fig. 3).

Comparing activity during successful retrieval (hit > miss) of negative versus neutral encoding contexts in the sleep and wake groups

The next analyses examined whether the prior effects of sleep leading to an enhancement of visual activity during retrieval were specific to contexts that had been paired with negative objects (and not with neutral objects) at encoding. Within the sleep group, activity within the cuneus [-24 -96 22 (BA19)], fusiform gyrus [42 -68 -14 (BA19)], lingual gyrus [2 -88 -4 (BA18)], and middle occipital gyrus [34 -86 2 (BA19, spanning BA18)] was greater during successful retrieval of negative contexts as compared to neutral contexts (see Fig. 3). This was not the case for the wake group, in which activity in the middle frontal gyrus [-24 48 -4 (BA11)], cingulate gyrus [14 30 32 (BA32); 4 -22 24 (BA23)], and cerebellum [32 -48 -46; -20 -68 -34] was enhanced during the successful retrieval of negative relative to neutral contexts. Interestingly, during the retrieval of neutral contexts relative to negative contexts, activity within the parahippocampal gyrus was greater in both the sleep group [20 -24 -20 (BA35)] and the wake group [30 -24 -22 (BA35)], as was activity in the middle occipital gyrus [-38 -94 10 (BA19)] in the wake group only. Although here we focus on visual regions (vs. the whole brain), due to our a priori hypotheses, see Table 4a, b for a full comparison of sleep participants’ activity during retrieval of negative greater than neutral contexts, and vice versa. This information can be found for the wake group in Table 5a, b.

Enhanced visual activity following sleep was specific to the retrieval of backgrounds that had been studied with emotional content during encoding. Specifically, activity within the cuneus [-24 -96 22 (BA19)], fusiform gyrus [42 -68 -14 (BA19)], lingual gyrus [2 -88 -4 (BA18)], and middle occipital gyrus [34 -86 2 (BA19, spanning BA18)] was greater during successful retrieval of negative contexts as compared to neutral contexts
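The comparison of successful retrieval (hit > miss) for negative versus neutral contexts can be thought of as a weighted sum of four condition estimates. The sketch below is one plausible way to express that contrast; the condition names, their order, and the example values are assumptions made for illustration and are not taken from the authors' design matrix.

```python
# Hypothetical condition order; the actual design matrix is not given in the text.
CONDITIONS = ("negative_hit", "negative_miss", "neutral_hit", "neutral_miss")

# (negative hit - negative miss) - (neutral hit - neutral miss)
WEIGHTS = (1.0, -1.0, -1.0, 1.0)


def contrast_value(betas, weights=WEIGHTS):
    """Weighted sum of per-condition estimates for one voxel or region."""
    if len(betas) != len(weights):
        raise ValueError("betas and weights must have the same length")
    return sum(w * b for w, b in zip(weights, betas))


# Synthetic estimates for a single region (NOT data from this study).
example_betas = {
    "negative_hit": 1.5,
    "negative_miss": 0.5,
    "neutral_hit": 1.0,
    "neutral_miss": 0.75,
}
value = contrast_value([example_betas[name] for name in CONDITIONS])
print(value)
```

A positive value indicates a larger hit-minus-miss difference for negative than for neutral contexts; a negative value indicates the reverse pattern, as reported for the parahippocampal gyrus.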

Connectivity between the middle occipital gyrus and medial temporal lobe

The middle occipital gyrus (BA18) showed enhanced activity in all of the aforementioned analyses: It was more active following sleep than wake during the successful retrieval of negative contexts (see Table 2a), it was more active within the sleep group during the successful retrieval of negative than of neutral contexts (see Table 4a), and its activity during successful retrieval of negative contexts was correlated with the percentage of REM sleep that participants obtained during consolidation (see Table 3). The gPPI analyses revealed increased connectivity between the MOG (a 6-mm sphere centered at 30 -84 -8; see Fig. 4, shown in violet) and the hippocampus [-34 -8 -20] when individuals in the sleep group successfully retrieved negative contexts as compared to neutral contexts (see Fig. 4, shown in yellow). A separate gPPI analysis revealed that activity within an overlapping hippocampal region [-32 -8 -22] showed a Valence × Group interaction, such that this enhanced connectivity between the MOG and hippocampus during successful retrieval of negative contexts was specific to the sleep group and did not extend to the wake group (see Fig. 4, shown in cyan). In fact, the wake group showed the strongest connectivity between the MOG and hippocampus during retrieval of neutral contexts.

GPPI analyses revealed increased connectivity between a MOG seed region [6 mm centered at 30 -84 -8, shown in violet] and the hippocampus [-34 -8 -20, shown in yellow] during the successful retrieval of negative contexts relative to neutral contexts within the sleep group. Activity in an overlapping hippocampal region [-32 -8 -22, shown in cyan] showed a valence by group interaction, indicating that enhanced MOG-hippocampal connectivity during negative context retrieval was specific to the sleep group
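To make the seed definition concrete, the sketch below enumerates the voxel centers that fall inside a sphere around the reported MOG coordinate. Several things here are assumptions rather than details from the text: "6-mm sphere" is read as a 6-mm radius, the voxel grid is taken to be 2 mm isotropic and aligned with the seed center, and the function name `sphere_voxels` is hypothetical.

```python
SEED_CENTER_MM = (30, -84, -8)   # MOG seed coordinate reported in the text
SEED_RADIUS_MM = 6               # assumption: "6-mm sphere" means a 6-mm radius
VOXEL_SIZE_MM = 2                # assumption: 2-mm isotropic grid aligned to the seed


def sphere_voxels(center, radius, step):
    """Return voxel-center coordinates (mm) lying within `radius` of `center`."""
    n = int(radius // step)
    voxels = []
    for i in range(-n, n + 1):
        for j in range(-n, n + 1):
            for k in range(-n, n + 1):
                dx, dy, dz = i * step, j * step, k * step
                # Compare squared distances to avoid floating-point square roots.
                if dx * dx + dy * dy + dz * dz <= radius * radius:
                    voxels.append((center[0] + dx, center[1] + dy, center[2] + dz))
    return voxels


seed_voxels = sphere_voxels(SEED_CENTER_MM, SEED_RADIUS_MM, VOXEL_SIZE_MM)
print(len(seed_voxels))
```

In a gPPI analysis, the signal averaged over such a seed would then be combined with each task condition to form the interaction terms; that step depends on the analysis software and is not sketched here.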

Discussion

This study demonstrated, for the first time, that sleep during consolidation enhances the sensory activity associated with the retrieval of neutral information that had been previously studied with negative versus neutral content. These results are particularly interesting because they reveal differences in sensory processing to exactly the same background scenes, as a function of whether they had been previously paired with emotional or neutral content during encoding, and whether participants slept during the consolidation interval. Specifically, activity in multiple regions within the ventral visual processing stream (BAs 17, 18, and 19) was greater during the retrieval of neutral backgrounds paired with negative objects during encoding following a 12-hour delay consisting of a full night of sleep relative to the equivalent amount of time spent awake. Further, results suggest that this enhanced activity may be linked to REM sleep and to increased connectivity between the middle occipital gyrus and hippocampus following sleep.

Prior work using emotional cues has established that emotion enhances perceptual processing (e.g., Todd et al., 2012; Zeelenberg et al., 2006), is associated with increased activity in the striate (e.g., Bradley et al., 2003; Padmala & Pessoa, 2008) and extrastriate cortex (e.g., Bradley et al., 2003; Lang et al., 1998; Vuilleumier et al., 2004) during viewing, and leads to enhanced visual specificity of a memory (e.g., Kensinger et al., 2007). Here, by assessing retrieval-related activity to neutral scenes that were once presented with either emotional or nonemotional content, we show that these effects of emotion on visual processing persist even once the emotional element of the scene has been removed, and may be dependent on sleep occurring during the consolidation interval. These residual effects of emotion were evident during successful memory retrieval (hits minus misses) of neutral backgrounds that were paired with negative objects during encoding, but, critically, there was no increase in visual activity during the retrieval of the identical backgrounds when they were presented with neutral objects during encoding. Importantly, because we counterbalanced across participants whether a background was presented with a negative or neutral object during encoding, there are no stimulus differences (i.e., perceptual features, distinctiveness, interestingness, etc.) that might explain these findings.

This link between emotion and visual processing is consistent with prior work, as enhanced activity in the lingual gyrus has been observed during the retrieval of negative relative to neutral images (Taylor et al., 1998). Similarly, Maratos et al. (2001) found enhanced sensory (i.e., cuneus/precuneus, lingual gyrus) activity during the retrieval of words that were presented in a negative context relative to a neutral context during encoding. For the first time, we show that these residual effects of emotion are strengthened when sleep occurs during a consolidation interval, which is consistent with prior work showing that V1 response potentiation (as measured by orientation-specific increases in V1 visually evoked potentials) requires sleep, and is blocked by sleep deprivation (Aton et al., 2014). Further, the reactivation of memories during REM sleep enhances cortical responses during retrieval (Sterpenich et al., 2013), suggesting that during sleep, recent memories are being processed and integrated within cortical circuits.

Aligning with Sterpenich et al. (2013), this study also shows a specific role of REM sleep in enhancing these residual emotion effects. Activity in the middle occipital gyrus (BA18), lingual gyrus (BA18), and cuneus (BAs 18 and 19) during the retrieval of these neutral backgrounds once presented with emotional content correlated with the percentage of REM sleep obtained during consolidation, highlighting the critical role of sleep (and particularly REM sleep) in enhancing visual activity during retrieval of these stimuli. This effect is specific to backgrounds paired with emotional relative to neutral content during encoding, perhaps suggesting that REM sleep promotes binding between the experienced emotion and the neutral background, yielding residual effects of emotion that are reflected in enhanced visual activity during retrieval. Prior research also has demonstrated that REM sleep, relative to other stages of sleep and wakefulness, can enhance emotional memory (e.g., Wagner et al., 2001; see Ackermann & Rasch, 2014, for review), and can correspond with the magnitude of emotional memory enhancement (Nishida et al., 2009; Payne et al., 2012). Here, we demonstrate that REM sleep modulates retrieval processes, as shown by an increase in visual activity, even when the emotional content of an event is not re-presented at retrieval.

When hypothesizing which regions would show residual emotion effects (as indicated by a difference in activity to backgrounds originally presented with emotional versus neutral foreground content) following a period of sleep, two things were unclear: whether these effects would be reflected in visual regions or the limbic system and the direction of the effects (i.e., whether activity would increase or decrease). The SFSR hypothesis, which argues that sleep dampens the emotional response associated with a stimulus, would predict a decrease in limbic and, although not explicitly stated, perhaps also sensory engagement, given that negative emotion leads to increased visual activity during retrieval (e.g., Keightley et al., 2011; Kensinger & Schacter, 2007; Mitchell et al., 2006). If the SFSR hypothesis would indeed argue for decreased sensory activity in memory-related regions following sleep, our findings are more consistent with work suggesting that sleep serves to preserve (Baran et al., 2012; Groch, Wilhelm, Diekelmann, & Born, 2013) or even potentiate (Lara-Carrasco, Nielsen, Solomonova, Levrier, & Popova, 2009; Wagner et al., 2001) the emotion associated with a stimulus. Here, we show that even once the emotional content has been removed, there is nonetheless enhanced visual activity when retrieving the neutral background that was once paired with it.

Although the amygdala is implicated in successful emotional memory encoding and retrieval (for review, see Hamann, 2001; LaBar & Cabeza, 2006), and sleep during consolidation leads to a more refined emotional memory retrieval network centered on limbic regions (Payne & Kensinger, 2011; Sterpenich et al., 2009), there was no evidence of amygdala activity during retrieval of these neutral backgrounds presented with emotional content during encoding (following sleep or wake). Rather, within the MTL, there was activity in the parahippocampal gyrus following sleep, greater during the retrieval of neutral backgrounds studied with neutral objects relative to negative objects. This is consistent with prior literature showing that the parahippocampal gyrus is important for scene recognition (e.g., Epstein, 2008; Epstein & Kanwisher, 1998), and is also consistent with the emotional memory trade-off literature, suggesting that for neutral scenes paired with neutral relative to negative objects during encoding, the scene may be processed more holistically (i.e., with less of a central/peripheral trade-off; e.g., Christianson & Loftus, 1991; Easterbrook, 1959; Kensinger, Piguet, Krendl, & Corkin, 2005). While the parahippocampal gyrus is engaged during the retrieval of scenes presented with neutral content at encoding, results suggest that presentation of these scenes with emotional content at encoding leads to greater recruitment of visual regions during retrieval following a period of sleep.

This being said, while the present results may initially seem inconsistent with previous findings of enhanced activity in emotion processing regions during the retrieval of stimuli initially presented in negative relative to neutral contexts (Maratos et al., 2001; Smith et al., 2004), this is likely explained by the increase in length of the delay interval and the inclusion of sleep during consolidation in the present study. In both aforementioned studies, the delay between study and test was approximately 5 minutes, while the delay in the present study was approximately 12 hours. It is likely that while limbic and sensory activity is maintained during retrieval after such a short delay, these regions play less of a role in retrieval after a longer delay (i.e., in the wake group in the present study), unless sleep is involved, in which case the processing of emotional memories during REM sleep in particular (Sterpenich et al., 2013) may lead to enhanced cortical activity during the retrieval of emotional contexts. Similarly, it is important to note that the sleep occurring during consolidation may explain why the present study did not reveal amygdala activity during the retrieval of emotional contexts, unlike Smith et al. (2004) and Maratos et al. (2001). Sleep has been shown to selectively enhance emotional memory (e.g., Hu, Stylos-Allan, & Walker, 2006; Wagner et al., 2001; Wagner, Hallschmid, Rasch, & Born, 2006), often at the cost of memory for neutral information presented concurrently (Payne et al., 2008; Payne & Kensinger, 2011). For instance, sleep (relative to wake) leads to enhanced amygdala activity during the retrieval of emotional objects presented with neutral backgrounds during encoding, but not their backgrounds encoded concurrently (Payne & Kensinger, 2011). These selective effects of sleep on emotional memory, effectively pulling apart emotional objects from the backgrounds with which they were presented during encoding, may explain why the present study did not observe enhanced limbic activity during retrieval, after the emotional element of the scene had been removed. Rather than being reflected in enhanced limbic activity, residual emotion effects, as measured by cases in which participants successfully remembered the neutral backgrounds encoded with negative content, may be reflected in enhanced visual activity following a substantial (at least 12-hour) delay including a period of sleep relative to wake.

It is especially interesting to note dissimilarities in how emotion and sleep affect neural activity during retrieval compared to behavioral memory performance. For instance, behavioral results showed an emotional memory trade-off in sleep and wake participants (e.g., Kensinger et al., 2007; reviewed by Reisberg & Heuer, 2004): enhanced memory for the negative relative to neutral objects, but poorer memory for backgrounds studied with negative relative to neutral foreground content (with no group differences). Nevertheless, despite overall poorer memory performance for backgrounds presented with negative (vs. neutral) content, there was greater perceptual processing in those cases where participants managed to successfully retrieve negative contexts, but only following sleep. The fact that group differences were revealed only in neural activity and not in behavior suggests that neural markers may be more sensitive to the effects of study history and sleep than behavioral outcomes, which is consistent with work in many domains that finds a similar pattern of results (neural effects in the absence of behavioral effects; e.g., Henckens et al., 2012; Van Stegeren, Roozendaal, Kindt, Wolf, & Joëls, 2010). Importantly, because there were no behavioral group differences, the number of correctly recognized objects did not differ across groups, and it is unlikely that memory trace strength differed (as this should have resulted in behavioral group differences). Rather, these results demonstrate that during retrieval, the exact same visual information can elicit different neural signatures based on whether participants slept and whether that context had been previously presented with emotional content during encoding.

Limitations

One potential limitation of the present study is that participants did not have an acclimation night in the laboratory; rather, sleep participants spent only one night in the laboratory (following encoding and prior to retrieval). While we acknowledge that the first night of PSG studies often shows an alteration of sleep architecture (Agnew, Webb, & Williams, 1966), the impact of the majority of the data presented here depends not on specific characteristics or stages of sleep, but rather on whether or not sleep occurred during consolidation. Another limitation is that participants in the wake group were not monitored by actigraphy during their 12-hour consolidation delay. While it is possible that a participant could have taken a nap during this time, these concerns are minimized by the fact that no participant reported having napped when asked upon their return to the laboratory for the retrieval test.

Another limitation of the present study is that the sleep group retrieved stimuli in the morning, while the wake group retrieved stimuli in the evening, thus allowing for the possibility of time-of-day effects. Prior work has shown evidence of increased occipital activity in response to visual stimuli in the morning relative to evening (Gorfine & Zisapel, 2009), which would be consistent with our finding that the sleep group, who were tested in the morning, showed heightened occipital activity during the retrieval of negative contexts relative to the wake group, who were tested in the evening. Importantly, however, this was not the case when comparing the morning short delay and evening short delay groups in the present study. Moreover, time-of-day effects could not explain the REM correlations seen within the sleep group, as everyone in that group was tested in the morning. For these reasons, we think our effects are unlikely to be due to time of day. Nevertheless, especially given concerns about false-positive results in fMRI studies (e.g., Eklund, Nichols, & Knutsson, 2016), replications across studies are of critical importance. We cannot think of a flawless design: A nap design could eliminate time-of-day effects but, because of the low levels of REM sleep typical during a nap, might miss important effects of overnight sleep. A sleep deprivation design could also eliminate time-of-day effects, but would add the stressful effects of sleep deprivation, which might confound the effects on emotion processing. Such complications emphasize the need to replicate results across studies using a range of methods that, together, may serve to rule out a series of possible confounds.

Another limitation is that the recognition memory trials we discuss here (the background contexts) were intermixed with trials in which negative and neutral objects were presented. It is possible that this intermixing encouraged participants to retrieve (explicitly or implicitly) the associated foreground content of the scenes, and that this would not have occurred with a different design. For instance, as emotion has been shown to lead to enhanced sensory processing (e.g., Kark & Kensinger, 2015; Keightley et al., 2011), the presentation of negative objects intermixed with neutral objects and neutral backgrounds during retrieval may have primed visual areas to be globally more active during retrieval (i.e., even during the retrieval of neutral stimuli) relative to a design in which no emotional content was presented. However, this design feature in itself is unlikely to explain differences between the sleep and wake groups, as the recognition lists were identical in those two conditions. Moreover, it seems an unlikely explanation for our findings because within the sleep group, the successful retrieval of negative contexts (i.e., hits compared to misses) was associated with greater visual activity than the successful retrieval of neutral contexts, while the retrieval of negative greater than neutral objects was not. Nonetheless, future work could circumvent this alternative explanation for our findings by presenting emotional and neutral stimuli in separate blocks rather than intermixed, as was done here.

While we do not believe that the intermixing of negative and neutral stimuli during retrieval explains why the sleep group relative to wake group showed greater visual activity to negative contexts, it is possible that emotion spillover effects could be stronger in the sleep group than the wake group, and that this could be driven by sleep recency. For instance, emotion processing is potentiated by sleep (e.g., Wagner et al., 2001) and connectivity between the medial prefrontal cortex and amygdala has been shown to be greater following sleep than extended periods of wakefulness (i.e., sleep deprivation; Yoo, Gujar, Hu, Jolesz, & Walker, 2007). Indeed, the successful retrieval of negative objects was associated with greater activity in visual regions, including the lingual gyrus [-32 -56 2 (BA19)] and fusiform gyrus [38 -2 -24 (BA20)], following sleep relative to wake. However, even though sleep may affect visual activity to the negative objects, what is remarkable is that sleep also enhanced visual activity when comparing negative to neutral contexts, despite the fact that, for those trials, all backgrounds contained only neutral content. Thus, the results emphasize that not only does sleep increase visual responses to negative content, but it also increases activity to information previously associated with negative content, as shown across multiple analyses: Visual activity during the retrieval of backgrounds studied with emotional content was enhanced following sleep versus wake (i.e., Fig. 1), correlated with the percentage of REM sleep obtained during consolidation (i.e., Fig. 2), and was specific to backgrounds studied with negative versus neutral content following sleep (i.e., Fig. 3).

Conclusion

Overall, these results suggest that the ability of emotion to enhance perceptual processing depends on sleep occurring during the consolidation interval. These enhancements are maximized by REM sleep, leading to greater visual activity during successful retrieval. These are not global effects of sleep leading to enhanced visual activity during retrieval, but rather are specific to those backgrounds that had been paired with emotional (vs. neutral) content during encoding, and may be due to increased connectivity between the middle occipital gyrus and hippocampus following sleep. This study provides further evidence for emotion enhancing perceptual processing by demonstrating that emotion effects persist even once the emotional element of the scene has been removed. Further, for the first time, we show that sleep occurring during a consolidation delay plays an important role, reflecting emotion’s residual effects through enhanced visual activity during retrieval.

Notes

Differences in visual activity were not due to effects of circadian time, as we also ran these analyses in our two groups of participants (morning short delay and evening short delay) who encoded and retrieved stimuli with a 20-minute study-test delay. There was only one region within the right inferior parietal lobule [58 -34 36 (BA40)] that showed greater activity during the successful retrieval of negative contexts when tested in the morning compared to the evening.

Slow-wave sleep (SWS) percentage was not correlated with activity in any visual regions. Rather, participants’ SWS correlated only with one cluster in the middle temporal gyrus [60 -46 8 (BA21)] and one cluster in the postcentral gyrus [-14 -38 72 (BA3)].

References

Ackermann, S., & Rasch, B. (2014). Differential effects of non-REM and REM sleep on memory consolidation? Current Neurology and Neuroscience Reports, 14(2), 1–10.

Agnew, H. W., Webb, W. B., & Williams, R. L. (1966). The first night effect: An EEG study of sleep. Psychophysiology, 2(3), 263–266.

Aton, S. J., Seibt, J., Dumoulin, M., Jha, S. K., Steinmetz, N., Coleman, T., . . . Frank, M. G. (2009). Mechanisms of sleep-dependent consolidation of cortical plasticity. Neuron, 61(3), 454–466.

Aton, S. J., Suresh, A., Broussard, C., & Frank, M. G. (2014). Sleep promotes cortical response potentiation following visual experience. Sleep, 37(7), 1163–1170.

Baran, B., Pace-Schott, E. F., Ericson, C., & Spencer, R. M. (2012). Processing of emotional reactivity and emotional memory over sleep. Journal of Neuroscience, 32(3), 1035–1042.

Beck, A. T., & Beamesderfer, A. (1974). Assessment of depression: The depression inventory. Modern Problems of Pharmacopsychiatry, 7, 151–169.

Beck, A. T., Epstein, N., Brown, G., & Steer, R. A. (1988). An inventory for measuring clinical anxiety: Psychometric properties. Journal of Consulting and Clinical Psychology, 56, 893–897.

Bennion, K. A., Mickley Steinmetz, K. R., Kensinger, E. A., & Payne, J. D. (2014). Eye tracking, cortisol, and a sleep vs. wake consolidation delay: Combining methods to uncover an interactive effect of sleep and cortisol on memory. Journal of Visualized Experiments, 88, e51500.

Bennion, K. A., Mickley Steinmetz, K. R., Kensinger, E. A., & Payne, J. D. (2015). Sleep and cortisol interact to support memory consolidation. Cerebral Cortex, 25, 646–657.

Bradley, M. M., Sabatinelli, D., Lang, P. J., Fitzsimmons, J. R., King, W., & Desai, P. (2003). Activation of the visual cortex in motivated attention. Behavioral Neuroscience, 117(2), 369.

Buchanan, T. W. (2007). Retrieval of emotional memories. Psychological Bulletin, 133(5), 761–779.

Cairney, S. A., Durrant, S. J., Power, R., & Lewis, P. A. (2014). Complementary roles of slow-wave sleep and rapid eye movement sleep in emotional memory consolidation. Cerebral Cortex, 25(6), 1565–1575. doi:10.1093/cercor/bht349

Christianson, S.-A., & Loftus, E. F. (1991). Remembering emotional events: The fate of detailed information. Cognition & Emotion, 5, 81–108.

Cunningham, T. J., Crowell, C. R., Alger, S. E., Kensinger, E. A., Villano, M. A., Mattingly, S. M., & Payne, J. D. (2014). Psychophysiological arousal at encoding leads to reduced reactivity but enhanced emotional memory following sleep. Neurobiology of Learning and Memory, 114, 155–164.

Dale, A. M. (1999). Optimal experimental design for event-related fMRI. Human Brain Mapping, 8(2/3), 109–114.

Easterbrook, J. A. (1959). The effect of emotion on cue utilization and the organization of behavior. Psychological Review, 66, 183–201.

Eklund, A., Nichols, T. E., & Knutsson, H. (2016). Cluster failure: Why fMRI inferences for spatial extent have inflated false-positive rates. Proceedings of the National Academy of Sciences, 113(28), 7900–7905.

Ellenbogen, J. M., Hu, P. T., Payne, J. D., Titone, D., & Walker, M. P. (2007). Human relational memory requires time and sleep. Proceedings of the National Academy of Sciences, 104(18), 7723–7728.

Epstein, R. A. (2008). Parahippocampal and retrosplenial contributions to human spatial navigation. Trends in Cognitive Sciences, 12(10), 388–396.

Epstein, R., & Kanwisher, N. (1998). A cortical representation of the local visual environment. Nature, 392(6676), 598–601.

Gorfine, T., & Zisapel, N. (2009). Late evening brain activation patterns and their relation to the internal biological time, melatonin, and homeostatic sleep debt. Human Brain Mapping, 30(2), 541–552.

Groch, S., Wilhelm, I., Diekelmann, S., & Born, J. (2013). The role of REM sleep in the processing of emotional memories: Evidence from behavior and event-related potentials. Neurobiology of Learning and Memory, 99, 1–9.

Gutchess, A. H., Welsh, R. C., Boduroğlu, A., & Park, D. C. (2006). Cultural differences in neural function associated with object processing. Cognitive, Affective, & Behavioral Neuroscience, 6(2), 102–109.

Hamann, S. (2001). Cognitive and neural mechanisms of emotional memory. Trends in Cognitive Sciences, 5, 394–400.

Henckens, M. J. A. G., Pu, Z., Hermans, E. J., Van Wingen, G. A., Joëls, M., & Fernández, G. (2012). Dynamically changing effects of corticosteroids on human hippocampal and prefrontal processing. Human Brain Mapping, 33, 2885–2897.

Horne, J. A., & Ostberg, O. (1976). A self-assessment questionnaire to determine morningness-eveningness in human circadian rhythms. International Journal of Chronobiology, 4, 97–110.

Hu, P., Stylos-Allan, M., & Walker, M. P. (2006). Sleep facilitates consolidation of emotional declarative memory. Psychological Science, 17, 891–898.

Ji, D., & Wilson, M. A. (2007). Coordinated memory replay in the visual cortex and hippocampus during sleep. Nature Neuroscience, 10(1), 100–107.

Kark, S. M., & Kensinger, E. A. (2015). Effect of emotional valence on retrieval-related recapitulation of encoding activity in the ventral visual stream. Neuropsychologia, 78, 221–230.

Keightley, M. L., Chiew, K. S., Anderson, J. A., & Grady, C. L. (2011). Neural correlates of recognition memory for emotional faces and scenes. Social Cognitive and Affective Neuroscience, 6(1), 24–37.

Kensinger, E. A., & Schacter, D. L. (2007). Remembering the specific visual details of presented objects: Neuroimaging evidence for effects of emotion. Neuropsychologia, 45(13), 2951–2962.

Kensinger, E. A., Piguet, O., Krendl, A. C., & Corkin, S. (2005). Memory for contextual details: Effects of emotion and aging. Psychology and Aging, 20, 241–250.

Kensinger, E. A., Garoff-Eaton, R. J., & Schacter, D. L. (2007). How negative emotion enhances the visual specificity of a memory. Journal of Cognitive Neuroscience, 19(11), 1872–1887.

LaBar, K. S., & Cabeza, R. (2006). Cognitive neuroscience of emotional memory. Nature Reviews Neuroscience, 7(1), 54–64.

Lang, P. J., Bradley, M. M., Fitzsimmons, J. R., Cuthbert, B. N., Scott, J. D., Moulder, B., & Nangia, V. (1998). Emotional arousal and activation of the visual cortex: An fMRI analysis. Psychophysiology, 35(2), 199–210.

Lara-Carrasco, J., Nielsen, T. A., Solomonova, E., Levrier, K., & Popova, A. (2009). Overnight emotional adaptation to negative stimuli is altered by REM sleep deprivation and is correlated with intervening dream emotions. Journal of Sleep Research, 18(2), 178–187.

Maratos, E. J., Dolan, R. J., Morris, J. S., Henson, R. N., & Rugg, M. D. (2001). Neural activity associated with episodic memory for emotional context. Neuropsychologia, 39(9), 910–920.

McLaren, D. G., Ries, M. L., Xu, G., & Johnson, S. C. (2012). A generalized form of context-dependent psychophysiological interactions (gPPI): A comparison to standard approaches. NeuroImage, 61(4), 1277–1286.

Mitchell, K. J., Mather, M., Johnson, M. K., Raye, C. L., & Greene, E. J. (2006). A functional magnetic resonance imaging investigation of short-term source and item memory for negative pictures. Neuroreport, 17(14), 1543–1547.

Nishida, M., Pearsall, J., Buckner, R. L., & Walker, M. P. (2009). REM sleep, prefrontal theta, and the consolidation of human emotional memory. Cerebral Cortex, 19, 1158–1166.

Padmala, S., & Pessoa, L. (2008). Affective learning enhances visual detection and responses in primary visual cortex. The Journal of Neuroscience, 28(24), 6202–6210.

Payne, J. D. (2011). Sleep on it!: Stabilizing and transforming memories during sleep. Nature Neuroscience, 14(3), 272–274.

Payne, J. D. (2014a). Seeing the forest through the trees. Sleep, 37(6), 1029–1030.

Payne, J. D. (2014b). The (gamma) power to control our dreams. Nature Neuroscience, 17(6), 753–755.

Payne, J. D., & Kensinger, E. A. (2011). Sleep leads to changes in the emotional memory trace: Evidence from fMRI. Journal of Cognitive Neuroscience, 23, 1285–1297.

Payne, J. D., Stickgold, R., Swanberg, K., & Kensinger, E. A. (2008). Sleep preferentially enhances memory for emotional components of scenes. Psychological Science, 19, 781–788.

Payne, J. D., Chambers, A. M., & Kensinger, E. A. (2012). Sleep promotes lasting changes in selective memory for emotional scenes. Frontiers in Integrative Neuroscience, 6, 1–11.

Payne, J. D., Kensinger, E. A., Wamsley, E. J., Spreng, R. N., Alger, S. E., Gibler, K., . . . Stickgold, R. (2015). Napping and the selective consolidation of negative aspects of scenes. Emotion, 15(2), 176.

Reisberg, D., & Heuer, F. (2004). Memory for emotional events. In D. Reisberg & P. Hertel (Eds.), Memory and emotion (pp. 3–41). Oxford, UK: Oxford University Press.

Ritchey, M., Wing, E. A., LaBar, K. S., & Cabeza, R. (2013). Neural similarity between encoding and retrieval is related to memory via hippocampal interactions. Cerebral Cortex, 23(12), 2818–2828.

Sabatinelli, D., Bradley, M. M., Fitzsimmons, J. R., & Lang, P. J. (2005). Parallel amygdala and inferotemporal activation reflect emotional intensity and fear relevance. NeuroImage, 24(4), 1265–1270.

Slotnick, S. D., Moo, L. R., Segal, J. B., & Hart, J. (2003). Distinct prefrontal cortex activity associated with item memory and source memory for visual shapes. Cognitive Brain Research, 17, 75–82.

Smith, A. P. R., Henson, R. N. A., Dolan, R. J., & Rugg, M. D. (2004). FMRI correlates of the episodic retrieval of emotional contexts. NeuroImage, 22(2), 868–878.

Sotres-Bayon, F., Bush, D. E., & LeDoux, J. E. (2004). Emotional perseveration: An update on prefrontal-amygdala interactions in fear extinction. Learning & Memory, 11(5), 525–535.

Sterpenich, V., Albouy, G., Darsaud, A., Schmidt, C., Vandewalle, G., Dang-Vu, T., . . . Maquet, P. (2009). Sleep promotes the neural reorganization of remote emotional memory. Journal of Neuroscience, 29(16), 5143–5152.

Sterpenich, V., Schmidt, C., Albouy, G., Matarazzo, L., Vanhaudenhuyse, A., Boveroux, P., . . . Maquet, P. (2013). Memory reactivation during rapid eye movement sleep promotes its generalization and integration in cortical stores. Sleep, 37(6), 1061–1075.

Symons, C. S., & Johnson, B. T. (1997). The self-reference effect in memory: A meta-analysis. Psychological Bulletin, 121, 371–394.

Taylor, S. F., Liberzon, I., Fig, L. M., Decker, L. R., Minoshima, S., & Koeppe, R. A. (1998). The effect of emotional content on visual recognition memory: A PET activation study. NeuroImage, 8(2), 188–197.

Todd, R. M., Talmi, D., Schmitz, T. W., Susskind, J., & Anderson, A. K. (2012). Psychophysical and neural evidence for emotion-enhanced perceptual vividness. Journal of Neuroscience, 32(33), 11201–11212.

van der Helm, E., & Walker, M. P. (2009). Overnight therapy? The role of sleep in emotional brain processing. Psychological Bulletin, 135(5), 731–748.

van der Helm, E., Yao, J., Dutt, S., Rao, V., Saletin, J. M., & Walker, M. P. (2011). REM sleep depotentiates amygdala activity to previous emotional experiences. Current Biology, 21(23), 2029–2032.

Van Stegeren, A. H., Roozendaal, B., Kindt, M., Wolf, O. T., & Joëls, M. (2010). Interacting noradrenergic and corticosteroid systems shift human brain activation patterns during encoding. Neurobiology of Learning and Memory, 93, 56–65.

Vuilleumier, P., Richardson, M. P., Armony, J. L., Driver, J., & Dolan, R. J. (2004). Distant influences of amygdala lesion on visual cortical activation during emotional face processing. Nature Neuroscience, 7(11), 1271–1278.

Wagner, U., Gais, S., & Born, J. (2001). Emotional memory formation is enhanced across sleep intervals with high amounts of rapid eye movement sleep. Learning & Memory, 8, 112–119.

Wagner, U., Hallschmid, M., Rasch, B., & Born, J. (2006). Brief sleep after learning keeps emotional memories alive for years. Biological Psychiatry, 60(7), 788–790.

Walker, M. P., & Stickgold, R. (2010). Overnight alchemy: Sleep-dependent memory evolution. Nature Reviews Neuroscience, 11(3), 218–222.

Waring, J. D., & Kensinger, E. A. (2009). Effects of emotional valence and arousal upon memory trade-offs with aging. Psychology and Aging, 24, 412–422.

Yoo, S. S., Gujar, N., Hu, P., Jolesz, F. A., & Walker, M. P. (2007). The human emotional brain without sleep—A prefrontal amygdala disconnect. Current Biology, 17(20), R877–R878.

Zaki, J., Davis, J. I., & Ochsner, K. N. (2012). Overlapping activity in anterior insula during interoception and emotional experience. NeuroImage, 62(1), 493–499.

Zeelenberg, R., Wagenmakers, E. J., & Rotteveel, M. (2006). The impact of emotion on perception: Bias or enhanced processing? Psychological Science, 17(4), 287–291.

Acknowledgments

This research was supported by Grant BCS-0963581 from the National Science Foundation (to E.A.K. and J.D.P.), and the Scott Mesh Honorary Grant and Dissertation Research Award from the American Psychological Association (to K.A.B.). This research was conducted with government support under and awarded by DoD, Air Force Office of Scientific Research, National Defense Science and Engineering Graduate (NDSEG) Fellowship, 32 CFR 168a (to K.A.B.). Preparation of this manuscript was additionally supported by NSF Grant BCS-1539361 (to E.A.K. and J.D.P.). This manuscript was included as part of K.A.B.’s dissertation at Boston College. The authors thank Katherine Mickley Steinmetz for her help conceptualizing the study and with data collection, and Halle Zucker, John Morris, and Maite Balda for their help with data collection. None of the authors has a conflict of interest to report.

About this article

Cite this article

Bennion, K.A., Payne, J.D. & Kensinger, E.A. Residual effects of emotion are reflected in enhanced visual activity after sleep. Cogn Affect Behav Neurosci 17, 290–304 (2017). https://doi.org/10.3758/s13415-016-0479-3

DOI: https://doi.org/10.3758/s13415-016-0479-3