Abstract

In this study, three machine learning techniques, XGBoost (Extreme Gradient Boosting), LSTM (Long Short-Term Memory networks), and ARIMA (Autoregressive Integrated Moving Average model), are applied to time series prediction tasks in coastal bridge engineering. Their performance is compared in three typical cases: the wave load on a bridge deck under regular waves, the structural displacement under combined wind and wave loads, and the wave height variation as a typhoon/hurricane approaches. To enhance prediction accuracy, a typical data preprocessing method is adopted, and an improved LSTM prediction framework based on rolling forecasting is proposed. The results show that: (a) for data with periodic regularity, both the XGBoost and ARIMA models perform well, and the XGBoost model can predict multiple steps ahead; (b) for aperiodic data with limited amplitude, the ARIMA model gives more accurate predictions but only one step ahead, whereas the XGBoost and LSTM models can predict multiple steps ahead with appropriate data preprocessing; and (c) all three models can predict the data trend when the model is updated over time, but the LSTM model achieves the most favorable accuracy. The successful application of these three techniques can provide guidance for engineering problems that require time-history prediction.


1 Introduction

Increasingly intensive economic activity in coastal zones calls for more long-span, flexible coastal bridges, which usually cross wide and deep waters. These sea-crossing bridges often serve as the backbone of the transportation network connecting islands and the mainland. For example, Table 1 lists several major long-span bridges built in the coastal zones of China since the late twentieth century. As Table 1 shows, with the development of bridge construction technology, sea-crossing bridges have become more diverse in form and longer in span, and their function has shifted from highway-only to combined highway and railway use.

The harsh marine environment, particularly the huge waves and strong winds brought by tropical cyclones or hurricanes, together with earthquakes, tides, and currents, poses serious challenges to the safety and resilience of these bridges during their service life. Many lessons were learned from Hurricanes Ivan (2004) and Katrina (2005), during which a large number of low-lying coastal bridges along the Gulf of Mexico were heavily damaged. Since then, many studies have been conducted on wave-deck interaction (Bradner 2008; McPherson 2010; Sheppard and Marin 2009; Cuomo et al. 2009; Xu et al. 2018). The main cause of the damage was that hurricane-induced storm surge and wave loads had not been adequately accounted for in the design of these low-lying bridges.

As bridge construction technology develops, coastal bridges may extend into wide and deep ocean zones, where the marine environment at the bridge site is more complex. Existing studies have shown that long-span sea-crossing bridges are more vulnerable to extreme environmental loads (Zhu and Zhang 2017; Ti et al. 2018; Zhang et al. 2019a, b). For such bridges, the stability and safety of the towers and foundations are key issues, since these components are directly exposed to hydrodynamic forces. To address these issues, Guo et al. (2016) tested the vibration of a bridge tower model under coupled wind and wave loads and concluded that the tower vibrates markedly when the structural frequency is close to the loading frequency, i.e., resonance dominates under low-speed wind and regular waves. Meng et al. (2018) proposed a frequency-spectrum method that accounts for the correlation between wind and wave loads, based on theoretical analysis of experimental data. Wei et al. (2017) investigated the dynamic response of a 1:150 scale elastic bridge tower model in a flume under regular waves and current, and observed how the shear force and vibration amplitude at the pile foundation changed under different loading conditions.

To safeguard coastal bridges under various extreme environmental conditions, quick and accurate advance prediction of the major loads and structural dynamic responses is highly desirable, especially to help stakeholders make timely decisions on evacuation routes before a hurricane makes landfall. Time series prediction, as a means of timely evaluation of the loads on and dynamics of coastal bridges, is therefore of high interest. Generally speaking, time series prediction is a regression process that uses existing data, through statistical analysis and data processing, to predict future values. Time series prediction techniques have developed substantially: ARIMA (Autoregressive Integrated Moving Average model), SVM (Support Vector Machine), random forest, ANN (Artificial Neural Network), XGBoost (Extreme Gradient Boosting), GRU (Gated Recurrent Unit), LSTM (Long Short-Term Memory networks), and other machine learning models have emerged or been extended for this purpose. However, their application in bridge engineering remains quite limited. Lee et al. (2008) applied an ANN model to evaluate the reliability of individual bridge elements and to fill in missing historical condition data. Subsequently, a variety of techniques, including SVM, the BP (Back Propagation) neural network, BDMs (Bayesian dynamic models), and ARMA (Autoregressive Moving Average model), have been used to monitor bridge health and assess reliability (Yang and Zhou 2011; Li et al. 2012; Liu et al. 2014; Tang et al. 2015).

In recent years, time series prediction has also been used to predict bridge conditions. For example, Sun and Hao (2011) analyzed the girder deflection of the Xushui River bridge to establish an SHM (Structural Health Monitoring) early-warning system and found that time series analysis can effectively predict variations in structural response. Yi (2015) studied the internal stress in the tower of a long-span bridge subjected to a typhoon and applied a clustering-based BP neural network to predict the tower stress, showing that nonlinear time series prediction is highly effective. Gong and Li (2018) applied RWTLS (robust weighted total least-squares) to predict two observed datasets of pier settlement, taking into account errors in the coefficient matrix and possible gross errors, and showed that the RWTLS model can be considerably more reliable and accurate than the LS (least-squares), RLS (robust least-squares), and WTLS (weighted total least-squares) models. Shi et al. (2019) adopted a linear regression model to predict the routine maintenance costs of reinforced concrete beam bridges, with the logarithm of the historical routine maintenance cost as the dependent variable and the bridge age as the independent variable. Kaloop et al. (2019) evaluated the safety behavior of the long-span Incheon bridge with an ARMA model and found that the bridge is safe under traffic loads. Liu et al. (2020) treated the dynamically coupled extreme stresses of bridges as time series data and successfully predicted the stresses using Bayesian probability recursive processes. However, few studies have used time series prediction techniques to estimate the response of bridges under dynamic loads in the coastal environment, which is essential for hazard prevention for coastal bridges.

This study addresses the particular features of the major loads and structural dynamics of coastal bridges using three competitive time series prediction techniques: XGBoost, LSTM, and ARIMA. These three models were selected for their proven prediction accuracy and wide application in academic research. The ARIMA model, a combination of the AR (Autoregressive) and MA (Moving Average) models, is designed specifically for time series prediction and features few hyper-parameters, high accuracy, and fast computation. The XGBoost model is a relatively recent decision-tree ensemble model, developed from the GBDT (Gradient Boosting Decision Tree) model to improve prediction accuracy and computational speed; many prize-winning entries in modeling competitions such as Kaggle have used it, confirming its strength. The LSTM model is a classical and widely used deep learning model that alleviates the vanishing and exploding gradient problems of conventional recurrent networks, and its overfitting can be reduced by regularization. The performance of these techniques is compared in three typical cases: the wave load on a bridge deck under regular waves, the structural displacement under combined wind and wave loads, and the wave height variation as a typhoon/hurricane approaches. Because the datasets associated with coastal bridges in these cases are representative, this study can provide guidance for similar engineering problems that require time-history prediction.

2 Time series prediction techniques

2.1 ARIMA

The ARIMA model (Autoregressive Integrated Moving Average model) can be used to forecast time series that are not white noise, i.e., that carry exploitable autocorrelation. Its name captures its three key components: AR for autoregression, I for integrated, and MA for moving average. Compared with the ARMA model, the ARIMA model can handle non-stationary processes through differencing. Many researchers have successfully combined the ARIMA model with other techniques to predict a variety of data. For example, ARIMA combined with wavelet analysis was used to predict network traffic, achieving higher accuracy than the original ARIMA model (Li et al. 2009). Hirata et al. (2015) combined the ARIMA and DBN (Deep Belief Network) models on several classical prediction datasets and found that predicting the values with the DBN model and the errors with the ARIMA model can be a better choice than using the ARIMA model alone. The ARIMA model has also been used in mechanical engineering to predict the residual life and fault conditions of mechanical products, e.g., the service life of water pumps (Sanayha and Vateekul 2017) and the remaining useful life of aircraft engines (Ordóñez et al. 2019), with rather high prediction accuracy. In bridge engineering, Xin et al. (2018) predicted bridge structural deformation with a Kalman-ARIMA-GARCH (Generalized Autoregressive Conditional Heteroskedasticity) model.

The ARIMA model is developed from the ARMA model, whose main equation is

\[ \left(1-\sum_{i=1}^{p^{\prime}}\alpha_i L^i\right)X_t=\left(1+\sum_{i=1}^{q}\theta_i L^i\right)\varepsilon_t \tag{1} \]

where p′ is the autoregressive order, q is the moving average order, L is the lag operator (\( L^i X_t = X_{t-i} \)), X_t is the observed value at time t, α_i are the parameters of the autoregressive part of the model, θ_i are the parameters of the moving average part, and ε_t is the error term.

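Assume that the polynomial \( \left(1-{\sum}_{i=1}^{p^{\prime }}{\alpha}_i{L}^i\right) \) has a unit root, i.e., a factor \( (1-L) \), of multiplicity d. The core equation of the ARIMA model can then be written as

\[ \left(1-\sum_{i=1}^{p}\varphi_i L^i\right)(1-L)^d X_t=\left(1+\sum_{i=1}^{q}\theta_i L^i\right)\varepsilon_t \tag{2} \]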

where p = p′ − d and φ_i are the parameters of the autoregressive part of the model.
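To make Eq. (2) concrete, the following minimal Python sketch fits a simplified ARIMA(p, d, 0) model, i.e., with the moving-average part omitted (q = 0) and no intercept, by ordinary least squares on the d-times differenced series, and then undoes the differencing to obtain a one-step-ahead forecast. The function name `arima_pd0_forecast` is hypothetical, and least-squares AR fitting is a simplification of the maximum-likelihood estimation used by full ARIMA implementations.

```python
import numpy as np

def arima_pd0_forecast(x, p, d):
    """One-step-ahead forecast from a simplified ARIMA(p, d, 0) model.

    Assumptions (illustrative only): no MA terms (q = 0), no intercept,
    AR coefficients estimated by ordinary least squares.
    """
    x = np.asarray(x, dtype=float)
    # Apply (1 - L)^d by differencing d times; keep every level so the
    # differencing can be undone after forecasting.
    levels = [x]
    for _ in range(d):
        levels.append(np.diff(levels[-1]))
    w = levels[-1]  # differenced, ideally stationary, series

    # Design matrix: column i holds lag i + 1, i.e. row k = [w[k+p-1], ..., w[k]].
    X = np.column_stack([w[p - i - 1 : len(w) - i - 1] for i in range(p)])
    y = w[p:]
    phi, *_ = np.linalg.lstsq(X, y, rcond=None)  # estimates of the varphi_i

    # Forecast the next differenced value from the p most recent values
    # (most recent first, matching the column order of X).
    w_next = float(phi @ w[::-1][:p])

    # Undo the differencing level by level: next value = last value + next difference.
    nxt = w_next
    for level in reversed(levels[:-1]):
        nxt = level[-1] + nxt
    return nxt, phi
```

For instance, on the linear sequence 1, 3, 5, ..., 39 with p = 1 and d = 1, the differenced series is constant at 2, the fitted coefficient is 1, and the forecast is 41.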

In Eq. (2), d is the number of differences needed to make the series stationary, i.e., the degree of differencing. The parameters p, q, and d must be determined when establishing the model. To do so, the autocorrelation and partial autocorrelation coefficients of the series are first calculated. Candidate orders can be roughly estimated from the ACF (Autocorrelation Function) and PACF (Partial Autocorrelation Function) plots and then determined precisely by grid search, an information criterion, a heat map, or other methods.
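The ACF and PACF used to pick the orders can be computed directly. The sketch below, assuming numpy is available and using hypothetical function names, computes the sample ACF with the usual biased estimator and the PACF via the Durbin-Levinson recursion; libraries such as statsmodels (`acf` and `pacf` in `statsmodels.tsa.stattools`) provide equivalent routines with more options, although their default estimators may differ slightly.

```python
import numpy as np

def sample_acf(x, nlags):
    """Sample autocorrelation r_0, ..., r_nlags (biased estimator used in ACF plots)."""
    xc = np.asarray(x, dtype=float)
    xc = xc - xc.mean()
    denom = np.dot(xc, xc)
    return np.array([np.dot(xc[: len(xc) - k], xc[k:]) / denom for k in range(nlags + 1)])

def sample_pacf(x, nlags):
    """Partial autocorrelation from the sample ACF via the Durbin-Levinson recursion."""
    r = sample_acf(x, nlags)
    pacf = np.ones(nlags + 1)          # pacf[0] = 1 by convention
    phi = np.array([])                 # AR(k-1) coefficients phi_{k-1,1..k-1}
    for k in range(1, nlags + 1):
        num = r[k] - np.dot(phi, r[k - 1 : 0 : -1])   # r_k - sum_j phi_{k-1,j} r_{k-j}
        den = 1.0 - np.dot(phi, r[1:k])               # 1 - sum_j phi_{k-1,j} r_j
        phi_kk = num / den
        phi = np.append(phi - phi_kk * phi[::-1], phi_kk)
        pacf[k] = phi_kk
    return pacf
```

As a rule of thumb, a slowly decaying ACF suggests that further differencing (a larger d) is needed, and for an AR(p) process the PACF cuts off after lag p.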

2.2 XGBoost

The XGBoost (extreme gradient boosting) model is an open-source gradient boosting framework proposed by Chen and Guestrin (2016) that optimizes the existing gradient boosting algorithm. Because it yields favorable prediction results, it is widely used in machine learning competitions, and it performs excellently in predicting sales volume, stock prices, and traffic flow (Gurnani et al. 2017; Wang and Guo 2020; Lu et al. 2020). However, owing to its relatively recent introduction, it has seen few applications to prediction tasks in engineering practice. Chen et al. (2019) used the XGBoost model to predict welding quality, with an error rate of 20.5% on the test set. Zheng and Wu (2019) predicted wind power with the XGBoost model and several other machine learning techniques (the BP neural network, classification and regression trees, random forests, and support vector regression), and the XGBoost model attained the highest prediction accuracy.

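The XGBoost model is an ensemble of many trees, each with its own depth. The trees are added one at a time: at iteration t, a new tree f_t is added to the prediction of the previous t − 1 trees, and it is chosen to minimize the following objective over the n training samples:

\[ \mathrm{Obj}^{(t)}=\sum_{i=1}^{n} l\left(y_i,\hat{y}_i^{(t-1)}+f_t(x_i)\right)+\Omega(f_t)+C \tag{3} \]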

where \( l\left({y}_i,{\hat{y}}_i^{\left(t-1\right)}+{f}_t\left({x}_i\right)\right) \) is the loss function, y_i is the target value, \( {\hat{y}}_i^{\left(t-1\right)} \) is the prediction of the first t − 1 trees for sample i, f_t(x_i) is the output of tree t, Ω(f_t) is the regularization term, and C is a constant.

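Approximating the loss function with a second-order Taylor expansion gives

\[ \mathrm{Obj}^{(t)}\simeq\sum_{i=1}^{n}\left[l\left(y_i,\hat{y}_i^{(t-1)}\right)+\partial_{\hat{y}_i^{(t-1)}} l\left(y_i,\hat{y}_i^{(t-1)}\right) f_t(x_i)+\frac{1}{2}\partial^2_{\hat{y}_i^{(t-1)}} l\left(y_i,\hat{y}_i^{(t-1)}\right) f_t^2(x_i)\right]+\Omega(f_t)+C \]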

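Define the first- and second-order gradients g_i and h_i as

\[ g_i=\partial_{\hat{y}_i^{(t-1)}} l\left(y_i,\hat{y}_i^{(t-1)}\right),\qquad h_i=\partial^2_{\hat{y}_i^{(t-1)}} l\left(y_i,\hat{y}_i^{(t-1)}\right) \]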

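Removing the terms that do not depend on f_t, Eq. (3) can then be rewritten as

\[ \widetilde{\mathrm{Obj}}^{(t)}=\sum_{i=1}^{n}\left[g_i f_t(x_i)+\frac{1}{2}h_i f_t^2(x_i)\right]+\Omega(f_t) \]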

In the XGBoost algorithm, once the prediction of the first t − 1 trees is obtained, tree t is added to fit the remaining difference between y_i and \( {\hat{y}}_i^{\left(t-1\right)} \). The final prediction is therefore the sum of the outputs of all trees at the end of model construction. In practice, the maximum number of trees and the maximum depth of each tree are set as hyper-parameters, which stop tree growth once the model complexity reaches these preset limits and thus help prevent overfitting.
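The additive training with the gradients g_i and h_i can be illustrated with a deliberately small sketch: second-order boosting of depth-1 trees (stumps) on a single feature under the squared loss l = ½(y − ŷ)², for which g_i = ŷ_i − y_i and h_i = 1. The optimal leaf weight −G/(H + λ) and the split gain follow Chen and Guestrin (2016); the function names, the L2 penalty λ (`lam`), and the learning rate `eta` are illustrative choices, and this is not the `xgboost` library's implementation. In the `xgboost` Python package, the maximum number of trees and maximum tree depth mentioned above correspond to the `n_estimators` and `max_depth` parameters of `XGBRegressor`.

```python
import numpy as np

def fit_stump(x, g, h, lam=1.0):
    """Best single split of a 1-D feature by the second-order gain.

    Returns (threshold, w_left, w_right); threshold is None if no split exists.
    Gain is computed without the 1/2 factor and with gamma = 0 (illustrative).
    """
    order = np.argsort(x)
    xs, gs, hs = x[order], g[order], h[order]
    G, H = gs.sum(), hs.sum()
    w_all = -G / (H + lam)                      # optimal weight of an unsplit leaf
    best_gain, best = -np.inf, (None, w_all, w_all)
    GL = HL = 0.0
    for i in range(len(xs) - 1):
        GL += gs[i]
        HL += hs[i]
        if xs[i] == xs[i + 1]:
            continue                            # no threshold between equal values
        GR, HR = G - GL, H - HL
        gain = GL**2 / (HL + lam) + GR**2 / (HR + lam) - G**2 / (H + lam)
        if gain > best_gain:
            thr = 0.5 * (xs[i] + xs[i + 1])
            best_gain, best = gain, (thr, -GL / (HL + lam), -GR / (HR + lam))
    return best

def stump_predict(x, stump):
    thr, wl, wr = stump
    if thr is None:
        return np.full(len(x), wl)
    return np.where(x <= thr, wl, wr)

def boost(x, y, n_trees=50, eta=0.3, lam=1.0):
    """Additive training: tree t is fitted to the gradients of the first t - 1 trees."""
    x, y = np.asarray(x, float), np.asarray(y, float)
    pred = np.zeros_like(y)
    trees = []
    for _ in range(n_trees):
        g = pred - y                 # g_i for l = 0.5 * (y - yhat)^2
        h = np.ones_like(y)          # h_i for the same loss
        stump = fit_stump(x, g, h, lam)
        pred += eta * stump_predict(x, stump)   # shrunken update f_t
        trees.append(stump)
    return trees, pred

def boost_predict(x, trees, eta=0.3):
    # Final prediction = sum of the (shrunken) outputs of all trees.
    x = np.asarray(x, float)
    return eta * sum(stump_predict(x, s) for s in trees)
```

On a step signal (0 for x < 5, 10 otherwise), each stump splits at 4.5 and the residual shrinks by a constant factor per tree, so the fit converges geometrically.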

2.3 LSTM

The LSTM (Long Short-Term Memory) model was proposed by Hochreiter and Schmidhuber (1997) to effectively mitigate the vanishing and exploding gradient problems of earlier recurrent neural networks. Compared with the two models above, the LSTM model has some unique characteristics, but it requires more training time and is thus computationally costly. In addition, its performance depends strongly on the data size: for a relatively small dataset, its prediction accuracy may fall below expectations, whereas for a sufficiently large dataset, appreciable accuracy can be achieved. The LSTM model has been applied to assess the safety of industrial facilities, such as tailings ponds and heating and cooling equipment (Li et al. 2019; Wang et al. 2019). In civil engineering, it has been employed to predict bearing failure, the seismic response of nonlinear structures, and dam displacement (Gu et al. 2018; Zhang et al. 2019a, b; Liu et al. 2020), with relatively high prediction accuracy.

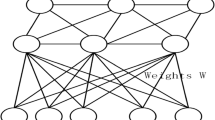

Figure 1 shows the structure of the LSTM model with three illustrative cells, where the internal structure of the middle cell at time t (cell t for short) is shown in detail. Here \( h_{t-1} \) is the information passed on from cell t − 1, \( h_t \) is the short-term memory (hidden state) output of cell t, \( x_t \) is the newly acquired input, and tanh is the activation function.

Each LSTM cell contains three key components: the forget gate, the input gate, and the output gate. The forget gate controls how much of the memory from cell t − 1 is retained at time t, the input gate determines how much information from x_t is written into cell t, and the output gate decides which information is passed to h_t. The output of the forget gate, f_t, can be expressed as

\[ f_t=\sigma\left(W_f\cdot\left[h_{t-1},x_t\right]+b_f\right) \]

where \( [h_{t-1}, x_t] \) denotes the concatenation of the previous output and the current input; W_f (and W_i, W_C, and W_o in the following equations) are weight matrices; b_f (and b_i, b_C, and b_o) are bias vectors; and σ is the sigmoid function.

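At the input gate, the model then computes the new information i_t and \( {\hat{C}}_t \) as

\[ i_t=\sigma\left(W_i\cdot\left[h_{t-1},x_t\right]+b_i\right),\qquad \hat{C}_t=\tanh\left(W_C\cdot\left[h_{t-1},x_t\right]+b_C\right) \]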

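Next, the memory passed through the forget gate and the new information from the input gate are combined into the cell state C_t:

\[ C_t=f_t\odot C_{t-1}+i_t\odot\hat{C}_t \]

where ⊙ denotes element-wise multiplication.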

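Finally, the output gate produces o_t and the cell output h_t:

\[ o_t=\sigma\left(W_o\cdot\left[h_{t-1},x_t\right]+b_o\right),\qquad h_t=o_t\odot\tanh\left(C_t\right) \]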
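The forget, input, and output gate computations described above can be traced through a single forward step of one LSTM cell in numpy. This is a sketch under stated assumptions: the weights are supplied by the caller in a dict keyed by the illustrative names "f", "i", "C", and "o", there is no batching, and no training is performed. Frameworks such as PyTorch (`torch.nn.LSTM`) and Keras (`keras.layers.LSTM`) implement the same gate structure with learned weights.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_cell_step(x_t, h_prev, c_prev, W, b):
    """One LSTM time step.

    W[k] has shape (hidden, hidden + n_input) and b[k] has shape (hidden,)
    for k in {"f", "i", "C", "o"} (key names are illustrative).
    """
    z = np.concatenate([h_prev, x_t])        # [h_{t-1}, x_t]
    f_t = sigmoid(W["f"] @ z + b["f"])       # forget gate
    i_t = sigmoid(W["i"] @ z + b["i"])       # input gate
    c_hat = np.tanh(W["C"] @ z + b["C"])     # candidate memory \hat{C}_t
    c_t = f_t * c_prev + i_t * c_hat         # cell state C_t (element-wise)
    o_t = sigmoid(W["o"] @ z + b["o"])       # output gate
    h_t = o_t * np.tanh(c_t)                 # output / hidden state h_t
    return h_t, c_t

# Usage: run a random, untrained cell over a short scalar sequence.
rng = np.random.default_rng(0)
hidden, n_in = 4, 1
W = {k: 0.1 * rng.standard_normal((hidden, hidden + n_in)) for k in "fiCo"}
b = {k: np.zeros(hidden) for k in "fiCo"}
h, c = np.zeros(hidden), np.zeros(hidden)
for value in [0.2, 0.5, -0.1]:
    h, c = lstm_cell_step(np.array([value]), h, c, W, b)
```

With all weights and biases set to zero, every gate outputs 0.5 and the candidate memory is 0, so the cell simply halves the previous cell state, which is a quick sanity check of the wiring.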

3 Demonstration cases

Common dynamic loads and structural responses in coastal bridge engineering can be roughly divided into three forms according to their amplitude and periodicity. In the first form, the data has a clear periodicity and its amplitude fluctuates within a certain range; for example, when the bridge girder is fully submerged under regular waves, the time histories of the wave forces on the deck largely follow this pattern. In the second form, the data fluctuates within a certain range, but its frequency distribution is relatively complex and shows no obvious periodicity; this pattern appears in the time-history displacements of the tower top and mid-span of long-span sea-crossing bridges under random waves and turbulent winds. In the third form, the data shows a trend, generally increasing or decreasing with time; for example, the wave height as a typhoon approaches follows this pattern.

In this section, the three machine learning techniques described above are applied to three demonstration cases with typical time-domain datasets, with the aim of providing guidance for the structural health monitoring of coastal bridges during their service life.

3.1 Wave-load-on-deck under regular waves

In the design of long-span ocean bridges, waves generally act on the pile foundations and thereby indirectly affect the time-history displacement of the main girder (superstructure). However, when hurricanes (or tropical cyclones) approach, the rising water level may partially or completely submerge the bridge girder. In this scenario, the waves act not only on the pile foundations but also directly on the superstructure, which can lead to much more severe damage.

As the damage to many low-lying bridges caused by Hurricanes Ivan (2004) and Katrina (2005) showed, huge waves and rising storm surge displaced the superstructures, in most instances simply supported spans, and/or knocked them off their bents (Okeil and Cai 2008; Padgett et al. 2008). Many subsequent studies revealed that the wave loads far exceeded the capacity of the connections between the superstructure and substructure (Douglass et al. 2006; Robertson et al. 2007; O'Connor and McAnany 2008; Robertson et al. 2011; Yuan et al. 2018; Huang et al. 2018; Xu et al. 2020).

Huang (2019) used a wave flume to experimentally investigate the time-history wave forces on a bridge superstructure under hurricane-induced regular waves. The experimental setup is shown schematically in Fig. 2: the wave flume is 68 m long, and regular waves are generated at the left boundary, 39 m from the bridge deck model. The wave-induced loads on the deck model are measured by a force transducer mounted directly above the deck model on a suspended rigid steel frame. Figure 3 shows a typical time history of the wave force in the transverse direction of the bridge, i.e., the horizontal wave load, when the superstructure is completely submerged. The transducer samples at 40 Hz, and a total of 516 equally spaced data points from this time history (about 12.9 s) are analyzed below.

Fig. 2 Flume arrangement for the experimental study by Huang (2019) (unit: m)

As shown in Fig. 3, the wave period is 2.5 s, and high-frequency components cause the wave-force pattern to differ from one period to the next. This motivates time series prediction of the wave forces, which could support timely monitoring of structural vibration and safety.

First, the autocorrelation function is used to confirm that the wave forces are autocorrelated in time; the result is shown in Fig. 4, where the abscissa is the lag (in time steps) and the ordinate is the autocorrelation coefficient. As Fig. 4 shows, the horizontal wave force on the superstructure is strongly autocorrelated. Note that the value of the structural force, displacement, or other response at time t can be regarded as its value at time t − 1 plus the change over the interval ∆t. The data in the following two demonstration cases also follow this pattern, so their autocorrelation results are omitted for brevity.

For training, the proportions of the training, validation, and prediction sets differ among the three models (XGBoost, LSTM, and ARIMA), as listed in Table 2. Note that the amount of training data required and the number of forecast steps for the ARIMA model differ from those of the other two models. In addition, the XGBoost and ARIMA models use no validation set.
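Because the series are time-ordered, the sets in Table 2 are naturally contiguous blocks rather than random samples. The minimal Python sketch below shows such a chronological split; the function name is hypothetical, the fractions in the usage example are placeholders rather than the values in Table 2, and setting `val_frac=0` reproduces the no-validation-set configuration used here for XGBoost and ARIMA.

```python
def chronological_split(series, train_frac, val_frac=0.0):
    """Split a time-ordered sequence into contiguous train/validation/prediction blocks.

    Earlier data always precedes later data, so no future values leak into training.
    """
    n = len(series)
    n_train = int(n * train_frac)
    n_val = int(n * val_frac)
    train = series[:n_train]
    val = series[n_train:n_train + n_val]
    pred = series[n_train + n_val:]
    return train, val, pred

# Placeholder fractions applied to a stand-in for the 516-point wave-force record.
data = list(range(516))
train, val, pred = chronological_split(data, train_frac=0.7, val_frac=0.1)
train2, val2, pred2 = chronological_split(data, train_frac=0.8)  # no validation set
```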

Figure 5 shows the overall prediction results together with the measured data of Huang (2019). The predictions of all three models agree well with the experimental data: both the overall trend and the peak values, i.e., the impulsive component of the wave forces, are predicted favorably in advance. The mean absolute error (MAE) and mean squared error (MSE) are used to evaluate the performance of the three models, and the results are listed in Table 3.
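For reference, MAE = (1/N)Σ|y_i − ŷ_i| and MSE = (1/N)Σ(y_i − ŷ_i)², where y_i are the observed values, ŷ_i the predictions, and N the number of predicted points. A minimal numpy sketch (function names are illustrative):

```python
import numpy as np

def mae(y_true, y_pred):
    """Mean absolute error: average of |y_i - yhat_i| (same units as the data)."""
    err = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    return float(np.mean(np.abs(err)))

def mse(y_true, y_pred):
    """Mean squared error: average of (y_i - yhat_i)^2 (squared units)."""
    err = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    return float(np.mean(err ** 2))

print(mae([1, 2, 3], [1, 3, 5]))  # 1.0
print(mse([1, 2, 3], [1, 3, 5]))  # 5/3, about 1.667
```

Because errors are squared, MSE penalizes large misses (such as missed force peaks) more heavily than MAE, so reporting both gives a fuller picture.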

Comparing the three models, the XGBoost model achieves higher prediction accuracy over multiple time steps when the autocorrelation coefficient remains above 0.5. The prediction accuracy of the LSTM model is relatively lower, probably because the LSTM model needs a validation set to support multiple rounds of training: when the original dataset is small, holding out a validation set shrinks the training set and lowers the accuracy. With a sufficiently large training dataset, the error would be reduced. The ARIMA model requires only a small amount of training data, and its accuracy on the prediction set is similar to that of the XGBoost model. However, the ARIMA model predicts only one step ahead, which leaves less response time after a prediction is obtained.
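The difference between one-step-ahead and multi-step-ahead prediction can be illustrated with a sliding-window formulation, a common way to feed a time series to regressors such as XGBoost. In the sketch below (an assumption, not necessarily the setup used in this study), each sample consists of the previous `window` values, and multi-step forecasts are produced recursively by feeding each prediction back in as an input. A least-squares linear model stands in for XGBoost or LSTM to keep the example self-contained; any regressor with a one-sample predict function could be substituted.

```python
import numpy as np

def make_windows(x, window):
    """Build (lag-window, next-value) training pairs from a 1-D series."""
    x = np.asarray(x, dtype=float)
    X = np.array([x[i:i + window] for i in range(len(x) - window)])
    y = x[window:]
    return X, y

def recursive_forecast(predict_one, history, window, steps):
    """Multi-step forecast: each prediction is appended and reused as an input."""
    buf = list(np.asarray(history, dtype=float)[-window:])
    out = []
    for _ in range(steps):
        nxt = float(predict_one(np.array(buf[-window:])))
        out.append(nxt)
        buf.append(nxt)
    return np.array(out)

# Stand-in model: ordinary least squares on the lag window.
t = np.arange(100)
series = np.sin(0.3 * t)
X, y = make_windows(series, window=2)
coef, *_ = np.linalg.lstsq(X, y, rcond=None)
forecast = recursive_forecast(lambda w: w @ coef, series, window=2, steps=20)
```

A pure sinusoid obeys an exact two-term linear recursion, so here the recursive forecast tracks the true continuation; on real data, errors accumulate over the horizon because predictions replace observations as inputs.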

3.2 Structural displacement under combined wind and wave loads

In the design of sea-crossing bridges, the influence of combined wind and wave loads becomes more pronounced as the bridge span increases and the natural environment becomes more complex. Fang et al. (2020) carried out a numerical analysis of a typical sea-crossing bridge under combined wind and waves; an elevation view of the prototype bridge is shown in Fig. 6. The analysis produced 250 s long displacement time histories at three key locations, the tower top, the mid-span, and the tower-girder joint, which are also marked in Fig. 6. Because the transient structural response takes some time to decay after the load is applied, the displacement time history from 50 s to 250 s is selected for the prediction analysis. The finite element results were saved every 0.025 s, so the displacement curve at each of the three locations contains 8000 data points (200 s / 0.025 s). The displacement time histories at the monitored locations are shown in Fig. 7.

The parameter setup of the three prediction models is similar to that listed in Table 2. The predicted structural displacements at the three locations are shown in Fig. 8, where "expected" denotes the target time history from the finite element analysis.

The vibration responses at the three locations show that the mid-span response is dominated by the symmetric lateral vibration mode, so the predictions of all three methods agree favorably with the simulated displacement there. At the other two locations, however, the displacement consists of multiple vibration modes, so the autocorrelation in time is weaker than at mid-span, which makes prediction more difficult. Both the XGBoost and LSTM models fail in places: the predictions miss extreme values and occasionally move in the opposite direction to the true trend, giving unfavorable results. The ARIMA model predicts the future data much better, but its prediction horizon is still limited. Therefore, the displacement time histories at the joint and the tower top should be preprocessed before model training.

In the frequency domain, the displacement response has large amplitudes at a few frequencies, as shown in Fig. 9.

The optimized prediction procedure consists of four steps. First, for the vibration signals at the joint and tower top, the five most prominent vibration frequencies, i.e., those with the largest amplitudes, were identified using the scipy and numpy modules of Python. Second, an FFT (Fast Fourier Transform) filter was applied to separate the signal components at these frequencies from the raw data; the extracted signals have stable frequencies and strong autocorrelation in time, which makes favorable predictions more likely. Third, the XGBoost and LSTM models were applied to predict each of the five extracted signals. Finally, the remaining lower-amplitude signals were summed and predicted together as one residual signal. With this preprocessing, the XGBoost and LSTM models perform well in predicting the displacement time histories at the joint and tower top and attain higher prediction accuracy, as shown in Fig. 10.
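The first two steps of this procedure can be sketched with numpy's FFT routines. This is an illustrative assumption rather than the exact filter used in this study: each of the n strongest frequencies is isolated by keeping a single `rfft` bin (an idealized band-pass filter), the remainder is treated as one residual signal, and the hypothetical function `split_dominant_components` returns the component frequencies in Hz. For measured data, whose spectral peaks spread over neighboring bins, a narrow band around each peak would normally be kept instead.

```python
import numpy as np

def split_dominant_components(x, dt, n_components=5):
    """Split x into its n largest-amplitude Fourier components plus a residual.

    Each component keeps a single FFT bin (an idealized filter), so
    sum(components) + residual reconstructs x exactly.
    """
    x = np.asarray(x, dtype=float)
    spec = np.fft.rfft(x)
    amp = np.abs(spec)
    amp[0] = 0.0                                  # ignore the mean (DC) term
    top = np.argsort(amp)[::-1][:n_components]    # bins with the largest amplitudes
    freqs = np.fft.rfftfreq(len(x), d=dt)[top]
    components = []
    for k in top:
        masked = np.zeros_like(spec)
        masked[k] = spec[k]                       # keep only bin k
        components.append(np.fft.irfft(masked, n=len(x)))
    residual = x - np.sum(components, axis=0)     # lower-amplitude remainder
    return freqs, components, residual

# Usage on a synthetic signal sampled every 0.025 s, as in the FE output above.
dt = 0.025
t = np.arange(400) * dt
x = (3 * np.sin(2 * np.pi * 0.5 * t) + np.sin(2 * np.pi * 2.0 * t)
     + 0.2 * np.sin(2 * np.pi * 5.0 * t))
freqs, comps, resid = split_dominant_components(x, dt, n_components=2)
# Each entry of comps (and resid) would then be forecast separately
# and the individual forecasts summed to give the final prediction.
```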

The MAE and MSE values obtained with the raw and preprocessed data are listed in Table 4. The prediction errors of both models decrease markedly after preprocessing, which suggests that the machine learning models can learn patterns more effectively from the preprocessed data.

In summary, with appropriate data preprocessing, the XGBoost and LSTM models can yield desirable predictions of time-history structural displacement with complex frequency content, and their prediction horizon is longer than that of the ARIMA model. Both models are therefore promising for prediction tasks on datasets without obvious periodicity.

3.3 Wave height variation along with typhoon/hurricane approaching

Coastal bridges are frequently exposed to typhoons or hurricanes during their service life. As a typhoon approaches, the wave height rises continuously, accompanied by storm surge, and the bridge structure may be damaged by huge waves. However, because a typhoon passes in a relatively short time compared with the previous two cases, the amount of data that can be collected is limited. Furthermore, training data for the model cannot be collected before the typhoon arrives. With insufficient samples, the prediction accuracy of the model would be significantly affected. To solve these two issues, an improved LSTM prediction framework based on rolling forecasting is proposed, as shown in Fig. 11. The framework first gathers a small dataset and establishes an initial model with k-fold cross-validation. Although k-fold cross-validation sometimes trains on later data and validates on earlier data, reversing the time order, it can still yield appreciable accuracy, presumably because the dataset has inherent characteristics that the model can exploit. The model is then updated gradually at subsequent time steps by appending new observations. Once the accuracy of the predicted values meets the criterion, the model can be applied to future prediction.
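The k-fold step on the small initial dataset can be sketched as follows. The sketch assumes contiguous, unshuffled folds (an assumption; the fold layout is not specified above), which makes explicit that most folds train on samples lying after the validation block. The function name is hypothetical; scikit-learn's `KFold(n_splits=k, shuffle=False)` produces the same fold layout.

```python
def contiguous_kfold(n_samples, k):
    """Yield (train_idx, val_idx) for k contiguous, unshuffled folds.

    Every sample is used for validation exactly once; for all folds but the
    last, the training indices include samples that come after the validation block.
    """
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)   # first `extra` folds get one more
        val_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, val_idx
        start += size

# Example: 10 samples in 3 folds -> validation blocks of 4, 3 and 3 samples.
folds = list(contiguous_kfold(10, 3))
```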

Because specific observation data for typhoons are lacking, a public dataset with a clear trend is adopted here for demonstration. The dataset in the time and frequency domains is shown in Fig. 12.

To train the LSTM model, a small sample of 40 steps (data points) is first used to build the initial model. As time moves on, the dataset is augmented with real-time monitoring data, and the model is updated until the prediction accuracy meets the set criterion. As the frequency-domain analysis in Fig. 12(b) shows, the amplitude peaks at four frequencies, 0.08, 0.169, 0.25, and 0.33, which correspond to periods (1/f) of roughly 12, 6, 4, and 3 data points and hence to candidate batch sizes. The choice of batch size matters: if the batch is too large, each model update takes longer, which reduces the immediacy of prediction; if it is too small, each update carries too little information, so too many updates are needed before the criterion is met. Therefore, a batch size of four is chosen for model updating in this study.
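The update loop of the framework can be sketched as follows. To keep the example self-contained, a least-squares autoregressive model of hypothetical order `p` stands in for the LSTM, and the stopping rule (mean relative error of one batch below 5%) is one possible reading of the criterion described above; the initial size of 40 points and the batch size of four follow the text. The function names are hypothetical, and the relative error assumes strictly positive observations such as wave heights.

```python
import numpy as np

def fit_ar(history, p):
    """Least-squares AR(p) fit (no intercept); stand-in for retraining the LSTM."""
    x = np.asarray(history, dtype=float)
    X = np.array([x[i:i + p] for i in range(len(x) - p)])   # oldest value first
    coef, *_ = np.linalg.lstsq(X, x[p:], rcond=None)
    return coef

def rolling_update(stream, n_init=40, batch=4, p=3, tol=0.05):
    """Fit on the first n_init points, then repeatedly forecast the next batch,
    compare with the arriving observations, append them and refit.

    Returns (model, n_updates, last_error); model is None if tol is never met.
    """
    stream = np.asarray(stream, dtype=float)
    history = list(stream[:n_init])
    pos, n_updates, err = n_init, 0, np.inf
    while pos + batch <= len(stream):
        coef = fit_ar(history, p)
        new_obs = stream[pos:pos + batch]
        buf, preds = list(history), []
        for obs in new_obs:                     # one-step-ahead within the batch
            preds.append(float(np.dot(coef, buf[-p:])))
            buf.append(obs)
        err = float(np.mean(np.abs(np.array(preds) - new_obs) / np.abs(new_obs)))
        history.extend(new_obs)                 # append the new observations
        pos += batch
        n_updates += 1
        if err < tol:                           # accuracy criterion met
            return fit_ar(history, p), n_updates, err
    return None, n_updates, err

# Usage on a synthetic, steadily rising series.
trend = 100.0 + 0.5 * np.arange(80)
model, n_updates, err = rolling_update(trend)
```

Returning `None` when the criterion is never met mirrors the framework's rule that the model is used for prediction only after its accuracy has been verified on incoming data.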

Figure 13 shows the predictions of the three models. During updating of the LSTM model, the prediction error falls within 5% after 5 epochs, and the MAE of the LSTM model on the prediction set is 13.08 cm, indicating a favorable fit. At this point, the trained model can be used to predict subsequent wave heights.

As Fig. 13 shows, the XGBoost and ARIMA models can only roughly predict the trend of the data series. Because these two models can be fitted on very little data, prediction can begin as soon as the first group of data is collected, and the model is then updated at every following time step. However, their forecast accuracy is far lower than that of the LSTM model, with an obvious time lag. The MAE and MSE values of the three models in Table 5 likewise show that the LSTM model is much more accurate than the other two. The poor predictions of the XGBoost and ARIMA models may be related to the difficulty of controlling model complexity during training, so that overfitting in small-sample learning is likely.

4 Concluding remarks

In this study, three machine learning techniques, i.e., XGBoost (Extreme Gradient Boosting), ARIMA (Autoregressive Integrated Moving Average Model) and LSTM (Long Short-Term Memory Networks), were applied in three demonstration cases whose datasets are closely related to the safety and resilience of coastal bridges during their service life. To enhance the prediction accuracy, a typical data preprocessing method was adopted, and an improved prediction framework for the LSTM model after the rolling forecast prediction was proposed. Based on the comparative results in the demonstration cases, the following conclusions can be drawn:

1. For datasets with clear periodicity, all three models perform rather favorably in time series prediction. Both the XGBoost and LSTM models can predict multiple steps ahead; however, the XGBoost model achieves relatively higher accuracy on a small training dataset, whereas the LSTM model cannot yet reach high precision because of the way the dataset is partitioned. Therefore, a sufficiently large dataset is necessary when the LSTM model is used for time series prediction. The ARIMA model maintains high prediction accuracy, but it predicts only one step ahead.

2. For datasets whose values fluctuate within a certain range and have a complex frequency distribution, the ARIMA model achieves relatively higher prediction accuracy on the original dataset than the XGBoost and LSTM models. However, when a typical preprocessing method is adopted, in which the five most prominent wave bands (those with the largest amplitudes in the frequency domain) are extracted and predicted individually, the prediction accuracy of the XGBoost and LSTM models can be improved.

3. With the improved framework after the rolling forecast prediction, the LSTM model achieves high prediction accuracy while avoiding overfitting. The k-fold method and model updating overcome the lack of data points to some extent. In contrast, the predictions of the XGBoost and ARIMA models show low accuracy and a phase lag, likely because overfitting readily occurs in small-sample learning.
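The preprocessing step in conclusion 2, in which the most prominent components in the frequency domain are extracted and predicted individually, can be sketched as follows. This is a simplified illustration that assumes each "band" is a single DFT bin; the study's actual band widths are not specified here, and `band_components` is a hypothetical name. Because the mean, the extracted components and the residual sum back to the original series, separate forecasts of each part can be added to obtain a forecast of the full series.

```python
import cmath
import math
from typing import List, Sequence, Tuple


def dft(x: Sequence[float]) -> List[complex]:
    """Plain O(n^2) discrete Fourier transform (adequate for short series)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)) for k in range(n)]


def band_components(
    x: Sequence[float], k_top: int = 5
) -> Tuple[float, List[Tuple[float, List[float]]], List[float]]:
    """Split x into its mean, the k_top strongest sinusoids and a residual."""
    n = len(x)
    X = dft(x)
    mean = X[0].real / n
    # Rank positive-frequency bins below Nyquist by spectral magnitude
    ranked = sorted(range(1, (n + 1) // 2), key=lambda k: abs(X[k]), reverse=True)
    components = []
    for k in ranked[:k_top]:
        # Bin k plus its mirror n-k gives a real sinusoid: (2/n) Re(X[k] exp(2*pi*i*k*t/n))
        wave = [2 * (X[k] * cmath.exp(2j * math.pi * k * t / n)).real / n for t in range(n)]
        components.append((k / n, wave))
    residual = [x[t] - mean - sum(w[t] for _, w in components) for t in range(n)]
    return mean, components, residual


series = [3 + math.sin(2 * math.pi * t / 8) + 0.5 * math.cos(2 * math.pi * t / 4) for t in range(32)]
mean, parts, rest = band_components(series, k_top=2)
print(round(mean, 6), [f for f, _ in parts], max(abs(r) for r in rest) < 1e-9)  # → 3.0 [0.125, 0.25] True
```

Each extracted component is a smooth, nearly periodic signal, which is typically easier for a learner such as XGBoost or LSTM to extrapolate than the raw series with its mixed frequency content.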

Data availability largely limits model training. In this study, the models were trained on datasets available in the literature. Given a larger dataset, the model performance, especially that of the rolling forecast models, deserves more extensive analysis. The overfitting problem may then be resolved, but the efficiency of model training will require attention.

Availability of data and materials

Some or all data, models, and code used during the study are available from the corresponding author upon request.

Abbreviations

- ACF: Autocorrelation Function
- ANN: Artificial Neural Network
- AR: Autoregressive
- ARIMA: Autoregressive Integrated Moving Average Model
- ARMA: Autoregressive Moving Average Model
- BP: Back Propagation
- DBN: Deep Belief Network
- FFT: Fast Fourier Transform
- GARCH: Generalized Autoregressive Conditional Heteroskedasticity
- GBDT: Gradient Boosting Decision Tree
- GRU: Gated Recurrent Unit
- LS: Least-Squares
- LSTM: Long Short-Term Memory Networks
- MA: Moving Average
- MAE: Mean Absolute Error
- MSE: Mean Squared Error
- PACF: Partial Autocorrelation Function
- RLS: Robust Least-Squares
- RWTLS: Robust Weighted Total Least-Squares
- SHM: Structural Health Monitoring
- SVM: Support Vector Machine
- WTLS: Weighted Total Least-Squares
- XGBoost: Extreme Gradient Boosting

References

Bradner C (2008) Large-scale laboratory observations of wave forces on a highway bridge superstructure. Master’s thesis. Oregon State University, Corvallis, OR

Chen T, Guestrin C (2016) XGBoost: a scalable tree boosting system. In: Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining

Chen K, Chen H, Liu L, Chen S (2019) Prediction of weld bead geometry of MAG welding based on XGBoost algorithm. The International Journal of Advanced Manufacturing Technology 101(9–12): 2283–2295

Cuomo G, Shimosako KI, Takahashi S (2009) Wave-in-deck loads on coastal bridges and the role of air. Coast Eng 56(8):793–809

Douglass S, Chen Q, Olsen J (2006) Wave forces on bridge decks draft report. Coastal Transportation Engineering Research and Education Center, University of South Alabama

Fang C, Tang H, Li Y (2020) Stochastic response assessment of Cross-Sea bridges under correlated wind and waves via machine learning. J Bridg Eng 25(6):04020025

Gong X, Li Z (2018) Bridge pier settlement prediction in high-speed railway via autoregressive model based on robust weighted total least-squares. Surv Rev 50(359):147–154

Gu Y, Liu S, He L (2018) Research on failure prediction using DBN and LSTM neural network. In: 2018 57th Annual Conference of the Society of Instrument and Control Engineers of Japan

Guo A, Liu J, Chen W, Bai X, Liu G, Liu T, Li H (2016) Experimental study on the dynamic responses of a freestanding bridge tower subjected to coupled actions of wind and wave loads. J Wind Eng Ind Aerodyn 159:36–47

Gurnani M, Korke Y, Shah P, Udmale S, Sambhe V, Bhirud S (2017) Forecasting of sales by using fusion of machine learning techniques. In: 2017 international conference on data management, analytics and innovation

Hirata T, Kuremoto T, Obayashi M, Mabu S, Kobayashi K (2015) Time series prediction using DBN and ARIMA. In: 2015 International Conference on Computer Application Technologies

Hochreiter S, Schmidhuber J (1997) Long short-term memory. Neural Comput 9(8):1735–1780

Huang B (2019) Research on extreme wave force on coastal bridge based on space-time finite element method. Ph.D. thesis. Southwest Jiaotong University, Chengdu

Huang B, Zhu B, Cui S, Duan L, Cai Z (2018) Influence of current velocity on wave-current forces on coastal bridge decks with box girders. J Bridg Eng 23(12):04018092

Kaloop MR, Hussan M, Kim D (2019) Time-series analysis of GPS measurements for long-span bridge movements using wavelet and model prediction techniques. Adv Space Res 63(11):3505–3521

Lee J, Sanmugarasa K, Blumenstein M, Loo YC (2008) Improving the reliability of a bridge management system (BMS) using an ANN-based backward prediction model (BPM). Autom Constr 17(6):758–772

Li C, Liu Y, Yang J, Gao Z (2012) Prediction of flooding velocity in packed towers using least squares support vector machine. In: Proc. of the 10th world congress on intelligent control and automation, pp 3226–3231

Li J, Chen H, Zhou T, Li X (2019) Tailings pond risk prediction using long short-term memory networks. IEEE Access 7:182527–182537

Li J, Shen L, Tong Y (2009) Prediction of network flow based on wavelet analysis and ARIMA model. In: 2009 international conference on wireless networks and information systems

Liu W, Pan J, Ren Y, Wu Z, Wang J (2020) Coupling prediction model for long-term displacements of arch dams based on long short-term memory network. Struct Control Health Monit 27(7):e2548

Liu Y, Lu D, Fan X (2014) Reliability updating and prediction of bridge structures based on proof loads and monitored data. Constr Build Mater 66:795–804

Lu W, Rui Y, Yi Z, Ran B, Gu Y (2020) A hybrid model for lane-level traffic flow forecasting based on complete ensemble empirical mode decomposition and extreme gradient boosting

McPherson RL (2010) Hurricane induced wave and surge forces on bridge decks. Ph.D. thesis. Texas A&M University, Texas

Meng S, Ding Y, Zhu H (2018) Stochastic response of a coastal cable-stayed bridge subjected to correlated wind and waves. J Bridg Eng 23(12):04018091

O'Connor J, McAnany PE (2008) Damage to bridges from wind, storm surge and debris in the wake of hurricane Katrina (no. MCEER-08-SP05)

Okeil A, Cai CS (2008) Survey of short- and medium-span bridge damage induced by hurricane Katrina. J Bridg Eng 13(4):377–387

Ordóñez C, Lasheras F, Roca-Pardiñas J, de Cos Juez FJ (2019) A hybrid ARIMA–SVM model for the study of the remaining useful life of aircraft engines. J Comput Appl Math 346:184–191

Padgett J, DesRoches R, Nielson B, Yashinsky M, Kwon O, Burdette N, Tavera E (2008) Bridge damage and repair costs from hurricane Katrina. J Bridg Eng 13(1):6–14

Robertson I, Yim S, Riggs H, Young Y (2007) Coastal bridge performance during hurricane Katrina. In: Third International Conference on Structural Engineering

Robertson I, Yim S, Tran T (2011) Case study of concrete bridge subjected to hurricane storm surge and wave action. In: Solutions to Coastal Disasters, p 2011

Sanayha M, Vateekul P (2017) Fault detection for circulating water pump using time series forecasting and outlier detection. In: 2017 9th international conference on knowledge and smart technology

Sheppard DM, Marin J (2009) Wave loading on bridge decks: final report

Shi X, Zhao B, Yao Y, Wang F (2019) Prediction methods for routine maintenance costs of a reinforced concrete beam bridge based on panel data. Advances in Civil Engineering, p 2019

Sun L, Hao X (2011) Analysis of bridge deflection based on time series. In: Applied Mechanics and Materials, Trans Tech Publications Ltd 71, pp 4545–4548

Tang H, Tang G, Meng L (2015) Prediction of the bridge monitoring data based on support vector machine. In: 2015 11th international conference on natural computation

Ti Z, Wei K, Qin S, Li Y, Mei D (2018) Numerical simulation of wave conditions in nearshore island area for sea-crossing bridge using spectral wave model. Adv Struct Eng 21(5):756–768

Wang Y, Guo Y (2020) Forecasting method of stock market volatility in time series data based on mixed model of ARIMA and XGBoost. China Communications 17(3):205–221

Wang Y, Yang C, Shen W (2019) A deep learning approach for heating and cooling equipment monitoring. In: 2019 IEEE 15th international conference on automation science and engineering

Wei C, Zhou D, Ou J (2017) Experimental study of the hydrodynamic responses of a bridge tower to waves and wave currents. J Waterw Port Coast Ocean Eng 143(3):04017002

Xin J, Zhou J, Yang S, Li X, Wang Y (2018) Bridge structure deformation prediction based on GNSS data using Kalman-ARIMA-GARCH model. Sensors 18(1):298

Xu G, Chen Q, Zhu L, Chakrabarti A (2018) Characteristics of the wave loads on coastal low-lying twin-deck bridges. J Perform Constr Facil 32(1):04017132

Xu G, Kareem A, Shen L (2020) Surrogate modeling with sequential updating: applications to bridge deck-wave and bridge deck-wind interactions. J Comput Civ Eng 34(4):04020023

Yang JX, Zhou JT (2011) Prediction of chaotic time series of bridge monitoring system based on multi-step recursive BP neural network. Adv Mater Res 159:138–143

Yi L (2015) Nonlinear time series prediction based on the dynamic characteristics clustering neural network. In: 2015 sixth international conference on intelligent systems design and engineering applications, pp 522–525

Yuan P, Xu G, Chen Q, Cai CS (2018) Framework of practical performance evaluation and concept of Interface Design for Bridge Deck-Wave Interaction. J Bridg Eng 23(7):04018048

Zhang M, Yu J, Zhang J, Wu L, Li Y (2019a) Study on the wind-field characteristics over a bridge site due to the shielding effects of mountains in a deep gorge via numerical simulation. Adv Struct Eng 22(14):3055–3065

Zhang R, Chen Z, Chen S, Zheng J, Büyüköztürk O, Sun H (2019b) Deep long short-term memory networks for nonlinear structural seismic response prediction. Comput Struct 220:55–68

Zheng H, Wu Y (2019) An XGBoost model with weather similarity analysis and feature engineering for short-term wind power forecasting. Appl Sci 9(15):3019

Zhu J, Zhang W (2017) Numerical simulation of wind and wave fields for coastal slender bridges. J Bridg Eng 22(3):04016125

Acknowledgements

The authors would like to thank Dr. Huang Bo and Dr. Fang Chen for providing original data for the demonstration cases.

Funding

The financial support from NSFC (Grant No. 52078425) is highly appreciated. All the opinions presented here are those of the writers, not necessarily representing those of the sponsors.

Author information

Contributions

Conceptualization, GX; Formal analysis, EY and HW; Investigation, EY; Supervision, GX, YH and PH; Writing—original draft, EY; Writing—review & editing, GX. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Competing interests

The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yu, E., Wei, H., Han, Y. et al. Application of time series prediction techniques for coastal bridge engineering. ABEN 2, 6 (2021). https://doi.org/10.1186/s43251-020-00025-4
