Abstract

In the era of Big Data, with the increasing use of large-scale data-driven applications, visualizing very large high-resolution images and extracting useful information from them (searching for specific targets or rare signal events) can pose challenges to current display wall technologies. At Bellarmine University, we have set up an Advanced Visualization and Computational Lab using a state-of-the-art next generation display wall technology, called Hiperwall (Highly Interactive Parallelized Display Wall). The 16 ft \(\times\) 4.5 ft Hiperwall visualization system consists of eight display-tiles arranged in a \(4\times 2\) tile configuration and has a total resolution of 16.5 Megapixels (MP). Using Hiperwall, we can perform interactive visual data analytics of large images by conducting comparative views of multiple large images in Astronomy and multiple event displays in experimental High Energy Physics. Users can display a single large image across all the display-tiles, or view many different images simultaneously on multiple display-tiles. Hiperwall enables simultaneous visualization of multiple high resolution images and their contents on the entire display wall without loss of clarity and resolution. Hiperwall’s middleware also allows researchers in geographically diverse locations to collaborate on large scientific experiments. In this paper, we describe a new generation of display wall setup at Bellarmine University that is based on the Hiperwall technology, which is a robust visualization system for Big Data research.


Introduction

One of the greatest scientific challenges of the 21st century is to understand the plethora of information from the various Big Data sources. Therefore, finding new information and patterns in Big Data is the current imperative [1]. Large-scale data driven applications are on the rise, and so is the need to extract useful information from terabyte and petabyte scale data sets (Big Data). Additionally, the amount of data from large scientific instruments as well as the resolution of large images are also increasing which creates visualization challenges that require a larger viewing area to capture the details [2]. So, visualizing multidimensional, time-varying data sets is both a challenge to the computational infrastructure and to the current display wall technologies.

Large tiled-display walls are enabling a new era in large-scale visualization and scientific explorations, for the various research and scientific communities. These large display walls are very useful in order to interpret and visualize the enormous amount of experimental data that is being collected by large science experiments. These tiled-display walls allow users and researchers to explore features that are either very hard or practically impossible to identify using a conventional PC monitor.

This paper focuses on a novel visualization and display wall technology for displaying large high resolution images from very large data sets. A single computer monitor can constrain the way in which we can analyze large volumes of data. We can view either a low-resolution image that represents the entire extent of the data and thus risk missing the smaller details, or we can view small portions of the data in high resolution while losing the full context. In order to extract new information from Big Data, we need advanced visualization and new data analytics tools for Big Data environments [3]. In general, scientific data can be meaningless if it cannot be represented in a meaningful way, not only to the scientific community, but also to the general public. With Hiperwall, we can see both the broad view of the data sets and the details concurrently which enables the shared view of complex results. With our Hiperwall visualization system, we can display very large high-resolution images, conduct interactive analysis of large data sets, view multiple images or parameters of large data sets, and conduct a comparative view of many data sets at once and stream HD videos from remote collaborators (e.g. from CERN and LSST) [4, 5].

Methods

Visualization methods

Data visualization refers to the techniques that help the user transform data in the form of visual objects contained in graphics. Visualization is defined as a mental image or visual representation of an object or scene or person or abstraction that is similar to visual perception [6]. Visualization of the data can be a challenge to the user’s perception and capabilities [7]. Other definitions of visualization most commonly used in the literature are “the use of computer-supported, interactive, visual representation of data to amplify cognition”, where cognition is the acquisition or use of knowledge [8].

Over the past decades, data visualization has evolved and it has been categorized differently by different authors. This section presents a summary of the information visualization techniques and some of the data visualization categories used in this analysis. More categories can be found in the periodic table of visualization, which is an interactive chart displaying various data visualization methods [9]. Information visualization is an interactive interface of data to increase cognition or perception ability. It utilizes graphics to assist people in comprehending and interpreting the data [7, 10]. Data visualization represents quantitative data with or without axes in schematic or diagrammatic form such as a table, line chart, pie chart, histogram, etc. These methods become more important when the data is massive and needs to be analyzed and represented in a meaningful form that is easy to understand and interpret [10]. A recent research study in the white paper by the SAS Institute showed that 98% of the most effective companies working with Big Data are presenting results of the analysis via visualization [11].

Visual Analytics combines both visualization and data analysis [12,13,14]. In data visualization, a variety of techniques are commonly used by data scientists. A table is the simplest and the easiest data representation technique. The information is structured in rows and columns. Rows represent variables and columns represent records with a set of values [15]. The table plays an essential role in research and data analysis [10]. Next is the pie chart, which is a circle divided into a number of slices, each of which describes a numerical proportion. Comparing sections across different pie charts can be difficult. The next category is 2D/3D representations. This category includes various types of graphs and bar charts [16], histograms, and line charts. Bar charts represent a single data series, and related data points are grouped in one series [10]. Histograms, which are commonly used in statistics, represent the distribution of numerical data. Line charts represent information as points or “markers” connected by straight line segments. Line charts are often used to visualize a trend in data over time intervals [10]. These techniques, however, have limitations when complex data structures need to be visualized. Next, there are geometric transformation techniques, which include the scatter plot and the parallel coordinate plot. A scatter plot is a graphical display that uses Cartesian coordinates to show the relationship between two variables for a set of data. Typically, one of the variables is plotted on the horizontal axis, and the second variable on the vertical axis. These plots are useful to determine trends in data and to identify outliers easily [10, 17]. The parallel coordinate plot helps the user to visualize multi-dimensional data [18]. Next, we have hierarchical image plots, which are a good method to visualize hierarchically structured data [19]. Finally, we have pixel-oriented methods [20], whose main purpose is to display a single value per pixel. In our case, this is an excellent technique to visualize large data sets using big display walls such as the Hiperwall (see "Visual and data analytics with Hiperwall").
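As a minimal illustration of the pixel-oriented idea (one data value per pixel), the sketch below maps a flat list of values onto a grid of 8-bit gray levels. The function name `values_to_pixels`, the row-major layout and the linear gray-scale mapping are assumptions chosen for illustration; they are not taken from [20] or from the Hiperwall software.

```python
def values_to_pixels(values, width):
    """Map each data value to one gray-level pixel (0-255), filling rows left to right."""
    if not values:
        return []
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1  # avoid division by zero when all values are equal
    levels = [round(255 * (v - lo) / span) for v in values]
    # Split the flat list of gray levels into rows of `width` pixels.
    return [levels[i:i + width] for i in range(0, len(levels), width)]


grid = values_to_pixels([0, 2, 4, 6, 8, 10], width=3)
for row in grid:
    print(row)
```

With one value per pixel, the number of values that can be shown at once is bounded by the pixel count of the display, which is why a large tiled wall suits this technique.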

Information Visualization focuses on the use of visualization methods to help the user understand the data. Information visualization is the transmission of abstract data through the use of interactive visual interfaces [21]. Olshannikova et al. [7] present a detailed list of visualization techniques such as tree map, tag cloud, clustering, motion charts and dashboard; the tag cloud, for example, is used in text analysis to depict keyword metadata, typically on websites.

The third area for data visualization is interactivity techniques. In these techniques, the user interacts directly with the data through mechanisms that make it possible to manipulate the visualization effectively. When the user needs to reveal more details hidden in the data, the zooming technique is a great choice, and when users need “zoom-in” capabilities, a big display wall such as the Hiperwall is an excellent visualization tool for this technique (see "Visual and data analytics with Hiperwall"). Another technique is overview + detail, which uses multiple views at the same time. This technique works for 2D and 3D views. Other methods, such as zoom + pan, focus + context or fish eye, offer different interaction techniques to users [10].

Visual and data analytics with Hiperwall

Large display walls have traditionally been the domain of air-traffic control centers, NASA command centers, and a few big budget corporations [22]. Nowadays, large high-resolution display walls that show large quantities of information at a single glance can be seen everywhere—in shopping malls, airports, athletic arenas, museums, large corporations, and at TV/News stations and broadcasting studios. The uses of display walls are indeed very broad. In the commercial arena, digital signage is by far the most common usage of display walls. In recent years, a number of universities have also installed these display walls in sports arenas and VIP lounges to help attract students and sports fans to their institution to create a better fan experience and for advertising from sponsors. In academia, in the past few years, a number of universities have installed display walls in classrooms, libraries and lobbies. A few universities have installed display walls mostly for conducting interactive data visualization and analysis in a collaborative research environment.

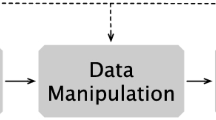

Our tiled-display wall setup, known as Hiperwall (Highly Interactive Parallelized Display Wall), was inspired by an earlier tiled-display wall design architecture, called the OptIPortal, that was developed almost 10 years ago [23]. The OptIPortal design used regular LCD PC monitors that were driven by PCs (one attached to the back of each LCD monitor), with optimized graphics processors and network interface cards. Our Hiperwall display was built using commercial-brand 55″ Samsung LED (backlit) HDTVs (instead of the LCD PC monitors used in the OptIPortal design) and is driven by separate rack-mount PCs, one PC for each Hiperwall display tile (instead of a PC attached to the back of each display-tile). Hiperwall Inc. is a spinoff company that was created from the NSF funded OptIPuter project at UC Irvine’s California Institute for Telecommunications and Information Technology (Calit2) [24, 25]. The Hiperwall system was designed to be a collaborative visualization platform capable of displaying information in real time. The Hiperwall software runs as an application operating on standard PCs connected via a standard 1 Gbps network switch (see Fig. 1 for terminology/topology overview of the Hiperwall visualization system).

Hiperwall terminology/topology overview [119]

Hiperwall is a high performance, high resolution visualization system. Hiperwall’s visualization system software architecture applies parallel processing techniques to overcome the performance limitations typically associated with the display of large multiple images on multiple display devices (flat-panel tiles) simultaneously. Hiperwall is a collaborative visualization platform that is designed to visualize enormous data sets and allows viewers to see the details, while retaining the context of the surrounding data. This allows a group of scientists/researchers/students to collaborate and share detailed information. The Hiperwall system allows the user to display a wide variety of high-resolution 2D and 3D images, animations, movies, and time-varying data in real time all at once on multiple display-tiles that can be configured according to the needs of the user. Users can project a single image across the entire display area or many different images simultaneously. Hiperwall’s middleware even allows researchers in geographically diverse locations to collaborate on Big Science Experiments that generate Big Data.

Members of this research team from Bellarmine University (BU) have recently joined the Large Synoptic Survey Telescope (LSST) project and are part of LSST’s Dark Energy Science Collaboration (DESC). Equipped with a 3.2 Gigapixel camera (the world’s largest digital camera), the LSST project aims to conduct a 10-year survey of 37 billion stars and galaxies. The survey will deliver large volumes of images and data sets (astronomical catalogs) that are thousands of times larger than those previously compiled, to address some of the most pressing questions about the structure and evolution of the universe, such as understanding the mysterious Dark Energy that is driving the acceleration of the cosmic expansion.

Starting from 2022, LSST will produce 15 Terabytes of raw data images per night, that’s about 200 Petabytes of imaging data over the 10 years of operation, which will be the largest data set in astronomy/astrophysics and cosmology [29, 30]. BU’s Hiperwall visualization system will be used for some of the LSST data analysis tasks to study and visualize some of these large LSST images in order to extract useful and specific information from these large high resolution images. Table 1 shows the current single display technology resolutions which are much smaller than most current astronomical cameras’ full resolution. Figure 2 shows a portion of a high resolution image of a supernova remnant displayed on the Hiperwall Display Wall.

A high resolution picture of a Supernova remnant [34] displayed on the Hiperwall Display Wall

In the field of experimental High Energy Physics (HEP), the four big HEP experiments (ATLAS, CMS, LHCb, and ALICE) at the Large Hadron Collider (LHC) at CERN in Switzerland are producing colossal volumes of data (Big Data). As of June 2018, these HEP experiments at the LHC have recorded over 250 Petabytes of raw data that are stored permanently at the CERN Data Center (DC) [31].

When proton beams collide head-on at high energies inside the LHC detectors, new subatomic particles are created that decay in complicated ways as they travel through layers of the detector. The collision energy is converted into mass that creates these short-lived subatomic particles. Currently, protons collide head-on inside the LHC detectors at an unprecedented rate of 1 billion times per second [31]. Most (99%) of the raw data events are filtered out by the LHC experiments, and the “interesting” data events are stored for further analysis. The filtered LHC data are stored at the CERN Data Center, where the initial data reconstruction is performed [31]. Even after this data reduction, the CERN Data Center (Tier0 Grid site) processes on average about one petabyte of data per day, the equivalent of around 210,000 DVDs [32]. As of June 2018, even after filtering out 99% of the raw data, around 50 Petabytes (50 PB) of data have been stored [33] for data analysis. That is 50,000 Terabytes of data, equivalent to nearly 15 million HD movies [33]. This 50 PB of stored data can be retrieved and accessed by thousands of LHC physicists worldwide for analysis via the Worldwide LHC Computing Grid (WLCG) and the Open Science Grid (OSG) cyberinfrastructure that is based on tiered grid computing technology. On any given day, on average, more than two million grid jobs run on WLCG and OSG for the LHC experiments. The subatomic particles that decay within the detector leave tracks or signatures of their presence, which are registered by converting the particles’ paths and energies into electrical signals to create a digital snapshot of the “collision event”.
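The storage equivalences quoted above can be checked with simple arithmetic. The sketch below assumes decimal units (1 PB = 1000 TB = 1,000,000 GB); the implied per-movie and per-DVD sizes are derived from the quoted figures and are not stated in the cited sources.

```python
GB_PER_TB = 1000
TB_PER_PB = 1000  # decimal units assumed

stored_pb = 50  # data kept after filtering, as of June 2018
stored_tb = stored_pb * TB_PER_PB
print(stored_tb)  # terabytes stored

# Implied size of one "HD movie" if 50 PB equals about 15 million movies.
gb_per_movie = stored_tb * GB_PER_TB / 15_000_000
print(round(gb_per_movie, 2))

# Implied size of one DVD if 1 PB per day equals about 210,000 DVDs.
gb_per_dvd = 1 * TB_PER_PB * GB_PER_TB / 210_000
print(round(gb_per_dvd, 2))
```

The implied DVD size of just under 4.8 GB is close to the 4.7 GB capacity of a single-layer DVD, so the two quoted comparisons are at least internally plausible.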

The control node

The control node (labeled as DN CN in Fig. 3) is the brain of the Hiperwall system. The control node gives the user the ability to control what, where, how, and when the contents are displayed. The user interface shows a miniature view of the eight Hiperwall display-tiles. The interactive Hiperwall drag-and-drop simplicity gives the user the ability to place contents on the display-tiles.

Advanced Visualization and Computational Lab (AVCL) schematics. See Table 2 for description of components

The secondary control node

The secondary control node provides the user the ability to control the Hiperwall system (nodes) remotely from a distant location via a portable device, such as a tablet. The secondary control node is not shown in Fig. 3.

The display node

The 8 display node PCs in the rack (labeled as DN A1 through DN D2), as shown in Fig. 3.F.1, are connected to the Hiperwall display-tiles (one display node for each display tile). Each display node consists of a display device that is driven by a PC (mini-tower or rack-mount) running the Hiperwall software. The Hiperwall software runs as an application operating on a standard PC connected via a standard 1 Gbps network switch. Hiperwall’s tiled display system software architecture applies parallel processing techniques to overcome the performance limitations typically associated with the display of multiple large images on multiple display devices (flat-panel tiles) at once. The display node software receives content over a 1 Gbps network switch from a sender or a streamer node and projects it on the display wall tile via an HDMI cable. All display-node computers work in parallel, thus giving flexibility and scalability while also allowing the user to place contents on one or more display-tiles all at once.

The display wall

Each of the 8 display-tiles (labeled as DN A1 through DN D2), as shown in Fig. 3.F.6, is connected to the corresponding display node PC in the rack. The display wall projects the contents from the user’s Workstation (sender and streamer nodes) onto the display wall (flat-panel tiles) via the control node. Each tile is a high-end commercial-brand 55″ (UD55C) Samsung LED (backlit) HDTV with a very narrow bezel size. The top and the left bezel size is 3.7 mm (about 0.15″), whereas the bottom and the right bezel size is 1.8 mm (about 0.07″). Each of the display node PCs is responsible for rendering and displaying a portion of the overall image that is being shown on the Hiperwall. Thus, all the PCs work in parallel to render the total image.
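To make the per-node division of work concrete, the following sketch computes which rectangle of the overall wall image each tile would cover in a \(4\times 2\) arrangement of \(1920\times 1080\) tiles. It is only an illustration: the function `tile_region` is hypothetical, the convention that letters A to D label columns from left to right and that row 1 is the top row is an assumption, and bezel gaps are ignored. It does not describe how the Hiperwall software partitions content internally.

```python
TILE_W, TILE_H = 1920, 1080  # per-tile resolution quoted in the paper
COLUMNS = "ABCD"             # assumed: letters label columns, left to right
ROWS = (1, 2)                # assumed: 1 = top row, 2 = bottom row


def tile_region(label):
    """Return (x0, y0, x1, y1), the wall-pixel rectangle covered by a tile such as 'B2'."""
    col = COLUMNS.index(label[0])
    row = ROWS.index(int(label[1]))
    x0, y0 = col * TILE_W, row * TILE_H
    return (x0, y0, x0 + TILE_W, y0 + TILE_H)


regions = {f"{c}{r}": tile_region(f"{c}{r}") for c in COLUMNS for r in ROWS}
for name, box in regions.items():
    print(name, box)
```

Because the eight rectangles do not overlap, each node can work on its own region independently, which is the sense in which the PCs render the total image in parallel.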

The Hiperwall software runs on each display node and renders images through the control node, which transfers the data to be displayed on the Hiperwall display-tiles via the display nodes. The Hiperwall system provides robust visualization of multiple forms of display and monitoring capabilities simultaneously, with considerable user flexibility. With the Hiperwall visualization system we can display various types of images (1 GB or larger) and contents on the display wall. We can add, resize, scale, rotate, zoom and reposition large images, charts, plots and HD video feeds for visualization, simultaneously. Users can spread one large image or a variety of images across the entire display wall. In our setup, the 8 display-tiles are arranged in a \(4\times 2\) format. The \(16'\times 4.5'\) display wall has an effective bezel-to-bezel size of only 5.5 mm (0.2″). The total resolution of the display wall is \(7680\times 2160\) pixels, which is about 16.5 Megapixels (MP). Figure 4 shows an actual \(24457\times 24576\) pixels (600 MP) large astronomical image of a supernova remnant. For comparison, the total viewing areas of our Hiperwall Display Wall and of a standard HD \(1920\times 1080\) (2 MP) monitor are shown as white boxes. A single LSST image [34] will be about five times larger than this astronomical image.

The total viewing area of the \(7680\times 2160\) pixels (16.5 Megapixels) Hiperwall Display and a standard HD \(1920\times 1080\) (2 Megapixels) monitor superimposed (shown as white boxes) on top of a \(24457\times 24576\) pixels (600 Megapixels) large astronomical image of a supernova remnant (SNR) [34]. Note that a single LSST image [120] will be about five times larger than this astronomical image
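The pixel counts quoted above follow directly from the tile layout. The short sketch below recomputes them; the helper `megapixels` is a hypothetical name, and 1 MP is taken as one million pixels.

```python
def megapixels(width, height):
    """Pixel count of a width x height display or image, in millions of pixels."""
    return width * height / 1_000_000


wall = megapixels(4 * 1920, 2 * 1080)  # 4 x 2 tiles of 1920 x 1080 each
monitor = megapixels(1920, 1080)       # one standard HD monitor
image = megapixels(24457, 24576)       # the supernova remnant image

print(round(wall, 1), round(monitor, 1), round(image, 1))
print(round(image / wall, 1))    # wall-sized views needed to cover the image
print(round(wall / monitor, 1))  # HD monitors equivalent to the wall
```

The exact product \(7680\times 2160\) is slightly under 16.6 MP, which the text rounds to about 16.5 MP. Even so, the full astronomical image holds several dozen wall-sized views at native resolution, which is why zooming and panning remain necessary on the wall.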

The sender/streamer nodes

The sender and streamer nodes deliver content to the Hiperwall display-tiles via the 1 Gbps network switch. The sender/streamer nodes are high-end workstations. It is called a sender node if it is running the Hiperwall sender software license for displaying still images and animations. A streamer node runs the Hiperwall streamer software license for displaying HD videos. Sender nodes allow users to view the contents of the monitor(s) connected to the users’ workstation. The sender/streamer nodes can run multiple applications of the Hiperwall sender/streamer software. A Java application allows the output of the user’s application to be transported across the network for viewing on the Hiperwall display wall tiles. Users can send the entire screen to the display wall or divide it up into rectangular regions (tiles) and deliver each region to the Hiperwall as an independent object. Figure 5 shows a high resolution picture of the surface of Mars taken by NASA’s Curiosity rover that was displayed/projected from one of the sender nodes (workstation) onto the Hiperwall Display Wall. Figure 6 shows students analyzing particle tracks from HEP proton-proton collision events from the ATLAS experiment using the Hiperwall Display Wall.

A high resolution picture of the surface of Mars taken by NASA’s Curiosity rover displayed on the Hiperwall Display Wall [121]

The screen sender software can also run remotely. At BU, we have 11 sender licenses and 1 streamer license, which allow us to have up to 12 display projections at a time. Streamer software can provide high frame rate feeds to the Hiperwall displays and is used for HD videos and animations. The streamer system can stream up to 60 frames per second (FPS) at a resolution of 1080p or higher. FPS is the frequency (rate) at which an imaging device produces consecutive images. 60 FPS is typically used because high definition televisions (HDTV) are designed around 60 Hz signals as a common base capability. 60 FPS is optimal to the eye and gives a much more realistic sense of motion for what is happening on the screen [35]. A high resolution image or HD video feed can be enlarged and stretched across the entire display wall, or many images or HD video feeds can be arranged in any size or position across the display-tiles and zoomed without loss of clarity.
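For a sense of scale, the sketch below estimates the raw (uncompressed) bit rate of a 1080p feed at 60 FPS and compares it with the 1 Gbps switch mentioned earlier. The 24-bit color depth and the function name `raw_video_gbps` are assumptions; the paper does not say how the streamer encodes video, so this is only an upper bound on an unencoded stream, not a description of Hiperwall's actual network traffic.

```python
def raw_video_gbps(width, height, fps, bytes_per_pixel=3):
    """Uncompressed video bit rate in gigabits per second (1 Gb = 1e9 bits)."""
    return width * height * bytes_per_pixel * 8 * fps / 1e9


rate = raw_video_gbps(1920, 1080, 60)  # assumes 24-bit color (3 bytes per pixel)
print(round(rate, 2))
print(rate > 1.0)  # does the raw stream exceed a single 1 Gbps link?
```

Under these assumptions the raw stream would be roughly three times the capacity of a single 1 Gbps link, which suggests that a feed of this kind would have to be compressed or otherwise reduced before transport.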

Hiperwall user portal

The original version of the Hiperwall software had numerous challenges, as described further in the section “Challenges while using the Hiperwall in educational and research pursuits”. When any user needs to display an image from any one of their monitors on the display-tiles, the user first clicks on the Sender Icon on their workstation’s taskbar to open a window as shown in Fig. 7. Then the user selects the “Configure Source Windows” button to open a window, labeled HiperSource Sizer (shown in Fig. 8), which the user can move and resize to select the full space or a “dragged area” of their workstation’s monitors to send to the Hiperwall control node. The user can choose to select the contents of one monitor, two monitors, or all three monitors. The layout of the workstation’s three monitors is shown in Fig. 9. Regardless of how many monitors the user sends or the contents of each of the monitors, Hiperwall treats them as a single image. Users can resize and maximize the HiperSource Sizer window in Fig. 8 to send the contents of a single monitor (any one of the workstation’s three monitors) to the control node. Users can also click and drag the HiperSource Sizer window border to select the display area of any two or all three adjacent monitors. The HiperSource Sizer allows a click and drag operation to select any subset of the total viewing area, but for simplicity, we only present to our users the options of one, two or three monitors. Besides resizing to customize the sent area, there is also a button for sending all screens, “Full Desktop (All Screens)”, as shown in Fig. 8. This will skip the “Commit All” step and immediately broadcast all three monitors. For the other methods of resizing, the user needs to click “Commit All” after they have selected what they want to send to the control node.

Once the user has used the sender software (see “The sender/streamer nodes”) to choose what they will broadcast to the Hiperwall, they can then use the Hiperwall User Portal to decide how the broadcast will be displayed or placed on the display-tiles as shown in Fig. 10. We modified Hiperwall’s environment settings and developed our own User Portal, starting from the workstations and building from the Hiperwall software’s own environment. We were able to make individual display environments for each specific workstation that has access to the Hiperwall, as shown in Fig. 11a–c. In Fig. 11a, the user is given the links to choose how to display their broadcast on the Hiperwall, depending on whether the user is sending one, two, or all three of their workstation’s monitors. We had to reduce some of the control features of the control node for the users in order to make the system more user friendly. For example, they are not able to rotate, resize or manually move the displayed areas on the Hiperwall. Figure 10a shows the nomenclature of Hiperwall’s 8 display-tiles, Fig. 10b shows an image from any one of the monitors displayed on the center 4 display-tiles, Fig. 10c shows an image from any one of the monitors displayed on the left 4 display-tiles, Fig. 10d shows an image from any one of the monitors displayed on the right 4 display-tiles, and Fig. 10e shows an image from all three monitors displayed on all 8 tiles. Fig. 12 shows the scenario where the user has selected to send all three monitors and then chooses the LCR option in Fig. 11b to center the broadcast on the Hiperwall. This leaves black bands on the top and the bottom of the displayed image, which can be removed by using the Zoom link option in Fig. 11b.

a shows nomenclature of Hiperwall’s 8 display-tiles (A1, A2, B1, B2, C1, C2, D1 and D2); b shows an image from any one of the monitors displayed on the center 4 display-tiles (B1, B2, C1 and C2) by selecting P209-07-C.hwe from Fig. 11c; c shows an image from any one of the monitors displayed on the left 4 display-tiles (A1, A2, B1 and B2) by selecting P209-07-L.hwe from Fig. 11c; d shows an image from any one of the monitors displayed on the right 4 display-tiles (C1, C2, D1 and D2) by selecting P209-07-R.hwe from Fig. 11c; e shows an image from all three monitors displayed on all 8 display-tiles by selecting P209-07-LCR.hwe in Fig. 11b
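The placement options described in this caption amount to a small lookup from a preset to a set of display-tiles. The sketch below records that mapping as a plain dictionary. The file names and tile labels are taken from the caption; the dictionary `PRESETS` and the helper `tiles_for` are hypothetical and say nothing about the internal format of the `.hwe` files.

```python
# Preset names and tile labels come from the caption above; the structure is illustrative.
PRESETS = {
    "P209-07-C.hwe": ["B1", "B2", "C1", "C2"],  # one monitor, center 4 tiles
    "P209-07-L.hwe": ["A1", "A2", "B1", "B2"],  # one monitor, left 4 tiles
    "P209-07-R.hwe": ["C1", "C2", "D1", "D2"],  # one monitor, right 4 tiles
    "P209-07-LCR.hwe": ["A1", "A2", "B1", "B2", "C1", "C2", "D1", "D2"],  # all three monitors
}


def tiles_for(preset):
    """Return the display-tiles covered by a named preset."""
    return PRESETS[preset]


for name in PRESETS:
    print(name, tiles_for(name))
```

Restricting users to a handful of named presets, rather than free placement, mirrors the trade-off described in the text: fewer controls in exchange for a simpler portal.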

Results and discussion

Display wall cost benefit analysis

In the US, planar-tiled projected displays were first built in the 1990s at Princeton University and at Argonne National Laboratory [25]. These display walls were all prototypes. The first screen display, called PowerWall, was built in 1994 at the University of Minnesota in collaboration with Silicon Graphics as a single \(6'\times 8'\) screen illuminated from the rear by a \(2\times 2\) matrix of video projectors that were driven by 4 RealityEngine2 graphics engines [36]. Around the same time, a number of academic research groups began building display walls using commodity DLP or LCD projectors [37].

One of the first-generation display walls was built by Princeton University in 1998, that used a \(18'\times 8'\) rear projection screen and eight Proxima LCD commodity projectors [38]. This system had a resolution of \(4096\times 1536\) pixels (6.3 MP) that was driven by a network of eight Pentium II PCs running Windows NT. In 2000, this display was later scaled up to a resolution of \(6144\times 3072\) pixels (19 MP) with 24 Compaq MP1800 digital light-processing (DLP) projectors and a network of 24 Pentium III PCs running Windows 2000 [38].

A notable early LCD tiled-display wall was built in August 2002 at the NASA Ames Research Center as a \(7\times 7\) array of flat panel LCD screens powered by a Beowulf cluster [23]. In September 2002, the Electronic Visualization Laboratory (EVL) [39] at the University of Illinois at Chicago built a 100 MP LambdaVision display wall with NEC monitors using custom-built PCs [23]. In 2005, a group at UC-Irvine built a 200 MP 50-tiled display wall out of 30″ Apple Cinema displays and Apple Power Mac G5s that was later adopted by the OptIPuter project that used PCs driving the 24″ LCD monitor displays. In 2008, a group at UC-Irvine’s California Institute for Telecommunications and Information Technology (Calit2) received funding from NSF for their OptIPortal open-source project [25] and built a 27 MP 12-tile OptIPortal display wall. The OptIPortal project used desktop LCD tiles, PCs to power the display-tiles (one PC per tile), graphics cards, and local networking to build a 200 MP OptIPortal tiled display wall at a cost of about $1000/MP [23]. So the 200 MP OptIPortal display wall would have cost about $200,000. Since the OptIPortal 50-tiled display used regular \(1920\times 1080\) (2 MP) 24″ LCD displays for desktop computers, the group at Calit2 was able to keep the cost down. The main disadvantage of the OptIPortal tiled-display was that the LCD displays had thick bezels that created broad mullions (horizontal and vertical strips where there is no visible image) between adjacent tiles, which the users found to be distracting [23]. Back then, the typical 24″ desktop LCD monitors had a bezel size of 15 mm (about 0.6″), so the bezel-to-bezel size was about 30 mm (1.2″).

A notable display wall, later dubbed as the “IQ-Wall” was installed at Indiana University’s (IU) Advanced Visualization Laboratory (AVL) in 2004, utilizing relatively expensive high-end components and a professional audio/video integrator for uses varying from research visualization to collaborative presentations to artistic creations [40]. The “IQ-Wall” struck a balance between cutting-edge technology, good performance, and cost [40]. IU has been a pioneer in building display walls in academia. From 2004 to 2015, various groups at IU have built nine separate display walls with \(2\times 2\, (8'\times 4.5'),\, 3\times 3\, (10'\times 6'),\, 3\times 4\, (10'\times 8'),\, 4\times 4\, (13.5'\times 8'),\, 6\times 2\, (24'\times 4.5'),\, 6\times 4\, (24'\times 9'),\) and \(8\times 2\, (27'\times 4')\) tile configurations and size, with display wall resolution ranging from 2.1 MP to 42 MP [40].

As mentioned earlier, our \(16'\times 4.5'\) (\(4\times 2\) tile configuration) 16.5 MP custom-made tiled-display wall setup was built upon the OptIPortal display wall’s PC-based architecture to power each display tile. The installation of our Hiperwall visualization system was completed by October 2013 in the Advanced Visualization and Computational Lab (AVCL). Since we received $209,347.00 from our NSF grant, our total budget for everything (including the shipping and handling costs, and other miscellaneous expenses) had to be within that amount.

The hardware components of BU’s Hiperwall setup cost $70,830. This includes the eight display-tiles (each a 55″ Samsung commercial-brand UD55C HDTV), the rack-mounted Control and Display Nodes (9 Dell Optiplex PCs), the Secondary Control Node (1 Dell Latitude Tablet), a 1 Gbps Dell 24-port network switch, an audio digital signal processor, an audio power amplifier, ceiling speakers, and the HDMI and network cables. We also paid $54,194 ($10,839/year) for the Hiperwall software licenses and full technical and software support for 5 years, of which $33,884 ($6777/year) was for the software licenses and $20,310 ($4062/year) was for the support. The license cost covers the Hiperwall Master license, 1 Secondary Control license, 11 Sender licenses (for the 9 Sender nodes, i.e. the Data Analysis workstations, plus the Hiperwall Control Node and the Secondary Control Node), and 8 Display licenses for the 8 Hiperwall Display Nodes. Additionally, the hardware installation cost was $11,285. So the Hiperwall hardware components, software licenses, technical/software support, and installation together came to $136,309.

We also purchased 9 high-end Dell T5600 Data Analysis Workstations (Sender/Streamer Nodes), separate from the Hiperwall display wall, at a cost of $64,846; each has 64 GB RAM, Dual-CUDA-768 cores, a 4 TB HD, and triple 24″ monitors. These Data Analysis Workstations were configured to run both the Windows and the Linux OS environments simultaneously. Therefore, the total cost of the 8-tiled \(16'\times 4.5'\) (\(4\times 2\) configuration) 16.5 MP (\(7680\times 2160\) pixel) Hiperwall visualization system, including the 9 Dell T5600 Data Analysis Workstations, the Hiperwall software licenses, full technical/software support for 5 years, and the installation expenses, was $201,155. The most expensive hardware component of our Hiperwall setup was the set of eight 55″ \(1920\times 1080\) pixel resolution (2 MP or 2K) Samsung commercial-brand UD55C HDTVs for the 8 display-tiles, at $5994 each ($47,952 for the eight HDTVs). We chose these 55″ Samsung commercial-brand UD55C HDTVs because of their narrow bezels. Bezel size is perhaps the single most critical aspect of any display wall, since thick bezels create the appearance of a mullion between two adjacent display-tiles, which can be distracting when viewing images. Moreover, when an image is displayed across multiple display-tiles, the part of the image that falls in the bezel sections is lost. Even though these HDTVs were pricey, at that time they were the least expensive 55″ commercial-brand HDTVs on the market with the narrowest bezel size (Left/Top bezel of 3.7 mm and Right/Bottom bezel of 1.8 mm), giving a bezel-to-bezel size of 5.5 mm (about 0.2″). We also looked at the non-commercial-brand (regular) 55″ (2 MP or 2K) Samsung UE55A HDTVs, which were cheaper ($3750 each) but have about twice the bezel size: a bezel-to-bezel size of 10 mm (about 0.4″), compared with 5.5 mm (about 0.2″) for the UD55C model.
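The cost totals quoted in the preceding paragraphs can be cross-checked with a few lines of arithmetic. The following is only a bookkeeping sketch: the dollar amounts are the ones stated in the text, while the variable names are our own illustrative choices.

```python
# Cost figures (US dollars) as quoted in the text; variable names are illustrative.
hardware = 70_830            # display tiles, PCs, switch, audio, cabling
software_licenses = 33_884   # Hiperwall licenses for 5 years
support = 20_310             # technical/software support for 5 years
installation = 11_285
workstations = 64_846        # nine Dell T5600 Data Analysis Workstations

licenses_and_support = software_licenses + support
annual_licenses_and_support = round(licenses_and_support / 5)
display_wall_total = hardware + licenses_and_support + installation
system_total = display_wall_total + workstations

tile_unit_price = 5_994
tiles_total = 8 * tile_unit_price

print(licenses_and_support)         # 54194
print(annual_licenses_and_support)  # 10839
print(display_wall_total)           # 136309
print(system_total)                 # 201155
print(tiles_total)                  # 47952
```

The computed totals agree with the figures given in the text, and the system total stays within the $209,347 grant amount.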

Even though the hardware expenses for our Hiperwall setup were relatively low, Hiperwall carries one added expense: the annual cost of the software licenses and technical/software support, which is $10,839/year. This covers the Hiperwall Master license, 1 Secondary Control license, 11 Sender licenses (for the 9 Sender nodes, i.e. the Data Analysis workstations, plus the Hiperwall Control Node and the Secondary Control Node), and 8 Display licenses for the 8 Hiperwall Display Nodes. For the future, when we renew our license for another 5 years from October 2018 to October 2024, we will not purchase the technical/software support since we are now very familiar with the Hiperwall software and the system.

Additionally, since we rarely used the Secondary Control Node (the Tablet) to control the Hiperwall, we will no longer renew the license for the secondary control. Moreover, we rarely used all 9 Sender nodes (the 9 Data Analysis workstations) at once to send images and content to the display-tiles for visualization. So, we have decided to purchase licenses for only 6 Sender nodes for the next 5 years from 2018 to 2024. Therefore, we will be spending $5237 annually (which is reasonable) from 2018 to 2024 for the Hiperwall Master license, 7 Sender licenses (for the 6 Sender nodes, i.e. the Data Analysis workstations, plus the Hiperwall Control Node), and 8 Display licenses for the 8 Hiperwall Display Nodes.

Before we decided to go with Hiperwall, we explored RGB Spectrum’s MediaWall 4200 Display Processor for the same 8-tiled \(16'\times 4.5'\) (\(4\times 2\) tile configuration) 16.5 MP (\(7680\times 2160\) pixel) display wall. At the time, this high-end display wall processor cost $50,000. For a system comparable to our Hiperwall visualization system, with the same eight Samsung commercial-brand UD55C display-tiles and the nine Dell T5600 Data Analysis Workstations, the total cost of RGB Spectrum’s MediaWall would have been about $20,000 more than our budget of about $200,000 (excluding shipping/handling and any miscellaneous expenses).

Earlier this year, the School of Computing at Weber State University set up a comparable 8-tiled 16.5 MP (\(7680\times 2160\) or \(8\text{K}\times 2\text{K}\) pixel resolution) visualization system from Extron Electronics [41], along with four Dell T5600 Data Analysis Workstations (each with 16 GB RAM and a 0.5 TB HD), at a cost of $95,913. Their \(16'\times 4.5'\) display wall uses the Extron Quantum Connect Display Wall Processor [42] to power the 8 display-tiles; the processor cost $17,815, which is relatively inexpensive for a display wall processor. For the display-tiles, Weber State University purchased the latest 55″ Panasonic TH55LFV70U commercial display (\(1920\times 1080\) pixel) HDTVs at $5190 apiece. These have a Left/Top bezel of 2.25 mm and a Right/Bottom bezel of 1.25 mm, so the bezel-to-bezel size is 3.5 mm (about 0.14″), which is considered among the very best for a tiled-display wall. The main advantage is that there are no annual license fees for the Extron display wall, which is a significant cost saving. This Extron display wall is a very good option for a control/surveillance command center, and the setup can be easily expanded up to 14 display-tiles. Unlike Hiperwall, however, Extron is not the best display wall for group research tasks that involve visualizing images in high resolution to study rare events: zooming an image across all 8 tiles reduces the image resolution, because Extron’s software does not run on each display tile to render the images but instead projects the source images onto the display wall. Even though the Extron display wall has some limitations in retaining the resolution of zoomed images, it gives the best bang for the buck as a general-purpose display wall owing to the ultra-narrow bezels of its display-tiles, the relatively cheap display wall processor, and the absence of annual license fees.
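The bezel-to-bezel (mullion) widths quoted for the different display models follow from adding the two bezels that meet at a seam. The short sketch below reproduces that arithmetic from the bezel sizes given in the text; the function name and the dictionary layout are our own illustrative choices.

```python
MM_PER_INCH = 25.4

def mullion_mm(bezel_a_mm, bezel_b_mm):
    """Width of the dark strip at a seam: the two touching bezels added together."""
    return round(bezel_a_mm + bezel_b_mm, 2)

# (bezel on one side of the seam, bezel on the other side), in mm, from the text.
displays = {
    "24-inch desktop LCD (OptIPortal era)": (15.0, 15.0),
    "Samsung UD55C": (3.7, 1.8),
    "Panasonic TH55LFV70U": (2.25, 1.25),
}

for name, (a, b) in displays.items():
    width = mullion_mm(a, b)
    print(f"{name}: {width} mm ({width / MM_PER_INCH:.2f} in)")
```

Under these figures the seam shrinks from 30 mm on the desktop monitors to 5.5 mm and 3.5 mm on the two commercial 55″ displays.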

In US academia, Hiperwall display walls have been installed in the past few years at Clemson University, La Sierra University, Gonzaga University, UC-Irvine, and Arizona State University. Hiperwall is also being used in Command and Control Centers at the Santa Ana Police Department, the Kansas City Police Department, the Los Angeles Metro-Rail and Bus System, the Western States Information Network (WSIN), and the US Department of Veterans Affairs. Notably, in entertainment, the Blue Man Group uses Hiperwall for the digital signage at their Las Vegas venue to enhance the entertainment experience, and Hiperwall is also used by T3 Enterprise in media and advertising.

In recent years, some universities have installed display walls (from other vendors) for teaching and research, notably the University of Michigan (75 MP, \(12'\times 7'\) in a \(3\times 3\) tile configuration), Georgia State University’s Collaborative University Research & Visualization Environment Lab (25 MP, \(14'\times 4.5'\) in a \(6\times 2\) tile configuration), Dartmouth College (16.5 MP, \(16'\times 4.5'\) in a \(4\times 2\) tile configuration), Brown University’s Digital Lab (24 MP, \(16'\times 7'\) in a \(4\times 3\) tile configuration), University of Texas at Austin’s Texas Advanced Computing Center (328 MP, \(36.5'\times 8'\) in a \(16\times 5\) tile configuration with 30″ Dell monitors), Stanford University’s Rumsey Center (33 MP, \(16'\times 7'\) in a \(3\times 3\) tile configuration), University of Oregon’s Visualization Lab (50 MP, \(24'\times 9'\) in a \(6\times 4\) tile configuration), and Weber State University’s School of Computing (16.5 MP, \(16'\times 4.5'\) in a \(4\times 2\) tile configuration).

In Table 3 we provide a detailed comparison chart of Hiperwall and eight other leading display-wall products [43]. The cost comparisons are for the display wall hardware only. Based on the hardware and the features of these eight display wall products, only one (from Userful Corporation) closely matches Hiperwall’s features and capabilities. Based on our assessment, the PC cluster-based distributed display software architecture for powering the display-tiles is an improvement over the display wall processor-based systems. As is evident from the comparison chart in Table 3, Hiperwall’s features and capabilities are broader than those of the others.

In summary, the final cost will depend on the physical size of the display wall, the number of pixels that can be packed into the wall (pixel density, i.e. pixel count per screen area) based on the HDTV model, the bezel size of the displays, and any custom-made features added to the display wall.

Big Data analytics and techniques

Today large data sources are ubiquitous throughout the world due to the dramatic increase in human and machine generated data [7]. Big Data is generated from the large Big Science experiments/instruments such as LHC in HEP and LIGO in Gravitational Wave Astrophysics, other measuring devices, radio frequency identifiers, social media and social network portals such as Facebook, Twitter, and Instagram, search engines like Google, meteorological and remote sensing instruments, and data from various mobile devices, including a plethora of audio and video recordings from various devices. Currently, there are a number of different Big Data analysis techniques in use in a wide variety of areas that generate Big Data that are primarily based on tools used in statistics and computer science [7].

Visual analytics for Big Data analysis is an emerging area in visualization. A typical visual analytics problem is often complex and can require multiple data analysis and visualization tasks. Visual analytics of Big Data requires both automated analysis and interactive visualizations for effective understanding, reasoning and decision making on the basis of a very large and complex dataset [53, 54]. A number of Big Data visual analytics techniques have been developed over the years. Rapid technical developments have greatly enhanced the use of visual analytics techniques to solve real-world problems. Due to the growing need for visual analytics, there is an increasing need for a comprehensive survey covering the recent advances in the field.

As data acquisition and processing rates grow, traditional data analytics methods are being replaced by advanced Big Data analytics and visualization techniques using Big Data technologies such as Statistical Analysis System (SAS) [55, 56] for data visualization from a business and corporate perspective. In the scientific arena, there are a number of data-driven advanced data analysis techniques used today to analyze large amounts of data (Big Data), such as Neural Networks (NN) [57,58,59,60,61], Data Mining [62,63,64,65], Signal Processing [66,67,68], Visualization Methods [69,70,71], and Machine Learning [72,73,74], which includes various Multivariate Analysis (MVA) techniques such as Boosted Decision Trees (BDT) [75,76,77,78,79,80,81,82,83,84], Likelihood [81, 84], Artificial Neural Networks (ANN) [62, 85, 86], and Bayesian Neural Networks (BNN) [81, 87,88,89].

In HEP, the search for new and rare subatomic particles in ever larger data sets at the LHC experiments at CERN challenges our ability to analyze and visualize scientific information in a timely manner to a degree not previously encountered in science, because we need to extract the maximum available information from the datasets. Extracting rare “discovery” signals by filtering out background events, using a large number of parameters in petabyte-scale datasets, is a very challenging task in terms of both data analysis and visualization at the TeV-scale LHC energies.

The subatomic particles that decay within the detector leave tracks, or signatures of their presence, that are registered by converting the particle paths and energies into electrical signals to create a digital snapshot of the “collision event”. At the LHC, the data recorded by the ATLAS detector for a proton-proton collision event typically consist of about 2000 measurements of position, deposited energy, and timing for a large number of tracks. During each collision, many new particles are created. After passing through the reconstruction algorithm and being combined with calibration constants, these measurements are typically reduced to about 200 reconstructed objects, such as tracks from all decay products, electromagnetic clusters, and hadronic clusters.

With the emergence of such large and high-dimensional data sets, the task of data visualization has become increasingly important in both machine learning and data mining [89]. Visualization is therefore crucial for analyzing and exploring such large-scale, complex multivariate data. The visualized footprints, or electronic signals, that the detectors record during a collision are called “Event Displays”. These Event Displays are very useful for pattern recognition of particle tracks. A visual representation of the data as an Event Display shows the correspondence between the data and the detector geometry and provides a snapshot of what has occurred inside the detector. These Event Displays are dynamic images that let us see the various parameters/features of the subatomic particles and show how these particles traveled through the detector. The recorded Event Displays of the collisions are stored in the XML file format. The visualization of these collision data is essential for conveying the underlying physics of the events. The Event Display contains objects such as Tracks and Hits. They are usually represented by simple (Hits) or unique (Tracks) geometrical objects, which can be displayed in special (2D) projections. The Detector Display contains Detector elements (Chambers and the various Detector layers). They are usually represented by replicated complex geometrical objects.

Visualization is especially useful when the information being portrayed is positional in nature and the spatial correlations are important. For example, color and other visual cues are employed to convey information about the amount of energy deposited at the spatial points. For the ATLAS experiment, the Event Displays can be visualized in two dimensions with the data analysis software packages/toolkits ATLANTIS and HYPATIA (a student version of ATLANTIS), and in three dimensions with CAMELIA using a built-in XML event loader. All three visualization toolkits are collections of software modules capable of displaying all types of data from the ATLAS experiment and have sophisticated graphics, GUIs, debugging tools and analysis capabilities for the visual investigation and physical understanding of all types of events inside the ATLAS detector.

All three of these data analysis toolkits are graphics packages for visual analytics. They provide a graphical representation of what is happening in a collision event, displaying the trajectories of the particles produced by the proton-proton collisions, to help us understand the underlying physics processes inside the ATLAS detector. These toolkits allow us to interact with the data visually and study the traces of the particles in different parts of the ATLAS detector in order to discover new particles by reconstructing them from their decay products. They also help in developing reconstruction and analysis algorithms and are used for debugging. They display the tracks together with their signatures in the different parts of the ATLAS detector and provide information about the particles, their energies, etc. These toolkits also provide real-time interactive control of the display, processing and I/O of the data, and have all the basic modules of a complete visualization system that represent both the main data flow and the interactive links controlling program flow. The I/O streams are accessed interactively.

One can select specific tracks for analyses, display event properties for detailed study, filter events, and display them in perspective, orthogonal and cut views. The reconstruction code combines data from the experiment with calibration constants and geometric information about the detector from the database into reconstructed objects such as clusters in the calorimeters. The user can interact “locally” to manipulate the rendering of the contents of the display list, rotating, shading, zooming the image, etc. Two-dimensional representations of the data are usefully employed to display a high resolution “fish-eye” view of the event so that a range of sub-detectors of vastly different sizes can be included in the same image panel [90, 91].

The Hiperwall visualization display wall allows us to carry out visualization studies of the decay products of particle collisions using these data analysis software packages/toolkits installed on the workstations. In Hiperwall, these Event Display images can be displayed anywhere, within or across the display-tiles, in correct aspect ratio or stretched to fit, in whole or zoomed in on specific features of an event to emphasize details and display its properties. A full array of features includes dynamic window sizing and positioning, smooth zooming within the images, custom borders, titling, programmable presets, and backgrounds. CAMELIA offers browsing and manipulation of 3D data with full functionality for 3D display, including 3D visual operations such as Rotation, Translation, Zooming, Scaling, Shearing and Skewing. With CAMELIA, we can pick a track in the current display and zoom in to show the position of the track with respect to the recorded hits and detector elements. We can also move the view in 3D to inspect the track from all angles and display the reconstructed properties and parameters of the fitted track.

At the LHC, pile-up (multiple interaction points) presents a serious challenge for both data analysis and visualization when searching for new and rare types of subatomic particles in the complex and massive petabyte-scale experimental datasets. Decay particles containing electrons or muons provide clear trigger signals and rich event signatures for new particle searches, but because of pile-up, identifying the correct interaction point to determine the origin of these electron and muon tracks is a challenge. Figure 13 shows the pile-up situation from the proton-proton collisions in the ATLAS detector at the LHC. The image in Fig. 13, which is part of the Event Display, is a good example of the visualization challenges in HEP. We are looking for only two particle tracks: a muon and an anti-muon track from the decay of the Z Boson (\(Z^{0} \rightarrow \mu ^{-} \mu ^{+}\)) from just one interaction point, where the rest of the tracks are simply background events. The visualization challenge is complicated further by the fact that a very small fraction of these background tracks are what we call “fake” muon-antimuon pairs, since they come from the decay of hadrons (in what are called hadronic jets) and not from the Z Boson. These “fake” muon-antimuon tracks, which are part of the background events, have to be filtered out. Such a scenario requires a high-resolution display wall such as Hiperwall, which can assist with the challenging visualization task of separating the signal events from the myriad background events.

ATLAS Pile-up. a and b show the pile-up (multiple interaction points) situation from proton-proton collisions at the LHC inside the ATLAS detector in a region that is only about 5 cm wide. The pile-up in a has 25 interaction points and that in b has 17 interaction points, where only one interaction point produced the decay tracks from the Z Boson. Image courtesy ATLAS Collaboration at CERN

Using the Hiperwall, we are able to zoom in to this 5 cm region of the detector to a size of \(16'\times 4.5'\) using all 8 display-tiles, creating a 16.5 MP image without losing any resolution, in order to identify the correct particle track pair (a muon and an anti-muon) from the decay of the Z Boson. Figure 14a and b show the full 2D Event Displays corresponding to Fig. 13a and b.
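To give a feel for the scale of this zoom, the sketch below estimates the magnification and the detector length represented by one wall pixel. It assumes, purely for illustration, that the 5 cm region is stretched across the full 16 ft width of the wall and that the source image carries enough detail to fill every pixel; the variable names are our own.

```python
CM_PER_FOOT = 30.48

wall_width_ft = 16          # physical wall width quoted in the text
wall_width_px = 4 * 1920    # four tiles across, 1920 pixels each
region_width_cm = 5.0       # approximate width of the pile-up region

# Assumption: the 5 cm region spans the full width of the wall.
wall_width_cm = wall_width_ft * CM_PER_FOOT
magnification = wall_width_cm / region_width_cm
detector_um_per_pixel = region_width_cm * 1e4 / wall_width_px

print(f"magnification: about {magnification:.0f}x")
print(f"detector length per pixel: about {detector_um_per_pixel:.1f} micrometres")
```

Under these assumptions the region is enlarged by roughly two orders of magnitude, with each pixel covering a few micrometres of the detector.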

a and b show the visualization of the full 2D Event Displays corresponding to Fig. 13a and b using the ATLANTIS toolkit. Image courtesy ATLAS Collaboration at CERN.

Signal extraction from large heterogeneous background events using Multivariate Analysis (MVA) techniques in HEP

Large-scale data driven applications are on the rise, and so is the need to extract useful information from terabyte to petabyte scale data sets (Big Data). The main goal in data analysis is to obtain the maximal information and extract the best possible results from the data. Multivariate analysis (MVA) methods are machine-learning-based discriminating techniques for extracting small signals by separating the signal from the background in very large datasets, which makes them very useful in fields that generate Big Data.

Each MVA technique is well characterized mathematically and uses a different algorithm to approximate the same mathematical object. One of the reasons for applying MVA techniques is simply the lack of knowledge about the mathematical dependence of the quantity of interest on the relevant measured variables. Either there is no mathematical model at all and a detailed analysis is the only way of finding the correct dependence, or the known models are insufficient and MVA techniques provide a better description of the data.

In HEP, for the large-scale data analysis and visual analytics tasks involved in searching for new subatomic particles, Machine Learning and MVA techniques are becoming ever more crucial in extracting rare signal events (new subatomic particles) from a huge number of background events in the complex experimental HEP environments. As HEP data analysis becomes more and more challenging, MVA methods are routinely being used to suppress background and for parameter estimation (regression), where a physical quantity is extracted from a set of directly measured observables. Typically one in a trillion data events will lead to a new particle discovery, such as the recent discovery of the Higgs Boson at the LHC. The main challenge is to identify the rare signal events involving multiple processes in the presence of pile-up and huge backgrounds in experimental data sets that contain over 100 trillion events with a plethora of complex heterogeneous variables. The data analysis process becomes even more challenging since some of the background events can look exactly like the signal events; these are “fake” signal events (signal-like events coming from various processes such as other decay channels). The process of data analysis and visualization in HEP is indeed like detective work, or like finding a needle in a haystack. The key task is to intelligently search for the specific particle of interest by distinguishing it from the background particles and processes, filtering them using numerous detector filter parameters without throwing out any of the particles of interest (i.e. signal events).

Moreover, pile-up (multiple interaction points), as shown in Fig. 13, presents a challenging visualization environment. Due to the high resolution of the Hiperwall visualization display wall, we are able to zoom into this pile-up region of the HEP Event Displays and identify the right interaction point of these particle tracks. To obtain the best possible results, it is important to make use of the maximum possible information in the data and hence to employ optimal MVA methods for data analysis.

So, for the large-scale HEP data analysis tasks, MVA techniques are extensively being used for signal optimization studies in order to extract useful information about these rare and new subatomic particles from the myriad background events, thus maximizing the chances of a discovery. We have used MVA techniques to guide our signal optimization and visualization studies. The main idea of the MVA is the choice of input variables, where we can simultaneously analyze multiple measurements (variables) on the object being studied. These variables can be correlated in various complex ways.

MVA techniques are particularly useful when dealing with large experimental data sets where multi-dimensional features of the data could be missed in a conventional data analysis. In conventional statistical techniques, one starts with a mathematical model and finds the parameters of the model either analytically or numerically by using some optimization criteria. MVA addresses the problem of signal/background classification through supervised machine learning. Starting with samples of data of known type (signal or background), the data are divided randomly into training and testing sets, each of which consists of a mix of signal and background events. MVA techniques are also “trainable”, i.e. they can be designed to self-organize and optimize their classification ability by analyzing events of a known class. This trait makes MVA techniques very efficient for optimizing various parameters in order to separate signal from the myriad complex and heterogeneous background events. For the data analysis tasks, MVA techniques help to suppress the background events without further reducing the signal events. The training data set is used to fix the parameters of the MVA classifier, a function that assigns to each input event a measure of its consistency with the signal or background hypothesis. The parameters are chosen to achieve the best separation between the signal and background training events. The trained classifier is then applied to the testing data set to obtain an unbiased evaluation of the classifier’s detection performance from the number of correctly classified signal and background events. MVA techniques can mine the full parameter space of the events to better discriminate between signal and background.
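The train/test procedure described above can be illustrated with a toy example. The sketch below is not one of the MVA methods used in our analysis: it substitutes a simple nearest-centroid classifier, and the two Gaussian-distributed input variables, the sample sizes and all names are assumptions made only for illustration.

```python
import random

random.seed(42)  # fixed seed so the toy study is reproducible

def make_events(n, mean, label):
    """Generate n toy events, each with two Gaussian-distributed variables."""
    return [((random.gauss(mean, 1.0), random.gauss(mean, 1.0)), label)
            for _ in range(n)]

# Samples of known type: label 1 = signal, label 0 = background.
events = make_events(1000, 1.5, 1) + make_events(1000, -1.5, 0)

# Divide the labelled events randomly into training and testing sets.
random.shuffle(events)
train, test = events[:1000], events[1000:]

def centroid(sample, label):
    """Mean of each input variable over the events of one class."""
    points = [x for x, y in sample if y == label]
    return tuple(sum(p[i] for p in points) / len(points) for i in range(2))

# "Training": fix the classifier parameters (here, the two class centroids).
sig_c, bkg_c = centroid(train, 1), centroid(train, 0)

def classify(x):
    """Return 1 (signal-like) if x is closer to the signal centroid, else 0."""
    d_sig = sum((a - b) ** 2 for a, b in zip(x, sig_c))
    d_bkg = sum((a - b) ** 2 for a, b in zip(x, bkg_c))
    return 1 if d_sig < d_bkg else 0

# Evaluate on the independent testing set only.
correct = sum(classify(x) == y for x, y in test)
accuracy = correct / len(test)
print(f"test accuracy: {accuracy:.3f}")
```

Because the testing events play no part in fixing the centroids, the fraction of correctly classified testing events gives an unbiased estimate of the classifier's performance, as described in the text.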

For subatomic particles that decay to produce multiple hadronic jets (e.g. quarks from Mesons and Baryons), such as in the decay of the Top quark and in the search for the charged Higgs (\(H^{+}\)) Boson, a visualization display wall that can zoom into the various parts of the detector in high resolution to identify the particle tracks is crucial during data analysis. Using the Hiperwall, we can simultaneously analyze up to eight Event Displays at a time (each with a resolution of 2 MP), one on each of the eight display-tiles (as shown in Fig. 15); two Event Displays at a time (each with a resolution of 8 MP), with one Event Display per four display-tiles; or one Event Display at a time (with a resolution of 16 MP) across all eight display-tiles.
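The resolutions quoted for these three viewing modes follow directly from the tile configuration. The sketch below computes them from the \(1920\times 1080\) tile resolution and the \(4\times 2\) layout, assuming the tiles are shared evenly among the Event Displays; the function name is our own.

```python
TILE_W, TILE_H = 1920, 1080   # pixels per display tile
COLS, ROWS = 4, 2             # tile configuration of the wall

def layout(displays_at_once):
    """Tiles and megapixels available to each Event Display when the wall is shared evenly."""
    tiles_per_display = (COLS * ROWS) // displays_at_once
    megapixels = tiles_per_display * TILE_W * TILE_H / 1e6
    return tiles_per_display, megapixels

for n in (8, 2, 1):
    tiles, mp = layout(n)
    print(f"{n} Event Display(s): {tiles} tile(s) each, about {mp:.1f} MP each")
```

The exact pixel counts are slightly above the rounded 2, 8 and 16 MP values quoted in the text, since each tile holds about 2.07 MP.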

Integrated into our HEP data analysis framework ROOT [92] is the Toolkit for Multivariate Analysis (TMVA) [83] which is designed for machine learning applications in HEP. The TMVA toolkit unifies highly customizable MVA classification and regression algorithms in a single framework. The latest version, TMVA 4.0.1 also features more flexible data handling allowing one to arbitrarily form combined MVA methods [83]. TMVA is specially designed for the complex HEP data analysis environments.

In this paper we will describe the TMVA framework for our data analysis tasks in HEP. TMVA provides an environment for the processing, parallel evaluation and application of multivariate classification and multivariate regression techniques, where all multivariate methods respond to supervised learning, i.e. the input information is mapped in feature space to the desired outputs [83]. The mapping function contains various degrees of approximations and may be a single global function, or a set of local models. TMVA provides an unbiased performance comparison between the various MVA methods using the same data. Training, testing, performance evaluation algorithms and visualization scripts of all available classifiers can be carried out simultaneously via the TMVA user-friendly interfaces. TMVA analysis consists of two steps [83] as shown in Fig. 16.

a shows a schematic of the flow (top to bottom) of a typical TMVA training application. The TMVA analysis proceeds by consecutively calling the training, testing and performance evaluation methods of the Factory; b shows a schematic of the flow (top to bottom) of a typical TMVA analysis application. The MVA methods qualified by the preceding training and evaluation step are used to classify data of unknown signal and background composition or to predict a regression target [83]

1. Training phase: The ensemble of optimally customized MVA methods is trained and tested on independent signal and background data samples; the methods are evaluated and the most appropriate (performing and concise) ones are selected. In the training phase, the communication of the user with the data sets and the MVA methods is performed via a Factory object that is created at the beginning of the program. The user script can be a ROOT macro [92], a C++ executable, or a Python script. The TMVA Factory provides member functions to specify the training and the targeted data sets, to register the discriminating input variables, and to book the multivariate methods selected by the user. Subsequently the Factory calls for training, testing and evaluation of the booked MVA methods. Specific result (“weight”) files are created in XML format after the training phase by each booked MVA method, and the evaluation histograms are stored in the output file [83].

2. Application phase: Selected trained MVA methods are used for the classification of data samples with unknown signal and background composition, or for the estimation of unknown target values (regression). The application of training results to a data set with unknown sample composition (classification) or target value (regression) is governed by the Reader object [83]. During initialization, the user registers the input variables together with their local memory addresses, and books the MVA methods that were found to be the most appropriate after evaluating the training results. The name of the weight file is given as the booking argument [83]. For each of the methods, the weight file provides a full and consistent configuration according to the training setup and results. Within the event loop, the input variables are updated for each event, and the MVA response values are computed [83].

While the Factory is running, standardized outputs and dedicated ROOT [92] macros allow a refined assessment of each method’s behavior and performance for classification and regression. Once the appropriate MVA method(s) have been chosen by the user, they can be applied to data samples with unknown classification or target values. The interaction with the methods occurs through a Reader class object created by the user. A method is booked by giving the path to its weight file resulting from the training stage [83]. Then, inside the user’s event loop, the MVA response is returned by the Reader for each of the booked MVA methods, as a function of the event values of the discriminating variables used as input for the classifiers. Alternatively, for classification, the user may request from the Reader the probability that a given event belongs to the signal hypothesis [83].
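The two-phase workflow described above (train and write a weight file, then book the method from that file and evaluate it event by event) can be mimicked in a few lines of plain Python. The sketch below is deliberately not the TMVA API: `ToyFactory`, `ToyReader`, their method names, the XML layout of the weight file and the toy "cut halfway between the class means" training are all hypothetical stand-ins chosen only to show the flow of information.

```python
import os
import tempfile
import xml.etree.ElementTree as ET

class ToyFactory:
    """Hypothetical stand-in for the training-phase Factory (not the TMVA API)."""

    def __init__(self):
        self.variables = []
        self.methods = []

    def add_variable(self, name):
        self.variables.append(name)

    def book_method(self, tag):
        self.methods.append(tag)

    def train_all(self, signal, background, out_dir):
        """Train every booked method and write one XML weight file per method."""
        paths = {}
        for tag in self.methods:
            root = ET.Element("Weights", method=tag)
            for i, var in enumerate(self.variables):
                s_mean = sum(e[i] for e in signal) / len(signal)
                b_mean = sum(e[i] for e in background) / len(background)
                # Toy "training": place a cut halfway between the class means.
                ET.SubElement(root, "Cut", var=var,
                              value=repr((s_mean + b_mean) / 2),
                              sign="1" if s_mean > b_mean else "-1")
            paths[tag] = os.path.join(out_dir, f"{tag}.weights.xml")
            ET.ElementTree(root).write(paths[tag])
        return paths

class ToyReader:
    """Hypothetical stand-in for the application-phase Reader (not the TMVA API)."""

    def __init__(self):
        self.variables = []
        self.cuts = {}

    def add_variable(self, name):
        self.variables.append(name)

    def book_mva(self, tag, weight_file):
        """Configure a method entirely from its weight file."""
        root = ET.parse(weight_file).getroot()
        self.cuts[tag] = {c.get("var"): (float(c.get("value")), int(c.get("sign")))
                          for c in root.findall("Cut")}

    def evaluate_mva(self, tag, event):
        """Response: fraction of variables on the signal side of their cut (0 to 1)."""
        passed = 0
        for var, x in zip(self.variables, event):
            value, sign = self.cuts[tag][var]
            passed += int(sign * (x - value) > 0)
        return passed / len(self.variables)

# Toy samples of known type, each event given as (x, y).
signal = [(2.0, 5.0), (2.4, 4.6), (1.8, 5.2)]
background = [(0.0, 9.0), (0.3, 8.5), (-0.2, 9.4)]

with tempfile.TemporaryDirectory() as tmp:
    factory = ToyFactory()              # training phase
    for v in ("x", "y"):
        factory.add_variable(v)
    factory.book_method("ToyCuts")
    weight_files = factory.train_all(signal, background, tmp)

    reader = ToyReader()                # application phase
    for v in ("x", "y"):
        reader.add_variable(v)
    reader.book_mva("ToyCuts", weight_files["ToyCuts"])
    unknown_events = [(2.1, 5.0), (0.1, 9.0)]
    responses = [reader.evaluate_mva("ToyCuts", ev) for ev in unknown_events]

print(responses)  # [1.0, 0.0]
```

The point of the design is that the application phase needs nothing from the training phase except the weight file, which is what allows a trained method to be reused on new data sets.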

In HEP, data analysis tasks typically include (i) classification (the process of assigning objects or events to one of the possible discrete classes), (ii) parameter estimation (extraction and measurement of track parameters, vertices, and physical parameters such as production cross sections, branching ratios, and masses) and (iii) function fitting. Classification of objects or events is, by far, the most important analysis task in HEP; examples include the identification of Electrons, Photons, Muons, Tau-leptons, etc. and the discrimination of signal events from those arising from background processes [81]. Optimal discrimination between classes is crucial to obtain signal-enhanced samples for precision physics measurements. In HEP data analysis, MVA techniques are mainly used for signal-to-background discrimination, variable selection (finding the variables that give the maximum signal/background discrimination), finding regions of interest in the data and measuring parameters (regression). MVA algorithms can also be divided into two main classes: Supervised Training, where a set of training events with correct outputs is given, and Unsupervised Training, where no outputs are given and the MVA algorithm has to find them.

Boosted Decision Tree (BDT) analysis is a machine learning technique. Let’s first start with a Decision Tree (DT), which is a binary tree-structured classifier with two kinds of terminal nodes called leaves: the Signal “S” leaf and the Background “B” leaf. A DT classifies an event by following a sequence of decisions that depend on the event’s variable content until it reaches an S or B leaf node. Repeated “Pass/Fail” decisions are taken on one single variable at a time until a stop criterion is fulfilled [83]. The “tree” essentially provides a flowchart describing how to classify an observation. We start at the root of the tree, and the leaf in which the event ends up determines the classification, as shown in Fig. 17.

Shows a schematic view of a decision tree. Starting from the root node, a sequence of binary splits using the discriminating variables \(x_{i}\) is applied to the data. Each split uses the variable that gives the best separation between signal and background. The leaf nodes of the tree are labeled “S” for signal and “B” for background depending on the majority of events that end up in the respective nodes [83]

Decision trees are robust in many dimensions, but a single tree needs a boost, hence the name Boosted Decision Tree (BDT). Boosting extends a decision (regression) tree from one tree to several trees, which form a forest of decision trees, and an event is classified by the majority outcome of the classifications made by each tree in the forest [83]. In a BDT, the training (building) of a decision tree starts with the root node and is the process that defines the splitting criterion for each node: an initial splitting criterion is determined for the full training sample. The split results in two subsets of training events, each of which goes through the same algorithm to determine the next split, and this is repeated until the whole tree is built [83]. At each node, the split is determined by finding the variable and corresponding cut value that provide the best separation between signal and background. Node splitting stops once a node reaches the minimum number of events, which is specified by the person performing the data analysis. The leaf nodes are classified as signal or background according to the class to which the majority of their events belong.
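The node-splitting step can be illustrated with a minimal sketch. It assumes the Gini index \(p(1-p)\) as the separation measure, which is one common choice and not necessarily the one used in the analysis described here; the function names and the toy events are hypothetical.

```python
def gini(n_sig, n_bkg):
    """Gini index p(1-p), with p the signal purity of the node."""
    total = n_sig + n_bkg
    if total == 0:
        return 0.0
    p = n_sig / total
    return p * (1.0 - p)


def best_split(events, labels):
    """Return (variable index, cut value, gain) of the best single-variable cut.

    events: list of feature tuples; labels: 1 for signal, 0 for background.
    """
    n = len(events)
    n_sig = sum(labels)
    parent = gini(n_sig, n - n_sig)
    best = (None, None, 0.0)
    for var in range(len(events[0])):
        values = sorted(set(e[var] for e in events))
        # Candidate cuts lie halfway between neighbouring observed values.
        for lo, hi in zip(values, values[1:]):
            cut = 0.5 * (lo + hi)
            left = [y for e, y in zip(events, labels) if e[var] < cut]
            right = [y for e, y in zip(events, labels) if e[var] >= cut]
            # Daughter indices are weighted by their fraction of events.
            children = (len(left) * gini(sum(left), len(left) - sum(left))
                        + len(right) * gini(sum(right), len(right) - sum(right))) / n
            gain = parent - children
            if gain > best[2]:
                best = (var, cut, gain)
    return best


# Toy sample: the first variable separates the classes, the second does not.
events = [(0.1, 5.0), (0.4, 1.0), (0.35, 4.0), (0.8, 2.0), (0.9, 6.0), (0.7, 3.0)]
labels = [0, 0, 0, 1, 1, 1]
var, cut, gain = best_split(events, labels)
```

Building a full tree amounts to applying `best_split` recursively to the two resulting subsets until a node holds fewer than the chosen minimum number of events.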

All trees in the forest are derived from the same training sample, with the events being subsequently subjected to so-called boosting, a procedure which modifies their weights in the sample. In a BDT, the phase space is split into many regions that are classified as either signal or background, depending on the majority of training events that end up in the final leaf node [83]. Boosting is done by re-weighting events so that a higher weight is assigned to misclassified events and a lower weight to correctly classified events; the final classifier is a weighted average of the individual decision trees. Boosting thus increases the statistical stability of the classifier and also improves the separation performance compared to a single decision tree [83]. After each re-weighting, a new tree is trained on the re-weighted sample, and this is repeated for the given number of trees; data are then classified by a weighted vote of the resulting classifiers. Boosting stabilizes the response of the decision trees with respect to fluctuations in the training sample and is able to considerably enhance the performance with respect to a single tree [83]. With the BDT technique, we are able to detect signals whose morphologies differ from those used in the classifier training, and to improve on the false signal probabilities.
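The re-weighting and weighted vote can be sketched as follows. The sketch assumes an AdaBoost-style update and uses one-cut "stumps" on a single variable in place of full decision trees; both are simplifications chosen for illustration, and all names and data are hypothetical.

```python
import math


def train_stump(xs, ys, weights):
    """Find the 1D cut and orientation with the smallest weighted error.

    ys are +1 (signal) or -1 (background).
    """
    best = None
    values = sorted(set(xs))
    cuts = [values[0] - 1.0] + [0.5 * (a + b) for a, b in zip(values, values[1:])]
    for cut in cuts:
        for sign in (+1, -1):
            preds = [sign if x > cut else -sign for x in xs]
            err = sum(w for w, p, y in zip(weights, preds, ys) if p != y)
            if best is None or err < best[0]:
                best = (err, cut, sign)
    return best


def adaboost(xs, ys, n_trees):
    """Return a list of (alpha, cut, sign) stumps trained with event re-weighting."""
    n = len(xs)
    weights = [1.0 / n] * n
    forest = []
    for _ in range(n_trees):
        err, cut, sign = train_stump(xs, ys, weights)
        err = min(max(err, 1e-10), 1.0 - 1e-10)  # guard against log(0)
        alpha = 0.5 * math.log((1.0 - err) / err)
        forest.append((alpha, cut, sign))
        # Misclassified events get a larger weight, correct ones a smaller weight.
        for i, (x, y) in enumerate(zip(xs, ys)):
            pred = sign if x > cut else -sign
            weights[i] *= math.exp(-alpha * y * pred)
        total = sum(weights)
        weights = [w / total for w in weights]
    return forest


def response(forest, x):
    """Weighted vote of all stumps, normalised to lie in [-1, 1]."""
    vote = sum(alpha * (sign if x > cut else -sign) for alpha, cut, sign in forest)
    return vote / sum(alpha for alpha, _, _ in forest)


# Signal sits in the middle of the range, so no single cut can separate it.
xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
ys = [-1, -1, +1, +1, -1, -1]
forest = adaboost(xs, ys, n_trees=3)
predictions = [1 if response(forest, x) > 0 else -1 for x in xs]
```

In this toy case no single stump classifies every event correctly, but the weighted vote of three stumps does, which is the gain over a single classifier that the paragraph above describes.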

A simple illustration of a BDT is shown in Fig. 18 [81]: (a) shows a BDT characterized by two feature variables \(x_{1}\) and \(x_{2}\), and (b) shows the resulting partition of the 2D feature space.

a shows a simple illustration of a BDT characterized by two variables \(x_{1}\) and \(x_{2}\) and b shows an illustration of the corresponding partitions of the 2D feature space [81]

BDT is especially useful for recovering events that fail the selection criteria in a traditional data analysis. In the BDT technique, for each variable, we first have to find the splitting value (called a “cut”) with the best separation between two “entities”: mostly signal (signal-like) in one, and mostly background (background-like) in the other, as shown in Fig. 19a and b. Each terminal leaf node is assigned a \(Purity\; Value = S/(S+B)\). The BDT output for each event is derived from the purity of the leaves it reaches, and is closer to −1 for background and closer to 1 for signal. The outputs of all trees in the BDT are combined into a “score”. Once a variable (data parameter) is selected, it is split at the value with the best separation to produce two “branches”. This process is repeated recursively on each node, and ends when there is no longer an improvement in the signal efficiency or when too few events are left. During the data analysis phase, we need to display this BDT plot of the datasets in high resolution using the full set of display-tiles so that we can accurately determine the splitting (“cut”) value that best separates the signal events from the background events. In that regard, high resolution visualization of the BDT plot is a critical task during data analysis.
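As a small worked example of the leaf purity and the combined score, the sketch below computes \(S/(S+B)\) for the leaves that one event reaches in three trees and averages the results. The linear map \(2p-1\) from purity to the range −1 to 1 and the unweighted average are assumptions made for illustration; the function names and the leaf counts are hypothetical.

```python
def leaf_purity(n_signal, n_background):
    """Purity S / (S + B) of a terminal node, between 0 and 1."""
    return n_signal / (n_signal + n_background)


def purity_to_output(purity):
    """Assumed linear map from purity in [0, 1] to an output in [-1, 1]."""
    return 2.0 * purity - 1.0


def forest_score(leaf_counts):
    """Average the per-tree outputs for one event into a single score.

    leaf_counts holds, for each tree, the (S, B) training counts of the leaf
    that the event lands in.
    """
    outputs = [purity_to_output(leaf_purity(s, b)) for s, b in leaf_counts]
    return sum(outputs) / len(outputs)


# One event passed through three hypothetical trees.
score = forest_score([(30, 10), (45, 5), (12, 12)])
```

Here the three leaf purities are 0.75, 0.9 and 0.5, so the event receives a positive, signal-like score.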

a shows a BDT plot for classifier output distributions for signal and background events from a Data sample using the BDT technique [83]. b Shows another example of a BDT plot which shows the optimal “cut” value to separate the signal-like events that have positive cut values from the background-like events which have a negative cut value as determined by the BDT technique [83]

By TMVA convention, signal events have large classifier output values, while background events have small classifier output values. Hence, retaining the events with a classifier output larger than the cut value selects signal samples with efficiencies that decrease with the cut value, while the significance of the signal increases. Since the splitting criterion is always a cut on a single variable, the training procedure selects the variable and cut value that optimize the increase in the separation index between the parent node and the sum of the indices of the two daughter nodes, weighted by their relative fraction of events [83]. In principle, the splitting could continue until each leaf node contains only signal or only background events.
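The trade-off involved in choosing the cut on the classifier output can be made concrete with a short scan. The sketch assumes unweighted events and uses \(S/\sqrt{S+B}\) as the figure of merit, which is one common choice and an assumption here; the score lists are hypothetical.

```python
import math


def scan_cuts(signal_scores, background_scores, cuts):
    """For each cut, keep events with score > cut and report the selection quality.

    Returns a list of (cut, signal efficiency, purity, significance) tuples, where
    purity = S/(S+B) and significance = S/sqrt(S+B) for the selected events.
    """
    results = []
    for cut in cuts:
        s = sum(1 for score in signal_scores if score > cut)
        b = sum(1 for score in background_scores if score > cut)
        efficiency = s / len(signal_scores)
        purity = s / (s + b) if s + b > 0 else 0.0
        significance = s / math.sqrt(s + b) if s + b > 0 else 0.0
        results.append((cut, efficiency, purity, significance))
    return results


# Hypothetical classifier outputs in [-1, 1]; signal peaks high, background low.
signal_scores = [0.9, 0.7, 0.6, 0.4, 0.2, -0.1]
background_scores = [-0.9, -0.8, -0.6, -0.5, -0.4, -0.3, -0.2, 0.0, 0.1, 0.3]
results = scan_cuts(signal_scores, background_scores, cuts=[-0.5, 0.0, 0.5])
best_cut = max(results, key=lambda r: r[3])[0]
```

In this toy sample the signal efficiency falls and the purity rises as the cut is tightened, and the assumed figure of merit is largest at the intermediate cut, so the working point is a compromise between the two.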

BDT is used for signal optimization in our data analysis to determine the optimal filter parameters in order to efficiently extract signal events from the plethora of complex and heterogeneous background events in LHC datasets by improving the Signal to Background ratio. BDT allows us to study the complicated patterns and parameters in the datasets and make intelligent decisions about the signal extraction by finding new ways to tune and to combine classifiers for signal optimization and gain in performance.

In addition to the recently discovered Higgs Boson, according to the Minimal Supersymmetric Standard Model (MSSM) [93,94,95,96], there ought to also be a charged Higgs Boson. Finding the charged Higgs Boson would be an enormous scientific breakthrough. Undoubtedly, the observation of the charged Higgs Boson would indicate physics beyond the Standard Model and provide pathways to new frontiers in HEP. The MSSM models predict that the charged Higgs Boson will decay into a Tau Lepton and a Tau-Neutrino as long as its mass is less than the mass of the Top quark. The data analysis for the charged Higgs Boson is immensely challenging, since the LHC produces a large number of Top quark events, which form a large background for the charged Higgs Boson search. Sophisticated MVA techniques such as Boosted Decision Trees (BDT) are therefore needed to separate signal from background by maximizing the signal acceptance. Figure 20a and b show the event topology of the charged Higgs Boson, and Fig. 20c and d show the corresponding event topology of the Top quark decay, which mimics the charged Higgs Boson signal; such decays are therefore background events to the charged Higgs Boson search. The main difference between the data sample in the BDT plot in Fig. 19 and a data sample in the BDT plot of a charged Higgs Boson is that the background events will be overwhelmingly numerous while the signal events will be very few.

Another example of the usefulness of the Hiperwall display system is the search for the lightest Supersymmetric (SUSY) particles (LSPs) [97,98,99,100,101,102,103,104,105,106,107,108,109,110,111], such as the Neutralinos and the Charginos; Supersymmetry remedies many of the shortcomings of the Standard Model in HEP involving the instability of the mass of the Higgs Boson. Fig. 21a shows a Top quark decay at the LHC, whose visualization characteristics are strikingly similar to those of the lightest SUSY particles (LSPs), the Neutralinos and the Charginos, which are depicted by the subtle purple and orange dots (for visualization purposes), respectively, in Fig. 21b. The Top quark decay depicted in Fig. 21a would be an overwhelming background when searching for these very rare SUSY particles, the Neutralinos and the Charginos, in the LHC data. Such complex data visualization studies for this search require a high resolution visualization system like Hiperwall.

a shows the visualization characteristics of the Top quark decay at the LHC and b shows the visualization characteristics of the lightest SUSY particles (LSPs), the Neutralinos and the Charginos, as depicted by the subtle purple and orange dots, respectively. The Neutralino and Chargino signal events depicted in b need to be extracted from the background Top quark decay events using the BDT Multivariate Analysis (MVA) technique. Image courtesy ATLAS Collaboration at CERN

Another example of the usefulness of the BDT technique is the separation of the different hadronic jets that are produced from the Top quark (t-quark) and other subatomic particles. Here we need to separate the hadronic jets that come from the Up, Down and Strange quarks (u, d, and s quarks), called the “light-jets”, from the hadronic jets that come from the heavy quarks, the Beauty and the Charm quarks (b and c quarks), which is a very challenging task. We use a BDT-based technique called b-tagging [112,113,114] to identify a jet associated with a b-quark by reconstructing the decay vertex of a B-hadron within the jet. It turns out that the b-quarks form B-hadrons that result in b-jets, which then decay into lighter hadrons that result in the light-jets. This complex task of identifying b-jets in the presence of pile-up is a major visualization challenge in ATLAS. Since decays of the Top quark, the Higgs Boson, and SUSY particles involve decays to b-quarks, we need to tag the jets that come from a b-quark and separate them from all other background tracks and light-jets. The identification of b-jets originating from b-quarks is an effective way to reject background events. Figure 21a and b show a sketch of two Top quark decay channels in which the b-jets are tagged and distinguished from the other jets using the BDT-based b-tagging technique. Figure 22a and b show ATLAS Event Displays of subatomic particles that have produced different jets (shown in different colors), which have been tagged using the BDT-based b-tagging technique. Figure 23 shows a 3D visualization using the CAMELIA toolkit, displayed on the Hiperwall, of a subatomic particle decaying into several hadronic jets and leptons inside the ATLAS detector, and Fig. 24 shows a zoomed 3D visualization using the CAMELIA toolkit, displayed on the Hiperwall, of a subatomic particle decaying into several hadronic jets and leptons in the presence of large background events inside the ATLAS detector.

Collaborative research and learning environment using Hiperwall

As an extension of the desktop display environment, tiled displays are still an emerging technology for constructing custom-made visualization environments capable of displaying high-resolution images and visualizing complex data. Tiled display technologies offer a wide range of opportunities for exploring scalable large displays for high-resolution scientific visualization. A high-resolution display wall can show details much more clearly than a low-resolution display of the same physical size, which can be critical in decision making and scientific discovery. These next-generation tiled-display walls are becoming increasingly affordable for building visualization display environments.

The implementation of the Hiperwall visualization system has facilitated an advanced visualization and data analysis environment beyond what is typically possible on desktop systems. On a single desktop monitor, we are limited by the resolution of a single display. The total viewing space (area) of the Hiperwall display wall is over 30 times larger than that of a 24″ desktop monitor, and the total resolution of the Hiperwall display wall is eight times that of a typical 24″ desktop monitor. At BU, the Hiperwall visualization system has made a significant impact and has indeed created an inspiring vision of the future of interactive visualization studies.
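The area and resolution comparison can be checked with a short calculation. The wall dimensions (16 ft by 4.5 ft) and the tile count (eight) are taken from the text; the 16:9 aspect ratio and the 1920 by 1080 pixel resolution of the reference 24″ monitor are assumptions, since the text does not specify them.

```python
import math

# Wall dimensions and tile count are from the text; the monitor figures are assumptions.
wall_width_in = 16 * 12       # 16 ft in inches
wall_height_in = 4.5 * 12     # 4.5 ft in inches
n_tiles = 8

monitor_diagonal_in = 24
aspect_w, aspect_h = 16, 9    # assumed aspect ratio
monitor_pixels = 1920 * 1080  # assumed Full HD resolution

# Convert the diagonal into width and height using the aspect ratio.
diag_units = math.hypot(aspect_w, aspect_h)
monitor_width_in = monitor_diagonal_in * aspect_w / diag_units
monitor_height_in = monitor_diagonal_in * aspect_h / diag_units

area_ratio = (wall_width_in * wall_height_in) / (monitor_width_in * monitor_height_in)
wall_megapixels = n_tiles * monitor_pixels / 1e6
```

Under these assumptions the area ratio comes out at about 42, consistent with "over 30 times", and eight Full HD tiles give about 16.6 MP, close to the quoted total resolution of 16.5 MP.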