Abstract

Background

Maintaining an up-to-date record of the number, type, location, and condition of high-quantity, low-cost roadway assets such as traffic signs is critical to transportation inventory management systems. While databases such as Google Street View contain street-level images of all traffic signs and are updated regularly, their potential for creating inventory databases has not been fully explored. The key benefit of such databases is that once traffic signs are detected, their geographic coordinates can also be derived and visualized within the same platform.

Methods

By leveraging Google Street View images, this paper presents a new system for creating inventories of traffic signs. Using a computer vision method, traffic signs are detected and classified into four categories (regulatory, warning, stop, and yield signs) by processing images extracted from the Google Street View API. Considering the discriminative classification scores from all images that see a sign, the most probable location of each traffic sign is derived and shown on Google Maps using a dynamic heat map. A data card containing the location and type of each detected traffic sign is also created. Finally, several data mining interfaces are introduced that allow for better management of traffic sign inventories.

Results

The experiments, conducted on 6.2 miles of the I-57 and I-74 interstate highways in the U.S. with an average sign classification accuracy of 94.63 %, show the potential of the method to provide quick, inexpensive, and automatic access to asset inventory information.

Conclusions

Given the reliable performance shown in the experiments, and because collecting information from Google Street View imagery is cost-effective, the proposed method has the potential to deliver inventory information on traffic signs in a timely fashion and to tie into existing DOT inventory management systems. Such spatio-temporal representations provide DOTs with information on how different types of traffic signs degrade over time, and they also provide condition information useful for planning sign replacement.


Background

The fast pace of deterioration in existing infrastructure systems and the limited funding available have motivated U.S. Departments of Transportation (DOTs) to prioritize the rehabilitation or replacement of roadway assets based on their condition. For bridge and pavement assets, which are high-cost and low-quantity assets, many state DOTs have already established asset management systems to track their inventory and condition (Golparvar-Fard et al. 2012). For traffic assets, however, most state DOTs do not have good statewide inventory and condition information, because the traditional methods of collecting asset information are cost prohibitive and offset the benefit of having such information.

Replacing each sign rated as poor in the U.S. can cost as much as $75 (TRIP 2014; Moeur 2014). Under current practices, DOTs can at best choose costly alternatives such as completely replacing the signs in a traffic zone or road section, without carefully filtering out those that can still serve for a few more years. The need to prioritize the replacement of existing traffic signs and the increasing demand for installing new ones require DOTs to identify cost-effective methods that can efficiently and accurately track the total number, type, condition, and geographic location of every traffic sign.

To address the growing need for complete inventories, many state and local agencies have proactively looked into videotaping roadway assets using inspection vehicles equipped with high-resolution cameras and GPS (Global Positioning System). Roadway videos provide accurate visual information on the inventory and condition of high-quantity, low-cost roadway assets. Sitting in front of the screens, practitioners can visually detect the assets and assess their condition based on their own experience and a condition assessment handbook, while location information is extracted from the GPS tags of the images. Nevertheless, because manual assessment is expensive, the number of inspections with these vehicles is very limited. This results in a one-year survey cycle for critical roadways and many years of complete neglect for all other local and regional roads. The high volume of data that must be analyzed manually also inevitably affects the quality of the analysis. Hence, many critical decisions are made based on inaccurate or incomplete information, which ultimately affects the asset maintenance and rehabilitation process. If implemented widely and repeatedly, such accurate and safe video-based data collection and analysis methods can streamline data collection and yield significant cost savings for the DOTs (Hassanain et al. 2003; Rasdorf et al. 2009).

The limitations associated with manually inventorying and maintaining records of roadway assets from videos have motivated the development of automated computer vision methods. These methods (Balali and Golparvar-Fard 2015c; Z. Hu and Tsai 2011; Huang et al. 2012) have the potential to improve the quality of current inspection processes that rely on these large volumes of visual data (Balali et al. 2013). Yet two key issues remain open challenges:

(1) Capturing a comprehensive record is still not feasible, because current video-based inventory data collection methods typically do not cover local roadways and are not frequently updated.

(2) Training computer vision methods requires large datasets of relevant traffic sign images, which are not available. Because current methods have high false positive and miss rates, condition assessment is still conducted manually on roadway videos.

Today, several online services collect street-level panoramic images on a truly massive scale. Examples include Google Street View, Microsoft Streetside, Mapjack, EveryScape, and Cyclomedia GlobeSpotter. These databases make automated surveying of traffic signs possible (Balali et al. 2015; I. Creusen and Hazelhoff 2012), which addresses the current problems. In particular, using Google Street View images can reduce the number of redundant enterprise information systems that collect and manage traffic inventories. Applying computer vision methods to these large image collections has the potential to create the necessary inventories more efficiently. However, beyond changes in illumination, clutter/occlusions, and varying positions and orientations, intra-class variability can make automated traffic sign detection and classification difficult.

Using these emerging and frequently updated Google Street View images, this paper presents an end-to-end system that detects and classifies traffic signs and maps their locations, together with their types, on Google Maps. The proposed system has three key components: 1) an API (Application Programming Interface) that extracts location information using the Google Street View platform, 2) a computer vision method capable of detecting and classifying multiple classes of traffic signs, and 3) a data mining method that characterizes the data attributes related to clusters of traffic signs. In simple terms, the system outsources the task of data collection. In return, it provides accurate geo-spatial localization of traffic signs along with useful information such as roadway number, city, state, zip code, and sign type, and it visualizes these results on Google Maps. It also provides automated inventory queries, allowing professionals to spend less time searching for traffic signs and more time on the more important task of monitoring existing conditions. In the following, related work on traffic sign inventory management is briefly reviewed. Next, the algorithms for predicting traffic sign patterns and constructing heat maps are presented in detail. The developed system can be found at http://signvisu.azurewebsites.net/, and a companion video (Additional file 1) is also provided with the online version of this manuscript.

Related work

To date, state DOTs and local agencies in the U.S. have used a variety of roadway inventory methods. These methods vary in cost, equipment type, and the time required for data collection and data reduction, and they can be grouped into the four categories shown in Table 1.

A nationwide survey was recently conducted by the California Department of Transportation (Caltrans) to investigate the popularity of these methods among practitioners (Ravani et al. 2009). The results show that the integrated GPS/GIS mapping method is considered the best short-term solution, whereas remote sensing methods such as satellite imagery and photo/video logs were indicated as the most attractive long-term solutions. The report also emphasizes that there is no one-size-fits-all approach to asset data collection. Instead, the most appropriate approach depends on an agency's needs and culture as well as the availability of economic, technological, and human resources. Several studies (Balali and Golparvar-Fard 2015b; de la Garza et al. 2010; Haas and Hensing 2005; Jalayer et al. 2013) have shown that the usefulness of a particular inventory technique depends on the type of features to be collected, such as location, sign type, spatial measurement, and visual measurement of material properties. As shown in Table 2, in all these cases the data is still collected and analyzed manually, so inventory databases cannot be quickly or frequently updated.

Computer vision methods for traffic sign detection and classification

In recent years, several vision-based driver assistance systems capable of sign detection and classification (on a limited basis) have become commercially available. Nevertheless, these systems do not use Google Street View images for traffic sign detection and classification. This is because they must perform in real time and therefore rely on methods that require high frame rates, such as optical flow or edge detection. These methods are not applicable to the relatively coarse temporal resolution of Google Street View images (Salmen et al. 2012). Several recent studies have shown that Histograms of Oriented Gradients (HOG) and Haar wavelets could be more accurate alternatives for characterizing traffic signs in street-level images (Hoferlin and Zimmermann 2009; Ruta et al. 2007; Wu and Tsai 2006). For example, (Z. Hu and Tsai 2011; Prisacariu et al. 2010) characterize signs by combining edge and Haar-like features, and (Houben et al. 2013; Mathias et al. 2013; Overett et al. 2014) leverage HOG features. More recent studies such as (Balali and Golparvar-Fard 2015a; I. M. Creusen et al. 2010) augment HOG features with color histograms to leverage both texture/pattern and color information for sign characterization. The choice of machine learning method for sign classification is constrained by the choice of features. Cascaded classifiers are traditionally used with Haar-like features (Balali and Golparvar-Fard 2014; Prisacariu et al. 2010). Support Vector Machines (SVM) (I. M. Creusen et al. 2010; Jahangiri and Rakha 2014; Xie et al. 2009), neural networks, and cascaded classifiers trained with some type of boosting (Balali and Golparvar-Fard 2015a; Overett et al. 2014; Pettersson et al. 2008) are used for classification of traffic signs.

(Balali and Golparvar-Fard 2015a) benchmarked and compared the performance of the most relevant methods. Using a large visual dataset of traffic signs and their ground truth, they showed that the joint representation of texture and color in HOG + Color histograms with multiple linear SVM classifiers gives the best performance for classifying multiple categories of traffic signs. As a result, HOG + Color with a linear SVM classifier is used in this paper. We briefly describe this method and our modifications in the Method section. More detailed information on available techniques can be found in (Balali and Golparvar-Fard 2015a). One open issue is the scalability of these methods. Unlike the state of the art, we make no assumption about the location of traffic signs in the 2D image. Instead, candidates for traffic signs are detected in 2D Google Street View images by sliding a window of fixed aspect ratio at multiple scales. A key benefit is that the detection and classification results from multiple overlapping Google Street View images can be used to improve detection accuracy.

Data mining and visualization for roadway inventory management systems

In recent years, many data mining and visualization methods have been developed to analyze and map spatial data at multiple scales for roadway inventory management (Ashouri Rad and Rahmandad 2013). Examples include predicting travel time (Nakata and Takeuchi 2004), managing traffic signals (Zamani et al. 2010), detecting traffic incidents (Jin et al. 2006), analyzing traffic accident frequency (Beshah and Hill 2010; Chang and Chen 2005), and integrated systems for intelligent analysis of traffic information (Hauser and Scherer 2001; Kianfar and Edara 2013; Y.-J. Wang et al. 2009). (Li and Su 2014) developed a dynamic sign maintenance information system using a Mobile Mapping System (MMS) for data collection. (Mogelmose et al. 2012) discussed the application of traffic sign analysis in intelligent driver assistance systems. (De la Escalera et al. 2003) also detected and classified traffic signs for intelligent vehicles. Using these tools, it is now possible to mine spatial data at multiple layers (e.g., CartoDB) (de la Torre 2013) or spatial and other data together (e.g., GeoTime for analyzing spatio-temporal data) (Kapler and Wright 2005). (I. Creusen and Hazelhoff 2012) visualized detected traffic signs on a 3D map based on the GPS positions of the images. (Zhang and Pazner 2004) presented an icon-based visualization technique for co-visualizing multiple layers of geospatial information. A common problem in visualization is that these methods require adding a large number of markers to a map. This creates usability issues and degrades map performance, because a map crammed with markers is hard to make sense of (Svennerberg 2010).

In summary, the current methods of data collection and analysis are field inventory methods, photo/video logs, integrated GPS/GIS mapping systems, and aerial/satellite photography. Their usefulness depends on the type of features to be collected (e.g., location, sign type, spatial measurement, and visual measurement of material properties). In all of them, the data is still collected and analyzed manually, so inventory databases cannot be quickly or frequently updated. Moreover, methods for detecting, classifying, and localizing U.S. traffic signs in Google Street View images have not been validated before. Overall, there is a lack of automation in integrating data collection, analysis, and representation. In particular, creating and frequently updating traffic sign databases, techniques for mining them, and spatio-temporal interaction with this data still require further research. In the following, a new system is introduced that has the potential to address these limitations.

Method

This paper presents a new system for creating and mapping inventories of traffic signs using Google Street View images. As shown in Fig. 1, the system does not require additional field data collection beyond the availability of Google Street View images. Rather by processing images extracted from Google Street View API using a computer vision method, traffic signs are detected and categorized into four categories of regulatory, warning, stop, and yield signs. The most probable location of each detected traffic sign is also visualized using heat maps on Google Earth. Several data mining interfaces are also provided that allow for better management of the traffic sign inventories. The key components of the system are presented in the following:

Extracting location information using Google Street View API

To detect and classify traffic signs in a region of interest, it is important to extract street view images from a driver's perspective so that the traffic signs are as visible as possible. To do so, the user of the system provides the latitude and longitude of the road of interest. The system takes this information as input and queries Google Street View images through HTTP (Hypertext Transfer Protocol) requests to the Google Maps static API, at a spatial frequency of one image per 10 m. Since the exact geo-spatial coordinates of the Street View images are unknown, the starting coordinates are incremented in a grid pattern to ensure that the area of interest is fully examined. Once the query is placed, the Google Directions API returns navigation data in JSON (JavaScript Object Notation) format. This data is parsed to extract the polylines that represent the motion trajectory of the cars used to capture the images. The polylines are further parsed to extract the coordinates of the points that define them along the road of interest. The forward-looking direction of the Google vehicle is obtained by adding 90° to the azimuth angle between each pair of adjacent points. This parsing strategy identifies the direction of motion on straight and curved roads as well as on ramps, loops, and roundabouts. These coordinates and directions are finally fed into the developed API to extract the Street View images at the best locations and orientations. Figure 2 shows the pseudocode for deriving the viewing angles for each set of locations, where atan2 returns the viewing direction θ at location (x, y) in the range [−π, π].
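The trajectory-parsing step can be sketched in Python as follows. The sketch assumes that the Directions API response carries Google's standard encoded-polyline strings (for example, the `overview_polyline.points` field) and decodes them with the published polyline algorithm. It then computes the heading as the initial great-circle bearing between adjacent points, measured clockwise from north, which is the convention of the Street View `heading` parameter. The function names are hypothetical. The angle convention is also an assumption: Fig. 2 instead adds 90° to an atan2 angle, which matches this result only for a particular choice of axes and rotation direction. Densifying the points to one viewpoint per 10 m is omitted.

```python
import math


def decode_polyline(encoded: str) -> list[tuple[float, float]]:
    """Decode a Google encoded polyline into (lat, lng) pairs at 1e-5 precision."""
    coords, index, lat, lng = [], 0, 0, 0
    while index < len(encoded):
        deltas = []
        for _ in range(2):  # a latitude delta followed by a longitude delta
            result, shift = 0, 0
            while True:
                b = ord(encoded[index]) - 63
                index += 1
                result |= (b & 0x1F) << shift
                shift += 5
                if b < 0x20:  # continuation bit not set: this value is complete
                    break
            # Undo the zig-zag encoding of signed values.
            deltas.append(~(result >> 1) if result & 1 else result >> 1)
        lat += deltas[0]
        lng += deltas[1]
        coords.append((lat / 1e5, lng / 1e5))
    return coords


def bearing_deg(p: tuple[float, float], q: tuple[float, float]) -> float:
    """Initial bearing from p to q in degrees clockwise from north, in [0, 360)."""
    lat1, lng1, lat2, lng2 = map(math.radians, (*p, *q))
    dlng = lng2 - lng1
    x = math.sin(dlng) * math.cos(lat2)
    y = math.cos(lat1) * math.sin(lat2) - math.sin(lat1) * math.cos(lat2) * math.cos(dlng)
    theta = math.atan2(x, y)  # radians in [-pi, pi]
    return (math.degrees(theta) + 360.0) % 360.0


def viewpoints(encoded: str) -> list[tuple[float, float, float]]:
    """(lat, lng, forward heading) for every polyline vertex except the last."""
    pts = decode_polyline(encoded)
    return [(*pts[i], bearing_deg(pts[i], pts[i + 1])) for i in range(len(pts) - 1)]
```

Each returned triple can then be passed to the image request as the `location` and `heading` of one view.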

This API is defined by the URL (Uniform Resource Locator) parameters listed in Table 3. As shown in Fig. 3, these parameters are sent in a standardized HTTP request that links to a static (non-interactive) image in the Google database. While looping through the parameters of interest, the code generates a string matching the HTTP request format of the Google Street View API. Once this unique string is created, the urlretrieve function is used to download the desired Google Street View image.
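A minimal Python sketch of the request-building loop is shown below. It assumes that the parameters in Table 3 correspond to the documented Street View Static API parameters `size`, `location`, `heading`, `fov`, `pitch`, and `key`. The endpoint is the public one. The default values and the `YOUR_API_KEY` placeholder are illustrative only, and the helper names are hypothetical. `urllib.request.urlretrieve`, the function named in the text, performs the download.

```python
from urllib.parse import urlencode
from urllib.request import urlretrieve

STREETVIEW_ENDPOINT = "https://maps.googleapis.com/maps/api/streetview"


def streetview_url(lat, lng, heading, size=(640, 640), fov=90, pitch=0, key="YOUR_API_KEY"):
    """Build one Street View Static API request URL (parameter values are illustrative)."""
    params = {
        "size": f"{size[0]}x{size[1]}",       # image width x height in pixels
        "location": f"{lat:.6f},{lng:.6f}",   # camera position
        "heading": f"{heading:.1f}",          # compass direction of the camera, degrees
        "fov": fov,                           # horizontal field of view, degrees
        "pitch": pitch,                       # up/down camera angle, degrees
        "key": key,
    }
    return f"{STREETVIEW_ENDPOINT}?{urlencode(params)}"


def download(url, path):
    """Fetch one image to disk; needs network access and a valid API key."""
    urlretrieve(url, path)
    return path
```

For example, looping over the `(lat, lng, heading)` viewpoints of a road and calling `download(streetview_url(lat, lng, h), f"img_{i}.jpg")` would reproduce the per-location download loop.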

Detection and classification of traffic signs using Google Street View images

In this paper, we use HOG + Color with a linear SVM classifier to detect and classify traffic signs, since (Balali and Golparvar-Fard 2015a) showed that this method performs best. Unlike state-of-the-art methods (Stallkamp et al. 2011; Tsai et al. 2009), we make no prior assumption about the 2D location of traffic signs in the images. Instead, a multi-scale sliding window visits every pixel of the image, and the resulting candidates in each image are passed to multiple binary discriminative classifiers that detect and classify the traffic signs. The method thus processes each image independently while aiming to keep the number of False Negatives (FN, missed traffic signs) and False Positives (FP, background regions accepted as signs) low. Each sign is assumed to be visible in at least three views. A sign detection is considered successful if the detection boxes (from the sliding windows) in three consecutive images overlap by at least 67 %. This constraint is enforced by warping the images before and after each detection using a homography transformation (Hartley and Zisserman 2003).
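The three-view acceptance rule can be sketched as follows. The text does not define how the 67 % overlap is measured. This sketch assumes intersection-over-union between a box warped into the next image and the box detected there. The homographies are taken as given (estimating them, e.g., from matched features, is outside the sketch), and all function names are hypothetical.

```python
import numpy as np


def warp_box(box, H):
    """Project an axis-aligned box (x0, y0, x1, y1) through homography H and
    return the axis-aligned bounding box of the warped corners."""
    x0, y0, x1, y1 = box
    corners = np.array([[x0, y0, 1], [x1, y0, 1], [x1, y1, 1], [x0, y1, 1]], dtype=float).T
    p = H @ corners
    p = p[:2] / p[2]  # back from homogeneous coordinates
    return (p[0].min(), p[1].min(), p[0].max(), p[1].max())


def iou(a, b):
    """Intersection-over-union of two (x0, y0, x1, y1) boxes."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def confirmed(boxes, homographies, min_overlap=0.67):
    """boxes: detections of one sign in consecutive images (e.g. three);
    homographies[k]: 3x3 matrix mapping image k to image k + 1."""
    for k in range(len(boxes) - 1):
        if iou(warp_box(boxes[k], homographies[k]), boxes[k + 1]) < min_overlap:
            return False
    return True
```

Warping the earlier box into the later image's frame means that the apparent motion and growth of the sign between views do not count against the overlap.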

Characterizing detections with histogram of oriented gradients (HOG) + color

The basic idea is that the local shape and appearance of traffic signs in a given 2D detection window can be characterized by the distribution of local intensity gradients and color. The shape properties can be captured with Histogram of Oriented Gradients (HOG) descriptors (Dalal and Triggs 2005), which create a template for each category of traffic signs. Here, HOG features are computed over all pixels of the candidate 2D template extracted from the Google Street View image by capturing local changes in image intensity. The resulting features are concatenated into one large feature vector, which serves as the HOG descriptor of the detection window. To do so, the magnitude |∇g(x, y)| and orientation θ(x, y) of the intensity gradient are calculated for each pixel within the detection window, and these orientations and magnitudes are quantized and summarized into a HOG. More precisely, each detection window is divided into dx × dy local spatial regions (cells), each containing multiple pixels. Each pixel casts a vote, weighted by its gradient magnitude, for an orientation histogram bin chosen by the orientation of the image gradient at that pixel. These votes are accumulated into n evenly spaced orientation bins over each cell (see Fig. 4). Each cell histogram is then normalized with respect to neighboring histograms, and it can be normalized multiple times with respect to different neighbors.
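The HOG computation above can be sketched in NumPy. This is a simplified illustration, not the authors' implementation. The cell size (8 px), the number of bins (n = 9, unsigned orientations), and the 2 × 2-cell L2 block normalization are assumed values, since Table 5 is not reproduced here. Each pixel votes only for its nearest bin, without the interpolation used in full HOG implementations.

```python
import numpy as np


def hog_descriptor(gray, cell=8, n_bins=9, block=2, eps=1e-6):
    """Simplified HOG for a 2-D grayscale window (e.g. 64 x 64)."""
    g = gray.astype(float)
    gx = np.zeros_like(g)
    gy = np.zeros_like(g)
    gx[:, 1:-1] = g[:, 2:] - g[:, :-2]  # centred horizontal differences
    gy[1:-1, :] = g[2:, :] - g[:-2, :]  # centred vertical differences
    mag = np.hypot(gx, gy)                            # |grad g(x, y)|
    ang = np.rad2deg(np.arctan2(gy, gx)) % 180.0      # theta(x, y), unsigned
    bins = np.minimum((ang / (180.0 / n_bins)).astype(int), n_bins - 1)

    cy, cx = g.shape[0] // cell, g.shape[1] // cell
    hist = np.zeros((cy, cx, n_bins))
    for i in range(cy):
        for j in range(cx):
            sl = (slice(i * cell, (i + 1) * cell), slice(j * cell, (j + 1) * cell))
            # Magnitude-weighted votes accumulated into n orientation bins per cell.
            hist[i, j] = np.bincount(bins[sl].ravel(), weights=mag[sl].ravel(), minlength=n_bins)

    feats = []
    for i in range(cy - block + 1):          # overlapping blocks of cells, so each
        for j in range(cx - block + 1):      # cell is normalized against several neighbourhoods
            v = hist[i:i + block, j:j + block].ravel()
            feats.append(v / np.sqrt(np.sum(v ** 2) + eps ** 2))
    return np.concatenate(feats)
```

For a 64 × 64 window, these settings give 8 × 8 cells, 7 × 7 overlapping blocks of 36 values each, and a 1764-dimensional descriptor.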

To account for scale (i.e., the distance of the signs from the camera) and to classify the testing images effectively with the HOG descriptors, a detection window slides over each image, visiting all pixels at multiple spatial scales.
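The multi-scale search can be sketched as a generator of square windows in original-image coordinates. Each window would then be resized to the 64 × 64 base template before descriptor extraction, consistent with the rescaling described later in this section. The stride of 16 px and the scale step of 1.25 are illustrative assumptions, because the paper reports only the base window size. The function name is hypothetical.

```python
def sliding_windows(width, height, base=64, stride=16, scale_step=1.25, max_size=None):
    """Yield (x, y, size) square windows covering the image at multiple scales.

    The stride grows with the window so that relative overlap stays constant.
    """
    if scale_step <= 1.0:
        raise ValueError("scale_step must be > 1")
    size = base
    max_size = max_size or min(width, height)
    while size <= max_size:
        step = max(1, round(stride * size / base))
        for y in range(0, height - size + 1, step):
            for x in range(0, width - size + 1, step):
                yield (x, y, size)
        size = max(size + 1, int(round(size * scale_step)))  # next, larger scale
```

Enlarging the window is equivalent to shrinking the image, so distant (small) and nearby (large) signs are both matched against the same 64 × 64 template.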

A histogram of local color distribution is also formed, in the same way as the HOG descriptors, to characterize the local color distribution in each traffic sign. For each template window that contains a candidate traffic sign, the image patch is divided into dx × dy non-overlapping pixel regions. A similar procedure is followed to characterize color in each cell, which yields a histogram representation of local color distributions. To minimize the effect of varying brightness across images, the hue and saturation channels are used and the value channel is ignored. The normalized hue and saturation values are histogrammed and vector-quantized. These color histograms are then concatenated with the HOG to form the HOG + Color descriptors.
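The color part of the descriptor can be sketched the same way. The sketch converts RGB to hue and saturation, drops the value (brightness) channel, and builds a normalized 2-D hue-saturation histogram per cell. The 8 × 8 cell grid, the 4 × 4 bins, and the function names are illustrative assumptions. The last function shows only the concatenation step that forms HOG + C.

```python
import numpy as np


def rgb_to_hue_sat(rgb):
    """Vectorised RGB (uint8, H x W x 3) to hue and saturation, both in [0, 1]."""
    c = rgb.astype(float) / 255.0
    r, g, b = c[..., 0], c[..., 1], c[..., 2]
    mx, mn = c.max(axis=-1), c.min(axis=-1)
    d = mx - mn
    safe_d = np.where(d > 0, d, 1.0)  # avoid division by zero on grey pixels
    h = np.where(mx == r, ((g - b) / safe_d) % 6.0, 0.0)
    h = np.where(mx == g, (b - r) / safe_d + 2.0, h)
    h = np.where(mx == b, (r - g) / safe_d + 4.0, h)
    h = np.where(d > 0, h / 6.0, 0.0)
    s = np.where(mx > 0, d / np.where(mx > 0, mx, 1.0), 0.0)
    return h, s  # the value channel (mx) is deliberately discarded


def hs_cell_histograms(rgb, cells=(8, 8), bins=(4, 4)):
    """Per-cell hue-saturation histograms, each normalised to sum to 1."""
    h, s = rgb_to_hue_sat(rgb)
    ch, cw = rgb.shape[0] // cells[0], rgb.shape[1] // cells[1]
    feats = []
    for i in range(cells[0]):
        for j in range(cells[1]):
            hh = h[i * ch:(i + 1) * ch, j * cw:(j + 1) * cw].ravel()
            ss = s[i * ch:(i + 1) * ch, j * cw:(j + 1) * cw].ravel()
            hist, _, _ = np.histogram2d(hh, ss, bins=bins, range=[[0, 1], [0, 1]])
            feats.append(hist.ravel() / max(hh.size, 1))
    return np.concatenate(feats)


def hog_plus_color(hog_vec, rgb):
    """Concatenate a precomputed HOG vector with the colour histograms."""
    return np.concatenate([hog_vec, hs_cell_histograms(rgb)])
```

For a saturated red patch, every cell puts all of its mass in the lowest-hue, highest-saturation bin, whatever the patch's brightness.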

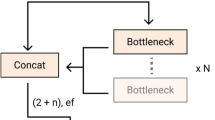

Discriminative classification of the HOG + C descriptors per detection

To identify whether a detection window at a given scale contains a traffic sign, multiple SVM classifiers are used, each classifying the detection in a one-vs.-all scheme. Each binary SVM thus decides whether or not the HOG + C descriptor belongs to one category of traffic signs. The scores of the multiple classifiers are compared to identify which category of traffic signs best represents the detection, or whether the detection is not a traffic sign at all. As with any supervised learning model, each SVM is first trained and then cross-validated, and the trained models are used to classify new data (Burges 1998).

Given $n$ labeled training data points $\{\mathbf{x}_i, l_i\}$, where $\mathbf{x}_i \in \mathbb{R}^d$ ($i = 1, 2, \dots, n$) is the $d$-dimensional HOG + C descriptor calculated from bounding box $i$ at a given scale and $l_i \in \{0, 1\}$ is the traffic sign class label (e.g., stop sign or non-stop sign), each SVM classifier learns, in the training phase, an optimal hyperplane $\mathbf{w}^{T}\mathbf{x} + b = 0$ between the positive and negative samples. No prior knowledge is assumed about the distribution of the descriptors. Hence the optimal hyperplane is the one that maximizes the geometric margin $\gamma$, shown in Equation (1):
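Equation (1) does not appear in this text. A standard hard-margin statement is given below as an assumption about what it expresses. The labels are mapped to $y_i = 2l_i - 1 \in \{-1, +1\}$ so that the usual constraints apply. Under the canonical scaling $\min_i y_i(\mathbf{w}^{T}\mathbf{x}_i + b) = 1$, the distance between the two supporting hyperplanes is $2/\lVert\mathbf{w}\rVert$, so maximizing $\gamma$ is the same as minimizing $\lVert\mathbf{w}\rVert^{2}$. Some texts call $1/\lVert\mathbf{w}\rVert$ the margin instead, which does not change the optimum.

```latex
y_i = 2l_i - 1 \in \{-1, +1\}, \qquad
\gamma = \frac{2}{\lVert \mathbf{w} \rVert}, \qquad
\max_{\mathbf{w},\, b}\ \gamma
\;\Longleftrightarrow\;
\min_{\mathbf{w},\, b}\ \tfrac{1}{2}\lVert \mathbf{w} \rVert^{2}
\quad \text{s.t.} \quad y_i\left(\mathbf{w}^{T}\mathbf{x}_i + b\right) \ge 1,\quad i = 1, \dots, n
```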

The noise, occlusions, and scene clutter typical of roadway datasets produce outliers for the SVM classifiers. Hence slack variables $\xi_i$ are introduced, and the SVM optimization problem can be written as:
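This equation is also missing from the text. The standard soft-margin form is given below, again as an assumption and with the same label mapping $y_i = 2l_i - 1$. Each slack $\xi_i$ lets sample $i$ violate the margin at a cost of $C\xi_i$. Eliminating the slacks gives the equivalent unconstrained hinge-loss objective $\tfrac{1}{2}\lVert\mathbf{w}\rVert^{2} + C\sum_i \max\bigl(0,\, 1 - y_i(\mathbf{w}^{T}\mathbf{x}_i + b)\bigr)$.

```latex
\min_{\mathbf{w},\, b,\, \boldsymbol{\xi}}\ \tfrac{1}{2}\lVert \mathbf{w} \rVert^{2} + C \sum_{i=1}^{n} \xi_i
\quad \text{s.t.} \quad y_i\left(\mathbf{w}^{T}\mathbf{x}_i + b\right) \ge 1 - \xi_i,\quad \xi_i \ge 0,\quad i = 1, \dots, n
```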

Here, $C$ is a penalty constant determined by cross-validation. As shown in Fig. 5, the inputs to the training algorithm are the training examples for the different categories of traffic signs, and the outputs are the trained models for multi-category classification of the traffic signs.

To classify the testing candidates effectively with the HOG descriptors, detection windows with a fixed aspect ratio slide over each image at multiple spatial scales. In this paper, candidates at different scales are compared by rescaling each sliding-window candidate to the spatial scale of each template traffic sign model. To detect and classify multiple categories of traffic signs, multiple independent one-against-all classifiers are used, each trained to detect one traffic sign category of interest. Once these models are learned, the candidate windows are passed to the classifiers, and the label of the classifier with the maximum classification score is returned.
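A compact sketch of one-vs.-all training and the max-score decision follows. It is not the authors' implementation. Instead of a QP solver or an SVM library, it minimizes the hinge-loss form of the soft-margin objective by full-batch subgradient descent in NumPy. The step size, the epoch count, and the background rule (no positive score means "not a sign") are illustrative assumptions, and the function names are hypothetical. In practice, C would be chosen by cross-validation, as the text states.

```python
import numpy as np


def train_linear_svm(X, y, C=1.0, epochs=200, lr=0.01):
    """Minimise 0.5*||w||^2 + C * sum(max(0, 1 - y*(X@w + b))) by full-batch
    subgradient descent; y must contain -1/+1 labels."""
    w, b = np.zeros(X.shape[1]), 0.0
    for t in range(1, epochs + 1):
        active = y * (X @ w + b) < 1  # samples inside the margin or misclassified
        grad_w = w - C * (y[active][:, None] * X[active]).sum(axis=0)
        grad_b = -C * y[active].sum()
        step = lr / np.sqrt(t)  # diminishing step size
        w -= step * grad_w
        b -= step * grad_b
    return w, b


def train_one_vs_all(X, labels, classes, **kw):
    # One binary model per category: the class's own samples are positive (+1)
    # and all other samples negative (-1), i.e. y = 2*l - 1 with l in {0, 1}.
    return {c: train_linear_svm(X, np.where(labels == c, 1.0, -1.0), **kw) for c in classes}


def classify(x, models, background="background"):
    scores = {c: float(x @ w + b) for c, (w, b) in models.items()}
    best = max(scores, key=scores.get)
    # If no binary classifier scores the window as positive, treat it as background.
    return best if scores[best] > 0 else background
```

With three well-separated clusters of 2-D "descriptors", `classify` returns each cluster's class at its center. At the origin it returns `"background"`, because each score there equals that model's negative bias.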

Mining and spatial visualization of traffic sign data

How True Positive (TP), False Positive (FP), and False Negative (FN) detections are handled while extracting traffic sign data is key to the quality of the developed inventory management system. In particular, for missing attributes (FPs and FNs), one must decide whether to exclude them from analysis. Because each sign is visible in multiple images, a sign missed (FN) in some images is expected to be detected in the following images, so the rate of FNs should be very low. In the developed visualization, the most probable location of each detection is shown on Google Maps using a heat map. A location falsely detected as a sign (FP) is unlikely to be falsely detected in multiple images, so FPs can be easily identified and filtered out. In other words, if the missing signs follow a specific pattern, their values can be predicted. The adopted strategy for dealing with FNs and FPs significantly lowers these rates (the experimental results validate this). The mechanisms for user interaction with the data are presented next.
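The multi-view filtering of FPs can be illustrated with a simple track-building rule. The association step is an assumption: detections of the same class in consecutive image indices are treated as one sign. The three-view minimum is also an assumption, borrowed from the detection criterion stated earlier. The function name is hypothetical, and two same-class signs in one image would be merged by this simplification.

```python
from itertools import groupby


def filter_by_views(detections, min_views=3, max_gap=1):
    """detections: iterable of (image_index, sign_class).

    Returns (sign_class, [image indices]) tracks seen in at least min_views
    images; shorter tracks are treated as likely false positives.
    """
    tracks = []
    ordered = sorted(detections, key=lambda d: (d[1], d[0]))
    for cls, group in groupby(ordered, key=lambda d: d[1]):
        run = []
        for idx, _ in group:
            if run and idx - run[-1] > max_gap:  # gap in the image sequence: new sign
                tracks.append((cls, run))
                run = []
            run.append(idx)
        if run:
            tracks.append((cls, run))
    return [(c, r) for c, r in tracks if len(r) >= min_views]
```

A stop sign seen in images 1 to 3 survives as one track, whereas isolated hits in single images are dropped.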

Structuring and mining comprehensive databases of detected traffic signs

To structure a comprehensive database and mine the extracted traffic sign data, a fusion table is developed. It includes the type and geo-location (latitude/longitude) of each detected traffic sign along with the corresponding image areas. Using the Google data management toolbox for fusion tables (Gonzalez et al. 2010), a user can mine the structured data on the detected traffic signs. Figure 6 presents an example of a query based on two latitude/longitude coordinates, in which the number of images containing detected regulatory and warning signs is returned and visualized to the user. Figure 7 is another example, where the analysis is performed directly on the spatial data to map the detected warning signs between two specified locations.
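Fusion tables are a hosted Google service, so the sketch below uses Python's built-in `sqlite3` as a stand-in to illustrate a query in the style of Fig. 6. For each sign type, it counts the distinct images with regulatory or warning detections inside the box spanned by two latitude/longitude corners. The schema and function names are hypothetical.

```python
import sqlite3


def build_inventory(rows):
    """rows: iterable of (sign_type, lat, lng, image_id) tuples."""
    con = sqlite3.connect(":memory:")
    con.execute("CREATE TABLE signs (sign_type TEXT, lat REAL, lng REAL, image_id TEXT)")
    con.executemany("INSERT INTO signs VALUES (?, ?, ?, ?)", rows)
    return con


def count_images_between(con, corner_a, corner_b, types=("regulatory", "warning")):
    """Count distinct images per sign type inside the lat/lng box of two corners."""
    lat_lo, lat_hi = sorted((corner_a[0], corner_b[0]))
    lng_lo, lng_hi = sorted((corner_a[1], corner_b[1]))
    placeholders = ",".join("?" * len(types))
    sql = (
        "SELECT sign_type, COUNT(DISTINCT image_id) FROM signs "
        "WHERE lat BETWEEN ? AND ? AND lng BETWEEN ? AND ? "
        f"AND sign_type IN ({placeholders}) GROUP BY sign_type ORDER BY sign_type"
    )
    return dict(con.execute(sql, (lat_lo, lat_hi, lng_lo, lng_hi, *types)).fetchall())
```

Because the corners are sorted first, the user can pick the two points in any order.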

Spatial visualization of traffic signs data

In the developed web-based platform, a Google Maps interface is used to visualize the spatial data and the relationships between different signs and their characteristics. More specifically, a dynamic ASP.NET webpage is built on the fusion table and a clustering package. It visualizes the detected signs on Google Map, Street View, and Earth by querying the needed data from the SQL database and the JSON files. A JavaScript routine syncs the Google Map interface with three other views: Google Map, Street View, and Earth (see Fig. 8). A marker is added at the derived location of each detected sign in this Google Map interface. A user can click on these markers to query the top view (Google Map view), bird's-eye view (Google Earth view), and street-level view of the detected sign in the other three frames. In the developed interface, two scenarios can occur, depending on the size of the traffic signs and the distance between consecutive images taken along the road:

Scenario 1

Each sign may appear and be detected in multiple images. To derive the most probable location of such a sign, the area of its bounding box is calculated in each of these images. The location of the image with the largest back-projected area is chosen as the most probable location of the traffic sign. This is intuitive, because the bounding box containing the sign grows as the Google vehicle gets closer to the sign.

Scenario 2

Multiple signs can be detected within a single image, so a single latitude and longitude can be assigned to multiple signs. In these situations, as in Scenario 1, the size of the bounding boxes in the images that see these signs is used to identify the most probable location of each traffic sign. To show that multiple signs are visible in one image, multiple markers are placed on the Google map.
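Both scenarios reduce to one rule: for each sign, keep the location of the image in which its bounding box is largest. Below is a minimal sketch of this rule. It assumes that each observation is already associated with a sign identifier (the association step is not shown) and that the image's GPS position stands in for the sign's location. The function name is hypothetical.

```python
def most_probable_locations(observations):
    """observations: iterable of (sign_id, image_lat, image_lng, (x0, y0, x1, y1)).

    Returns {sign_id: (lat, lng)}, taken from the image where the sign's
    bounding box has the largest area (i.e. the vehicle was closest).
    """
    best = {}
    for sign_id, lat, lng, (x0, y0, x1, y1) in observations:
        area = max(0, x1 - x0) * max(0, y1 - y0)
        if sign_id not in best or area > best[sign_id][0]:
            best[sign_id] = (area, (lat, lng))
    return {sid: loc for sid, (_, loc) in best.items()}
```

Scenario 2 follows directly. Two signs whose largest boxes come from the same image receive the same coordinates, and each still gets its own marker.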

To visualize these scenarios, the developed interface contains a static map and a dynamic map. In the static map, all detections are marked, so multiple markers are placed when several signs are close to one another. Clicking on a marker shows detailed information about the detected traffic sign: latitude/longitude, roadway number, city, state, zip code, country, sign type, and likelihood.

To enhance the user experience on the dynamic map, the MarkerClusterer algorithm (Svennerberg 2010) is used, followed by a grid-based clustering procedure that changes the collection of markers dynamically with the zoom level of the map. This technique iterates through the markers and adds each marker to its nearest cluster within a predefined threshold, the cluster grid size in pixels. The final result is an interactive map that shows the number of detected signs and the exact location of each sign. As shown in Fig. 9, clicking on a cluster zooms in to smaller clusters until the underlying individual sign markers are reached.
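A simplified sketch of the grid-based clustering step, loosely following the MarkerClusterer idea, is given below. Markers are projected to Web Mercator pixel coordinates at the current zoom level. Each marker joins the nearest existing cluster whose center lies within `grid_size` pixels on both axes; otherwise it starts a new cluster. The 60 px grid size and the use of the first marker as a fixed cluster center are illustrative assumptions, not details taken from the paper or the library.

```python
import math

TILE = 256  # Web Mercator world width in pixels at zoom level 0


def to_pixels(lat, lng, zoom):
    """Project lat/lng (degrees) to Web Mercator world-pixel coordinates."""
    s = math.sin(math.radians(lat))
    scale = TILE * 2 ** zoom
    x = (lng + 180.0) / 360.0 * scale
    y = (0.5 - math.log((1 + s) / (1 - s)) / (4 * math.pi)) * scale
    return x, y


def grid_cluster(markers, zoom, grid_size=60):
    """Greedy grid clustering of (lat, lng) markers at a given zoom level."""
    clusters = []  # each: {"center": (px, py), "members": [(lat, lng), ...]}
    for lat, lng in markers:
        px, py = to_pixels(lat, lng, zoom)
        best, best_d = None, math.inf
        for c in clusters:
            cx, cy = c["center"]
            if abs(px - cx) <= grid_size and abs(py - cy) <= grid_size:
                d = math.hypot(px - cx, py - cy)
                if d < best_d:
                    best, best_d = c, d
        if best is None:
            clusters.append({"center": (px, py), "members": [(lat, lng)]})
        else:
            best["members"].append((lat, lng))
    return clusters
```

Clustering happens in screen pixels rather than in degrees, so the same two signs merge when the map is zoomed out and split apart when it is zoomed in.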

Figure 10 shows an example of the dynamic heat maps that visualize the most probable 3D locations of the detected traffic signs. As one gets close to a sign, its most probable location is shown as a line perpendicular to the road axis. This is because the GPS data cannot tell whether a detected sign is on the right side of the road, mounted overhead in the middle of the view, or on the far left. Figure 11 presents the pseudocode for mining and representing traffic sign information.
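The perpendicular line can be drawn from the derived location and the road heading computed during image extraction. The sketch below uses a local flat-Earth approximation, which is adequate over tens of meters, and a hypothetical half-length of 15 m on each side of the road axis.

```python
import math

EARTH_RADIUS_M = 6_371_000.0


def offset(lat, lng, heading_deg, dist_m):
    """Move dist_m metres from (lat, lng) along a compass heading (flat-Earth approx.)."""
    h = math.radians(heading_deg)
    dlat = dist_m * math.cos(h) / EARTH_RADIUS_M
    dlng = dist_m * math.sin(h) / (EARTH_RADIUS_M * math.cos(math.radians(lat)))
    return lat + math.degrees(dlat), lng + math.degrees(dlng)


def perpendicular_segment(lat, lng, road_heading_deg, half_width_m=15.0):
    """Endpoints of a line across the road through (lat, lng): left and right of travel."""
    left = offset(lat, lng, road_heading_deg - 90.0, half_width_m)
    right = offset(lat, lng, road_heading_deg + 90.0, half_width_m)
    return left, right
```

For a northbound road at the equator, the segment runs due west to due east through the derived point. Any position along that line is equally consistent with the GPS evidence.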

Data collection and setup

To evaluate the performance of the proposed method, the multi-class traffic sign detection model of (Balali and Golparvar-Fard 2015a) was trained on regular images collected from a highway and many secondary roadways in the U.S. This dataset, shown in Table 4, contains different categories of traffic signs based on the messages they communicate. It exhibits various viewpoints, scales, illumination conditions, and intra-class variability. The manually annotated ground truths are used to fine-tune the candidate extraction method and to train the SVM classifiers. The models were trained to classify U.S. traffic signs into four categories: warning, regulatory, stop, and yield signs.

In this paper, the data collected from the Google Street View API is used purely as the testing dataset. This dataset covers 6.2 miles in two segments of the U.S. I-57 and I-74 interstate highways (see Fig. 12).

Google Street View images can be downloaded in any size up to 2048 × 2048 pixels. Figure 13 shows a snapshot of the API with the information and associated URL for downloading the shown image.

Table 5 shows the properties of the HOG + C descriptors. Because the training datasets are large, a linear kernel is chosen for the multiple one-vs.-all SVM classifiers. The base spatial resolution of the sliding windows was set to 64 × 64 pixels, with a 67 % spatial overlap for localization based on a non-maxima suppression procedure. More details on the best sliding window size and the impact of the multi-scale search mechanism can be found in (Balali and Golparvar-Fard 2015a). The performance of our implementation was benchmarked on an Intel(R) Core(TM) i7-3820 CPU @ 3.60 GHz with 64.0 GB RAM and an NVIDIA GeForce GTX 400 graphics card.
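The text leaves the non-maxima suppression details to (Balali and Golparvar-Fard 2015a). The sketch below is a generic greedy NMS. It assumes that the 67 % figure is the intersection-over-union threshold above which a lower-scoring window is suppressed. The function names are hypothetical.

```python
def iou(a, b):
    """Intersection-over-union of two (x0, y0, x1, y1) boxes."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, overlap=0.67):
    """Greedy NMS: visit windows by descending score and keep a window only if
    it overlaps every already-kept window by at most `overlap` IoU.
    Returns the indices of the kept windows."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= overlap for k in keep):
            keep.append(i)
    return keep
```

Neighboring sliding-window positions on the same sign collapse to the single highest-scoring window, while detections elsewhere in the image are unaffected.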

Results and discussion

In the first phase of validation, experiments were conducted to detect and classify traffic signs in Google Street View images. Figure 14 shows several example results from applying the multi-category classifiers. As observed, different types of traffic signs at different scales and orientations/poses, and under different background conditions, are detected and classified correctly.

From the detected traffic signs, a comprehensive database is created in which each sign is associated with its most probable location; for each sign, the image with the largest bounding box is kept. Figure 15 shows an example of the data cards created for detected signs.
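A minimal sketch of the data-card step, assuming detections have already been grouped per physical sign (the `sign_id` field) and each carries the sign's estimated latitude/longitude: for each sign, the detection with the largest bounding box is kept and its attributes are copied into a card. The `Detection` and `DataCard` schemas are hypothetical; their fields follow the report-card contents listed in this section (latitude/longitude, roadway number, sign type, and detection/classification score).

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass
class Detection:
    """One detection of a sign in one Street View image (hypothetical schema)."""
    sign_id: str
    image_id: str
    sign_type: str   # e.g. "warning" or "regulatory"
    score: float     # detection/classification score
    bbox_w: int      # bounding-box width in pixels
    bbox_h: int      # bounding-box height in pixels
    lat: float       # estimated sign latitude
    lng: float       # estimated sign longitude
    roadway: str     # roadway number, e.g. "I-57"


@dataclass
class DataCard:
    sign_id: str
    sign_type: str
    lat: float
    lng: float
    roadway: str
    score: float
    image_id: str    # image in which the sign's bounding box is largest


def build_data_cards(detections: Iterable[Detection]) -> Dict[str, DataCard]:
    """Keep, for each sign, the detection with the largest bounding-box area."""
    best: Dict[str, Detection] = {}
    for det in detections:
        current = best.get(det.sign_id)
        if current is None or det.bbox_w * det.bbox_h > current.bbox_w * current.bbox_h:
            best[det.sign_id] = det
    return {
        sid: DataCard(sid, d.sign_type, d.lat, d.lng, d.roadway, d.score, d.image_id)
        for sid, d in best.items()
    }
```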

Figure 16 shows the results of localizing the detected traffic signs: (a) the number of detected signs with clickable clusters on Google Maps, (b) the location markers for the detected signs on Google Earth, (c) the detected sign and its type in the associated Google Street View image, and (d) the Google Street View image of the selected location and roadway, with the detected sign marked. An example of the dynamic heat map visualizing the most probable 3D location of the detected signs on Google Earth is shown in Fig. 16(e). Figure 16(f) further illustrates the mapping of all detected signs across multiple locations. The report card for each sign, which contains latitude/longitude, roadway number, type of traffic sign, and detection/classification score, is shown on this map. These cards facilitate the review of specific sign information at a given location without searching through large databases. Such spatio-temporal representations can provide DOTs with information on how different types of traffic signs degrade over time and further provide the condition information needed to plan sign replacements.
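How the clickable clusters in Fig. 16(a) are formed is not detailed here. One common approach, sketched below under that assumption, is grid-based clustering: markers that fall in the same latitude/longitude cell are merged into one cluster labeled with the number of signs it contains. The cell size is a hypothetical parameter; an interactive map would usually recompute clusters as the zoom level changes.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple


def grid_clusters(points: List[Tuple[float, float]],
                  cell_deg: float = 0.01) -> List[Tuple[float, float, int]]:
    """Group (lat, lng) sign markers into square grid cells.

    Returns one (mean_lat, mean_lng, count) tuple per non-empty cell, which a
    map front end could draw as a single clickable cluster marker.
    """
    cells: Dict[Tuple[int, int], List[Tuple[float, float]]] = defaultdict(list)
    for lat, lng in points:
        key = (math.floor(lat / cell_deg), math.floor(lng / cell_deg))
        cells[key].append((lat, lng))
    clusters = []
    for members in cells.values():
        n = len(members)
        clusters.append((sum(p[0] for p in members) / n,
                         sum(p[1] for p in members) / n,
                         n))
    return clusters
```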

To quantify the performance of the detection and classification method, precision-recall and miss rate metrics are used. Here, precision is the fraction of retrieved instances that are relevant to the particular class, while recall is the fraction of relevant instances that are retrieved: Precision = TP/(TP + FP) and Recall = TP/(TP + FN), where TP, FP, and FN are the numbers of true positives, false positives, and false negatives.

In the precision-recall graph, precision at recall level i is interpolated by taking the maximum precision obtained for the detection class at any recall level greater than or equal to i. Miss rate, shown in Equation 5 as FN/(TP + FN), is the rate of FNs for each category of traffic signs, while FPPW measures the rate of False Positives Per Window of detection. A better detector therefore achieves a lower miss rate. The average accuracy in traffic sign detection and classification using Google Street View images is also calculated using Equation 7.
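These metrics can be computed as in the following minimal Python sketch, which uses the standard definitions of precision, recall, miss rate, and FPPW together with the interpolation rule described above. The accuracy measure of Equation 7 is omitted because its exact form is not reproduced in this text.

```python
from typing import List, Sequence


def precision(tp: int, fp: int) -> float:
    """TP / (TP + FP)."""
    return tp / (tp + fp)


def recall(tp: int, fn: int) -> float:
    """TP / (TP + FN)."""
    return tp / (tp + fn)


def miss_rate(tp: int, fn: int) -> float:
    """Fraction of ground-truth signs that were missed: FN / (TP + FN)."""
    return fn / (tp + fn)


def fppw(fp: int, n_windows: int) -> float:
    """False positives per evaluated detection window."""
    return fp / n_windows


def interpolated_precision(recalls: Sequence[float], precisions: Sequence[float],
                           levels: Sequence[float]) -> List[float]:
    """Precision at recall level r = max precision at any recall >= r (0 if none)."""
    result = []
    for r in levels:
        candidates = [p for rec, p in zip(recalls, precisions) if rec >= r]
        result.append(max(candidates) if candidates else 0.0)
    return result
```

For example, a detector that finds 98 of 100 signs has a miss rate of 0.02 (2 %). Because miss rate equals 1 − recall, minimizing the miss rate is the same as maximizing recall.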

Table 6 shows the precision-recall, miss rate, and accuracy of detection and localization on the I-74 and I-57 corridors for different types of traffic signs, both per image and per asset. Since Stop and Yield signs rarely appear on highways, the test data did not contain any signs from these two categories. Figure 17 shows, from left to right, the precision-recall graphs for different types of traffic signs per asset (counting an asset only if it is detected in at least three images) and per image.
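One way to read the per-asset evaluation, sketched here as an assumption rather than the authors' exact protocol, is that a physical sign counts as detected only when it is detected in at least three Street View images; asset-level TP, FN, and FP counts then follow from set operations against the ground-truth sign IDs. The asset IDs and the `min_views` parameter name are hypothetical.

```python
from typing import Dict, Iterable, Tuple


def per_asset_counts(views_per_asset: Dict[str, int],
                     ground_truth: Iterable[str],
                     min_views: int = 3) -> Tuple[int, int, int]:
    """Return (TP, FN, FP) at the asset level.

    views_per_asset maps a predicted asset ID to the number of images in which
    it was detected; an asset is accepted only if seen in >= min_views images.
    """
    accepted = {a for a, n in views_per_asset.items() if n >= min_views}
    truth = set(ground_truth)
    tp = len(truth & accepted)   # real signs confirmed by enough images
    fn = len(truth - accepted)   # real signs never confirmed
    fp = len(accepted - truth)   # confirmed detections with no real sign
    return tp, fn, fp
```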

The average miss rate and classification accuracy are 0.63 % and 97.29 % across all images, and 2.04 % and 91.96 % across all types of traffic signs. In other words, only 2.04 % of signs are not detected by the developed system. Figure 18 shows the rate of TPs as a function of traffic sign size in the Google Street View images. As shown, the majority of the traffic signs were detected with bounding boxes of 40 × 40 pixels.

While this work focused on achieving high accuracy in the detection and localization of traffic signs, the computation time was also benchmarked. In the experiments, detecting and classifying traffic signs took 5–30 s per image. The developed API retrieves and downloads approximately 23 Google Street View images per second. Future work will leverage Graphics Processing Units (GPUs) to reduce the computation time, with an expected speed-up of up to 10-fold. Even at the current computation time, the system allows the Traffic Signs and Marking Division of a DOT to create new traffic sign databases while updating existing sign asset locations, attributes, and work orders. Some of the open research problems for the community include:
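The reported rates translate into a simple time budget. The Python sketch below, using a hypothetical survey of 1,000 images, combines the download rate of about 23 images per second with the 5–30 s per-image detection time, and optionally divides the detection time by the expected (not yet measured) GPU speed-up.

```python
from typing import Tuple


def processing_budget(n_images: int,
                      download_rate: float = 23.0,  # images per second
                      sec_per_image: Tuple[float, float] = (5.0, 30.0),
                      gpu_speedup: float = 1.0) -> Tuple[float, float, float]:
    """Return (download_s, detect_min_s, detect_max_s) for n_images."""
    download_s = n_images / download_rate
    detect_min_s = n_images * sec_per_image[0] / gpu_speedup
    detect_max_s = n_images * sec_per_image[1] / gpu_speedup
    return download_s, detect_min_s, detect_max_s


if __name__ == "__main__":
    dl, lo, hi = processing_budget(1000)  # hypothetical image count
    print(f"download ~{dl:.1f} s, detection {lo / 3600:.1f}-{hi / 3600:.1f} h")
    _, lo10, hi10 = processing_budget(1000, gpu_speedup=10.0)
    print(f"with 10x speed-up: {lo10:.0f}-{hi10:.0f} s")
```

For 1,000 images, downloading takes roughly 43.5 s while detection takes about 1.4–8.3 h on the CPU (500–3,000 s with a 10-fold speed-up), so detection rather than retrieval dominates the processing time.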

- Detection and classification of all types of traffic signs. In this paper, traffic signs were classified based on the messages they communicate. The MUTCD (Manual on Uniform Traffic Control Devices) specifies more than 670 types of traffic signs; developing and validating a system that can detect every type of traffic sign associated with an MUTCD code is left as future work.

- Testing the proposed system on local streets and non-interstate highways. Since there are no Stop signs and very few Yield signs on interstate highways, validating the proposed system in urban areas is left as future work.

Conclusion

By leveraging Google Street View images, this paper presented a new system for creating comprehensive inventories of traffic signs. By processing images extracted from the Google Street View API with a computer vision method based on joint Histograms of Oriented Gradients and Color, traffic signs were detected and classified into four categories: regulatory, warning, stop, and yield signs. Considering the discriminative classification scores from all images that see a sign, the most probable location of each traffic sign was derived and shown on Google Maps using a heat map. A data card containing information about the location and type of each detected traffic sign was also created. Finally, several data mining interfaces were introduced that allow for better management of traffic sign inventories. Given the reliable performance shown in the experiments, and because collecting information from Google Street View imagery is cost-effective, the proposed method has the potential to deliver inventory information on traffic signs in a timely fashion and to tie into existing DOT inventory management systems. With the continuous growth and expansion of roadway networks, the proposed method can allow DOT practitioners to accommodate the demand for installing new traffic signs and other assets, maintain existing signs, and perform future replacements in compliance with the Manual on Uniform Traffic Control Devices (MUTCD). The report cards, which contain latitude/longitude, roadway number, type of traffic sign, and detection/classification score, facilitate the review of specific sign information at a given location without searching through large databases. Such spatio-temporal representations provide DOTs with information on how different types of traffic signs degrade over time and further provide the condition information needed to plan sign replacements. The method can also automate the data collection process for ESRI ArcView GIS databases.

References

TRIP, A National Transportation Research Group. (2014). Michigan transportation by the numbers: meeting the state’s need for safe and efficient mobility.

Ai, C., & Tsai, Y. J. (2011). Hybrid active contour–incorporated sign detection algorithm. Journal of Computing in Civil Engineering, 26(1), 28–36.

Ai, C., & Tsai, Y. (2014). Geometry preserving active polygon-incorporated sign detection algorithm. Journal of Computing in Civil Engineering. http://ascelibrary.org/doi/10.1061/%28ASCE%29CP.1943-5487.0000422.

Ashouri Rad, A., & Rahmandad, H. (2013). Reconstructing online behaviors by effort minimization. In A. Greenberg, W. Kennedy, & N. Bos (Eds.), Social computing, behavioral-cultural modeling and prediction (Vol. 7812, pp. 75–82). Heidelberg: Springer Berlin. Lecture Notes in Computer Science.

Balali, V., & Golparvar-Fard, M. (2014). Video-based detection and classification of US traffic signs and mile markers using color candidate extraction and feature-based recognition. In Computing in civil and building engineering (pp. 858–866).

Balali, V., & Golparvar-Fard, M. (2015a). Evaluation of multi-class traffic sign detection and classification methods for U.S. roadway asset inventory management. ASCE Journal of Computing in Civil Engineering, 04015022. http://dx.doi.org/10.1061/(ASCE)CP.1943-5487.0000491.

Balali, V., & Golparvar-Fard, M. (2015b). Recognition and 3D localization of traffic signs via image-based point cloud models. Austin: Paper presented at the International Workshop on Computing in Civil Engineering.

Balali, V., & Golparvar-Fard, M. (2015c). Segmentation and recognition of roadway assets from car-mounted camera video streams using a scalable non-parametric image parsing method. Automation in Construction, 49, 27–39.

Balali, V., Golparvar-Fard, M., & de la Garza, J. (2013). Video-based highway asset recognition and 3D localization. In Computing in civil engineering (pp. 379–386).

Balali, V., Depwe, E., & Golparvar-Fard, M. (2015). Multi-class traffic sign detection and classification using Google Street View images. Washington: Paper presented at the 94th Transportation Research Board Annual Meeting (TRB).

Beshah, T., & Hill, S. (2010). Mining road traffic accident data to improve safety: role of road-related factors on accident severity in Ethiopia (AAAI Spring Symposium: Artificial Intelligence for Development).

Burges, C. J. (1998). A tutorial on support vector machines for pattern recognition. Data Mining and Knowledge Discovery, 2(2), 121–167.

Caddell, R., Hammond, P., & Reinmuth, S. (2009). Roadside features inventory program (Washington State Department of Transportation).

Chang, L.-Y., & Chen, W.-C. (2005). Data mining of tree-based models to analyze freeway accident frequency. Journal of Safety Research, 36(4), 365–375.

Creusen, I., & Hazelhoff, L. (2012). A semi-automatic traffic sign detection, classification, and positioning system. In IS&T/SPIE Electronic Imaging, 2012 (pp. 83050Y-83050Y-83056). International Society for Optics and Photonics. doi:10.1117/12.908552.

Creusen, I. M., Wijnhoven, R. G., Herbschleb, E., & De With, P. (2010). Color exploitation in HOG-based traffic sign detection. In Image Processing (ICIP), 2010 17th IEEE International Conference on, 2010 (pp. 2669–2672). IEEE. doi:10.1109/ICIP.2010.5651637.

Dalal, N., & Triggs, B. (2005). Histograms of oriented gradients for human detection. In Computer Vision and Pattern Recognition, 2005. CVPR 2005. IEEE Computer Society Conference on, 2005 (Vol. 1, pp. 886–893). IEEE. doi:10.1109/CVPR.2005.177.

De la Escalera, A., Armingol, J. M., & Mata, M. (2003). Traffic sign recognition and analysis for intelligent vehicles. Image and Vision Computing, 21(3), 247–258.

de la Garza, J., Roca, I., & Sparrow, J. (2010). Visualization of failed highway assets through geocoded pictures in Google Earth and Google Maps. In Proceeding, CIB W078 27th International Conference on Applications of IT in the AEC Industry.

de la Torre, J. (2013). Organising geo-temporal data with CartoDB, an open source database on the cloud. In Biodiversity Informatics Horizons 2013.

DeGray, J., & Hancock, K. L. (2002). Ground-based image and data acquisition systems for roadway inventories in New England: A synthesis of highway practice. New England Transportation Consortium, No. NETCR 30.

Golparvar-Fard, M., Balali, V., & de la Garza, J. M. (2012). Segmentation and recognition of highway assets using image-based 3D point clouds and semantic Texton forests. Journal of Computing in Civil Engineering, 29(1), 04014023.

Gonzalez, H., Halevy, A. Y., Jensen, C. S., Langen, A., Madhavan, J., Shapley, R., et al. (2010). Google fusion tables: web-centered data management and collaboration. In Proceedings of the 2010 ACM SIGMOD International Conference on Management of data (pp. 1061–1066). New York: ACM.

Haas, K., & Hensing, D. (2005). Why your agency should consider asset management systems for roadway safety.

Hartley, R., & Zisserman, A. (2003). Multiple view geometry in computer vision. Cambridge: Cambridge University Press.

Hassanain, M. A., Froese, T. M., & Vanier, D. J. (2003). Framework Model for Asset Maintenance Management. Journal of Performance of Constructed Facilities, 17(1), 51–64. doi:10.1061/(ASCE)0887-3828(2003)17:1(51).

Hauser, T. A., & Scherer, W. T. (2001). Data mining tools for real-time traffic signal decision support & maintenance. In Systems, man, and cybernetics, 2001 IEEE International Conference on (Vol. 3, pp. 1471–1477). doi:10.1109/ICSMC.2001.973490.

Hoferlin, B., & Zimmermann, K. (2009). Towards reliable traffic sign recognition. In Intelligent Vehicles Symposium, 2009 IEEE (pp. 324–329). IEEE. doi:10.1109/IVS.2009.5164298.

Houben, S., Stallkamp, J., Salmen, J., Schlipsing, M., & Igel, C. (2013). Detection of traffic signs in real-world images: the German traffic sign detection benchmark. In Neural Networks (IJCNN), The 2013 International Joint Conference on (pp. 1–8). IEEE. doi:10.1109/IJCNN.2013.6706807.

Hu, Z., & Tsai, Y. (2011). Generalized image recognition algorithm for sign inventory. Journal of Computing in Civil Engineering, 25(2), 149–158.

Hu, X., Tao, C. V., & Hu, Y. (2004). Automatic road extraction from dense urban area by integrated processing of high resolution imagery and lidar data. Istanbul: International Archives of Photogrammetry, Remote Sensing and Spatial Information Sciences. 35, B3.

Huang, Y. S., Le, Y. S., & Cheng, F. H. (2012). A method of detecting and recognizing speed-limit signs. In Intelligent Information Hiding and Multimedia Signal Processing (IIH-MSP), 2012 Eighth International Conference on (pp. 371–374). IEEE. doi:10.1109/IIH-MSP.2012.96.

Jahangiri, A., & Rakha, H. (2014). Developing a Support Vector Machine (SVM) classifier for transportation mode identification by using mobile phone sensor data (p. 14-1442). Washington: Transportation Research Board 93rd Annual Meeting.

Jalayer, M., Gong, J., Zhou, H., & Grinter, M. (2013). Evaluation of remote-sensing technologies for collecting roadside feature data to support highway safety manual implementation (p. 13-4709). Washington: Transportation Research Board 92nd Annual Meeting.

Jeyapalan, K. (2004). Mobile digital cameras for as-built surveys of roadside features. Photogrammetric Engineering & Remote Sensing, 70(3), 301–312.

Jeyapalan, K., & Jaselskis, E. (2002). Technology transfer of as-built and preliminary surveys using GPS, soft photogrammetry, and video logging.

Jin, Y., Dai, J., & Lu, C. T. (2006). Spatial-temporal data mining in traffic incident detection. In Proc. SIAM DM 2006 Workshop on Spatial Data Mining (Vol. 5). Citeseer.

Jones, F. E. (2004). GPS-based Sign Inventory and Inspection Program. International Municipal Signal Association (IMSA) Journal, 42, 30–35.

Kapler, T., & Wright, W. (2005). GeoTime information visualization. Information Visualization, 4(2), 136–146.

Khattak, A. J., Hummer, J. E., & Karimi, H. A. (2000). New and existing roadway inventory data acquisition methods. Journal of Transportation and Statistics, 3, 3.

Kianfar, J., & Edara, P. (2013). A data mining approach to creating fundamental traffic flow diagram. Procedia - Social and Behavioral Sciences, 104, 430–439. http://dx.doi.org/10.1016/j.sbspro.2013.11.136.

Li, D., & Su, W. Y. (2014). Dynamic maintenance data mining of traffic sign based on mobile mapping system. Applied Mechanics and Materials, 455, 438–441.

Maerz, N. H., & McKenna, S. (1999). Mobile highway inventory and measurement system. Transportation Research Record: Journal of the Transportation Research Board, 1690(1), 135–142.

Mathias, M., Timofte, R., Benenson, R., & Van Gool, L. (2013). Traffic sign recognition – How far are we from the solution? In Neural Networks (IJCNN), The 2013 International Joint Conference on (pp. 1–8). IEEE. doi:10.1109/IJCNN.2013.6707049.

Moeur, R. C. (2014). Manual of traffic signs. http://www.trafficsign.us/signcost.html. Accessed 19 December 2014.

Mogelmose, A., Trivedi, M. M., & Moeslund, T. B. (2012). Vision-based traffic sign detection and analysis for intelligent driver assistance systems: Perspectives and survey. Intelligent Transportation Systems, IEEE Transactions on, 13(4), 1484–1497.

Nakata, T., & Takeuchi, J. I. (2004). Mining traffic data from probe-car system for travel time prediction. In Proceedings of the tenth ACM SIGKDD international conference on Knowledge discovery and data mining (pp. 817–822). ACM. doi:10.1145/1014052.1016920.

Overett, G., Tychsen-Smith, L., Petersson, L., Pettersson, N., & Andersson, L. (2014). Creating robust high-throughput traffic sign detectors using centre-surround HOG statistics. Machine Vision and Applications, 1–14. doi:10.1007/s00138-011-0393-1.

Pettersson, N., Petersson, L., & Andersson, L. (2008). The histogram feature – a resource-efficient weak classifier. In Intelligent Vehicles Symposium, 2008 IEEE (pp. 678–683). IEEE. doi:10.1109/IVS.2008.4621174.

Prisacariu, V. A., Timofte, R., Zimmermann, K., Reid, I., & Van Gool, L. (2010). Integrating object detection with 3D tracking towards a better driver assistance system. In Pattern Recognition (ICPR), 2010 20th International Conference on (pp. 3344–3347). IEEE. doi:10.1109/ICPR.2010.816.

Rasdorf, W., Hummer, J. E., Harris, E. A., & Sitzabee, W. E. (2009). IT issues for the management of high-quantity, low-cost assets. Journal of Computing in Civil Engineering, 23(2), 91–99. doi:10.1061/(ASCE)0887-3801(2009)23:2(91).

Ravani, B., Dart, M., Hiremagalur, J., Lasky, T. A., & Tabib, S. (2009). Inventory and assessing conditions of roadside features statewide. California State Department of Transportation: Advanced Highway Maintenance and Construction Technology Research Center.

Robyak, R., & Orvets, G. (2004). Video based Asset Data Collection at NJDOT. New Jersey: Department of Transportation.

Ruta, A., Li, Y., & Liu, X. (2007). Towards real-time traffic sign recognition by class-specific discriminative features. In BMVC (pp. 1–10). doi:10.5244/C.21.24.

Salmen, J., Houben, S., & Schlipsing, M. (2012). Google Street View images support the development of vision-based driver assistance systems. In Intelligent Vehicles Symposium (IV), 2012 IEEE (pp. 891–895). doi:10.1109/IVS.2012.6232195.

Stallkamp, J., Schlipsing, M., Salmen, J., & Igel, C. (2011). The German traffic sign recognition benchmark: a multi-class classification competition. In Neural Networks (IJCNN), The 2011 International Joint Conference on (pp. 1453–1460). IEEE. doi:10.1109/IJCNN.2011.6033395.

Svennerberg, G. (2010). Dealing with massive numbers of markers. In M. Wade, C. Andres, S. Anglin, M. Beckner, E. Buckingham, G. Cornell, et al. (Eds.), Beginning Google Maps API 3 (pp. 177–210). Apress. doi:10.1007/978-1-4302-2803-5.

Tsai, Y., Kim, P., & Wang, Z. (2009). Generalized traffic sign detection model for developing a sign inventory. Journal of Computing in Civil Engineering, 23(5), 266–276.

Veneziano, D., Hallmark, S. L., Souleyrette, R. R., & Mantravadi, K. (2002). Evaluating Remotely Sensed Images for Use in Inventorying Roadway Features. In Applications of Advanced Technologies in Transportation (2002) (pp. 378–385). ASCE. doi:10.1061/40632(245)48.

Wang, Y. J., Yu, Z. C., He, S. B., Cheng, J. L., & Zhang, Z. J. (2009). A data-mining-based study on road traffic information analysis and decision support. In Web Mining and Web-based Application, 2009. WMWA ’09. Second Pacific-Asia Conference on (pp. 24–27). doi:10.1109/WMWA.2009.58.

Wang, K. C., Hou, Z., & Gong, W. (2010). Automated road sign inventory system based on stereo vision and tracking. Computer‐Aided Civil and Infrastructure Engineering, 25(6), 468–477.

Wu, J., & Tsai, Y. (2006). Enhanced roadway geometry data collection using an effective video log image-processing algorithm. Transportation Research Record: Journal of the Transportation Research Board, 1972(1), 133–140.

Xie, Y., Liu, L. F., Li, C. H., & Qu, Y. Y. (2009). Unifying visual saliency with HOG feature learning for traffic sign detection. In Intelligent Vehicles Symposium, 2009 IEEE (pp. 24–29). IEEE. doi:10.1109/IVS.2009.5164247.

Zamani, Z., Pourmand, M., & Saraee, M. H. (2010). Application of data mining in traffic management: case of city of Isfahan. In Electronic Computer Technology (ICECT), 2010 International Conference on (pp. 102–106). IEEE. doi:10.1109/ICECTECH.2010.5479977.

Zhang, X., & Pazner, M. (2004). The icon imagemap technique for multivariate geospatial data visualization: approach and software system. Cartography and Geographic Information Science, 31(1), 29–41.

Zhou, H., Jalayer, M., Gong, J., Hu, S., & Grinter, M. (2013). Investigation of methods and approaches for collecting and recording highway inventory data.

Acknowledgement

The authors would like to thank the Illinois Department of Transportation for providing the I-57 datasets from 2013 and 2014. The work of the undergraduate students of the RAAMAC Lab in developing the ground truth data is also appreciated.

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

VB developed the computer vision method for detecting and classifying traffic signs in Google Street View images, and developed an API that downloads the Google Street View images and all relevant information. AAR created a comprehensive database of traffic signs and visualized these data on the Google Maps platform. This study was completed under the supervision of MGF. All authors read and approved the final manuscript.

Additional file

Additional file 1:

VB_AA_MGF_Traffic sign inventory management system video demo. (MP4 7645 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Balali, V., Ashouri Rad, A. & Golparvar-Fard, M. Detection, classification, and mapping of U.S. traffic signs using google street view images for roadway inventory management. Vis. in Eng. 3, 15 (2015). https://doi.org/10.1186/s40327-015-0027-1

DOI: https://doi.org/10.1186/s40327-015-0027-1