Abstract

The centered difference discretization of the spatial fractional coupled nonlinear Schrödinger equations yields a discretized linear system whose coefficient matrix is the sum of a real diagonal matrix D and a complex symmetric Toeplitz matrix T̃, which is the real symmetric Toeplitz matrix T plus an imaginary identity matrix iI. In this study, we present a medium-shifted splitting iteration method for solving the discretized linear system, in which fast algorithms can be used to solve the Toeplitz linear subsystem. Theoretical analysis shows that the new iteration method is convergent. Moreover, the new splitting iteration method naturally leads to a preconditioner, and analysis shows that the eigenvalues of the corresponding preconditioned matrix are more tightly clustered than those of the original coefficient matrix A. Finally, numerical experiments show that the new method is more effective than the other algorithms tested.

1 Introduction

Fractional operators are increasingly applied to model acoustic and thermal systems, rheology, materials and mechanical systems, signal processing and system identification, control and robotics, and so on [1–5]. Many researchers have taken considerable interest in the existence of solutions and in numerical methods for fractional differential equations [2, 6–13], topics that have developed rapidly over the last few decades. We are interested in designing an iteration method for solving the spatial fractional coupled nonlinear Schrödinger (CNLS) equations

under the boundary values and initial conditions

Here and in the sequel, \(i=\sqrt{-1}\) denotes the imaginary unit, and \(1< \alpha < 2\), \(\gamma , \rho >0\), \(\beta \geq 0\) are constants. The fractional Laplacian [1] can be characterized as

where \(\mathcal{F}\) denotes the Fourier transform acting on the spatial variable x. Moreover, it is equivalent to the Riesz fractional derivative [2], that is,

where \({}_{-\infty }D_{x}^{\alpha }u(x,t)\) and \({}_{x}D_{+\infty }^{\alpha }u(x,t)\) are the left-sided and the right-sided Riemann–Liouville fractional derivatives defined as follows:

here and in the sequel \(\Gamma (\cdot )\) is the Gamma function.
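The Fourier characterization of the fractional Laplacian can be illustrated numerically. The following is a minimal sketch in Python with NumPy, assuming the standard multiplier form in which each Fourier mode is scaled by \(\vert \xi \vert ^{\alpha }\), and assuming a periodic grid (a simplification for illustration only; the scheme in Sect. 2 works on a truncated bounded domain). The function name and the test function \(\sin 3x\) are hypothetical choices.

```python
import numpy as np

def fractional_laplacian_periodic(u, length, alpha):
    """Apply (-Delta)^(alpha/2) to samples u on a uniform periodic grid."""
    n = u.size
    # Angular wavenumbers xi_k in NumPy's FFT ordering.
    xi = 2.0 * np.pi * np.fft.fftfreq(n, d=length / n)
    # Scale each Fourier mode by |xi|^alpha, then transform back.
    return np.fft.ifft(np.abs(xi) ** alpha * np.fft.fft(u))

n, length, alpha = 64, 2.0 * np.pi, 1.5
x = np.arange(n) * length / n
u = np.sin(3.0 * x)
frac_lap_u = fractional_laplacian_periodic(u, length, alpha).real
```

For a single mode \(\sin (kx)\) the operator acts as multiplication by \(\vert k\vert ^{\alpha }\), which makes the nonlocal operator easy to sanity-check on such inputs.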

The spatial fractional Schrödinger equations have attracted wide attention in recent years. Their classical forms describe the evolution of microscopic particles and arise from the path integral over Brownian motion. In [3, 4], Laskin first extended the path integral method from Brownian motion to the Lévy process and thereby obtained the spatial fractional Schrödinger equations. Guo and Xu [5] studied some physical applications of fractional Schrödinger equations. The existence of a global smooth solution of fractional nonlinear Schrödinger equations was discussed by Guo et al. [14]. Many authors have concentrated on the solution of fractional Schrödinger equations in one dimension [15–17]. Since a major characteristic of the fractional differential operator is its nonlocality, it is generally very hard to obtain exact solutions of fractional differential equations. Therefore, numerical methods (e.g., splitting iteration methods) have become useful and powerful tools for understanding the behavior of fractional differential equations. Recently, another approach, based on the study of Lie symmetries of fractional differential equations, has been developed in [18, 19].

To our knowledge, there are a number of studies on numerical methods for the fractional Schrödinger equations. The Crank–Nicolson difference scheme for the coupled nonlinear fractional Schrödinger equations was proposed by Wang et al. [20] in 2013. Subsequently, Atangana and Cloot [21] studied stability and convergence for the space fractional variable-order Schrödinger equations. Since the scheme is nonlinearly implicit and coupled in computation, each iteration step is expensive. Moreover, the nonlocal property of the fractional operator results in dense or full matrices. Consequently, using the fractional centered difference formula, Wang et al. [22, 23] recently proposed an implicit conservative difference scheme, which is unconditionally stable, to discretize the spatial fractional CNLS equations. The coefficient matrix A of the discretized linear system for Eqs. (1.1) is \(D+\widetilde{T}\), the sum of a real diagonal matrix D and a complex symmetric Toeplitz matrix T̃, which is the real symmetric Toeplitz matrix T plus an imaginary identity matrix \(\widetilde{I}=iI\), that is, \(\widetilde{T}=T+iI\). Because the coefficient matrix is full or dense, Gaussian elimination is infeasible owing to its heavy computational and storage load. Krylov subspace iterations [24–27] are also quite expensive when applied to the discretized linear system, and their convergence tends to be slow.

It is known that the coefficient matrix \(A=D+\widetilde{T}\) is non-Hermitian but positive definite. Hence, iteration methods derived from the well-known HSS method, such as those introduced by Bai et al. [28, 29], can be used to solve this complex symmetric and non-Hermitian positive definite linear system \(Au=b\). For example, the Hermitian and skew-Hermitian splitting (HSS) iteration method [30] was applied directly to the discretized linear system, and the following two variants were designed [31]:

Case I

where ω is a given positive constant.

Case II

where ω is a given positive constant.

As reported in the original paper, Cases I and II have almost the same computational efficiency. At each HSS iteration step of Case I, the subsystem with the shifted matrix \(\omega I+\widetilde{I}\), where ω is the shift parameter, is easy to solve owing to its diagonal structure. However, solving the shifted Hermitian subsystem is very costly and impractical, because its coefficient matrix is full and has a Toeplitz-like rather than a Toeplitz structure. To reduce the complexity, the inexact HSS (IHSS) iteration and the preconditioned HSS iteration [32, 33] were proposed for solving non-Hermitian positive definite linear systems. However, the tolerances (or the numbers of inner iterations) for the inner iterates of the first linear subsystem may differ and may change with the outer iteration. Therefore, the IHSS iteration is actually a nonstationary iteration method for solving the linear system, and the preconditioning matrix for the preconditioned HSS iteration method is not easy to choose. To overcome these disadvantages, Ran et al. [31] proposed another HSS-like iteration method, which is stated precisely in Case II.

In this paper, a new splitting iteration method with medium-shifting (MS) is presented for solving the discretized linear system of the spatial fractional coupled nonlinear Schrödinger Eqs. (1.1), in which the inconvenience of alternation is avoided. The shift parameter is the average of the maximum and minimum elements of the real diagonal matrix D, hence the name medium-shifting. Theoretical analysis shows that the new iteration method is convergent. Moreover, the new splitting iteration method naturally induces a matrix splitting preconditioner. To further reduce the computational cost of the MS preconditioning matrix, we replace the real Toeplitz matrix T involved in T̃ by a circulant matrix, obtaining a preconditioner for the discretized linear system arising from the space fractional coupled nonlinear Schrödinger equations. Thus, the linear system can be solved economically by the fast Fourier transform (FFT). Numerical results show that the preconditioned MS (PMS) iteration method can significantly improve the convergence behavior.

We now introduce some essential notation. As usual, \(\mathbb{C}^{M\times M}\) denotes the set of \(M\times M\) complex matrices and \(\mathbb{C}^{M}\) the M-dimensional complex vector space. \(X^{*}\) represents the conjugate transpose of a matrix or a vector X. The spectral radius of the matrix A is denoted by \(\rho (A)\). \(\lambda (\cdot )\) and \(\Sigma (\cdot )\) stand for the set of eigenvalues and the set of singular values of the corresponding matrix, respectively. \(\Vert \cdot \Vert _{2}\) represents the Euclidean norm of a vector or the corresponding induced matrix norm. Throughout, I denotes the identity matrix.

The paper is organized as follows. In Sect. 2, we derive the discretized linear system from the spatial fractional CNLS equations (1.1). In Sect. 3, we present the MS iteration method and the preconditioned MS iteration method and establish the convergence theory. Numerical results are reported in Sect. 4. Finally, we end the paper with some conclusions in Sect. 5.

2 Discretization of the spatial fractional CNLS equations

In this section, the spatial fractional coupled nonlinear Schrödinger equations are discretized into a linear system by means of the implicit conservative difference scheme with the fractional centered difference formula, which is unconditionally stable.

Let \(\tau =\frac{\theta }{N}\) and \(h=\frac{x_{\mathrm{{R}}}-x_{\mathrm{{L}}}}{M+1}\) be the sizes of time step and spatial grid, respectively, where N and M are positive integers. A temporal and spatial partition can be defined as \(t_{n}=n\tau \), \(n=0,1,\ldots ,N\), and \(x_{j}=x_{\mathrm{{L}}}+jh\), \(j=0,1,\ldots ,M+1\). The corresponding numerical solutions are defined by \(u_{j}^{n}\thickapprox u(x_{j},t_{n})\) and \(v_{j}^{n}\thickapprox v(x_{j},t_{n})\). In terms of the fractional centered difference [34], the fractional Laplacian \((-\Delta )^{\frac{\alpha }{2}}\) in the truncated bounded domain can be discretized as

where \(c_{k}^{(\alpha )}\) (\(k=0,1,\ldots ,M-1\)) are defined by

It is clear that \(c_{k}^{(\alpha )} \) satisfy the following properties:
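The coefficients and their sign pattern can be checked numerically. The sketch below assumes the standard coefficients of the fractional centered difference of [34], \(c_{k}^{(\alpha )}=\frac{(-1)^{k}\Gamma (\alpha +1)}{\Gamma (\alpha /2-k+1)\Gamma (\alpha /2+k+1)}\), and evaluates them through the ratio \(c_{k+1}^{(\alpha )}/c_{k}^{(\alpha )}=1-\frac{\alpha +1}{\alpha /2+k+1}\), which follows from \(\Gamma (z+1)=z\Gamma (z)\). The function name and the value \(\alpha =1.5\) are illustrative choices.

```python
import numpy as np
from math import gamma

def centered_difference_coefficients(alpha, count):
    """Return c_0, ..., c_{count-1} of the fractional centered difference."""
    c = np.empty(count)
    # c_0 = Gamma(alpha + 1) / Gamma(alpha / 2 + 1)^2
    c[0] = gamma(alpha + 1.0) / gamma(alpha / 2.0 + 1.0) ** 2
    for k in range(count - 1):
        # Ratio c_{k+1} / c_k obtained from Gamma(z + 1) = z * Gamma(z).
        c[k + 1] = (1.0 - (alpha + 1.0) / (alpha / 2.0 + k + 1.0)) * c[k]
    return c

c = centered_difference_coefficients(alpha=1.5, count=2000)
# Truncated version of c_0 + 2 * sum_{k >= 1} c_k, which tends to zero.
truncated_sum = c[0] + 2.0 * c[1:].sum()
```

With these coefficients, \(c_{0}^{(\alpha )}\) is positive, the remaining coefficients are negative, and the truncated sum stays positive, which is what gives the Toeplitz matrix T its strict diagonal dominance. For \(\alpha =2\) the recurrence reproduces the classical stencil \(2,-1,0,\ldots \).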

Then the following implicit difference scheme for the spatial fractional CNLS Eqs. (1.1) can be obtained:

where \(j=1, 2, \ldots , M\) and \(n=1,2,\ldots ,N-1\). It has been proved that scheme (2.1) conserves the discrete mass and energy and is unconditionally stable and convergent with order \(\mathcal{O}(\tau ^{2}+h^{2})\) in the discrete \(l^{2}\)-norm [22, 23]. By the boundary values and initial conditions, we have

Moreover, the first step can be obtained by a second- or higher-order time integrator. The structure of the first difference equation in (2.1) is the same as that of the second one. By introducing two M-dimensional vectors

and two parameters

with

the first difference scheme in (2.1) can be rewritten as the following matrix vector form:

where

Here, \(D^{n+1}\) is a diagonal matrix defined by \(D^{n+1}=\operatorname{diag}(d_{1}^{n+1},d_{2}^{n+1},\ldots ,d_{M}^{n+1})\), and T̃ is a complex Toeplitz matrix which is just the symmetric real Toeplitz matrix T plus an imaginary identity matrix iI as follows:

Because \(\gamma ,\rho >0\), \(\beta \geq 0\), and by the properties of the coefficients \(c_{k}^{(\alpha )}\), the Toeplitz matrix T is symmetric and strictly diagonally dominant, hence symmetric positive definite, and \(D^{n+1}\) is a nonnegative diagonal matrix. Thus, the matrix \(D^{n+1}+T\) is symmetric positive definite. Consequently, the coefficient matrix \(A^{n+1}\triangleq D^{n+1} +\widetilde{T}\) is a diagonal-plus-Toeplitz matrix that is complex symmetric and non-Hermitian positive definite.
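These structural properties can be verified on a small instance. The following sketch assumes that T is a positive multiple `mu` of the symmetric Toeplitz matrix generated by the standard centered difference coefficients of [34], and uses a random nonnegative diagonal in place of \(D^{n+1}\). The scaling `mu`, the size, and all function names are hypothetical and are not taken from the scheme above.

```python
import numpy as np
from math import gamma

def toeplitz_first_column(alpha, size, mu):
    """First column mu * (c_0, ..., c_{size-1}) of the real Toeplitz part T."""
    c = np.empty(size)
    c[0] = gamma(alpha + 1.0) / gamma(alpha / 2.0 + 1.0) ** 2
    for k in range(size - 1):
        c[k + 1] = (1.0 - (alpha + 1.0) / (alpha / 2.0 + k + 1.0)) * c[k]
    return mu * c

def symmetric_toeplitz(first_column):
    """Dense symmetric Toeplitz matrix with entries t_{|i-j|}."""
    index = np.arange(first_column.size)
    return first_column[np.abs(index[:, None] - index[None, :])]

size = 32
rng = np.random.default_rng(0)
D = np.diag(rng.uniform(0.0, 1.0, size))   # hypothetical nonnegative diagonal
T = symmetric_toeplitz(toeplitz_first_column(alpha=1.5, size=size, mu=0.1))
A = D + T + 1j * np.eye(size)              # A = D + T + iI

is_complex_symmetric = np.allclose(A, A.T)
is_hermitian = np.allclose(A, A.conj().T)
# The Hermitian part (A + A^*) / 2 equals D + T and should be positive definite.
hermitian_part = (A + A.conj().T) / 2.0
min_eig = np.linalg.eigvalsh(hermitian_part).min()
```

The check separates the two notions used in the text: A equals its transpose (complex symmetric) but not its conjugate transpose (non-Hermitian), while positive definiteness refers to its Hermitian part \(D+T\).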

3 Algorithms

We consider matrix splitting iteration methods for solving the following system of linear equations:

\[ Au=b, \tag{3.1} \]

where A is a complex symmetric and non-Hermitian positive definite matrix of the form \(A=D+\widetilde{T}\), \(D \in \mathbb{R}^{M\times M}\) is a real diagonal matrix with nonnegative diagonal elements, and \(\widetilde{T}\in \mathbb{C}^{M\times M}\) is a complex symmetric Toeplitz and non-Hermitian positive definite matrix.

Splitting A with respect to its diagonal and Toeplitz parts D and T̃, for a medium-shifting, we have

\[ A=M_{\omega }-N_{\omega } \]

with

\[ M_{\omega }=\omega I+\widetilde{T}, \qquad N_{\omega }=\omega I-D, \tag{3.2} \]

where \(\omega =\frac{d_{\min }+d_{\max }}{2}\), and \(d_{\min }\) and \(d_{\max }\) are the smallest and largest elements of the diagonal matrix D. Thus, a splitting iteration method with medium-shifting (MS) is precisely stated in the following.

Algorithm 3.1

(MS)

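Given an initial guess \(u^{(0)}\), for \(k=0,1,\ldots \) , until \(\{u^{(k)}\}\) converges, compute

\[ (\omega I+\widetilde{T})u^{(k+1)}=(\omega I-D)u^{(k)}+b. \tag{3.3} \]

The iteration matrix is given by

\[ G_{\omega }=(\omega I+\widetilde{T})^{-1}(\omega I-D). \tag{3.4} \]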

The following theorem establishes the convergence of Algorithm 3.1 under reasonable assumptions.

Theorem 3.1

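Let \(A=D+\widetilde{T}=D+T+iI \in \mathbb{C}^{M\times M}\), where \(\widetilde{T}\in \mathbb{C}^{M\times M}\) is a symmetric and non-Hermitian positive definite Toeplitz matrix, and \(D=\operatorname{diag}(d_{1},d_{2},\ldots ,d_{M})\in \mathbb{R}^{M\times M}\) is a diagonal matrix with nonnegative elements. Assume that \(d_{\min }\), \(d_{\max }\) and ω are described as

\[ d_{\min }=\min_{1\leq i\leq M}d_{i}, \qquad d_{\max }=\max_{1\leq i\leq M}d_{i}, \qquad \omega =\frac{d_{\min }+d_{\max }}{2}; \]

then the spectral radius \(\rho (G_{\omega })\) is bounded by

\[ \delta _{\omega }=\frac{\omega -d_{\min }}{\sqrt{(\omega +\lambda _{\min })^{2}+1}}, \]

where \(\lambda _{\min }\) is the smallest eigenvalue of T.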

Moreover, it holds that \(\rho (G_{\omega })\leq \delta _{\omega } < 1\). That is to say, the MS method converges to the unique solution \(u_{*}\) of (3.1).

Proof

By the similarity invariance of the matrix spectrum as well as (3.4), we have

The upper bound of \(\rho (G_{\omega })\) is obtained.

It is known that \(\delta _{\omega }=\frac{\omega -d_{\min }}{\sqrt{(\omega +\lambda _{\min })^{2}+1}} < 1\) is equivalent to

Since \(\lambda _{\min }\), \(d_{\min }\) and \(d_{\max }\) are all nonnegative, it follows easily that \(\rho (G_{\omega })\leq \delta _{\omega }<1\). Hence, the MS method converges to the unique solution \(u_{*}\) of (3.1). □
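One way to see where the bound comes from is sketched below. It is not necessarily the chain of estimates used in the proof; it assumes that the iteration matrix is \(G_{\omega }=(\omega I+\widetilde{T})^{-1}(\omega I-D)\), and \(\lambda _{j}\) denotes the eigenvalues of T (notation introduced only for this sketch).

\[
\begin{aligned}
\rho (G_{\omega }) &\leq \bigl\Vert (\omega I+T+iI)^{-1}\bigr\Vert _{2}\,\Vert \omega I-D\Vert _{2}
= \frac{\max_{i}\vert \omega -d_{i}\vert }{\min_{j}\sqrt{(\omega +\lambda _{j})^{2}+1}}
= \frac{\omega -d_{\min }}{\sqrt{(\omega +\lambda _{\min })^{2}+1}}=\delta _{\omega },\\
\delta _{\omega }<1 &\iff (\omega -d_{\min })^{2}<(\omega +\lambda _{\min })^{2}+1 .
\end{aligned}
\]

The first equality uses that \(\omega I+T+iI\) is normal with eigenvalues \(\omega +\lambda _{j}+i\), and that \(\omega I-D\) is diagonal with \(\max_{i}\vert \omega -d_{i}\vert =\omega -d_{\min }=d_{\max }-\omega \). The final inequality holds because \(0\leq \omega -d_{\min }\leq \omega \leq \omega +\lambda _{\min }\).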

In Algorithm 3.1, the matrices \(\omega I+\widetilde{T}\) and \(\omega I-D\) are Toeplitz and diagonal, respectively. To reduce the computing time and improve the efficiency of the MS method, we can employ fast and superfast Toeplitz solvers to solve the linear subsystem with coefficient matrix \(\omega I+\widetilde{T}\).
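A minimal sketch of Algorithm 3.1 in Python with NumPy follows, assuming the iteration \((\omega I+\widetilde{T})u^{(k+1)}=(\omega I-D)u^{(k)}+b\). A dense solve stands in for the fast Toeplitz solver, so the sketch illustrates the iteration only, not its cost. The scaling `mu`, the random diagonal `d`, the right-hand side `b`, the tolerance, and all function names are hypothetical and do not reproduce the experiments of Sect. 4.

```python
import numpy as np
from math import gamma

def toeplitz_first_column(alpha, size, mu):
    """First column mu * (c_0, ..., c_{size-1}) of the real Toeplitz part T."""
    c = np.empty(size)
    c[0] = gamma(alpha + 1.0) / gamma(alpha / 2.0 + 1.0) ** 2
    for k in range(size - 1):
        c[k + 1] = (1.0 - (alpha + 1.0) / (alpha / 2.0 + k + 1.0)) * c[k]
    return mu * c

def symmetric_toeplitz(first_column):
    """Dense symmetric Toeplitz matrix with entries t_{|i-j|}."""
    index = np.arange(first_column.size)
    return first_column[np.abs(index[:, None] - index[None, :])]

def ms_iteration(d, T, b, tol=1e-10, max_iter=500):
    """Medium-shifted splitting: (wI + T + iI) u_new = (wI - D) u + b."""
    omega = 0.5 * (d.min() + d.max())              # medium shift
    shifted = T + (omega + 1j) * np.eye(d.size)    # omega*I + T + i*I
    u = np.zeros(d.size, dtype=complex)
    for k in range(1, max_iter + 1):
        # Dense solve used here in place of a fast Toeplitz solver.
        u = np.linalg.solve(shifted, (omega - d) * u + b)
        residual = np.linalg.norm(b - (d * u + T @ u + 1j * u))
        if residual <= tol * np.linalg.norm(b):
            return u, k
    return u, max_iter

size = 64
rng = np.random.default_rng(1)
d = rng.uniform(0.0, 1.0, size)                    # hypothetical diagonal of D
T = symmetric_toeplitz(toeplitz_first_column(alpha=1.5, size=size, mu=0.1))
b = rng.standard_normal(size) + 1j * rng.standard_normal(size)
u, iterations = ms_iteration(d, T, b)
```

Because the shifted matrix does not change between steps, a practical implementation would factor or otherwise prepare the Toeplitz solve once and reuse it in every iteration.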

The splitting iteration Algorithm 3.1 naturally induces the preconditioning matrix \(M_{\omega }^{-1}\), with \(M_{\omega }\) defined in (3.2), for the coefficient matrix \(A \in \mathbb{C}^{M\times M}\) of the linear system (3.1). It is known that the Toeplitz matrix T can be approximated well by a circulant matrix. To further reduce the computational cost and accelerate the convergence of the MS iteration method, we replace T by a circulant matrix \(C\in \mathbb{C}^{M\times M}\) in the splitting of the linear system (3.1), obtaining the preconditioned MS (PMS) iteration method. More concretely, we take C to be Strang’s circulant approximation, obtained by copying the central diagonals of T and wrapping them around to complete the circulant. When M is odd,

when M is even, it can be treated similarly as follows:

According to the properties of the coefficients \(c_{k}^{(\alpha )}\), \(k=0, 1, \ldots , M-1\), we see that C is symmetric and strictly diagonally dominant, and hence symmetric positive definite.
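The construction of Strang's circulant approximation can be sketched as follows for a symmetric Toeplitz matrix given by its first column. The sketch keeps the entries \(t_{0},\ldots ,t_{\lfloor M/2\rfloor }\) and wraps the central diagonals around; for even M it keeps the middle entry \(t_{M/2}\), which is one common convention and is an assumption here. The function names and the sample columns are hypothetical.

```python
import numpy as np

def strang_first_column(t):
    """First column of Strang's circulant for a symmetric Toeplitz column t."""
    size = t.size
    half = size // 2
    s = t.copy()
    # Keep t_0, ..., t_half and wrap around: s_k = t_{size-k} for k > half.
    s[half + 1:] = t[1:size - half][::-1]
    return s

def circulant(first_column):
    """Dense circulant matrix with entries s_{(i-j) mod size}."""
    index = np.arange(first_column.size)
    return first_column[(index[:, None] - index[None, :]) % first_column.size]

t_odd = np.array([4.0, -1.0, -0.5, -0.25, -0.1])
t_even = np.array([4.0, -1.0, -0.5, -0.25, -0.1, -0.05])
s_odd = strang_first_column(t_odd)
s_even = strang_first_column(t_even)
C = circulant(s_odd)
# Eigenvalues of a circulant matrix are the FFT of its first column.
eigenvalues = np.fft.fft(s_odd)
```

Since the eigenvalues of C are available from a single FFT of its first column, any linear system with a shifted circulant matrix can be solved in \(\mathcal{O}(M\log M)\) operations.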

Algorithm 3.2

(PMS)

Given an initial guess \(u^{(0)}\), for \(k=0,1,\ldots \) , until \(\{u^{(k)}\}\) converges, compute

\[ (\omega I+C+iI)u^{(k+1)}=(\omega I-D+C-T)u^{(k)}+b, \tag{3.5} \]

where \(\omega =\frac{d_{\min }+d_{\max }}{2}\) is the average of \(d_{\min }\) and \(d_{\max }\), the minimum and maximum elements of the diagonal matrix D.
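A minimal sketch of Algorithm 3.2 follows, assuming the iteration \((\omega I+C+iI)u^{(k+1)}=(\omega I-D+C-T)u^{(k)}+b\) with C taken as Strang's circulant of T. The circulant system is solved with the FFT; the matrix-vector product on the right-hand side is done densely for brevity. The scaling `mu`, the random data, the tolerance, and all function names are hypothetical, and the sketch makes no claim about convergence for other parameter choices.

```python
import numpy as np
from math import gamma

def toeplitz_first_column(alpha, size, mu):
    """First column mu * (c_0, ..., c_{size-1}) of the real Toeplitz part T."""
    c = np.empty(size)
    c[0] = gamma(alpha + 1.0) / gamma(alpha / 2.0 + 1.0) ** 2
    for k in range(size - 1):
        c[k + 1] = (1.0 - (alpha + 1.0) / (alpha / 2.0 + k + 1.0)) * c[k]
    return mu * c

def strang_first_column(t):
    """First column of Strang's circulant for a symmetric Toeplitz column t."""
    half = t.size // 2
    s = t.copy()
    s[half + 1:] = t[1:t.size - half][::-1]
    return s

def pms_iteration(d, t, b, tol=1e-10, max_iter=1000):
    """Preconditioned MS: (wI + C + iI) u_new = (wI - D + C - T) u + b."""
    size = d.size
    omega = 0.5 * (d.min() + d.max())
    index = np.arange(size)
    T = t[np.abs(index[:, None] - index[None, :])]
    s = strang_first_column(t)
    C = s[(index[:, None] - index[None, :]) % size]
    # Eigenvalues of the circulant matrix omega*I + C + i*I from one FFT.
    eig = np.fft.fft(s) + omega + 1j
    N = np.diag(omega - d) + C - T
    u = np.zeros(size, dtype=complex)
    for k in range(1, max_iter + 1):
        rhs = N @ u + b
        # Circulant solve: divide by the eigenvalues in Fourier space.
        u = np.fft.ifft(np.fft.fft(rhs) / eig)
        residual = np.linalg.norm(b - (d * u + T @ u + 1j * u))
        if residual <= tol * np.linalg.norm(b):
            return u, k
    return u, max_iter

size = 64
rng = np.random.default_rng(2)
d = rng.uniform(0.0, 1.0, size)                    # hypothetical diagonal of D
t = toeplitz_first_column(alpha=1.5, size=size, mu=0.1)
b = rng.standard_normal(size) + 1j * rng.standard_normal(size)
u, iterations = pms_iteration(d, t, b)
```

The design choice illustrated here is that only the first column of C is ever needed for the solve; the dense matrices T, C, and N are formed only to keep the sketch short.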

Remark 3.1

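In Algorithm 3.2, the matrix \(\omega I+C+iI\) is circulant, so the corresponding linear system can be solved efficiently via fast and superfast algorithms. In fact, scheme (3.5) can be regarded as a standard stationary iteration method as follows:

\[ u^{(k+1)}=\widetilde{M}_{\omega }^{-1}\widetilde{N}_{\omega }u^{(k)}+\widetilde{M}_{\omega }^{-1}b, \]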

where \(\widetilde{M}_{\omega }=\omega I+C+iI\) and \(\widetilde{N}_{\omega }=\omega I-D+C-T\). Hence, \(\widetilde{M}_{\omega }^{-1}\) can be considered as a preconditioner of (3.1). We have naturally

where \(\kappa =\frac{1}{\sqrt{(\omega +\nu _{\min })^{2}+1}}<1\). Therefore, by a rough estimate, the eigenvalues of the matrix \(\widetilde{M}_{\omega }^{-1}A\) are more tightly clustered than those of the matrix A. This is verified by numerical experiments in the next section.
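The clustering of the preconditioned spectrum can be inspected on a small instance. The sketch below uses the identity \(\widetilde{M}_{\omega }^{-1}A=I-\widetilde{M}_{\omega }^{-1}\widetilde{N}_{\omega }\), so every eigenvalue of the preconditioned matrix lies within distance \(\Vert \widetilde{M}_{\omega }^{-1}\widetilde{N}_{\omega }\Vert _{2}\) of 1. The scaling `mu`, the random diagonal, and the function names are hypothetical, and how the two spreads compare depends on these choices.

```python
import numpy as np
from math import gamma

def toeplitz_first_column(alpha, size, mu):
    """First column mu * (c_0, ..., c_{size-1}) of the real Toeplitz part T."""
    c = np.empty(size)
    c[0] = gamma(alpha + 1.0) / gamma(alpha / 2.0 + 1.0) ** 2
    for k in range(size - 1):
        c[k + 1] = (1.0 - (alpha + 1.0) / (alpha / 2.0 + k + 1.0)) * c[k]
    return mu * c

def strang_first_column(t):
    """First column of Strang's circulant for a symmetric Toeplitz column t."""
    half = t.size // 2
    s = t.copy()
    s[half + 1:] = t[1:t.size - half][::-1]
    return s

size = 64
rng = np.random.default_rng(3)
d = rng.uniform(0.0, 1.0, size)                    # hypothetical diagonal of D
t = toeplitz_first_column(alpha=1.5, size=size, mu=0.1)
index = np.arange(size)
T = t[np.abs(index[:, None] - index[None, :])]
C = strang_first_column(t)[(index[:, None] - index[None, :]) % size]
omega = 0.5 * (d.min() + d.max())
identity = np.eye(size)

A = np.diag(d) + T + 1j * identity
M_tilde = C + (omega + 1j) * identity              # omega*I + C + i*I
N_tilde = np.diag(omega - d) + C - T               # omega*I - D + C - T

eig_A = np.linalg.eigvals(A)
eig_P = np.linalg.eigvals(np.linalg.solve(M_tilde, A))
# Radius of a disk centered at 1 that contains the preconditioned spectrum.
radius_bound = np.linalg.norm(np.linalg.solve(M_tilde, N_tilde), 2)
spread_P = np.abs(eig_P - 1.0).max()
spread_A = np.abs(eig_A - eig_A.mean()).max()
```

Comparing `spread_P` with `spread_A` for several values of `size` and `alpha` gives a quick numerical counterpart to the circles drawn in the eigenvalue plots.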

Remark 3.2

Because the circulant matrix C approximates the Toeplitz matrix T, the proof of convergence for the PMS iteration method is similar to that for the MS iteration method. Therefore, we do not repeat the convergence proof for Algorithm 3.2 here.

4 Numerical experiments

In this section, two numerical examples are provided to assess the feasibility and effectiveness of Algorithms 3.1–3.2 in terms of the iteration number (denoted by “IT”), the computing time (in seconds, denoted by “CPU”), and the relative error (denoted by “Error”). All tests start from the zero vector \(u^{(0)}\) and are terminated once either the number of iteration steps exceeds 10,000 (denoted by “–”) or the current iterate satisfies

In addition, all the experiments are carried out using MATLAB (version R2013a) on a personal computer with a 2.4 GHz central processing unit (Intel® Core™ 2 Quad CPU), 4.00 GB memory, and the Windows 7 operating system.

Example 1

Let \(\gamma =1, \rho =2, \beta =0, 1<\alpha <2\). Then system (1.1) is decoupled and becomes

subject to the initial boundary value conditions

Here and in the sequel, when direct methods are used to solve the linear systems in (3.3) and (3.5), the resulting methods are named MS1 and PMS1, respectively. Correspondingly, when the fast Zohar algorithm and the fast Fourier transform (FFT) are employed to solve the linear systems in (3.3) and (3.5), the resulting methods are named MS2 and PMS2, respectively.

In Tables 1–2, we report the number of iteration steps, the computing time, and the relative error of the methods used in the experiments for Example 1 when \(\alpha =1.2\) and \(\alpha =1.4\) with respect to different spatial grids. The time step sizes for all numerical experiments are set to be 0.05.

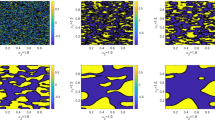

From Tables 1–2, we see that the numbers of iteration steps of the PMS2, PMS1, MS2, and MS1 methods are stable and remain equal to 3 as the number of spatial grid points grows, whereas those of the SOR iteration method increase quickly. As for the computing time, the PMS2 method requires the least of all methods in the experiments, while the SOR method already takes over 1000 seconds when \(M=10\text{,}000\). Figure 1, which compares the CPU times of the PMS2, MS2, and SOR iteration methods for Example 1, shows that the PMS2 method performs well.

Figure 1. Comparison of the resulting CPU time of these algorithms in Example 1

Example 2

Let \(\gamma =1\), \(\rho =2\), \(\beta =1\), \(1<\alpha <2\), then we have the following coupled system:

We take the initial boundary value conditions in the form

In Tables 3–4, we list the number of iteration steps, the computing time, and the relative error of the methods used in the experiments for Example 2 when \(\alpha =1.2\) and \(\alpha =1.4\) with respect to different spatial grids. The time step sizes for all numerical experiments are also set to be 0.05.

Tables 3–4 show that the numbers of iteration steps of the PMS2 and MS2 methods are constant regardless of the number of spatial grid points, whereas those of the SOR iteration method increase quickly. The PMS2 and MS2 methods require less computing time than the SOR iteration method. The advantage in computing time of the PMS2 method over the other methods for different values of α and M is shown in Fig. 2.

Figure 2. Comparison of the resulting CPU time of these algorithms in Example 2

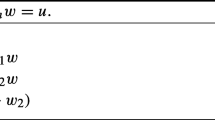

We also illustrate the favorable numerical behavior for Example 1 in terms of the eigenvalues of \(\widetilde{M}_{\omega }^{-1}A\). The circles sketched in Figs. 3–6 show that the eigenvalues of \(\widetilde{M}_{\omega }^{-1}A\) are more tightly clustered than those of A. The centers of the circles are the midpoints of the smallest and largest eigenvalues of the preconditioner \(\widetilde{M}_{\omega }^{-1}\), and their radii are half of the distance between the two points.

5 Conclusion

From the discretization of the spatial fractional coupled nonlinear Schrödinger equations, we have obtained a coefficient matrix that is the sum of a real diagonal matrix D and a complex symmetric Toeplitz matrix T̃, which is the real symmetric Toeplitz matrix T plus an imaginary identity matrix iI, and we have established a new splitting iteration method with medium-shifting (MS). Since the coefficient matrix of the subsystem solved at each step is Toeplitz, the MS iteration method can fully exploit the fast and superfast direct methods of complexity \(\mathcal{O}(M^{2})\) and \(\mathcal{O}(M\log^{2}M)\), respectively. Furthermore, we replace the Toeplitz matrix T by an appropriate circulant matrix C and obtain the preconditioned MS (PMS) iteration method. Owing to the special structure of the coefficient matrices in Algorithms 3.1–3.2, we need to solve linear systems associated with the shifted Toeplitz matrix and the shifted circulant matrix, respectively, by employing fast and superfast algorithms. Theoretical analysis shows that the approach is convergent, and numerical experiments demonstrate its efficiency.

Abbreviations

- CNLS: coupled nonlinear Schrödinger
- HSS: Hermitian and skew-Hermitian splitting
- IHSS: inexact Hermitian and skew-Hermitian splitting
- MS: medium-shifting
- PMS: preconditioned MS
- FFT: fast Fourier transform

References

Demengel, F., Demengel, G.: Fractional Sobolev spaces. In: Functional Spaces for the Theory of Elliptic Partial Differential Equations, pp. 179–228. Springer, London (2012). https://doi.org/10.1007/978-1-4471-2807-6

Yang, Q., Liu, F., Turner, I.: Numerical methods for fractional partial differential equations with Riesz space fractional derivatives. Appl. Math. Model. 34(1), 200–218 (2010)

Laskin, N.: Fractional quantum mechanics. Phys. Rev. E 62, 3135–3145 (2000)

Laskin, N.: Fractional quantum mechanics and Lévy path integrals. Phys. Lett. A 268, 298–305 (2000)

Guo, X.Y., Xu, M.Y.: Some physical applications of fractional Schrödinger equation. J. Math. Phys. 47, Article ID 082104 (2006)

Giacomoni, J., Mukherjee, T., Sreenadh, K.: Positive solutions of fractional elliptic equation with critical and singular nonlinearity. Adv. Nonlinear Anal. 6(3), 327–354 (2017)

Lyons, J., Neugebauer, J.: Positive solutions of a singular fractional boundary value problem with a fractional boundary condition. Opusc. Math. 37(3), 421–434 (2017)

Bisci, G.M., Radulescu, V., Servadei, R.: Variational Methods for Nonlocal Fractional Problems. Encyclopedia of Mathematics and Its Applications, vol. 162. Cambridge University Press, Cambridge (2016)

Bisci, G.M., Repovs, D.: Multiple solutions of p-biharmonic equations with Navier boundary conditions. Complex Var. Theory Appl. 59(2), 271–284 (2014)

Pucci, P., Xiang, M., Zhang, B.: Existence and multiplicity of entire solutions for fractional p-Kirchhoff equations. Adv. Nonlinear Anal. 5(1), 27–55 (2016)

Xiang, M., Zhang, B., Rǎdulescu, V.D.: Existence of solutions for perturbed fractional p-Laplacian equations. J. Differ. Equ. 260(2), 1392–1413 (2016)

Xiang, M., Zhang, B., Rǎdulescu, V.D.: Multiplicity of solutions for a class of quasilinear Kirchhoff system involving the fractional p-Laplacian. Nonlinearity 29(10), 3186–3205 (2016)

Zhang, X., Zhang, B., Repovš, D.: Existence and symmetry of solutions for critical fractional Schrödinger equations with bounded potentials. Nonlinear Anal., Theory Methods Appl. 142, 48–68 (2016)

Guo, B.L., Han, Y.Q., Xin, J.: Existence of the global smooth solution to the period boundary value problem of fractional nonlinear Schrödinger equation. Appl. Math. Comput. 204, 468–477 (2008)

Laskin, N.: Fractional Schrödinger equation. Phys. Rev. E 66, Article ID 056108 (2002)

Jeng, M., Xu, S.L.Y., Hawkins, E., Schwarz, J.M.: On the nonlocality of the fractional Schrödinger equation. J. Math. Phys. 51, Article ID 062102 (2010)

Luchko, Y.: Fractional Schrödinger equation for a particle moving in a potential well. J. Math. Phys. 54, Article ID 012111 (2013)

Jannelli, A., Ruggieri, M., Speciale, M.P.: Analytical and numerical solutions of fractional type advection-diffusion equation. AIP Conf. Proc. ICNAAM 1863(1), 81–97 (2017)

Leo, R.A., Sicuro, G., Tempesta, P.: A theorem on the existence of symmetries of fractional PDEs. C. R. Math. 352(3), 219–222 (2014)

Wang, D.L., Xiao, A.G., Yang, W.: Crank-Nicolson difference scheme for the coupled nonlinear Schrödinger equations with the Riesz space fractional derivative. J. Comput. Phys. 242(1), 670–681 (2013)

Atangana, A., Cloot, A.H.: Stability and convergence of the space fractional variable-order Schrödinger equation. Adv. Differ. Equ. 1, Article ID 80 (2013). https://doi.org/10.1186/1687-1847-2013-80

Wang, D.L., Xiao, A.G., Yang, W.: A linearly implicit conservative difference scheme for the space fractional coupled nonlinear Schrödinger equations. J. Comput. Phys. 272, 644–655 (2014)

Wang, D.L., Xiao, A.G., Yang, W.: Maximum-norm error analysis of a difference scheme for the space fractional CNLS. Appl. Math. Comput. 257, 241–251 (2015)

Axelsson, O.: Iterative Solution Methods. Cambridge University Press, Cambridge (1996)

Golub, G.H., Van Loan, C.F.: Matrix Computations, 3rd edn. The Johns Hopkins University Press, Baltimore (1996)

Varga, R.S.: Matrix Iterative Analysis. Prentice Hall, Englewood Cliffs (1962)

Bai, Z.Z.: Motivations and realizations of Krylov subspace methods for large sparse linear systems. J. Comput. Appl. Math. 283, 71–78 (2015)

Bai, Z.Z., Benzi, M., Chen, F.: Modified HSS iteration methods for a class of complex symmetric linear systems. Computing 87, 93–111 (2010)

Bai, Z.Z., Benzi, M., Chen, F.: On preconditioned MHSS iteration methods for complex symmetric linear systems. Numer. Algorithms 56, 297–317 (2011)

Bai, Z.Z., Golub, G.H., Ng, M.K.: Hermitian and skew-Hermitian splitting methods for non-Hermitian positive definite linear systems. SIAM J. Matrix Anal. Appl. 24(3), 603–626 (2003)

Ran, Y.H., Wang, J.G., Wang, D.L.: On HSS-like iteration method for the space fractional coupled nonlinear Schrödinger equations. Appl. Math. Comput. 271, 482–488 (2015)

Bai, Z.Z., Golub, G.H., Li, C.K.: Convergence properties of preconditioned Hermitian and skew-Hermitian splitting methods for non-Hermitian positive semidefinite matrices. Math. Comput. 76, 287–298 (2007)

Bai, Z.Z., Golub, G.H., Pan, J.Y.: Preconditioned Hermitian and skew-Hermitian splitting methods for non-Hermitian positive semidefinite linear systems. Numer. Math. 98(1), 1–32 (2004)

Ortigueira, M.D.: Riesz potential operators and inverses via fractional centred derivatives. Int. J. Math. Math. Sci. 2006, Article ID 48391 (2006). https://doi.org/10.1155/IJMMS/2006/48391

Acknowledgements

The authors are very much indebted to the anonymous referees for their helpful comments and suggestions.

Availability of data and materials

Not applicable.

Author information

Contributions

All authors contributed equally to the writing of this paper. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Wen, R., Zhao, P. A medium-shifted splitting iteration method for a diagonal-plus-Toeplitz linear system from spatial fractional Schrödinger equations. Bound Value Probl 2018, 45 (2018). https://doi.org/10.1186/s13661-018-0967-1

DOI: https://doi.org/10.1186/s13661-018-0967-1