Abstract

This paper presents a sequential Kalman asynchronous track fusion algorithm based on the effective sound velocity method. It addresses the random asynchrony of multi-node measurement information that the propagation effect of the sound channel causes in distributed underwater multi-target detection systems. The algorithm uses the effective sound velocity method to update the time stamp of the target information reported by each sensor, so as to obtain the actual time at which the target state information appeared in the underwater acoustic channel. Then, according to the actual asynchrony among the sensors, it fuses the asynchronous sensor tracks with a sequential filter. The simulation results show that the algorithm improves on the positioning accuracy of the original algorithm, adapts well to sensor target loss and accuracy loss, and has practical application value.

1 Introduction

The distributed detection system has high positioning accuracy, wide detection range, and strong anti-interference ability and plays an important role in underwater target detection and tracking. It is an inevitable development trend of information warfare and three-dimensional warfare.

Multi-sensor data fusion is one of the important techniques for distributed systems, and its main research results are concentrated in the field of synchronous data fusion. Based on the Kalman filter, references [1,2,3] established adaptive filters, sequential filters, and other methods to perform fusion estimation on multi-sensor measurements. Reference [4] proposed a distributed fusion estimator in the least squares sense to solve the problem of data packet loss. Reference [5] proposed a distributed tracking method for maneuvering targets based on cross-correlation, which has high value in engineering applications.

However, differences in sensor sampling rate, measurement accuracy, and processing step size lead to data asynchrony in the fusion system [6]. For the problem of data asynchrony in distributed systems, Professor Han Chongzhao classified the multi-sensor asynchronous fusion problem in detail. On this basis, Blair used the least squares method to estimate the equivalent synchronous state from the asynchronous measurements and then used a synchronous fusion method to obtain the global estimate [7, 8]. Reference [9] proposed an estimation algorithm based on covariance intersection (CI) fusion. Bar-Shalom proposed the A1, B1, and C1 fusion methods and derived the Bl, Al1, and Bl1 fusion methods for the asynchronous problem of lagged measurements [10]. In addition, the distributed cubature information filter [11, 12], the particle filter [13], the strong unscented tracking filter [14], and other algorithms have also been used to deal with asynchrony caused by different sampling and transmission speeds. Although many scholars have studied asynchronous information fusion, most existing methods rely on accurate prior information, which makes them difficult to apply in engineering practice.

For an underwater multi-sensor distributed detection system, because the sound velocity is finite and the sensors are spatially separated, the target sound signals that the distributed system receives at the same time were emitted at different times. Because underwater acoustic channels vary in time and space, the propagation time of the acoustic signal cannot be obtained accurately, which makes it difficult to estimate the asynchrony of multi-sensor data [15]. Drawing on the sound ray correction algorithm commonly used in long-baseline underwater acoustic positioning systems, this paper proposes a modified sequential Kalman filter algorithm for asynchronous track fusion to handle the data asynchrony caused by sound propagation. When the observation of one sensor is selected as the fusion benchmark, the asynchronous sequential Kalman filter algorithm based on constant sound velocity feedback (AS-KF-CSV) or the asynchronous sequential Kalman filter algorithm based on effective sound velocity feedback (AS-KF-ESV) is used to update the asynchronous information that each sensor reports for the same target, and the track fusion of the asynchronous information is performed after the algorithm parameters are adjusted. The simulation results show that the algorithm achieves higher estimation accuracy for the same measurements.

The structure of the paper is as follows: Section 2 introduces the asynchronous measurement model of multiple sensors. Section 3 presents the main result of this paper, namely the sequential Kalman filter algorithm based on sound propagation time feedback. Section 4 gives the performance analysis of the algorithm under various application conditions. Finally, concluding remarks are given in Section 5.

2 Multi-sensor asynchronous measurement model

Figure 1 shows a multi-sensor detection scenario. For a discrete-time system, the motion state of the target can be expressed as:

Here, \({\varvec{X}}(t) \in {\mathbf{R}}^{n}\) is the state vector of the target at time \(t\); \({\varvec{\varPhi}}(t) \in {\mathbf{R}}^{n \times n}\) is the state transition matrix; \({\varvec{G}}(t) \in {\mathbf{R}}^{n \times h}\) is the process noise distribution matrix; \({\varvec{V}}(t) \in {\mathbf{R}}^{h}\) is a Gaussian white noise sequence with zero mean and covariance \(Q(t)\); \({\mathbf{R}}\) is the set of real numbers; \(n\) is the dimension of the state vector \(X\); and \(h\) is the dimension of the noise vector \(V\).
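Read together, these symbols describe the standard linear discrete-time state model. As a sketch under that assumption, rather than a quotation of the authors' equation, the model can be written with a unit time step (the index \(t + 1\) is an assumption) as:

\[
{\varvec{X}}(t + 1) = {\varvec{\varPhi}}(t)\,{\varvec{X}}(t) + {\varvec{G}}(t)\,{\varvec{V}}(t)
\]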

Given the real coordinates of the target and the sensor, combined with the corresponding acoustic environment, the state sequence \(\left\{ {X_{ij} ;t} \right\}\) of the radiation signal from the target \(i\) to the sensor \(j\) can be calculated by the gamma acoustic model. Then, the observation value of the sensor \(j\) to target \(i\) can be expressed as:

where \(Z_{{t_{1} }}^{ij}\) is the observation of target \(i\) by sensor \(j\) at time \(t_{1}\); \(X_{{\min (t - t_{1} )}}^{ij} \in \left\{ {X_{ij} ;t} \right\}\) is the target state closest to \(t_{1}\); \(v_{{t_{1} }}\) and \(a_{{t_{1} }}\) are the system observation error and the environmental noise at \(t_{1}\), respectively, and are uncorrelated; and \(Pf_{j}\) is the detection probability of sensor \(j\). For a scene with multiple moving targets, the observation \(Z_{{t_{1} }}^{j}\) of sensor \(j\) at \(t_{1}\) is the union of the multi-target tracks and false tracks. To highlight the problem and simplify the model, this paper assumes that no sensor generates false tracks:

3 Modified sequential Kalman filter algorithm in asynchronous track fusion

3.1 Sequential Kalman filter

The essence of sequential filtering is recursion. Because it adapts well to the number of sensors and the form of the data, it is often used in multi-sensor information fusion. As the linear form of the Bayesian filter, the Kalman filter overcomes the general Bayesian filter's lack of an analytical solution and is a common method for target-tracking problems. In a distributed detection system, each sensor estimates the target state to obtain an incomplete posterior estimate of the current target state, and the sequential filter then updates this incomplete posterior estimate to obtain the complete posterior estimate.

Suppose a distributed detection system is composed of \(n\) sensors and a target enters the monitoring area. Taking sensor \(j_{1}\) as the fusion benchmark, the predicted state estimate and covariance matrix at \(t_{1}\) are calculated from the posterior estimate \(x_{{t_{1} - 1}}^{{j_{1} }}\) and covariance \(P_{{t_{1} - 1}}^{{j_{1} }}\) at \(t_{1} - 1\):

Then, Kalman gain can be calculated by the covariance matrix at \(t_{1}\):

Then, the observation value \(Z_{{t_{1} }}^{{ij_{1} }}\) of the sensor \(j_{1}\) is processed to calculate the incomplete posterior estimate:

Based on the estimation results of the sensor \(j_{1}\), the estimation of other sensors in the distributed system is predicted:

Then, the latest Kalman gain can be expressed as:

Then, the predicted estimates of all sensor nodes are calculated based on the reference node:

After iterative calculation of the measurement \(z_{{t_{1} }}^{{k_{n} }}\) of all sensors, a complete posterior estimate of the target state is obtained:

This completes the theoretical derivation of the sequential Kalman filter.
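The recursion described in this subsection can be sketched in a few lines. The code below is a minimal illustration of a standard sequential Kalman update, assuming linear measurements, mutually uncorrelated sensor noise, and measurements that refer to the same time (so the prediction from one sensor to the next reduces to the identity). The function names, the constant-velocity model, and all numeric values are illustrative assumptions, not the authors' implementation.

```python
import numpy as np

def predict(x, P, Phi, Q):
    """Time update: propagate the posterior at t1-1 to the prior at t1."""
    return Phi @ x, Phi @ P @ Phi.T + Q

def update(x, P, z, H, R):
    """Measurement update with one sensor's observation z."""
    S = H @ P @ H.T + R                  # innovation covariance
    K = P @ H.T @ np.linalg.inv(S)       # Kalman gain
    x_new = x + K @ (z - H @ x)
    P_new = (np.eye(len(x)) - K @ H) @ P
    return x_new, P_new

def sequential_step(x, P, Phi, Q, measurements):
    """One fusion cycle: predict once, then fold in each sensor in turn.

    measurements is a list of (z, H, R) tuples, fusion benchmark first.
    """
    x, P = predict(x, P, Phi, Q)
    for z, H, R in measurements:
        # The posterior after sensor k is the prior for sensor k+1.
        x, P = update(x, P, z, H, R)
    return x, P

# Illustrative 1-D constant-velocity target observed by two position sensors.
Phi = np.array([[1.0, 5.0], [0.0, 1.0]])   # detection period T = 5 s
Q = 0.01 * np.eye(2)
H = np.array([[1.0, 0.0]])
x0, P0 = np.array([0.0, 1.0]), 100.0 * np.eye(2)
measurements = [
    (np.array([5.5]), H, np.array([[25.0 ** 2]])),  # benchmark sensor
    (np.array([4.8]), H, np.array([[20.0 ** 2]])),
]
x_post, P_post = sequential_step(x0, P0, Phi, Q, measurements)
print(x_post, np.diag(P_post))
```

Under these assumptions the sequential result equals a single batch update with the stacked measurements, which is why sensors can be added or dropped without changing the filter structure.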

3.2 Asynchronous information correction based on sound propagation time

In underwater acoustic positioning systems, the sound velocity is often assumed to be constant over the acoustic environment, and this constant value is used for approximate calculation. The propagation time \(\tau_{{t_{1} }}^{{ik_{n} }}\) of the radiated sound from the target to each sensor can then be obtained, and the asynchronous time of each sensor's target observation output can be updated successively:
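As a hedged illustration of this constant sound velocity correction, the sketch below shifts each sensor's report time back by the straight-line travel time from the target. The value 1500 m/s, the coordinates, and the function names are assumptions chosen for the example.

```python
import math

SOUND_SPEED = 1500.0  # m/s, assumed constant sound velocity (illustrative)

def propagation_delay(target_xy, sensor_xy, c=SOUND_SPEED):
    """Straight-line travel time (s) from target to sensor at constant speed c."""
    return math.dist(target_xy, sensor_xy) / c

def emission_time(report_time, target_xy, sensor_xy, c=SOUND_SPEED):
    """Shift a sensor's report time back to when the sound left the target."""
    return report_time - propagation_delay(target_xy, sensor_xy, c)

# A target 3000 m from the sensor: the report at 10 s describes the state at 8 s.
print(emission_time(10.0, (3000.0, 1000.0), (0.0, 1000.0)))  # 8.0
```

Sorting the reports of all sensors by their corrected time stamps then gives the order in which they enter the sequential filter.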

However, in the marine environment, the sound velocity varies in time and space with the underwater acoustic environment, and the bending of sound rays also introduces errors into the estimated sound propagation delay.

For the scenario in Fig. 1, the time for the radiated signal from target \(i\) to reach sensors \(j_{1}\) and \(j_{2}\) is \(t_{1}\) and \(t_{2},\) respectively, and the average sound speed along each propagation path is \(c_{1}\) and \(c_{2},\) respectively. Then:

Transform the coordinate system so that \(j_{1x} = - j_{2x}\) and \(j_{1y} = j_{2y}\); then:

If \(c_{1}\) is used as the sound speed in the calculation, the positioning error can be calculated as:

It follows that the positioning error of the distributed system is proportional to the sound velocity error and the propagation time. According to Snell's law in layered media, calculating the accurate sound propagation time requires the source depth, the initial grazing angle, and the vertical distribution of sound velocity. These are often unavailable during target localization, so this paper uses the effective sound velocity method to correct the propagation time along the sound ray.
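The stated proportionality can be checked with a small worked example. The sketch below assumes a single straight propagation path and computes the range error made when a travel time is converted to distance with an assumed sound speed instead of the true average speed. The function name and the numeric values are illustrative assumptions, not the authors' error expression.

```python
def range_error(c_assumed, c_true, travel_time):
    """Range error (m) when travel_time (s) is converted with c_assumed (m/s)
    although the true average sound speed on the path is c_true (m/s)."""
    return (c_assumed - c_true) * travel_time

# Illustrative values: a 10 m/s sound speed error over 2 s and 4 s of travel.
print(range_error(1500.0, 1490.0, 2.0))  # 20.0
print(range_error(1500.0, 1490.0, 4.0))  # 40.0
```

Doubling the propagation time doubles the range error, so distant targets are affected most by an inaccurate sound speed.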

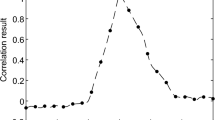

Effective sound velocity is defined as the ratio of the geometric distance between the source and the receiver to the actual sound propagation time [15]. According to the application scenario of the distributed underwater detection system, the effective sound velocity table of the monitoring area relative to each sensor can be easily measured. In practical engineering applications, the sound velocity value is looked up according to the prior information of the target, and the propagation time \(\tau_{{t_{1} }}^{{ik_{n} }}\) of the target sound wave relative to each sensor is then updated. In the simulations in this paper, the Bellhop model is used to traverse the monitoring area and generate an effective sound velocity table for each sensor. The sound velocity gradient used for the calculation and the sound ray tracks at a certain time are shown in Figs. 2 and 3.
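The table lookup described here can be sketched as follows. This is a minimal illustration, assuming a nearest-grid-point lookup and a toy travel-time function that stands in for a ray model such as Bellhop; `toy_travel_time`, the grid, and all numbers are hypothetical.

```python
import math

def build_esv_table(sensor_xy, grid_points, travel_time_fn):
    """Tabulate effective sound velocity = geometric distance / travel time."""
    table = {}
    for p in grid_points:
        distance = math.dist(p, sensor_xy)
        table[p] = distance / travel_time_fn(p, sensor_xy)
    return table

def lookup_esv(table, target_xy):
    """Return the table value at the grid point nearest the prior target position."""
    nearest = min(table, key=lambda p: math.dist(p, target_xy))
    return table[nearest]

def corrected_delay(table, sensor_xy, target_xy):
    """Propagation time (s) using the looked-up effective sound velocity."""
    return math.dist(target_xy, sensor_xy) / lookup_esv(table, target_xy)

def toy_travel_time(p, s):
    """Hypothetical stand-in for a ray model: effective speed falls with range."""
    d = math.dist(p, s)
    return d / (1500.0 - 0.005 * d)

sensor = (0.0, 0.0)
grid = [(x, 0.0) for x in (1000.0, 2000.0, 3000.0, 4000.0)]
table = build_esv_table(sensor, grid, toy_travel_time)
print(lookup_esv(table, (2100.0, 0.0)), corrected_delay(table, sensor, (2100.0, 0.0)))
```

A finer grid reduces the lookup error at the cost of a larger table, which matches the memory trade-off reported in the complexity analysis.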

3.3 Algorithm adaptive adjustment

The AS-KF-ESV algorithm is described above. In the actual marine environment, however, noise and other factors often cause missing track frames and track loss, which makes it difficult for the algorithm to converge. Therefore, fusion rules are designed on top of the algorithm to improve its adaptability and robustness.

In principle, the measurement of the same sensor should be used as the fusion benchmark throughout target tracking. Therefore, the sensor \(j_{1}\) closest to the target is selected as the fusion benchmark at the start of fusion, and flags are set to record the missed detection probability \(p_{m}\) of each sensor for the tracked target within a time window. When the reference node misses a frame, the node with the lowest missed-frame probability is selected as the temporary fusion reference node. When the reference node misses three consecutive frames, that sensor is considered to have lost the target, and the temporary fusion reference node is promoted to fusion reference node.
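These rules can be sketched as a small state machine. The class below is an illustration under stated assumptions: the temporary reference is chosen only among sensors that detected the target in the current frame, the missed detection probability is estimated as the miss rate over the last 10 frames, and ties go to the first sensor in the list. The class name, the window length, and the sensor labels are hypothetical.

```python
class ReferenceSelector:
    MAX_MISSES = 3  # consecutive misses before the reference is replaced

    def __init__(self, reference, sensors):
        self.reference = reference
        self.sensors = list(sensors)
        self.misses = 0                                # consecutive reference misses
        self.history = {s: [] for s in self.sensors}   # 1 = missed, 0 = detected

    def miss_rate(self, sensor, window=10):
        """Empirical missed detection probability over the last `window` frames."""
        recent = self.history[sensor][-window:]
        return sum(recent) / len(recent) if recent else 0.0

    def step(self, detected):
        """Return the fusion benchmark for this frame (None if no sensor reports).

        detected is the set of sensors that reported the target in this frame.
        """
        for s in self.sensors:
            self.history[s].append(0 if s in detected else 1)
        if self.reference in detected:
            self.misses = 0
            return self.reference
        self.misses += 1
        candidates = [s for s in self.sensors if s in detected]
        if not candidates:
            return None
        temporary = min(candidates, key=self.miss_rate)
        if self.misses >= self.MAX_MISSES:
            # The reference has lost the target: promote the temporary node.
            self.reference, self.misses = temporary, 0
        return temporary

selector = ReferenceSelector("j1", ["j1", "j2", "j3", "j4"])
frames = [{"j1", "j2", "j3", "j4"}, {"j3", "j4"}, {"j2", "j3", "j4"}, {"j2", "j3", "j4"}]
print([selector.step(f) for f in frames], selector.reference)
```

In this run the benchmark stays with j1 while it reports, falls back to j3 when j1 misses a frame, and j3 is promoted after the third consecutive miss.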

4 Numerical simulation analysis

4.1 Performance evaluation method

Because the simulation system is discrete, performance measures such as the root-mean-squared error (RMSE) can be misleading in practical use. Therefore, the optimal subpattern assignment (OSPA) distance is used to evaluate performance. The OSPA distance is defined as a distance between two sets. Let the set of target state estimates be \(X = \left\{ {x_{1} ,x_{2} , \ldots x_{n} } \right\}\) and the set of true target states be \(Y = \left\{ {y_{1} ,y_{2} , \ldots y_{m} } \right\}\), with \(0 < n \le m\). The OSPA distance can be defined as:

where \(d_{c} \left( {x_{i} ,y_{\pi i} } \right)\) is the cutoff distance measure of correlation degree between set \(X\) and set \(Y\), expressed as:

In the OSPA distance, \(c\) is the correlation cutoff radius and \(p\) is a dimensionless real number. By definition, the OSPA distance is obtained as:

In the simulation performance analysis of this paper, the OSPA parameter is set as \(c = 100,p = 1\).
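For readers who want to reproduce the metric, the sketch below implements the commonly used OSPA definition with cutoff \(c\) and order \(p\): the best assignment cost between the two sets plus a penalty of \(c^{p}\) per unmatched element, averaged over the larger set. It assumes Euclidean distance between states and searches assignments by brute force, which is only practical for small sets. The function name and the sample points are illustrative.

```python
import math
from itertools import permutations

def ospa(X, Y, c=100.0, p=1.0):
    """OSPA distance between two lists of points (tuples of coordinates)."""
    if len(X) > len(Y):
        X, Y = Y, X                      # ensure n <= m
    n, m = len(X), len(Y)
    if m == 0:
        return 0.0
    # Best assignment of the n points in X to distinct points in Y,
    # with each pairwise distance cut off at c.
    best = min(
        sum(min(c, math.dist(x, Y[j])) ** p for x, j in zip(X, perm))
        for perm in permutations(range(m), n)
    )
    # Each of the m - n unmatched points contributes the full cutoff penalty.
    return ((best + c ** p * (m - n)) / m) ** (1.0 / p)

estimates = [(0.0, 0.0), (10.0, 0.0)]
truth = [(3.0, 4.0), (10.0, 0.0)]
print(ospa(estimates, truth))                       # 2.5
print(ospa([(0.0, 0.0)], [(0.0, 0.0), (500.0, 0.0)]))  # 50.0
```

With \(c = 100\) and \(p = 1\), one missed target in a two-target scene costs 50 m on its own, so cardinality errors and localization errors are reported on the same scale.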

4.2 Simulation analysis of algorithm performance

(1) Algorithm validity analysis

Situation 1: Four sensors in the region form a distributed detection system for cooperative detection, and the observation output of each sensor is the target track against a background of interference. The standard deviations of the sensors are set to \(\left[ {25\;{\text{m}},20\;{\text{m}},20\;{\text{m}},20\;{\text{m}}} \right]\), the detection period is \(T = 5\;{\text{s}}\), and the startup times are \([10\;{\text{s}},11\;{\text{s}},12\;{\text{s}},13\;{\text{s}}]\). The target appears at time 0 with the initial state \([3000\;{\text{m}},8\;{\text{m/s}},0.1\;{\text{m/s}}^{2} ,1000\;{\text{m}},1\;{\text{m/s}}, - 0.01\;{\text{m/s}}^{2} ]\). The sensors sample 157 times over the whole simulation. The number of Monte Carlo runs is \(N = 100\), and the time resolution of the target motion is 1 ms, so the simulation error of the sound propagation time is less than 1 ms. It is assumed that all four sensors can observe the whole motion of the target. The AS-KF-ESV algorithm is used to process the asynchronous measurements. The simulation results are illustrated in Fig. 4, where the x and y coordinates are coordinates in the geodetic coordinate system, in m. OSPA is also used to evaluate the algorithm performance, and the evaluation results are illustrated in Fig. 5.

In this simulation experiment, the AS-KF-ESV algorithm can realize the asynchronous measurement fusion of distributed detection system, which verifies the effectiveness of the algorithm. As the initial value is the direct output of the sensor to the target location, the AS-KF-ESV algorithm's initial estimation accuracy is low. After several iterations, the algorithm converges to the optimal state. Near frame 20, the observed value of the sensor and the estimated value of the AS-KF-ESV algorithm both fluctuated to some extent, but the AS-KF-ESV algorithm soon completed convergence, and the algorithm reached a stable state.

The simulation conditions remain unchanged. Direct fusion method (D-KF), AS-KF, AS-KF-CSV, and AS-KF-ESV are, respectively, used for fusion processing. The simulation results are shown in Fig. 6.

In this simulation experiment, the D-KF algorithm failed to converge because of the time mismatch among the observation data of the four sensors, and the target position could not be estimated. The other three asynchronous fusion algorithms all completed the target estimation. At the beginning of the simulation, the accuracy curves of AS-KF-ESV and AS-KF-CSV were close because the target was close to the sensors. As the target moved away from the sensors, the estimation accuracy of AS-KF-ESV became better than that of AS-KF-CSV. Overall, the estimation accuracy with propagation time correction is better than that without time correction.

(2) Analysis of algorithm influence factors

Situation 2: Four sensors in the region form a distributed detection system for cooperative detection. The observation output of each sensor is the target track against a background of interference, the standard deviations of the sensors are set to \(\left[ {25\;{\text{m}},20\;{\text{m}},20\;{\text{m}},20\;{\text{m}}} \right]\), the detection period is \(T = 5\;{\text{s}}\), and the startup times are \([10\;{\text{s}},11\;{\text{s}},12\;{\text{s}},13\;{\text{s}}]\). There are two uniformly moving targets in the region, both of which appear at time 0. The initial state of target one is \([ - 1000\;{\text{m}},25\;{\text{m/s}},1000\;{\text{m}},13\;{\text{m/s}}]\), and the initial state of target two is \([ - 1000\;{\text{m}},11\;{\text{m/s}}, - 1000\;{\text{m}},13\;{\text{m/s}}]\). The sensors sample 157 times over the simulation. The number of Monte Carlo runs is \(N = 100\), and the time resolution of the target motion is 1 ms. It is assumed that all four sensors can observe the whole motion of the targets.

Figure 7 shows the simulation results of situation 2; the x and y coordinates in the figure are coordinates in the geodetic coordinate system, in m. Figure 8 compares the performance in situation 1 and situation 2. In this simulation experiment, the AS-KF-ESV algorithm completes the asynchronous track fusion for three targets with different motion states. No direct linear or nonlinear relationship between fusion accuracy and target velocity is observed.

Other elements in situation 1 remain unchanged, and the standard deviation of sensors is successively changed to \([5\;{\text{m}},5\;{\text{m}},5\;{\text{m}},5\;{\text{m}}]\), \([15\;{\text{m}},20\;{\text{m}},25\;{\text{m}},30\;{\text{m}}]\), \([15\;{\text{m}},20\;{\text{m}},25\;{\text{m}},30\;{\text{m}}]\) and \([35\;{\text{m}},35\;{\text{m}},35\;{\text{m}},35\;{\text{m}}]\). Simulation results are shown in Fig. 9.

In this simulation experiment, the accuracy of the fused target location from the AS-KF-ESV algorithm varies with the performance of the sensors. The AS-KF-ESV algorithm gives more accurate target location results when all sensors have consistent accuracy. Conversely, when the sensor accuracies differ widely, the fusion result of the AS-KF-ESV algorithm tends toward the higher-precision measurements.
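The tendency toward the higher-precision measurement is a general property of the Kalman measurement update and can be seen in a scalar example. The sketch below assumes a static scalar state, a nearly uninformative prior, and two sensors with standard deviations of 5 m and 35 m; the function name and the measurement values are illustrative and unrelated to the simulation data of this paper.

```python
def fuse_scalar(measurements, prior_mean=0.0, prior_var=1e12):
    """Sequentially fuse (value, variance) pairs with scalar Kalman updates."""
    x, P = prior_mean, prior_var
    for z, r in measurements:
        k = P / (P + r)        # Kalman gain: small when the sensor is noisy
        x = x + k * (z - x)
        P = k * r              # equals (1 - k) * P, written to avoid cancellation
    return x, P

# Sensor A reports 100 m with sigma = 5 m; sensor B reports 130 m with sigma = 35 m.
x, P = fuse_scalar([(100.0, 5.0 ** 2), (130.0, 35.0 ** 2)])
print(round(x, 3), round(P, 3))  # 100.6 24.5
```

The fused estimate weights the two sensors by their inverse variances (49/50 and 1/50 here), so it stays close to the accurate sensor while its variance drops slightly below that sensor's own.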

Keep other elements unchanged in situation 1, and change the startup time of each sensor to \([10\;{\text{s}},10.5\;{\text{s}},11\;{\text{s}},11.5\;{\text{s}}]\) and \([10\;{\text{s}},11.5\;{\text{s}},13\;{\text{s}},14.5\;{\text{s}}]\). The simulation results are shown in Fig. 10.

In this simulation experiment, the accuracy of the fusion estimate is relatively high when the time delay between sensors is small. Figure 11 shows the fusion results for different numbers of sensors in the same situation. It can be seen that the more sensors are fused, the higher the accuracy of the fusion results.

(3) Robustness analysis of the algorithm

To simulate the frame loss that occurs during actual detection and to analyze the algorithm's robustness, three control experiments were designed based on situation 1, in which each of the four sensors randomly lost 10, 20, and 30 frames, respectively. For each experiment, 100 Monte Carlo runs were performed. The simulation results are shown in Fig. 12.

In this simulation experiment, missing sensor frames affect the fusion accuracy. The more frames are missing, the lower the accuracy of the fusion estimate, which is consistent with the actual situation. Meanwhile, in all three control experiments, AS-KF-ESV completes the track fusion of multiple sensors, and the fusion accuracy is higher than that of the original measurements. In particular, when the number of missing frames is limited, the loss of fusion precision of the AS-KF-ESV algorithm stays within a controllable range, which verifies the robustness and practical value of the algorithm.

(4) Algorithm complexity analysis

To analyze the complexity of the proposed algorithm, the time and space resource usage of the AS-KF-ESV algorithm is compared with that of the benchmark algorithm under situation 1. Effective sound velocity tables with accuracies of 5 m, 10 m, and 15 m are built for the AS-KF-ESV lookup. The experiment comprises 10,000 Monte Carlo runs, and the comparison results are shown in Table 1.

The data in the table show that, under the same simulation conditions, the running time of the AS-KF-ESV algorithm is about 8% longer than that of the basic algorithm, and there is no obvious linear relationship between the running time and the accuracy of the effective sound velocity table. Compared with the basic algorithm, the AS-KF-ESV algorithm also uses significantly more computer resources, and its memory usage increases significantly as the accuracy of the effective sound velocity table improves. In addition, building the effective sound velocity table takes a lot of time in this simulation experiment; because the table is pre-built data, the time complexity reported in this paper does not include its construction.

5 Conclusion

This paper proposes a modified sequential Kalman asynchronous track fusion algorithm based on sound propagation time to solve the signal asynchrony caused by different sound propagation times in distributed underwater acoustic detection systems. After each sensor of the distributed system has located and stably tracked the target, the constant sound speed method or the effective sound speed method is used to calculate the propagation time of the target signal from the sound source to the receiving sensor. The asynchronous information of the tracked target is updated, and the asynchronous tracks are then fused by the sequential Kalman filter. During the fusion process, the members of the fusion group are dynamically updated according to the detection results of each sensor, which handles the cases in actual detection where a sensor loses the target or the target leaves the detection range of a certain sensor. The simulation results show that the algorithm effectively realizes the asynchronous measurement fusion of a distributed detection system and achieves higher estimation accuracy than the original algorithm under the same conditions. The robustness analysis also verifies the applicability of the algorithm in practical situations.

Availability of data and materials

Not applicable.

Abbreviations

- CI: Covariance intersection
- AS-KF-CSV: Asynchronous sequential Kalman filter algorithm based on constant sound velocity feedback
- AS-KF-ESV: Asynchronous sequential Kalman filter algorithm based on effective sound velocity feedback
- RMSE: Root-mean-squared error
- OSPA: Optimal subpattern assignment
- D-KF: Direct fusion method

References

Z. Zhou, Y. Li, J. Liu, G. Li, Equality constrained robust measurement fusion for adaptive Kalman-filter-based heterogeneous multi-sensor navigation. IEEE Trans. Aerosp. Electron. Syst. 49(4), 2146–2157 (2013)

W.-A. Zhang, S. Liu, L. Yu, Fusion estimation for sensor networks with nonuniform estimation rates. IEEE Trans. Circuits Syst. I Regul. Pap. 61(5), 1485–1498 (2014)

B. Jia, M. Xin, Multiple sensor estimation using a new fifth-degree cubature information filter. Trans. Inst. Meas. Control 37(1), 15–24 (2015)

R. Caballero-Aguila, A. Hermoso-Carazo, J. Linares-Perez, Networked distributed fusion estimation under uncertain outputs with random transmission delays, packet losses and multi-packet processing. Signal Process. 156, 71–83 (2019)

F. Govaers, W. Koch, An exact solution to track-to-track-fusion at arbitrary communication rates. IEEE Trans. Aerosp. Electron. Syst. 48(3), 2718–2729 (2012)

C. Han, H. Zhu, Z. Duan, Multisource Information Fusion, 3rd edn. (Tsinghua University Press Ltd, 2010)

W.D. Blair, T.R. Rice, A.T. Alouani, et al., Asynchronous data fusion for target tracking with a multitasking radar and optical sensor. In Acquisition, Tracking, and Pointing V, vol 1482 (International Society for Optics and Photonics, 1991), pp. 234–245

W.D. Blair, T.R. Rice, B.S. McDole, et al., Least-squares approach to asynchronous data fusion. In Acquisition, Tracking, and Pointing VI, vol 1697 (International Society for Optics and Photonics, 1992), pp. 130–141

X.H. Wu, S.M. Song, Covariance intersection-based fusion algorithm for asynchronous multirate multisensor system with cross-correlation. IET Sci. Meas. Technol. 11(7), 878–885 (2017)

Á.F. García-Fernández, W. Yi, Continuous-discrete multiple target tracking with out-of-sequence measurements. IEEE Trans. Signal Process. 69, 4699–4709 (2021)

M. Imani, U.M. Braga-Neto, Particle filters for partially-observed Boolean dynamical systems. Automatica 87, 238–250 (2018)

X. Wang, Z. Xu, X. Gou et al., Tracking a maneuvering target by multiple sensors using extended kalman filter with nested probabilistic-numerical linguistic information. IEEE Trans. Fuzzy Syst. 28(2), 346–360 (2020)

A.F. Garcia-Fernandez, Track-before-detect labeled multi-bernoulli particle filter with label switching. IEEE Trans. Aerosp. Electron. Syst. 52(5), 2123–2138 (2017)

W. Muhammad, A. Ahsan, Airship aerodynamic model estimation using unscented Kalman filter. J. Syst. Eng. Electron. 31(6), 1318–1329 (2020)

D. Sun, H. Li, C. Zheng, X. Li, Sound velocity correction based on effective sound velocity for underwater acoustic positioning systems. Appl. Acoust. 151, 55–62 (2019)

Acknowledgements

Not applicable.

Funding

National Natural Science Foundation of China (U20A20329).

Author information

Contributions

XS provides research ideas, oversight, and leadership responsibility for the research activity planning and execution, including mentorship external to the core team. YW provides algorithm design and computer code implementation, and was a major contributor in writing the manuscript. MM analyzes or synthesizes data. LS analyzes or synthesizes data. ZW analyzes or synthesizes data. All authors read and approved the final manuscript.

Authors' information

Xueli Sheng was born in 1978. She received her Dr. Eng. Degree, in 2005. She is currently a Professor and a Doctoral Adviser. She presided over more than ten projects including the National Natural Science Foundation of China, the Pre-Research Project of the Naval Equipment Department, the Advanced Innovation Project of the Central Military Science and Technology Commission, and the project of the National Defense Key Laboratory Foundation. She has published more than 80 academic papers, applied for four national technology inventions, and published one book called Underwater Acoustic Channel. Her research interests mainly include underwater acoustic signal processing, multi-platform/bionic sonar technology, and semi-physical simulation of complex acoustic systems. She was a recipient of Science and Technology Awards at provincial and ministerial levels four times.

Yan Wang was born in 1987. He received his M.S. degree in underwater acoustic engineering from Harbin Engineering University, Harbin, China, in 2020, where he is currently pursuing a Ph.D. degree. His research interests include multi-target tracking and data fusion.

Mengfei Mu was born in 1998. He received a bachelor's degree in underwater acoustic engineering from Harbin Engineering University, Harbin, China, in 2020, where he has been pursuing a Ph.D. degree since 2021. His research interests include underwater acoustic signal processing and active sonar detection.

Song Lai, Male, Harbin Engineering University, Master of Engineering, mainly engaged in data fusion.

Zeyi Wu, M.Sc., Harbin Engineering University, Harbin, Heilongjiang 150001, China, mainly engaged in data fusion.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sheng, X., Wang, Y., Mu, M. et al. A modified asynchronous sequential Kalman track fusion based on sound propagation time. EURASIP J. Adv. Signal Process. 2023, 26 (2023). https://doi.org/10.1186/s13634-023-00987-3

DOI: https://doi.org/10.1186/s13634-023-00987-3