Abstract

Background

Random survival forest (RSF) models have been identified as alternative methods to the Cox proportional hazards model in analysing time-to-event data. These methods, however, have been criticised for the bias that results from favouring covariates with many split-points and hence conditional inference forests for time-to-event data have been suggested. Conditional inference forests (CIF) are known to correct the bias in RSF models by separating the procedure for the best covariate to split on from that of the best split point search for the selected covariate.

Methods

In this study, we compare the random survival forest model to the conditional inference model (CIF) using twenty-two simulated time-to-event datasets. We also analysed two real time-to-event datasets. The first dataset is based on the survival of children under-five years of age in Uganda and it consists of categorical covariates with most of them having more than two levels (many split-points). The second dataset is based on the survival of patients with extremely drug resistant tuberculosis (XDR TB) which consists of mainly categorical covariates with two levels (few split-points).

Results

The study findings indicate that the conditional inference forest model is superior to random survival forest models in analysing time-to-event data that consists of covariates with many split-points based on the values of the bootstrap cross-validated estimates for integrated Brier scores. However, conditional inference forests perform comparably similar to random survival forests models in analysing time-to-event data consisting of covariates with fewer split-points.

Conclusion

Although survival forests are promising methods in analysing time-to-event data, it is important to identify the best forest model for analysis based on the nature of covariates of the dataset in question.

Similar content being viewed by others

Background

The Cox-proportional hazards model [1] is a popular choice for analysis of right censored time-to-event data. The model is convenient for its flexibility and simplicity, however, it has been criticised for its restrictive proportional hazards (PH) assumption [2–4] which is often violated. A number of extensions to the Cox proportional hazards model to handle time-to-event data where the PH assumption is not met have been suggested and implemented [5–7]. These extensions often remain dependent on restrictive functions such as the heaviside functions that may be difficult to construct and implement or fail to fit the dataset in question. Other analysis approaches to handle non-proportional hazards include methods such as stratification, but these limit the ability to estimate the effect(s) of the stratification variable(s).

Survival trees and random survival forests (RSF) are an attractive alternative approach to the Cox proportional hazards models when the PH assumption is violated [8]. These methods are extensions of classification and regression trees and random forests (RF) [9, 10] for time-to-event data. Survival tree methods are fully non-parametric, flexible, and can easily handle high dimensional covariate data [11–13]. Drawbacks of random survival forests include the common drawbacks of random forests including a bias towards inclusion of variables with many split points [14–17]. This effect leads to a bias in resulting summary estimates such as variable importance [15, 17]. Conditional inference forests (CIF) are known to reduce this selection bias by separating the algorithm for selecting the best covariate to split on from that of the best split point search [15, 17, 18].

Despite the fact that the CIF survival model has been identified to reduce bias in covariate selection for splitting in survival forest models, no study has been done to compare the predictive performance of the CIF model and random survival forest models on time-to-event data in the presence of covariates that have many and fewer split-points. This study for the first time, examines and compares the predictive performance of the CIF and the two random survival forest models through a simulation study. Bootstrap cross-validated estimates of the integrated Brier scores were used as measures of predictive performance [19]. In total, twenty-two time-to-event datasets were simulated. Eighteen of the datasets were simulated in such a way that they either have binary covariates (few split-points), polytomous covariates (many split-points) or both. Four of the datasets were simulated in such a way that they have covariate interactions. Other properties of these datasets are further described in “Methods” section. The two real datasets used in this study are Dataset 1, which investigates the survival of 6692 children under the age of five in Uganda and contains categorical covariates with many levels (polytomous covariates). Dataset 2 evaluates the survival of 107 patients with extremely drug resistant tuberculosis (XDR TB) in South Africa. It is a small dataset and contains only categorical binary covariates.

This article is structured as follows: The “Methods” section describes the methods used. We discuss the methods used to evaluate the methods in the “Model evaluation” section. In the “Simulation study” section, we present the simulation study together with the simulation results. The “Real data application” section introduces the two real datasets that we used in this study and also gives the corresponding real data analyses results and lastly the “Discussion and conclusions” section presents the discussion and conclusions drawn from this study.

Methods

A random survival forest (RSF) is an assemble of trees method for analysis of right censored time-to-event data and an extension of Brieman’s random forest method [14, 20]. Survival trees and forests are popular non-parametric alternatives to (semi) parametric models for time-to-event analysis. They offer great flexibility and can automatically detect certain types of interactions without the need to specify them beforehand [13]. A survival tree is built with the idea of partitioning the covariate space recursively to form groups of subjects who are similar according to the time-to-event outcome. Homogeneity at a node is achieved by minimizing a given impurity measure. The basic approach for building a survival tree is by using a binary split on a single predictor. For a categorical covariant X, a split is defined as X≤c where c is some constant. For a categorical covariate X with many split-points, the potential split is X∈{c 1,…,c k } where c 1,…,c k are potential split values of a predictor variable X. The goal in survival tree building is to identify prognostic factors that are predictive of the time-to-event outcome. In tree building, a binary split is such that the two daughter nodes obtained from the parent node are dissimilar and several split-rules (different impurity measure) for time-to-event data have been suggested over the years [13, 21].

The impurity measure or the split-rule of the algorithm is very important in survival tree building. In this article, we used the log-rank and the log-rank score split-rules [22–24].

The log-rank split-rule

Suppose a node h can be split into two daughter nodes α and β. The best split at a node h, on a covariate x at a split point s ∗ is the one that gives the largest log-rank statistic between the two daughter nodes [22]. The algorithm for building a survival tree using the split-rule based on the log-rank statistic [13, 22, 25, 26] is given in Algorithm 1 below.

The log-rank score split-rule

The log-rank score split-rule [23] is a modification of the log-rank split-rule mentioned above. It uses the log-rank scores [24]. Given r=(r 1,r 2,…,r N ), the rank vector of survival times with their indicator variable (T,δ)=((T 1,δ 1),(T 2,δ 2),…,(T N ,δ N )) and that a=a(T,δ)=(a 1(r),a 2(r),…,a N (r)) denotes the score vector depending on ranks in vector r. Assume that the ranks order the predictor variables in such away that x 1<x 2<…<x N . The log-rank scores for an observation at T l is given by:

where

is the number of individuals that have had the event of interest or were censored before or at time T k .

where \(\bar {a}\) and \(S_{a}^{2}\) are the mean and sample variance of the scores {a j :j=1,2,…n}. The best split is the one that maximizes |i(x,s ⋆)| over all \(x_{j}^{'}\)s and possible splits s ⋆.

Trees are generally unstable and hence researchers have recommended the growing of a collection of trees [10, 27], commonly referred to as random survival forests [20, 26].

Random survival forests algorithm

The random survival forests algorithm implementation is shown in Algorithm 2 [20, 26].

For this study, we used the log-rank and the log-rank score split-rules in Step 2 of the algorithm. Two random survival forest algorithms were generated denoted as RSF1 and RSF2. RSF1 consists of survival trees built using the log-rank split-rule whereas RSF2 consists of survival trees built using the log-rank score split-rule.

The random survival forests algorithm, has been criticised for having a bias towards selecting variables with many split points and the conditional inference forest algorithm has been identified as a method to reduce this selection bias. Conditional inference forests are formulated in such a way that it separates the algorithm for selecting the best splitting covariate is separated from the algorithm for selecting the best split point [15–18]. To illustrate this, consider a dataset with a time-to-event outcome variable T and two explanatory variables x 1 and x 2 with k 1 and k 2 possible split-points, respectively. Furthermore, consider that T is independent of x 1 and x 2, and that k 1<k 2. In the random survival forests algorithm, the search for the best covariate to split on and the best split-point by comparing the effect for both the covariates on T, gives x 2 the highest probability of being selected just by chance.

Conditional inference trees and forests

Algorithm 3 outlines the general algorithm for building a conditional inference tree as presented by [28].

For time-to-event data, the optimal split-variable in step 1 is obtained by testing the association of all the covariates to the time-to-event outcome using an appropriate linear rank test [28, 29]. The covariate with the strongest association to the time-to-event outcome based on permutation tests [28], is selected for splitting. In covariates selection, a linear rank test based on the log-rank transformation (log-rank scores) is performed. Using the distribution of the resulting rank statistic, p-values are evaluated and the covariates with minimum p-value is known to have the strongest association to the outcome [17, 30, 31]. Although the standard association test is done in the first step, a standard binary split is done in the second step. A single tree is considered unstable and hence research has recommended the growing of an entire forest [9, 10, 20]. The forest of conditional inference trees results into a conditional inference (CIF) model. The CIF model algorithm for time-to-event data is implemented in the R package called party.

To compare the performance of the three models used in this study, integrated Brier scores are used [32] which are described in the section below.

Model evaluation

Brier scores [32] are used to compare the predictive performance of the two random survival forests models of all models. At a given time point t, the Brier score for a single subject is defined as the squared difference between observed event status (e.g., 1=alive at time t and 0=dead at time t) and a model based prediction of surviving time t. Using the test sample of size denoted as N test, Brier scores at time t are given by

Where \(\widehat {G}\left (t|x\right)\approx P\left (C > t|X=x\right)\) is the Kaplan-Meier estimate of the conditional survival function of the censoring times.

The integrated Brier scores (IBS) are given by

To avoid the problem of overfitting that arises from using the same dataset to train and test the model, we used the Bootstrap cross-validated estimates of the integrated Brier scores [19]. The prediction errors are evaluated in each bootstrap sample.

These have been implemented in the pec package [19]. We fit a pec object with the three rival prediction models (RSF1, RSF2 and CIF). The three models were passed on as a list to the pec object and chose s p l i t M e t h o d=B o o t632p l u s. We set B=5, to have reasonable run times and reported Bootstrap cross-validated estimates for integrated Brier scores. Prediction error rates of 50% or higher are useless because they are no better than tossing a coin [12, 33].

Simulation study

Simulated time-to-event datasets

To simulate time-to-event datasets for this study, two frameworks were used. The first framework is more flexible and it generates time-to-event data from known distributions for proportional hazard models by inverting the cumulative baseline hazard function. The desired censoring parameters were achieved by randomly generating them from a binomial distribution. This framework was used to generate polytomous and datasets with covariate interactions [34–36]. The second framework uses a nested numerical integration and a root-finding algorithm to choose the censoring parameter that achieves predefined censoring rates in simulated time-to-event datasets [34]. This framework was used to generate time-to-event datasets with binary covariates.

Time-to-event datasets with binary covariates

Ten covariates are considered in each simulated dataset that is, X j ={X 1,X 2,X 3,X 4,X 5,X 6,X 7,X 8,X 9, X 10}. Each of the covariates is randomly generated from a Bernoulli distribution with probability p j , X j ∼B(p j ). Weibull event times were considered, these were generated using a baseline hazard function \(h_{0}(t)=\frac {\theta }{\rho ^{\theta }}t^{\theta -1}\,\). The scale parameter is given by \(\lambda =\exp \left (-\frac {\beta _{0}}{\theta }-\sum _{j=1}^{n}\frac {\beta _{j}}{\theta }X_{j}\right)\), where β 0 is the coefficient of the intercept term log(ρ −θ). The shape parameter θ was set at 0.8,1.5, or 1 to represent decreasing, increasing and constant hazard, respectively. The corresponding intercept for each dataset was β 0=−0.98,−1.44, or 0.9. The regression coefficients for the 10 covariates were defined as {0.5,−0.045,0.6,−0.03,−2,0.5,0.25,−0.04,0.33,0.3}, {0.1, −0.8,0.5,−0.2,−3,0.7,0.2,0.4,0.3}, {0.4,−0.7,1,−2,−3,0.7,0.06,0.5−0.43,0.3}, respectively. Censoring times were generated from a Weibull distribution with a shape parameters 0.4,2.4, or 1. The censoring parameter θ was computed numerically to get 50%,20% and 80%, respectively. In total, six time-to-event datasets were generated from this covariate design and other properties of these datasets are stated in Table 1.

Time-to-event datasets with polytomous covariates

Covariates were generated by sampling with replacement from a list of desired categories. Event times were generated by inverting cumulative hazard function. T=−1∗ log(U)∗ρ∗e x p(−λ)(1/θ). Where \(\lambda =\exp \left (-\frac {\beta _{0}}{\theta }-\sum _{j=1}^{n}\frac {\beta _{j}}{\theta }X_{j}\right)\). The shape parameter θ was set at 0.5,1.5 and 1 to yield time-to-event datasets with a decreasing, increasing and a constant hazard, respectively. The corresponding censoring times were also generated from a Weibull distribution with a shape and scale parameter of 0.4, 1.2 and 1.1, receptively. In this covariate design, six datasets were generated. Other properties for these datasets are given in Table 1.

Time-to-event datasets with binary and polytomous covariates

We used the same frame work for generating survival times as that of generating time-to-event datasets with polytomous covariates described above. Binary covariates, were added to the dataset by generating them from a Bernoulli distribution. In total, six datasets were generated.

Time-to-event datasets with covariate interactions

We used the same frame work for generating survival times as that for generating time-to-event datasets with polytomous covariates and four datasets were generated. The codes used to generate these datasets is provided as an additional file. In total, twenty-two datasets are generated for this simulation study. The Table 1 presents properties for each simulated dataset.

In this study, the two random survival forest methods, that is, the one consisting of trees built with the log-rank split-rule (RSF1) and the other consisting of survival trees built with the log-rank score split-rule (RSF2) are fit to the data and compared to the CIF model.

To have reasonable run times, 100 survival trees are grown for each survival forest fitted on each simulated dataset and this is repeated 100 times. The models are then evaluated using bootstrap cross-validated estimates of the integrated Brier scores. This estimate is recorded from each fitted survival forest [37–39]. All computations and analyses were carried out in R, using R version 3.3.2. We used the randomForestSRC [26], party [40], pec [19], rms [41], and doMC packages.

Results on simulated datasets

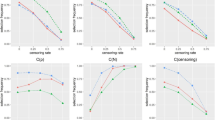

For each repetition, bootstrapped-cross-validated integrated brier scores were recorded. The results are then reported using box-plots as shown below.

Figure 1 presents box plots of the prediction errors for RSF1, RSF2 and the CIF models on all the six datasets simulated with binary covariates. In general, all models have a good predictive performance on the dataset.This is because all the prediction error values are below the 50% cut-off point. However, there are some unique differences in the predictive performance between two random survival forests and the CIF model which can not be ignored. On Data 1, the prediction errors for the CIF model are sandwiched between the error values for RSF1 and RSF2. The prediction error values for The CIF model on the remaining five datasets are lowest compared to those of the RSF1 and RSF2 model. The box plots of the error values for the three models appear to be almost symmetrical. The results therefore indicate that the predictive performance for the two random survival forests and the conditional inference survival forest model is similar or comparable in performance on simulated time-to-event data with only binary covariates. Figure 2 indicates that all the three models have a good predictive performance on all the six datasets because the error values are below the 50% mark. Although the prediction error values for the CIF model appear to be at par with those of RSF2 on Data 1, the model has the lowest error values compared to RSF1 and RSF2 on the remaining five datasets. This is not a surprise because the CIF model is known to be superior in performance to random survival forests models in the presence of covariates with many split-points.

The results presented by using box plots in Fig. 3 give prediction errors of RSF1, RSF2 and the CIF model on six datasets simulated to have both binary and polytomous covariates. On all the six datasets, the CIF model has the lowest prediction error rates. This is because conditional inference forests have an added advantage for prediction in the presence of covariates with many split-points because of the way it does the search for covariate selection and split-point.

Figure 4 presents box plots for predictive performance of the three survival forest model on simulated time-to-event data with covariate interactions. The prediction error values for the CIF model are lowest on all the six datasets. Since covariate interactions are simulated in such a way that some of the covariates have many split-points, the superiority in performance of the CIF model was not a surprise.

Generally, all the three survival forest models have a good predictive performance based on the bootstrap cross-validated estimates of integrated Brier score. However, there are some differences in the performance of each of the models on each of the simulated dataset as discuss above. The results in summary suggest that conditional inference forests have a good predictive performance compared to the two random survival forest models especially on time-to-event datasets with polytomous covariates. The model is comparable in predictive performance to random survival forests models in the analysis of simulated time-to-event datasets with binary covariates.

Real data application

To further investigate the results obtained from the simulation study on the predictive performance of the three survival forest models, we analysed two real datasets whose covariate properties are similar to those used in the simulation study. Note that in identifying the most important covariates in explaining survival in the analysis of both datasets, permutation importance was used as measure of variable importance [20, 42].

Dataset 1

The dataset can be found on the demographic health survey website [43]. In this survey, a representative sample of 10,086 households was selected during the 2011 Uganda Demographic and Health Survey (UDHS). The sample was selected in two stages. First a total of 404 enumeration areas (EAs) were selected from among a list of clusters sampled for the 2009/10 Uganda National Household Survey (2010 UNHS). In the second stage of sampling, households in each cluster were selected from a complete listing of households. Eligible women for the interview were aged between 15–49 years of age who were either usual residents or visitors present in the selected household on the night before the survey. Out of 9247 eligible women, 8674 were successively interviewed with a response rate of 94% (91% in urban and 95% in rural areas). The study population for this analysis includes infants born between exactly one and five years preceding the 2011 UDHS.

Explanatory variables

In this dataset, 19 covariates are considered for analysis and their choice was based on literature studies [44–46]. To some extent, other limitations like high level of missingness in the dataset influenced our covariate choice. The dataset is readily available from the Demographic and Health Survey Data website [43]. Summary characteristics can be found in Table 2.

The time-to-event outcome of interest is time to death of children under the age of five. The range of values of this outcome lie between one month and 59 months of age. Children that were alive at the time of the interview were considered to be right censored. The dataset has a high censoring rate of 93%.

Dataset 2

Between August 2002 and October 2012, a total of 107 adult patients with microbiologically confirmed XDR-TB from three provinces in South Africa, were hospitalised for treatment in three tuberculosis treatment facilities (Brooklyn Chest Hospital, Western Cape [B], Gordonia Hospital, Upington, Northern Cape, [H] and Sizwe Tropical Disease Hospital, Johannesburg, Gauteng [S]). All the three hospitals are specialist referral centres for the treatment of drug resistant TB, aimed at serving patients from across respective provinces. This dataset has been published in [47].

Explanatory variable

The covariates of interests were selected based on literature [47, 48] and limitations of high level of missingness.

Table 3 shows the distribution of deaths in each of the covariates considered. The outcome variable is survival time in days from diagnosis of XDR-TB.

The median survival time was 30.9(IQR =19.5) months. A total of 79 (74%) patients died, with a low censoring rate of 26%.

Analysis

Dataset 1

Results from the two random survival forest models applied to Dataset 1 shown in Fig. 5 identify the number of children under the age of five in the household as the most informative predictor of time to death for children under-five in Uganda.

Other covariates strongly associated to under-five child mortality in Uganda include; the number of births in the past five years, birth order, wealth index and the total number of children ever born. Both random survival forest models have similar results in identifying the same factors affecting the time to survival of children under the age of five years in Uganda.

The results from the CIF model on the same dataset in Fig. 6, agree with the top two predictors by RSF1 and RSF2. The top predictors are; number of children under the age of five in a household and the number of births in the past five years. Some covariates in the CIF model that move up in ranks compared to RSF1 and RSF2 include; the number of births in the past one year and the sex of the household head. These two covariates were also found important in explaining under-five child mortality rates by [49] using the Cox proportional hazards models.

Dataset 2

Figure 7, presents results of variable importance from RSF1 and RSF2 on Dataset 2. The covariates are ranked according to their degree of importance in RSF1. The combined HIV/ART status is ranked most important among the covariates considered in predicting the time to death of patients with XDR TB in both random survival forest models. Age at diagnosis and specific prescribed drugs (Isoniazid, Amoxicillin and Clofazamine) are also ranked highly important. The same drugs were found to be predictive in the multivariate Cox analysis [47].

The results obtained from fitting the CIF model on the same dataset in Fig. 8, indicate that the age at diagnosis and HIV/ART status are again highly associated with the outcome.

The three survival forest models give similar results in determining the factors affecting the survival of patients with XDR TB.

Results on real datasets

For each survival forests, 100 trees were built and this was repeated 50 times. For each repetition, bootstrapped-cross-validated integrated brier scores were recorded. The results on predictive performance of all the models used in this study on Dataset 1 and Dataset 2 is shown in Fig. 9. Overall, the three models show a good predictive performance on the two real datasets as shown in Fig. 9. On Dataset 1, the conditional inference forest model has the lowest prediction error values compared to the two random survival forest models. On Dataset 2, however, the two random survival forest model are at par in predictive performance compared to the conditional inference forests model. Infact the prediction error values of the three models are all positively skewed. These results on the predictive performance of the three survival forest models confirm that the CIF model is superior in predictive performance to the two random survival forest models on the real survival dataset with covariates that have many split-points. The study also shows that the CIF model and the two random survival forests are comparable in predictive performance on real time-to-event datasets with covariates that have fewer split-points. Similar results were obtained from the simulation study.

Discussion and conclusions

In this study, we compared the predictive performance of three survival forests models on twenty-two simulated time-to-event datasets and two real time-to-event datasets. First, eighteen datasets were simulated to have covariate properties of interest that is, fewer split-points vs many split-points. Four more time-to-event datasets with covariate interactions were also simulated. The first two forest models are random survival forests models with trees built based on the log-rank and the log-rank score split-rule, respectively. The third survival forest model consists of conditional inference trees.

The results from comparing the predictive performance on three survival forest models on simulated time-to-event datasets indicate that the three random survival forest models have a good predictive performance. Despite this fact, the study has shown that there are some variations in predictive performance for these three models in the presence of covariates with many vs those with fewer split-points. The study suggests that conditional inference forests are superior in predictive performance to random survival forests on time-to-event datasets with polytomous covariates. The results also indicate that the three models are comparable in predictive performance on time-to-event datasets with categorical covariates that are binary in nature. The superiority in performance of the CIF model is likely due to the way it handles the split variable and the split point selection especially in the presence of covariates with many split-points. These results are similar to those from the simulation study. This study therefore confirms the results that conditional inference forests are desirable in analysing time-to-event data consisting of covariates with many split-points. This results is therefore in agreement with the assertion made from a study by [28] that the CIF model is desirable in analysing time-to-event data in the presence of covariates with many split points.

The main finding of this study is that random survival forests perform comparably to conditional inference forests in analysing time-to-event data consisting of covariates with few split-points and that conditional inference forests are desirable in situations where the data consists of covariates with many split-points. It is therefore important for researchers to select the best survival forest model to analyse any time-to-event dataset based on the nature of its covariates.

Note that the conditional inference forest for time-to-event analysis and random survival forests have a difference in the way they calculate the predicted time-to-event probabilities and it is not yet clear whether this has an influence on their overall predictive performance [19]. The CIF model utilizes a weighted Kaplan-Meier estimate based on all subjects from the training dataset and it therefore put more weight on terminal nodes where there is a large number of subjects at risk whereas random survival forests use equal weights on all terminal nodes. Further studies need to be done to understand whether this property has an influence on the predictive performance of these models.

The limitation of this study is that we used random survival forest models that consists of survival trees based on the log-rank split-rule. Recent studies have raised concerns that since the log-rank split-rule is based on the proportional hazards assumption, it may negatively affect the predictive performance of the survival forest model. A recent study has recommended the use of the integrated absolute difference between the two daughter nodes survival functions as the splitting rule especially in circumstances when the hazard functions cross [50]. Further studies would therefore compare the predictive performance of the CIF model to robust random survival forest models resulting from using robust split-rules especially in the presence of covariates that violate the PH assumption. Another limitation of the study is that the real datasets used had missing data and we assumed that the data was missing completely at random. Only a complete case analysis was considered which negatively affect outcomes.

References

Cox DR. Regression models and life tables (with discussion). J R Stat Soc Ser B. 1972; 34(2):187–220.

Platt RW, Joseph K, Ananth CV, Grondines J, Abrahamowicz M, Kramer MS. A proportional hazards model with time-dependent covariates and time-varying effects for analysis of fetal and infant death. Am J Epidemiol. 2004; 160(3):199–206.

Ng’andu NH. An empirical comparison of statistical tests for assessing the proportional hazards assumption of cox’s model. Stat Med. 1997; 16(6):611–26.

Fisher LD, Lin DY. Time-dependent covariates in the cox proportional-hazards regression model. Annu Rev Public Health. 1999; 20(1):145–57.

Therneau TM. Extending the Cox model In: Lin DY, Fleming TR, editors. Proceedings of the First Seattle Symposium in Biostatistics. New York: Springer Verlag: 1997. p. 51–84.

Wei L. The accelerated failure time model: a useful alternative to the cox regression model in survival analysis. Stat Med. 1992; 11(14-15):1871–9.

Therneau TM, Grambsch PM. Modeling survival data: extending the Cox model.New York: Springer Verlag; 2000.

Ehrlinger J. ggRandomForests Exploring random forest survival. R Vignette. 2016.

Breiman L, Friedman J, Stone CJ, Olshen RA. Classification and regression trees. Belmont: CRC press; 1984.

Breiman L. Random forests. Mach Learn. 2001; 45(1):5–32.

Fernández T, Rivera N, Teh YW. Gaussian processes for survival analysis. In: Advances in Neural Information Processing Systems.New York: Curran Associates: 2016. p. 5015–023.

Taylor JM. Random survival forests. J Thorac Oncol. 2011; 6(12):1974–5.

Bou-Hamad I, Larocque D, Ben-Ameur H, et al. A review of survival trees. Stat Surv. 2011; 5:44–71.

Ziegler A, König IR. Mining data with random forests: current options for real-world applications. Wiley Interdiscip Rev Data Min Knowl Disc. 2014; 4(1):55–63.

Strobl C, Boulesteix AL, Zeileis A, Hothorn T. Bias in random forest variable importance measures: Illustrations, sources and a solution. BMC Bioinforma. 2007; 8(1):1.

Loh WY. Fifty years of classification and regression trees. Int Stat Rev. 2014; 82(3):329–48.

Wright MN, Dankowski T, Ziegler A. Unbiased split variable selection for random survival forests using maximally selected rank statistics. Stat Med. 2017; 36(8):1272–84. doi:10.1002/sim.7212.sim.7212.

Das A, Abdel-Aty M, Pande A. Using conditional inference forests to identify the factors affecting crash severity on arterial corridors. J Saf Res. 2009; 40(4):317–27.

Mogensen UB, Ishwaran H, Gerds TA. Evaluating random forests for survival analysis using prediction error curves. J Stat Softw. 2012; 50(11):1.

Ishwaran H, Kogalur UB, Blackstone EH, Lauer MS. Random survival forests. Ann Appl Stat. 2008;841–60.

Gordon L, Olshen RA. Tree-structured survival analysis. Cancer Treat Rep. 1985; 69:1065–1069.

Ciampi A, Chang CH, Hogg S, McKinney S. Recursive partition: A versatile method for exploratory-data analysis in biostatistics. In: Biostatistics.New York: Springer: 1987. p. 23–50.

Hothorn T, Lausen B. On the exact distribution of maximally selected rank statistics. Comput Stat Data Anal. 2003; 43(2):121–37.

Lausen B, Schumacher M. Maximally selected rank statistics. Biometrics. 1992;73–85.

Segal MR. Regression trees for censored data. Biometrics. 1988;35–47.

Ishwaran H, Kogalur UB. randomForestSRC: Random Forests for Survival, Regression and Classification (RF-SRC). R package version 1.4.0. 2014. https://cran.r-project.org/.

Dietterich T. Ensemble Learning. Tha Handbook of Brain Theory and Neural Networks. Cambridge MA: The MIT Press; 2002.

Hothorn T, Hornik K, Zeileis A. Unbiased recursive partitioning: A conditional inference framework. J Comput Graph Stat. 2006; 15:651–74.

Strasser H, Weber C. On the asymptotic theory of permutation statistics. Math Methods Stat. 1999; 8:220–50.

Harrington D. Linear rank tests in survival analysis In: Armitage P, Colton T, editors. Encyclopedia of biostatistics(2nd edn). New York: Wiley Online Library: 2005. p. 2802–2812.

Hothorn T, Hornik K, Strobl C, Zeileis A. Party: a laboratory for recursive partitioning. R package version 1.0-23. 2015. https://cran.r-project.org/web/packages/party/index.html.

Graf E, Schmoor C, Sauerbrei W, Schumacher M. Assessment and comparison of prognostic classification schemes for survival data. Stat Med. 1999; 18(17-18):2529–45.

Chen G, Kim S, Taylor JM, Wang Z, Lee O, Ramnath N, Reddy RM, Lin J, Chang AC, Orringer MB, et al.Development and validation of a quantitative real-time polymerase chain reaction classifier for lung cancer prognosis. J Thorac Oncol. 2011; 6(9):1481–7.

Wan F. Simulating survival data with predefined censoring rates for proportional hazards models. Stat Med. 2017; 36.5:838.

Bender R, Augustin T, Blettner M. Generating survival times to simulate cox proportional hazards models. Stat Med. 2005; 24(11):1713–23.

Crowther MJ, Lambert PC. Simulating biologically plausible complex survival data. Stat Med. 2013; 32(23):4118–34.

Kohavi R, et al. A study of cross-validation and bootstrap for accuracy estimation and model selection. In: Ijcai. vol. 14, no. 2. Morgan Kaufmann: Los Altos: 1995. p. 1137–1145.

Refaeilzadeh P, Tang L, Liu H. Cross-Validation In: Liu L, Özsu MT, editors. Boston: Springer: 2009. p. 532–8.

Bengio Y, Grandvalet Y. No unbiased estimator of the variance of k-fold cross-validation. J Mach Learn Res. 2004; 5(Sep):1089–05.

Hothorn T, Hornik K, Strobl C, Zeileis A, Hothorn MT. Package ‘party’. Packag Ref Man Party Version 0.9-998. 2015; 16:37.

Harrell Jr FE, Harrell Jr MFE, Hmisc D. Package ‘rms’; 2017. https://cran.r-project.org/web/packages/rms/index.html.

Strobl C, Boulesteix A, Kneib T, Augustin T, Zeileis A. Conditional variable importance for random forests. BMC Bioinforma. 2008; 9:307.

Demographic and Healthy Survey Datasets. http://dhsprogram.com/data/available-datasets.cfm. Accessed 25 Oct 2016.

Ssewanyana S, Younger SD. Infant mortality in uganda: Determinants, trends and the millennium development goals. J Afr Econ. 2008; 17(1):34–61.

Ayiko R, Antai D, Kulane A. Trends and determinants of under-five mortality in uganda. East Afr J Public Health. 2009; 6(2):136–40.

Demombynes G, Trommlerová SK. What has driven the decline of infant mortality in kenya?Washington: Policy research working paper No. WPS 60572010: World Bank; 2012.

Pietersen E, Ignatius E, Streicher EM, Mastrapa B, Padanilam X, Pooran A, Badri M, Lesosky M, van Helden P, Sirgel FA, et al. Long-term outcomes of patients with extensively drug-resistant tuberculosis in south africa: a cohort study. Lancet. 2014; 383(9924):1230–9.

Kim DH, Kim HJ, Park SK, Kong SJ, Kim YS, Kim TH, Kim EK, Lee KM, Lee SS, Park JS, et al. Treatment outcomes and long-term survival in patients with extensively drug-resistant tuberculosis. Am J Respir Crit Care Med. 2008; 178(10):1075–82.

Nasejje JB, Mwambi HG, Achia TN. Understanding the determinants of under-five child mortality in uganda including the estimation of unobserved household and community effects using both frequentist and bayesian survival analysis approaches. BMC Public Health. 2015; 15(1):1.

Moradian H, Larocque D, Bellavance F. L_1 splitting rules in survival forests. Lifetime Data Anal. 2016:1–21.

Acknowledgements

The first author acknowledges financial support from DAAD and the University of Kwazulu-Natal for her PhD studies. We thank Prof Keertan Dheda, Director of the Lung Infection and Immunity Unit and Head of the Division of Pulmonology, Department of Medicine, at the University of Cape Town for permission to utilised the TB XDR data in this analysis, and all of the contributors and participants to the study. We also acknowledge the DHS Program for making data from Uganda available, and the contributions of all the women who participated in the survey together with the team that conducted the survey. And lastly, the first author acknowledges Mr. Andile Gumede for his technical support during the production of this research article.

Funding

We thank the University of Kwazulu-Natal for supporting NJB in her PHD work. The first author is also financially supported by DAAD.

Availability of data and materials

The authors confirm that all data underlying the findings are fully available without restriction. Dataset 1 is held by the Demographic and Health Survey program and freely available to the public but a request has to be sent to the Demographic and Health Survey program. The link to access it is http://www.dhsprogram.com/data/dataset_admin/download-datasets.cfm.

Dataset 2 maybe requested from Prof Keertan Dheda, Director of the Lung Infection and Immunity Unit and Head of the Division of Pulmonology, Department of Medicine, at the University of Cape Town.

Author information

Authors and Affiliations

Contributions

Conceived by NJB, MH, ML and KD. Analyzed the data: NJB. Wrote the first draft of the manuscript: NJB. Contributed to the writing of the manuscript: NJB, MH, ML and KD. Agree with the manuscript’s results and conclusions: NJB, MH, ML and KD. All authors have read and reviewed the manuscript.

Corresponding author

Ethics declarations

Ethics approval and consent to participate

The ethical statement for Dataset 1 is available on the DHS ethical clearance certificate and it states that: The IRB-approved procedures for DHS public-use datasets do not in any way allow respondents, households, or sample communities to be identified. There are no names of individuals or household addresses in the data files. The geographic identifiers only go down to the regional level (where regions are typically very large geographical areas encompassing several states/provinces). Each enumeration area (Primary Sampling Unit) has a PSU number in the data file, but the PSU numbers do not have any labels to indicate their names or locations. In surveys that collect GIS coordinates in the field, the coordinates are only for the enumeration area (EA) as a whole, and not for individual households, and the measured coordinates are randomly displaced within a large geographic area so that specific enumeration areas cannot be identified. No ethical clearance was required from the University of Kwazulu-Natal.

The ethical statement for Dataset 2, however, is available on request from Prof Keertan Dheda, the Director of the Lung Infection and Immunity Unit and Head of the Division of Pulmonology, Department of Medicine, at the University of Cape Town.

Consent for publication

Dataset 1 was obtained from the Demographic and Health Survey data project. The Demographic Health Survey Data is collected according to the rules and guidelines stipulated by WHO World Health Survey on consent from the participants stated below:

Participation in the survey is voluntary and the respondent can refuse to be interviewed. The interviewer is responsible for explaining what the survey is about, providing all the necessary information, and making sure the respondent understands the implications of his/her participation before giving his/her consent. The information given should be simple and clear and adapted to the respondent’s level of understanding. Consents must be documented by asking the respondents to sign an Informed Consent Forms (Household Informant Consent Form; Individual Consent Form) before doing the interview. These forms must mention who will be doing the study, the types of questions that will be asked, why the study is being done, and who will have access to the information provided. The interviewer must check that the respondent has read and understood the form before signing, and should offer to go over it with him /her emphasizing the different items mentioned. If the respondent is illiterate or unable to read for himself/herself (e.g. due to a visual impairment), the form will be read and explained to him/her. In cases where it is not appropriate for the respondent to sign the form, the interviewer alone will sign the form. In cases where the respondent is being dissuaded from, or coerced into, participating in the study by a third party such as a spouse, relative or any other member in the community, the interviewer should make it clear that it is the respondent alone who must decide whether or not s/he wishes to be interviewed.

Dataset 2 was obtained from a study conducted by a group lead by Prof Keertan Dheda, the Director of the Lung Infection and Immunity Unit and Head of the Division of Pulmonology, Department of Medicine, at the University of Cape Town. He gave permission to utilise the dataset in this analysis, and all of the contributors and participants to the study consented to the study.

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License(http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver(http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Nasejje, J.B., Mwambi, H., Dheda, K. et al. A comparison of the conditional inference survival forest model to random survival forests based on a simulation study as well as on two applications with time-to-event data. BMC Med Res Methodol 17, 115 (2017). https://doi.org/10.1186/s12874-017-0383-8

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s12874-017-0383-8