Abstract

Background

The use of big data and machine learning within clinical decision support systems (CDSSs) has the potential to transform medicine through better prognosis, diagnosis and automation of tasks. Real-time application of machine learning algorithms, however, is dependent on data being present and entered prior to, or at the point of, CDSS deployment. Our aim was to determine the feasibility of automating CDSSs within electronic health records (EHRs) by investigating the timing, data categorization, and completeness of documentation of the individual components of two common clinical decision rules (CDRs) in the emergency department.

Methods

The CURB-65 severity score and HEART score were randomly selected from a list of the top emergency medicine CDRs. Emergency department (ED) visits with ICD-9 codes applicable to our CDRs were eligible. The charts were reviewed to determine the categorization of the CDR components as structured and/or unstructured, the median times of documentation, the proportion of charts with all data components documented as structured data, and the proportion of charts with all structured CDR components documented before ED departure. A kappa score was calculated for interrater reliability.

Results

The components of both the CURB-65 severity score and the HEART score were mainly documented as structured data. All components were documented as structured data in 26.8% of charts in the CURB-65 group and in 67.8% of charts in the HEART score group. Documentation of some CDR components often occurred late for both CDRs. Only 21% and 11% of patients had all CDR components documented as structured data prior to ED departure for the CURB-65 and HEART score groups, respectively. The kappa for interrater reliability was 0.75 for the CURB-65 score review and 0.65 for the HEART score review.

Conclusion

Our study found that EHRs may be unable to automatically calculate popular CDRs, such as the CURB-65 severity score and HEART score, due to missing components and late data entry.

Background

The use of big data and machine learning within clinical decision support systems (CDSSs) has the potential to transform medicine through better prognosis, diagnosis and automation of tasks [1, 2]. Real-time application of machine learning algorithms, however, is dependent on data being present and entered prior to, or at the point of, CDSS deployment [3]. While simple CDSSs may request the user to enter in data necessary to run the algorithm, this process becomes infeasible when tens or even hundreds of data elements are needed, thus requiring some form of automated capture and real-time integration of data from the electronic health record (EHR) [4].

The emergency department (ED), with a highly condensed time frame for decision making, represents a unique and challenging environment for CDSS deployment [5]. In the ED, patient medical problems are often acute with limited supporting information already present in the EHR, most of the information necessary for decision making is generated within a few hours, and recording of parts of this information in the form of provider notes may be delayed until well after the patient has left the department [6]. Currently, there is limited knowledge on when components necessary for calculation of CDSS in the ED are available in the EHR, what data type (structured vs unstructured) they exist in, and data completeness for specific algorithms [7].

Our study, therefore, aimed to examine the EHR data entry process for two common, simple clinical decision rules (CDRs) as a first step in assessing the potential feasibility of constructing more complex automated, real-time clinical decision support systems in the ED. Specifically, we examined whether the CDRs were calculable using EHR data, how the individual CDR components are recorded (structured versus unstructured data), and the timeliness of data entry.

Methods

Study design

This was a retrospective study of ED visits between 1/1/2015 and 1/1/2016. The study was approved by the institutional review board.

Study site

The study involved five different emergency department sites with combined annual visits > 250,000. All of the study sites are part of a larger hospital healthcare system with a single EHR vendor. The hospital system serves both an urban and suburban patient population. The sites are staffed with certified nursing assistants, midlevel providers, residents, and attending physicians.

Identification of clinical decision rules

The research team contacted the creators of the website www.MDcalc.com to acquire a list of the calculators used in its Emergency Medicine section. The list included every calculator and the percentage that each calculator contributed to the total searches for all calculators in the 3 months prior to July 16, 2015. We ordered the calculators from highest to lowest percentage of searches. We defined a clinical decision rule as a “clinical tool that quantifies the individual contributions that various components of the history, physical examination, and basic laboratory results make toward the diagnosis, prognosis, or likely response to treatment in a patient” [8]. A calculator from the list was eligible for selection if it met this definition. It was excluded if it was not created for the purpose of being a CDR, was targeted to a population less than 18 years of age, had no diagnostic significance, had no prognostic significance for a condition or treatment, or could not be calculated in the emergency department. Using these criteria, the research team identified the top 10 clinical decision rules and randomly selected two from the list to investigate: the CURB-65 score and the HEART score.

Patient chart selection and review

The calculated sample size for each CDR, assuming 70% structured data and a 95% confidence interval with a 7.5% margin of error, was 140 charts. We selected 145 charts to review for each of the CURB-65 score and HEART score. To identify charts where the CDRs would be used, the team performed a keyword search to compile a list of ICD-9 codes that corresponded with the diagnoses targeted by each CDR (Additional file 1: Table S1). Using this list, the team performed a database query for all emergency department diagnoses within the study period that matched the ICD-9 codes identified for each CDR. Prior to full review, the team randomly selected and reviewed 10 separate charts from each CDR list to ensure that the chosen ICD-9 codes appropriately selected patients with medical presentations applicable to their corresponding CDR.
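The text does not state which formula produced the sample size. As a minimal sketch, assuming the common normal-approximation formula for estimating a single proportion, n = z²·p(1 − p)/e², the same inputs can be worked through as follows. The function name is hypothetical. Under this assumption the result is 144 charts, close to but not identical with the reported 140, so the authors may have used a different method or rounding.

```python
import math
from statistics import NormalDist


def sample_size_for_proportion(p: float, margin: float, confidence: float = 0.95) -> int:
    """Charts needed to estimate a proportion p within +/- margin.

    Assumption: normal-approximation formula, no finite-population correction.
    """
    # Two-sided critical value, about 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return math.ceil(z ** 2 * p * (1 - p) / margin ** 2)


# Inputs taken from the text: 70% structured data, 7.5% margin of error.
n = sample_size_for_proportion(p=0.70, margin=0.075)
print(n)
```

The 145 charts reviewed per CDR exceed the figure from either calculation.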

Data collection

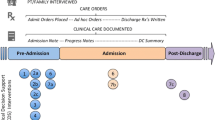

Patient charts were stored in a database (FileMaker, Inc., FileMaker International) as multiple iterations with timestamps that documented changes made to the patient chart (i.e., every time a chart was modified, a new timestamped instance was generated). The team excluded a chart if it had more iterations than the query text field supported and was therefore truncated. In the analysis, the charts were reviewed to categorize the individual CDR components as structured or unstructured data. The team defined structured data as any data with designated fields containing discrete data elements within the electronic health record. All other data were considered unstructured. For each documented element, the timestamp of when it first appeared in the EHR was recorded as well. The team documented the proportion of charts with all structured data components required to calculate each corresponding CDR. Furthermore, the total time from triage to disposition and the proportion of charts with all data components entered as structured data prior to the patient’s departure from the emergency department were obtained. All chart reviewers had at least 5 months of experience using EPIC and went through training to ensure that all members understood which data are structured and which are unstructured. The team agreed on a guide of instructions for both the CURB-65 score and HEART score CDRs to standardize the review (Additional file 2: Appendix B).
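The two chart-level measures described above (all components present as structured data, and all present as structured data before ED departure) can be sketched as follows. This is an illustration only: the `Entry` record, the component names, and the example timestamps are hypothetical and do not reflect the study database schema.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional, Sequence

# Hypothetical component names for the CURB-65 rule.
CURB65_COMPONENTS = ("confusion", "urea", "respiratory_rate", "blood_pressure", "age")


@dataclass(frozen=True)
class Entry:
    component: str        # which CDR component this entry documents
    structured: bool      # True if stored in a discrete EHR field
    first_seen: datetime  # timestamp of first appearance in the chart


def earliest_structured(entries: Sequence[Entry], component: str) -> Optional[datetime]:
    """Earliest structured documentation time for a component, or None."""
    times = [e.first_seen for e in entries if e.structured and e.component == component]
    return min(times) if times else None


def all_structured(entries: Sequence[Entry], required: Sequence[str]) -> bool:
    """True if every required component appears as structured data at any time."""
    return all(earliest_structured(entries, c) is not None for c in required)


def all_structured_before(entries: Sequence[Entry], required: Sequence[str],
                          departure: datetime) -> bool:
    """True if every required component was structured by ED departure."""
    for c in required:
        first = earliest_structured(entries, c)
        if first is None or first > departure:
            return False
    return True


# Made-up chart: confusion appears in a note at 12:00 but in a discrete
# field only at 15:10, after the 14:00 departure.
departure = datetime(2015, 3, 1, 14, 0)
chart = [
    Entry("age", True, datetime(2015, 3, 1, 10, 2)),
    Entry("respiratory_rate", True, datetime(2015, 3, 1, 10, 5)),
    Entry("blood_pressure", True, datetime(2015, 3, 1, 10, 5)),
    Entry("urea", True, datetime(2015, 3, 1, 11, 30)),
    Entry("confusion", False, datetime(2015, 3, 1, 12, 0)),
    Entry("confusion", True, datetime(2015, 3, 1, 15, 10)),
]
print(all_structured(chart, CURB65_COMPONENTS))                    # True
print(all_structured_before(chart, CURB65_COMPONENTS, departure))  # False
```

In this made-up chart the rule is calculable from structured data overall, but not in time to support a decision made during the visit.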

Data analysis

Results were analyzed using standard descriptive statistics. Box plots were generated for time to data entry of the various components and operational metrics. A kappa score was calculated to assess the interrater reliability among reviewers. All analysis was performed in R (R Core Team (2017). R: A language and environment for statistical computing).
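The text does not specify which kappa statistic was used. As a minimal sketch, assuming Cohen's kappa for two reviewers who each label the same items as structured or unstructured, the calculation looks like this. The function name and the example labels are hypothetical, and the study analysis itself was performed in R.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: fraction of items with identical labels.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement from each rater's marginal label frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    labels = counts_a.keys() | counts_b.keys()
    expected = sum(counts_a[k] * counts_b[k] for k in labels) / n ** 2
    if expected == 1:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Made-up labels: "s" = structured, "u" = unstructured.
reviewer_1 = ["s", "s", "s", "u", "u", "u", "s", "u"]
reviewer_2 = ["s", "s", "u", "u", "u", "u", "s", "s"]
kappa = cohens_kappa(reviewer_1, reviewer_2)
print(kappa)  # 0.5: observed agreement 0.75, chance agreement 0.5
```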

Results

A total of 83 calculators were received from the creators of www.MDcalc.com. Seventeen calculators were excluded to reach our final list of ten emergency medicine clinical decision rules. For a full list of these calculators and the reason each one was excluded, please refer to Additional file 3: Appendix C. One hundred and forty-five charts each were reviewed for the CURB-65 rule and the HEART score. In the HEART score group, 2 charts had either missing or errant data from the review process and were excluded from the data analysis. The demographic information for the patients is shown in Table 1. The kappa statistic was 0.75 and 0.65 for the CURB-65 and HEART score, respectively. Overall, the HEART score could be calculated 67.8% of the time and the CURB-65 rule 26.8% of the time from the provided structured data.

The percentages of structured and unstructured data elements documented for the HEART and CURB-65 scores are presented in Table 2. Median times and interquartile ranges for the unstructured and structured data components of each rule are presented in Table 3 and Figs. 1 and 2. The median time from triage to disposition was 280 (IQR 195–386) and 248 (IQR 170–381) minutes for the CURB-65 score and HEART score, respectively. Approximately 21% of the CURB-65 score patients and 11% of the HEART score patients had all their CDR components entered prior to their departure from the emergency department.
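The median and interquartile range summaries reported here can be derived from documentation timestamps along the following lines. This is a sketch with made-up times and hypothetical helper names; quartile conventions differ between tools, and the "inclusive" method of the Python standard library is used here as an assumption, not as the authors' choice.

```python
from datetime import datetime
from statistics import quantiles
from typing import Sequence, Tuple


def minutes_from_arrival(arrival: datetime, documented: datetime) -> float:
    """Minutes elapsed between ED arrival and first documentation."""
    return (documented - arrival).total_seconds() / 60


def median_iqr(values: Sequence[float]) -> Tuple[float, float, float]:
    """Return (median, first quartile, third quartile)."""
    q1, med, q3 = quantiles(values, n=4, method="inclusive")
    return med, q1, q3


# Made-up example: one component documented in five different visits,
# with every visit normalised to an 08:00 arrival for simplicity.
arrival = datetime(2015, 6, 1, 8, 0)
documented = [datetime(2015, 6, 1, 8, m) for m in (10, 20, 30, 40, 50)]
delays = [minutes_from_arrival(arrival, t) for t in documented]
summary = median_iqr(delays)
print(summary)  # (30.0, 20.0, 40.0)
```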

Time from Arrival to Presence Within EHRa of HEARTb Score Data. The above figure is a box and whiskers plot representation of Table 3 data from the HEART score. a electronic health record. b History, Electrocardiogram, Age, Risk Factors, Troponin. c emergency department. d electrocardiogram

Time from Arrival to Presence Within EHRa of CURB-65b Score Data. The above figure is a box and whiskers plot representation of Table 3 data from the CURB-65 score. a electronic health record. b Confusion, Urea, Respiratory Rate, Blood Pressure, Age ≥ 65. c emergency department. d blood urea nitrogen level

Discussion

Automated real-time CDSSs integrating machine learning algorithms trained on big data have the potential to transform healthcare. However, the data these algorithms need must be present at the time of use, and little is known about how data availability affects the feasibility of these systems in general, and within the emergency department in particular. This study examines the data entry process for two popular clinical decision rules, the CURB-65 score and the HEART score, as a first step in assessing the feasibility of more complex CDSSs. Our findings demonstrate that, even for these simple CDRs, the CURB-65 rule and HEART score could be calculated prior to ED departure in only 21% and 11% of visits, respectively. For structured data only, regardless of timing, the HEART score could be calculated in 67.8% of visits and the CURB-65 rule in 26.8% of visits. Data components that depended on historical information (confusion, risk factors, etc.) or on provider entry of data were frequently entered after ED departure.

Prior studies have noted the need for EHR integration of CDSSs; however, studies examining the ability of CDRs to be automated are limited [7, 9, 10, 11]. Aakre et al. [10] investigated the programmability of clinical decision rules in an EHR based on the ability of their components to be extracted from structured data sources or retrieved using advanced technology such as natural language processing (NLP) and Boolean logic text search. Of the 168 CDRs investigated in that study, only 26 (15.5%) were programmable exclusively using structured objective data elements. If additional advanced technology, such as NLP and Boolean logic text search, were utilized to extract both the structured and unstructured subjective components, this theoretical number increased to 43 (25.6%). That study did not examine the data completeness or timeliness of these CDRs within an existing EHR. Sheehan et al. [7] examined, through qualitative analysis, the Pediatric Emergency Care Applied Research Network (PECARN) clinical prediction rules for children with minor blunt head trauma. They found a need for seamless integration and for flowsheets to facilitate data entry, along with other workflow, organizational, and human factors impeding automation. Our study adds to this knowledge about the difficulty of implementing CDSSs and the planning they require.

Our findings have important implications for CDSS implementation within the ED. If CDSSs are to be effectively utilized, the data must be available at the point in time at which the CDSS provides value in the decision-making process. The fact that the CDRs in our study often could not be calculated from structured data indicates that, for CDSSs to be effective, structured fields designed to capture the components of the CDSSs must be built within the EHR and/or natural language processing should be utilized. In addition, CDSSs must take into consideration the timing of data entry. For the two CDRs in this study, most of the delay in calculability resulted from data components that were collected through the history and physical or that depended on provider entry of data (e.g. EKG interpretation). This is not surprising, as ED providers often fall behind in documentation during a shift or wait until after their shifts to complete documentation [6]. This lack of timeliness implies that the performance of CDSSs within the ED will be hampered by data entry, and future efforts at CDSSs should consider more effective methods of timely data entry, such as speech recognition with natural language processing pipelines.
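To make the dependence on complete structured data concrete, the following sketch computes a CURB-65 score only when every component is available, and otherwise reports that the score is not calculable. The thresholds are the commonly published CURB-65 criteria (confusion, blood urea nitrogen above 19 mg/dL, respiratory rate of 30 or more, systolic pressure below 90 or diastolic pressure of 60 or less, age 65 or older). The field names and the choice to return `None` for incomplete data are assumptions made for illustration, not a description of any EHR or of the authors' method.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Curb65Inputs:
    """Hypothetical structured fields; None means not yet documented."""
    confusion: Optional[bool] = None
    bun_mg_dl: Optional[float] = None
    respiratory_rate: Optional[int] = None
    systolic_bp: Optional[int] = None
    diastolic_bp: Optional[int] = None
    age_years: Optional[int] = None


def curb65(x: Curb65Inputs) -> Optional[int]:
    """Return the CURB-65 score (0-5), or None if any component is missing."""
    fields = (x.confusion, x.bun_mg_dl, x.respiratory_rate,
              x.systolic_bp, x.diastolic_bp, x.age_years)
    if any(value is None for value in fields):
        return None  # not calculable from the data available so far
    return sum([
        bool(x.confusion),                           # C: new confusion
        x.bun_mg_dl > 19,                            # U: urea (BUN > 19 mg/dL)
        x.respiratory_rate >= 30,                    # R: respiratory rate
        x.systolic_bp < 90 or x.diastolic_bp <= 60,  # B: blood pressure
        x.age_years >= 65,                           # 65: age
    ])


complete = Curb65Inputs(confusion=False, bun_mg_dl=25.0, respiratory_rate=32,
                        systolic_bp=110, diastolic_bp=58, age_years=70)
pending = Curb65Inputs(bun_mg_dl=25.0, respiratory_rate=32,
                       systolic_bp=110, diastolic_bp=58, age_years=70)
print(curb65(complete))  # 4
print(curb65(pending))   # None: confusion has not been documented
```

A single undocumented component, here the confusion assessment, is enough to make the score unavailable, which mirrors the pattern of late provider-entered elements described above.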

Limitations

Our study has several limitations. We chose only two clinical decision rules to examine. While it is possible that other CDRs would have better data entry, we believe that the themes derived from our results (late entry of historical/physical findings, and overall poor ability to calculate in a timely fashion) would be the same. It is possible that emergency physicians at our site could have used other CDRs relevant to our patient population, but the research team did not observe other CDRs documented as unstructured data in the patient chart. Some of the clinicians may not believe in the value of our selected CDRs; however, we chose our CDRs randomly from a reliable source of the top searched emergency medicine calculators. The guidelines for presence of the data components we used in our study were not strict, but this method optimized the number of components that satisfied our criteria and provided a best-case scenario. Even with these relaxed parameters, the findings show that not all components are documented for the CURB-65 and HEART scores. Lastly, our study had a small sample size of patients, and thus we were unable to determine the effects of different ED environments on documentation (e.g., effect of provider type, presence or absence of dictation software).

Conclusion

Our study assessing the potential feasibility of constructing more complex automated, real-time CDSSs in the ED through examination of the EHR data entry process for two common, simple CDRs demonstrates that the CDRs cannot be calculated reliably during the emergency department visit because of problems with data completeness and timeliness of entry.

Abbreviations

- CDR: Clinical decision rule

- CDSSs: Clinical decision support systems

- ED: Emergency department

- EHR: Electronic health record

References

1. Obermeyer Z, Emanuel EJ. Predicting the future - big data, machine learning, and clinical medicine. N Engl J Med. 2016;375:1216–9.

2. Murdoch TB, Detsky AS. The inevitable application of big data to health care. JAMA. 2013;309:1351–2.

3. Bates DW, Kuperman GJ, Wang S, et al. Ten commandments for effective clinical decision support: making the practice of evidence-based medicine a reality. J Am Med Inform Assoc. 2003;10:523–30.

4. Amarasingham R, Patzer RE, Huesch M, Nguyen NQ, Xie B. Implementing electronic health care predictive analytics: considerations and challenges. Health Aff (Millwood). 2014;33:1148–54.

5. Bennett P, Hardiker NR. The use of computerized clinical decision support systems in emergency care: a substantive review of the literature. J Am Med Inform Assoc. 2017;24:655–68.

6. Perry JJ, Sutherland J, Symington C, Dorland K, Mansour M, Stiell IG. Assessment of the impact on time to complete medical record using an electronic medical record versus a paper record on emergency department patients: a study. Emerg Med J. 2014;31:980–5.

7. Sheehan B, Nigrovic LE, Dayan PS, et al. Informing the design of clinical decision support services for evaluation of children with minor blunt head trauma in the emergency department: a sociotechnical analysis. J Biomed Inform. 2013;46:905–13.

8. McGinn TG, Guyatt GH, Wyer PC, et al. Users' guides to the medical literature: XXII: how to use articles about clinical decision rules. JAMA. 2000;284:79–84.

9. Ebell M. AHRQ White Paper: Use of clinical decision rules for point-of-care decision support. Med Decis Mak. 2010;30:712–21.

10. Aakre C, Dziadzko M, Keegan MT, Herasevich V. Automating clinical score calculation within the electronic health record: a feasibility assessment. Appl Clin Inform. 2017;8:369–80.

11. Green SM. When do clinical decision rules improve patient care? Reply. Ann Emerg Med. 2014;63:373.

Funding

There was no funding for the study.

Availability of data and materials

The data used for analysis contain protected health information (PHI) and therefore cannot be provided for publication.

Author information

Contributions

WP and RAT designed the study. WP, RH and RAT performed chart review. RAT analyzed the results. WP drafted the manuscript. All authors contributed to revisions. All authors have read and approved the manuscript.

Ethics declarations

Ethics approval and consent to participate

The study was approved by the Yale Human Research Protection Program and informed consent was waived.

Consent for publication

Not applicable.

Competing interests

There are no competing interests to disclose.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Additional files

Additional file 1:

Appendix A. Applicable ICD-9 Codes for Clinical Decision Rules. ICD Codes used for identifying cases for chart review. (DOCX 23 kb)

Additional file 2:

Appendix B. Guideline to the Standard Evaluation of Charts. Outline and set of instructions of how each data element was encoded by the reviewers. (DOCX 15 kb)

Additional file 3:

Appendix C. Inclusion and Exclusion of Emergency Medicine Calculators. List of Calculators, Clinical Decision Rules from MDCalc. (DOCX 16 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Perry, W.M., Hossain, R. & Taylor, R.A. Assessment of the Feasibility of automated, real-time clinical decision support in the emergency department using electronic health record data. BMC Emerg Med 18, 19 (2018). https://doi.org/10.1186/s12873-018-0170-9

DOI: https://doi.org/10.1186/s12873-018-0170-9