Abstract

The seismic design of most civil structures is usually accomplished using the response spectrum approach or simplified equivalent lateral force methods. However, some special tasks require the use of dynamic time history analyses. In the nuclear industry, for example, dynamic analyses are required in the design verification and seismic assessment of critical buildings and in the development of floor response spectra and free-field ground response spectra. The input motion for these analyses requires acceleration time series whose response spectrum matches a target design spectrum. This article revises the continuous wavelet transform (CWT) approach to generate spectrum-compatible records from the modification of acceleration time histories recorded in actual seismic events. The computational efficiency of the algorithm is increased greatly by performing the wavelet decomposition and details reconstruction via fast convolution using fast Fourier transforms. The new algorithm is evaluated using a typical design spectrum from the nuclear industry and different seed records.

Similar content being viewed by others

Introduction

Independent of the approach selected, either displacement or force-based, most of the seismic designs of civil structures are performed using variations of the response spectrum method, where the ground motion is represented as a design response spectrum that is representative of the seismic hazard on the site. The (pseudo-accelerations or relative displacements) design response spectrum is used to estimate the force or deformation demand imposed on the structure. The main goal of this technique is to translate the ground motion into forces acting on the building. Nevertheless, for some special tasks or structures, dynamic time history analyses are required. In the standard American Society of Civil Engineers (ASCE) 7–10, for example, if the structure meets the characteristics necessary to be considered as irregular, dynamic analyses (either modal response spectrum or time history) are required to verify the design of the structure. In the nuclear industry, dynamic time history analyses are required in the design, verification, and seismic assessment of critical buildings. In addition, they are needed for the development of floor (or in-structure) response spectra and free-field ground response spectra. When dynamic time history analyses are to be performed, the seismic input needs to be defined as a time history of accelerations. To comply with code requirements, the acceleration series used should be compatible with the design spectrum, i.e., the response spectrum of the acceleration time history should match with the design spectrum in average.

To select these input records, one can look at historic records with their amplitudes scaled by a factor to optimize the fitting over the design spectrum (e.g., Bommer and Acevedo 2004). Alternatively, there are different methodologies available to generate ‘synthetic’ spectrum-compatible records. When using the first option, i.e., amplitude scaling, a larger number of records are required to obtain a reliable average of the system response due to the natural scatter of the records. On the other hand, if spectrum-compatible records are used, the number of analyses required to obtain a reliable estimate of the response is substantially reduced (e.g., Watson-Lamprey and Abrahamson 2006; Heo et al. 2011; Hancock et al. 2008).

There are several methodologies that have been proposed for the generation of spectrum-compatible records, like the adjustment of the power spectrum of a random process or the manipulation in the time or frequency domain of historic records. The criteria for the generation and evaluation of such records specified in standard seismic provisions (e.g., ASCE 7; American Society of Civil Engineers 2010) are rather slight when compared to the requirements in the nuclear regulatory guide RG 1.208 (US-NRC 2007). For example, RG 1.208 explicitly prohibits the use of synthetic motions that are not based on seed-recorded time histories so that only modified recorded ground motions can be used for site response analysis.

The available methodologies for obtaining spectrum-compatible accelerograms based on the modification of historic records can be classified into three groups: (1) those based on matching in the frequency domain by manipulation of the Fourier spectrum (e.g., Tsai 1972), (2) those grounded on wavelet adjustments to the recorded accelerograms at specific times (e.g., Lilhanand and Tseng 1988; Abrahamson 1992; Hancock et al. 2006; Al Atik and Abrahamson 2010), and (3) those centered on the manipulation of the wavelet coefficients obtained via continuous wavelet transform (e.g., Mukherjee and Gupta 2002; Suarez and Montejo 20032005). Although an acceptable level of matching can be obtained by means of any of the available methodologies, the use of spectrum-compatible records is a matter of discussion in the earthquake engineering community, as the characteristics of the final compatible record may largely differ from those observed in real records e.g., (Naeim and Lew 1995; Bommer and Ruggeri 2002; Bommer and Acevedo 2004). Although these arguments may be valid, the discussion of this issue is beyond the scope of the paper. This article revises the continuous wavelet transform approach to generate spectrum-compatible records from the modification of actual registered seismic events and propose an alternative computation algorithm to reduce its computational cost.

Methods

CWT-based methodology for the generation of spectrum-compatible records

For completeness, this section briefly describes the methodology to manipulate historic earthquake records to obtain spectrum-compatible accelerograms based on the continuous wavelet transform (CWT). A comprehensive presentation of the CWT theory behind the methodology and the properties of the wavelet function used are available elsewhere (Suarez and Montejo 2005).

The CWT of a signal f(t) can be defined as the sum over all times of the signal multiplied by scaled and shifted versions of a wavelet function Ψ as defined by Equation 1

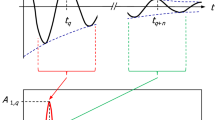

The parameter s and p are used to scale and shift the wavelet, respectively. The asterisk * indicates complex conjugation and the normalizing factor 1/√s ensures that the energy is the same for all values of s. The result of the transform is a matrix of wavelet coefficients C(s,p) that contain information about the signal at the scale s and time position p, that is, the CWT can be viewed as a two-dimensional transform that maps an original time signal f(t) into a time-scale domain. Since the scale s can be related to frequency, the CWT is occasionally used in earthquake engineering to obtain simultaneous time-frequency representations of earthquake records (e.g., Montejo and Kowalsky 2008). Once the wavelet coefficients are calculated, the signal can be reconstructed using Equation 2:

where K ψ is a constant that depends on the wavelet function selected for the analysis and the functions D(s,t) are

They are referred to as the detail functions. They have a dominant frequency that depends on the type of wavelet. In practice, a set of n discrete values of the continuous scale s are used.

The selection of an appropriate wavelet function is crucial for the effective implementation of a given application. For strong motion data synthesis and analysis, the impulse response wavelet has been successfully used in the past. It is define as

where ζ and Ω are the parameters that define the shape and central frequency of the wavelet, respectively. For the applications presented here, values of ζ = 0.05 and Ω = π are used. The mathematical properties and advantages of this wavelet in the analysis of earthquakes records are discussed in Suarez and Montejo (2005) and Hancock et al. (2006).

To obtain spectrum-compatible records one may proceed as follows:

-

1.

Perform the CWT, using Equations 1 and 4, to obtain the wavelet coefficients C(s,p) at selected values of s. The values of s are defined based on the periods or frequencies where a match with the target spectrum is desired. For the impulse response wavelet, the relation between s and the predominant frequency (f) in hertz and period (T) is given by Equation 5:

(5)

In the applications presented in this work, a total of n = 100 frequency values uniformly spaced over the log frequency scale from 0.1 to 50 Hz is used as required in Appendix F of the Nuclear Regulatory Guide RG 1.208 (US-NRC 2007). Depending on the application, the user may decide to modify these values.

-

2.

Once the wavelet coefficients C(s,p) are calculated, the detail functions are constructed using Equation 3.

-

3.

Calculate the ratios between the target spectrum Sa T (f j ) and the response spectrum of the historical record Sa R (f j ) at the frequencies defined in step 1:

(6)

-

4.

Multiply each detail function D(s j ,t) by the corresponding spectral ratio R(f j ) and reconstruct the signal with Equation 2.

-

5.

Calculate and compare the response spectrum of the reconstructed signal Sa R (f j ) with the target spectrum and repeat steps 3, 4, and 5 until a desired level of matching is attained or a maximum number of iterations are reached.

Improved efficiency using fast convolution via FFT

The CWT approach previously described for generating spectrum-compatible records has been largely used in the past, offering stable solutions and good matching with different target spectra (e.g., Velez 2007; Priestley et al. 2007; Bahar and Taherpour 2008; Linzell and Nadakuditi 2011; Montejo et al. 2012). However, a common complaint is the amount of time that takes for the algorithm to run, especially when lengthy records are used as input. To improve the processing time, a new algorithm is proposed in this paper. It computes the wavelet decomposition, Equation 1, and constructs the detail functions, Equation 3, using a fast convolution implementation by means of the fast Fourier transform (FFT).

By looking at Equation 1, it can be said that the CWT for a fixed scaled s is the convolution of the signal f(t) with the wavelet function scaled by s. The matrix of wavelet coefficients C(s,p) is formed by changing the values of s and repeating the convolution operation. The original implementation of the algorithm computed these convolutions in the time domain. However, according to the convolution theorem, convolution in the time domain is equivalent to multiplication in the frequency domain, that is, if we have the two functions f and g in the time domain, its convolution can be calculated in the frequency domain using Equation 7:

where ⊗ denotes convolution, • denotes pointwise product, and F and F−1 denote the forward and inverse Fourier transform, respectively. Since we are dealing with discrete signals, transformations between the time and frequency domains call for the application of the discrete Fourier transforms (DFT). If the DFT is implemented using the FFT algorithm, the convolution via the frequency domain can be significantly faster than directly convolving the two time domain signals. While the computational cost for direct time domain convolution of two N-point sequences is of the order O(N2), the cost for frequency domain convolution using the FFT algorithm is O(N logN). Considering the typical length of the signals used in this application and the number of convolution operations required by the algorithm (one per each value of s), this translates into large savings in the computational cost. In the new implementation, the CWT is executed using Equation 8:

where FFT and IFFT are, respectively, the direct and inverse discrete Fourier Transforms calculated with the FFT algorithm.

Note that in order to be able to compute the pointwise product (•), the lengths of the FFT for the record and for the wavelet must be the same. To obtain a faster and most precise implementation, the lengths of the wavelet and the record are set to the next power of 2 of the expected convolution length (2 * N − 1) (Smith 2002).

Finally, notice from Equation 3 that the detail functions can also be calculated using the FFT convolution between the wavelet coefficients for a fixed s and the wavelet function scaled by the same s value, as shown in Equation 9:

Figure 1 shows the computational savings obtained using the proposed algorithm and assuming that the record is decomposed at 100 scale (frequency) values. It is seen that for a relative short record of 2,000 data points (e.g., 10 s sampled at 200 Hz), the estimated ‘real time’ that will take the new implementation of the algorithm to run is about 42 s, while the old implementation will take approximately 37 min. As the length of the seed record increases, the differences become exponentially larger (notice the logarithmic scale used in the y-axis). The estimated real times in Figure 1 were computed based on a laptop PC i7 at 2.90 GHz, 8 GB RAM, and 64-bit OS.

Results and discussion

The new implementation of the algorithm is evaluated using a design spectrum typical of the US nuclear industry. The target spectrum is extracted from Section 5 of RG 1.208 (US-NRC 2007) and represents a typical uniform hazard response spectrum (UHRS) at the outcrop rock (Figure 2). In practice, synthetic records compatible with this spectrum need to be generated to perform site analyses and develop a site-specific design spectrum.

Three different seed records to be used as input for the algorithm were selected from the PEER NGA database (PEER 2013) based on the similarity of the response spectrum shape of the records with the shape of the target spectrum. Table 1 summarizes the main characteristics for each of the records selected. Since the selection is based on spectral shape similarities, the amplitude of the records is scaled to provide a best fit. The scaled response spectra for the three records are displayed and compared with the target spectrum in Figure 2 (top).

Figure 2 (bottom) shows the response spectra for 5% damping of the resulting compatible records along with the target spectrum. The 90% and 130% design spectra are also displayed (dashed lines) as these are the spectral amplitude limits specified in RG 1.208. To prevent the spectra in large frequency windows from falling below the spectrum, RG 1.208 also requires that no more than nine adjacent frequency points fall below the target spectrum. It is seen that the compatible records generated satisfy these requirements.

Figures 3,4,5 display the time histories of acceleration, velocity, displacement, Arias intensity (AI), cumulative absolute velocity (CAV), and cumulative squared velocity (CSV) for the linearly scaled and compatible records generated. It is seen that the characteristics of the seed motions are, in general, retained in the compatible records.

Conclusions

An improved algorithm for faster generation of spectrum-compatible records was developed. The algorithm is based on the modification of the CWT coefficients of a historic record. The efficiency of the algorithm is improved by performing the required convolution operations in the frequency domain via fast Fourier transforms. It was shown that the algorithm can be used to obtain compatible records that satisfy current US NRC requirements. A Matlab implementation of the algorithm is available upon request from the corresponding author.

References

Abrahamson NA: Non-stationary spectral matching. Seismol Res Lett 1992,63(1):30.

Al Atik L, Abrahamson N: An improved method for non-stationary spectral matching. Earthq Spectra 2010,26(3):601–617. 10.1193/1.3459159

American Society of Civil Engineers: ASCE 7 - minimum design loads for buildings and other structures. Reston, VA; 2010.

Bahar O, Taherpour A: Nonlinear dynamic behavior of RC buildings against accelerograms with partial compatible spectrum. In 14th World Conference on Earthquake Engineering. Beijing, China; 2008. 12–17 October 2008 12–17 October 2008

Bommer JJ, Acevedo AB: The use of real earthquake accelerograms as input to dynamic analysis. J Earthq Eng 2004,8(1):43–91.

Bommer JJ, Ruggeri C: The specification of acceleration time-histories in seismic codes. Euro Earthquake Eng 2002, 16: 3–16.

Hancock J, Watson-Lamprey J, Abrahamson NA, Bommer JJ, Markatis A, McCoy E, Mendis R: An improved method of matching response spectra of recorded earthquake ground motion using wavelets. J Earthq Eng 2006,10(special issue 1):67–89. 10.1142/S1363246906002736

Hancock J, Bommer JJ, Stafford PJ: Number of scaled and matched accelerograms required for inelastic dynamic analyses. Earthquake Eng Struct Dynam 2008, 37: 1585–1607. 10.1002/eqe.827

Heo Y, Kunnath S, Abrahamson N: Amplitude-scaled versus spectrum-matched ground motions for seismic performance assessment. J Struct Eng 2011, 137: 278–288. 10.1061/(ASCE)ST.1943-541X.0000340

Lilhanand K, Tseng WS: Development and application of realistic earthquake time histories compatible with multiple damping design spectra. In Proceedings of the 9th WCEE, vol 2. Tokyo-Kyoto, Japan; 1988:819–824.

Linzell DG, Nadakuditi VP: Parameters influencing seismic response of horizontally curved, steel, I-girder bridges. Steel Compos Struct 2011, 11–1: 21–38.

Montejo LA, González-Román LA, Kowalsky MJ: Seismic performance evaluation of reinforced concrete-filled steel tube pile/column bridge bents. J Earthq Eng 2012,16(3):401–424. 10.1080/13632469.2011.614678

Montejo LA, Kowalsky MJ: Estimation of Frequency Dependent Strong Motion Duration via Wavelets and its Influence in Nonlinear Seismic Response. Computer Aided Civil and Infrastructure Engineering 2008,23(4):253–264. 10.1111/j.1467-8667.2007.00534.x

Mukherjee S, Gupta VK: Wavelet-based generation of spectrum compatible time-histories. Soil Dyn Earthq Eng 2002,22(9–12):799–804.

Naeim F, Lew M: On the use of design spectrum compatible time histories. Earthq Spectra 1995,11(1):111–127. 10.1193/1.1585805

PEER - Pacific Earthquake Engineering Research Center: PEER NGA database. 2013.http://peer.berkeley.edu/peer_ground_motion_database . Accessed January 2013

Priestley MJN, Calvi GM, Kowalsky MJ: Direct displacement based seismic design of structures. IUSS Press, Pavia, Italy; 2007.

Smith S: Digital signal processing: a practical guide for engineers and scientists. California Technical Publishing, San Diego, CA; 2002.

Suarez LE, Montejo LA: Generación de registros artificiales compatibles con un espectro de respuesta mediante la transformada wavelet. In Proc. del II Congreso Nacional de Ingeniería Sísmica. Medellín, Colombia; 2003. November 2003 November 2003

Suarez LE, Montejo LA: Generation of artificial earthquakes via the wavelet transform. Int J Solids Struct 2005, 42: 5905–5919. 10.1016/j.ijsolstr.2005.03.025

Tsai NC: Spectrum-compatible motions for design purposes. J Eng Mech Div ASCE 1972, 98: 345–356.

US-NRC: Regulatory guide 1.208 - a performance-based approach to define the site-specific earthquake ground motion. 2007.http://www.nrc.gov/reading-rm/doc-collections/reg-guides/power-reactors/rg/01-208/01-208.pdf . Accessed January 25, 2013

Velez E: Experimental cyclic behavior of reinforced concrete wall houses loaded in their weak direction. Thesis. Department of Civil Engineering, University of Puerto Rico at Mayaguez; 2007.

Watson-Lamprey JA, Abrahamson NA: Bias caused by use of spectrum compatible motions. In Proceedings of the 8th US National Conference on Earthquake Engineering. San Francisco, California; 2006. 18–22 April 2006 18–22 April 2006

Acknowledgements

This work was performed at the University of Puerto Rico at Mayaguez under award NRC-HQ-12-G-38-0018 from the US Nuclear Regulatory Commission. The statements, findings, conclusions, and recommendations are those of the authors and do not necessarily reflect the view of the US Nuclear Regulatory Commission.

Author information

Authors and Affiliations

Corresponding author

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

Both authors contributed equally to this research work.

Authors’ original submitted files for images

Below are the links to the authors’ original submitted files for images.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 2.0 International License (https://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Montejo, L.A., Suarez, L.E. An improved CWT-based algorithm for the generation of spectrum-compatible records. Int J Adv Struct Eng 5, 26 (2013). https://doi.org/10.1186/2008-6695-5-26

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/2008-6695-5-26