Abstract

This article discusses our research on polyphonic music transcription using non-negative matrix factorisation (NMF). The application of NMF in polyphonic transcription offers an alternative approach in which observed frequency spectra from polyphonic audio could be seen as an aggregation of spectra from monophonic components. However, it is not easy to find accurate aggregations using a standard NMF procedure since there are many ways to satisfy the factoring of V ≈ WH. Three limitations associated with the application of standard NMF to factor frequency spectra are (i) the permutation of transcription output; (ii) the unknown factoring r; and (iii) the factoring W and H that have a tendency to be trapped in a sub-optimal solution. This work explores the uses of the heuristics that exploit the harmonic information of each pitch to tackle these limitations. In our implementation, this harmonic information is learned from the training data consisting of the pitches from a desired instrument, while the unknown effective r is approximated from the correlation between the input signal and the training data. This approach offers an effective exploitation of the domain knowledge. The empirical results show that the proposed approach could significantly improve the accuracy of the transcription output as compared to the standard NMF approach.


1 Introduction

Automatic music transcription concerns the translation of music sounds into written manuscripts in standard music notation. Important components of automated transcription are pitch identification, onset-offset time identification and dynamics identification. Research activities in this area have been reported in [1–19]. It is still not possible to accurately transcribe polyphonic notes from an orchestra, a popular band or even a solo instrument, because the mixture of sounds from different pitches poses difficulties for the existing techniques. To date, the transcription of a single melody line (monophonic) is quite accurate, but transcribing polyphonic audio is still an open research area.

Commonly employed features in audio analysis can be derived from the time domain and frequency domain components of the input sound wave. Transcribing a single melody line (i.e., the monophonic case) involves tracking only a single note at any given time. The fundamental frequency, F0, can usually be reliably estimated using autocorrelation in the time domain or by tracking the F0 in the frequency domain. In the polyphonic case, multiple F0 tracking has been attempted using both time domain and frequency domain approaches [20]. However, harmonic interference from simultaneous notes complicates the multiple F0 tracking process. Standard techniques relying on either time domain or frequency domain approaches do not seem to be powerful enough to address the issue of harmonic interference.

This challenge has been approached from different perspectives, one of which is the blackboard architecture that incorporates various knowledge sources in the system [21]. These knowledge sources provide information regarding notes, intervals, chords, etc., which could be used in the transcription process. Explicitly encoded knowledge in this style is usually effective but requires a laborious knowledge engineering effort. Soft computing techniques such as the Bayesian approach [4, 8, 11, 19, 22], graphical modelling [23], artificial neural networks [24] and factoring techniques (e.g., ICA, NMF) [16, 25] have emerged as popular alternatives, since knowledge elicitation and maintenance can be performed from the training data.

This article investigates the application of NMF for an automatic transcription task. Although this is not the first time for NMF to be applied in polyphonic transcription, this study is different because it addresses three limitations of the conventional automatic transcription using NMF (see [16]): (i) the permutation of transcribed notes; (ii) the determination of the factor r which plays a major role in the accuracy of the transcribed output; and (iii) the factorisation process via alternating projected gradient method that may get trapped in local optima.

These three issues will be addressed by the use of heuristics. In brief, polyphonic audio is transformed into its frequency domain counterpart as a matrix $V_{m \times n}$, in which the entry at row m and column n is the magnitude of frequency component m at time frame n. NMF factors the matrix V into two components, $V_{m \times n} \approx W_{m \times r} H_{r \times n}$. In our approach, the columns of the matrix W contain r Tone-models that represent the frequency spectra of notes. Each row of the matrix H holds the weights corresponding to the activation of one note over time (i.e., the transcribed notes).

The scope of this article is limited to the discussion of polyphonic transcription of Bach chorales using NMF. The materials in this article are organised as follows: in Section 2, related studies are reviewed; in Section 3, the concepts behind our approach are discussed; in Section 4, the experimental results are presented and critically discussed; and finally, Section 5 contains the conclusion of this study.

2 Related works

The transcription of polyphonic audio has a long history. Moorer [14] was among the pioneers who investigated automatic transcription from polyphonic audio. In his Ph.D. thesis in 1975, he demonstrated the transcription of a two-part guitar duet as well as a synthesised violin duet (both examples have at most two notes being played simultaneously at any time). Moorer approached this problem by devising a comb filter for each musical note. Each comb filter had many narrow passbands centred at the harmonics of the note. The transcribed notes were inferred from the output of these comb filters.

There have been many variations to the research activities in transcribing polyphonic audio in the past few decades. Attempts to solve the polyphonic transcription problem could be viewed along a spectrum in which at one end is a knowledge-based approach and at the other end, a soft computing approach. Examples of a knowledge-based approach are the organised processing toward intelligent music scene analysis (OPTIMA) [11] and the blackboard architecture [2, 21]. A knowledge-based approach exploits relevant knowledge in terms of rules to assist the decision-making process. For example, the blackboard architecture [21] houses thirteen knowledge sources which hierarchically deal with notes, intervals, chords, etc. Exploiting expert knowledge in problem solving is usually effective since specialised knowledge is explicitly coded for the task. However, there are well-known bottlenecks in knowledge acquisition and knowledge exploitation in a conventional knowledge-based system, especially if the knowledge is encoded in terms of production rules. The bigger the knowledge-based system, the longer the decision process takes. A soft computing approach is more flexible in terms of knowledge acquisition and knowledge exploitation since knowledge can be learned from examples. Once the system has learned that piece of knowledge, the exploitation is very effective since the decision process does not involve traditional searches as in conventional knowledge-based systems.

Marolt [24] experimented with various types of neural networks (e.g., time-delay neural network, Elman's neural network, multilayer perceptrons, etc.) in note classification tasks. Seventy-six neural network modules were used to recognise 76 notes from A1 to C8. Each neural network was trained to recognise one piano note with the frequency spectral features from approximately 30,000 samples where one-third of them were positive examples. Soft computing approaches such as connectionism, support vector machine, hidden Markov model [23, 24, 26], etc., usually require complete training data as the performance of the model highly depends on the decision boundary constructed using the information from the training examples. Sometimes, this is an undesirable requirement. The Bayesian approach is one of the most popular techniques for polyphonic transcription tasks. This may be because it provides a middle ground between the effectiveness of encoding prior knowledge in the model (as in knowledge-based approaches) and the ability to cope with uncertainties (found in soft computing approaches). Bayesian harmonic models have been used in pitch tracking in [8, 19]. A Bayesian model exploits the prior knowledge of fundamental frequency and the harmonic characteristics of notes produced by an instrument.

More recently, the non-negative factoring technique has received a lot of attention [16, 27, 28]. NMF factors a non-negative matrix V into two other non-negative matrices W and H, where W and H could bear the interpretation of additive parts of V. NMF has been used in many domains as a technique for part-based representation, such as image recognition [28]. Smaragdis and Brown [16] were among the pioneers who exploited NMF in music transcription problems. They showed that NMF could be used to separate notes from polyphonic audio. In a recent study [29], a nearest subspace search technique is employed to find the weight factor (contribution) of different sources in a dictionary.

In [1], the dictionary of atomic spectra was learned from audio examples. The learned dictionary comprised atomic spectra, which could be mapped back to pitches. This learned dictionary represents the basis vectors, which could be used to factor out the transcribed notes. It should be noted that the learned atomic spectra often could not successfully represent the spectral characteristic of each pitch. From the learning process, a note may be represented by more than one atomic spectrum. Furthermore, the mapping process between the pitches and the atomic spectra must still be done manually. In our approach, the matrix W of basis vectors is learned from each pitch of a desired instrument. This ensures that the basis vector (a.k.a. dictionary, Tone-model) represents the harmonic structure of each pitch, at the expense of the basis vector matrix being applicable to that particular instrument only (e.g., the Tone-model learned from a piano will not work well with a violin). Many applications, such as a performance analysis module in a guitar tutoring system, could benefit from this.

3 Exploring NMF for polyphonic transcription

We investigate the application of NMF to extract polyphonic notes from a given polyphonic audio. Our research problem can be summarised and illustrated using Figure 1. Let S be unobserved MIDI note-on/off signals that produce audio signal y(t). The source frequency spectra V derived from polyphonic audio could be seen as an aggregation of the components from the basis vector matrix W and their activation pattern H.

Intuitively, H should approximate the activation of note events if W could successfully learn the harmonic structure of those note events. Although learning W from the data is flexible and adaptive, there is no known means to control or to guide the learning of W. If the basis vectors $w_r$ in the matrix W do not successfully represent the basis of each note event, then this would result in an erroneous note transcription in the matrix H.

Conventionally, the initial values of W and H are randomly initialised and the NMF algorithms use alternating minimisation of a cost function to find the optimal values of H and W. In one step, W is fixed and H is updated, while in the next step, H is fixed and W is updated. This method often results in an erroneous transcribed matrix, H, since there are many plausible solutions that could satisfy V ≈ WH. As pointed out in [30], it is impossible to separate polyphonic notes from a single polyphonic sound channel without employing some kind of constraints to the signal.

Here, we propose a novel strategy by constructing a basis vector matrix W using a Tone-model of the desired instrument (instead of randomly initialising W as in the standard NMF). Constraining W using Tone-models has many positive side effects. It resolves the issue of the permutation of transcribed output notes since the output notes would be in the same order as the employed Tone-models. Furthermore, we propose to employ heuristics to switch off the components corresponding to the inactive Tone-models (see Section 3.3). This should help improve the quality of the obtained solution, since the search is started with a more or less correct value of W.

3.1 Non-negative matrix factorisation

NMF decomposes the input matrix V into its basis vector matrix W and its activation matrix H as follows:

$$V \approx WH \qquad (1)$$

where $V \in \mathbb{R}^{m \times n}$, $W \in \mathbb{R}^{m \times r}$, $H \in \mathbb{R}^{r \times n}$, and all the elements in V, W and H are constrained to be non-negative real numbers: V ≥ 0, W ≥ 0, H ≥ 0. We also assume that r ≤ m < n. Lee and Seung [28, 31] suggested two cost functions. One is the squared Frobenius norm $D_F(V \| WH)$ and the other is the generalised Kullback-Leibler (KL) divergence $D_{KL}(V \| WH)$:

$$D_F(V \| WH) = \sum_{m,n} \left( V_{mn} - (WH)_{mn} \right)^2 \qquad (2)$$

$$D_{KL}(V \| WH) = \sum_{m,n} \left( V_{mn} \log \frac{V_{mn}}{(WH)_{mn}} - V_{mn} + (WH)_{mn} \right) \qquad (3)$$

They also proposed multiplicative update rules, which are a compromise between the speed of conjugate gradient methods and the ease of implementation of gradient descent. Note that $V_{mn}$ denotes the entry at row m and column n of the matrix V.
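As a minimal sketch, the two cost functions can be evaluated with NumPy as shown below. The function names and the small constant `eps`, which guards the logarithm and the division against zeros, are illustrative assumptions and not part of the original formulation.

```python
import numpy as np

def frobenius_cost(V, W, H):
    """Squared Frobenius norm of the residual V - WH."""
    R = V - W @ H
    return float(np.sum(R * R))

def kl_cost(V, W, H, eps=1e-12):
    """Generalised Kullback-Leibler divergence D(V || WH)."""
    WH = W @ H
    # eps is an assumption of this sketch; it avoids log(0) and division by zero.
    return float(np.sum(V * np.log((V + eps) / (WH + eps)) - V + WH))

# Toy data: V is built exactly from W and H, so both costs vanish.
rng = np.random.default_rng(0)
W = rng.random((6, 3))
H = rng.random((3, 8))
V = W @ H

cost_exact_f = frobenius_cost(V, W, H)
cost_exact_kl = kl_cost(V, W, H)
cost_perturbed_kl = kl_cost(V, W, H + 0.1)   # a worse factorisation costs more
```

Both costs are zero only when WH reproduces V exactly, which is why either can drive the search for W and H.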

3.2 Knowledge representation

Let x be a vector representing a sequence $x_n$ of samples taken from the analog audio signal at a sampling rate $f_s$. The sequence of discrete input samples $x_n$ can be transformed from its time domain representation to its frequency domain counterpart using the Fourier transform. A discrete Fourier coefficient $X_k$ is defined as follows:

$$X_k = \sum_{n=0}^{N-1} h_n x_n e^{-j 2 \pi k n / N} \qquad (4)$$

where N is the number of samples in a single window; $h_n$ is the Hamming window defined as $h_n = 0.54 - 0.46 \cos\left(\frac{2 \pi n}{N-1}\right)$; $x_n$ are the time domain samples; k is the coefficient index and $X_k$ is the corresponding frequency domain component. Each $X_k$ coefficient is a complex number; its magnitude and phase represent the magnitude and phase of the frequency component at $k f_s / N$ Hz, where $k = 0, 1, \ldots, N-1$.
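The windowed transform can be sketched with NumPy as follows. This is only an illustration: the function name `stft_magnitude`, the hop size of 4096 samples and the synthetic 440 Hz test tone are assumptions made here, since the text specifies only the window length and the Hamming window.

```python
import numpy as np

def stft_magnitude(x, n_window=8192, hop=4096):
    """Magnitudes |X_k| of Hamming-windowed frames, shape (n_window // 2 + 1, frames)."""
    window = np.hamming(n_window)            # 0.54 - 0.46 cos(2 pi n / (N - 1))
    starts = range(0, len(x) - n_window + 1, hop)
    frames = np.stack([x[s:s + n_window] * window for s in starts], axis=1)
    # rfft keeps only the non-negative frequencies of a real-valued signal.
    return np.abs(np.fft.rfft(frames, axis=0))

fs = 44100
t = np.arange(fs) / fs                       # one second of audio
x = np.sin(2 * np.pi * 440.0 * t)            # synthetic A4 test tone (assumption)

S = stft_magnitude(x)
peak_bin = int(np.argmax(S[:, 0]))
peak_hz = peak_bin * fs / 8192               # frequency of bin k is k * fs / N
```

With N = 8192 and a 44,100 Hz sampling rate, neighbouring bins are about 5.38 Hz apart, so the peak bin lands within one bin width of the true 440 Hz.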

3.2.1 Piano roll representation

It is decided that the input to NMF be abstracted at the activation level of each pitch in the standard equal-tempered scale [32]. This abstraction reduces the size of the input vector significantly (as compared to using the magnitude of STFT coefficients). A smaller input size also reduces the computational effort required for the same task. In our representation, the input matrix V and the matrix W are represented as piano rolls, where the centre frequency of each pitch i in the piano roll is calculated using the following equation:

$$f_c(i) = 440 \times 2^{(i - 69)/12} \qquad (5)$$

where i is the MIDI note number, i.e., 60 denotes middle C, C4 (note that C3 is the pitch an octave below C4 and C5 is the pitch an octave above C4). The magnitude of the pitch i is the average of the magnitudes of the FT coefficients in the range of $0.99 f_c(i)$ to $1.01 f_c(i)$. For example, according to Equation 5, the pitch C4 has a centre frequency of 261.63 Hz and lower and upper boundaries of 259.0 Hz and 264.2 Hz, respectively. With a sample rate $f_s$ = 44,100 Hz and window size N = 8192, these correspond to k ∈ {48, 49, 50}.a Hence, the activation magnitude of the pitch i can be calculated using the equation below:

$$\mathrm{pitch}(i) = \frac{1}{k_u - k_l + 1} \sum_{k = k_l}^{k_u} |X_k| \qquad (6)$$

where $k_l$ and $k_u$ are the lowermost and the uppermost k indices for the pitch i. The sequence of values of pitch(i) forms a column of a piano roll.
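The binning can be sketched in plain Python as below. The helper names are hypothetical, and the outward rounding of the band edges (floor for the lower index, ceiling for the upper one) is an assumption chosen so that the C4 example yields the bins 48 to 50 quoted in the text; the rule actually used may differ.

```python
import math

def centre_frequency(midi_note):
    """Equal-tempered centre frequency in Hz, with A4 (MIDI 69) at 440 Hz."""
    return 440.0 * 2.0 ** ((midi_note - 69) / 12.0)

def pitch_bins(midi_note, fs=44100, n_window=8192):
    """Lowest and highest FT bin index covering 0.99 fc to 1.01 fc (rounded outwards)."""
    fc = centre_frequency(midi_note)
    k_l = math.floor(0.99 * fc * n_window / fs)
    k_u = math.ceil(1.01 * fc * n_window / fs)
    return k_l, k_u

def pitch_magnitude(magnitudes, midi_note, fs=44100, n_window=8192):
    """Average of |X_k| over the bins k_l..k_u of the given pitch."""
    k_l, k_u = pitch_bins(midi_note, fs, n_window)
    band = magnitudes[k_l:k_u + 1]
    return sum(band) / len(band)

fc_c4 = centre_frequency(60)                  # middle C
bins_c4 = pitch_bins(60)
spectrum = [float(k) for k in range(4097)]    # dummy magnitudes: |X_k| = k
c4_level = pitch_magnitude(spectrum, 60)      # mean of bins 48, 49 and 50
```

Applying `pitch_magnitude` to every pitch of the piano roll for one STFT frame gives one column of the roll.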

3.2.2 Representing input V

At each time step, a short-time Fourier transform (STFT) is employed to transform the input sound wave into its frequency counterpart. Here, the STFT window is set to 8192 samples, so the frequency resolution between adjacent Fourier transform (FT) coefficients is 44,100/8192 ≈ 5.38 Hz. These FT coefficients are binned according to the pitches on a piano (see Equation 6). For example, the input $v_n$ of a monophonic note C4 would show the overtone series of pitch C4. The input V is presented in a piano roll representation by concatenating the column vectors $v_n$ to form the matrix $V = [v_1 \ldots v_n]$.

3.2.3 Representing the tone-model

In our implementation, the basis vector matrix (Tone-model), $W_{tm}$, is also represented in a piano roll representation. The matrix $W_{tm}$ is called the Tone-model, since it describes the harmonic structure of the pitches of an instrument. The matrix $W_{tm}$ is calculated from a set of training examples which are monophonic pitches from C2 to B6. The magnitudes of the FT coefficients obtained from each training pitch are averaged across time frames and then binned to each pitch on the piano roll using Equation 6. Hence, each column of $W_{tm}$ represents the Tone-model of one pitch. The matrix $W_{tm}$ is constructed by concatenating the column vectors $w_r$ together to form $W_{tm} = [w_{C2} \ldots w_{B6}]$.
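A rough sketch of this construction is given below. Several details are assumptions made for illustration only: the piano roll is taken to span MIDI notes 36 to 108 (the number of rows m is not stated in the text), band edges are rounded outwards, and `toy_spectrum` fabricates a two-partial training spectrum in place of real recordings.

```python
import numpy as np

def binning_matrix(midi_low=36, midi_high=108, fs=44100, n_window=8192):
    """Each row averages the FT bins lying within 1% of one pitch's centre frequency."""
    notes = range(midi_low, midi_high + 1)
    B = np.zeros((len(notes), n_window // 2 + 1))
    for row, note in enumerate(notes):
        fc = 440.0 * 2.0 ** ((note - 69) / 12.0)
        k_l = int(np.floor(0.99 * fc * n_window / fs))
        k_u = int(np.ceil(1.01 * fc * n_window / fs))
        B[row, k_l:k_u + 1] = 1.0 / (k_u - k_l + 1)
    return B

def build_tone_model(training_spectra, B):
    """training_spectra: one (bins, frames) magnitude array per pitch, lowest pitch first."""
    # Average each pitch over time, bin it to the piano roll, and stack as columns.
    return np.stack([B @ S.mean(axis=1) for S in training_spectra], axis=1)

def toy_spectrum(note, fs=44100, n_window=8192, frames=5):
    """Hypothetical stand-in for a recorded pitch: a fundamental plus one overtone."""
    S = np.zeros((n_window // 2 + 1, frames))
    fc = 440.0 * 2.0 ** ((note - 69) / 12.0)
    S[round(fc * n_window / fs), :] = 1.0
    S[round(2 * fc * n_window / fs), :] = 0.5
    return S

B = binning_matrix()
W_tm = build_tone_model([toy_spectrum(n) for n in range(36, 96)], B)  # C2 .. B6
a4_column = W_tm[:, 69 - 36]                  # Tone-model of A4
```

Each of the 60 columns of `W_tm` then holds the piano roll profile of one training pitch.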

3.3 Proposed transcription strategy

The overall concepts are outlined and summarised below. According to Figure 2, the Tone-models $W_{tm}$ are learned in a separate offline process. At run time, polyphonic audio is transformed into the input matrix V. At each time frame, the correlation between V and each component in $W_{tm}$ is computed and this information is employed as a heuristic to guess which $w_r$ components in the Tone-models should be switched off. The NMF process initialises the matrix H with random values uniformly distributed on the closed interval [0, 1]. NMF updates H and W until WH successfully approximates V, i.e., ||V − WH|| ≤ acceptable error. The switching-off heuristics and the NMF procedure implemented in this work will be discussed next.

3.3.1 Switching off inactive pitches

Probable active pitches are guessed by comparing the input V with the Tone-model $W_{tm}$. Since $W_{tm}$ is a matrix of Tone-model vectors, each column $w_r$ represents the Tone-model of one pitch from C2 to B6. For a given time frame n, if there is no sounding note, then the entries of $v_n$ are expected to be less than the entries of the Tone-model vectors $w_r$. On the other hand, the entries of $v_n$ are expected to share common harmonic structures with $w_r$ if the note r is sounding. Hence, an overlap in an overtone series between the input $v_n$ and the Tone-model $w_r$ is defined as a vector $OL_r$:

$$OL_r = \max(0, w_r - v_n) \qquad (7)$$

where the maximum is taken element-wise. The ratio $\|OL_r\| / \|w_r\|$ lies in the closed interval [0, 1]: it is 1 when there is no overlap and 0 when $w_r$ is completely overlapped by $v_n$. The note r is considered not sounding if the ratio $\|OL_r\| / \|w_r\|$ is more than a threshold value and considered sounding otherwise. The threshold value is empirically determined.

This heuristic is used to guess whether the pitch r is active by comparing the input spectrum at a time frame n to all the $w_r$ and flagging the active pitches. For each time frame n, a vector $L_n = [l_1, \ldots, l_r]^T$ estimates whether each pitch is active or inactive. After running through all the time frames of the input signal, the active pitches are determined as a disjunction of all the active pitch flags, $L = L_1 \lor L_2 \lor \ldots \lor L_n$. The pseudo code below summarises this process.

    function probablePitch(W_tm, V) return L (r × 1), an active pitch vector
        for each v_n associated with time frame n
            L_n = [ ]
            for each w_r of each Tone-model r = 1, 2, ..., 60
                if ||OL_r|| / ||w_r|| > threshold
                    then l_r = 0    /* pitch r is not sounding */
                    else l_r = 1    /* pitch r is sounding */
                end
                L_n ← append(L_n, l_r)
            end
        end
        L ← L_1 ∨ L_2 ∨ ... ∨ L_n
        return L
    end

The switch vector L estimated from the input V is used to switch off irrelevant basis vectors $w_r$, i.e., the constrained basis matrix is $W = W_{tm}\,\mathrm{diag}(L)$, where diag(L) returns a diagonal matrix with the entries of L on its diagonal.
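The switching-off heuristic might be sketched in NumPy as follows. Two points are assumptions rather than details from the text: the overlap residual is taken to be the element-wise positive part of $w_r - v_n$, which matches the stated behaviour of the ratio (1 with no overlap, 0 with complete overlap), and the threshold of 0.5 is a placeholder for the empirically determined value.

```python
import numpy as np

def probable_pitch(W_tm, V, threshold=0.5):
    """Return a 0/1 vector L with L[r] = 1 if pitch r appears active in any frame."""
    norms = np.linalg.norm(W_tm, axis=0)              # ||w_r|| for every Tone-model
    active = np.zeros(W_tm.shape[1], dtype=bool)
    for v_n in V.T:                                    # one input spectrum per frame
        # Assumed overlap residual: the part of w_r that v_n does not cover.
        residual = np.maximum(0.0, W_tm - v_n[:, None])
        ratio = np.linalg.norm(residual, axis=0) / np.maximum(norms, 1e-12)
        active |= ratio <= threshold                   # small residual: note sounding
    return active.astype(float)

# Toy example: three Tone-models with disjoint partials, notes 0 and 2 are played.
W_tm = np.array([[1.0, 0.0, 0.0],
                 [0.5, 0.0, 0.0],
                 [0.0, 1.0, 0.0],
                 [0.0, 0.5, 0.0],
                 [0.0, 0.0, 1.0],
                 [0.0, 0.0, 0.5]])
V = np.stack([W_tm[:, 0], W_tm[:, 2]], axis=1)         # frame 1: note 0, frame 2: note 2

L = probable_pitch(W_tm, V)
W_constrained = W_tm @ np.diag(L)                      # column of note 1 is zeroed
```

Multiplying by `np.diag(L)` zeroes the columns of the pitches judged inactive, so they cannot contribute to the factorisation.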

3.3.2 Transcribing polyphonic notes using NMF

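Multiplicative update rules that minimise the cost functions (see Equations 2 and 3) proposed by Lee and Seung [31] are considered to be the standard NMF algorithms [33]. They are guaranteed to find at least locally optimum solutions [31]. The update rules (Equations 8 and 9) corresponding to $D_{KL}(V \| WH)$ are reproduced here for readers' convenience.

$$H_{rn} \leftarrow H_{rn} \frac{\sum_{m} W_{mr} V_{mn} / (WH)_{mn}}{\sum_{m} W_{mr}} \qquad (8)$$

$$W_{mr} \leftarrow W_{mr} \frac{\sum_{n} H_{rn} V_{mn} / (WH)_{mn}}{\sum_{n} H_{rn}} \qquad (9)$$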
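For reference, a standard NMF run with the Lee and Seung multiplicative updates for the KL divergence can be sketched as below. The function names, the iteration counts, the random toy data and the stabilising constant `eps` are assumptions of this sketch.

```python
import numpy as np

def kl_cost(V, W, H, eps=1e-12):
    """Generalised KL divergence D(V || WH)."""
    WH = W @ H
    return float(np.sum(V * np.log((V + eps) / (WH + eps)) - V + WH))

def nmf_kl(V, r, n_iter=200, seed=0, eps=1e-12):
    """Standard NMF: random initialisation, alternating multiplicative updates."""
    rng = np.random.default_rng(seed)
    m, n = V.shape
    W = rng.random((m, r)) + eps
    H = rng.random((r, n)) + eps
    for _ in range(n_iter):
        # W is held fixed while H is updated ...
        H *= (W.T @ (V / (W @ H + eps))) / (W.sum(axis=0)[:, None] + eps)
        # ... then H is held fixed while W is updated.
        W *= ((V / (W @ H + eps)) @ H.T) / (H.sum(axis=1)[None, :] + eps)
    return W, H

# Toy non-negative data of rank 3.
rng = np.random.default_rng(1)
V = rng.random((12, 3)) @ rng.random((3, 40))

W1, H1 = nmf_kl(V, r=3, n_iter=1)
W200, H200 = nmf_kl(V, r=3, n_iter=200)
cost_after_1 = kl_cost(V, W1, H1)
cost_after_200 = kl_cost(V, W200, H200)
```

Because both factors start from random values, the order of the learned components is arbitrary, which is the permutation issue that fixing W to Tone-models is meant to avoid.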

Factoring the matrix V into two components W and H can be viewed as a search process. The update rules guide the search to a solution using the gradient of the cost functions. There are many plausible solutions that could satisfy this factoring, and sometimes the search will get stuck in sub-optimal solutions. Most search techniques could benefit from extra knowledge introduced in terms of constraints. Depending on the application, the extra information introduced to guide the search can take different forms. In our application, initialising the basis vector matrix W with Tone-models and switching off inactive r components help to start the search near optimal solutions, and better solutions are usually obtained if the search starts near good solutions in the search space. In our implementation, the update used is closely related to the expectation maximisation maximum likelihood (EMML) algorithm, which has been studied in image processing [34]. In EMML, H is iteratively updated while W is assumed to be known and fixed. In our experiment, H is updated using (following [28]):

$$H_{rn} \leftarrow H_{rn} \frac{\sum_{m} W_{mr} V_{mn} / (WH)_{mn}}{\sum_{m} W_{mr}} \qquad (10)$$

In our experiment, two experimental designs have been carried out: (i) Tone-model NMF (TM-NMF), where the matrix W is initialised using the Tone-model $W_{tm}$ and the r constraint, and its values are fixed throughout the run; and (ii) initialised constrained Tone-model NMF (ICTM-NMF), where W is initialised in the same fashion as in TM-NMF but W is updated as below:

A column of V is formed from the columns of W weighted by the values given in H. In other words, a column of H is a new representation of a column of V in the basis W. Hence, each $w_r$ is updated by scaling it to the predicted activation of $\max_r(\sum_n H_{rn})$ (Equation 11); each $w_r$ is then normalised (Equation 12). The pseudo code below summarises the two NMF processes (TM-NMF and ICTM-NMF) employed in our experiments.

    function transcribeBach(W_tm, V) return pitch activation H
        L ← probablePitch(W_tm, V)
        initialise H randomly s.t. H_rn ∈ {h | 0 ≤ h ≤ 1}
        initialise W using Tone-models and heuristics; W ← W_tm diag(L)
        /* Stopping criteria:
           (i)   the maximum of 3000 iterations is exceeded, or
           (ii)  the matrix H converges (its values become stable), or
           (iii) ||V − WH|| ≤ acceptable error */
        while no stopping criterion is satisfied
            update H using Equation 10
            if TM-NMF then W ← W_tm diag(L)
            if ICTM-NMF then update W using Equations 11, 12
        end
        return H
    end

The output H is then converted to a binary (note on/off) matrix by applying a threshold to it. To evaluate H, the note on/off information of each original chorale is extracted from the MIDI file. This forms the ground truth for each chorale. In this process, the MIDI time is rescaled linearly to match the number of frames in H.
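The TM-NMF variant, in which W stays fixed, can be sketched as follows. This is an illustration under stated assumptions: H is updated with an EMML-style multiplicative rule for the KL divergence, the tolerance `tol`, the constant `eps` and the binarisation threshold of 0.5 are placeholders, and the switch vector L is supplied by hand instead of being estimated from the input. The ICTM-NMF update of W is not sketched here.

```python
import numpy as np

def tm_nmf(W_tm, V, L, max_iter=3000, tol=1e-6, seed=0, eps=1e-12):
    """Estimate activations H with the basis fixed to the switched Tone-models."""
    rng = np.random.default_rng(seed)
    W = W_tm @ np.diag(L)                     # inactive Tone-models become zero columns
    H = rng.random((W.shape[1], V.shape[1]))  # uniform random start in [0, 1)
    col_sums = W.sum(axis=0)[:, None]
    for _ in range(max_iter):
        H_new = H * (W.T @ (V / (W @ H + eps))) / (col_sums + eps)
        converged = np.max(np.abs(H_new - H)) < tol
        H = H_new
        if converged:                         # H has become stable
            break
    return H

# Toy example: three Tone-models with disjoint partials; note 1 is never played.
W_tm = np.array([[1.0, 0.0, 0.0],
                 [0.5, 0.0, 0.0],
                 [0.0, 1.0, 0.0],
                 [0.0, 0.5, 0.0],
                 [0.0, 0.0, 1.0],
                 [0.0, 0.0, 0.5]])
H_true = np.array([[1.0, 1.0, 0.0, 0.0],
                   [0.0, 0.0, 0.0, 0.0],
                   [0.0, 1.0, 1.0, 0.0]])
V = W_tm @ H_true
L = np.array([1.0, 0.0, 1.0])                 # switch vector, given by hand here

H = tm_nmf(W_tm, V, L)
notes_on = (H > 0.5).astype(int)              # binary piano roll (threshold assumed)
```

Since the columns of W keep the order of the Tone-models, row r of `notes_on` always refers to the same pitch, so no permutation step is needed after the factorisation.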

4 Experimental results

In this experiment, the input wave files were generated by playing back MIDI files using a standard PC sound card. Recording was done with 16 bit mono and with a sampling rate of 44100 Hz. The recorded wave file was transformed to the frequency domain using STFT. The STFT window size was set at 8192 samples. The Hamming window function was applied to the signal before converting it to the frequency domain. The experimental results of Bach chorales are summarised in Table 1. Two variations of the Tone-model usage were carried out (i) TM-NMF, and (ii) ICTM-NMF.

4.1 Evaluation measures

The literature uses a variety of ways to define the correct transcription of notes. Should a note be classified as correctly transcribed or incorrectly transcribed if the note is accurately transcribed in terms of pitch but the duration is not exact? In [35], note detections were calculated on each frame. The transcription output was converted to a binary note on/off matrix and was compared to the MIDI note on/off on a frame by frame basis. This was a good approach since it took the note duration into account. This work evaluated the transcribed output using the same approach as in [35]. The results were evaluated based on the standard precision and recall measures where, in each frame, true positive tp is the number of correctly transcribed note events, false positive fp is the number of spurious note events and false negative fn is the number of note events that are undetected.

The true positive was calculated based on the matched pixels between the original piano roll and the transcribed piano roll H (i.e., Original and Transcribed in Equations 13, 14 and 15). False positive and false negative were calculated from the unmatched pixels.

$$tp = \sum_{r,n} \mathrm{Original}_{rn} \cdot \mathrm{Transcribed}_{rn} \qquad (13)$$

$$fp = \sum_{r,n} \max(0, \mathrm{Transcribed}_{rn} - \mathrm{Original}_{rn}) \qquad (14)$$

$$fn = \sum_{r,n} \max(0, \mathrm{Original}_{rn} - \mathrm{Transcribed}_{rn}) \qquad (15)$$

where max(a, b) returns a if a ≥ b and returns b otherwise. The Original and Transcribed were r × n binary matrices (note on = 1 and note off = 0). The Transcribed matrix was obtained by thresholding the output H (see Section 3.3.2). The Original matrix was obtained by time-scaling the note on/off matrix to match the number of time frames in H. In this study, the note on/off matrix was obtained from the note on/off events extracted from the MIDI files and this provided the ground truth reference.

We resort to the precision, recall and f measures to judge the performance of the system. Precision provides measurement on the percentage of the correct transcribed note-on events from all the transcribed note-on events. Recall provides measurement on the percentage of the correct transcribed note-on events from all actual note-on events (i.e., reference ground truth).

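In the transcription task, the precision and recall measures are equally important since it is undesirable to have a system with high precision but poor recall (or vice versa). Hence, the f-measure is computed since it provides an evenly weighted result of both precision and recall measures. These measures are defined as:

$$\mathrm{precision} = \frac{tp}{tp + fp} \qquad (16)$$

$$\mathrm{recall} = \frac{tp}{tp + fn} \qquad (17)$$

$$f = \frac{2 \times \mathrm{precision} \times \mathrm{recall}}{\mathrm{precision} + \mathrm{recall}} \qquad (18)$$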
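A small frame-by-frame scoring routine along these lines is sketched below in plain Python. The function name `frame_scores` and the two toy piano rolls are illustrative; counting a false positive as a cell that is on only in the transcription, and a false negative as a cell that is on only in the original, is the interpretation assumed here.

```python
def frame_scores(original, transcribed):
    """Precision, recall and f-measure from two binary piano rolls of equal shape."""
    tp = fp = fn = 0
    for row_o, row_t in zip(original, transcribed):
        for o, t in zip(row_o, row_t):
            tp += o * t                 # on in both rolls
            fp += max(0, t - o)         # on only in the transcription
            fn += max(0, o - t)         # on only in the original
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0.0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)

# Two pitches over four frames: one missed cell and one spurious cell.
original = [[1, 1, 0, 0],
            [0, 1, 1, 0]]
transcribed = [[1, 0, 0, 0],
               [0, 1, 1, 1]]

precision, recall, f_value = frame_scores(original, transcribed)
```

In this toy case three cells match, one is spurious and one is missed, so precision, recall and the f value all equal 0.75.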

4.2 Transcribing Bach chorales using ICTM-NMF

Figure 3 illustrates the effectiveness of our approach in tackling the weakness of applying standard NMF. The top pane shows the piano roll from the original chorale; the bottom left pane shows the output from a standard NMF where W and H are randomly initialised and r is set at 60. Noise is observed in many places, which is a common problem with standard NMF algorithms used for transcribing pitches. This problem was mitigated with our proposed NMF with a constrained Tone-model: although the transcribed output did not exactly match the input piano roll, a great improvement in the output quality was observed in the bottom right pane.

Figure 3 Effectiveness of ICTM-NMF as compared to standard NMF. Top pane: original piano roll of the chorale Aus tiefer Not schrei ich zu dir. Bottom left pane: the transcription output from a standard NMF. Bottom right pane: the transcription output of the same chorale with ICTM-NMF. The y-axes represent the pitches from C2 (MIDI note number 36) to C7 (MIDI note number 96) while the x-axes represent time.

Figure 4 shows the piano roll output from the transcription of the chorale Aus tiefer Not schrei ich zu dir using ICTM-NMF. The first row is a piano roll representation of the original chorale. The second row shows the transcribed output. The fourth and the fifth rows are fp and fn, respectively. In the figure, fp and fn were calculated from the differences between the original chorale and the transcribed chorale.

Table 1 summarises the transcription results of Bach chorales. A total of fourteen chorales were arbitrarily chosen (chorale IDs follow Riemenschneider, 371 Harmonized Chorales and 69 Chorale Melodies with Figured Bass). The input wave files of all the Bach chorales used here were obtained by playing back the Bach chorale MIDI files downloaded from http://www.jsbchorales.net/bwv.shtml.

The output from ICTM-NMF shows a great improvement over the output from TM-NMF (around 7.5% improvement in f values). ICTM-NMF differed from TM-NMF in the following points: the Tone-models (i.e., the matrix W) were fixed in TM-NMF but not in ICTM-NMF. W was allowed to vary in ICTM-NMF, subject to the constraint . As a consequence, all active basis vectors (columns of W) remained active in TM-NMF, whereas it was possible for active basis vectors in ICTM-NMF to become inactive during the W update process.

4.3 Performance comparison with related works

4.3.1 Beethoven's Bagatelle Opus 33, No. 1 in E

In this report, two transcriptions of the pieces demonstrated in previous studies were carried out using our proposed method. The first one was the transcription output from Beethoven's Bagatelle using NMF presented in [35]. The input sound wave, in [35], was recorded from a MIDI controlled acoustic piano.

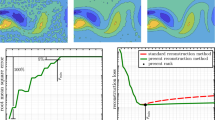

The plot between recall and specificityb is reproduced in Figure 5 along with the output from our approach. Varying the threshold values that control the binary note on/off conversion of the output H produces the performance curve plot shown in Figure 5. The plot can be used to visually compare the performance of our system to the NMF output in [35]. The optimal f values for both NMF runs in the previous work were about 0.54 and 0.60 while our system obtained the optimal f value of 0.72 (recall 73.29% and precision 70.56%).

4.3.2 Mozart's piano Sonata No. 1 (KV279)

There are three movements in this sonata: Allegro, Andante and Allegro. Polyphonic transcription of the first two minutes of the first movement of KV279 was attempted using non-negative matrix division in [15]. Here, it was decided that the whole first movement would be used in our experiment. The main difference from our work is that in [15], the update step for W (see Section 3.1) was omitted. The input sound wave was recorded from a MIDI controlled synthesised piano in our experiment, while it was recorded from a computer controlled Bösendorfer SE290 grand piano in [15]. In [15], it was reported that the recall rate was 99.1%, the precision rate was 21.8% and the f value was 0.35. The issue of the poor f value was tackled in [15] by further post-processing the output from NMF using classifiers (rule based, instance based and frame based). This improved the f value significantly. Unfortunately, due to the limited length of [15], the information given about the process was incomplete. No transcription output was given in [15], so a visual inspection of the output generated by both systems was not possible. For this piece, our approach yielded an optimal f value of 0.63 (recall 63.0% and precision 63.9%).

The performance statistics reported in our experiments were calculated using the precision and recall measures based on the graphical representation of a piano roll (as discussed in Section 4). It should also be pointed out that the counting of true-positive in [15] was based on correctly found notes,c which is unlike our true-positive which was based on frame by frame counting. The evaluations of the transcription of Beethoven's Bagatelle in [35] and our study have been based on similar assumptions.

4.4 Transcribing polyphonic sound from acoustic instruments

Sounds produced from real acoustic instruments possess a much more complex harmonic structure. The manner of note executions, the physical characteristic of the string, the soundboard, etc., all work together to determine the harmonic structures. The dictionary approach, such as the proposed Tone-model, represents complex harmonic structure of a note using a static Tone-model prototype. A static dictionary might not be effective in such a circumstance. Thus it is important to test the performance of the proposed approach on real acoustic musical instruments.

For this purpose, the chorale numbers 10, 26 and 28 were played on a classical acoustic guitar (model Yamaha CG 40) and on an upright acoustic piano (model Atlas). The sound was recorded directly via a single microphone with a 16-bit depth and a sample rate of 44,100 Hz. The microphone had the following specifications: frequency response: 20 Hz - 16 kHz, sensitivity: -58 ± 3 dB, S/N ratio: 40 dB.

The transcription accuracy of polyphonic pieces performed by acoustic and synthesised instruments is displayed in Table 2. It was observed that the transcription accuracy obtained from acoustic sources was generally poorer than that from synthesised sources. Figure 6 shows the transcription output from the synthesised guitar sound and the acoustic guitar sound. The transcription output from the synthesised sound (first row) shows better recall than the output from the acoustic sound (third row). It was observed from the experiment that the transcription output from acoustic instruments tended to give inaccurate durations even though the pitch was correctly transcribed. This was common in the high pitch range. The synthesised instruments did not suffer from this behaviour.

Figure 6. Plots of true positives obtained from the synthesised guitar sound (first row) and from the acoustic guitar sound (third row). The true positives are overlaid on the original chorale: overlay for the synthesised guitar sound (second row) and overlay for the acoustic guitar sound (fourth row). In the overlays, correct transcriptions (tp) are shown in white and missing transcriptions (fn) in gray.

The overlays of the true positive output on the original chorale (the second and the fourth rows of Figure 6) show that the degradation in performance in the acoustic case comes mainly from the inaccuracy in the transcribed durations. This could be caused by the harmonic complexity of real acoustic instruments and, from our observation, by the faster decay rate of acoustic sound as compared to synthesised sound (especially in the high pitch range).

We would also like to point out that the performance degradation caused by discrepancies in duration highlights the potential of the proposed approach, since the pitches themselves were mostly transcribed correctly. It implies that fine-tuning the durations using information from the onset-offset times should greatly improve the quality of the transcriptions.

5 Conclusions

In this article, we proposed a new strategy to tackle the three limitations of standard NMF in the polyphonic transcription task. By constructing a basis vector matrix W using a Tone-model of the desired instrument and relying on heuristics to switch off the components corresponding to the inactive pitches, we obtained an improvement in transcription performance in our experiments. This strategy worked because of the importance of the learned basis vector matrix and the ability of NMF to switch off inactive basis vectors.

The value of r played a crucial role in extracting note events. If r was set higher than the actual number of active pitches, noise would appear as transcribed notes. Conversely, if r was set too low, events from different pitches would be transcribed as coming from the same pitch. Finding the exact value of r is therefore a big challenge for polyphonic transcription using NMF [16]. In recent works [6, 15], NMF with a fixed W learned from a desired instrument was proposed. In these works, the dictionary matrix, the pitch templates and the Tone-models acted as the basis vector matrix. This work extended the same concept to handle common limitations of NMF in polyphonic transcription.
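One simple way to approximate r when a Tone-model is available is to keep only the pitches whose templates correlate with the input spectra. The sketch below is a hedged illustration of that idea, not the exact procedure used in this work: the cosine similarity measure, the threshold of 0.3, the function name candidate_pitches and the toy templates are all assumptions chosen for the example.

```python
import numpy as np


def candidate_pitches(V, W, threshold=0.3):
    """Return indices of Tone-model columns that correlate with the input.

    V: (n_bins, n_frames) magnitude spectra of the input signal.
    W: (n_bins, n_pitches) Tone-model, one spectral template per pitch.
    threshold: hypothetical cut-off on the cosine similarity.
    """
    eps = 1e-12  # guards against division by zero for silent frames
    W_unit = W / (np.linalg.norm(W, axis=0, keepdims=True) + eps)
    V_unit = V / (np.linalg.norm(V, axis=0, keepdims=True) + eps)
    similarity = W_unit.T @ V_unit      # (n_pitches, n_frames)
    best = similarity.max(axis=1)       # strongest match over all frames
    return np.flatnonzero(best >= threshold), best


# Toy Tone-model (hypothetical): 3 pitch templates over 6 frequency bins,
# with some partials shared between templates.
W_tone = np.array([[1.0, 0.0, 0.0],
                   [0.0, 1.0, 0.0],
                   [0.5, 0.0, 1.0],
                   [0.0, 0.5, 0.0],
                   [0.2, 0.0, 0.0],
                   [0.0, 0.2, 0.5]])
# Input built from pitches 0 and 2 only; pitch 1 is never played.
H_true = np.array([[1.0, 1.0, 1.0, 0.0, 0.0],
                   [0.0, 0.0, 0.0, 0.0, 0.0],
                   [0.0, 0.0, 1.0, 1.0, 1.0]])
V = W_tone @ H_true

active, best = candidate_pitches(V, W_tone)
r_estimate = len(active)
print(active, r_estimate)
```

A lenient threshold errs on the side of keeping too many candidates, so that r is over-estimated rather than under-estimated.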

Initialising the basis vector matrix using FFT spectra as a Tone-model is a powerful heuristic. However, this alone would not be enough to produce good output. From our study, the following heuristics should be included.

1. The Tone-model must characterise the input instrument;
2. the estimated r should be equal to or more than the actual r; and
3. the fixed Tone-model might not work well if r is not accurate.

To elaborate on the above heuristics, let us compare NMF to a search process. If the NMF factoring process is seen as a search, the act of initialising W with a Tone-model is analogous to starting the search near the global optimum. When the search begins, fixing W biases the search in a certain direction. If the basis vector matrix W characterises the Tone-model of the input instrument and the number of active pitches r is determined correctly, then it is likely that the obtained solution will be of good quality. If the value of r is wrongly determined, then the search might be guided to a non-optimal solution. Allowing W to vary should lower the magnitude of the inactive basis vectors w_r, which makes it possible to compensate for an overestimated r. The experiments showed that the best results were obtained when W was initialised using the Tone-model and was also allowed to be adjusted. In future work, we hope to further explore the extension of the Tone-model concept to handle sound produced from acoustic instruments.
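The two configurations discussed above (W fixed to the Tone-model, and W initialised from the Tone-model but allowed to adapt) can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation used in our experiments: it assumes the multiplicative update rules for the Euclidean cost from Lee and Seung, and the function name nmf_with_tone_model, the iteration count and the toy data are hypothetical.

```python
import numpy as np


def nmf_with_tone_model(V, W_init, update_w=False, n_iter=200, eps=1e-9, seed=0):
    """Factor V ~= W H, starting the search from a Tone-model W_init.

    Assumes multiplicative updates for the Euclidean cost.
    update_w=False keeps W fixed and learns only the activations H;
    update_w=True also lets W adapt to the input.
    """
    rng = np.random.default_rng(seed)
    W = np.array(W_init, dtype=float)   # copy, so W_init is left untouched
    H = rng.random((W.shape[1], V.shape[1])) + eps
    for _ in range(n_iter):
        H *= (W.T @ V) / (W.T @ W @ H + eps)
        if update_w:
            W *= (V @ H.T) / (W @ H @ H.T + eps)
    return W, H


# Toy Tone-model (hypothetical): 3 pitch templates over 6 frequency bins.
W_tone = np.array([[1.0, 0.0, 0.0],
                   [0.0, 1.0, 0.0],
                   [0.5, 0.0, 1.0],
                   [0.0, 0.5, 0.0],
                   [0.2, 0.0, 0.0],
                   [0.0, 0.2, 0.5]])
# Only pitches 0 and 2 are played, so r = 3 over-estimates the active pitches.
H_true = np.array([[1.0, 1.0, 1.0, 0.0, 0.0],
                   [0.0, 0.0, 0.0, 0.0, 0.0],
                   [0.0, 0.0, 1.0, 1.0, 1.0]])
V = W_tone @ H_true

# Fixed W: the activations of the unused pitch (row 1) end up close to zero.
W_fixed, H_fixed = nmf_with_tone_model(V, W_tone, update_w=False)
piano_roll = H_fixed > 0.5              # hypothetical activation threshold

# Adaptive W: bins of the unused template that receive no energy from the
# input (bins 1 and 3 here) are driven to zero by the W update.
W_adapt, H_adapt = nmf_with_tone_model(V, W_tone, update_w=True)
print(piano_roll.astype(int))
print(np.round(W_adapt[:, 1], 3))
```

Because the updates are multiplicative, entries of W that are zero in the Tone-model stay zero, so the harmonic structure supplied at initialisation constrains the search even when W is allowed to vary.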

Endnotes

a The index k might need to be rounded up/down since the boundary frequency of each pitch would not fall exactly on the desired value.

b Specificity = 1-Precision.

c As reported in [15]: "A note event is counted as correct if the transcribed and the real note do overlap".

References

Abdallah SA, Plumbley MD: Polyphonic music transcription by non-negative sparse coding of power spectra. In Proceedings of International Conference on Music Information Retrieval (ISMIR 2004). Barcelona, Spain; 2004:318-325.

Bello JP: Toward the automated analysis of simple polyphonic music: a knowledge-based approach. Ph.D. dissertation, Department of Electrical Engineering, Queen Mary, University of London, London, UK; 2003.

Brown GJ, Cooke M: Perceptual grouping of musical sounds-a computational model. J New Music Res 1994, 23(2):107-132. 10.1080/09298219408570651

Cemgil AT, Kappen B, Barber D: Generative model based polyphonic music transcription. In IEEE Workshop on Applications of Signal Processing to Audio and Acoustics. New Paltz, NY, USA; 2003:181-184.

Chafe C, Jaffe D: Source separation and note identification in polyphonic music. In Proceedings of IEEE International Conference on Acoustics, Speech, and Signal Processing. Tokyo, Japan; 1986:1289-1292.

Cont A: Realtime multiple pitch observation using sparse non-negative constraints. In Proceedings of the 7th International Symposium on Music Information Retrieval (ISMIR). Victoria, BC, Canada; 2006.

Dannenberg RB, Hu N: Polyphonic audio matching for score following and intelligent audio editors. In Proceedings of the International Computer Music Conference (ICMC 2003). San Francisco, USA; 2003:27-33.

Davy M, Godsill SJ: Bayesian Harmonic Models for Musical Signal Analysis. In Bayesian Statistics 7. Edited by: Bernardo JM, Bayarri MJ, Berger JO, Dawid AP, Heckerman D, Smith AFM, West M. Oxford University Press, Oxford; 2003:105-124.

Dixon S: On the computer recognition of solo piano music. In Proceedings of the Australian Computer Music Conference. Brisbane, Australia; 2000:31-37.

Goto M: A real-time music-scene-description system: predominant-F0 estimation for detecting melody and bass lines in real-world audio signals. Speech Commun 2004, 43: 311-329. 10.1016/j.specom.2004.07.001

Kashino K, Nakadai K, Kinoshita T, Tanaka H: Application of Bayesian probability network to music scene analysis. In Proceedings of IJCAI workshop on CASA. Montreal, Canada; 1995:52-59.

Klapuri A: Sound onset detection by applying psychoacoustic knowledge. In Proceedings of ICASSP. Volume 6. Phoenix, Arizona, USA; 1999:3089-3092.

Klapuri A: Automatic music transcription as we know it today. J New Music Res 2004, 33(3):269-282. 10.1080/0929821042000317840

Moorer JA: On the segmentation and analysis of continuous musical sound by digital computer. PhD thesis, Department of Music, Stanford University, USA; 1975.

Niedermayer B: Non-negative matrix division for the automatic transcription of polyphonic music. In Proceedings of International Conference on Music Information Retrieval (ISMIR 2008). Austria; 2008:545-549.

Smaragdis P, Brown JC: Non-negative matrix factorization for polyphonic music transcription. In Proceedings of IEEE workshop Applications of Signal Processing to Audio and Acoustics. New Paltz, NY, USA; 2003:177-180.

Phon-Amnuaisuk S: Transcribing Bach chorales using non-negative matrix factorization. In Proceedings of the 2010 International Conference on Information Technology Convergence on Audio, Language and Image Processing (ICALIP2010). Shanghai China; 2010:688-693.

Sophea S, Phon-Amnuaisuk S: Determining a suitable desired factor for non-negative matrix factorisation for polyphonic music transcription. In Proceedings of the 2007 International Symposium on Information Technology Convergence, (ISITC 2007). Sori Arts center, Jeonju, Republic of Korea; 2007:166-170.

Walmsley PJ, Godsill SJ, Rayner PJW: Bayesian graphical models for polyphonic pitch tracking. In Proceedings of diderot forum on mathematics and music. Vienna, Austria; 1999:1-26.

de Cheveigné A: Multiple F0 estimation. In Computational Auditory Scene Analysis. Edited by: Wang DL, Brown GJ. IEEE Press, New York; 2006:45-79.

Martin KD: A blackboard system for automatic transcription of simple polyphonic music. M.I.T. Media Lab, Perceptual Computing, Technical Report 1996, 385.

Barbancho I, Barbancho AM, Jurado A, Tardón LJ: Transcription of piano recordings. Appl Acoust 2004, 65: 1261-1287. 10.1016/j.apacoust.2004.05.007

Raphael C: Aligning music audio with symbolic scores using a hybrid graphical model. Mach Learn 2006, 65(2-3):389-409. 10.1007/s10994-006-8415-3

Marolt M: A connectionist approach to automatic transcription of polyphonic piano music. IEEE Trans Multimedia 2004, 6(3):439-449. 10.1109/TMM.2004.827507

Vincent E, Rodet X: Music transcription with ISA and HMM. In Proceedings of the Fifth International Conference on Independent Component Analysis and Blind Signal Separation (ICA2004). Granada, Spain; 2004:1197-1204.

Poliner GE, Ellis DPW: A discriminative model for polyphonic piano transcription. EURASIP J Adv Signal Process 2007, 2007(1):154-154.

Hoyer PO: Non-negative sparse coding. In Proceedings of IEEE Workshop on Neural Networks for Signal Processing XII. Martigny, Switzerland; 2002:557-565.

Lee DD, Seung HS: Learning the parts of objects by non-negative matrix factorization. Nature 1999, 401: 788-791. 10.1038/44565

Smaragdis P: Polyphonic pitch tracking by example. In Proceedings of IEEE workshop Applications of Signal Processing to Audio and Acoustics. New Paltz, NY, USA; 2011:125-128.

Ellis DPW: Model-based Scene Analysis. In Computational Auditory Scene Analysis. Edited by: Wang DL, Brown GJ. IEEE Press, New York; 2006:115-146.

Lee DD, Seung HS: Algorithms for Non-Negative Matrix Factorization. In Advances in Neural Information Processing Systems 13. Edited by: Leen TK, Dietterich TG, Tresp V. MIT Press, Cambridge, MA; 2001:556-562.

Backus J: The Acoustical Foundations of Music. 2nd edition. W.W. Norton & Company, Inc, New York; 1977.

Cichocki A, Zdunek R: NMFLAB MATLAB Toolbox for non-negative matrix factorization. 2006. [http://www.bsp.brain.riken.jp/ICALAB/nmflab.html]

Byrne CL: Accelerating the EMML algorithm and related iterative algorithms by rescaled block-iterative (RBI) methods. IEEE Trans Image Process 1998, 7(1):100-109. 10.1109/83.650854

Plumbley MD, Abdallah SA, Blumensath T, Davies ME: Sparse representation of polyphonic music. Signal Process 2005, 86(3):417-431.

Acknowledgements

We wish to thank the anonymous reviewers for their comments, which helped improve this article. We would also like to thank IPSR-Universiti Tunku Abdul Rahman for their partial financial support given to this research.

Competing interests

The author declares that they have no competing interests.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 2.0 International License (https://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Phon-Amnuaisuk, S. Transcribing Bach chorales: Limitations and potentials of non-negative matrix factorisation. J AUDIO SPEECH MUSIC PROC. 2012, 11 (2012). https://doi.org/10.1186/1687-4722-2012-11

DOI: https://doi.org/10.1186/1687-4722-2012-11