Abstract

Background

Primary healthcare in developed countries is undergoing important reforms, and these require evaluation strategies to assess how well the population's expectations are being met. Although numerous instruments are available to evaluate primary healthcare (PHC) from the patient perspective, they do not all measure the same range of constructs. Our objective was to analyze the extent to which important PHC attributes are covered in validated instruments measuring quality of care from the patient perspective.

Method

We systematically identified validated instruments from the literature and by consulting experts. Using a Delphi consensus-building process, Canadian PHC experts identified and operationally defined 24 important PHC attributes. One team member mapped instrument subscales to these operational definitions; this mapping was then independently validated by members of the research team and conflicts were resolved by the PHC experts.

Results

Of the 24 operational definitions, 13 were evaluated as being best measured by patients, 10 by providers, three by administrative databases and one by chart audits (some being best measured by more than one source). Our search retained 17 measurement tools containing 118 subscales. After eliminating redundancies, we mapped 13 unique measurement tools to the PHC attributes. Accessibility, relational continuity, interpersonal communication, management continuity, respectfulness and technical quality of clinical care were the attributes widely covered by available instruments. Advocacy, management of clinical information, comprehensiveness of services, cultural sensitivity, family-centred care, whole-person care and equity were poorly covered.

Conclusions

Validated instruments to evaluate PHC quality from the patient perspective leave many important attributes of PHC uncovered. A complete assessment of PHC quality will require adjusting existing tools and/or developing new instruments.

Background

Primary healthcare (PHC) in developed countries is undergoing significant changes in scope and organizational form. Depending on the reforms' main objectives and components, the evaluations may focus on different attributes, including people's experience of care. In addition, the notion of experience of care can be conceptualized in diverse ways and the constructs developed to measure it will vary depending on the instruments used to assess patients' perceptions and expectations. Instruments trying to capture the experience of care have looked at various attributes: access and organization of care, continuity and longitudinality, comprehensiveness, patient-centeredness and community orientation, interpersonal communication and behaviour, cultural sensitivity and discrimination, coordination and integration, empowerment and enablement, courtesy and trust.

Some instruments have divided these attributes into distinct conceptual dimensions such as organizational/structural features of care (including organizational access, visit-based continuity, integration of care, clinical team) and quality of interactions with the primary care physician (including communication, whole-person orientation, health promotion, interpersonal treatment, patient trust) [1]. Not too dissimilarly, others have grouped constructs into clinical behaviour factors and organization of care, subsuming the various attributes into different dimensions of quality related to the technical and interpersonal aspects of clinical encounters [2].

The array and overlap of attributes measured by the different instruments can generate confusion around which instruments are the most appropriate to evaluate reforms. An important first step in bringing some consistency to multiple evaluation efforts is to use a common lexicon to describe which attributes of care are covered by different instruments. The objectives of this paper are to assess the attribute coverage of various instruments that evaluate PHC from the patient perspective and to identify PHC attributes that would benefit from further instrument development. Our overall aim is to inform decision-makers and evaluation researchers about the scope of instruments available and the need for methodological developments to evaluate PHC reforms. This was part of a sequence of studies leading ultimately to in-depth, comparative psychometric information on a subset of instruments that evaluate PHC from the patient perspective, to guide decision-makers and evaluation researchers in the selection of tools to evaluate the effects of major reform initiatives in PHC.

Method

In 2004 a Delphi consultation process with Canadian PHC experts produced operational definitions for 24 attributes that should be evaluated in current and proposed PHC models in the Canadian context [3] (Table 1). Among these, the experts identified 13 as being best measured from the patient perspective, 10 by providers, three by administrative databases and one by chart audits; the counts sum to more than 24 because some attributes are best measured by more than one source [3]. This mapping builds on that study and is the groundwork for selecting a smaller subset of tools for in-depth comparative study. This study has received ethical approval from the Comité d'éthique de la recherche de l'Hôpital Charles-Lemoyne.

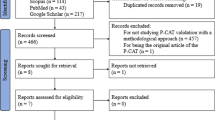

Identifying validated instruments for evaluating PHC

We searched the scientific literature for validated measurement instruments available in the public domain. We restricted our focus to instruments that evaluate primary or ambulatory care from the client or patient perspective and that address attributes or dimensions expected to change with the reforms. We first conducted a systematic electronic search of the MEDLINE and CINAHL databases using as keywords: primary healthcare, outcome and process measurement; questionnaires; and psychometrics. We eliminated questionnaires used for screening for illnesses, functional health status, or perceived outcomes of care for specific conditions (e.g. migraines, mental healthcare). We then supplemented the list by consulting local experts in health services research and by scanning references of review papers on primary care.

We identified 17 tools that had undergone different levels of quantitative and qualitative validation. Several had long and short versions (Primary Care Assessment Tool), or variations of one another (General Practice Assessment Survey and General Practice Assessment Questionnaire, both largely informed by the Primary Care Assessment Survey). After excluding these duplications, 13 unique questionnaires remained. One instrument, the Canadian Community Health Survey--Health Services Access component, was retained despite the absence of reported psychometric assessment because of its widespread use in some provincial evaluation initiatives and relevance in the Canadian context.

Mapping the instruments' subscales to operational attributes of PHC

Information available from questionnaires was entered into linked data tables in Microsoft Access. Information on questionnaire items, subscales and psychometric properties was entered to create a measurement tools database. For each questionnaire, one of the authors (GB) matched the content of each subscale to the experts' operational definitions of PHC. A similar exercise was repeated for each individual item in the subscales. The mapping was constrained to 16 attributes that can be ascertained by patients (including 3 components of Technical Quality of Care).

To validate the mapping of subscales to attributes, we applied two different levels of rigour. We were most rigorous in mapping nine questionnaires focusing on usual source of care that were the candidate instruments for more detailed comparison in a subsequent study: the Components of Primary Care Index (CPCI, [4]); the Interpersonal Processes of Care (IPC, [5]); the EUROPEP instrument [2]; the General Practice Assessment Questionnaire (GPAQ, [6, 7]); the Medical Interview Satisfaction Scale (MISS-21, [8]); the Patient Assessment of Chronic Illness Care (PACIC, [9]); the Primary Care Assessment Survey (PCAS, [1]); the Primary Care Assessment Tool (PCAT, [10, 11]); and the Veterans Affairs National Outpatient Satisfaction Survey (VANOCSS, [12]). Three visit-based questionnaires (PEQ, [13]; MISS-21, [8]; COAHS, [14]) were also mapped to attributes using this method. It should be noted that the CAHPS, GPAQ and VANOCSS questionnaires also integrated a visit-based scale.

Five investigators independently examined the initial mapping. Consensus was achieved if four out of the five agreed on the original mapping or on a proposed alternate mapping. Discrepancies were resolved and final consensus was achieved in a meeting.
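The four-out-of-five agreement rule described above can be sketched in a few lines of Python. This is only an illustration of the decision rule, not the procedure the research team actually ran: the function name `consensus_mapping`, the vote lists and the attribute labels used as votes are hypothetical, and each vote is assumed to be either the original mapping or a proposed alternate.

```python
from collections import Counter


def consensus_mapping(votes, threshold=4):
    """Return the attribute chosen by at least `threshold` reviewers, else None.

    `votes` holds one attribute label per reviewer: either the original
    mapping or an alternate that the reviewer proposed.
    """
    if not votes:
        return None
    attribute, count = Counter(votes).most_common(1)[0]
    return attribute if count >= threshold else None


# Hypothetical votes from five reviewers for two subscales (not study data).
votes_agree = ["accessibility"] * 4 + ["relational continuity"]
votes_split = ["accessibility"] * 3 + ["relational continuity"] * 2

agreed = consensus_mapping(votes_agree)  # four of five agree
split = consensus_mapping(votes_split)   # only three of five agree
print(agreed, split)
```

In this sketch a `None` result stands for a discrepancy that would be carried forward to the consensus meeting.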

The remaining five questionnaires--the Consumer Assessment of Health Plans Study (CAHPS 2.0, [15]); the Canadian Community Health Survey (CCHS); the Patient Experience Questionnaire (PEQ, [13]); the Patient Satisfaction Questionnaire Short Form (PSQ-18, [16]); and the Opinion de la clientèle au sujet des services ambulatoires (Consumer Opinions on Ambulatory Health Services, COAHS, [14]) tool--underwent a less rigorous validation by two of the authors (JH, CB).

Results

The 17 validated questionnaires identified through the literature review comprised 118 subscales. These subscales mapped to 15 of the 24 attributes of PHC identified in our Delphi process. Nine operational attributes of PHC were not covered by any of the assessed questionnaires. Only one subscale mapped to any attributes in structural dimensions or system performance: the PCAT Coordination (Information Systems) to clinical information management. Consequently we removed the structural dimensions and system performance attributes from presentation of results. From Figure 1, we can see a high variability of coverage across attributes and that some dimensions--such as Person-Oriented and Clinical Practice Attributes Dimensions--are better covered than others. In general, the attributes best covered in terms of number of questionnaires are those that experts have evaluated as being most validly measured from the patient perspective. The community-oriented dimension has very low coverage among assessed questionnaires.

The detailed mapping of subscales to attributes, as well as items, psychometric information and developer contact information, is available from the author upon request. Table 2 describes the coverage of attributes by the measurement instruments. Note that both first-contact accessibility and accommodation are subsumed under accessibility and that client/community participation and intersectoral team, two attributes not measured in any instrument, are not included in the table.

The attributes widely covered by available instruments were accessibility, relational continuity, interpersonal communication, management continuity, respectfulness and technical quality of clinical care. In contrast, advocacy, management of clinical information, comprehensiveness of services, cultural sensitivity, family-centred care, whole-person care, population orientation and equity were poorly covered.

Figure 2 shows the number of PHC attributes covered in each of the validated questionnaires examined in our study. Again, we see important variations, with some questionnaires covering up to nine attributes while others restrict their focus to two. The PCAT, PCAS, GPAS, CPCI, and IPC questionnaires show the best coverage, with six or more attributes covered. In contrast, the EUROPEP, CAHPS 2.0 and Canadian Community Health Survey (CCHS) show much narrower coverage, with only three attributes or fewer being covered. We also mapped individual items to attributes, and not surprisingly, found a broader coverage (results not shown), though not of attributes such as advocacy, cultural sensitivity, family-centred care, whole-person care, population orientation and equity.
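The two tallies reported here (instruments per attribute, and attributes per instrument) both follow from a single table of subscale-to-attribute mappings. The sketch below shows one way to compute them in Python. The rows in `MAPPING`, the instrument names "Instrument A" to "Instrument C" and both function names are hypothetical placeholders; they are not the study's data or its Microsoft Access schema.

```python
from collections import defaultdict

# Hypothetical (instrument, subscale, attribute) rows; not the study's data.
MAPPING = [
    ("Instrument A", "Access", "accessibility"),
    ("Instrument A", "Communication", "interpersonal communication"),
    ("Instrument A", "Continuity", "relational continuity"),
    ("Instrument B", "Access", "accessibility"),
    ("Instrument B", "Respect", "respectfulness"),
    ("Instrument C", "Waiting time", "accessibility"),
]


def coverage_by_attribute(rows):
    """Map each attribute to the set of instruments with a subscale covering it."""
    covered = defaultdict(set)
    for instrument, _subscale, attribute in rows:
        covered[attribute].add(instrument)
    return dict(covered)


def coverage_by_instrument(rows):
    """Map each instrument to the set of attributes its subscales cover."""
    covered = defaultdict(set)
    for instrument, _subscale, attribute in rows:
        covered[instrument].add(attribute)
    return dict(covered)


by_attribute = coverage_by_attribute(MAPPING)
by_instrument = coverage_by_instrument(MAPPING)

for attribute, instruments in sorted(by_attribute.items()):
    print(f"{attribute}: covered by {len(instruments)} instrument(s)")
for instrument, attributes in sorted(by_instrument.items()):
    print(f"{instrument}: covers {len(attributes)} attribute(s)")
```

Using sets means an instrument with several subscales on the same attribute is counted once, which matches a covered/not covered view of coverage.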

Discussion

Assessing PHC performance from the patient perspective

In reviewing the literature, we found many tools that assess PHC attributes from the patient perspective. The 13 unique validated instruments we retained covered many of the PHC attributes identified through our previous Delphi consultation of experts [3]. However, not all aspects of PHC are covered by existing tools. Attributes related to practice structure, community orientation and system performance dimensions were scarcely addressed, while clinical practice and person-oriented attributes were widely covered. This is not surprising, since structural attributes and attributes related to communities or populations have been identified as less well measured from the patient perspective than attributes related to the clinical encounter and interpersonal aspects of care [3]. The clinical practice attributes were identified by the experts as core and essential to the functioning of all PHC models [17]. We can therefore conclude that although validated instruments only partially cover the PHC attributes that are best addressed from the patient perspective and relevant for health reform evaluation, the core attributes are well covered. To obtain more comprehensive assessments of PHC from the patient perspective, further development is needed for items or subscales to assess advocacy, management of clinical information, comprehensiveness of services, cultural sensitivity, family-centred care, whole-person care, population orientation and equity. Some tools offer better coverage than others. The PCAT and PCAS are the two instruments with the best coverage of PHC attributes. Whether current evaluative research preferentially uses the instruments that cover the broader range of attributes goes beyond the scope of this study but would be important to assess in future studies.

The visit-based instruments cover a narrower range of attributes than do usual care instruments. This is understandable, given that some attributes, such as those related to continuity of care or comprehensiveness, involve the notions of multiple visits or services being provided by more than one professional. Therefore, focusing on usual care enables questionnaires to touch on attributes that could be hard to evaluate in a single visit. However, some attributes related to technical quality of care or first-contact accessibility might be more accurately and precisely measured through visit-based instruments. It may therefore be relevant to integrate visit-based and usual care items in instruments to optimize the coverage of attributes addressed in PHC reforms.

Choosing the right measurement tool for reform objectives

Our study suggests that some instruments might be more appropriate than others for specific reform evaluations. Reforms specifically targeting accessibility, for instance, would be best evaluated by instruments that provide more coverage of accessibility attributes. However, customizing tools to specific organizational reform activities remains a challenge, given that most reforms involve a complex set of measures and could produce unexpected outcomes [18–20]. Capturing this complexity and these unexpected effects requires keeping a broad scope of measurement.

Nonetheless, some specificity could be achieved by using certain instruments rather than others, according to the particular priorities addressed in various jurisdictions. This highlights the importance of clarifying the objectives of PHC evaluation before choosing an evaluative instrument. Since no single tool offers complete coverage, optimal measurement of PHC attributes may be achieved using combinations of instrument subscales. The results of the study subsequent to this one, comparing in depth the performance of attribute measurement in six selected instruments, have now been published as a supplement [21, 22]. These results can guide the selection of tools given particular evaluation objectives. However, one must keep in mind that measuring a vast array of constructs might pose challenges related to the length of the instrument to be used and the related consequences for costs and response rates. Ultimately, the selection of instruments involves trade-offs and the instruments' coverage is only one of the aspects to be considered.
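The idea of combining instruments to cover a chosen set of attributes can be illustrated with a simple greedy heuristic: repeatedly pick the instrument that covers the most attributes still missing. This is a sketch under stated assumptions, not a method used in the study. The function `greedy_combination`, the coverage table and the instrument names are hypothetical, and the heuristic considers coverage only, ignoring questionnaire length, cost, response burden and psychometric quality. It is also not guaranteed to find the smallest possible combination.

```python
def greedy_combination(coverage, targets):
    """Greedily choose instruments until no instrument adds a missing target.

    coverage: dict mapping instrument name -> set of attributes it covers.
    targets:  attributes the evaluation needs to measure.
    Returns (chosen instruments in pick order, targets left uncovered).
    """
    remaining = set(targets)
    chosen = []
    while remaining:
        # Sorting the names makes tie-breaking deterministic (alphabetical).
        best = max(
            sorted(coverage),
            key=lambda name: len(coverage[name] & remaining),
            default=None,
        )
        gained = coverage[best] & remaining if best is not None else set()
        if not gained:
            break  # nothing left can cover the remaining targets
        chosen.append(best)
        remaining -= gained
    return chosen, remaining


# Hypothetical coverage table and evaluation targets (not study data).
coverage = {
    "Instrument A": {"accessibility", "relational continuity",
                     "interpersonal communication"},
    "Instrument B": {"accessibility", "respectfulness"},
    "Instrument C": {"management continuity"},
}
targets = {"accessibility", "respectfulness", "management continuity", "equity"}

chosen, uncovered = greedy_combination(coverage, targets)
print("Chosen:", chosen)
print("Still uncovered:", sorted(uncovered))
```

Any attribute left in `uncovered` corresponds to a gap that no instrument in the table addresses, which is where adapting existing tools or developing new ones would be needed.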

Limitations and strengths

In this study, we limited our analyses to validated instruments available in the public domain. Therefore, although other instruments with various levels of validation or previous utilization exist as well, our results apply solely to these commonly used instruments of PHC evaluation. In addition, our mapping was independent of the instrument developers' initial intent and conceptualisation. Some instruments had a clear intent of capturing a broader array of attributes of experience of care (IPC, MISS-21), some were specific to primary care (e.g. PCAS, PCAT) or to specific dimensions (e.g. PACIC). However, using a common lexicon is a strength. This study should not be seen as a summative assessment of the quality of the tools. Our intent was solely to map the instruments to a set of attributes seen as important to evaluate in primary care [3].

In addition, our results reflect the mapping of whole subscales. Mapping by items showed that some subscales cover more than one attribute. However, given that single items have much less capacity to represent constructs of interest and to measure PHC attributes validly, we believe that basing our study on subscales provided more insight into the real coverage of attributes.

We also could not assess the appropriateness of coverage of various attributes and have resorted to a simple covered/not covered dichotomy in our mapping. It would be interesting, in future research, to pay attention to the different aspects of specific attributes and to assess how the available subscales are measuring them. It could be that some tools cover more attributes than others but measure them only very superficially. Other tools that restrict their coverage may measure individual attributes in depth. However, for the purpose of evaluating PHC reforms, a balance must be struck between breadth of coverage and in-depth measurement. This study could not assess what that balance should be given the multiple contexts of PHC reform evaluation.

Our study captured the vast majority of validated tools currently available to measure experience of care from the patient perspective. We did not aim to assess the validity of currently used non-validated tools. However, many non-validated tools borrow from the concepts being measured in the comprehensive set of validated tools we found. Many of these non-validated tools are also very descriptive in nature, aiming to report facts about the experience of care rather than to measure an underlying construct reliably. Therefore, we feel confident that their exclusion does not represent a major issue for our study.

We used operational definitions of attributes developed by a panel of recognized Canadian experts. These definitions represent a common understanding of PHC and its desired outcomes in the Canadian context (internal validity), but they do not guarantee that the results can be generalized to other contexts (external validity). However, we could argue that trying to involve experts from various settings might have resulted in the identified attributes not being useful for the Canadian context. On the other hand, such a panel of experts would most certainly have achieved consensus on the core attributes of accessibility, continuity, comprehensiveness of services, and person-centeredness, which are the most measured. We therefore feel that our results are applicable to many contexts sharing similarities with the Canadian health system.

In addition, given that our study used published validated tools as a base of comparison, it could not assess the coverage of more recent constructs related to patients' experience, such as self-efficacy, shared decision-making, participation and enablement. Future studies should address this gap, both by complementing the existing validated instruments with items and scales that can capture these constructs and by evaluating their psychometric properties.

Conclusion

A comprehensive assessment of PHC requires measuring different attributes. The available validated instruments to evaluate the quality of PHC from the patient perspective leave many important attributes uncovered, but they do address most attributes that are considered essential. For a complete assessment of PHC quality, existing tools will need to be improved and/or new evaluation instruments developed.

References

Safran DG, Kosinski J, Tarlov AR, Rogers WH, Taira DA, Lieberman N, Ware JE: The primary care assessment survey: tests of data quality and measurement performance. Medical Care. 1998, 36 (5): 728-739. 10.1097/00005650-199805000-00012.

Grol R, Wensing M, and Task Force on Patient Evaluations of General Practice: Patients Evaluate General/Family Practice: The EUROPEP instrument. 2000, Nijmegen, the Netherlands: Center for Research on Quality in Family Practice, University of Nijmegen

Haggerty J, Burge F, Lévesque J-F, Gass D, Pineault R, Beaulieu M-D, Santor D: Operational definitions of attributes of primary health care: consensus among Canadian experts. Ann Fam Med. 2007, 5: 336-344. 10.1370/afm.682.

Flocke S: Measuring attributes of primary care: development of a new instrument. J Fam Pract. 1997, 45 (1): 64-74.

Stewart AL, Nápoles-Springer A, Pérez-Stable EJ: Interpersonal processes of care in diverse populations. Milbank Q. 1999, 77 (3): 305-339. 10.1111/1468-0009.00138.

Mead N, Bower P, Roland M: The general practice assessment questionnaire (GPAQ)--development and psychometric characteristics. BMC Fam Pract. 2008, 9: 13. 10.1186/1471-2296-9-13.

Ramsay J, Campbell JL, Schroter S, Green J, Roland M: The general practice assessment survey (GPAS): tests of data quality and measurement properties. Fam Pract. 2000, 17 (5): 372-379. 10.1093/fampra/17.5.372.

Meakin R, Weinman J: The 'medical interview satisfaction scale' (MISS-21) adapted for British general practice. Fam Pract. 2002, 19 (3): 257-263. 10.1093/fampra/19.3.257.

Glasgow RE, Wagner EH, Schaefer J, Mahoney LD, Reid RJ, Greene SM: Development and validation of the patient assessment of chronic illness care (PACIC). Medical Care. 2005, 43 (5): 436-444. 10.1097/01.mlr.0000160375.47920.8c.

Cassady CE, Starfield B, Hurtado MP, Berk RA, Nanda JP, Friedenberg LA: Measuring consumer experiences with primary care. Pediatrics. 2000, 105 (4): 998-1003.

Starfield B, Cassady C, Nanda J, Forrest CB, Berk R: Consumer experiences and provider perceptions of the quality of primary care: implications for managed care. J Fam Pract. 1998, 46: 216-226.

Borowsky SJ, Nelson DB, Fortney JC, Hedeen AN, Bradley JL, Chapko MK: VA community-based outpatient clinics: performance measures based on patient perceptions of care. Medical Care. 2002, 40 (7): 578-586. 10.1097/00005650-200207000-00004.

Steine S, Finset A, Laerum E: A New, Brief Questionnaire (PEQ) developed in primary health care for measuring patients' experience of interaction, emotion and consultation outcome. Fam Pract. 2001, 18 (4): 410-418. 10.1093/fampra/18.4.410.

Haddad S, Potvin L, Roberge D, Pineault R, Remondin M: Patient perception of quality following a visit to a doctor in a primary care unit. Fam Pract. 2000, 17 (1): 21-29. 10.1093/fampra/17.1.21.

Hargraves JL, Hays RD, Cleary PD: Psychometric properties of the consumer assessment of health plans study (CAHPS®) 2.0 adult core survey. Health Serv Res. 2003, 38 (6 Pt 1): 1509-1527.

Marshall GN, Hays RD: The Patient Satisfaction Questionnaire Short-Form (PSQ-18). 1994, Rand, (Rep. No. P-7865)

Lévesque J-F, Haggerty J, Burge F, Beaulieu M-D, Gass D, Pineault R, Santor D: Canadian Experts' Views on the Importance of Attributes within Professional and Community-oriented Primary Healthcare Models. Healthcare Policy. 7 (Sp): 21-30.

Hutchison B, Levesque J-F, Strumpf E, Coyle N: Primary health care in Canada: systems in motion. Milbank Q. 2011, 89 (2): 256-288. 10.1111/j.1468-0009.2011.00628.x.

Nutting PA, Miller WL, Crabtree BF, Jaen CR, Stewart EE, Stange KC: Initial lessons from the first national demonstration project on practice transformation to a patient-centered medical home. Ann Fam Med. 2009, 7 (3): 254-260. 10.1370/afm.1002.

Roland M, Rosen R: English NHS embarks on controversial and risky market-style reforms in health care. N Engl J Med. 2011, 364 (14): 1360-1366. 10.1056/NEJMhpr1009757.

Haggerty J, Burge F, Beaulieu M-D, Pineault R, Levesque J-F, Beaulieu C: Validation of Instruments to evaluate primary health care from the consumer perspective: overview of the method. Healthcare Policy. 2011, 7 (Sp): 31-46.

Santor D, Haggerty J, Levesque J-F, Beaulieu M-D, Burge F, Pineault R: An overview of the analytic approaches used in validating instruments that evaluate primary health care from the patient perspective. Healthcare Policy. 2011, 7 (Sp): 79-92.

Pre-publication history

The pre-publication history for this paper can be accessed here: http://www.biomedcentral.com/1471-2296/13/20/prepub

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors' contributions

JFL and JH designed the study and analysis plan, conducted the analyses, drafted a first version of the manuscript and finalised the manuscript. GB carried out the scoping of questionnaires and classification of items. DG, MDB, RP and DS participated in the interpretation of findings and revised earlier versions of the manuscript. CB participated in the analyses and classification of items. All authors read and approved the final manuscript.

Rights and permissions

This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Lévesque, JF., Haggerty, J., Beninguissé, G. et al. Mapping the coverage of attributes in validated instruments that evaluate primary healthcare from the patient perspective. BMC Fam Pract 13, 20 (2012). https://doi.org/10.1186/1471-2296-13-20

DOI: https://doi.org/10.1186/1471-2296-13-20