Abstract

Background

Microarray data have a high dimension of variables and a small sample size. In microarray data analyses, two important issues are how to choose genes, which provide reliable and good prediction for disease status, and how to determine the final gene set that is best for classification. Associations among genetic markers mean one can exploit information redundancy to potentially reduce classification cost in terms of time and money.

Results

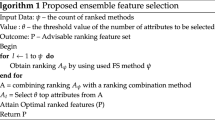

To deal with redundant information and improve classification, we propose a gene selection method, Recursive Feature Addition, which combines supervised learning and statistical similarity measures. To determine the final optimal gene set for prediction and classification, we propose an algorithm, Lagging Prediction Peephole Optimization. By using six benchmark microarray gene expression data sets, we compared Recursive Feature Addition with recently developed gene selection methods: Support Vector Machine Recursive Feature Elimination, Leave-One-Out Calculation Sequential Forward Selection and several others.

Conclusions

On average, with the use of popular learning machines including Nearest Mean Scaled Classifier, Support Vector Machine, Naive Bayes Classifier and Random Forest, Recursive Feature Addition outperformed other methods. Our studies also showed that Lagging Prediction Peephole Optimization is superior to random strategy; Recursive Feature Addition with Lagging Prediction Peephole Optimization obtained better testing accuracies than the gene selection method varSelRF.

Similar content being viewed by others

Background

Using microarrays techniques, researchers can measure the expression levels for tens of thousands of genes in a single experiment to provide scientists functional relationship information between the cellular and physiological processes of biological organisms and genes at a genome-wide level. The preprocessing procedure for the raw microarray data consists of back-ground correction, normalization, and summarization. After preprocessing, high level analyses, such as gene selection, classification, or clustering, are executed for profiling gene expression patterns [1]. In the past decade, two main tracks of analyses of microarray data have been to partition genes into closely related groups across time using clustering techniques and to classify patients with different health statuses based on selected gene signatures [2–6]. Various standards related to systems biology are discussed by Brazma et al. [7]. When sample sizes are substantially smaller than the number of features/genes, statistical modeling and inference issues are challenging, which is known as the "large p small n problem". Two important questions and challenges for the high dimensional data analyses are how to choose features that provide reliable and good prediction and how to determine the final optimal feature set that is best for prediction and classification.

To address the "curse of dimensionality" problem, three strategies have been proposed: filtering, wrapper and embedded methods. Filtering methods select subset features independently from the learning classifiers and do not incorporate learning [8–11]. One of the weaknesses of filtering methods is that they only consider the individual features in isolation and ignore their possible interactions. Yet, the combination of these features may have a combined effect that does not necessarily follow from the individual performance of features in that group [12]. One of the consequences of filtering methods is that we may end up with many highly correlated features/genes; this highly redundant information will worsen classification and prediction performance. Furthermore, if there is a limit on the number of features to be chosen, we may not be able to include all informative features.

To avoid weakness in filtering methods, wrapper methods wrap around a particular learning algorithm that can assess the selected feature subsets in terms of estimated classification errors to build the final classifier [13]. Wrapper methods use a learning machine to measure the quality of subsets of features. One recent well-known wrapper method for feature/gene selection is Support Vector Machine Recursive Feature Elimination (SVMRFE), proposed by Guyon et al. [14], which refines the optimum feature set by using Support Vector Machine (SVM). The idea of SVMRFE is that the orientation of the separating hyper-plane found by the SVM can be used to select informative features: if the plane is orthogonal to a particular feature dimension, then that feature is informative, and vice versa. In addition to gene selection, SVMRFE has been successfully used in other feature selection and pattern classification situations [15, 16].

Wrapper methods can noticeably reduce the number of features and significantly improve classification accuracy [17, 18]. However, wrapper methods have the drawback of high computational load. With better computational efficiency and similar performance to wrapper methods, embedded methods process feature selection simultaneously with a learning classifier. Examples of embedded methods are LASSO [19, 20] and logistic regression with the regularized Laplacian prior [21].

Combining the sequential forward selection (SFS) and sequential floating forward selection (SFFS) with LS (Least Squares) Bound measure, Zhou and Mao proposed SFS-LS bound and SFFS-LS bound algorithms for optimal gene selection [22]. Tang et al. also proposed two gene selection methods, leave-one-out calculation sequential forward selection (LOOCSFS) and the gradient based leave-one-out gene selection (GLGS) [23]. Diaz-Uriarte and De Andres [24] presented a new method for gene selection that uses random forest [25]. The main advantage of this method is that it returns very small sets of genes that retain high predictive accuracy. The algorithms are publicized in the R package of varSelRF. Additionally, Guyon and Elisseeff elaborated a wide range of aspects in feature selection including a better definition of the objective function, feature construction, feature ranking, multivariate feature selection, efficient search methods and feature validity assessment methods [26].

In human genetic research, exploiting information redundancy from highly correlated genes may potentially reduce the cost of classification in terms of time and money. To deal with redundancy issues and to improve classification for microarray data, we designed a gene selection method recursive feature addition (RFA) in our previous work [27], however, the optimal feature set associated with the best training was not solved. In this paper, we compare this method to SVMRFE, LOOCSFS, GLGS, SFS-LSbound, SFFS-LSbound and T-test by using six benchmark microarray data sets; meanwhile, we propose an algorithm, Lagging Prediction Peephole Optimization (LPPO), to choose the final optimal feature/gene set. We evaluate LPPO by comparing it with random strategy under the best training condition and valSelRF [24].

Results

Under feature dimension j, the training accuracy of the ith experiment is r(i, j), and the testing accuracy of the ith experiment is s(i, j), i = 1, 2,..., I; j = 1, 2,..., J; where I is the number of experiments and J is the number of chosen features. The average testing accuracy of the experiments under the feature dimension j, s(j), j = 1, 2,..., J, is calculated as follows:

The average testing accuracy, ms_hr(i), of the ith experiment under the condition that the associated/corresponding training accuracy is the highest, which is defined as follows:

The average testing accuracy ms_hr(i) is the expected value of the random strategy under the best training classification of the ith experiment.

The highest testing accuracy, hs_hr(i), of the ith experiment under the condition that the associated/corresponding training accuracy is the highest, which is defined as follows:

Average testing accuracy

Figure 1 lists the average testing accuracies of the gene selection methods with classifiers NMSC, SVM, NBC, and RF. Again, the performances of NBC-MMC, NMSC-MMC, NBC-MSC, and NMSC-MSC are close to one another; therefore, the average testing accuracies of the gene selection methods NBC-MMC, NMSC-MMC, and NBC-MSC are not listed in the figures. It indicates that the average testing accuracy of NMSC-MSC is the best, followed by GLGS, LOOCSFS, and SVM-RFE. SFS-LS bound, SFFS-LS bound, and T-TEST did not perform well. Figure 1 also demonstrates that, spanning several data sets and learning classifiers, the performance and stabilization of the gene selection method of NMSC-MSC is the best.

Testing results under the best training

Table 1 provides the mean values and standard errors of the testing accuracies ms_hr(i), (i = 1, 2,..., 20) and the highest testing accuracies hs_hr(i), (i = 1, 2, ..., 20) under the highest training classification, respectively. After applying each classifier to each data set, the highest mean value of the ten gene selection methods is shaded. In each data set, the highest mean value in the shade is in bold. With the use of the four learning classifiers, under the best training, RFA, GLGS, LOOCSFS, SVMRFE, SFS-LSBOUND, SFFS-LSBOUND, and T-test respectively achieve the highest testing accuracies (HS_HR), 99.9%, 99.6%, 99.3%, 98.0%, 97.4%, 97.3%, and 96.8% for the leukemia data set; 99.5%, 98.6%, 93.0%, 99.2%, 95.1%, 96.1%, and 94.4% for lymphoma; 96.9%, 96.1%, 95.2%, 95.7%, 93.4%, 92.7%, and 94.0% for prostate; 91.1%, 90.5%, 86.8%, 86.8%, 87.1%, 86.0%, and 85.5% for colon; 94.0%, 91.1%, 85.0%, 85.1%, 76.2%, 76.2%, and 77.4% for CNS; and 85.9%, 83.7%, 80.3%, 80.4%, 81.5%, 81.3%, and 77.6% for the breast cancer data set. In applying the ten gene selection methods to the six benchmark data sets, all the highest testing accuracies are obtained from the gene set chosen by RFA.

Table 2 lists the number of occurrences for each gene selection method that achieved the best testing accuracy. Table 2 shows that 61 out of 67 highest mean values were obtained by MMC- or MSC-based methods; GLGS, LOOCSFS, and SVMRFE obtained the best twice, three times, and once, respectively; LSBOUND and T-TEST never got the best value. Results indicate that RFA outperforms other gene selection methods.

On the other side, to see whether the new methods are superior to others, regression models were built based on average testing accuracy (ms_hr) and highest testing accuracy (hs_hr), respectively, with data set (six benchmark microarray data set), gene selection method (four new methods and six other methods) and classifier (four classification methods) as independent variables. After adjusting data set effect and classifier effect, the main effects for the new feature selection methods (NBC-MMC, NMSC-MMC, NBC-MSC, and NMSC-MSC) and others (GLGS, LOOCSFS, SVMRFE, SFS-LSBOUND, SFFS-LSBOUND, and T-test) are 91.86%, 91.67%, 92.47%, 92.27%, 90.65%, 86.96%, 88.89%, 84.70%, 85.38%, and 83.93% for the highest testing accuracy, and 86.38%, 86.15%, 87.30%, 86.97%, 85.48%, 82.76%, 83.96%, 79.45%, 80.58%, and 79.36% for the average testing accuracy, respectively. Table 3 gives the p-values of testing superiority of each new method to other six methods, which are calculated based on one-tailed t-test from the output of the regression models. From the p-values, the performances of our new methods are statistically significantly better than all other methods (most p-values are <0.0001) except for GLGS. From Table 3 MSC-based methods (NBC-MSC, NMSC-MSC) are significantly better than GLGS based on both highest testing accuracy and average testing accuracy at a significance level of 0.05. Although the p-values for NBC-MMC and NMSC-MMC to GLGS are not small enough due to the small sample size (only six testing data sets) and therefore lower power, we would expect that the differences will be detected at lower significance levels if more data sets are used. To see whether the four new gene methods perform differently, we also test each pair of the four methods and calculate the p-values based on two-tailed t-test from the output of the regression models. All the p-values are bigger than 0.2, so the four new methods perform equally well.

Comparison of LPPO and random strategy

Table 4 lists the mean values of the differences between the testing values (denoted as S_LPPO) by applying NMSC, SVM, NBC, and RF to LPPO and ms_hr. This table shows that, on average, LPPO is superior to the random strategy under the best training accuracies. In summary, spanning the six benchmark data sets, in comparison with ms_hr, LPPO improves the testing accuracy by 0.8% for NMSC, 0.7% for SVM, 0.4% for NBC, and 0.9% for RF on average.

Comparison of LPPO and varSelRF

Figure 2 gives the boxplots of the testing values with the use of learning classifier random forest for the feature sets from LPPO with RFA and varSelRF. The gene selection methods are NBC-MMC, NMSC-MMC, NBC-MSC, NMSC-MSC, and varSelRF from left to right in each subfigure. Figure 2 indicates that the testing accuracies by applying random forest to the feature sets of LPPO with RFA are better than those of varSelRF. In comparison with varSelRF, LPPO with RFA increases the average testing accuracy by about 5% for the leukemia data set, 9% for lymphoma, 3% for colon and prostate, 10% for CNS, and 14% for the breast cancer data set.

Boxplots of testing accuracies of the LPPO with four gene selection methods using two different classifiers (NBC, NMSC) compared to varSelRF for six data sets. RF is the final classifier. All six data sets demonstrate that varSelRF accuracies are lower than our proposed feature selection and optimization algorithm with the same RF classifier.

Computational efficiency

In microarray data analysis, generally, the number of features in the final feature set is far smaller than the total variables. Suppose the number of total variables is n, the number of features of the final feature set is m (m <<n). In forward feature selection, with the use of some learning classifier, the computational time is F(s, d) for a s×d feature matrix, here s is the number of data samples (s << n) and d is the feature dimensionality at each sample. Without losing the generality, if d1<d2, F(s, d1) <F(s, d2). The computational cost of our feature selection algorithm is analyzed as follows.

Let T1 denote the total computational time for supervised learning

Let T2 denote the computational time for similarity calculation among the candidates and chosen genes, the calculation time between two single- variant vectors with s samples is C(s), then

Due to the fact of m << n and s << n with microarray data, the computational cost of our feature selection is obtained by

Conclusions

Our study shows that our gene selection method Recursive Feature Addition (RFA) obtained the best classification performance in the comparison. RFA utilizes supervised learning to obtain the best classification, and indentifies the subsequent gene recursively based on the similarity measures between the chosen gene set and the candidates to minimize the redundancy of the genes within the selected subset; hence it obtains more informative and differently expressed genes. Based on RFA, we also propose an algorithm, Lagging Prediction Peephole Optimization (LPPO), to determine the optimal feature set. Using six popular benchmark data sets, we compared RFA with other gene selection methods. Our studies showed that RFA outperformed other methods with the use of the four popular classifiers: NMSC, NBC, SVM, and random forest. Results also showed that, on average, LPPO is superior to a random strategy under the best training and that it outperformed the random forest based gene selection method varSelRF.

Methods

Supervised recursive learning

Our method of RFA uses supervised learning to achieve the highest level of training accuracy and statistical similarity measures to choose the next variable with the least dependence on or correlation to the already identified variables as follows:

1. Insignificant genes are removed according to their statistical insignificance. Specifically, a gene with a high p-value is usually not differently expressed and therefore has little contribution in distinguishing normal tissues from tumor tissues or in classifying different types of tissues. To reduce the computational load, those genes should be removed. The filtered gene data is then normalized. Here we use the standard normalization method, MANORM, which is available from MATLAB bioinformatics toolbox.

2. Each individual gene is selected by supervised learning. A gene with highest classification accuracy is chosen as the most important feature and the first element of the feature set. If multiple genes achieve the same highest classification accuracy, the one with the lowest p-value measured by test-statistics (e.g., score test), is the target of the first element. At this point the chosen feature set, G1, contains just one element, g1, corresponding to the feature dimension one.

3. The (N+1)st dimension feature set, GN+1= {g1, g2, ..., g N , gN+1} is obtained by adding gN+1to the Nthdimension feature set, G N = {g1, g2, ..., g N }. The choice of gN+1is described as follows:

Add each gene g i (g i ∉ G N ) into G N and obtain the classification accuracy of the feature set G N ∪{g i }. The g i (g i ∉ G N ) associated with the group, G N ∪{g i } that obtains the highest classification accuracy, is the candidate for gN+1(not yet gN+1). Considering the large number of variables, it is highly possible that multiple features correspond to the same highest classification accuracy. These multiple candidates are placed into the set C, but only one candidate from C will be identified as g N+1 . How to make the selection is described next.

Candidate feature addition

To find the most informative (or least redundant) next feature gN+1, two formulas may be designed by measuring the statistical similarity between the chosen feature set and each candidate. Here we use, say, Pearson's correlation coefficient [28] between chosen features g n (g n ∈ G N , n = 1, 2, ..., N) and candidate g c (g c ∈ C) to measure the similarity.

In the first formula, the sum of the square of the correlation, SC, is calculated to measure the similarity and is defined as follows:

Where, g c ∈ C , g n ∈ G N .

Then selection of gN+1can be based on the Minimum Sum of the square of the Correlation (MSC), that is,

In the second formula, the maximum value of the square of the correlation, MC, is calculated:

Where, g c ∈ C , g n ∈ G N .

The selection of g N+1 follows the criterion that the MC value is the minimum, which we call Minimum of Maximum value of the square of the Correlation (MMC).

In the methods mentioned above, a feature is recursively added to the chosen feature set based on supervised learning and the similarity measures. With the use of a classifier XXX, we call the first gene selection method XXX-MSC and the second one XXX-MMC. For example, if the classifier is Naive Bayes Classifier (NBC), we call the two strategies NBC-MSC and NBC-MMC, respectively.

Lagging Prediction Peephole Optimization (LPPO)

We want to find a combination of features (genes) that yields the best performance on breaking down solvents. Normally, with the recursive addition for the next feature, the training accuracy will increase and reach a peak classification performance at some point, and then may maintain it with subsequent feature additions; but after that the training accuracy may decrease. Generally speaking, all strategies for determining the final feature set should be based on the best training classification. In high-volume data analysis, it is common that the best training accuracy corresponds to different feature sets; that is, multiple feature sets achieve the same highest training accuracy. However, although all these feature sets are associated with the same highest training accuracy, the testing accuracy of these feature sets may be different. Among these highest training feature sets, the one having the best testing accuracy is called the optimal feature set, which is highly complicated to characterize when a sample size is small. Either applying different gene methods to the same training samples, or applying the same gene selection method to different training samples, or applying different learning classifiers to the same training samples, will produce a different optimization of the feature set. Pochet et al. [29] presented a method of determining the optimal number of genes by means of a cross-validation procedure; the drawback of this method is that it actually utilizes whole data information, including training samples and testing samples.

How do we choose the optimal feature set? If there are multiple best training classifications, a random choice, called random strategy, works for best training classification. In the recursive addition of the features, for training samples, a classification model is one of the best methods. But for testing samples, at this point, the classification model may not be optimal because of the difference between the training samples and the testing samples; the optimal classification model will lag in appearance (see Figure 1). Based on this observation, we propose the following algorithm for optimization.

Under feature dimension j, the training accuracy of the ith experiment is r(i, j). If the feature set G k , corresponding to feature dimension k, has the best training accuracy in the trainings from the feature set G1 to GD, corresponding to the feature dimensions from 1 to D, let HR denote the set that contains all the combinations of G k , corresponding to all the feature set having the highest classification accuracy under feature dimension 1 to D.

In general, the best classification model for testing samples will lag in appearance behind the initial best training model. We will exclude the elements of HR that correspond to the initial best training. The remaining elements in HR constitute the candidate set HRC for optimization.

Each element in HRC is associated with the best training accuracy. We set a peephole for each element and choose the element associated with the optimal peephole. The details are described as follows:

a. For each element G k ∈ HRC, the peephole over G k with length of 2l+1 covers the feature sets G k-l , Gk-l+ 1, ..., G k , ..., Gk+l-1, G k+l , corresponding to the training accuracy r(i, k-l), r(i, k-l+ 1), ..., r(i, k), ..., r(i, k+l- 1), r(i, k+l). The mean training value of the peephole is denoted by mp_r(i, k).

The peephole with the best classification of mp_r is then chosen as the optimal one.

b. If there are multiple optimal peepholes, then we apply random forest to these peepholes and check the mean values of the Out-of-Bag (OOB) error rates [24, 25, 30]. The feature sets G k-l , Gk-l+1, ..., Gk,, ..., Gk+l-1,G k+l correspond to the OOB errors, oob_e(i, k-l), oob_e(i, k-l+ 1), ..., oob_e(i, k), ..., oob_e(i, k+l- 1), oob_e(i, k+l). The mean value of the OOB errors is denoted by mp_oob_e(i, k)

The peephole with minimum mp_oob_e is the optimal one.

c. If there are multiple peepholes corresponding to the best mp_r and minimum mp_oob_e, then set l +1 → l, and repeat 'a' to 'c', until a unique optimal peephole is determined.

d. The feature set located at the center of the final optimal peephole is chosen as the final optimal feature set.

This optimization of RFA is called Lagging Prediction Peephole Optimization (LPPO). Figure 3 briefly outlines the LPPO on the prostate data set, which was studied by Singh et a l. [31].

Data sets

The following six benchmark microarray data sets have been extensively studied and used in our experiments to compare the performances of our methods with others. Data sources that are not specified are available at: http://www.broad.mit.edu/cgi-bin/cancer/datasets.cgi.

1) The LEUKEMIA data set consists of two types of acute leukemia: 48 acute lymphoblastic leukemia (ALL) samples and 25 acute myeloblastic leukemia (AML) samples with over 7129 probes from 6817 human genes. It was studied by Golub et al. [32].

2) The LYMPHOMA data set consists of 58 diffuse large B-cell lymphoma (DLBCL) samples and 19 follicular lymphoma (FL) samples. It was studied by Shipp et al. [33]. The data file, lymphoma_8_lbc_fscc2_rn.res, and the class label file, lymphoma_8_lbc_fscc2.cls were used in our experiments for identifying DLBCL and FL.

3) The PROSTATE data set used by Singh et al. [31] contains 52 prostate tumor samples and 50 non-tumor prostate samples.

4) The COLON cancer data set used by Alon et al. [34] contains 62 samples collected from colon-cancer patients. Among them, 40 tumor biopsies are from tumors, and 22 normal biopsies are from healthy parts of the colons of the same patients. Based on the confidence in the measured expression levels, 2000 genes were selected. The data source is available at: http://microarray.princeton.edu/oncology/affydata/index.html.

5) The Central Nervous System (CNS) embryonal tumor data set that was originally studied by Pomeroy et al. [35] contains 60 patient samples. Among them, 21 are survivors who are alive after treatment, and 39 are failures who succumbed to their diseases. There are 7129 genes.

6) The Breast cancer data set studied by Van et al. [36] contains 97 patient samples, 46 of which are relapse patients who had developed distance metastases within 5 years, and 51 patients who are non-relapsed who remained healthy for at least 5 years from the distance after their initial diagnosis. This data source is available at: http://www.rii.com/publications/2002/vantveer.htm.

Experiments

Our experiments are designed as follows:

1. The data sets are first divided randomly into training samples and testing samples. The ratio of training samples to testing samples is approximately 1:1 in each class.

2. Recursive feature additions with Naive Bayes Classifier (NBC) and Nearest Mean Scaled Classifier (NMSC) for gene selection (NBC-MSC, NBC-MMC, NMSC-MSC, and NMSC-MMC) were applied to the training samples for gene selection. Different feature sets of the gene expression data are produced under feature dimensions 1 to 100. We compared the above proposed methods to several recently developed and published gene selection methods: LOOCSFS, GLGS, SVMRFE, SFFS-LS bound, SFS-LS bound, and also T-TEST.

3. To compare different gene selection methods, the learning classifiers including NBC, NMSC, SVM [37, 38], and Random Forest are applied to the testing samples.

4. The experiments were performed in 20 runs, and the average testing accuracies were compared to evaluate performance.

References

Chen Z, McGee M, Liu Q, Scheuermann RH: A distribution free summarization method for Affymetrix GeneChip Arrays. Bioinformatics. 2007, 23 (3): 321-327. 10.1093/bioinformatics/btl609.

Quackenbush J: Computational analysis of microarray data. Nat Rev Genet. 2001, 2 (6): 418-427. 10.1038/35076576.

Hand DJ, Heard NA: Finding groups in gene expression data. J Biomed Biotechnol. 2005, 215-225. 2

Segal E, Friedman N, Kaminski N, Regev A, Koller D: From signatures to models: understanding cancer using microarrays. Nat Genet. 2005, 37 (Suppl): S38-45.

Torrente A, Kapushesky M, Brazma A: A new algorithm for comparing and visualizing relationships between hierarchical and flat gene expression data clusterings. Bioinformatics. 2005, 21 (21): 3993-3999. 10.1093/bioinformatics/bti644.

Qin Z: Clustering microarray gene expression data using weighted Chinese restaurant process. Bioinformatics. 2006, 22 (16): 1988-1997. 10.1093/bioinformatics/btl284.

Brazma A, Krestyaninova M, Sarkans U: Standards for system biology. Nat Rev Genet. 2006, 7: 593-605.

Newton MA, Kendziorski CM, Richmond CS, Blattner FR, Tsui KW: On differential variability of expression ratios: improving statistical inference about gene expression changes from microarray data. J Comput Biol. 2001, 8 (1): 37-52. 10.1089/106652701300099074.

Long A, Mangalam H, Chan B, Tolleri L, Hatfield G, Baldi P: Improved statistical inference from DNA microarray data using analysis of variance and a Bayesian statistical framework. J Biol Chem. 276 (23): 19937-19944.

Bo T, Jonassen I: New feature subset selection procedures for classification of expression profiles. Genome Biol. 2002, 3 (4): research0017-

Yu J, Chen X-W: Bayesian neural network approaches to ovarian cancer identification from high-resolution mass spectrometry data. Bioinformatics. 2005, 21 (suppl 1): i487-i494. 10.1093/bioinformatics/bti1030.

Pavlidis P, Noble WS: Analysis of strain and regional variation in gene expression in mouse brain. Genome Biol. 2001, 2 (10): 0042.1-0042.15.

Inza I, Sierra B, Blanco R, Larranaga P: Gene selection by sequential search wrapper approaches in microarray cancer class prediction. Journal of Intelligent and Fuzzy Systems. 2002, 12 (1): 25-33.

Guyon I, Weston J, Barnhill S, Vapnik VN: Gene selection for cancer classification using support vector machines. Machine Learning. 2002, 46 (19): 389-422.

Liu Q, Sung AH, Chen Z, Xu J: Feature mining and pattern classification for steganalysis of LSB matching steganography in grayscale images. Pattern Recognition. 2008, 41 (1): 56-66. 10.1016/j.patcog.2007.06.005.

Liu Q, Sung AH, Qiao M, Chen Z, Ribeiro B: An improved approach to steganalysis of JPEG images. Information Sciences. 2010, 180 (9): 1643-1655. 10.1016/j.ins.2010.01.001.

Monari G, Dreyfus G: Withdrawing an example from the training set: an analytic estimation of its effect on a nonlinear parameterized model. Neurocomputing. 2000, 35 (1-4): 195-201. 10.1016/S0925-2312(00)00325-8.

Rivals I, Personnaz L: MLPs (Mono-Layer Polynomials and Multi-Layer Perceptrons) for nonlinear modeling. Journal of Machine Learning Research. 2003, 3: 1383-1398.

Tibshirani R: Regression shrinkage and selection via the lasso. J Royal Statist Soc B. 1996, 58 (1): 267-288.

Tibshirani R: The lasso method for variable selection in the Cox model. Stat Med. 1997, 16 (4): 385-395. 10.1002/(SICI)1097-0258(19970228)16:4<385::AID-SIM380>3.0.CO;2-3.

Krishnapuram B, Carin L, Figueiredo M, Hartemink A: Sparse multinomial logistic regression: fast algorithms, and generalization bounds. IEEE Trans Pattern Anal Mach Intell. 2005, 27 (6): 957-968.

Zhou X, Mao K-Z: LS bound based gene selection for DNA microarray data. Bioinformatics. 2005, 21 (8): 1559-1564. 10.1093/bioinformatics/bti216.

Tang E-K, Suganthan PN, Yao X: Gene selection algorithms for microarray data based on least square support vector machine. BMC Bioinformatics. 2006, 7: 95-10.1186/1471-2105-7-95.

Diaz-Uriarte R, de Andres SA: Gene selection and classification of microarray data using random forest. BMC Bioinformatics. 2006, 7: 3-10.1186/1471-2105-7-3.

Breiman L: Random forests. Machine Learning. 2001, 45 (1): 5-32. 10.1023/A:1010933404324.

Guyon I, Elisseeff A: An introduction to variable and feature selection. Journal of Machine Learning Research. 2003, 3: 1157-1182.

Liu Q, Sung AH, Chen Z, Liu J, Huang X, Deng Y: Feature selection and classification of MAQC-II breast cancer and multiple myeloma microarray gene expression data. PLoS One. 2009, 4 (12): e8250-10.1371/journal.pone.0008250.

Tan P, Steinbach M, Kumar V: Introduction to Data Mining. 2005, Addison-Wesley, 76-79.

Pochet N, De Smet F, Suykens J, De Moor B: Systematic benchmarking of microarray data classification: assessing the role of non-linearity and dimensionality reduction. Bioinformatics. 2004, 20 (17): 3185-3195. 10.1093/bioinformatics/bth383.

Liaw A, Wiener M: Classification and regression by random forest. R News. 2 (3): 18-22.

Singh D, et al: Gene expression correlates of clinical prostate cancer behavior. Cancer Cell. 2002, 1 (2): 227-235.

Golub T, et al: Molecular classification of cancer: class discovery and class prediction by gene expression. Science. 1999, 286 (5439): 531-537. 10.1126/science.286.5439.531.

Shipp M, et al: Diffuse large B-cell lymphoma outcome prediction by gene expression profiling and supervised machine learning. Nat Med. 2002, 8 (1): 68-74. 10.1038/nm0102-68.

Alon U, et al: Broad patterns of gene expression revealed by clustering analysis of tumor and normal colon tissues probed by oligonucleotide arrays. Proc Natl Acad Sci USA. 1999, 96 (12): 6745-6750. 10.1073/pnas.96.12.6745.

Pomeroy SL, et al: Prediction of central nervous system embryonal tumor outcome based on gene expression. Nature. 2002, 415: 436-442. 10.1038/415436a.

Van LJ, et al: Gene expression profiling predicts clinical outcome of breast cancer. Nature. 2002, 415: 530-536. 10.1038/415530a.

Vapnik V: Statistical Learning Theory. 1998, John Wiley, ISBN-10: 0471030031

Heijden F, Duin R, Ridder D, Tax D: Classification, Parameter Estimation and State Estimation. 2004, John Wiley, ISBN-10: 0470090138

Acknowledgements

The authors are grateful to Matteo Masotti, E Ke Tang, and Xin Zhou for offering their codes of RfeRank, GLGS, LOOCSFS, SFS-LSbound, and SFFS-LSbound, to Ramon Diaz-Uriarte for the help with the use of varSelRF R package. Partial support for this study from the Institute for Complex Additive Systems Analysis, a division of New Mexico Tech, and from Sam Houston State University, is greatly acknowledged.

Author information

Authors and Affiliations

Corresponding authors

Additional information

Authors' contributions

QL designed the algorithms, performed the study, and wrote the draft; AHS supervised the study and revised the draft; ZC helped designing the algorithms and experiments, provided the statistical analysis, and co-drafted the manuscript; LC, JL, MQ, YP, ZW and HX participated in algorithm design, programming, and helped editing the draft; and YP coordinated the project and assisted the draft and data analyses

Competing interests

The authors do not claim any confliction of interests.

Rights and permissions

Open Access This article is published under license to BioMed Central Ltd. This is an Open Access article is distributed under the terms of the Creative Commons Attribution License ( https://creativecommons.org/licenses/by/2.0 ), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Liu, Q., Sung, A.H., Chen, Z. et al. Gene selection and classification for cancer microarray data based on machine learning and similarity measures. BMC Genomics 12 (Suppl 5), S1 (2011). https://doi.org/10.1186/1471-2164-12-S5-S1

Published:

DOI: https://doi.org/10.1186/1471-2164-12-S5-S1